1. Introduction

Synthetic aperture radar (SAR) is a remote sensing technology that generates high-quality images by processing pulse signals. The working wavelength of SAR ranges from 1 cm to 1 m, whereas optical camera sensors operate at wavelengths near visible light, around 1 micron [1]. Therefore, SAR exhibits excellent penetration capability and can acquire high-quality remote sensing data in adverse weather conditions such as clouds, haze, rain, or snow, making it an all-weather remote sensing technology [2,3,4,5,6]. However, speckle noise is inevitable in SAR imaging [7], and it significantly degrades the quality and resolution of SAR images [8]. Two main strategies are currently used to address this issue:

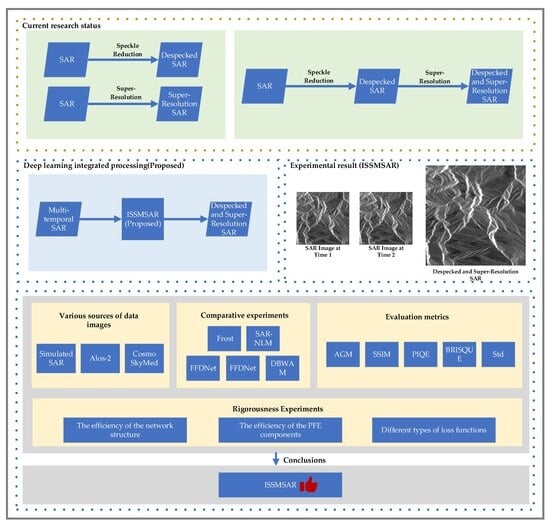

The first strategy adopts a two-stage approach, performing speckle reduction and super-resolution step by step. In the presence of speckle noise, directly applying super-resolution to SAR images may amplify the speckle, making it very prominent in the resulting image. Therefore, speckle reduction is applied to the SAR image first to mitigate the impact of speckle noise, and super-resolution is then employed to enhance spatial resolution while maintaining image clarity [9].

The essence of the step-by-step strategy lies in explicitly separating speckle reduction and super-resolution, treating them as two independent processes that are implemented one after the other with common methods. For speckle reduction of SAR images, there are two main categories of methods: traditional speckle reduction algorithms and deep learning algorithms [10]. Traditional speckle reduction algorithms include classical methods such as Frost [11], non-local means (NLM) [12], and ratio image speckle reduction [13]. In recent years, deep learning has developed rapidly and has demonstrated outstanding performance in computer vision tasks, and researchers have proposed a series of efficient deep learning algorithms for speckle reduction, including FFDNet [14], SAR2SAR [15], and AGSDNet [16]. For super-resolution reconstruction of SAR images, there are likewise two categories of methods: traditional super-resolution algorithms and deep learning algorithms [17]. Traditional super-resolution methods include interpolation methods [18] and ScSR [19]. Commonly used interpolation methods include nearest neighbor interpolation and bilinear interpolation [20]. Owing to their low computational complexity and high speed, interpolation methods are among the most popular ways of rendering super-resolution images [21]. However, most deep learning-based super-resolution methods for SAR images directly transfer processing strategies from optical images without fully considering the special properties of SAR imaging, which may affect the accuracy and stability of the reconstruction results [22].
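The two interpolation methods named above can be sketched in a few lines. This is a minimal illustration, not the implementation used by any of the cited works: the function names `upsample_nearest` and `upsample_bilinear` are hypothetical, the scale factor is assumed to be an integer, and the bilinear variant assumes that the corner pixels of the output align with the corner pixels of the input.

```python
import numpy as np


def upsample_nearest(img: np.ndarray, scale: int) -> np.ndarray:
    """Nearest neighbor upsampling: repeat every pixel `scale` times per axis."""
    return np.repeat(np.repeat(img, scale, axis=0), scale, axis=1)


def upsample_bilinear(img: np.ndarray, scale: int) -> np.ndarray:
    """Bilinear upsampling with output corners aligned to input corners."""
    img = np.asarray(img, dtype=np.float64)
    h, w = img.shape
    # Output sample positions expressed in input pixel coordinates.
    rows = np.linspace(0, h - 1, h * scale)
    cols = np.linspace(0, w - 1, w * scale)
    r0 = np.floor(rows).astype(int)
    c0 = np.floor(cols).astype(int)
    r1 = np.minimum(r0 + 1, h - 1)  # clamp the lower neighbor at the border
    c1 = np.minimum(c0 + 1, w - 1)  # clamp the right neighbor at the border
    fr = (rows - r0)[:, None]       # vertical fractional offsets
    fc = (cols - c0)[None, :]       # horizontal fractional offsets
    # Interpolate along columns on the two bracketing rows, then along rows.
    top = img[r0][:, c0] * (1 - fc) + img[r0][:, c1] * fc
    bottom = img[r1][:, c0] * (1 - fc) + img[r1][:, c1] * fc
    return top * (1 - fr) + bottom * fr
```

Both functions only resample existing pixel values, so any speckle present in the input is carried into the enlarged image, which is why the step-by-step strategy places speckle reduction before this stage.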

However, a simple step-by-step strategy of speckle reduction followed by super-resolution often produces unsatisfactory results. The speckle reduction stage may remove some high-frequency details, which cannot be fully recovered in the subsequent super-resolution process. Additionally, the speckle reduction stage inevitably introduces some erroneous restoration, which may be amplified in the subsequent super-resolution process and degrade the quality of the final result [23]. Zhao et al. pointed out that, because of the significant amplitude and phase fluctuations caused by speckle noise, even state-of-the-art spatial speckle reduction methods (such as SAR-BM3D or SAR-NLM) may still disrupt the image structure [13], and some noticeable speckle fluctuations may be retained after speckle reduction. Furthermore, in Zhan et al.’s work [24], the preprocessing of TERRA-SAR images (including speckle reduction) resulted in a loss of image structure, and this structural loss was significantly amplified in the subsequent super-resolution processing. This highlights the inherent limitations of the step-by-step strategy. In summary, the straightforward step-by-step strategy of speckle reduction followed by super-resolution exhibits significant drawbacks when processing SAR images.

The second strategy performs speckle reduction as an auxiliary step and focuses on the primary task of super-resolution. This approach represents a relatively comprehensive strategy for SAR image processing, and several methods have been proposed along these lines. Wu et al. introduced an improved NLM method combined with a back-propagation neural network [25]. With this approach, the enhanced NLM not only raises low-resolution images to high resolution but also effectively reduces speckle in SAR images. Kanakaraj et al. proposed a method for SAR image super-resolution using the importance sampling unscented Kalman filter, which is capable of handling multiplicative noise [26]. Karimi et al. introduced a convex variational optimization model for the single-image super-resolution reconstruction of SAR images with speckle noise [27]. This model uses Total Variation (TV) regularization to achieve edge preservation and speckle reduction. Luo et al. combined the cubature Kalman filter with low-rank approximation to construct a nonlinear low-rank optimization model [28]. Finally, Gu et al. presented the Noise-Free Generative Adversarial Network (NF-GAN), which uses a deep generative adversarial network to reconstruct pseudo-high-resolution SAR images [29]. However, these methods have certain limitations in speckle reduction. For detailed information, refer to Table 1. Overall, they may exhibit two potential issues. On the one hand, they may tend towards excessive smoothing, meaning that image details are blurred during speckle reduction and specific image features are weakened. On the other hand, the speckle reduction may not be sufficiently thorough, leaving traces of speckle noise that affect the clarity and level of detail in the image. Both issues stem from the fact that this strategy prioritizes super-resolution, while speckle reduction remains secondary. This suggests that more comprehensive and refined processing strategies are needed, especially approaches designed specifically for speckle noise, in order to achieve the overall optimization of SAR images.

To overcome the aforementioned issues, a strategy is needed that explores the underlying correlations between speckle reduction and super-resolution in SAR images, with the aim of achieving both more effectively. Speckle reduction and super-resolution both require high-quality image reconstruction, and they are strongly correlated because both primarily process the high-frequency components while preserving other crucial information [30]. Additionally, multi-temporal SAR images are typically acquired by the same satellite at different times over the same target scene and therefore contain rich spatiotemporal information. Compared to single-temporal SAR images, they provide a more abundant source of information [31]. Using multi-temporal SAR images as the network input enables the network to comprehensively understand the characteristics of objects, thereby better preserving spatial resolution [13] and providing favorable conditions for high-quality image reconstruction.
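The extra information carried by a multi-temporal stack can be illustrated with a short worked derivation. It rests on simplifying assumptions that are not taken from the paper: the scene intensity $X$ is unchanged across $T$ co-registered acquisitions, and each acquisition is corrupted by independent, unit-mean multiplicative speckle $N_t$ with $L$ looks.

```latex
% Assumed speckle model for acquisition t (symbols X, N_t, L, T are illustrative)
Y_t = X \, N_t, \qquad
\mathbb{E}[N_t] = 1, \qquad
\operatorname{Var}[N_t] = \frac{1}{L}

% Pixel-wise temporal average of the T acquisitions
\bar{Y} = \frac{1}{T} \sum_{t=1}^{T} Y_t
\;\Longrightarrow\;
\mathbb{E}[\bar{Y}] = X, \qquad
\operatorname{Var}[\bar{Y}] = \frac{X^{2}}{L\,T}
```

Under these assumptions, plain temporal averaging already raises the equivalent number of looks from $L$ to $LT$ without any spatial smoothing. Real scenes change between dates and speckle is not perfectly independent, so the gain is smaller in practice; the derivation only indicates why multiple dates are a richer input than a single image.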

Given that these two tasks mutually reinforce each other, it is essential to develop a novel approach that integrates them into a unified deep learning model. Therefore, this paper proposes ISSMSAR. The objective of this network framework is to process two input multi-temporal SAR images. Through a series of processing modules, including PFE, HFE, FFB, and REC, the aim is to obtain the final high-quality SAR image.

The main contributions of this paper include the following:

This study proposes, for the first time, an integrated network framework utilizing deep learning for speckle reduction and super-resolution reconstruction of multi-temporal SAR images.

Based on the characteristics of SAR images, PFE is designed, incorporating three key innovative elements: parallel multi-scale convolution feature extraction, multi-resolution training strategy, and high-frequency feature enhancement learning. These innovations enable the network to more accurately adapt to the complex features of SAR images.

In the HFE, deconvolution, deformable convolution, and skip connections are combined to extract more complex and abstract features from SAR images.

Drawing inspiration from the traditional SAR algorithm, the network introduces the ratio-L1 loss as the optimization objective. Additionally, a hierarchical loss constraint mechanism is introduced to ensure the effectiveness of each critical module, thereby guaranteeing the robustness and reliability of the overall network performance.

Approaching from the perspective of multi-task learning, a dataset named “Multi-Task SAR Dataset” is proposed to provide solid support and foundation for the fusion learning task of speckle reduction and super-resolution of multi-temporal SAR images.

The structure of this paper is as follows: The second section will provide a detailed exposition of the network architecture of ISSMSAR and the ratio-L1 loss function. The third section will elucidate the process of creating the Multi-Task SAR Dataset, presenting concrete experiments and corresponding in-depth analyses. The fourth section will conclude this study.

3. Results

In this section, a comprehensive and detailed description of the process for creating the Multi-Task SAR Dataset is provided first. Next, the specific definitions and calculation methods of the evaluation metrics employed are presented. Finally, an in-depth analysis of the experimental results from multiple perspectives is conducted to gain a comprehensive understanding of the performance advantages and potential strengths of the proposed method in practical applications.

3.1. Multi-Task SAR Dataset

Due to the presence of speckle noise in SAR images, obtaining real noise-free high-resolution SAR images is challenging. To meet the requirements of multi-task learning in the algorithm, a SAR image dataset was simulated based on optical images [34,35,36,37,38,39,40,41,42]. This allowed us to construct a dataset containing pairs of noisy low-resolution multi-temporal SAR images and clean high-resolution SAR images. This dataset is referred to as the Multi-Task SAR Dataset, as illustrated in Figure 6.

The Multi-Task SAR Dataset originates from optical image data captured by the GaoFen-1 satellite. This optical image dataset was acquired in Hubei Province, China, with a resolution of 5 m, covering typical scenes such as forests, roads, farmland, urban areas, water bodies, villages, wetlands, sandy areas, and mining areas. In this dataset, the optical images have four channels: blue, green, red, and near-infrared. The blue, green, and red channels were extracted and combined to create RGB images. Subsequently, 8800 images with a size of 512 × 512 were cropped from a set of 100 RGB images, each with dimensions of 5556 × 3704. These RGB images were then converted to grayscale images, serving as clean high-resolution SAR images in this dataset. Additionally, varying multiplicative noise was introduced to these grayscale images to simulate speckle noise in multi-temporal SAR images. Subsequently, downsampling was performed on these simulated multi-temporal SAR images, thus constructing pairs of noisy low-resolution multi-temporal SAR images, as illustrated in Figure 7.
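The simulation steps described above (grayscale conversion, multiplicative noise of varying strength, downsampling) can be sketched as follows. The text does not give the noise distribution, the grayscale weights, or the downsampling method and factor, so every such choice here is an assumption made for illustration: unit-mean gamma-distributed speckle, ITU-R BT.601 luminance weights, block-average downsampling by a factor of 2, and hypothetical function names and `looks_per_date` values.

```python
import numpy as np


def rgb_to_gray(rgb: np.ndarray) -> np.ndarray:
    """Grayscale conversion with BT.601 weights (an assumed choice)."""
    weights = np.array([0.299, 0.587, 0.114])
    return rgb[..., :3].astype(np.float64) @ weights


def add_speckle(clean: np.ndarray, looks: float, rng: np.random.Generator) -> np.ndarray:
    """Multiply by unit-mean gamma noise; fewer looks means stronger speckle."""
    noise = rng.gamma(shape=looks, scale=1.0 / looks, size=clean.shape)
    return clean * noise


def downsample(img: np.ndarray, factor: int) -> np.ndarray:
    """Block-average downsampling; both sides must be divisible by `factor`."""
    h, w = img.shape
    return img.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))


def make_training_pair(rgb, looks_per_date=(1, 2), factor=2, seed=0):
    """Return (noisy low-resolution stack, clean high-resolution target)."""
    rng = np.random.default_rng(seed)
    clean_hr = rgb_to_gray(rgb)
    # One independently speckled, downsampled image per simulated date.
    noisy_lr = [
        downsample(add_speckle(clean_hr, looks, rng), factor)
        for looks in looks_per_date
    ]
    return np.stack(noisy_lr), clean_hr
```

For a 512 × 512 RGB crop, `make_training_pair` returns a stack of shape `(2, 256, 256)` and a target of shape `(512, 512)` under these assumed settings. Using a different number of looks per date is one simple way to obtain the "varying" noise mentioned in the text.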

3.2. Evaluation Metrics

3.2.1. AGM

The average gradient magnitude (AGM) is a quality metric used to assess the clarity and edge information in an image. Specifically, it calculates the average gradient magnitude of all pixels in an image, as described in Equation (28). In general, a higher average gradient magnitude indicates that the image contains richer detail, implying better image quality.

In this process, $G_x$ and $G_y$ represent the gradient values in the horizontal and vertical directions, respectively. The specific calculation method is described in Equations (29) and (30).

In this process, $I$ represents the image matrix, while $i$ and $j$ denote the row and column indices of the pixels, respectively.

Finally, the arithmetic mean of the gradient values over all pixels in the image is computed using Equation (31), where $M$ and $N$ are the number of rows and columns in the image.
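As an illustration of the metric, the following sketch computes an average gradient magnitude with NumPy. The exact difference scheme of the original equations is not reproduced here, so this version makes its own assumptions: forward differences for the horizontal and vertical gradients, a Euclidean magnitude, and a mean taken over the $(M-1) \times (N-1)$ pixels where both differences exist. The function name is hypothetical.

```python
import numpy as np


def average_gradient_magnitude(img) -> float:
    """Mean Euclidean gradient magnitude using forward differences (assumed)."""
    img = np.asarray(img, dtype=np.float64)
    # Crop both differences to the same (M-1, N-1) grid so they can be combined.
    gx = img[:-1, 1:] - img[:-1, :-1]  # horizontal: I(i, j+1) - I(i, j)
    gy = img[1:, :-1] - img[:-1, :-1]  # vertical:   I(i+1, j) - I(i, j)
    return float(np.sqrt(gx ** 2 + gy ** 2).mean())
```

A constant image yields 0, and a unit horizontal ramp yields 1. Note that residual speckle also raises this value, so a high AGM is best read together with the other metrics.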

3.2.2. SSIM

The Structural Similarity (SSIM) is a metric used to measure the similarity between two images from the perspective of human visual perception. It quantifies the similarity by considering the brightness, contrast, and structural information of the images. The SSIM value ranges from −1 to 1, where a value closer to 1 indicates greater similarity between the two images. Conversely, as the dissimilarity increases, the SSIM value approaches −1. When the input images are denoted as $x$ and $y$, the calculation of SSIM follows Equation (32).

$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x \mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)} \tag{32}$$

Here, $\mu_x$ and $\mu_y$ represent the pixel means of images $x$ and $y$, respectively; $\sigma_x$ and $\sigma_y$ are the pixel standard deviations of images $x$ and $y$, respectively; and $\sigma_{xy}$ denotes the pixel covariance between images $x$ and $y$. The constants $C_1$ and $C_2$ are used to prevent division by zero in the denominator.
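A minimal sketch of the SSIM computation is given below. It evaluates the statistics once over the whole image rather than in local sliding windows, which is a simplification of how SSIM is usually reported, and it assumes the commonly used stabilizing constants $(0.01 \cdot R)^2$ and $(0.03 \cdot R)^2$ for a data range $R$. The function name `global_ssim` and the `data_range` parameter are illustrative, and the settings used for the experiments may differ.

```python
import numpy as np


def global_ssim(x, y, data_range: float = 255.0) -> float:
    """Single-window SSIM computed from whole-image statistics."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    # Stabilizing constants (common defaults, assumed here).
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)
```

Comparing an image with itself returns 1, while comparing it with its intensity-inverted copy gives a negative value, matching the range described above.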

3.2.3. PIQE

The Perception-based Image Quality Evaluator (PIQE) is a no-reference image quality assessment algorithm that can evaluate the quality of an image without the need for a reference image. It analyzes features such as contrast, sharpness, and noise in the image based on human visual perception principles. A lower PIQE score indicates higher perceived image quality, while a higher score indicates lower perceived quality. The specific calculation method of PIQE involves multiple steps and parameters, and its detailed mathematical expression is omitted here.

3.2.4. BRISQUE

The Blind/Referenceless Image Spatial Quality Evaluator (BRISQUE) is a no-reference image quality assessment metric that evaluates the distortion level of an image based on its spatial statistical features. A lower BRISQUE score indicates higher perceived image quality, while a higher score suggests lower perceived quality. The calculation of BRISQUE is relatively complex, so its expression is omitted here.

3.2.5. Std

The standard deviation (Std) is a statistical measure of the dispersion of data and serves as an indicator of contrast in images. A higher standard deviation indicates larger variations in pixel intensities, reflecting higher contrast in the image. Conversely, a smaller standard deviation suggests less variation in pixel intensities and lower image contrast. The standard deviation is also used to assess image sharpness, since clear images generally display larger variations in pixel values and therefore a higher standard deviation. Its calculation is detailed in Equation (33), where $\sigma$ is the standard deviation, $N$ is the number of pixels, $x_i$ is the value of the $i$-th pixel, and $\mu$ is the mean of the pixel values.
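For completeness, the quantity can be computed as in the short sketch below. It assumes the population form, dividing by the number of pixels rather than by one less than that number; the text does not state which normalization is used, and the function name is hypothetical.

```python
import numpy as np


def pixel_std(img) -> float:
    """Population standard deviation of all pixel values (divides by N)."""
    x = np.asarray(img, dtype=np.float64).ravel()
    mu = x.mean()
    return float(np.sqrt(((x - mu) ** 2).sum() / x.size))
```

This matches `np.std` with its default `ddof=0`. For images with hundreds of thousands of pixels, the difference between the two normalizations is negligible.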

3.3. Rigorousness Experiments

In this section, the feasibility of the proposed network is validated through extensive ablation experiments. Real multi-temporal SAR images from Alos-2 are selected for experimentation. The performance of the network is comprehensively assessed using multiple evaluation metrics, including AGM, SSIM, PIQE, BRISQUE, and Std.

3.3.1. Component Analysis

A systematic series of ablation experiments was conducted to assess the function of the individual modules within the network. With all other conditions held constant, the key modules PFE, HFE, and FFB were removed in turn, which makes the role and impact of each module within the overall architecture explicit. The experimental results are presented in Table 2. The performance declined significantly when the network lacked the PFE, which underscores its role as the fundamental component for primary feature extraction. Its parallel multi-scale convolution feature extraction, multi-resolution training strategy, and high-frequency feature enhancement learning work together to markedly enhance the network’s feature extraction capabilities and establish a robust foundation for subsequent processing steps. In the absence of the HFE, the performance remained suboptimal. Comprising multiple sets of deconvolutions and deformable convolutions with skip connections, this module propagates low-level features to higher levels, facilitating the flow of feature information across levels and providing the network with richer feature representation and learning capabilities. Similarly, the performance suffered when the FFB was omitted, indicating that inter-channel feature fusion contributes to the collaborative feature integration of the two subnetworks and improves the outcome. When all modules are combined, the network achieves its best performance, which shows that each module makes a distinct contribution.

Additionally, because the PFE encompasses a multi-resolution training strategy and high-frequency feature enhancement learning, its effectiveness was validated by separately removing the upsampling and downsampling modules of the multi-resolution training strategy and the high-frequency information extraction module of high-frequency feature enhancement learning. Detailed experimental results are provided in Table 3. When the PFE did not include the upsampling and downsampling modules, performance declined significantly on Alos-2 test data with different resolutions. This result indirectly confirms that the proposed multi-resolution training strategy enables the model to learn features from input images of different resolutions during training, thereby enhancing its generalization capability. Similarly, when the high-frequency information extraction module was excluded from the PFE, the performance was subpar. This suggests that, without this module, the network struggles to model the characteristics of speckle noise and to capture detail information in SAR images. When all components of the PFE are combined, the network achieves its best performance, with improved preservation of fine details and stronger generalization. These experiments demonstrate that each component within the PFE contributes to network performance.

3.3.2. Research on Different Types of Loss Functions

To validate the positive impact of the ratio-based loss function during training, performance was compared across different loss functions, as detailed in Table 4. Specifically, the L1 loss was compared with the ratio-L1 loss, and the L2 loss with the ratio-L2 loss. The results indicate that integrating the ratio concept into the loss function positively influences the entire network and provides better guidance for network training. This offers a new perspective for loss function selection and underscores the effectiveness of the ratio concept in the optimization objective. Furthermore, the comparison between the two ratio-based losses shows that the ratio-L1 loss outperforms the ratio-L2 loss, suggesting that the ratio-L1 loss accounts for both overall and detailed aspects and guides network training more effectively.

3.4. Analysis of Experimental Results

Various sources of data images were employed as experimental images, and comparative experiments were conducted with five sets of algorithms. Through in-depth analysis of the experimental results from multiple perspectives, the aim was to derive more scientifically reliable conclusions. Specifically, to validate the network’s generalization ability in different scenarios, four sets of SAR images were selected as experimental images. These images encompass both simulated SAR images and real SAR images, originating from three independent sources: GaoFen-1, Alos-2, and Cosmo SkyMed.

To ensure a meaningful comparison, a set of reference algorithms was selected, covering traditional methods, deep learning methods, and a multi-temporal SAR image algorithm. Frost and SAR-NLM were chosen to represent traditional methods, and the recent high-performing FFDNet and AGSDNet were chosen to represent deep learning methods. DBWAM was selected as the multi-temporal algorithm. Research on speckle reduction and super-resolution of multi-temporal SAR images is still in its early stages, and mainstream work addresses speckle reduction and super-resolution separately for individual SAR images. Therefore, the multi-temporal SAR images fed to the proposed algorithm were superimposed, and the superimposed image was used as the input for the comparative experiments. Additionally, because the proposed algorithm employs a bilinear operation in the network for super-resolution learning, the bilinear operation was also applied to the outputs of the comparison algorithms to obtain the final comparative results.

3.4.1. Simulated SAR Images

To validate the results of the network, experiments were first conducted, following common practice, on two groups of simulated SAR images derived from the same dataset as the training data. These two input image groups, denoted as Group 1 and Group 2, are illustrated in Figure 8. The corresponding parameter configurations for these image sets are detailed in Table 5. The images cover diverse terrains such as mountains and villages, so that the network’s generalization performance can be evaluated across different land features.

The experimental results and enlarged details of the simulated SAR images were first compared through subjective visual analysis. The experimental results are shown in Figure 9, while the enlarged details are presented in Figure 10. The Frost filter preserved image details poorly, resulting in significant detail loss and very low clarity, which makes terrain features difficult to discern. SAR-NLM displayed an over-smoothing trend that caused severe image distortion, with noticeable information loss in details and reduced image quality. Both FFDNet and AGSDNet performed well in reducing speckle noise but tended to blur texture edges, which compromised image clarity and reduced the ability to identify terrain features. DBWAM partially removed speckle noise, but its results were over-smoothed, leading to substantial overall detail loss, a significant decrease in image contrast, and reduced recognizability of terrain details. In comparison, the proposed network eliminated speckle noise while preserving image clarity and retaining detailed information.

For a more objective evaluation of the simulated SAR experimental results, five evaluation metrics were used, as detailed in Table 6. In both groups of experiments, the proposed network consistently achieved three top performances and one second-best performance across the evaluation metrics. From the perspective of SSIM, the images produced by the proposed network exhibited strong preservation of brightness, contrast, and structure, affirming the effectiveness of the proposed network in the integrated processing of speckle reduction and super-resolution. PIQE is designed to provide an assessment consistent with human perception of image quality, and the proposed network achieved the best PIQE score, which agrees with the subjective visual analysis above.

3.4.2. Real SAR Images from Alos-2

To conduct a thorough evaluation of the proposed network on real SAR images, this study performed experiments using multi-temporal SAR images from Alos-2. Specifically, 3 m resolution images from Alos-2 were chosen to comprehensively assess the effectiveness of the proposed network in terms of the PFE multi-resolution training strategy. Detailed information about this SAR image is presented in Table 7.

The experimental results are shown in Figure 11, with enlarged details provided in Figure 12. The results of the Frost filter exhibit significant blurring, making it challenging to discern image details. SAR-NLM and DBWAM suffer from excessive smoothing, which leads to image distortion. FFDNet and AGSDNet both denoise effectively, but they blur features along terrain boundaries. By contrast, the proposed network effectively removes speckle noise while preserving the clarity and details of the SAR image, demonstrating a better balance between speckle reduction and detail preservation than the other algorithms. The performance metrics of the output results are detailed in Table 8. The proposed network achieved the best performance across all evaluation criteria, indicating a high level of performance in speckle reduction, image sharpness, and the preservation of detailed features. This is consistent with the subjective visual analysis.

3.4.3. Real SAR Images from Cosmo SkyMed

This study further employed real images with a 3 m resolution from Cosmo SkyMed to validate the performance of the proposed network in handling data from different sources. Detailed parameters about this image are presented in Table 9.

The results and enlarged details of the real SAR experimental images were compared through subjective visual analysis. The experimental results are depicted in Figure 13, while the enlarged details can be found in Figure 14. The detailed comparison shows that the proposed network excels in preserving image structure, exhibits high contrast, and achieves superior clarity. In contrast, the results of the Frost filter exhibited blurred details, making it challenging to distinguish terrain information. SAR-NLM suffered from excessive smoothing, leading to the loss of image details. In the results of FFDNet and AGSDNet, terrain boundaries appeared relatively blurry, indicating poorer preservation of image details. In summary, the proposed network preserved image details, contrast, and clarity on real SAR images better than the other algorithms.

The evaluation metrics of the output results are detailed in Table 10. The proposed network achieved the best performance on the AGM, PIQE, and Std metrics, while its SSIM was the second best. AGM is sensitive to the overall quality and clarity of an image, so the high AGM indicates that the resulting images have high clarity and that edges are well preserved. Std is sensitive to image contrast, so the high Std implies that the proposed network enhances the clarity of terrain information. Moreover, although the SAR image resolution of Cosmo SkyMed is 3 m and the training set used in this study has a resolution of 5 m, the proposed network still achieved good test results. This indicates that the proposed network generalizes well and performs robustly across images of different resolutions.