Global and Multiscale Aggregate Network for Saliency Object Detection in Optical Remote Sensing Images

Abstract

1. Introduction

- (1) Optical remote sensing images offer surface information encompassing cities, farmland, rivers, buildings, and roads, reflecting a diversity of object types [14].

- (2) Objects in optical remote sensing images exhibit varying sizes, e.g., ships, aeroplanes, bridges, rivers, and islands, signifying diversity in target size [14].

- (3) The background of an optical remote sensing image may comprise intricate textures and structures, surpassing the complexity of a natural image [14].

- (1)

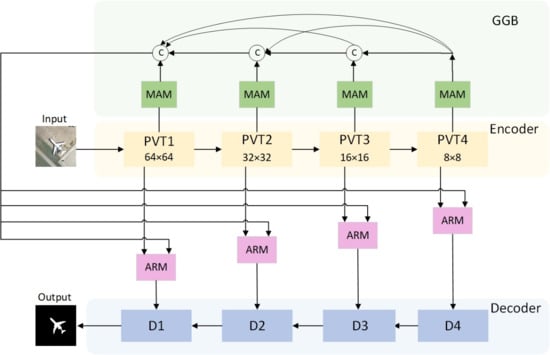

- This research replaces traditional CNN-based ResNet or VGG with a transformer-based backbone network, PVT-v2, to enhance the comprehensiveness of salient regions. Unlike CNN-based methods that primarily capture local information, transformer-based approaches excel in learning remote dependencies and acquiring global information. The proposed encoder-decoder architecture includes a PVT-v2 encoder for learning multiscale features and a DD for hierarchical feature map decoding. At the same time, a Global Guidance Branch is designed on the encoder.

- (2)

- The study introduces the MAM, recognising the challenge of large variations in object scales within optical remote sensing images. This module adeptly extracts multiscale features and establishes densely connected structures for the GGB. The GGB leverages four MAM modules to generate global semantic information, guiding low-level features for more precise localisation.

- (3)

- The ARM is innovatively proposed in this study to amalgamate global guidance information with fine features through a coarse-to-fine strategy. Leveraging global guidance information ensures accurate localisation of salient objects, capturing the complete structural context, while the incorporation of fine features augments details in the preliminary saliency map.

2. Related Work

2.1. Traditional Methods for NSI-SOD

2.2. CNN-Based Methods for NSI-SOD

2.3. CNN-Based Methods for ORSI-SOD

3. Proposed Method

3.1. Network Overview

3.2. Multiscale Attention Module (MAM)

3.3. Global Guided Branch (GGB)

3.4. Aggregation Refinement Module (ARM)

3.5. Dense Decoder (DD)

3.6. Loss Function

4. Experimental Results

4.1. Experimental Protocol

4.1.1. Datasets

4.1.2. Network Training Details

4.1.3. Evaluation Metrics

4.2. Comparison with State-of-the-Arts

4.2.1. Comparison Methods

4.2.2. Quantitative Comparison

4.2.3. Visual Comparison

- (1) Multiple tiny objects. This scenario combines multiple objects with tiny object sizes. Because of the shooting distance and angle of ORSIs, small objects are significantly smaller than those in NSIs, which makes it difficult to detect all of them. The CNN-based methods in the first row often miss or misdetect salient objects, and traditional methods struggle to adapt to ORSIs. In contrast, our method detects all objects in scenes with multiple salient objects. First, the MAM uses multiscale feature fusion to combine features from different levels: shallow detail and deep semantic information are fused to handle objects of different sizes. Second, an attention mechanism focuses the network on the key features of small objects. In the deep layers of the network, we upsample the feature map and fuse it with the shallow features to recover lost detail. In this way, the network handles small objects effectively and accurately.

- (2) Irregular geometric structures. These structures have intricate, irregular topologies, which makes accurate edge delineation challenging, and they appear at various positions and sizes in the image. AccoNet, LVNet, and MINet detect only part of the river, and other methods introduce noise or produce unclear edges. Our method, however, detects rivers with complete structures and clear boundaries, notably capturing the lower-left region of the island. Extracting global context information improves boundary clarity, which helps identify irregular geometric structures.

- (3) Objects with shadows. Shadows are often misdetected as salient objects, which leads to inaccurate results. Other methods may miss one or two circles, and GateNet incorrectly highlights shadows. In contrast, our method detects the objects without redundant shadow regions.

- (4) Objects with complex backgrounds. The MAM uses an attention mechanism to highlight salient objects while suppressing background information, which improves the recognition of objects against complex backgrounds. Our results show less noise, shielding background interference and capturing salient objects precisely.

- (5) Objects with low contrast. When salient objects closely resemble the background, many existing methods struggle to highlight them accurately. The lines detected by the three NSI-SOD methods appear fuzzy, and MCCNet fails to detect the lines altogether. In contrast, our method yields clear detections and, in particular, delineates the boundaries of small islands accurately.

- (6) Objects with interferences. Some non-salient objects may interfere with detection and be highlighted incorrectly. Our method distinguishes interfering objects by modelling the context around the target, including object shape and texture. In addition, the attention mechanism weights the features so that the model attends to those that help detect the target, which reduces the impact of interfering objects. As a result, our method distinguishes and accurately highlights salient objects in the presence of potential interference.
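The upsample-and-fuse step mentioned under item (1) can be illustrated with a minimal sketch. It assumes nearest-neighbour upsampling and element-wise addition on single-channel maps; the interpolation mode and fusion operator used by the network are not stated in this section, and the names `upsample_nearest` and `fuse` are hypothetical.

```python
def upsample_nearest(fmap, scale=2):
    """Nearest-neighbour upsampling of a 2-D map given as a list of rows."""
    out = []
    for row in fmap:
        # Repeat every value `scale` times along the width ...
        wide = [v for v in row for _ in range(scale)]
        # ... and repeat the widened row `scale` times along the height.
        out.extend([list(wide) for _ in range(scale)])
    return out


def fuse(deep, shallow, scale=2):
    """Upsample the deep map to the shallow resolution and add element-wise.

    Addition is an assumption made for illustration only.
    """
    up = upsample_nearest(deep, scale)
    if len(up) != len(shallow) or len(up[0]) != len(shallow[0]):
        raise ValueError("shape mismatch between upsampled and shallow maps")
    return [[u + s for u, s in zip(up_row, sh_row)]
            for up_row, sh_row in zip(up, shallow)]


deep = [[1.0, 0.0]]                     # coarse 1x2 semantic response
shallow = [[0.1, 0.2, 0.0, 0.0],
           [0.3, 0.1, 0.0, 0.1]]        # fine 2x4 detail response
fused = fuse(deep, shallow)
print(fused)
```

In this toy example the coarse response marks the left half of the image as salient, and the fused map keeps the pixel-level variation of the shallow map inside that region.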

4.3. Ablation Experiment

- (1) Individual contribution of each module in the network: To assess the distinct contributions of the ARM and the GGB, we evaluate three variants of GMANet; the results are reported in Table 2.

- (2)

- (3) The rationality of the dilation rate design in the MAM: We present two MAM variants to assess the dilation rates of the dilated convolutions within the MAM. The first variant uses dilation rates of 1, 3, 5, and 7, as in our network. The second variant uses dilation rates of 3, 5, 7, and 9, with all other components unchanged. The quantitative results are presented in Table 4.

- (4) The efficacy of the Transformer (TF) and Channel Attention (CA) components in the ARM: We assess these components with two ARM variants: (1) “w/o TF”, which excludes the transformer blocks, and (2) “w/o CA”, which omits the channel attention module. The complete ARM, denoted “w/TF + CA”, is included for reference. The quantitative results are presented in Table 5.

- (5) The role of the BCE and IoU losses in the loss function: We designed three variants. The first uses only the BCE loss, the second uses only the IoU loss, and the third uses the hybrid BCE and IoU loss adopted in this paper. The quantitative results are shown in Table 6.

- (6) The relative contribution of the BCE and IoU losses: We set 11 weightings: 0 × BCE + 1 × IoU, 0.1 × BCE + 0.9 × IoU, 0.2 × BCE + 0.8 × IoU, 0.3 × BCE + 0.7 × IoU, 0.4 × BCE + 0.6 × IoU, 0.5 × BCE + 0.5 × IoU, 0.6 × BCE + 0.4 × IoU, 0.7 × BCE + 0.3 × IoU, 0.8 × BCE + 0.2 × IoU, 0.9 × BCE + 0.1 × IoU, and 1 × BCE + 0 × IoU, where 0.5 × BCE + 0.5 × IoU is the loss function used by our method. The quantitative results are shown in Figure 8.
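The weighted BCE and IoU combinations listed in items (5) and (6) can be sketched as follows. This is an illustration under stated assumptions, not the training code: the IoU term is written in a common soft form (one minus the ratio of soft intersection to soft union), which this section does not spell out, and the function names and the toy prediction are hypothetical.

```python
import math


def bce_loss(pred, target, eps=1e-7):
    """Mean binary cross-entropy over flattened probability maps."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total / len(pred)


def iou_loss(pred, target, eps=1e-7):
    """Soft IoU loss (assumed form): 1 - intersection / union."""
    inter = sum(p * t for p, t in zip(pred, target))
    union = sum(p + t - p * t for p, t in zip(pred, target))
    return 1.0 - (inter + eps) / (union + eps)


def hybrid_loss(pred, target, w_bce=0.5):
    """Weighted sum w_bce * BCE + (1 - w_bce) * IoU."""
    return (w_bce * bce_loss(pred, target)
            + (1.0 - w_bce) * iou_loss(pred, target))


# The 11 weightings of the ablation: w_bce = 0.0, 0.1, ..., 1.0.
weights = [round(0.1 * i, 1) for i in range(11)]

pred = [0.9, 0.8, 0.2, 0.1]      # toy predicted saliency probabilities
target = [1.0, 1.0, 0.0, 0.0]    # toy binary ground truth
for w in weights:
    print(f"{w:.1f} x BCE + {1 - w:.1f} x IoU = {hybrid_loss(pred, target, w):.4f}")
```

Setting `w_bce` to 0 or 1 recovers the single-loss variants of item (5), and `w_bce=0.5` corresponds to the equal weighting reported as the setting used by the method.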

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Borji, A.; Cheng, M.M.; Jiang, H.; Li, J. Salient object detection: A benchmark. IEEE Trans. Image Process. 2015, 24, 5706–5722.

- Li, G.; Liu, Z.; Shi, R.; Wei, W. Constrained fixation point based segmentation via deep neural network. Neurocomputing 2019, 368, 180–187.

- Fang, Y.; Chen, Z.; Lin, W.; Lin, C.W. Saliency detection in the compressed domain for adaptive image retargeting. IEEE Trans. Image Process. 2012, 21, 3888–3901.

- Wang, Q.; Lin, J.; Yuan, Y. Salient band selection for hyperspectral image classification via manifold ranking. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 1279–1289.

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

- Borji, A.; Cheng, M.M.; Hou, Q.; Jiang, H.; Li, J. Salient object detection: A survey. Comput. Vis. Media 2019, 5, 117–150.

- Wang, W.; Lai, Q.; Fu, H.; Shen, J.; Ling, H.; Yang, R. Salient object detection in the deep learning era: An in-depth survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3239–3259.

- Li, G.; Liu, Z.; Ling, H. ICNet: Information conversion network for RGB-D based salient object detection. IEEE Trans. Image Process. 2020, 29, 4873–4884.

- Li, G.; Liu, Z.; Ye, L.; Wang, Y.; Ling, H. Cross-modal weighting network for RGB-D salient object detection. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 665–681.

- Zou, Z.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. In Proceedings of the IEEE International Conference on Computer Vision, Paris, France, 2–6 October 2023.

- Pimentel, M.A.F.; Clifton, D.A.; Clifton, L.; Tarassenko, L. A review of novelty detection. Signal Process. 2014, 99, 215–249.

- Chandola, V.; Banerjee, A.; Kumar, V. Anomaly detection: A survey. ACM Comput. Surv. 2009, 41, 1–58.

- Madhulatha, T.S. An overview on clustering methods. arXiv 2012, arXiv:1205.1117.

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 770–778.

- Liu, Y.; Zhang, X.Y.; Bian, J.W.; Zhang, L.; Cheng, M.M. SAMNet: Stereoscopically attentive multi-scale network for lightweight salient object detection. IEEE Trans. Image Process. 2021, 30, 3804–3814.

- Zhao, J.X.; Liu, J.J.; Fan, D.P.; Cao, Y.; Yang, J.; Cheng, M.M. EGNet: Edge guidance network for salient object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 8779–8788.

- Itti, L.; Koch, C.; Niebur, E. A model of saliency-based visual attention for rapid scene analysis. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 1254–1259.

- Li, C.; Yuan, Y.; Cai, W.; Xia, Y.; Feng, D.D. Robust saliency detection via regularised random walks ranking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2710–2717.

- Yuan, Y.; Li, C.; Kim, J.; Cai, W.; Feng, D.D. Reversion correction and regularised random walk ranking for saliency detection. IEEE Trans. Image Process. 2017, 27, 1311–1322.

- Kim, J.; Han, D.; Tai, Y.W.; Kim, J. Salient region detection via high-dimensional color transform and local spatial support. IEEE Trans. Image Process. 2015, 25, 9–23.

- Zhou, L.; Yang, Z.; Zhou, Z.; Hu, D. Salient region detection using diffusion process on a two-layer sparse graph. IEEE Trans. Image Process. 2017, 26, 5882–5894.

- Peng, H.; Li, B.; Ling, H.; Hu, W.; Xiong, W.; Maybank, S.J. Salient object detection via structured matrix decomposition. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 818–832.

- Zhou, Y.; Huo, S.; Xiang, W.; Hou, C.; Kung, S.Y. Semi-supervised salient object detection using a linear feedback control system model. IEEE Trans. Cybern. 2018, 49, 1173–1185.

- Liang, M.; Hu, X. Feature selection in supervised saliency prediction. IEEE Trans. Cybern. 2014, 45, 914–926.

- Liu, J.J.; Hou, Q.; Cheng, M.M.; Feng, J.; Jiang, J. A simple pooling-based design for real-time salient object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 3917–3926.

- Zhao, X.; Pang, Y.; Zhang, L.; Lu, H.; Zhang, L. Suppress and balance: A simple gated network for salient object detection. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 35–51.

- Ma, M.; Xia, C.; Li, J. Pyramidal feature shrinking for salient object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; AAAI Press: Palo Alto, CA, USA, 2021; pp. 2311–2318.

- Qin, X.; Zhang, Z.; Huang, C.; Gao, C.; Dehghan, M.; Jagersand, M. Basnet: Boundary-aware salient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 7479–7489.

- Zhang, L.; Li, A.; Zhang, Z.; Yang, K. Global and local saliency analysis for the extraction of residential areas in high-spatial-resolution remote sensing image. IEEE Trans. Geosci. Remote Sens. 2016, 54, 3750–3763.

- Li, C.; Cong, R.; Hou, J.; Zhang, S.; Qian, Y.; Kwong, S. Nested network with two-stream pyramid for salient object detection in optical remote sensing images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9156–9166.

- Li, Q.; Mou, L.; Liu, Q.; Wang, Y.; Zhu, X.X. HSF-Net: Multiscale deep feature embedding for ship detection in optical remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2018, 56, 7147–7161.

- Zhang, Q.; Cong, R.; Li, C.; Cheng, M.M.; Fang, Y.; Cao, X.; Zhao, Y.; Kwong, S. Dense Attention Fluid Network for Salient Object Detection in Optical Remote Sensing Images. IEEE Trans. Image Process. 2020, 30, 1305–1317.

- Li, C.; Cong, R.; Guo, C.; Li, H.; Zhang, C.; Zheng, F.; Zhao, Y. A parallel down-up fusion network for salient object detection in optical remote sensing images. Neurocomputing 2020, 415, 411–420.

- Tu, Z.; Wang, C.; Li, C.; Fan, M.; Zhao, H.; Luo, B. ORSI salient object detection via multiscale joint region and boundary model. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5607913.

- Li, G.; Liu, Z.; Lin, W.; Ling, H. Multi-content complementation network for salient object detection in optical remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5614513.

- Dong, C.; Liu, J.; Xu, F.; Liu, C. Ship Detection from Optical Remote Sensing Images Using Multi-Scale Analysis and Fourier HOG Descriptor. Remote Sens. 2019, 11, 1529.

- Zhang, Q.; Zhang, L.; Shi, W.; Liu, Y. Airport Extraction via Complementary Saliency Analysis and Saliency-Oriented Active Contour Model. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1085–1089.

- Peng, D.; Guan, H.; Zang, Y.; Bruzzone, L. Full-level domain adaptation for building extraction in very-high-resolution optical remote-sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–17.

- Liu, Z.; Zhao, D.; Shi, Z.; Jiang, Z. Unsupervised Saliency Model with Color Markov Chain for Oil Tank Detection. Remote Sens. 2019, 11, 1089.

- Jing, M.; Zhao, D.; Zhou, M.; Gao, Y.; Jiang, Z.; Shi, Z. Unsupervised oil tank detection by shape-guide saliency model. IEEE Trans. Geosci. Remote Sens. 2018, 16, 477–481.

- Dong, B.; Wang, W.; Fan, D.P.; Li, J.; Fu, H.; Shao, L. Polyp-pvt: Polyp segmentation with pyramid vision transformers. arXiv 2021, arXiv:2108.06932.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 7132–7141.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Li, G.; Liu, Z.; Bai, Z.; Lin, W.; Ling, H. Lightweight Salient Object Detection in Optical Remote Sensing Images via Feature Correlation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5617712.

- Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

- Xie, S.; Tu, Z. Holistically-Nested Edge Detection. arXiv 2015, arXiv:1504.06375.

- Li, G.; Liu, Z.; Chen, M.; Bai, Z.; Lin, W.; Ling, H. Hierarchical alternate interaction network for RGB-D salient object detection. IEEE Trans. Image Process. 2021, 30, 3528–3542.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019, 32.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Fan, D.P.; Cheng, M.M.; Liu, Y.; Li, T.; Borji, A. Structure-measure: A new way to evaluate foreground maps. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 4548–4557.

- Achanta, R.; Hemami, S.; Estrada, F.; Susstrunk, S. Frequency-tuned salient region detection. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1597–1604.

- Fan, D.P.; Gong, C.; Cao, Y.; Ren, B.; Cheng, M.M.; Borji, A. Enhanced-alignment measure for binary foreground map evaluation. In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; AAAI Press: Menlo Park, CA, USA, 2018; pp. 698–704.

- Hou, Q.; Cheng, M.; Hu, X.; Borji, A.; Tu, Z.; Torr, P. Deeply Supervised Salient Object Detection with Short Connections. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 815.

- Hu, X.; Zhu, L.; Qin, J.; Fu, C.W.; Heng, P.A. Recurrently aggregating deep features for salient object detection. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence (AAAI), New Orleans, LA, USA, 2–7 February 2018.

- Deng, Z.; Hu, X.; Zhu, L.; Xu, X.; Qin, J.; Han, G.; Heng, P.A. R3net: Recurrent residual refinement network for saliency detection. In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; AAAI Press: Menlo Park, CA, USA, 2018; pp. 684–690.

- Chen, Z.; Xu, Q.; Cong, R.; Huang, Q. Global Context-Aware Progressive Aggregation Network for Salient Object Detection. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; AAAI Press: Menlo Park, CA, USA, 2020; pp. 10599–10606.

- Pang, Y.; Zhao, X.; Zhang, L.; Lu, H. Multi-Scale Interactive Network for Salient Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 9413–9422.

- Zhou, H.; Xie, X.; Lai, J.; Chen, Z.; Yang, L. Interactive two-stream decoder for accurate and fast saliency detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 9141–9150.

- Li, J.; Pan, Z.; Liu, Q.; Wang, Z. Stacked U-shape network with channel-wise attention for salient object detection. IEEE Trans. Multimed. 2020, 23, 1397–1409.

- Xu, B.; Liang, H.; Liang, R.; Chen, P. Locate globally, segment locally: A progressive architecture with knowledge review network for salient object detection. Proc. AAAI Conf. Artif. Intell. 2021, 35, 3004–3012.

- Zhang, L.; Liu, Y.; Zhang, J. Saliency detection based on self-adaptive multiple feature fusion for remote sensing images. Int. J. Remote Sens. 2019, 40, 8270–8297.

- Gao, S.-H.; Tan, Y.-Q.; Cheng, M.-M.; Lu, C.; Chen, Y.; Yan, S. Highly efficient salient object detection with 100k parameters. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 702–721.

- Li, G.; Liu, Z.; Zeng, D.; Lin, W.; Ling, H. Adjacent context coordination network for salient object detection in optical remote sensing images. IEEE Trans. Cybern. 2022, 53, 526–538.

- Lin, Y.; Sun, H.; Liu, N.; Bian, Y.; Cen, J.; Zhou, H. A lightweight multi-scale context network for salient object detection in optical remote sensing images. In Proceedings of the 2022 26th International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2022; pp. 238–244.

| Methods | Type | Speed | | EORSSD [34] | | | | | | ORSSD [32] | | | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| RRWR [20] | T.N. | 0.3 | - | 0.5997 | 0.4496 | 0.2906 | 0.3347 | 0.5696 | 0.1677 | 0.6837 | 0.5950 | 0.4254 | 0.4874 | 0.7034 | 0.1323 |

| HDCT [22] | T.N. | 7 | - | 0.5976 | 0.5992 | 0.1891 | 0.2663 | 0.5197 | 0.1087 | 0.6196 | 0.5776 | 0.2617 | 0.3720 | 0.6289 | 0.1309 |

| DSG [23] | T.N. | 0.6 | - | 0.7196 | 0.6630 | 0.4774 | 0.5659 | 0.7573 | 0.1041 | 0.7196 | 0.6630 | 0.4774 | 0.5659 | 0.7573 | 0.1041 |

| SMD [24] | T.N. | - | - | 0.7112 | 0.6469 | 0.4297 | 0.4094 | 0.6428 | 0.0770 | 0.7645 | 0.7075 | 0.5277 | 0.5567 | 0.7680 | 0.0715 |

| RCRR [21] | T.N. | 0.3 | - | 0.6013 | 0.4495 | 0.2907 | 0.3349 | 0.5646 | 0.1644 | 0.6851 | 0.5945 | 0.4255 | 0.4876 | 0.6959 | 0.1276 |

| DSS [56] | C.N. | 8 | 62.23 | 0.7874 | 0.7159 | 0.5393 | 0.4613 | 0.6948 | 0.0186 | 0.8260 | 0.7838 | 0.6536 | 0.6203 | 0.8119 | 0.0363 |

| RADF [57] | C.N. | 7 | 62.54 | 0.8189 | 0.7811 | 0.6296 | 0.4954 | 0.7281 | 0.0168 | 0.8258 | 0.7876 | 0.6256 | 0.5726 | 0.7709 | 0.0382 |

| R3Net [58] | C.N. | 2 | 56.16 | 0.8192 | 0.7710 | 0.5742 | 0.4181 | 0.6477 | 0.0171 | 0.8142 | 0.7824 | 0.7060 | 0.7377 | 0.8721 | 0.0404 |

| PoolNet [27] | C.N. | 25 | 53.63 | 0.8218 | 0.7811 | 0.5778 | 0.4629 | 0.6864 | 0.0210 | 0.8400 | 0.7904 | 0.6641 | 0.6162 | 0.8157 | 0.0358 |

| EGNet [18] | C.N. | 9 | 108.07 | 0.8602 | 0.8059 | 0.6743 | 0.5381 | 0.7578 | 0.0110 | 0.8718 | 0.8431 | 0.7253 | 0.6448 | 0.8276 | 0.0216 |

| GCPA [59] | C.N. | 23 | 67.06 | 0.8870 | 0.8517 | 0.7808 | 0.6724 | 0.8652 | 0.0102 | 0.9023 | 0.8836 | 0.8292 | 0.7853 | 0.9231 | 0.0168 |

| MINet [60] | C.N. | 12 | 47.56 | 0.9040 | 0.8583 | 0.8133 | 0.7707 | 0.9010 | 0.0090 | 0.9038 | 0.8924 | 0.8438 | 0.8242 | 0.9301 | 0.0142 |

| ITSD [61] | C.N. | 16 | 17.08 | 0.9051 | 0.8690 | 0.8114 | 0.7423 | 0.8999 | 0.0108 | 0.9048 | 0.8847 | 0.8376 | 0.8059 | 0.9263 | 0.0166 |

| GateNet [28] | C.N. | 25 | 100.02 | 0.9114 | 0.8731 | 0.8128 | 0.7123 | 0.8755 | 0.0097 | 0.9184 | 0.8967 | 0.8562 | 0.8220 | 0.9307 | 0.0135 |

| SUCA [62] | C.N. | 24 | 117.71 | 0.8988 | 0.8430 | 0.7851 | 0.7274 | 0.8801 | 0.0097 | 0.8988 | 0.8605 | 0.8108 | 0.7745 | 0.9093 | 0.0143 |

| PA-KRN [63] | C.N. | 16 | 141.06 | 0.9193 | 0.8750 | 0.8392 | 0.7995 | 0.9273 | 0.0105 | 0.9240 | 0.8957 | 0.8677 | 0.8546 | 0.9409 | 0.0138 |

| VOS [39] | T.O. | - | - | 0.5083 | 0.3338 | 0.1158 | 0.1843 | 0.4772 | 0.2159 | 0.5367 | 0.3875 | 0.1831 | 0.2633 | 0.5798 | 0.2227 |

| SMFF [64] | T.O. | - | - | 0.5405 | 0.5738 | 0.1012 | 0.2090 | 0.5020 | 0.1434 | 0.5310 | 0.4865 | 0.1383 | 0.2493 | 0.5674 | 0.1854 |

| CMC [41] | T.O. | - | - | 0.5800 | 0.3663 | 0.2025 | 0.2010 | 0.4891 | 0.1057 | 0.6033 | 0.4213 | 0.2904 | 0.3107 | 0.5989 | 0.1267 |

| LVNet [32] | C.O. | 1.4 | - | 0.8645 | 0.8052 | 0.7021 | 0.6308 | 0.8478 | 0.0145 | 0.8813 | 0.8414 | 0.7744 | 0.7500 | 0.9225 | 0.0207 |

| DAFNet [34] | C.O. | 26 | 29.35 | 0.9167 | 0.8688 | 0.7832 | 0.6435 | 0.8155 | 0.0062 | 0.9187 | 0.9027 | 0.8434 | 0.7869 | 0.9189 | 0.0115 |

| MJRBM [36] | C.O. | 32 | 43.54 | 0.9197 | 0.8765 | 0.8135 | 0.7071 | 0.8901 | 0.0099 | 0.9202 | 0.8932 | 0.8432 | 0.8015 | 0.9331 | 0.0163 |

| CSNet [65] | C.O. | 38 | 0.14 | 0.8229 | 0.8486 | 0.5757 | 0.6321 | 0.8293 | 0.0170 | 0.8889 | 0.8920 | 0.7175 | 0.7614 | 0.9070 | 0.0186 |

| SAMNet [17] | C.O. | 44 | 1.33 | 0.8621 | 0.8075 | 0.7010 | 0.6127 | 0.8114 | 0.0134 | 0.8762 | 0.8331 | 0.7294 | 0.6837 | 0.8549 | 0.0219 |

| AccoNet [66] | C.O. | 10.14 | 80.05 | 0.9095 | 0.8638 | 0.8235 | 0.8053 | 0.9450 | 0.0114 | 0.8975 | 0.8656 | 0.8219 | 0.8227 | 0.9415 | 0.0210 |

| CorrNet [46] | C.O. | 100 | 4.09 | 0.8955 | 0.8423 | 0.8043 | 0.7842 | 0.9294 | 0.0131 | 0.8825 | 0.8547 | 0.8054 | 0.8068 | 0.9338 | 0.0238 |

| MSCNet [67] | C.O. | - | - | 0.9010 | 0.8555 | 0.7712 | 0.7448 | 0.9256 | 0.0118 | 0.9198 | 0.8975 | 0.8277 | 0.8355 | 0.9583 | 0.0174 |

| MCCNet [37] | C.O. | 95 | 67.65 | 0.9152 | 0.8714 | 0.8395 | 0.8256 | 0.9527 | 0.0109 | 0.9163 | 0.8836 | 0.8529 | 0.8490 | 0.9551 | 0.0155 |

| Ours | C.O. | 15 | 64.37 | 0.9227 | 0.8746 | 0.8510 | 0.8262 | 0.9623 | 0.0072 | 0.9268 | 0.9069 | 0.8815 | 0.8643 | 0.9714 | 0.0184 |

| No. | Baseline | ARM | GGB | EORSSD [34] | | | | ORSSD [32] | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | ✓ | | | 0.8608 | 0.9494 | 0.9135 | 0.0094 | 0.8979 | 0.9606 | 0.9088 | 0.0194 |

| 2 | ✓ | ✓ | 0.8691 | 0.9561 | 0.9195 | 0.0079 | 0.8999 | 0.9647 | 0.9104 | 0.0152 | |

| 3 | ✓ | ✓ | 0.8630 | 0.9607 | 0.9139 | 0.0091 | 0.9007 | 0.9654 | 0.9174 | 0.0185 | |

| 4 | ✓ | ✓ | ✓ | 0.8745 | 0.9623 | 0.9227 | 0.0072 | 0.9068 | 0.9714 | 0.9268 | 0.0184 |

| Models | EORSSD [34] | | | ORSSD [32] | | |

|---|---|---|---|---|---|---|

| GGB-1 | 0.8688 | 0.9587 | 0.9183 | 0.8939 | 0.9669 | 0.9209 |

| GGB-2(our) | 0.8745 | 0.9623 | 0.9227 | 0.9068 | 0.9714 | 0.9268 |

| Models | EORSSD [34] | | | ORSSD [32] | | |

|---|---|---|---|---|---|---|

| d = 1,3,5,7 (our) | 0.8745 | 0.9623 | 0.9227 | 0.9068 | 0.9714 | 0.9268 |

| d = 3,5,7,9 | 0.8669 | 0.9599 | 0.9194 | 0.9049 | 0.9672 | 0.9144 |
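To make the two dilation-rate settings compared above more concrete, the short sketch below computes the spatial span of a single dilated kernel with the standard relation k_eff = k + (k − 1)(d − 1). The 3 × 3 kernel size is an assumption made for illustration, since the kernel size of the MAM branches is not given in this section, and the helper names are hypothetical.

```python
def effective_kernel(kernel_size, dilation):
    """Span in pixels covered by one dilated convolution kernel."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def same_padding(kernel_size, dilation):
    """Padding that keeps the spatial size unchanged at stride 1 (odd kernels)."""
    return dilation * (kernel_size - 1) // 2


variants = {"d = 1,3,5,7": (1, 3, 5, 7), "d = 3,5,7,9": (3, 5, 7, 9)}
for name, rates in variants.items():
    # Assumed 3x3 kernels in every branch.
    spans = [effective_kernel(3, d) for d in rates]
    print(name, "->", spans)
# d = 1,3,5,7 -> [3, 7, 11, 15]
# d = 3,5,7,9 -> [7, 11, 15, 19]
```

Under this assumption, the second variant has no undilated 3 × 3 branch, so its smallest kernel already spans 7 pixels.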

| Models | EORSSD [34] | | | ORSSD [32] | | |

|---|---|---|---|---|---|---|

| w/o TF | 0.8681 | 0.9599 | 0.9188 | 0.9033 | 0.9612 | 0.9298 |

| w/o CA | 0.8680 | 0.9545 | 0.9175 | 0.8965 | 0.9567 | 0.9162 |

| w/TF + CA(our) | 0.8745 | 0.9623 | 0.9227 | 0.9068 | 0.9714 | 0.9268 |
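For readers unfamiliar with channel attention, the CA component ablated above can be pictured with a squeeze-and-excitation style gate in the spirit of [44]. The sketch below is a generic illustration in plain Python, not the ARM implementation: the exact CA design is not described in this section, and the function name, the weight matrices `w1` and `w2`, and the toy feature map are all hypothetical.

```python
import math


def channel_attention(feature, w1, w2):
    """SE-style gate. feature: [C][H][W]; w1: [C//r][C]; w2: [C][C//r]."""
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature]
    # Excitation: bottleneck layer with ReLU ...
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    # ... followed by an expansion layer with a sigmoid, one gate per channel.
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w2]
    # Rescale: every channel is multiplied by its gate in (0, 1).
    out = [[[v * g for v in r] for r in ch] for ch, g in zip(feature, gates)]
    return out, gates


# Toy input: channel 0 responds strongly, channel 1 is silent.
feature = [[[1.0, 1.0], [1.0, 1.0]],
           [[0.0, 0.0], [0.0, 0.0]]]
w1 = [[1.0, -1.0]]        # hypothetical weights, reduction from 2 channels to 1
w2 = [[2.0], [-2.0]]      # hypothetical weights, expansion back to 2 channels
out, gates = channel_attention(feature, w1, w2)
print([round(g, 4) for g in gates])
```

With these hand-picked weights the active channel receives a gate above 0.5 and the silent one a gate below 0.5, which is the channel reweighting behaviour such a module is meant to learn.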

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huo, L.; Hou, J.; Feng, J.; Wang, W.; Liu, J. Global and Multiscale Aggregate Network for Saliency Object Detection in Optical Remote Sensing Images. Remote Sens. 2024, 16, 624. https://0-doi-org.brum.beds.ac.uk/10.3390/rs16040624
