1. Introduction

Land use/cover (LUC) has changed drastically over the past decades due to urbanization [1,2], with more built-up area appearing to provide space for development. Such changes have also had a series of negative effects on human society, such as increased flood risk [3], environmental deterioration [4], and ecosystem degradation [5]. To better understand these impacts, the LUC changes (LUCC) caused by urbanization need to be quantified accurately. Remote sensing is the latest technique used to estimate LUCC [6,7,8,9], and Landsat imagery acquired by the MSS, TM, ETM+ and OLI sensors has been widely used for this purpose [10] owing to its long record and free availability [11,12].

Quantitative LUCC estimates have mainly been derived from remotely sensed imagery using various classification algorithms [13,14], which can be divided into supervised and unsupervised algorithms [15]. Decision trees, support vector machines (SVM), artificial neural networks (ANN) and the maximum likelihood classifier are supervised classification algorithms [16,17,18], whereas the K-means, fuzzy c-means and AP clustering algorithms are typical unsupervised classification algorithms [19,20,21,22]. These algorithms have been widely used in estimating LUCC with satellite remote sensing. For example, Pal and Mather [23] used SVM to classify land cover with Landsat ETM+ images, and Tong et al. [24] detected urban land changes in Shanghai in 1990, 2000 and 2006 by using an artificial backpropagation neural network (BPN) with Landsat TM images.

Different classification algorithms have been found to have different advantages in classifying different LUC categories; however, none produces perfect classification accuracy for all LUC categories [25,26]. A classification algorithm may perform well for a specific LUC category but poorly for others [27]. For example, SVM with an RBF (radial basis function) kernel has been found to achieve an accuracy of 95% for grassland but only 60.3% for houses [28]; the maximum likelihood classifier achieved a high accuracy for bare soil (98.01%) but a much lower one for built-up area (75%) [26]; and, with k-NN (k-nearest neighbor), the classification accuracy for continuous urban fabric reached 97%, whereas that for cultivated soil was only 49% [29].
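
The category-level figures quoted above are per-class accuracies. As a minimal sketch of how such figures can be computed from reference and predicted labels, the following assumes the per-class accuracy is the fraction of reference samples of a class that were labelled correctly (often called producer's accuracy); the cited studies may use a different definition, and the function names and toy labels are hypothetical.

```python
from collections import Counter

def per_class_accuracy(reference, predicted):
    """Fraction of reference samples of each class that were labelled correctly."""
    total = Counter(reference)
    correct = Counter(r for r, p in zip(reference, predicted) if r == p)
    return {c: correct[c] / total[c] for c in total}

def overall_accuracy(reference, predicted):
    """Fraction of all samples that were labelled correctly."""
    return sum(r == p for r, p in zip(reference, predicted)) / len(reference)

# Toy labels (illustrative only, not data from any cited study).
reference = ["grass"] * 4 + ["house"] * 4
predicted = ["grass", "grass", "grass", "grass", "house", "grass", "house", "grass"]

print(per_class_accuracy(reference, predicted))
print(overall_accuracy(reference, predicted))
```

In this toy case the classifier is perfect for one class and much weaker for the other, which is the kind of imbalance that an overall accuracy figure alone would hide.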

Studying the impact of LUC changes on flooding, particularly in large watersheds, requires higher classification accuracy [30]. A multiple classifier system (MCS) is a more recently developed classification approach that combines the classification results from several different classifiers. The purpose of an MCS is to achieve a better classification result than that obtained with a single classifier [31,32,33,34,35,36]. MCSs can be divided into two categories according to the specific method used to combine the classification results. The first, called a multiple-algorithm MCS, generates the final result by combining the classification results of a group of different classifiers used as base (or component) classifiers and trained with identical training samples. The second, called a single-algorithm MCS, generates the final result from base classifiers built with a single base algorithm. The core of an MCS is the combination of the results provided by different base classifiers; the earliest combination method was majority voting. Since then, further approaches have been proposed for classifier combination, such as the Bayes approach, Dempster-Shafer theory, and the fuzzy integral [37,38,39,40]. Previous studies have shown that MCSs are effective for LUC classification. For example, Dai and Liu [41] constructed an MCS with six base classifiers, i.e., maximum likelihood classifier (ML), support vector machines (SVM), artificial neural networks (ANN), spectral angle mapper (SAM), minimum distance classifier (MD) and decision tree classifier (DTC), combined through a voting strategy. Their results showed that the MCS obtained higher accuracy than its base classifiers. Zhao and Song [42] proposed a weighted multiple-classifier fusion method to classify TM images, and their results showed that the MCS achieved higher classification accuracy. Based on different guiding rules of GNN (granular neural networks), Kumar and Meher [43] proposed an efficient MCS framework with improved performance for LUC classification.
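
Majority voting, the earliest combination method mentioned above, can be sketched in a few lines. The example below is illustrative only: the classifier names and labels are hypothetical, and the tie-breaking rule (fall back to the earliest-listed classifier among the tied labels) is an assumption rather than a rule taken from the cited studies.

```python
from collections import Counter

def majority_vote(predictions):
    """Combine per-classifier label lists (all the same length) by majority vote.

    Ties are broken in favour of the earliest-listed classifier (an assumption).
    """
    combined = []
    for votes in zip(*predictions):
        counts = Counter(votes)
        top = max(counts.values())
        combined.append(next(v for v in votes if counts[v] == top))
    return combined

# Hypothetical labels from three base classifiers for four pixels.
svm = ["built", "water", "forest", "grass"]
c45 = ["built", "water", "grass", "bare"]
ann = ["bare", "water", "forest", "built"]

print(majority_vote([svm, c45, ann]))
```

For the last pixel all three classifiers disagree, so the result depends entirely on the tie-breaking rule; weighted combination schemes are one way to avoid such arbitrary decisions.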

To improve performance, a base classifier included in a multiple classifier system (MCS) should be more accurate than the other classifiers in at least one category, which suggests that base classifiers should be selected from diverse families of pattern recognizers [44]. For an MCS using different classifiers, diversity is measured by the differences among the base classifiers' pattern recognition algorithms [45]. It is generally preferable to combine the advantages of different algorithms based on prior knowledge. However, several different classification algorithms then need to be trained, and they can easily be over-fitted without sufficient prior knowledge [34,46,47,48,49]. Moreover, because the algorithms currently developed for land use/cover classification are relatively limited, the diversity of an MCS can be low, which can further affect its performance. For an MCS based on one classification algorithm, classification accuracy can be improved by combining many diverse classifiers [50,51], which can easily be produced from a large number of sample sets. The disadvantage of this type of MCS is that all base classifiers rely on one classification algorithm, so the differences among classification algorithms are not exploited.

Popular MCS combination techniques include bagging, boosting, random forests, and AdaBoost, which is iterative and convergent in nature [46,52,53,54,55,56]. To obtain more base classifiers with differences, Ghimire and Rogan [54] performed land use/cover classification in a heterogeneous landscape in Massachusetts by comparing three combining techniques, i.e., bagging, boosting, and random forests, with the decision tree algorithm, and their results showed that the MCS performed better than the decision tree classifier. Based on SVM, Khosravi and Beigi [55] used bagging and AdaBoost to build an MCS for classifying a hyperspectral dataset, and their work showed the high capability of MCS in classifying high-dimensional data.
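
Bagging obtains diverse base classifiers from a single algorithm by training each one on a different bootstrap replicate of the training samples. The resampling step can be sketched as follows; the sample identifiers, labels and function name are hypothetical, and training a classifier on each replicate is left out.

```python
import random

def bootstrap_sets(samples, n_sets, seed=0):
    """Draw n_sets bootstrap replicates (sampling with replacement), each as large as the input."""
    rng = random.Random(seed)
    return [[rng.choice(samples) for _ in samples] for _ in range(n_sets)]

# Hypothetical labelled training pixels: (identifier, class label).
labels = ["built", "water", "forest", "grass", "bare", "built"]
training = [("pixel_%d" % i, label) for i, label in enumerate(labels)]

replicates = bootstrap_sets(training, n_sets=3)
for replicate in replicates:
    print(sorted(set(identifier for identifier, _ in replicate)))
```

Because sampling is done with replacement, each replicate typically repeats some samples and omits others, and this is what makes the resulting base classifiers differ from one another.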

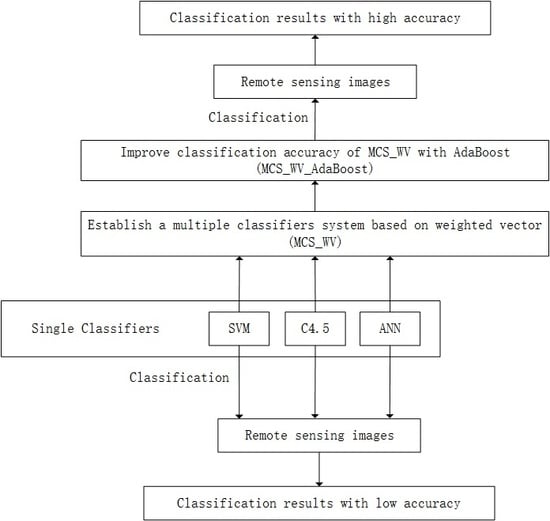

In this paper, a method is proposed to improve the combination of multiple classifier systems and thus increase land use/cover classification accuracy. The method, called MCS_WV_AdaBoost, combines the advantages of multiple classifier systems based on a single classification algorithm and of those based on multiple algorithms. First, an MCS based on weighted vector combination (called MCS_WV) was established to combine the decisions of component classifiers trained by different algorithms; the AdaBoost method was then employed to boost the classification accuracy of MCS_WV (MCS_WV improved by AdaBoost is called MCS_WV_AdaBoost). MCS_WV_AdaBoost inherits the benefits of MCS_WV, which combines the advantages of different classification algorithms and reduces overfitting, resulting in more stable classification performance. In addition, MCS_WV_AdaBoost has more, and more diverse, component classifiers, resulting in a larger improvement in classification accuracy. The proposed method was further used to produce a time series of land cover maps from Landsat images for a highly dynamic, large metropolitan area. It was found to be effective in improving land use/cover classification results.
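
The two ingredients named above can be sketched roughly as follows. This is not the paper's formulation, which is not given in this section: the sketch assumes that each component classifier carries a vector of per-class weights (for example, its per-class accuracy on validation samples) and that boosting uses a SAMME-style multi-class AdaBoost update of the sample weights. All names and numbers are hypothetical.

```python
import math

def weighted_vote(votes, weights):
    """Pick the class with the largest summed weight.

    votes:   one predicted label per component classifier
    weights: one {class: weight} dict per component classifier (assumed form)
    """
    scores = {}
    for label, w in zip(votes, weights):
        scores[label] = scores.get(label, 0.0) + w.get(label, 0.0)
    return max(scores, key=scores.get)

def adaboost_round(sample_weights, reference, predicted, n_classes):
    """One SAMME-style round: return the ensemble weight alpha and the updated,
    normalised sample weights (misclassified samples gain weight)."""
    wrong = [r != p for r, p in zip(reference, predicted)]
    err = sum(w for w, bad in zip(sample_weights, wrong) if bad) / sum(sample_weights)
    err = min(max(err, 1e-10), 1 - 1e-10)  # guard against log(0)
    alpha = math.log((1 - err) / err) + math.log(n_classes - 1)
    raised = [w * math.exp(alpha) if bad else w for w, bad in zip(sample_weights, wrong)]
    total = sum(raised)
    return alpha, [w / total for w in raised]

# Hypothetical per-class weights for SVM, C4.5 and ANN, in that order.
weights = [
    {"built": 0.70, "bare": 0.30},
    {"built": 0.90, "bare": 0.80},
    {"built": 0.75, "bare": 0.40},
]
print(weighted_vote(["bare", "built", "bare"], weights))

alpha, new_weights = adaboost_round([0.25] * 4, ["a", "b", "c", "a"], ["a", "b", "c", "b"], 3)
print(alpha, new_weights)
```

In the voting example two of the three classifiers vote for bare land, yet the single classifier that is assumed to be reliable for built-up land outweighs them, which is how a weighted scheme differs from plain majority voting. In the boosting example the one misclassified sample receives a larger weight, so the next round concentrates on it.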

6. Conclusions

In this paper, a multiple classifier system using SVM, C4.5 and ANN as base classifiers and AdaBoost as the combination strategy, namely MCS_WV_AdaBoost, was proposed to derive land use/cover information from a time series of remotely sensed images spanning 1987 to 2015, with an average interval of three years. In total, 11 land use/cover maps were produced. The following conclusions were drawn.

For the three base classifiers considered, SVM generated the highest average overall classification accuracy (82.85%), followed by ANN (81.77%) and the C4.5 decision tree (80.20%). Each classifier had its own advantages in mapping different LUC types. C4.5 outperformed the other two base classifiers in mapping built-up land, ANN generated the highest classification accuracy for grassland, and SVM performed best in classifying forest and cultivated land. All classifiers did well in mapping water because of its unique spectral characteristics. Using C4.5 or ANN, built-up land and bare land could be clearly separated. These class-specific advantages of the different classifiers were critical for the MCS to generate improved classification accuracy.

The MCS_WV classifier was quite efficient in combining the results from different classifiers, but its ensemble results can be affected by the representativeness of the training samples. If the samples are poorly representative, the MCS_WV classifier can overfit. The AdaBoost algorithm can overcome this shortcoming by training more than one MCS_WV classifier. Compared with an individual MCS_WV classifier, MCS_WV_AdaBoost was more robust and achieved higher classification accuracy.

With MCS_WV_AdaBoost, the classification accuracy was improved for each map, and the average overall accuracy was higher than that of any base classifier, owing to the combined advantages of the base classifiers. The improvement in overall accuracy resulted from the accuracy improvement of each class. MCS_WV_AdaBoost generated higher classification accuracy especially for classes with similar spectral characteristics, such as built-up area and bare land, or cultivated land and grassland.

MCS_WV_AdaBoost inherited most benefits of MCS_WV and AdaBoost. However, it also suffers from some disadvantages. For example, it reduces but does not eliminate the overfitting inherited from AdaBoost; if noise exists in the samples, it tends to overfit. In this paper, three classification algorithms were used to train the base classifiers of MCS_WV; the performance of MCS_WV_AdaBoost with more classification algorithms still needs further study. In summary, with MCS_WV_AdaBoost, a reliable and accurate LUC data set of Guangzhou city was obtained, which could be used in future studies to analyze urban characteristics and the effects of urbanization on the environment and ecosystem.