Analysis of a User Interface Based on Multimodal Interaction to Control a Robotic Arm for EOD Applications

Abstract

1. Introduction

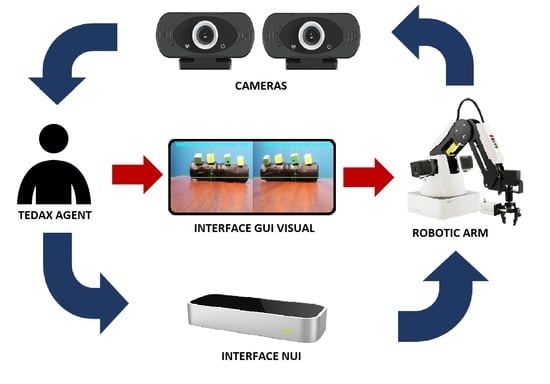

2. Composition of the Interface System

- Visual interface: It consists of two stereo cameras and a graphical interface, shown on the control station display, for selecting a target [26]. Through this interface, the end effector of the robotic arm approaches the selected target automatically, which simplifies control of the robotic arm.

- NUI interface: This interface is built around the Leap Motion sensor, which recognizes the palm of the hand. Its algorithm combines the sensor SDK with a Kalman filter to reduce the hand-tracking error and improve control of the robotic arm [18]. The operator manipulates the robotic arm with hand movements, which the arm replicates.

- GUI interface: This interface is based on control buttons that move the robotic arm one degree of freedom (DOF) at a time, so that the operator can drive the end effector to a desired position.
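The filtering idea behind the NUI interface can be illustrated with a short sketch. It is a minimal example under stated assumptions, not the authors' implementation: the source does not say how the filter is configured or which Kalman variant is used, so this uses a plain linear filter with a constant-position model, applied independently to each axis. The names `ScalarKalman` and `smooth_palm` and the two variance values are hypothetical, and the palm samples are ordinary `(x, y, z)` tuples rather than calls into the sensor SDK.

```python
class ScalarKalman:
    """One-dimensional Kalman filter with a constant-position model."""

    def __init__(self, process_var=1e-3, measurement_var=1e-1):
        self.q = process_var        # how much the true position may drift per step
        self.r = measurement_var    # how noisy each sensor reading is assumed to be
        self.x = None               # current estimate (set by the first reading)
        self.p = 1.0                # variance of the current estimate

    def update(self, z):
        if self.x is None:          # initialise on the first measurement
            self.x = z
            return self.x
        self.p += self.q                    # predict: uncertainty grows
        k = self.p / (self.p + self.r)      # Kalman gain
        self.x += k * (z - self.x)          # correct with the new reading
        self.p *= (1.0 - k)
        return self.x


def smooth_palm(samples, process_var=1e-3, measurement_var=1e-1):
    """Filter a sequence of (x, y, z) palm positions, one filter per axis."""
    filters = [ScalarKalman(process_var, measurement_var) for _ in range(3)]
    return [tuple(f.update(v) for f, v in zip(filters, s)) for s in samples]


# Hypothetical jittery readings around a hand held still at x = 1.0.
noisy = [(1.0 + (0.1 if i % 2 else -0.1), 0.0, 0.0) for i in range(40)]
smoothed = smooth_palm(noisy)
print(smoothed[-1][0])  # much closer to 1.0 than the raw +/-0.1 jitter
```

A larger `measurement_var` smooths more strongly but makes the arm lag behind fast hand movements, so the two variances would have to be tuned on the real sensor data.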

2.1. Proposed Multimodal System

2.1.1. Natural User Interface (NUI)

2.1.2. Visual Interface

1. Load the video as a sequence of frames and initialize with the first one. Each frame is in RGB space and must be converted to HSV, because RGB is more sensitive to lighting changes [29,30]. Select the ROI and collect the hue values at the target to build a color histogram.

2. Create the color histogram. The height of each column represents the number of pixels in the frame region that have that hue. Hue is one of the three values that describe the color of a pixel in the HSV color model.

3. Decide whether the sequence of frames is finished:

   - YES: Terminate the CAMSHIFT algorithm.

   - NO: Continue the tracking process.

4. This is the first step in the CAMSHIFT loop. Compute the probability map, which gives for each pixel of the frame the probability that it belongs to the target, so that the background is isolated. Here r is the vertical location and c is the horizontal location in the frame.

5. Since the target moves, the new centroid must be found. Calculate the moments of order zero and one, which give the center point $(x_c, y_c)$ of the new centroid.

6. Adjust the length of the search window, then move the center of the search window to the center of mass.

7. Decide whether this move converges (use the termination criterion):

   - YES: Go to step 8.

   - NO: Return to step 5.

8. Define the new center of the ROI as the calculated center of mass $(x_c, y_c)$.

9. Obtain the new ROI: calculate the second-order moments and use them to update the direction and adaptive size of the target area.

10. Pass the new ROI on to the next frame of the video.
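The tracking loop described above can be sketched in plain Python. This is an illustration under stated assumptions, not the authors' code: it follows the commonly published CAMSHIFT formulation, in which the centroid is $x_c = M_{10}/M_{00}$, $y_c = M_{01}/M_{00}$ and the orientation and size come from the second-order moments. The function names, the 16-bin histogram, the window layout `(r0, c0, height, width)`, and the convergence threshold are hypothetical choices, and the hue channel is assumed to be already scaled to [0, 1).

```python
import math

def hue_histogram(hue, roi, bins=16):
    """Hue histogram of the ROI, scaled so the most frequent hue maps to 1."""
    r0, c0, h, w = roi
    hist = [0.0] * bins
    for r in range(r0, r0 + h):
        for c in range(c0, c0 + w):
            hist[min(int(hue[r][c] * bins), bins - 1)] += 1
    peak = max(hist)
    return [v / peak for v in hist] if peak else hist

def back_project(hue, hist):
    """Probability map P(r, c): look up each pixel's hue in the histogram."""
    bins = len(hist)
    return [[hist[min(int(v * bins), bins - 1)] for v in row] for row in hue]

def moments(prob, window):
    """Moments up to order two of `prob` inside the window (clipped to the frame)."""
    r0, c0, h, w = window
    m = dict.fromkeys(("m00", "m10", "m01", "m20", "m02", "m11"), 0.0)
    for r in range(max(r0, 0), min(r0 + h, len(prob))):
        for c in range(max(c0, 0), min(c0 + w, len(prob[0]))):
            p = prob[r][c]
            m["m00"] += p
            m["m10"] += c * p        # c: horizontal location
            m["m01"] += r * p        # r: vertical location
            m["m20"] += c * c * p
            m["m02"] += r * r * p
            m["m11"] += r * c * p
    return m

def mean_shift(prob, window, max_iter=20, eps=1):
    """Recentre the window on the centre of mass until it stops moving."""
    r0, c0, h, w = window
    for _ in range(max_iter):
        m = moments(prob, (r0, c0, h, w))
        if m["m00"] == 0:
            break                    # no target probability inside the window
        xc, yc = m["m10"] / m["m00"], m["m01"] / m["m00"]
        new_r0, new_c0 = round(yc - h / 2), round(xc - w / 2)
        moved = abs(new_r0 - r0) + abs(new_c0 - c0)
        r0, c0 = new_r0, new_c0
        if moved < eps:              # termination criterion (assumed form)
            break
    return (r0, c0, h, w)

def orientation_and_size(prob, window):
    """Angle (radians), length and width from the second-order moments.

    Assumes the window contains some target probability (m00 > 0).
    """
    m = moments(prob, window)
    xc, yc = m["m10"] / m["m00"], m["m01"] / m["m00"]
    a = m["m20"] / m["m00"] - xc * xc
    b = 2 * (m["m11"] / m["m00"] - xc * yc)
    d = m["m02"] / m["m00"] - yc * yc
    root = math.hypot(b, a - d)
    theta = 0.5 * math.atan2(b, a - d)
    length = math.sqrt(max(a + d + root, 0.0) / 2)
    width = math.sqrt(max(a + d - root, 0.0) / 2)
    return theta, length, width

# Toy 10x10 hue image: background hue 0.1, a 2x2 target of hue 0.6.
hue = [[0.1] * 10 for _ in range(10)]
for r in (6, 7):
    for c in (5, 6):
        hue[r][c] = 0.6

hist = hue_histogram(hue, roi=(6, 5, 2, 2))
prob = back_project(hue, hist)
window = mean_shift(prob, (3, 2, 5, 5))
print(window)  # (4, 3, 5, 5): the window is now centred on the target
```

In a real pipeline, library routines such as OpenCV's `cv2.calcBackProject` and `cv2.CamShift` would normally replace these hand-written loops; the pure-Python version is only meant to make each step visible.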

2.1.3. Kinematic Control of the Robotic Arm

3. Experimental Setup and Evaluation Methodology

3.1. Multimodal Interface Architecture

Interface System

- Configuration A: A multimodal interface that combines the button-based GUI interface for robot movement with the NUI interface for object manipulation.

- Configuration B: A multimodal interface that combines the visual interface for robot movement with the NUI interface for object manipulation.

- Configuration C: The button-based GUI interface alone, used for both robot movement and object manipulation.

3.2. Human-Robot Workspace

3.3. Test Protocol

- Robot movement: The test of the overall interface starts by moving the robotic arm to the selected position.

- Pick and place of objects: The user picks up 8 objects from a cubic structure and deposits them in a container.

Evaluation Methodology

4. Results and Discussion

4.1. Experimental Evaluation Results

4.2. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

1. Guevara Mamani, J.; Pinto, P.P.; Vilcapaza Goyzueta, D.; Supo Colquehuanca, E.; Sulla Espinoza, E.; Silva Vidal, Y. Compilation and analysis of requirements for the design of an explosive ordnance disposal robot prototype applied in UDEX-arequipa. In Proceedings of the International Conference on Human-Computer Interaction, Online, 24–29 July 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 131–138.

2. Murphy, R.R.; Nomura, T.; Billard, A.; Burke, J.L. Human–Robot Interaction. IEEE Robot. Autom. Mag. 2010, 17, 85–89.

3. Scholtz, J. Theory and evaluation of human robot interactions. In Proceedings of the 36th Annual Hawaii International Conference on System Sciences, Big Island, HI, USA, 6–9 January 2003; p. 10.

4. Lunghi, G.; Marin, R.; Di Castro, M.; Masi, A.; Sanz, P.J. Multimodal Human-Robot Interface for Accessible Remote Robotic Interventions in Hazardous Environments. IEEE Access 2019, 7, 127290–127319.

5. Tidwell, J. Designing Interfaces: Patterns for Effective Interaction Design; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2010.

6. Waibel, A.; Vo, M.T.; Duchnowski, P.; Manke, S. Multimodal interfaces. Artif. Intell. Rev. 1996, 10, 299–319.

7. Turk, M. Multimodal interaction: A review. Pattern Recognit. Lett. 2014, 36, 189–195.

8. Blattner, M.; Glinert, E. Multimodal integration. IEEE Multimed. 1996, 3, 14–24.

9. Postigo-Malaga, M.; Supo-Colquehuanca, E.; Matta-Hernandez, J.; Pari, L.; Mayhua-López, E. Vehicle location system and monitoring as a tool for citizen safety using wireless sensor network. In Proceedings of the 2016 IEEE ANDESCON, Arequipa, Peru, 19–21 October 2016; pp. 1–4.

10. Reeves, L.M.; Lai, J.; Larson, J.A.; Oviatt, S.; Balaji, T.; Buisine, S.; Collings, P.; Cohen, P.; Kraal, B.; Martin, J.C.; et al. Guidelines for multimodal user interface design. Commun. ACM 2004, 47, 57–59.

11. Zubrycki, I.; Granosik, G. Using integrated vision systems: Three gears and leap motion, to control a 3-finger dexterous gripper. In Recent Advances in Automation, Robotics and Measuring Techniques; Springer: Berlin/Heidelberg, Germany, 2014; pp. 553–564.

12. Suárez Fernández, R.A.; Sanchez-Lopez, J.L.; Sampedro, C.; Bavle, H.; Molina, M.; Campoy, P. Natural user interfaces for human-drone multi-modal interaction. In Proceedings of the 2016 International Conference on Unmanned Aircraft Systems (ICUAS), Arlington, VA, USA, 7–10 June 2016; pp. 1013–1022.

13. Jacoff, A.; Virts, A.; Saidi, K. Counter-Improvised Explosive Device Training Using Standard Test Methods for Response Robots; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2015.

14. Gîrbacia, F.; Postelnicu, C.; Voinea, G.D. Towards using natural user interfaces for robotic arm manipulation. In Proceedings of the International Conference on Robotics in Alpe-Adria Danube Region, Kaiserslautern, Germany, 19–21 June 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 188–193.

15. Mizera, C.; Delrieu, T.; Weistroffer, V.; Andriot, C.; Decatoire, A.; Gazeau, J.P. Evaluation of Hand-Tracking Systems in Teleoperation and Virtual Dexterous Manipulation. IEEE Sens. J. 2020, 20, 1642–1655.

16. Artal-Sevil, J.S.; Montañés, J.L. Development of a robotic arm and implementation of a control strategy for gesture recognition through Leap Motion device. In Proceedings of the 2016 Technologies Applied to Electronics Teaching (TAEE), Sevilla, Spain, 22–24 June 2016; pp. 1–9.

17. Marin, G.; Dominio, F.; Zanuttigh, P. Hand gesture recognition with leap motion and kinect devices. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 1565–1569.

18. Vilcapaza Goyzueta, D.; Guevara Mamani, J.; Sulla Espinoza, E.; Supo Colquehuanca, E.; Silva Vidal, Y.; Pinto, P.P. Evaluation of a NUI Interface for an Explosives Deactivator Robotic Arm to Improve the User Experience. In Proceedings of the International Conference on Human-Computer Interaction, Online, 24–29 July 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 288–293.

19. Du, G.; Zhang, P. A Markerless Human–Robot Interface Using Particle Filter and Kalman Filter for Dual Robots. IEEE Trans. Ind. Electron. 2015, 62, 2257–2264.

20. Nadarajah, S.; Sundaraj, K. A survey on team strategies in robot soccer: Team strategies and role description. Artif. Intell. Rev. 2013, 40, 271–304.

21. Du, Y.C.; Taryudi, T.; Tsai, C.T.; Wang, M.S. Eye-to-hand robotic tracking and grabbing based on binocular vision. Microsyst. Technol. 2021, 27, 1699–1710.

22. Taryudi; Wang, M.S. Eye to hand calibration using ANFIS for stereo vision-based object manipulation system. Microsyst. Technol. 2018, 24, 305–317.

23. Bangor, A.; Kortum, P.T.; Miller, J.T. An empirical evaluation of the system usability scale. Int. J. Hum.-Comput. Interact. 2008, 24, 574–594.

24. Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. In Advances in Psychology; Elsevier: Amsterdam, The Netherlands, 1988; Volume 52, pp. 139–183.

25. Mendes, V.; Bruyere, F.; Escoffre, J.M.; Binet, A.; Lardy, H.; Marret, H.; Marchal, F.; Hebert, T. Experience implication in subjective surgical ergonomics comparison between laparoscopic and robot-assisted surgeries. J. Robot. Surg. 2020, 14, 115–121.

26. Andres, M.A.; Pari, L.; Elvis, S.C. Design of a User Interface to Estimate Distance of Moving Explosive Devices with Stereo Cameras. In Proceedings of the 2021 6th International Conference on Image, Vision and Computing (ICIVC), Qingdao, China, 23–25 June 2021; pp. 362–366.

27. Menegaz, H.M.T.; Ishihara, J.Y.; Borges, G.A.; Vargas, A.N. A Systematization of the Unscented Kalman Filter Theory. IEEE Trans. Autom. Control 2015, 60, 2583–2598.

28. Corke, P. Robot arm kinematics. In Robotics, Vision and Control; Springer: Berlin/Heidelberg, Germany, 2017; pp. 193–228.

29. Cheng, Y. Mean shift, mode seeking, and clustering. IEEE Trans. Pattern Anal. Mach. Intell. 1995, 17, 790–799.

30. Sooksatra, S.; Kondo, T. CAMSHIFT-based algorithm for multiple object tracking. In Proceedings of the 9th International Conference on Computing and Information Technology (IC2IT2013), Bangkok, Thailand, 9–10 May 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 301–310.

31. Yu, Y.; Bi, S.; Mo, Y.; Qiu, W. Real-time gesture recognition system based on Camshift algorithm and Haar-like feature. In Proceedings of the 2016 IEEE International Conference on Cyber Technology in Automation, Control, and Intelligent Systems (CYBER), Chengdu, China, 19–22 June 2016; pp. 337–342.

32. Andres Montoya A, P.P.L.; E, S. Assisted operation of a robotic arm based on stereo vision to position it near an explosive device.

33. Gualtieri, L.; Rojas, R.A.; Ruiz Garcia, M.A.; Rauch, E.; Vidoni, R. Implementation of a laboratory case study for intuitive collaboration between man and machine in SME assembly. In Industry 4.0 for SMEs; Palgrave Macmillan: Cham, Switzerland, 2020; pp. 335–382.

| Total Workload | NASA-TLX, Configuration A | NASA-TLX, Configuration B | NASA-TLX, Configuration C | SUS, Configuration A | SUS, Configuration B | SUS, Configuration C |
|---|---|---|---|---|---|---|
| Average | 58.91 | 40.87 | 69.27 | 79.09 | 75.45 | 43.63 |
| Standard deviation | 11.35 | 10.54 | 8.33 | 9.5 | 4.72 | 7.69 |
| Standard error | 4.63 | 3.18 | 3.40 | 3.88 | 2.11 | 3.44 |

| Users | A: Successful | A: Unsuccessful | A: Not Achieved | B: Successful | B: Unsuccessful | B: Not Achieved | C: Successful | C: Unsuccessful | C: Not Achieved |
|---|---|---|---|---|---|---|---|---|---|
| U1 | 4 | 2 | 2 | 4 | 2 | 4 | 1 | 1 | 6 |
| U2 | 4 | 3 | 1 | 5 | 3 | 5 | 1 | 1 | 6 |
| U3 | 0 | 8 | 0 | 6 | 1 | 6 | 1 | 2 | 5 |
| U4 | 2 | 4 | 2 | 6 | 1 | 6 | 1 | 2 | 5 |
| U5 | 2 | 4 | 2 | 4 | 3 | 1 | 2 | 2 | 4 |
| U6 | 3 | 4 | 1 | 5 | 3 | 0 | 0 | 1 | 7 |
| U7 | 5 | 3 | 0 | 3 | 4 | 1 | 2 | 2 | 4 |
| U8 | 1 | 3 | 4 | 3 | 5 | 0 | 0 | 1 | 7 |
| U9 | 2 | 4 | 2 | 4 | 4 | 0 | 1 | 2 | 5 |
| U10 | 3 | 4 | 1 | 3 | 3 | 2 | 1 | 3 | 4 |
| U11 | 2 | 5 | 1 | 4 | 0 | 4 | 1 | 3 | 4 |
| Average | 2.64 | 4.09 | 1.27 | 4.27 | 2.64 | 1.09 | 1 | 2.73 | 4.27 |

A, B, and C denote Configuration A, Configuration B, and Configuration C.


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Goyzueta, D.V.; Guevara M., J.; Montoya A., A.; Sulla E., E.; Lester S., Y.; L., P.; C., E.S. Analysis of a User Interface Based on Multimodal Interaction to Control a Robotic Arm for EOD Applications. Electronics 2022, 11, 1690. https://0-doi-org.brum.beds.ac.uk/10.3390/electronics11111690
