Visualization of Pedestrian Density Dynamics Using Data Extracted from Public Webcams

Abstract

1. Introduction

2. Methodology and Data

2.1. Data Collection

2.2. Georeferencing

2.3. Spatio-Temporal Analysis of Pedestrian Densities

2.3.1. Bivariate Kernel Density Estimate

2.3.2. Space-Time Cube

2.3.3. Space-Time Cube Visualization

2.4. Case Study Data Processing

2.4.1. Marktplatz Coburg, Germany

2.4.2. Victoria Square, Adelaide

3. Case Study

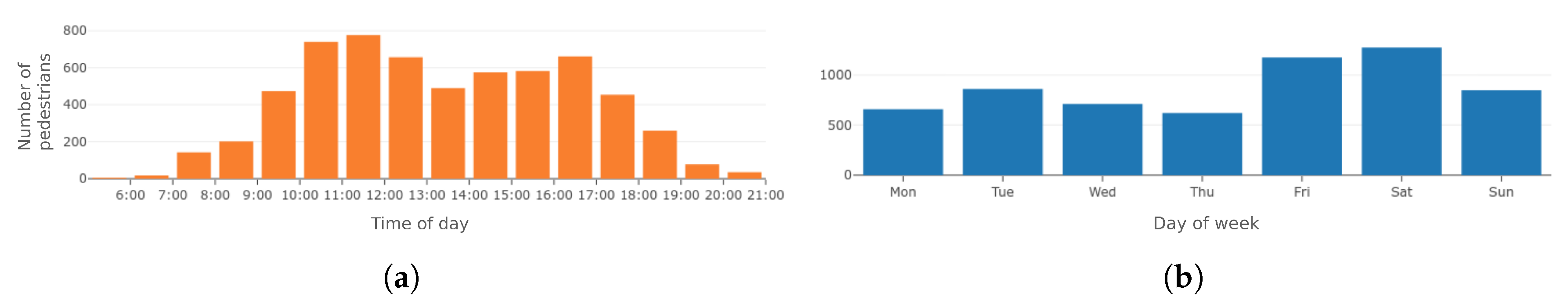

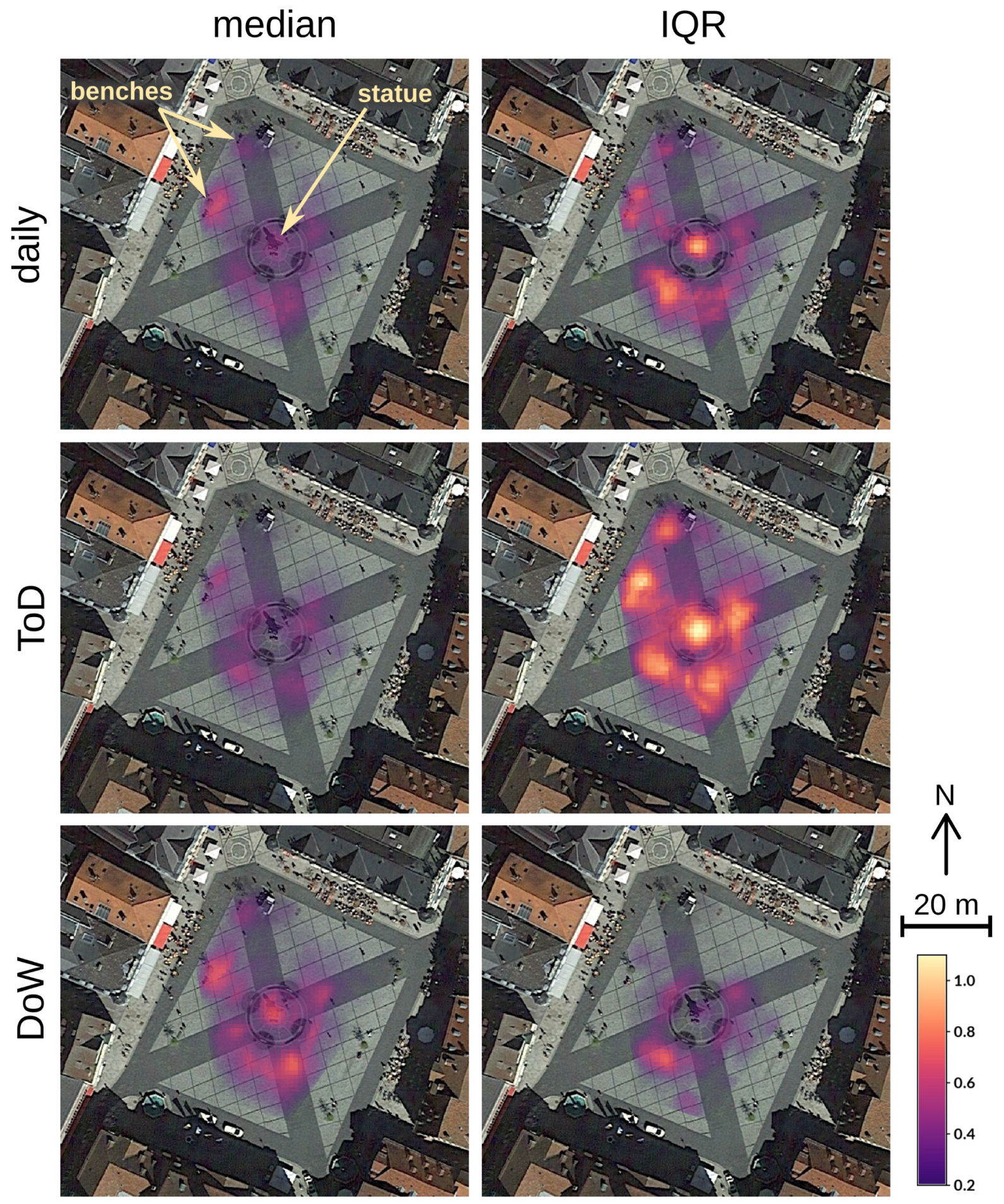

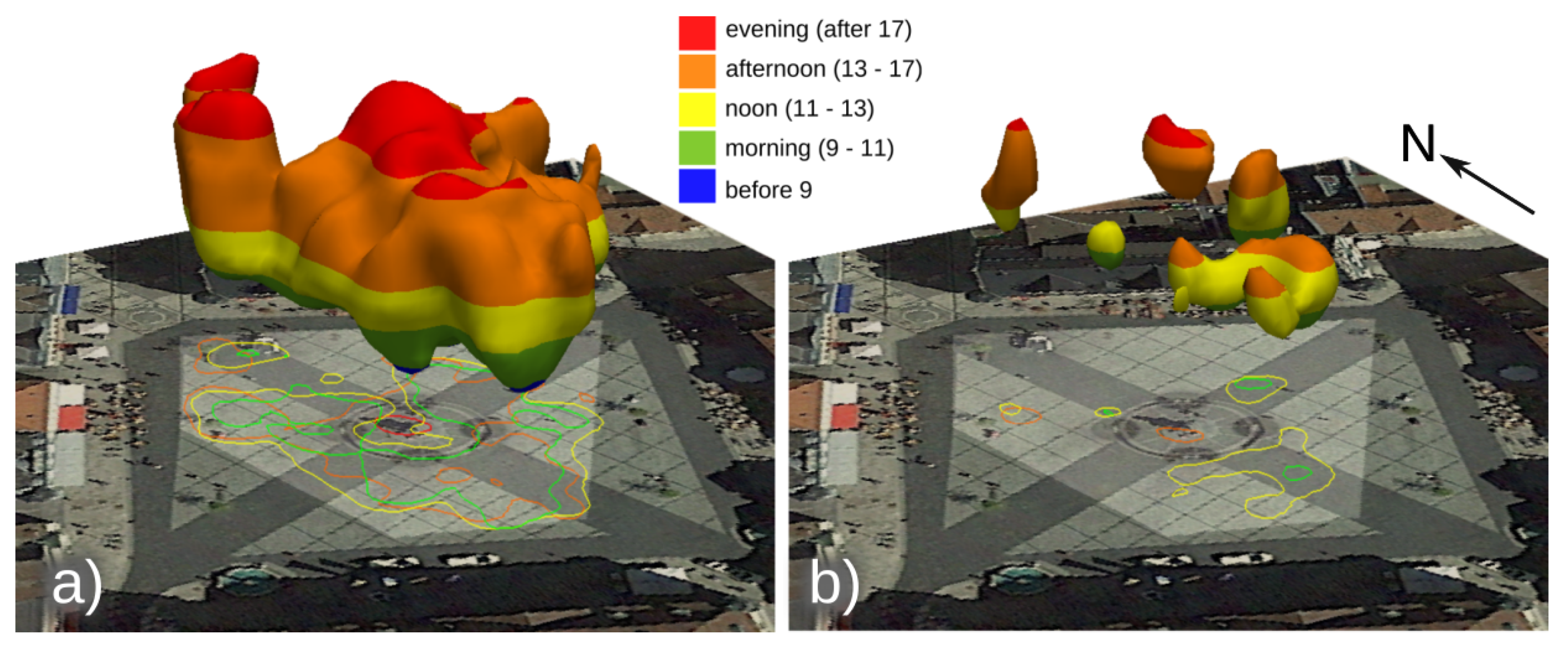

3.1. Marktplatz Coburg, Germany

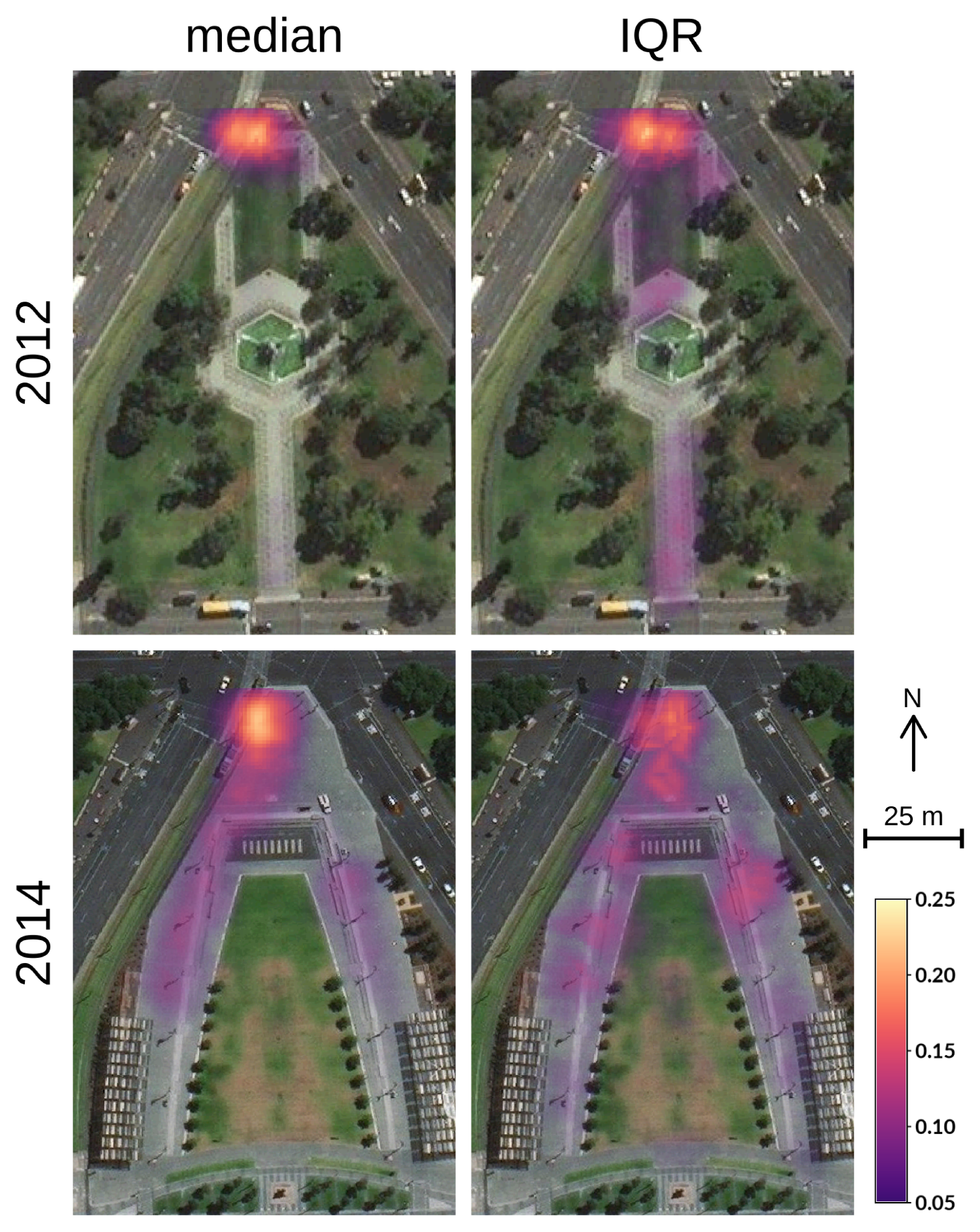

3.2. Victoria Square, Adelaide

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Petrasova, A.; Hipp, J.A.; Mitasova, H. Visualization of Pedestrian Density Dynamics Using Data Extracted from Public Webcams. ISPRS Int. J. Geo-Inf. 2019, 8, 559. https://0-doi-org.brum.beds.ac.uk/10.3390/ijgi8120559
