To evaluate model performance, we designed a framework of three experiments, each of which assessed model fit.

2.4.1. Exp. I: Single Model without Textual Information

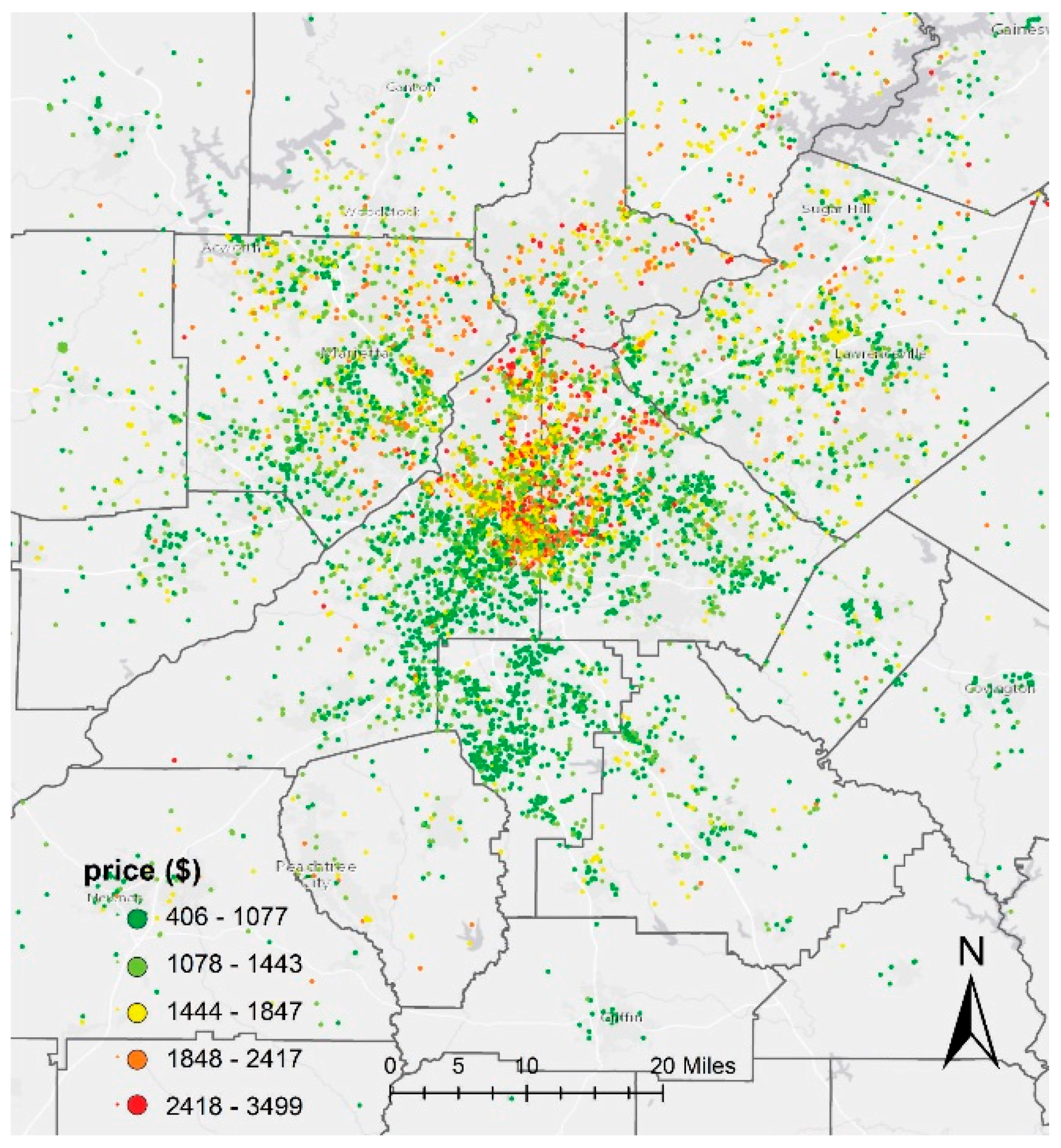

In this experiment, we first modeled rental prices using common interpolation methods, including inverse distance weighting and kriging (Gaussian process regression). Spatial interpolation algorithms follow Tobler’s First Law of Geography [29]; thus, the value at a point of interest is estimated as the weighted sum of values at surrounding data points such that closer neighbors contribute larger weights. Inverse distance weighting (IDW) explicitly relies on the First Law of Geography by setting the weight as the inverse of the distance (Equation (1)).

$$\hat{z}(x_0) = \frac{\sum_{i=1}^{n} d(x_0, x_i)^{-p}\, z(x_i)}{\sum_{i=1}^{n} d(x_0, x_i)^{-p}} \qquad (1)$$

where $\hat{z}(x_0)$ represents the unmeasured value to be calculated at the data point of interest $x_0$, $z(x_i)$ is the observed value at the neighboring data point $x_i$, $d(x_0, x_i)$ is the spatial Euclidean distance between $x_0$ and $x_i$, $p$ is an exponent that influences the weighting of $x_i$, and $n$ is the number of nearest neighbors that contribute to the point of interest. In this experiment, we tested three $p$-values, i.e., the first, second, and third orders, in order to evaluate the influence of the exponent on modeling outcomes. When making predictions, we considered the 12 nearest neighbors.
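The weighting scheme described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the implementation used in the study: the function name `idw_predict`, its parameters, and the toy coordinates are assumptions introduced here, and the small floor on the distance is only a guard against division by zero.

```python
import numpy as np

def idw_predict(train_xy, train_values, query_xy, power=2, n_neighbors=12):
    """Inverse distance weighting using the n nearest neighbors (hypothetical helper)."""
    train_xy = np.asarray(train_xy, dtype=float)
    train_values = np.asarray(train_values, dtype=float)
    query_xy = np.asarray(query_xy, dtype=float)

    # Pairwise Euclidean distances, shape (n_queries, n_train).
    dists = np.linalg.norm(query_xy[:, None, :] - train_xy[None, :, :], axis=2)

    # Keep only the k nearest training points for every query point.
    k = min(n_neighbors, train_xy.shape[0])
    nearest = np.argsort(dists, axis=1)[:, :k]
    near_d = np.take_along_axis(dists, nearest, axis=1)
    near_v = train_values[nearest]

    # Weight = 1 / d^p; the floor avoids division by zero at coincident points.
    weights = 1.0 / np.maximum(near_d, 1e-12) ** power
    return (weights * near_v).sum(axis=1) / weights.sum(axis=1)

# Toy data: two listings with known rents, one query point halfway between them.
xy = np.array([[0.0, 0.0], [2.0, 0.0]])
rent = np.array([100.0, 200.0])
midpoint = idw_predict(xy, rent, [[1.0, 0.0]], power=2)
for p in (1, 2, 3):
    print(p, idw_predict(xy, rent, [[1.0, 0.0]], power=p))
```

Because the query point is equidistant from both samples, every exponent yields the plain average here; the exponent only matters once the neighbors lie at different distances.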

Kriging, or Gaussian process regression, models the variable of interest as a Gaussian process governed by prior covariance functions. Kriging is named after the South African mining engineer D. G. Krige [30], who first presented this method to improve the precision of predictions of gold concentration in ore bodies, and it is known as the best linear unbiased predictor. The main idea of kriging is to analyze the correlations among the residuals of data points. In this method, the value $z(x)$ at location $x$ can be decomposed into a deterministic trend function $m(x)$ and a real-valued residual random function $R(x)$. That is,

$$z(x) = m(x) + R(x)$$

Similar to IDW, kriging also estimates the residual at the point of interest $x_0$ as the weighted sum of residuals at surrounding data points, that is, $\hat{R}(x_0) = \sum_{i=1}^{n} \lambda_i R(x_i)$. The weight $\lambda_i$ is derived from a covariance function or variogram, which is utilized to characterize the residual component. There are three classical versions of kriging, each employing different assumptions: Simple kriging, ordinary kriging, and universal kriging. Simple kriging assumes the stationarity of the first moment over the entire domain with a known mean; ordinary kriging assumes a constant unknown mean only over the search neighborhood; and universal kriging assumes a general polynomial trend model, such as a linear trend model.

In this study, we tested the ability of both ordinary and universal kriging to estimate rental prices. When using universal kriging, we adopted a linear trend model with f(x) = x. In addition, we used Gaussian process regression (GPR) to simultaneously model four variables: Latitude, longitude, bedroom number, and square footage. The covariance functions or kernels considered in our experiments were all Gaussian, i.e., squared exponential covariance functions. Because kriging and GPR are computationally intensive, we sampled 1600 feature points for training the models. These models were trained and validated on the same data splits to ensure that their outcomes were comparable.
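As a rough illustration of Gaussian process regression with a squared exponential kernel on four input variables, the sketch below uses scikit-learn. The data are synthetic stand-ins, and the added `WhiteKernel` noise term, the per-feature length scales, and the sample sizes are assumptions made for this example, not settings reported in the study.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF, WhiteKernel

rng = np.random.default_rng(0)

# Synthetic stand-in for [latitude, longitude, bedrooms, square footage], scaled to [0, 1].
X = rng.uniform(0.0, 1.0, size=(200, 4))
y = 1000 + 500 * X[:, 2] + 800 * X[:, 3] + rng.normal(0, 20, size=200)

# Squared exponential (RBF) kernel with one length scale per feature,
# plus a white-noise term (an assumption) to absorb observation noise.
kernel = RBF(length_scale=np.ones(4)) + WhiteKernel(noise_level=1.0)
gpr = GaussianProcessRegressor(kernel=kernel, normalize_y=True, random_state=0)

# Exact GPR scales cubically with the number of training points,
# which is why subsampling the training set is a common workaround.
gpr.fit(X[:150], y[:150])
mean, std = gpr.predict(X[150:], return_std=True)
print(mean[:3], std[:3])
```

The predictive standard deviation returned alongside the mean is one practical benefit of the Gaussian process view over plain interpolation.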

Next, we selected popular linear, nonlinear (non-ensemble), and ensemble algorithms to model rental price based on location, square footage, and bedroom number. Each family has its own advantages. For problems that are inherently linear, linear models are fast and simple. Nonlinear algorithms can handle relationships that a linear model cannot represent, although their performance may vary depending on the nonlinear characteristics of the feature space. Ensemble models combine several base algorithms to obtain a stronger predictive model than any single base algorithm. We tested several algorithms from each category. The linear algorithms tested in this study consisted of linear, lasso, ridge, elastic net, and stochastic gradient descent regressions. Lasso, ridge, and elastic net models regularize the coefficients so as not to overfit the training dataset. Ridge regression penalizes the sum of squared coefficients, while lasso regression penalizes the sum of their absolute values. Elastic net is a convex combination of the ridge and lasso penalties.
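The effect of the three penalties can be seen by fitting the regularized models side by side with scikit-learn. The synthetic data and the penalty strengths (`alpha`, `l1_ratio`) below are illustrative assumptions, not the values used in the study.

```python
import numpy as np
from sklearn.linear_model import ElasticNet, Lasso, LinearRegression, Ridge

rng = np.random.default_rng(0)

# Synthetic data: only the first two of four features carry signal.
X = rng.normal(size=(300, 4))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + rng.normal(scale=0.5, size=300)

models = {
    "linear": LinearRegression(),                         # no penalty
    "ridge": Ridge(alpha=1.0),                            # penalizes sum of squared coefficients
    "lasso": Lasso(alpha=0.1),                            # penalizes sum of absolute coefficients
    "elastic_net": ElasticNet(alpha=0.1, l1_ratio=0.5),   # mix of both penalties
}

coefs = {name: model.fit(X, y).coef_ for name, model in models.items()}
for name, coef in coefs.items():
    print(f"{name:12s}", np.round(coef, 3))
```

Ridge shrinks all coefficients toward zero, whereas the absolute-value penalty in lasso tends to push the coefficients of uninformative features to exactly zero.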

The nonlinear algorithms (not ensembles) applied in this study included the k-nearest neighbor algorithm (k-NN), regression tree (RT), multilayer perceptron (MLP), and support vector regression (SVR). k-NN is a non-parametric model that does not assume any underlying data distribution; its prediction is based on the k most similar neighbors in the training set. RT splits samples into relatively homogeneous sets based on the most significant splitter for input variables, with the terminal nodes containing the predicted output values. MLP is an artificial neural network composed of an input layer that receives feature signals, an output layer that makes a prediction, and several hidden layers. With nonlinear activation functions, an MLP can learn nonlinear relationships between the features and the target. SVR is based on a support vector machine, using the concept of maximal margin to make predictions.

Finally, the ensemble models tested in this project include AdaBoost, bagged decision trees, random forest, extra trees, and gradient boosting machines. Ensemble models combine decisions from multiple models to improve final performance. These models usually include two general approaches, bagging and boosting. Random forest and bagged decision trees are considered bagging approaches. Bagging usually samples the training data set with replacement, then creates a model for each sample. The results of those multiple models are combined and the average is used for final predictions. AdaBoost and gradient boosting machines are considered to use the boosting approach. Boosting is a sequential approach in which a first model is trained on the entire dataset, and the subsequent models are built by fitting the residuals of previous models. Such iterations allocate higher weights to observations that were poorly predicted by the previous model [31].
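The difference between the two approaches can be made concrete with a hand-rolled sketch built on scikit-learn regression trees. The boosting loop below follows the residual-fitting (gradient boosting) variant; AdaBoost instead reweights observations. The synthetic data, tree depths, number of trees, and learning rate are all assumptions chosen for illustration.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(400, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=400)

# Bagging: fit one tree per bootstrap sample, then average the predictions.
bagged_trees = []
for _ in range(25):
    idx = rng.integers(0, len(X), size=len(X))   # sample with replacement
    bagged_trees.append(DecisionTreeRegressor(max_depth=4).fit(X[idx], y[idx]))
bag_pred = np.mean([tree.predict(X) for tree in bagged_trees], axis=0)

# Boosting (residual-fitting variant): each new tree fits what is still unexplained.
learning_rate = 0.3
boost_pred = np.full(len(y), y.mean())           # start from a constant model
for _ in range(25):
    residual = y - boost_pred
    tree = DecisionTreeRegressor(max_depth=2).fit(X, residual)
    boost_pred += learning_rate * tree.predict(X)

mse_const = np.mean((y - y.mean()) ** 2)
mse_bag = np.mean((y - bag_pred) ** 2)
mse_boost = np.mean((y - boost_pred) ** 2)
print(mse_const, mse_bag, mse_boost)
```

Bagging trains its trees independently and reduces variance by averaging, while boosting trains them in sequence so that each tree corrects the errors left by its predecessors.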

In this project, the top-performing algorithms were recorded and reported. To fine-tune performance, we also conducted a grid search for the top ten models. We conducted two groups of tests: One using the same training and validation split as with the IDW and kriging models, the other using the full dataset, which is consistent with the experiments described below.
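A grid search of the kind mentioned above might look like the following scikit-learn sketch. The choice of estimator, the parameter grid, the synthetic data, and the use of MAE as the selection criterion are assumptions for illustration; the study does not list the grids it searched.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsRegressor

rng = np.random.default_rng(0)

# Synthetic stand-in for location, bedroom number, and square footage.
X = rng.uniform(size=(300, 4))
y = 1000 + 400 * X[:, 2] + 900 * X[:, 3] + rng.normal(scale=25, size=300)

# Every combination in the grid is scored with 5-fold cross-validation.
param_grid = {"n_neighbors": [3, 5, 10], "weights": ["uniform", "distance"]}
search = GridSearchCV(
    KNeighborsRegressor(),
    param_grid,
    scoring="neg_mean_absolute_error",   # scikit-learn maximizes scores, hence the sign
    cv=5,
)
search.fit(X, y)

best_mae = -search.best_score_
print(search.best_params_, round(best_mae, 2))
```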

2.4.2. Exp. II: Single Model Based on Textual Information

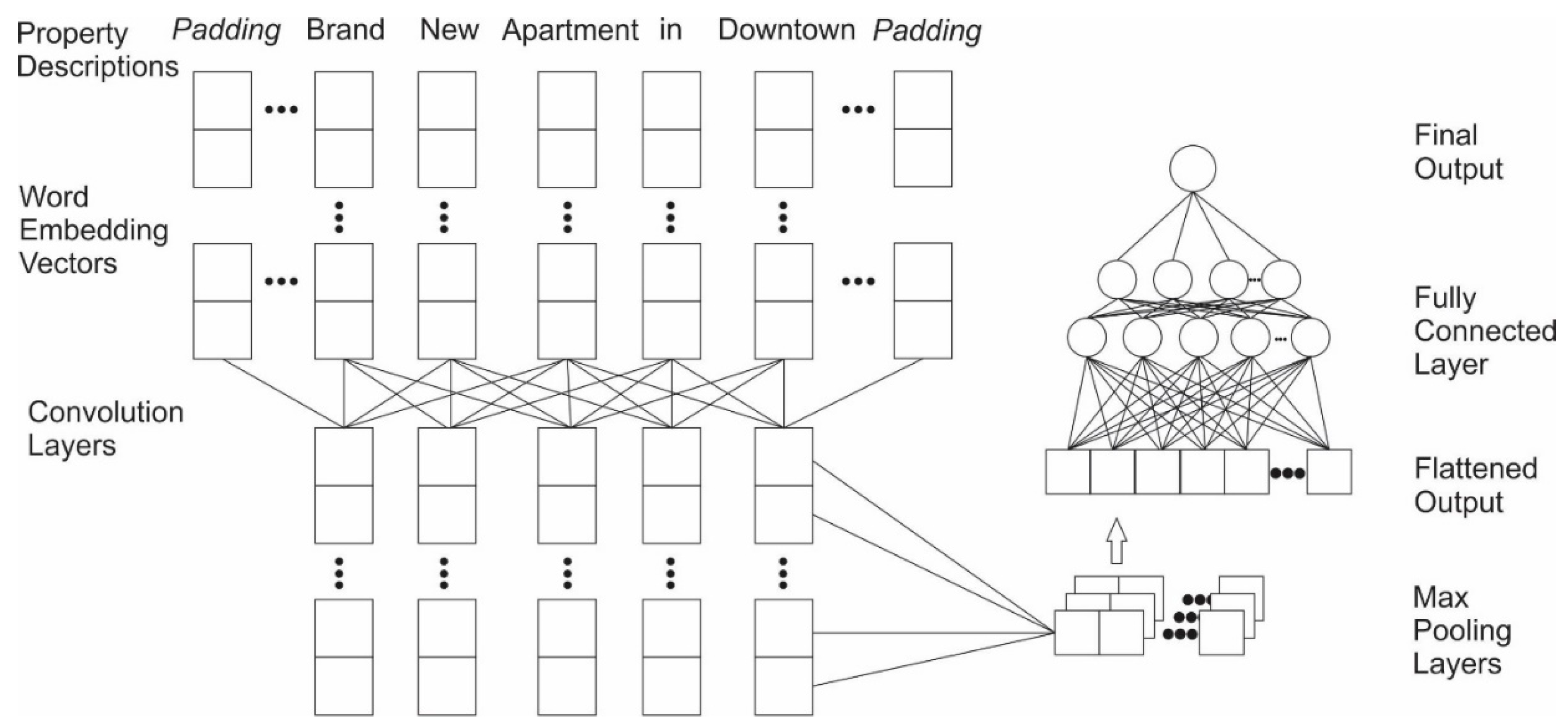

Textual information embedded in the short and long descriptions is critical when modeling rents. For instance, factors such as decorations, neighborhood, amenities and unique upgrades, and interior design cannot be reflected in square footage and bedroom number, yet contribute significantly to rental price. To capture information about room quality for the purpose of predicting price, we used three types of models: Latent semantic analysis (LSA), recurrent neural network (RNN), and convolutional neural network (CNN).

LSA is a technique for creating a vector representation of textual information. We first converted the property descriptions $D$ to a matrix of word counts $T$, with rows being individual properties and columns being all possible words in the descriptions. Then, the matrix $T$ was transformed based on the tf-idf equations below:

$$\mathrm{tfidf}(t, d, D) = \mathrm{tf}(t, d) \times \mathrm{idf}(t, D), \qquad \mathrm{idf}(t, D) = \log \frac{|D|}{|\{d \in D : t \in d\}|}$$

Term frequency (tf) counts the number of times a word $t$ occurs in a property description $d$. The inverse document frequency (idf) is an indicator of how much information the word $t$ provides, measured as the logarithmically scaled inverse of the fraction of documents in $D$ that contain $t$.

Next, we applied singular value decomposition (SVD) to the transformed matrix $T$ to lower its dimensionality. Specifically, we decomposed the matrix $T$ into three matrices, $T = U \Sigma V^{\top}$, where $U$ and $V$ are orthogonal and $\Sigma$ is a diagonal matrix containing $k$ singular values. In this study, we retained only the $k' \ll k$ largest singular values to approximate $T$. The resulting lower-dimensional matrix was used to predict prices.
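The LSA pipeline (word counts, tf-idf weighting, truncated SVD) can be sketched with scikit-learn as follows. The example descriptions and the number of retained components are invented for illustration. Note also that `TfidfVectorizer` applies a smoothed idf and L2 row normalization by default, so its weights differ slightly from the textbook formula.

```python
from sklearn.decomposition import TruncatedSVD
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical property descriptions (not from the study's dataset).
descriptions = [
    "spacious two bedroom apartment close to downtown",
    "renovated studio with granite countertops and pool",
    "two bedroom house five minutes away from airport",
    "luxury apartment with pool gym and hardwood floors",
    "cozy studio close to downtown and public transit",
]

# Rows are properties, columns are vocabulary words, entries are tf-idf weights.
tfidf = TfidfVectorizer()
T = tfidf.fit_transform(descriptions)

# Keep only the leading singular directions to obtain a low-dimensional representation.
svd = TruncatedSVD(n_components=2, random_state=0)
lsa_features = svd.fit_transform(T)

print(T.shape, lsa_features.shape)
```

The rows of `lsa_features` can then be passed to any regressor as the textual representation of each property.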

RNN. Traditional neural networks treat input words independently. However, when modeling textual information, spatial adjacency such as ‘close to downtown’ or ‘five minutes away from airport’ is meaningful; this adjacency cannot be captured by traditional neural networks. RNN, however, can make use of the sequential information. It processes the input words one at a time, with the output for the current input word depending on previous computational results. Such a process can be expressed as:

$$h_t = f(W x_t + M h_{t-1})$$

where $h_t$ is the hidden state at time $t$. It is a function of the current word input $x_t$, multiplied by a weight matrix $W$, and the hidden state derived from previous words at time $t-1$, multiplied by its hidden state weight matrix $M$. By maintaining the internal hidden state $h_t$ and sharing the weight matrices across all time steps, an RNN is able to remember past information and repeatedly occurring patterns.
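The recurrence can be traced step by step in NumPy. This sketch assumes `tanh` as the function applied to the weighted sum and uses random weights and toy dimensions; none of these choices come from the study.

```python
import numpy as np

def rnn_hidden_states(inputs, W, M, h0=None):
    """Return the hidden state after each time step (hypothetical helper).

    Each step computes tanh(W @ x_t + M @ h_prev), reusing W and M throughout.
    """
    h = np.zeros(M.shape[0]) if h0 is None else h0
    states = []
    for x_t in inputs:                     # one word vector per time step
        h = np.tanh(W @ x_t + M @ h)       # new state depends on input and previous state
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
embedding_dim, hidden_size, seq_len = 5, 3, 4
W = rng.normal(scale=0.5, size=(hidden_size, embedding_dim))   # input weights
M = rng.normal(scale=0.5, size=(hidden_size, hidden_size))     # hidden-state weights
words = rng.normal(size=(seq_len, embedding_dim))              # toy word vectors

states = rnn_hidden_states(words, W, M)
print(states.shape)
```

The final row of `states` summarizes the whole sequence and is what a downstream dense layer would typically consume.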

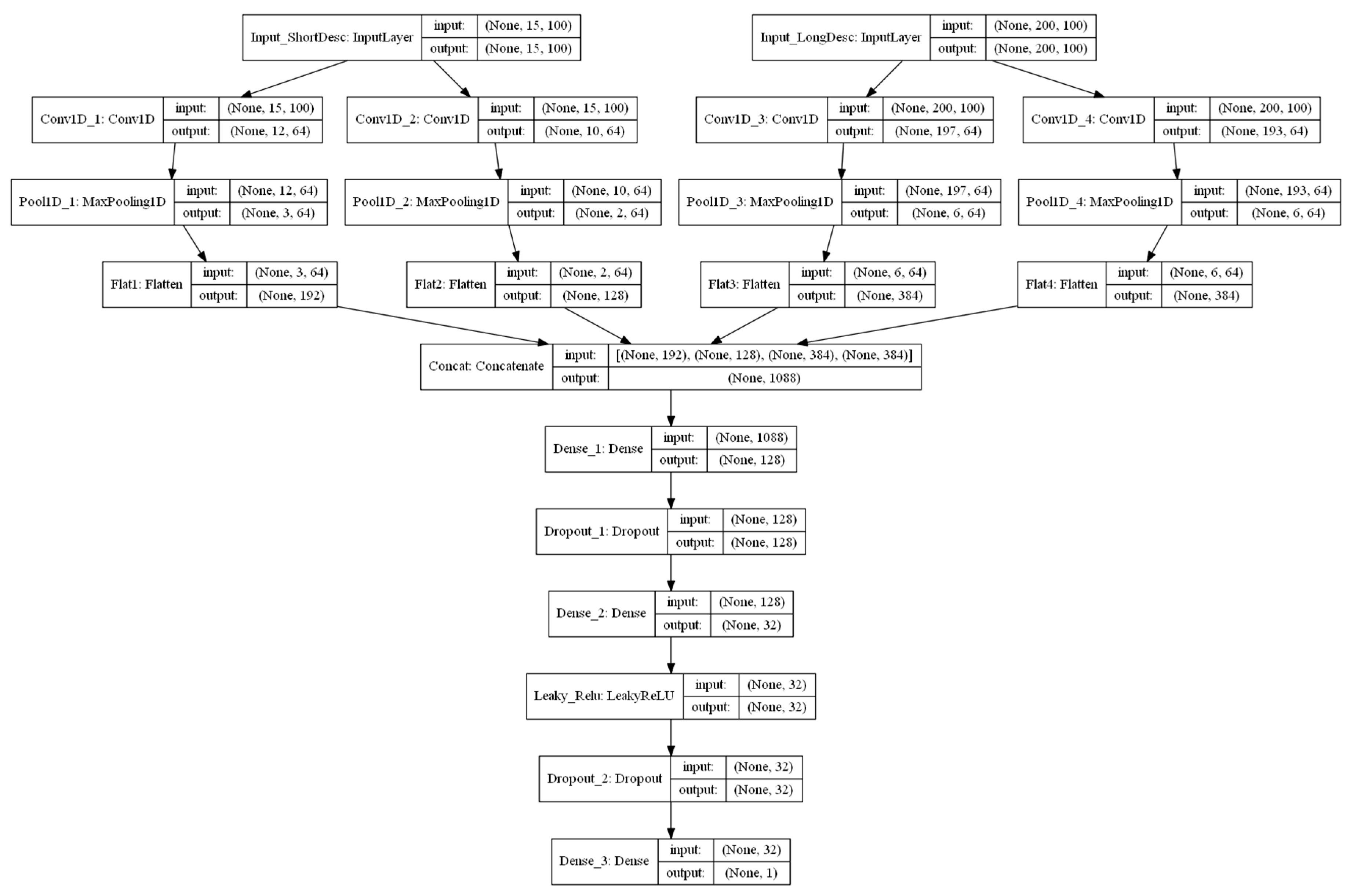

In this study, we first converted each word from the property descriptions into a vector using Global Vectors for Word Representation (GloVe) [32]. This step maps words with similar meanings (e.g., house and apartment) to similar vector representations. Next, because RNN requires each input batch to have the same length, we padded each description with zeros so that the resultant sequences had the same length. We padded short descriptions and long descriptions to lengths of 15 and 200, respectively. Then, the padded short and long descriptions were fed to two long short-term memory (LSTM) networks. LSTM is a particular architecture of RNN designed to mitigate the vanishing gradient problem, which causes the memory of past learned patterns to fade over time [33]. Outputs from the two LSTM models were concatenated and sent to a densely connected network for final predictions. The detailed scheme of the model is provided in Figure 1.
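The zero-padding step can be illustrated with a small NumPy helper. The function name `pad_sequences`, the integer word indices, and the decision to pad and truncate at the end of each sequence are assumptions of this sketch; the study does not state on which side the zeros were added.

```python
import numpy as np

def pad_sequences(sequences, max_len):
    """Pad (or truncate) integer word-index sequences to a fixed length with zeros."""
    padded = np.zeros((len(sequences), max_len), dtype=np.int64)
    for row, seq in enumerate(sequences):
        trimmed = seq[:max_len]                 # drop words beyond max_len
        padded[row, :len(trimmed)] = trimmed    # zeros remain after the last word
    return padded

# Toy word indices standing in for tokenized descriptions.
short_descriptions = [[4, 9, 2], [7]]
long_descriptions = [list(range(1, 251)), [3, 1, 4, 1, 5]]

short_padded = pad_sequences(short_descriptions, max_len=15)
long_padded = pad_sequences(long_descriptions, max_len=200)
print(short_padded.shape, long_padded.shape)
```

Index 0 is reserved for padding here, so real words should be numbered from 1 when building the vocabulary.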

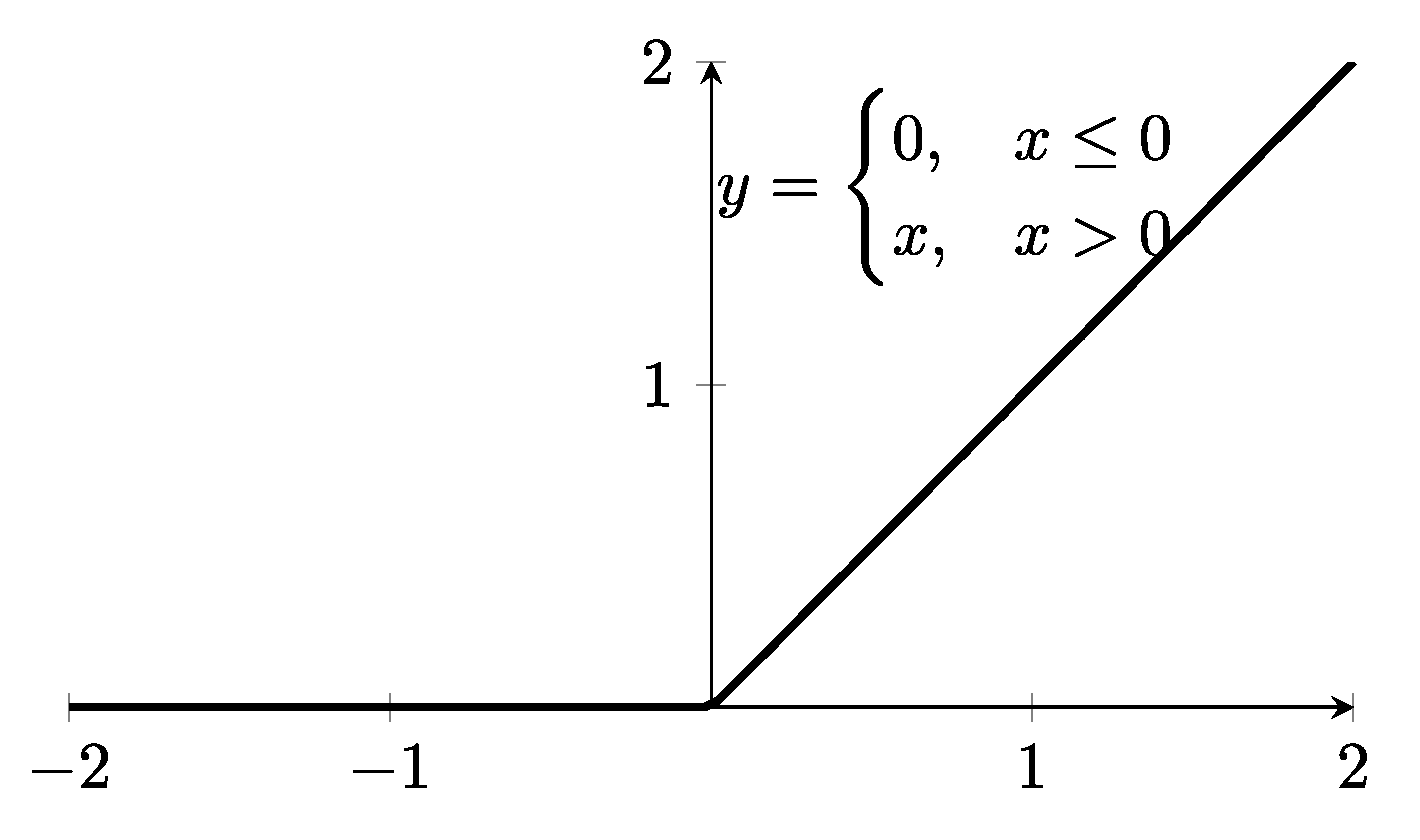

CNN. The convolutional neural network (CNN) is inspired by biological processes in the visual cortex of animals, and thus commonly applied to image recognition. Since a one-dimensional CNN (1D-CNN) scans data in a one-dimensional and sequential manner, it can also be used to capture sequential textual information. We tested a 1D-CNN for capturing relationships between adjacent words. A typical CNN network usually contains a convolutional layer, a pooling layer, and a fully-connected layer. We created our network following this paradigm. First, as with LSTM-RNN, we converted the words in property descriptions into vector spaces using GloVe. Similarly, word sequences were padded to form a homogeneous vector space. We then employed a 1D convolution layer to slide the filter over each vector space. As the core building block of a CNN, the convolution layer partitions input into partially overlapping regions and utilizes convolution operations to explore these regions, which emulates the response of an individual cortical neuron to visual stimulus. We also applied the activation function ReLU (defined as $f(x) = \max(0, x)$) to introduce non-linearity into the network (Figure 2).

Next, we applied a max pooling layer, which takes the maximum value in each one-dimensional window as the output. This process decreases the number of features while retaining the most important information. Finally, we flattened the combined outputs from pooling layers and concatenated layers from both short descriptions and long descriptions to construct a fully-connected network (Figure 3).
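The convolution, ReLU, max pooling, and flattening steps can be followed in plain NumPy. This is a didactic sketch with random filters and toy dimensions; the filter width, number of filters, and pool size are assumptions rather than the study's settings.

```python
import numpy as np

def conv1d_relu(x, filters, bias):
    """Valid 1D convolution along the word axis followed by ReLU.

    x: (seq_len, emb_dim); filters: (n_filters, width, emb_dim); bias: (n_filters,)
    """
    n_filters, width, _ = filters.shape
    out_len = x.shape[0] - width + 1
    out = np.empty((out_len, n_filters))
    for t in range(out_len):
        window = x[t:t + width]            # overlapping region of adjacent words
        out[t] = np.tensordot(filters, window, axes=([1, 2], [0, 1])) + bias
    return np.maximum(out, 0.0)            # ReLU keeps positive responses only

def max_pool1d(x, pool_size):
    """Non-overlapping max pooling along the word axis."""
    usable = (x.shape[0] // pool_size) * pool_size
    return x[:usable].reshape(-1, pool_size, x.shape[1]).max(axis=1)

rng = np.random.default_rng(0)
sequence = rng.normal(size=(10, 4))        # 10 words, 4-dimensional embeddings
filters = rng.normal(size=(6, 3, 4))       # 6 filters spanning 3 adjacent words
bias = np.zeros(6)

conv_out = conv1d_relu(sequence, filters, bias)   # shape (8, 6)
pooled = max_pool1d(conv_out, pool_size=2)        # shape (4, 6)
features = pooled.reshape(-1)                     # flattened vector for a dense layer
print(conv_out.shape, pooled.shape, features.shape)
```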

For both RNN and CNN, we used Adadelta as the optimizer and mean absolute error (MAE) as the loss function. Adadelta is a robust optimizer that adapts the learning rates used in stochastic gradient descent [34]. Model performance was evaluated by cross-validation. In addition, for both RNN and CNN, we used Leaky ReLU (Equation (6)) at the second-to-last layer rather than the common ReLU activation function.

$$f(x) = \begin{cases} x, & x > 0 \\ \alpha x, & x \le 0 \end{cases} \qquad (6)$$

where $\alpha$ is a small positive slope. Leaky ReLU allows a small, non-zero gradient when the neurons are not active. This change kept a small non-zero output rather than zero when the input was negative, which was helpful in Exp. III when we fed outputs from the second-to-last layers into other machine learning models (e.g., random forest).
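The contrast between ReLU and Leaky ReLU on negative inputs is easy to verify numerically. The slope `alpha=0.01` below is a commonly used default and an assumption of this sketch; the study does not report the value it used.

```python
import numpy as np

def relu(x):
    """Standard ReLU: negative inputs are mapped to exactly zero."""
    return np.maximum(np.asarray(x, dtype=float), 0.0)

def leaky_relu(x, alpha=0.01):
    """Leaky ReLU: negative inputs are scaled by a small slope instead of zeroed."""
    x = np.asarray(x, dtype=float)
    return np.where(x > 0, x, alpha * x)

inputs = np.array([-2.0, -0.5, 0.0, 3.0])
relu_out = relu(inputs)
leaky_out = leaky_relu(inputs)
print(relu_out)
print(leaky_out)
```

With ReLU, all negative pre-activations collapse to the same value, whereas Leaky ReLU preserves their ordering, which keeps more information in the features handed to downstream models.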

2.4.3. Exp. III: Combined Models Using both Numeric and Textual Information

We combined numeric information (e.g., location, bedrooms, and square footage) and textual information (short and long property descriptions) derived from Exp. II to jointly model rental prices. We first kept the weights trained in Exp. II, transferring them directly to Exp. III. Notably, the outputs of the second-to-last layers of LSTM and 1D-CNN are 32-dimensional vectors that embed the information derived from apartment descriptions. In order to reduce the dimensionality of these vectors, we extracted the major components using principal component analysis (PCA). A number of models were then trained on the combined features in order to evaluate model performance.
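The feature-combination step can be sketched with scikit-learn as follows. All arrays are random stand-ins for the real numeric features and the 32-dimensional network outputs, and the number of principal components and the downstream random forest settings are assumptions for illustration.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(0)
n = 200

numeric = rng.uniform(size=(n, 4))        # stand-in: latitude, longitude, bedrooms, sqft
text_embed = rng.normal(size=(n, 32))     # stand-in: second-to-last layer outputs
price = 1000 + 600 * numeric[:, 3] + 50 * text_embed[:, 0] + rng.normal(0, 10, n)

# Compress the 32-dimensional text vectors into a few principal components.
pca = PCA(n_components=5)                 # component count is an assumption
text_pcs = pca.fit_transform(text_embed)

# Concatenate numeric features with the compressed text features.
combined = np.hstack([numeric, text_pcs])

model = RandomForestRegressor(n_estimators=50, random_state=0).fit(combined, price)
preview = model.predict(combined[:3])
print(combined.shape, preview)
```

In a real workflow the PCA would be fitted on the training split only and then applied to the validation split to avoid leakage.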

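We used multiple indicators to assess the models; specifically, the mean absolute error (MAE), root-mean-square error (RMSE), and mean absolute percentage error (MAPE). Both MAE and RMSE evaluate absolute error, while MAPE measures relative error [35].

$$\mathrm{MAE} = \frac{1}{N} \sum_{i=1}^{N} \left| O_i - P_i \right|$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left( O_i - P_i \right)^2}$$

$$\mathrm{MAPE} = \frac{100\%}{N} \sum_{i=1}^{N} \left| \frac{O_i - P_i}{O_i} \right|$$

where $O_i$ denotes the observed property price, $P_i$ denotes the estimated property price, and $N$ denotes the number of samples.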
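The three indicators are straightforward to compute with NumPy. The helper names and the toy prices below are illustrative, and MAPE is expressed as a percentage, which is one common convention.

```python
import numpy as np

def mae(observed, predicted):
    """Mean absolute error."""
    return np.mean(np.abs(observed - predicted))

def rmse(observed, predicted):
    """Root-mean-square error."""
    return np.sqrt(np.mean((observed - predicted) ** 2))

def mape(observed, predicted):
    """Mean absolute percentage error, in percent (observed values must be non-zero)."""
    return 100.0 * np.mean(np.abs((observed - predicted) / observed))

# Toy observed and estimated rents.
observed = np.array([1000.0, 1500.0, 2000.0])
predicted = np.array([1100.0, 1400.0, 2000.0])

print(mae(observed, predicted), rmse(observed, predicted), mape(observed, predicted))
```

RMSE is never smaller than MAE on the same data because squaring emphasizes large errors, which is why the two are usually reported together.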