Building Change Detection Using a Shape Context Similarity Model for LiDAR Data

Abstract

1. Introduction

2. Materials and Methods

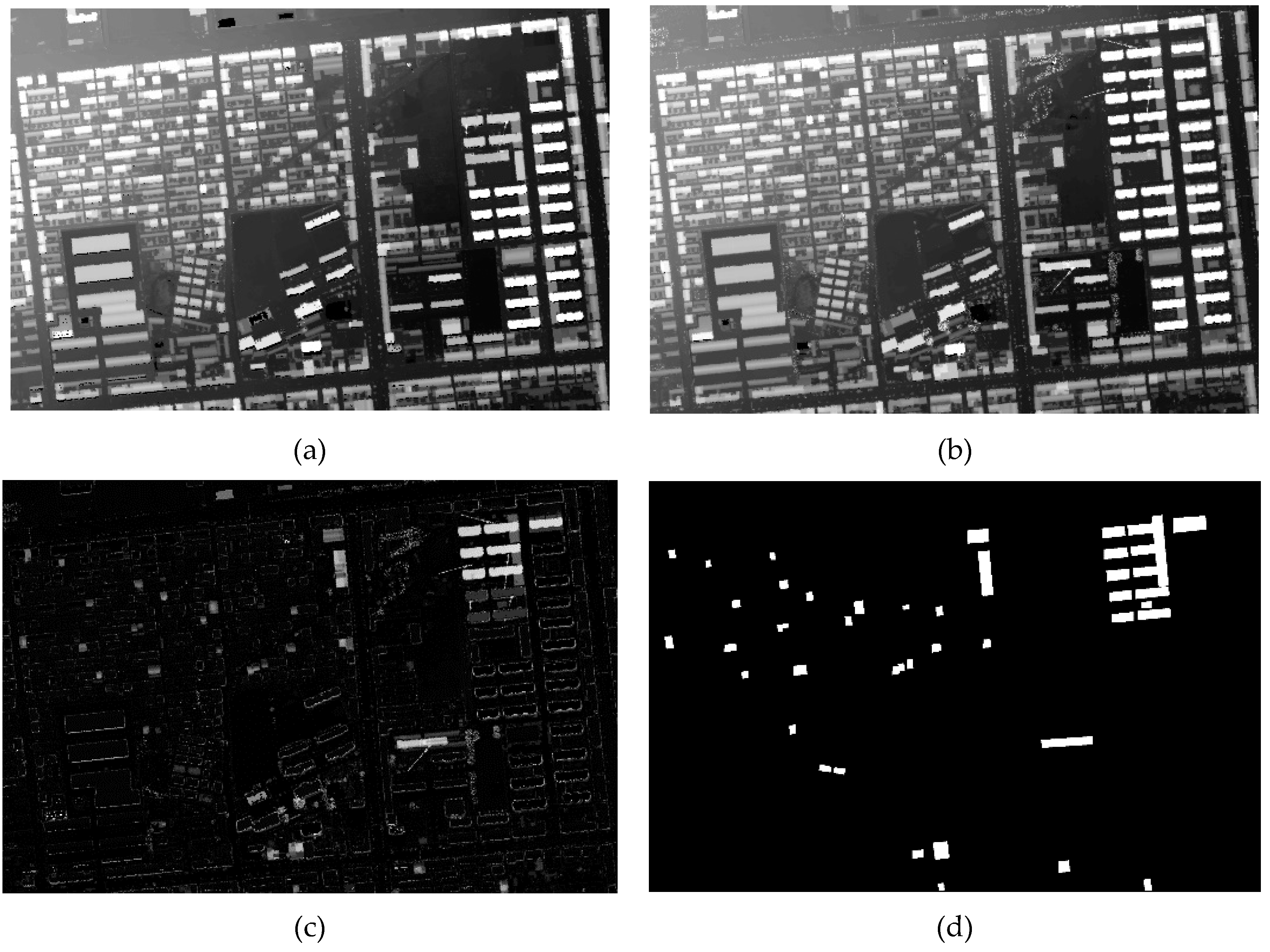

2.1. Data

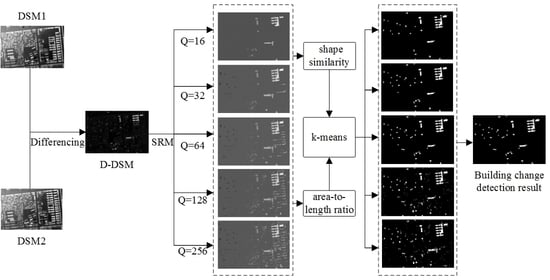

2.2. Methodology

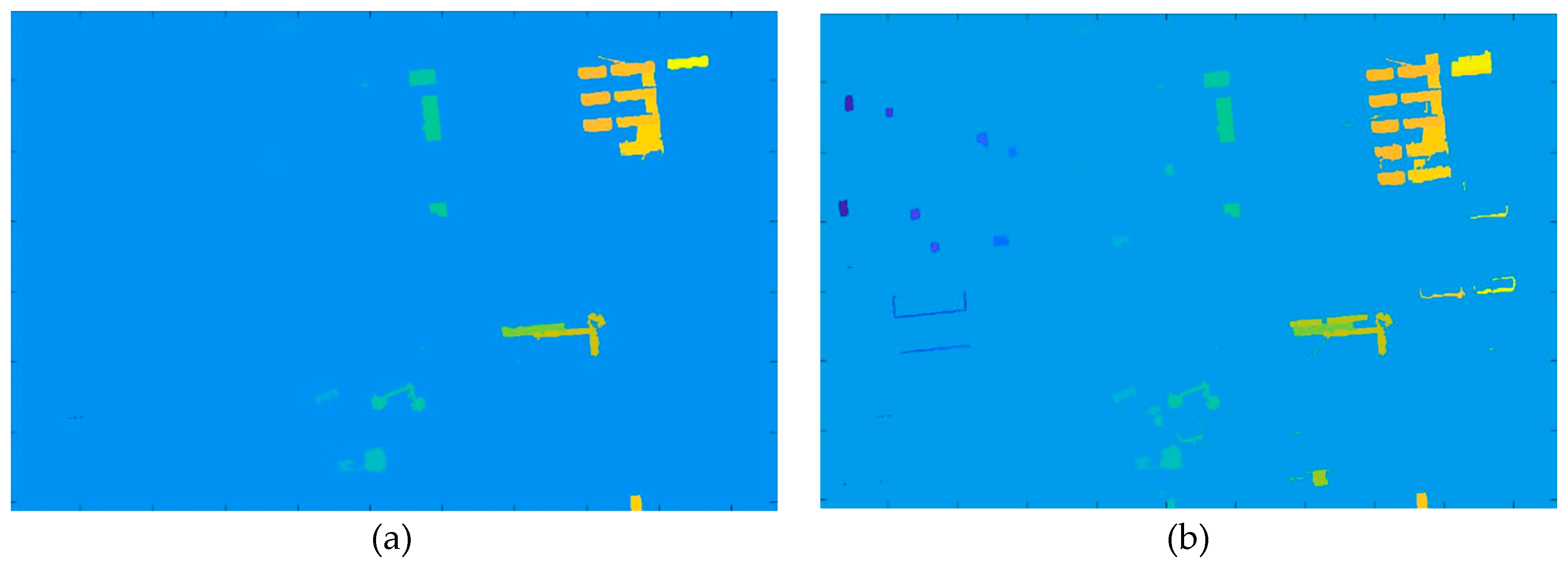

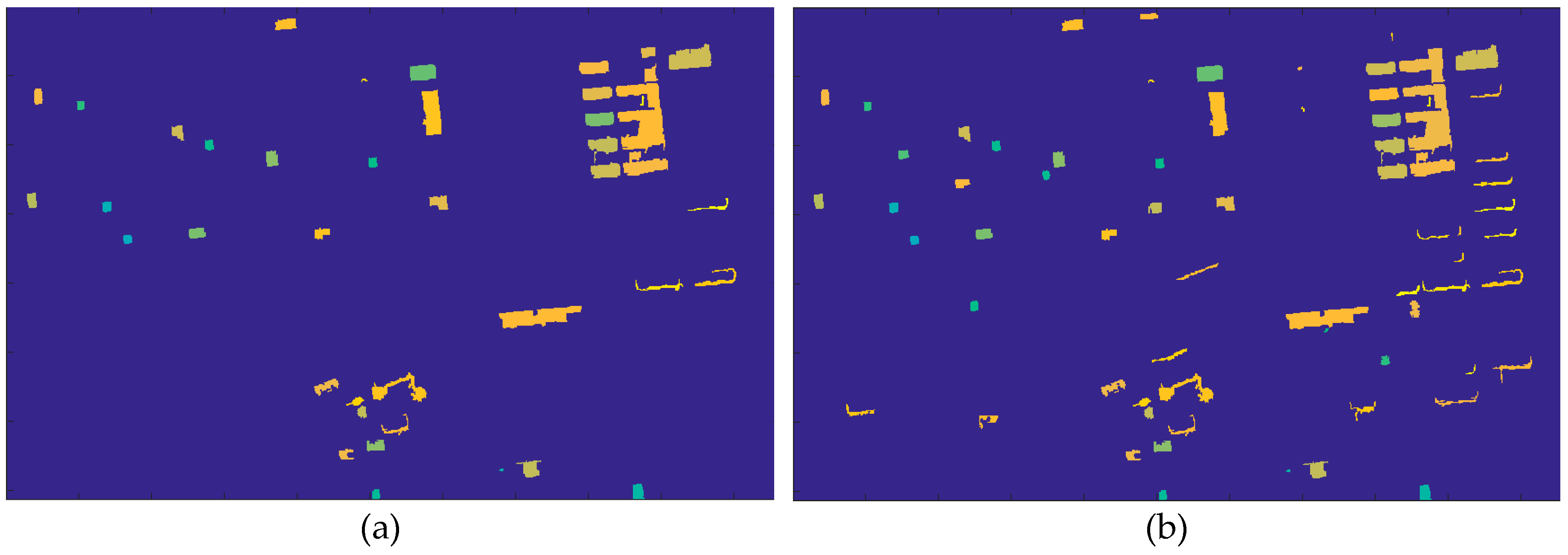

2.2.1. Segmentation of D-DSM Using SRM

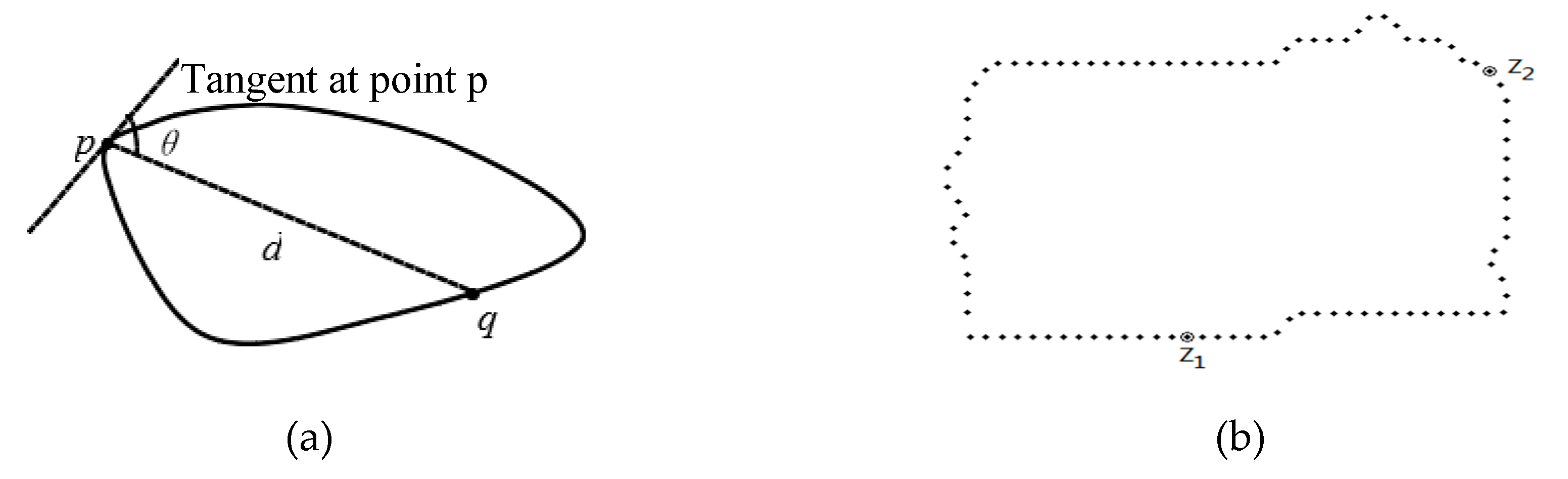

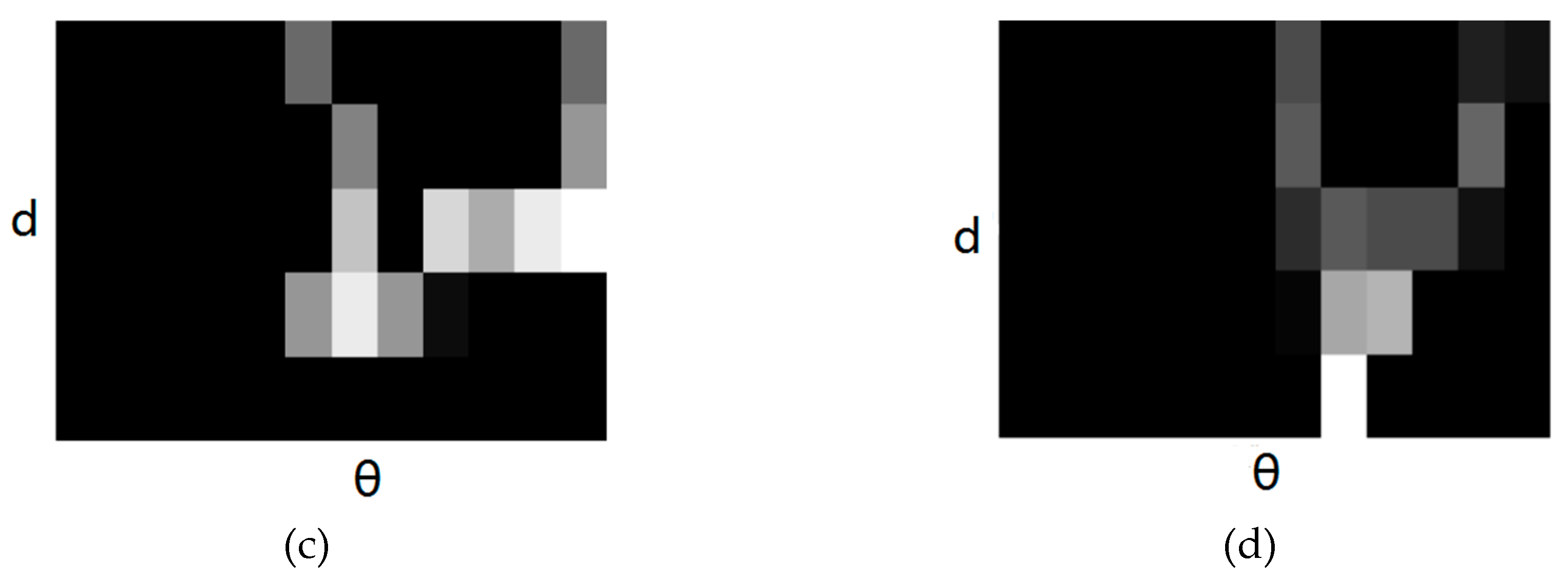

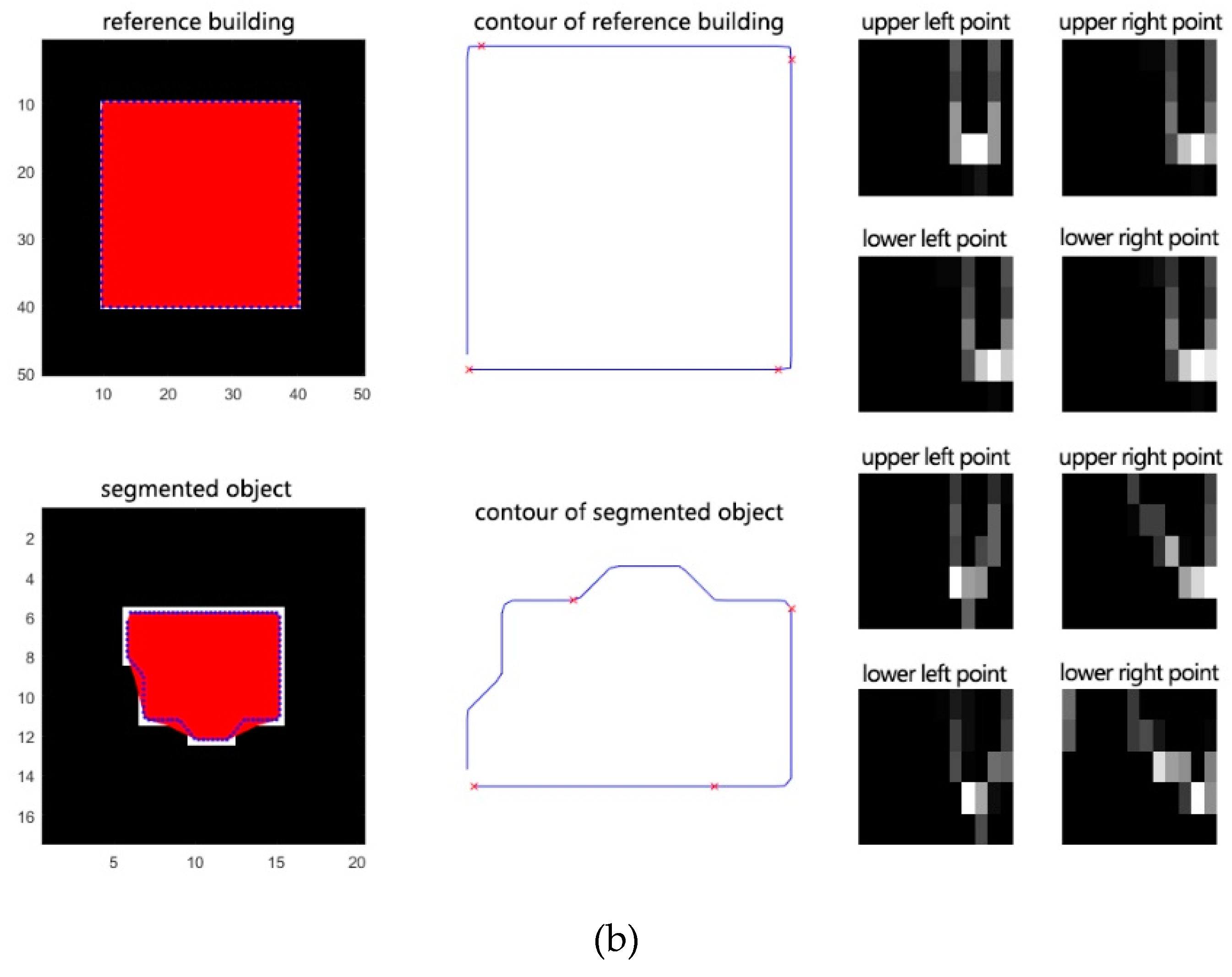

2.2.2. Shape Similarity Calculation Using Shape Context Model

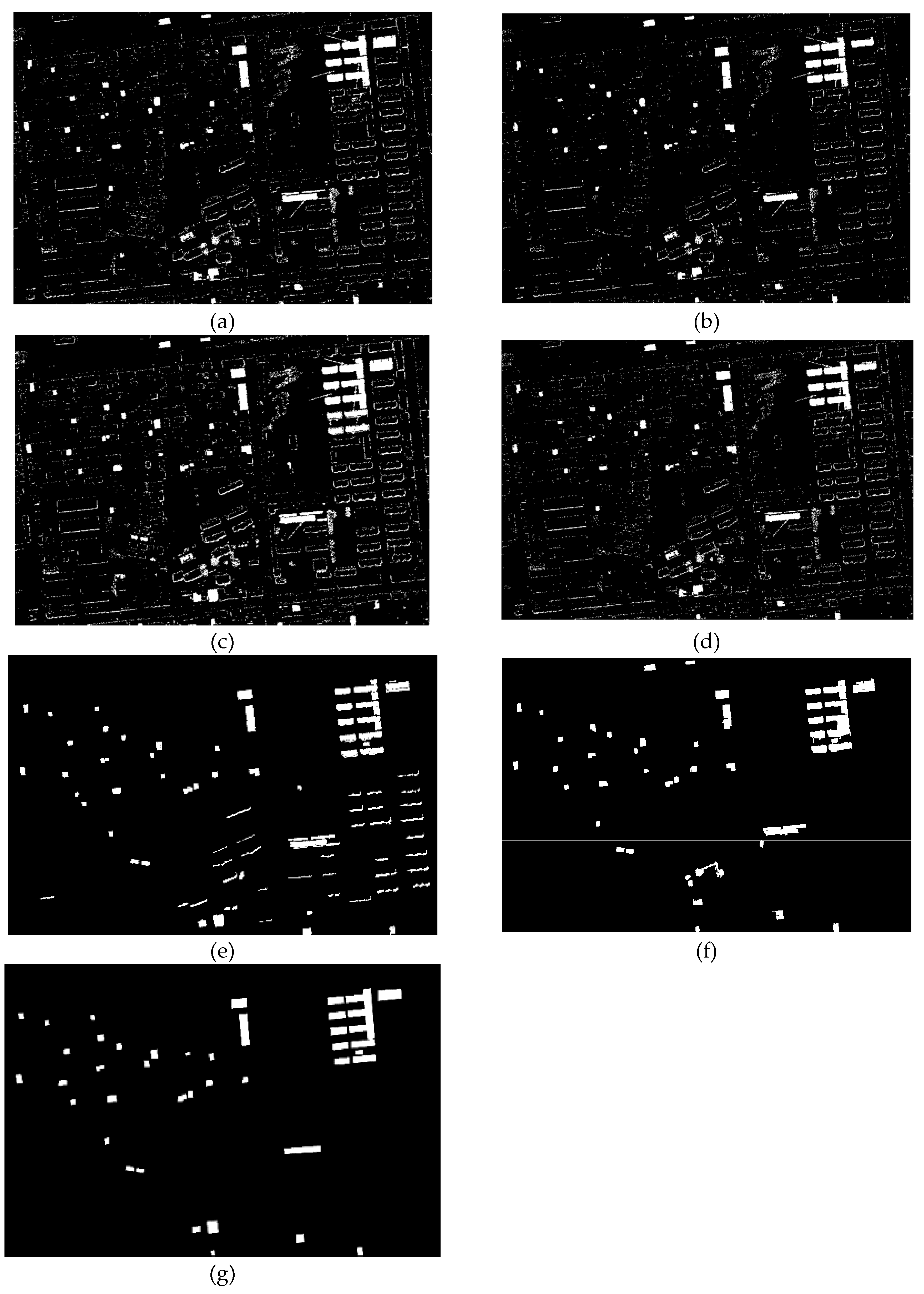

2.2.3. Building Change Detection Using the K-Means Algorithm

- (1) Missed detections (MD): the number of pixels labeled unchanged in the change detection map that are changed in the ground reference map. The missed detection rate is calculated by the ratio $P_m = MD / N_0 \times 100\%$, where $N_0$ is the total number of changed pixels counted in the ground reference map.

- (2) False alarms (FA): the number of pixels labeled changed in the change detection map that are unchanged in the ground reference map. The false alarm rate is calculated by the ratio $P_f = FA / N_1 \times 100\%$, where $N_1$ is the total number of unchanged pixels counted in the ground reference map.

- (3) Total errors (TE): the total number of detection errors, including both missed detections and false alarms, which is the sum of the FA and the MD. The total error rate is described by the ratio $P_t = (FA + MD) / (N_0 + N_1) \times 100\%$.
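The three criteria above can be computed directly from a binary change detection map and a binary ground reference map. The following is a minimal sketch, not the authors' implementation: the function name `change_detection_errors`, the dictionary keys, and the toy maps are assumptions made for illustration, and the rate names `Pm`, `Pf`, and `Pt` follow the column headers of the results tables. It assumes that a truthy pixel value means "changed" and that each rate is expressed as a percentage.

```python
from itertools import chain


def change_detection_errors(detected, reference):
    """Return MD, FA, TE counts and the Pm, Pf, Pt rates (in percent).

    `detected` and `reference` are 2-D binary maps (lists of rows) of equal
    size; a truthy value marks a changed pixel. Names are illustrative only.
    """
    det = [bool(v) for v in chain.from_iterable(detected)]
    ref = [bool(v) for v in chain.from_iterable(reference)]
    if len(det) != len(ref):
        raise ValueError("maps must contain the same number of pixels")

    n_changed = sum(ref)                 # changed pixels in the reference
    n_unchanged = len(ref) - n_changed   # unchanged pixels in the reference
    if n_changed == 0 or n_unchanged == 0:
        raise ValueError("reference must contain both changed and unchanged pixels")

    # Missed detection: changed in the reference, labeled unchanged in the map.
    md = sum(1 for d, r in zip(det, ref) if r and not d)
    # False alarm: unchanged in the reference, labeled changed in the map.
    fa = sum(1 for d, r in zip(det, ref) if d and not r)
    te = md + fa

    return {
        "MD": md,
        "FA": fa,
        "TE": te,
        "Pm": 100.0 * md / n_changed,
        "Pf": 100.0 * fa / n_unchanged,
        "Pt": 100.0 * te / len(ref),
    }


# Toy 2 x 5 maps: 4 changed and 6 unchanged pixels in the reference.
reference = [[1, 1, 0, 0, 0],
             [1, 1, 0, 0, 0]]
detected = [[1, 0, 0, 0, 0],
            [1, 1, 1, 0, 0]]

result = change_detection_errors(detected, reference)
print(result["MD"], result["FA"], result["TE"])  # 1 1 2
print(result["Pm"], result["Pt"])                # 25.0 20.0
```

Because changed pixels are usually a small fraction of a scene, the missed detection rate is normalized by a much smaller count than the false alarm rate, which is why the two rates can differ by an order of magnitude even when the raw pixel counts are similar.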

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Q | Missed Detections (No. of Pixels) | Pm (%) | False Alarms (No. of Pixels) | Pf (%) | Total Errors (No. of Pixels) | Pt (%) | Kappa Coefficient |
|---|---|---|---|---|---|---|---|
| 16 | 21,259 | 24.97 | 15,084 | 0.51 | 36,343 | 1.20 | 0.7720 |
| 32 | 14,575 | 17.12 | 19,694 | 0.67 | 34,269 | 1.13 | 0.7988 |
| 64 | 13,662 | 16.05 | 20,776 | 0.71 | 34,438 | 1.14 | 0.8 |
| 128 | 14,369 | 16.88 | 43,610 | 1.48 | 57,979 | 1.91 | 0.6997 |
| 256 | 15,406 | 18.09 | 49,289 | 1.67 | 64,695 | 2.13 | 0.6724 |

| Method | Missed Detections (No. of Pixels) | Pm (%) | False Alarms (No. of Pixels) | Pf (%) | Total Errors (No. of Pixels) | Pt (%) | Kappa Coefficient | Time (s) |
|---|---|---|---|---|---|---|---|---|
| CV | 23,865 | 28.03 | 84,947 | 2.88 | 108,812 | 3.59 | 0.5124 | 31 |
| MLSK | 30,319 | 35.61 | 56,454 | 1.92 | 86,773 | 2.86 | 0.5430 | 24 |
| MLSK+MRF | 11,083 | 13.02 | 117,028 | 3.97 | 128,111 | 4.23 | 0.5175 | 52 |
| FLICM | 28,143 | 33.05 | 78,932 | 2.68 | 107,075 | 3.53 | 0.4983 | 17 |
| CNN | 18,642 | 22.39 | 32,735 | 1.21 | 51,377 | 1.84 | 0.7061 | / |
| Proposed method | 13,662 | 16.05 | 20,776 | 0.71 | 34,438 | 1.14 | 0.8 | 29 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Citation: Lyu, X.; Hao, M.; Shi, W. Building Change Detection Using a Shape Context Similarity Model for LiDAR Data. ISPRS Int. J. Geo-Inf. 2020, 9, 678. https://0-doi-org.brum.beds.ac.uk/10.3390/ijgi9110678