Efficient Coarse Registration of Pairwise TLS Point Clouds Using Ortho Projected Feature Images

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Point Cloud Datasets

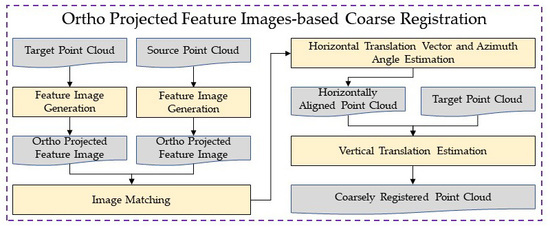

2.2. Ortho Projected Feature Image-Based Coarse Registration Method

2.2.1. Ortho Projected Feature Image Generation
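This section does not describe the generation step itself. As an illustration only, the minimal sketch below projects a point cloud vertically onto a horizontal raster. It assumes that each cell stores the highest Z value of the points falling into it. The paper's actual feature definition and cell size are not given here and may differ, and the function name `ortho_project_max_z` is hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
Grid = List[List[Optional[float]]]


def ortho_project_max_z(points: Sequence[Point], cell_size: float) -> Grid:
    """Project 3D points vertically onto an XY grid, keeping the highest Z per cell.

    Rows follow Y and columns follow X, both counted from the cloud's minimum
    coordinate. Cells that receive no point stay None.
    (Hypothetical helper; max-Z is an assumed feature, not the paper's.)
    """
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    if not points:
        return []
    min_x = min(p[0] for p in points)
    min_y = min(p[1] for p in points)
    max_x = max(p[0] for p in points)
    max_y = max(p[1] for p in points)
    # Use the same floor division for sizing and indexing so every point fits.
    n_cols = int((max_x - min_x) // cell_size) + 1
    n_rows = int((max_y - min_y) // cell_size) + 1
    grid: Grid = [[None] * n_cols for _ in range(n_rows)]
    for x, y, z in points:
        col = int((x - min_x) // cell_size)
        row = int((y - min_y) // cell_size)
        current = grid[row][col]
        # Keep the highest point seen so far in this cell.
        if current is None or z > current:
            grid[row][col] = z
    return grid


# Example: three points on a 1-unit grid; the first two share a cell.
print(ortho_project_max_z([(0.0, 0.0, 1.0), (0.5, 0.5, 3.0), (1.5, 0.2, 2.0)], 1.0))
# [[3.0, 2.0]]
```

Other per-cell features, such as point count or mean intensity, fit the same loop. Only the update rule inside it needs to change.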

2.2.2. Horizontal Translation Vector and Azimuth Angle Estimation
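Suppose keypoints have already been matched between the source and target feature images. The matcher is not specified in this section. If both scans are assumed to be levelled, the horizontal part of the transformation reduces to a 2D rigid fit: one azimuth angle about the vertical axis plus a 2D translation. The minimal sketch below solves this least-squares problem in closed form. It assumes the matches are already free of outliers. `estimate_rigid_2d` is a hypothetical name, and a practical pipeline would normally wrap such a fit in a robust estimator like RANSAC.

```python
import math
from typing import Sequence, Tuple

Vec2 = Tuple[float, float]


def estimate_rigid_2d(src: Sequence[Vec2], dst: Sequence[Vec2]) -> Tuple[float, float, float]:
    """Least-squares azimuth theta (radians) and translation (tx, ty) such that
    dst[i] ~= R(theta) @ src[i] + (tx, ty) for paired, outlier-free matches."""
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two paired points")
    n = len(src)
    # Centroids of both point sets.
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n
    # Accumulate dot and cross products of the centred coordinates;
    # the optimal angle is atan2(sum of cross, sum of dot).
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty


# Example: a 90-degree rotation followed by a shift of (2, 3).
theta, tx, ty = estimate_rigid_2d([(0, 0), (1, 0), (0, 1)], [(2, 3), (2, 4), (1, 3)])
print(round(math.degrees(theta), 6), round(tx, 6), round(ty, 6))
```

If the inputs are pixel coordinates, `tx` and `ty` come out in pixels. Assuming square cells, they would be multiplied by the cell size to obtain ground units.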

2.2.3. Vertical Translation Estimation
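After horizontal alignment, a single vertical offset remains. One simple and robust way to estimate it is the median height difference over grid cells that are populated in both clouds. This approach is an assumption for illustration and is not taken from the paper. The inputs could be, for example, two lowest-Z grids rasterized on the same aligned grid. The function name `estimate_vertical_offset` is hypothetical.

```python
import statistics
from typing import List, Optional

Grid = List[List[Optional[float]]]


def estimate_vertical_offset(target: Grid, source: Grid) -> float:
    """Median of (target - source) heights over cells populated in both grids.

    Both grids are assumed to share the same, already horizontally aligned
    XY grid. The median keeps a few mismatched cells from dominating.
    """
    diffs = [
        t - s
        for t_row, s_row in zip(target, source)
        for t, s in zip(t_row, s_row)
        if t is not None and s is not None
    ]
    if not diffs:
        raise ValueError("the grids share no populated cells")
    return statistics.median(diffs)


# Example: three consistent cells (offset 0.5) and one outlier cell (5.0).
target = [[1.0, None, 3.0], [2.0, 5.0, None]]
source = [[0.5, 1.0, 2.5], [1.5, 0.0, 2.0]]
print(estimate_vertical_offset(target, source))  # 0.5
```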

2.2.4. Accuracy Evaluation Criteria
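This section does not reproduce the specific criteria. This sketch assumes two measures commonly used to assess coarse registration. The first is the angle of the residual rotation between the estimated and reference rotation matrices, $\theta = \arccos\big((\operatorname{tr}(R_{est}^{\top} R_{ref}) - 1)/2\big)$. The second is the Euclidean distance between the estimated and reference translation vectors. The function names are hypothetical.

```python
import math
from typing import Sequence

Matrix3 = Sequence[Sequence[float]]
Vector3 = Sequence[float]


def rotation_error_deg(r_est: Matrix3, r_ref: Matrix3) -> float:
    """Angle (degrees) of the residual rotation R_est^T @ R_ref."""
    # trace(A^T B) equals the sum of element-wise products of A and B.
    trace = sum(r_est[i][j] * r_ref[i][j] for i in range(3) for j in range(3))
    # Clamp to [-1, 1] so rounding noise cannot push acos out of its domain.
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))


def translation_error(t_est: Vector3, t_ref: Vector3) -> float:
    """Euclidean distance between estimated and reference translations."""
    return math.dist(t_est, t_ref)


# Example: the reference is a 10-degree rotation about Z; the estimate is the identity.
c, s = math.cos(math.radians(10.0)), math.sin(math.radians(10.0))
r_ref = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(round(rotation_error_deg(identity, r_ref), 6))         # 10.0
print(translation_error([0.0, 0.0, 0.0], [3.0, 4.0, 0.0]))  # 5.0
```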

3. Results

4. Discussion

4.1. Parameter Setting

4.2. Registration of Point Clouds with Different Point Density

4.3. Accuracy and Efficiency

4.4. Robustness of Feature Image Matching

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Dataset | OPFI | FMP-BnB | BnB | LM | RANSAC |
|---|---|---|---|---|---|
| DSimRIEGL | 0.06 | 0.04 | 0.04 | 0.01 | 0.01 |
| DRIEGL | 0.02 | 0.09 | 0.09 | 0.05 | 0.10 |
| DTRIMBLE-1 | 0.07 | 0.02 | 0.02 | 0.01 | 0.06 |
| DTRIMBLE-2 | 0.07 | 0.15 | 0.15 | 0.13 | 0.06 |

| Dataset | OPFI | FMP-BnB | BnB | LM | RANSAC |
|---|---|---|---|---|---|
| DSimRIEGL | 0.01 | 0.04 | 0.04 | 0.01 | 0.01 |
| DRIEGL | 0.02 | 0.08 | 0.08 | 0.01 | 0.01 |
| DTRIMBLE-1 | 0.01 | 0.03 | 0.03 | 0.01 | 0.09 |
| DTRIMBLE-2 | 0.01 | 0.08 | 0.08 | 0.07 | 0.17 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, Hua; Zhang, Xiaoming; Xu, Yuancheng; Chen, Xiaoyong. Efficient Coarse Registration of Pairwise TLS Point Clouds Using Ortho Projected Feature Images. ISPRS International Journal of Geo-Information 2020, 9(4), 255. https://doi.org/10.3390/ijgi9040255