Consider two examples of estimating the parameters of public cameras for which only the images and small sets of point coordinates of limited accuracy are available.

5.1. Example 1: Inclined Driving Surface and a Camera with Tilted Horizon

Figure 3 shows a video frame from the public camera.

The camera is a good example, as its video contains noticeable perspective and radial distortion. In the image, a wall provides a line that demonstrates the camera's slight horizon tilt. The visible part of the road has a significant inclination. This is a FullHD camera (1920 × 1080 pixels). We select a reference point as the origin of the local ENU (East, North, Up) frame and assess the ENU coordinates of point O (the camera position), point G (the source of the principal point C; see Figure 1 and Figure 3), and two auxiliary points, one on each of the two reference lines. Using maps and online photos, we obtained the values listed in Table 1. We then convert the global coordinates to ENU coordinates (see [8]) and add them to the table (in meters).
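The conversion of [8] is not reproduced in this section. As a sketch, latitude, longitude, and height can be turned into local ENU coordinates with the standard WGS84 geodetic-to-ECEF-to-ENU transformation; we assume it is equivalent to the one in [8], and the function names are hypothetical.

```python
import math

# Standard WGS84 ellipsoid constants.
WGS84_A = 6378137.0                   # semi-major axis, m
WGS84_F = 1.0 / 298.257223563         # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)  # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, h):
    """Latitude/longitude (degrees) and ellipsoidal height (m) to ECEF (m)."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)  # prime vertical radius
    x = (n + h) * math.cos(lat) * math.cos(lon)
    y = (n + h) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + h) * math.sin(lat)
    return x, y, z


def geodetic_to_enu(lat_deg, lon_deg, h, lat0_deg, lon0_deg, h0):
    """ENU coordinates (m) of a point relative to the origin (lat0, lon0, h0)."""
    x, y, z = geodetic_to_ecef(lat_deg, lon_deg, h)
    x0, y0, z0 = geodetic_to_ecef(lat0_deg, lon0_deg, h0)
    dx, dy, dz = x - x0, y - y0, z - z0
    lat0, lon0 = math.radians(lat0_deg), math.radians(lon0_deg)
    sl, cl = math.sin(lat0), math.cos(lat0)
    so, co = math.sin(lon0), math.cos(lon0)
    # Rotate the ECEF offset into the local East, North, Up axes at the origin.
    e = -so * dx + co * dy
    n = -sl * co * dx - sl * so * dy + cl * dz
    u = cl * co * dx + cl * so * dy + sl * dz
    return e, n, u
```

Heights read from online maps are usually above sea level rather than ellipsoidal; over an area of a few hundred meters the geoid undulation is nearly constant, so this offset largely cancels in the ENU differences.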

The points from Table 1 can be located on the satellite layer of [18] by querying their latitude and longitude.

Using (15), (16), and the data of Table 1, we obtain the focal-length estimates along the two image axes. Street camera image sensors usually have square pixels, so the two estimates should be equal (see (4)). If their difference is small, we set both to their mean value. This gives an approximation of the intrinsic matrix A.
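A minimal sketch of the square-pixel step, assuming the intrinsic matrix (1) has the common zero-skew pinhole form with focal lengths f_u, f_v and principal point (c_u, c_v). These names and the 2% tolerance are our assumptions; the text only requires the difference to be small.

```python
def intrinsic_matrix(f_u, f_v, c_u, c_v, rel_tol=0.02):
    """Zero-skew 3x3 pinhole intrinsic matrix.

    If the two focal-length estimates differ by at most rel_tol (relative to
    their mean), assume square pixels and use the mean for both axes.
    """
    if abs(f_u - f_v) <= rel_tol * 0.5 * (f_u + f_v):
        f_u = f_v = 0.5 * (f_u + f_v)
    return [[f_u, 0.0, c_u],
            [0.0, f_v, c_v],
            [0.0, 0.0, 1.0]]
```

For example, `intrinsic_matrix(f_u, f_v, 960.0, 540.0)`, where placing the principal point at the center of a 1920 × 1080 frame is itself an assumption. When the estimates differ by more than the tolerance, the function keeps them separate, which corresponds to a non-square pixel.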

Next, we estimate the two radial distortion coefficients by minimizing the distances (19) or by another method. Substituting these coefficients, we compute the mapping (17) and apply it to remove radial distortion from the original image. The mapping is the same for all frames of the camera video. The resulting undistorted image is shown in Figure 4.
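The exact form of mapping (17) is not shown in this section. As an illustration, the sketch below assumes the common two-coefficient polynomial radial model, in which a normalized point (x, y) is scaled by 1 + k1*r^2 + k2*r^4, and inverts it by fixed-point iteration. The function names and the single focal length f (square pixels) are assumptions.

```python
def _radial_factor(r2, k1, k2):
    # Assumed polynomial radial model: 1 + k1*r^2 + k2*r^4.
    return 1.0 + k1 * r2 + k2 * r2 * r2


def distort_pixel(u, v, f, c_u, c_v, k1, k2):
    """Map an ideal (undistorted) pixel to its distorted position."""
    x, y = (u - c_u) / f, (v - c_v) / f
    s = _radial_factor(x * x + y * y, k1, k2)
    return c_u + f * x * s, c_v + f * y * s


def undistort_pixel(u, v, f, c_u, c_v, k1, k2, iters=50):
    """Invert distort_pixel by fixed-point iteration (adequate for moderate distortion)."""
    xd, yd = (u - c_u) / f, (v - c_v) / f
    x, y = xd, yd
    for _ in range(iters):
        s = _radial_factor(x * x + y * y, k1, k2)
        x, y = xd / s, yd / s
    return c_u + f * x, c_v + f * y
```

Because the mapping depends only on the camera parameters, it can be tabulated once per pixel and reused for every frame. Building the undistorted image by evaluating `distort_pixel` for each output pixel and sampling the original frame (inverse mapping) leaves no unfilled pixels.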

In the undistorted image, the radial distortion of straight lines near the road has almost disappeared. The camera's field of view has decreased, the point C has remained in place, and the two auxiliary points have moved further along their lines. The values estimated from these points could be recalculated, but radial distortion is not the only source of error. Therefore, we perform additional cross-validation and correct these values if required.

We estimate the horizon tilt angle from the undistorted image (Figure 4) and rotate the image plane, together with its two axes, about the optical axis by this angle. After this rotation, the required line becomes vertical (Figure 5), so we substitute this angle into (24).
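A sketch of the tilt step, assuming the angle is measured from a line that should be vertical (such as a wall edge) and that the rotation is about the principal point C. The helper names are hypothetical, and the sign convention expected by (24) may differ from the one used here.

```python
import math


def tilt_from_vertical_line(p1, p2):
    """Angle (rad) by which the segment p1-p2 deviates from the image vertical."""
    du, dv = p2[0] - p1[0], p2[1] - p1[1]
    return math.atan2(du, dv)


def rotate_about(point, center, theta):
    """Rotate pixel (u, v) about center by theta with the standard 2D rotation."""
    du, dv = point[0] - center[0], point[1] - center[1]
    c, s = math.cos(theta), math.sin(theta)
    return center[0] + c * du - s * dv, center[1] + s * du + c * dv
```

Rotating both endpoints of the reference segment by `tilt_from_vertical_line(p1, p2)` gives them the same u coordinate, i.e., the segment becomes vertical.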

We compute the camera orientation matrix R with (20)–(26). Now we can convert ENU coordinates to camera coordinates with (27).
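Formula (27) is not reproduced in this section. A common form, which we assume here, is X_c = R (X - O), where X is a point in ENU coordinates and O is the camera position; a zero-skew pinhole projection then gives its pixel. The function names are hypothetical.

```python
def enu_to_camera(X, R, O):
    """X_c = R (X - O): ENU point to camera coordinates (assumed form of (27))."""
    d = [X[i] - O[i] for i in range(3)]
    return [sum(R[r][i] * d[i] for i in range(3)) for r in range(3)]


def project(Xc, f_u, f_v, c_u, c_v):
    """Pinhole projection of a camera-frame point (z > 0) to pixel (u, v)."""
    x, y, z = Xc
    if z <= 0:
        raise ValueError("point is behind the camera")
    return c_u + f_u * x / z, c_v + f_v * y / z
```

For instance, a hypothetical camera 10 m above the origin looking straight down could use R with rows (1, 0, 0), (0, -1, 0), (0, 0, -1).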

As the region of interest (ROI), denoted Q, we use the part of the carriageway nearest to the camera. We select several points in Q, estimate their global coordinates, and convert them to ENU coordinates [8]. Next, we convert the ENU coordinates to camera coordinates with (27). The results are listed in Table 2.

Using (29) and (32), we obtain an approximation of the road plane.
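Formulas (29) and (32) are not reproduced here. As an illustration only, the sketch fits the plane z = a*x + b*y + c to points in ENU coordinates by ordinary least squares, solving the normal equations with Cramer's rule. This parametrization is our assumption: it suits a road that is far from vertical in ENU, but it cannot represent vertical planes, and it minimizes vertical rather than orthogonal distances.

```python
def _det3(m):
    # Determinant of a 3x3 matrix given as nested lists.
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane_z(points):
    """Least-squares fit of z = a*x + b*y + c to (x, y, z) points; returns (a, b, c)."""
    if len(points) < 3:
        raise ValueError("need at least three non-collinear points")
    sxx = sum(x * x for x, _, _ in points)
    sxy = sum(x * y for x, y, _ in points)
    syy = sum(y * y for _, y, _ in points)
    sx = sum(x for x, _, _ in points)
    sy = sum(y for _, y, _ in points)
    sxz = sum(x * z for x, _, z in points)
    syz = sum(y * z for _, y, z in points)
    sz = sum(z for _, _, z in points)
    # Normal equations M (a, b, c)^T = rhs.
    m = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, float(len(points))]]
    rhs = [sxz, syz, sz]
    det = _det3(m)
    if abs(det) < 1e-12:
        raise ValueError("points are (nearly) collinear")
    sol = []
    for k in range(3):
        mk = [row[:] for row in m]
        for r in range(3):
            mk[r][k] = rhs[r]  # Cramer's rule: replace column k with rhs
        sol.append(_det3(mk) / det)
    return tuple(sol)
```

The fitted plane can also be written as a*x + b*y - z + c = 0, that is, with the normal vector (a, b, -1).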

We choose the four corners of the domain in the image and record their coordinates (see Table 3 and Figure 6).

We calculate the lines bounding the domain with (41) and find the set of pixel coordinates that belong to it. This set does not change from frame to frame, so we can save it and reuse it later for this camera and ROI Q.
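Assuming the domain is a convex quadrilateral with ordered corners, a pixel belongs to it when it lies on the same side of all four bounding lines. The sketch uses cross-product signs for that test; the helper names are hypothetical, and (41) may parametrize the lines differently. A nonconvex domain would need a general point-in-polygon test instead.

```python
import math


def inside_convex_polygon(pt, poly):
    """True if pt lies inside or on the boundary of a convex polygon (ordered vertices)."""
    sign = 0
    n = len(poly)
    for i in range(n):
        (x1, y1), (x2, y2) = poly[i], poly[(i + 1) % n]
        # The sign of the cross product tells on which side of the bounding line pt lies.
        cross = (x2 - x1) * (pt[1] - y1) - (y2 - y1) * (pt[0] - x1)
        if cross != 0:
            side = 1 if cross > 0 else -1
            if sign == 0:
                sign = side
            elif side != sign:
                return False
    return True


def domain_pixels(poly):
    """Integer pixel coordinates (u, v) inside a convex polygon; compute once, then reuse."""
    us = [p[0] for p in poly]
    vs = [p[1] for p in poly]
    return [(u, v)
            for v in range(math.floor(min(vs)), math.ceil(max(vs)) + 1)
            for u in range(math.floor(min(us)), math.ceil(max(us)) + 1)
            if inside_convex_polygon((u, v), poly)]
```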

Then we evaluate (48) and (35)–(39), convert the pixel coordinates of the domain to coordinates on the road plane with (43)–(47), and save the result. For a fixed camera and ROI Q, this coordinate set does not change. The resulting discrete sets consist of metric coordinates, and we can use them for measurements on the road plane either as is or after interpolation.
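Assuming X_c = R (X - O) and a zero-skew pinhole model, the road-plane point seen at an undistorted pixel (u, v) is the intersection of its viewing ray with the plane n · X + d = 0. This ray-plane intersection is our illustration and not necessarily the exact form of (43)–(47); the function name is hypothetical.

```python
def pixel_to_plane(u, v, f_u, f_v, c_u, c_v, R, O, normal, d):
    """Intersect the viewing ray of pixel (u, v) with the plane normal . X + d = 0.

    Assumes X_c = R (X - O), so the world-frame ray direction is R^T dc.
    """
    dc = ((u - c_u) / f_u, (v - c_v) / f_v, 1.0)        # ray in camera coordinates
    dw = [sum(R[i][j] * dc[i] for i in range(3)) for j in range(3)]  # R^T @ dc
    denom = sum(normal[j] * dw[j] for j in range(3))
    if abs(denom) < 1e-12:
        raise ValueError("ray is parallel to the plane")
    t = -(sum(normal[j] * O[j] for j in range(3)) + d) / denom
    if t <= 0:
        raise ValueError("plane is behind the camera for this pixel")
    return [O[j] + t * dw[j] for j in range(3)]
```

Since the result depends only on the camera parameters and the plane, it can be evaluated once for every pixel of the domain and stored.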

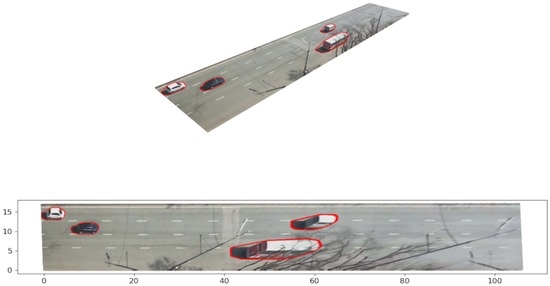

Since the resulting points lie in the road plane, the point set can be displayed as a planar picture. If we take the plane coordinates of each point together with the color of the corresponding pixel g and plot the plane as a scatter map, we obtain the bottom image of Figure 6. For the demonstration, we chose a scene with several cars in the center of Q. The result is an accurate “view from above” for the points that originally lie on the road surface. Pixels representing objects that rise above the road plane are displaced. There are ways to estimate and account for the height of cars and other objects so that they look realistic in the bottom image; however, this is the subject of a separate article.
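As an alternative to a scatter map, the mapped points can also be binned into a regular top-view grid. The sketch averages the pixel colors falling into each square cell; the cell size and function name are assumptions, and this raster output is not a reproduction of the scatter map in Figure 6.

```python
import math


def rasterize_top_view(samples, cell=0.1):
    """Average colors of (x, y, (r, g, b)) samples into square cells of size `cell` (m).

    Returns {(i, j): (r, g, b)} with i = floor(x / cell), j = floor(y / cell).
    """
    acc = {}
    for x, y, color in samples:
        key = (math.floor(x / cell), math.floor(y / cell))
        s = acc.setdefault(key, [0.0, 0.0, 0.0, 0])  # running color sums and count
        for k in range(3):
            s[k] += color[k]
        s[3] += 1
    return {key: tuple(s[k] / s[3] for k in range(3)) for key, s in acc.items()}
```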

Note the axes of the bottom image, which are in meters. Compared with the original (top image of Figure 6), the perception of distance changes noticeably once perspective distortion is removed. The width and length of the area agree well with rangefinder measurements and estimates from online maps. The horizontal lines in the image are almost parallel, which indicates that the estimated parameters are of good quality. In this way, we cross-checked the camera parameters obtained earlier. Note also that all vehicles are in contact with the road surface.

We do not need to remove distortions (radial and perspective) from all video frames to estimate traffic statistics. Object detection or instance segmentation works with the original video frames. We use distortion compensation in traffic measurements only to obtain metric distances for selected pixels. The required maps are calculated once for given camera parameters and Q and are then reused for measurements. Let us demonstrate this on a specific trajectory.

The detected trajectory of the vehicle consists of 252 contours, 180 of which lie in the domain. Two hundred contours in one image look cluttered, so we draw only every 20th contour (Figure 7). We deliberately did not use rectangles, to demonstrate a more general case. Each contour is described by the coordinates of its vertices. To evaluate the speed or acceleration of an object, it is enough to select one point on or inside each contour. The point should not move relative to the object, and it should be as close as possible to the road surface. For this camera, the left bottom corner of a contour is well suited. But what does that mean for a polygon? For a contour given by its vertices, we can build auxiliary pixel coordinates from the vertex coordinates. Taking the direction of the image axes into account, we call the vertex nearest to this auxiliary point the left bottom corner of the contour. Another option is to search for the point nearest to it on the edges of the contour with the help of (19).
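The formula for the auxiliary point is not reproduced in this section. Assuming it is the bounding-box corner (minimum u, maximum v), with the v axis pointing down as usual in images, both options can be sketched as follows: the nearest vertex, and the nearest point on the contour edges (a point-to-segment projection standing in for (19)). The names are hypothetical.

```python
import math


def left_bottom_anchor(contour):
    """Bounding-box corner (min u, max v); the image v axis is assumed to point down."""
    return min(p[0] for p in contour), max(p[1] for p in contour)


def nearest_vertex(contour, target):
    """Contour vertex closest to target."""
    return min(contour, key=lambda p: math.dist(p, target))


def nearest_point_on_edges(contour, target):
    """Closest point to target on the closed polygon boundary."""
    best, best_d = None, math.inf
    n = len(contour)
    for i in range(n):
        (x1, y1), (x2, y2) = contour[i], contour[(i + 1) % n]
        ex, ey = x2 - x1, y2 - y1
        len2 = ex * ex + ey * ey
        # Project target onto the segment and clamp to its endpoints.
        t = 0.0 if len2 == 0 else ((target[0] - x1) * ex + (target[1] - y1) * ey) / len2
        t = max(0.0, min(1.0, t))
        q = (x1 + t * ex, y1 + t * ey)
        dq = math.dist(q, target)
        if dq < best_d:
            best, best_d = q, dq
    return best
```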

Mapping all contour vertices onto the road plane gives Figure 8. For measurements, however, only these “corners” need to be mapped. After the mapping, we obtain the road-plane coordinates of the “corners” of the selected contours.

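Speed, acceleration, and their variation can be estimated with a variety of formulas. The simplest estimate of the vehicle speed is obtained from the displacement of the “corners” (the camera frame rate is 25 frames/second and we use every 20th frame, so consecutive selected frames are 20/25 = 0.8 s apart); the result is given in km/h.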
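The speed formulas themselves are not reproduced here. A minimal sketch, assuming the speed is the distance between consecutive mapped “corners” divided by the time between the selected frames (20/25 = 0.8 s), with the factor 3.6 converting m/s to km/h. The function names are hypothetical.

```python
import math


def speeds_kmh(corners, fps=25.0, step=20):
    """Speed (km/h) between consecutive road-plane points sampled every `step` frames."""
    dt = step / fps  # seconds between the selected frames
    return [math.dist(a, b) / dt * 3.6 for a, b in zip(corners, corners[1:])]


def mean_speed_kmh(corners, fps=25.0, step=20):
    """Average speed (km/h): total path length over total elapsed time."""
    path = sum(math.dist(a, b) for a, b in zip(corners, corners[1:]))
    return path / ((len(corners) - 1) * step / fps) * 3.6
```

For example, corners 8 m apart in consecutive selected frames give 8 / 0.8 = 10 m/s, that is, 36 km/h.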

5.2. Example 2: More Radial Distortion and Vegetation, More Calibration Points

Figure 9 shows a frame from another public camera of the same operator. This camera has zero horizon tilt and stronger radial distortion. We calibrated the camera and calculated the maps during the season of dense vegetation; tree foliage complicates selecting points and estimating their coordinates. The camera sees a two-way road. This is a FullHD camera (1920 × 1080 pixels). The zero horizon tilt can be seen on a line near one of the points listed in Table 4. We select a reference point as the origin of the ENU frame (see Table 4). We assess the global coordinates of point O (the camera position), point G (the source of the principal point C; see Figure 1 and Figure 9), and auxiliary points on the reference lines (index u for one line and index v for the other). Next, we convert the global coordinates to ENU coordinates and append them to Table 4.

Using Formulas (15) and (16) and the data of Table 4, we obtained the focal-length estimates. There are only a few points, but one can assume a systematic pattern in these estimates. Perhaps the camera's sensor pixels are rectangular (see (4)). Consider two hypotheses, (50) and (51); the second one corresponds to square pixels.

Let us first try variant (50). With the corresponding focal-length values, we obtain the intrinsic parameter matrix A (1). We estimate the two radial distortion coefficients, substitute them to compute the mapping (17), and apply it to remove radial distortion from the original image. The resulting undistorted image is shown in Figure 10. In Example 1 (Figure 4), we showed a cropped and interpolated version of the undistorted image; here, we show the result of the mapping without postprocessing. The undistorted image has a higher resolution than the original frame, but only the pixels of the original frame are colored by the camera. Hence, the black grid consists of the unfilled pixels of the larger rectangle.

For an illustration that is more pleasing to the eye, we crop part of the undistorted image and fill the gaps by interpolation (see Figure 11).
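The black grid appears because the mapping is applied forward: each camera pixel is sent to one position in a larger rectangle, so some target pixels receive no value. The sketch illustrates this with a uniform scale factor standing in for the nonuniform radial mapping (a simplifying assumption), and fills the image the opposite way: for every target pixel, it looks up the nearest source pixel (nearest-neighbour inverse mapping, a simple form of interpolation). The names are hypothetical.

```python
import math


def forward_fill_count(w, h, scale):
    """Forward-map every source pixel onto a scaled canvas; count the filled target pixels."""
    filled = set()
    for v in range(h):
        for u in range(w):
            filled.add((math.floor(u * scale + 0.5), math.floor(v * scale + 0.5)))
    return len(filled)


def inverse_nearest(src, w_out, h_out, scale):
    """Fill every target pixel by nearest-neighbour lookup in the source (inverse mapping)."""
    h, w = len(src), len(src[0])
    out = []
    for v in range(h_out):
        sv = min(h - 1, math.floor(v / scale + 0.5))
        out.append([src[sv][min(w - 1, math.floor(u / scale + 0.5))] for u in range(w_out)])
    return out
```

With a 10 × 10 source and scale 1.5, forward mapping fills only 100 of the 225 pixels of a 15 × 15 canvas, while the inverse lookup assigns a value to every pixel.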

The camera's field of view includes a section of a building wall (see Figure 9), which allows us to set the horizon tilt angle to zero. We are now ready to compute the orientation matrix R with (20)–(26) and to convert ENU coordinates to camera coordinates with (27). We select points to approximate the road surface (see Table 5).

We calculate the road plane with (29) and (32).

We choose the four corners of the domain in the image and record their coordinates (see Table 6 and Figure 12). This time, we try a nonrectangular area.

We calculate the lines bounding the domain with (41) and find the set of pixel coordinates that belong to it. Then we evaluate (48) and (35)–(39), convert the pixel coordinates to coordinates on the road plane with (43)–(47) and (49), and save the result.

The distances in Q agree with estimates obtained online and with measurements by a simple rangefinder (accurate to about a meter). We plan more accurate assessments using geodetic tools.

We repeat the necessary steps for hypothesis (51) and compare the results (see Figure 12 and Figure 13).

Under hypothesis (51), the geometry of the mapped area changes. For example, the pedestrian crossing changes its inclination, and the road widens toward the right. In this example, vegetation makes it difficult to find several long parallel lines, which is an argument for examining cameras in a suitable season.