Hybrid Filter and Genetic Algorithm-Based Feature Selection for Improving Cancer Classification in High-Dimensional Microarray Data

Abstract

1. Introduction

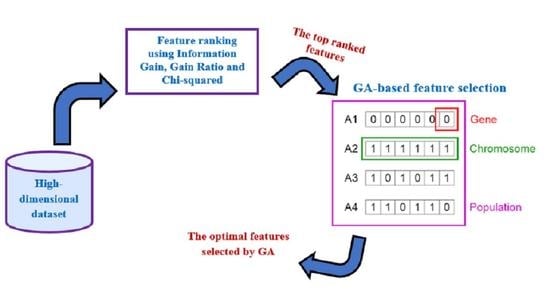

- Unlike previous works, we use information gain (IG), information gain ratio (IGR), and chi-squared (CS), three popular, simple, and fast filter techniques, to select highly relevant features and reduce the dimensionality of four microarray datasets: Brain, Breast, Lung, and CNS. Although many microarray datasets are used in the literature, recent work [25] reported that popular machine learning techniques achieved their lowest classification accuracy on these four datasets. Furthermore, the performance improvements reported by existing works on these four cancer datasets were limited.

- Because IG, IGR, and CS score each feature individually against the class label, a GA is then used to evaluate sets of features jointly against the class label. This further optimizes the features selected by the filter methods and enhances cancer classification performance.

- The experimental results show clear improvements in classification performance when the proposed hybrid filter-genetic feature selection approach is used.

2. Related Work

3. Filter Feature Selection

4. Genetic Algorithm

5. Proposed Methodology

5.1. Collection of High-Dimensional Microarray Data

5.2. Training Phase

5.2.1. Feature Ranking Using Filter Algorithms

- Information gain

- Information gain ratio

- Chi-squared
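For reference, the three filter scores listed above are commonly defined as follows. These are the standard textbook forms, given here as an assumption about the variants used in this work. The symbols are introduced for illustration: C is the class label, F is a (discretized) feature, O_{ij} is the observed count of samples with feature value i and class j, E_{ij} is the count expected if F and C were independent, and n is the number of samples.

```latex
\begin{align}
H(C) &= -\sum_{c} p(c)\,\log_2 p(c) \\
H(C \mid F) &= -\sum_{f} p(f) \sum_{c} p(c \mid f)\,\log_2 p(c \mid f) \\
\mathrm{IG}(C, F) &= H(C) - H(C \mid F) \\
\mathrm{IGR}(C, F) &= \frac{\mathrm{IG}(C, F)}{H(F)} \\
\chi^2(F, C) &= \sum_{i}\sum_{j} \frac{\left(O_{ij} - E_{ij}\right)^2}{E_{ij}},
\qquad E_{ij} = \frac{\left(\sum_{j'} O_{ij'}\right)\left(\sum_{i'} O_{i'j}\right)}{n}
\end{align}
```

In all three cases a higher score indicates a stronger relationship between the individual feature and the class label, which is what the ranking step relies on.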

5.2.2. GA-Based Feature Selection

- Chromosome encoding: the GA population is a set of chromosomes drawn from the search space of all possible feature subsets. Each chromosome in the population represents one feature subset and is encoded as a binary string of m genes, where m is the number of available features. A gene is set to one if the corresponding feature is selected and to zero otherwise.

- Population initialization: the GA randomly generates an initial population of chromosomes, each corresponding to a subset of the candidate features.

- Fitness evaluation: the GA computes the fitness function for each chromosome. In GA-based feature selection, the training dataset restricted to the features selected by a chromosome is used to train the machine learning technique, and the resulting classification accuracy is used as the fitness of that chromosome. In this step, the GA searches for the subset of features that maximizes the machine learning performance.

- Reproduction: as in biological evolution, the fittest chromosomes are selected and recombined to produce better new chromosomes (solutions). The GA uses three genetic operators to perform reproduction:

- Selection: the chromosomes with better fitness values are chosen as parents to generate new children.

- Crossover: the GA exchanges the genes of two parent chromosomes after a randomly chosen crossover point to produce a new child chromosome.

- Mutation: the GA occasionally flips the value of a gene in the child chromosome, from 1 to 0 or from 0 to 1.
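The encoding and the three operators described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, selection is shown as a simple tournament of size `k`, and crossover is single-point, producing one child per call.

```python
import random

def random_chromosome(m, rng):
    """Binary chromosome: gene i is 1 if feature i is selected, else 0."""
    return [rng.randint(0, 1) for _ in range(m)]

def selected_features(chromosome):
    """Indices of the features whose gene equals 1."""
    return [i for i, gene in enumerate(chromosome) if gene == 1]

def tournament_select(population, fitnesses, k, rng):
    """Draw k distinct chromosomes at random and return the fittest one."""
    contestants = rng.sample(range(len(population)), k)
    best = max(contestants, key=lambda i: fitnesses[i])
    return population[best]

def one_point_crossover(parent_a, parent_b, rng):
    """Child takes parent_a's genes before a random point, parent_b's after."""
    point = rng.randint(1, len(parent_a) - 1)
    return parent_a[:point] + parent_b[point:]

def mutate(chromosome, rate, rng):
    """Flip each gene independently with probability `rate`."""
    return [1 - gene if rng.random() < rate else gene for gene in chromosome]

rng = random.Random(42)
parent_a = random_chromosome(8, rng)
parent_b = random_chromosome(8, rng)
child = mutate(one_point_crossover(parent_a, parent_b, rng), 0.02, rng)
print(selected_features(child))
```

Passing an explicit `random.Random` instance keeps runs reproducible, which helps when comparing feature subsets across experiments.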

Algorithm 1: The pseudocode of the hybrid filter genetic algorithm-based feature selection approach

| Step | Operation |
|---|---|
| Input | F: original feature set; N: size of population (number of chromosomes) |
| Output | SF: the optimal selected features |
| 1 | Begin |
| 2 | Compute the score of each feature in F using information gain, gain ratio, or chi-squared |
| 3 | RF = select only the top 5% of ranked features |
| 4 | D = dimension of RF |
| 5 | Initialize population P by generating N chromosomes C, each with D genes (features), with a random value in [0, 1] for each gene g |
| 6 | Convert chromosomes to binary chromosomes: if g >= 0.5 then g = 1 (feature selected); otherwise g = 0 |
| 7 | While termination criteria not met do |
| 8 | Compute the fitness value (classification accuracy) for each chromosome |
| 9 | Select two parents based on better fitness values |
| 10 | Perform crossover |
| 11 | Perform mutation |
| 12 | End While |
| 13 | Obtain the best chromosome |
| 14 | Extract the optimal selected features SF from the best chromosome (the genes with value 1) |
| 15 | Return SF |
| 16 | End Algorithm |
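Algorithm 1 can be sketched end to end in plain Python as follows. This is an illustrative sketch under several assumptions that are not taken from the paper: information gain is computed after binarizing each feature at its median, fitness is the leave-one-out accuracy of a 1-nearest-neighbour classifier (standing in for the SVM, NB, kNN, DT, and RF classifiers), selection is a binary tournament, and all function names are hypothetical. The default GA parameter values are those listed in the GA parameter table of this article.

```python
import math
import random
from collections import Counter

def entropy(labels):
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())

def information_gain(column, y):
    """IG of one feature, binarized at its median (an assumed discretization)."""
    median = sorted(column)[len(column) // 2]
    gain = entropy(y)
    for side in (True, False):
        part = [label for v, label in zip(column, y) if (v >= median) == side]
        if part:
            gain -= len(part) / len(y) * entropy(part)
    return gain

def top_fraction(scores, fraction=0.05):
    """Indices of the highest-scoring features (steps 2-3 of Algorithm 1)."""
    keep = max(1, round(fraction * len(scores)))
    return sorted(range(len(scores)), key=lambda j: -scores[j])[:keep]

def loo_1nn_accuracy(X, y, features):
    """Assumed fitness: leave-one-out accuracy of a 1-nearest-neighbour rule."""
    if not features:
        return 0.0
    hits = 0
    for i, row in enumerate(X):
        nearest = min((k for k in range(len(X)) if k != i),
                      key=lambda k: sum((row[j] - X[k][j]) ** 2 for j in features))
        hits += y[nearest] == y[i]
    return hits / len(X)

def hybrid_filter_ga(X, y, n_chromosomes=20, n_generations=50,
                     crossover_rate=0.6, mutation_rate=0.02, seed=0):
    rng = random.Random(seed)
    ranked = top_fraction([information_gain(col, y) for col in zip(*X)])
    d = len(ranked)
    # Steps 5-6: random genes in [0, 1], thresholded at 0.5 into binary genes.
    population = [[1 if rng.random() >= 0.5 else 0 for _ in range(d)]
                  for _ in range(n_chromosomes)]

    def fitness(chrom):
        return loo_1nn_accuracy(X, y, [ranked[i] for i, g in enumerate(chrom) if g])

    best = max(population, key=fitness)
    for _ in range(n_generations):
        scores = [fitness(c) for c in population]

        def pick():  # binary tournament (tournament size 2 is an assumption)
            a, b = rng.sample(range(n_chromosomes), 2)
            return population[a] if scores[a] >= scores[b] else population[b]

        children = []
        while len(children) < n_chromosomes:
            p1, p2 = pick(), pick()
            child = list(p1)
            if d > 1 and rng.random() < crossover_rate:
                point = rng.randint(1, d - 1)
                child = p1[:point] + p2[point:]
            children.append([1 - g if rng.random() < mutation_rate else g
                             for g in child])
        population = children
        best = max(population + [best], key=fitness)  # keep the best seen so far
    return sorted(ranked[i] for i, g in enumerate(best) if g)
```

Calling `hybrid_filter_ga(X, y)` with `X` as a list of numeric rows and `y` as a list of class labels returns the indices of the selected features in the original feature set. Swapping `information_gain` for a gain-ratio or chi-squared scorer, or `loo_1nn_accuracy` for another classifier's accuracy, gives the other filter-GA combinations.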

5.2.3. Training of Machine Learning Techniques

5.3. Classification Phase

6. Experiments and Evaluation

6.1. Experimental Settings

6.2. Performance Metrics

6.3. Experimental Results and Discussion

6.3.1. Performance Comparison of Proposed Hybrid Filter-GA Feature Selection

6.3.2. Comparison of Features Reduced by Applying Proposed Hybrid Filter-GA Methods

6.3.3. Comparison with Existing Works

6.3.4. Discussion

7. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Hameed, S.S.; Petinrin, O.O.; Hashi, A.O.; Saeed, F. Filter-Wrapper Combination and Embedded Feature Selection for Gene Expression Data. Int. J. Adv. Soft Comput. Appl. 2018, 10, 90–105.

2. Hameed, S.S.; Hassan, R.; Muhammad, F.F. Selection and Classification of Gene Expression in Autism Disorder: Use of a Combination of Statistical Filters and a GBPSO-SVM Algorithm. PLoS ONE 2017, 12, e0187371.

3. Afolabi, L.T.; Saeed, F.; Hashim, H.; Petinrin, O.O. Ensemble Learning Method for the Prediction of New Bioactive Molecules. PLoS ONE 2018, 13, e0189538.

4. Anbarasi, M.; Anupriya, E.; Iyengar, N.C.S.N. Enhanced Prediction of Heart Disease with Feature Subset Selection Using Genetic Algorithm. Int. J. Eng. Sci. Technol. 2010, 2, 5370–5376.

5. Srinivas, K.; Rani, B.; Govrdhan, A. Applications of Data Mining Techniques in Healthcare and Prediction of Heart Attacks. Int. J. Comput. Sci. Eng. 2010, 2, 250–255.

6. Soni, S.; Vyas, O.P. Using Associative Classifiers for Predictive Analysis in Health Care Data Mining. Int. J. Comput. Appl. 2010, 4, 33–37.

7. Rajkumar, A.; Reena, G.S. Diagnosis Of Heart Disease Using Datamining Algorithm. Glob. J. Comput. Sci. Technol. 2010, 5, 1678–1680.

8. Bolón-Canedo, V.; Sánchez-Maroño, N.; Alonso-Betanzos, A.; Benítez, J.M.; Herrera, F. A Review of Microarray Datasets and Applied Feature Selection Methods. Inf. Sci. 2014, 282, 111–135.

9. Cosma, G.; Brown, D.; Archer, M.; Khan, M.; Pockley, A.G. A Survey on Computational Intelligence Approaches for Predictive Modeling in Prostate Cancer. Expert Syst. Appl. 2017, 70, 1–19.

10. Singh, R.K.; Sivabalakrishnan, M. Feature Selection of Gene Expression Data for Cancer Classification: A Review. Procedia Comput. Sci. 2015, 50, 52–57.

11. Wang, L. Feature Selection in Bioinformatics. In Independent Component Analyses, Compressive Sampling, Wavelets, Neural Net, Biosystems, and Nanoengineering X; SPIE: Bellingham, WA, USA, 2012.

12. Song, Q.; Ni, J.; Wang, G. A Fast Clustering-Based Feature Subset Selection Algorithm for High-Dimensional Data. IEEE Trans. Knowl. Data Eng. 2013, 25, 1–14.

13. Saeys, Y.; Inza, I.; Larrañaga, P. A Review of Feature Selection Techniques in Bioinformatics. Bioinformatics 2007, 23, 2507–2517.

14. Wang, A.; Liu, H.; Yang, J.; Chen, G. Ensemble Feature Selection for Stable Biomarker Identification and Cancer Classification from Microarray Expression Data. Comput. Biol. Med. 2022, 142, 105208.

15. Liu, S.; Xu, C.; Zhang, Y.; Liu, J.; Yu, B.; Liu, X.; Dehmer, M. Feature Selection of Gene Expression Data for Cancer Classification Using Double RBF-Kernels. BMC Bioinform. 2018, 19, 1–14.

16. Taveira De Souza, J.; Carlos De Francisco, A.; De Macedo, D.C. Dimensionality Reduction in Gene Expression Data Sets. IEEE Access 2019, 7, 61136–61144.

17. Bolón-Canedo, V.; Sánchez-Maroño, N.; Alonso-Betanzos, A. Distributed Feature Selection: An Application to Microarray Data Classification. Appl. Soft Comput. J. 2015, 30, 136–150.

18. Bhui, N. Ensemble of Deep Learning Approach for the Feature Selection from High-Dimensional Microarray Data. In Proceedings of the International Conference on Paradigms of Communication, Computing and Data Sciences, Kurukshetra, India, 7–9 May 2021; Springer: Berlin/Heidelberg, Germany, 2022.

19. Alhenawi, E.; Al-Sayyed, R.; Hudaib, A.; Mirjalili, S. Feature Selection Methods on Gene Expression Microarray Data for Cancer Classification: A Systematic Review. Comput. Biol. Med. 2022, 140, 105051.

20. Abdulla, M.; Khasawneh, M.T. G-Forest: An Ensemble Method for Cost-Sensitive Feature Selection in Gene Expression Microarrays. Artif. Intell. Med. 2020, 108, 101941.

21. Tao, P.; Sun, Z.; Sun, Z. An Improved Intrusion Detection Algorithm Based on GA and SVM. IEEE Access 2018, 6, 13624–13631.

22. Ghareb, A.S.; Bakar, A.A.; Hamdan, A.R. Hybrid Feature Selection Based on Enhanced Genetic Algorithm for Text Categorization. Expert Syst. Appl. 2016, 49, 31–47.

23. Ali, W.; Malebary, S. Particle Swarm Optimization-Based Feature Weighting for Improving Intelligent Phishing Website Detection. IEEE Access 2020, 8, 116766–116780.

24. Ali, W.; Ahmed, A.A. Hybrid Intelligent Phishing Website Prediction Using Deep Neural Networks with Genetic Algorithm-Based Feature Selection and Weighting. IET Inf. Secur. 2019, 13, 659–669.

25. Almutiri, T.; Saeed, F. Review on Feature Selection Methods for Gene Expression Data Classification. In Advances in Intelligent Systems and Computing; Springer: Berlin/Heidelberg, Germany, 2020.

26. Shah, S.H.; Iqbal, M.J.; Ahmad, I.; Khan, S.; Rodrigues, J.J.P.C. Optimized Gene Selection and Classification of Cancer from Microarray Gene Expression Data Using Deep Learning. Neural Comput. Appl. 2020, 1–12.

27. Parhi, P.; Bisoi, R.; Dash, P.K. Influential Gene Selection From High-Dimensional Genomic Data Using a Bio-Inspired Algorithm Wrapped Broad Learning System. IEEE Access 2022, 10, 49219–49232.

28. Kourou, K.; Rigas, G.; Papaloukas, C.; Mitsis, M.; Fotiadis, D.I. Cancer Classification from Time Series Microarray Data through Regulatory Dynamic Bayesian Networks. Comput. Biol. Med. 2020, 116, 103577.

29. Saeid, M.M.; Nossair, Z.B.; Saleh, M.A. A Microarray Cancer Classification Technique Based on Discrete Wavelet Transform for Data Reduction and Genetic Algorithm for Feature Selection. In Proceedings of the 4th International Conference on Trends in Electronics and Informatics, ICOEI 2020, Tirunelveli, India, 15–17 June 2020.

30. Passi, K.; Nour, A.; Jain, C.K. Markov Blanket: Efficient Strategy for Feature Subset Selection Method for High Dimensional Microarray Cancer Datasets. In Proceedings of the 2017 IEEE International Conference on Bioinformatics and Biomedicine, BIBM 2017, Kansas City, MO, USA, 13–16 November 2017.

31. Sayed, S.; Nassef, M.; Badr, A.; Farag, I. A Nested Genetic Algorithm for Feature Selection in High-Dimensional Cancer Microarray Datasets. Expert Syst. Appl. 2019, 121, 233–243.

32. Ghosh, M.; Adhikary, S.; Ghosh, K.K.; Sardar, A.; Begum, S.; Sarkar, R. Genetic Algorithm Based Cancerous Gene Identification from Microarray Data Using Ensemble of Filter Methods. Med. Biol. Eng. Comput. 2019, 57, 159–176.

33. Abasabadi, S.; Nematzadeh, H.; Motameni, H.; Akbari, E. Hybrid Feature Selection Based on SLI and Genetic Algorithm for Microarray Datasets. J. Supercomput. 2022, 78, 19725–19753.

34. Xie, W.; Fang, Y.; Yu, K.; Min, X.; Li, W. MFRAG: Multi-Fitness RankAggreg Genetic Algorithm for Biomarker Selection from Microarray Data. Chemom. Intell. Lab. Syst. 2022, 226, 104573.

35. Hameed, S.S.; Muhammad, F.F.; Hassan, R.; Saeed, F. Gene Selection and Classification in Microarray Datasets Using a Hybrid Approach of PCC-BPSO/GA with Multi Classifiers. J. Comput. Sci. 2018, 14, 868–880.

36. Almutiri, T.; Saeed, F.; Alassaf, M.; Hezzam, E.A. A Fusion-Based Feature Selection Framework for Microarray Data Classification. In Lecture Notes on Data Engineering and Communications Technologies; Springer: Berlin/Heidelberg, Germany, 2021.

37. Almutiri, T.; Saeed, F. A Hybrid Feature Selection Method Combining Gini Index and Support Vector Machine with Recursive Feature Elimination for Gene Expression Classification. Int. J. Data Min. Model. Manag. 2022, 14, 41–62.

38. Almugren, N.; Alshamlan, H. A Survey on Hybrid Feature Selection Methods in Microarray Gene Expression Data for Cancer Classification. IEEE Access 2019, 7, 78533–78548.

39. Aziz, R.; Verma, C.K.; Srivastava, N. A Novel Approach for Dimension Reduction of Microarray. Comput. Biol. Chem. 2017, 71, 161–169.

40. Jain, I.; Jain, V.K.; Jain, R. Correlation Feature Selection Based Improved-Binary Particle Swarm Optimization for Gene Selection and Cancer Classification. Appl. Soft Comput. 2018, 62, 203–215.

41. Alshamlan, H.; Badr, G.; Alohali, Y. MRMR-ABC: A Hybrid Gene Selection Algorithm for Cancer Classification Using Microarray Gene Expression Profiling. Biomed Res. Int. 2015, 2015, 604910.

42. Vafaee Sharbaf, F.; Mosafer, S.; Moattar, M.H. A Hybrid Gene Selection Approach for Microarray Data Classification Using Cellular Learning Automata and Ant Colony Optimization. Genomics 2016, 107, 231–238.

43. Dashtban, M.; Balafar, M. Gene Selection for Microarray Cancer Classification Using a New Evolutionary Method Employing Artificial Intelligence Concepts. Genomics 2017, 109, 91–107.

44. Lu, H.; Chen, J.; Yan, K.; Jin, Q.; Xue, Y.; Gao, Z. A Hybrid Feature Selection Algorithm for Gene Expression Data Classification. Neurocomputing 2017, 256, 56–62.

45. Vijay, S.A.A.; GaneshKumar, P. Fuzzy Expert System Based on a Novel Hybrid Stem Cell (HSC) Algorithm for Classification of Micro Array Data. J. Med. Syst. 2018, 42, 61.

46. Hancer, E.; Xue, B.; Zhang, M. Differential Evolution for Filter Feature Selection Based on Information Theory and Feature Ranking. Knowl.-Based Syst. 2018, 140, 103–119.

47. Holland, J.H. Adaptation in Natural and Artificial Systems; The University of Michigan: Ann Arbor, MI, USA, 1975.

48. Kawamura, A.; Chakraborty, B. A Hybrid Approach for Optimal Feature Subset Selection with Evolutionary Algorithms. In Proceedings of the 2017 IEEE 8th International Conference on Awareness Science and Technology, iCAST 2017, Taichung, Taiwan, 8–10 November 2017.

49. Li, J.; Liu, H. Kent Ridge Biomedical Data Set Repository. Available online: http://sdmc-lit.org.sg/GEDatasets (accessed on 15 December 2020).

50. Van’t Veer, L.J.; Dai, H.; Van de Vijver, M.J.; He, Y.D.; Hart, A.A.M.; Mao, M.; Peterse, H.L.; Van Der Kooy, K.; Marton, M.J.; Witteveen, A.T.; et al. Gene Expression Profiling Predicts Clinical Outcome of Breast Cancer. Nature 2002, 415, 530–536.

51. Pomeroy, S.L.; Tamayo, P.; Gaasenbeek, M.; Sturla, L.M.; Angelo, M.; McLaughlin, M.E.; Kim, J.Y.H.; Goumnerova, L.C.; Black, P.M.; Lau, C.; et al. Prediction of Central Nervous System Embryonal Tumour Outcome Based on Gene Expression. Nature 2002, 415, 436–442.

52. Whitehead Institute Center for Genomic Research Cancer Genomics. Available online: http://www-genome.wi.mit.edu/cancer (accessed on 15 November 2022).

53. Liu, H.; Setiono, R. Chi2: Feature Selection and Discretization of Numeric Attributes. In Proceedings of the 7th IEEE International Conference on Tools with Artificial Intelligence, Herndon, VA, USA, 5–8 November 1995.

| Dataset | No. of Features | No. of Instances | No. of Classes |
|---|---|---|---|
| Breast | 24,481 | 97 | 2 |
| Lung | 12,600 | 203 | 5 |
| CNS | 7129 | 60 | 2 |
| Brain | 5597 | 42 | 5 |

| GA Parameter | Value |
|---|---|
| Crossover rate | 0.6 |
| Mutation rate | 0.02 |
| Number of chromosomes | 20 |
| Number of generations | 50 |
| Selection scheme | Tournament (0.25) |

Classification performance (%) on the Brain dataset:

| Classifier | Metric | All Features | IG | IG-GA | IGR | IGR-GA | CS | CS-GA |
|---|---|---|---|---|---|---|---|---|
| SVM | Accuracy | 69.05 | 73.81 | 85.71 | 78.57 | 97.62 | 83.33 | 97.62 |
| SVM | Recall | 58 | 63 | 75 | 78 | 97.5 | 83 | 97.5 |
| SVM | Precision | 42.28 | 64.05 | 68.57 | 66.52 | 98.18 | 88.41 | 98.18 |
| SVM | F-measure | 48.91 | 63.52 | 71.64 | 71.8 | 97.84 | 85.62 | 97.84 |
| NB | Accuracy | 69.05 | 88.1 | 92.86 | 85.71 | 95.24 | 83.33 | 95.24 |
| NB | Recall | 60.5 | 80.5 | 88 | 78 | 93 | 76.5 | 93 |
| NB | Precision | 58.69 | 90.91 | 94.55 | 88.72 | 95.96 | 88.77 | 95.96 |
| NB | F-measure | 59.58 | 85.39 | 91.16 | 83.02 | 94.46 | 82.18 | 94.46 |
| kNN | Accuracy | 78.57 | 80.95 | 92.86 | 83.33 | 97.62 | 80.95 | 97.62 |
| kNN | Recall | 75.5 | 80.5 | 92.5 | 83 | 97.5 | 80.5 | 97.5 |
| kNN | Precision | 86.36 | 84.33 | 94.85 | 88.33 | 98.18 | 87.05 | 98.18 |
| kNN | F-measure | 80.57 | 82.37 | 93.66 | 85.58 | 97.84 | 83.65 | 97.84 |
| DT | Accuracy | 50 | 61.9 | 85.71 | 64.29 | 88.1 | 69.05 | 85.71 |
| DT | Recall | 48.5 | 65 | 87 | 61.5 | 89.5 | 69 | 83.5 |
| DT | Precision | 53.06 | 61.73 | 87.22 | 63.83 | 88.5 | 69.89 | 88.01 |
| DT | F-measure | 50.68 | 63.32 | 87.11 | 62.64 | 89 | 69.44 | 85.7 |
| RF | Accuracy | 78.57 | 90.48 | 100 | 92.86 | 100 | 88.1 | 100 |
| RF | Recall | 76.5 | 88 | 100 | 93 | 100 | 88 | 100 |
| RF | Precision | 80.21 | 92.73 | 100 | 94.36 | 100 | 91.33 | 100 |
| RF | F-measure | 78.31 | 90.3 | 100 | 93.68 | 100 | 89.63 | 100 |
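The F-measure values in the table above are consistent with the usual harmonic mean of precision and recall. The short sketch below reproduces two entries from the All Features column; the function name is hypothetical, and the assumption that the reported recall and precision are averaged over classes before being combined is ours, not stated in the source.

```python
def f_measure(precision, recall):
    """Harmonic mean of precision and recall (same units in and out)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# SVM with all features: precision 42.28, recall 58
print(round(f_measure(42.28, 58), 2))    # 48.91

# NB with all features: precision 58.69, recall 60.5
print(round(f_measure(58.69, 60.5), 2))  # 59.58
```

Because the harmonic mean is pulled toward the smaller of the two values, a classifier with low precision cannot obtain a high F-measure through high recall alone.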

Classification performance (%) on the Breast dataset:

| Classifier | Metric | All Features | IG | IG-GA | IGR | IGR-GA | CS | CS-GA |
|---|---|---|---|---|---|---|---|---|
| SVM | Accuracy | 52.58 | 74.23 | 84.54 | 69.07 | 82.47 | 73.2 | 82.47 |
| SVM | Recall | 50 | 73.89 | 84.34 | 68.67 | 82.27 | 73.34 | 82.48 |
| SVM | Precision | 26.29 | 74.41 | 84.69 | 69.21 | 82.6 | 73.3 | 82.43 |
| SVM | F-measure | 34.46 | 74.15 | 84.51 | 68.94 | 82.43 | 73.32 | 82.45 |
| NB | Accuracy | 48.45 | 55.67 | 57.73 | 54.64 | 62.89 | 72.16 | 79.38 |
| NB | Recall | 46.93 | 53.47 | 55.54 | 52.71 | 60.87 | 72.04 | 79.33 |
| NB | Precision | 45.32 | 62.94 | 70.63 | 56.23 | 79.31 | 72.09 | 79.33 |
| NB | F-measure | 46.11 | 57.82 | 62.18 | 54.41 | 68.88 | 72.06 | 79.33 |
| kNN | Accuracy | 55.67 | 71.13 | 89.69 | 64.95 | 86.6 | 72.16 | 84.54 |
| kNN | Recall | 54.43 | 70.52 | 89.77 | 63.58 | 85.98 | 71.5 | 84.44 |
| kNN | Precision | 55.78 | 71.93 | 89.67 | 69.3 | 88.89 | 73.25 | 84.53 |
| kNN | F-measure | 55.1 | 71.22 | 89.72 | 66.32 | 87.41 | 72.36 | 84.48 |
| DT | Accuracy | 57.73 | 67.01 | 86.6 | 60.82 | 90.72 | 69.07 | 84.54 |
| DT | Recall | 57.25 | 66.71 | 86.3 | 60.51 | 90.43 | 68.88 | 84.34 |
| DT | Precision | 57.52 | 66.97 | 87.09 | 60.67 | 91.31 | 69 | 84.69 |
| DT | F-measure | 57.38 | 66.84 | 86.69 | 60.59 | 90.87 | 68.94 | 84.51 |
| RF | Accuracy | 63.92 | 86.6 | 89.69 | 87.63 | 93.81 | 81.44 | 85.57 |
| RF | Recall | 63.55 | 86.51 | 89.66 | 87.49 | 93.8 | 81.29 | 85.64 |
| RF | Precision | 63.85 | 86.6 | 89.66 | 87.71 | 93.8 | 81.48 | 85.54 |
| RF | F-measure | 63.7 | 86.55 | 89.66 | 87.6 | 93.8 | 81.38 | 85.59 |

Classification performance (%) on the Lung dataset:

| Classifier | Metric | All Features | IG | IG-GA | IGR | IGR-GA | CS | CS-GA |
|---|---|---|---|---|---|---|---|---|
| SVM | Accuracy | 78.82 | 92.12 | 94.09 | 83.25 | 94.58 | 84.24 | 95.07 |
| SVM | Recall | 41.13 | 71.6 | 75.68 | 52.8 | 86.47 | 55.15 | 90.03 |
| SVM | Precision | 75.27 | 75.72 | 76.41 | 54.47 | 98.53 | 54.9 | 98.66 |
| SVM | F-measure | 53.19 | 73.6 | 76.04 | 53.62 | 92.11 | 55.02 | 94.15 |
| NB | Accuracy | 90.15 | 95.07 | 98.52 | 93.6 | 97.54 | 92.12 | 97.04 |
| NB | Recall | 79.07 | 88.5 | 97.73 | 93.64 | 97.44 | 93.21 | 97.3 |
| NB | Precision | 88.21 | 94.36 | 98.54 | 88.9 | 93.92 | 84.92 | 93.05 |
| NB | F-measure | 83.39 | 91.34 | 98.13 | 91.21 | 95.65 | 88.87 | 95.13 |
| kNN | Accuracy | 92.61 | 92.61 | 97.04 | 89.66 | 96.06 | 92.12 | 95.57 |
| kNN | Recall | 80.73 | 87.91 | 94.87 | 73.98 | 92.74 | 80.36 | 91.79 |
| kNN | Precision | 95.22 | 89.1 | 95.4 | 93.1 | 97.78 | 95.09 | 97.65 |
| kNN | F-measure | 87.38 | 88.5 | 95.13 | 82.45 | 95.19 | 87.11 | 94.63 |
| DT | Accuracy | 84.73 | 86.7 | 96.55 | 84.73 | 96.06 | 85.22 | 96.55 |
| DT | Recall | 69.07 | 73.69 | 94.68 | 69.07 | 92.2 | 72.4 | 93.15 |
| DT | Precision | 84.15 | 80.63 | 94.35 | 84.15 | 95.21 | 85.92 | 96.21 |
| DT | F-measure | 75.87 | 77 | 94.51 | 75.87 | 93.68 | 78.58 | 94.66 |
| RF | Accuracy | 83.74 | 93.6 | 96.06 | 91.13 | 95.57 | 92.61 | 96.06 |
| RF | Recall | 59.1 | 85.6 | 90.58 | 78.46 | 92.01 | 83.47 | 92.97 |
| RF | Precision | 93.54 | 97.15 | 97.86 | 94.45 | 97.73 | 96.82 | 97.86 |
| RF | F-measure | 72.43 | 91.01 | 94.08 | 85.72 | 94.78 | 89.65 | 95.35 |

Classification performance (%) on the CNS dataset:

| Classifier | Metric | All Features | IG | IG-GA | IGR | IGR-GA | CS | CS-GA |
|---|---|---|---|---|---|---|---|---|
| SVM | Accuracy | 65 | 65 | 86.67 | 65 | 65 | 65 | 83.33 |
| SVM | Recall | 50 | 50 | 82.05 | 50 | 50 | 50 | 77.29 |
| SVM | Precision | 32.5 | 32.5 | 88.89 | 32.5 | 32.5 | 32.5 | 86.58 |
| SVM | F-measure | 39.39 | 39.39 | 85.33 | 39.39 | 39.39 | 39.39 | 81.67 |
| NB | Accuracy | 61.67 | 75 | 90 | 78.33 | 88.33 | 70 | 83.33 |
| NB | Recall | 59.52 | 74.18 | 87.91 | 75.64 | 87.73 | 69.23 | 81.68 |
| NB | Precision | 59.03 | 72.92 | 89.86 | 76.25 | 86.96 | 68 | 81.68 |
| NB | F-measure | 59.27 | 73.54 | 88.87 | 75.94 | 87.34 | 68.61 | 81.68 |
| kNN | Accuracy | 61.67 | 75 | 93.33 | 68.33 | 83.33 | 75 | 88.33 |
| kNN | Recall | 54.03 | 71.98 | 91.58 | 61.36 | 78.39 | 69.78 | 84.43 |
| kNN | Precision | 55.12 | 72.5 | 93.71 | 64.44 | 84.44 | 73.01 | 90.06 |
| kNN | F-measure | 54.57 | 72.24 | 92.63 | 62.86 | 81.3 | 71.36 | 87.15 |
| DT | Accuracy | 58.33 | 70 | 93.33 | 68.33 | 93.33 | 61.67 | 88.33 |
| DT | Recall | 54.76 | 67.03 | 91.58 | 63.55 | 91.58 | 54.03 | 84.43 |
| DT | Precision | 54.67 | 67.03 | 93.71 | 64.68 | 93.71 | 55.12 | 90.06 |
| DT | F-measure | 54.71 | 67.03 | 92.63 | 64.11 | 92.63 | 54.57 | 87.15 |
| RF | Accuracy | 53.33 | 80 | 91.67 | 80 | 90 | 83.33 | 88.33 |
| RF | Recall | 45.42 | 75.82 | 89.19 | 72.53 | 85.71 | 78.39 | 85.53 |
| RF | Precision | 44.44 | 78.93 | 92.46 | 72.53 | 93.33 | 84.44 | 88.49 |
| RF | F-measure | 44.92 | 77.34 | 90.8 | 72.53 | 89.36 | 81.3 | 86.98 |

Number of features retained (values shared across classifiers are repeated on each row):

| Dataset | Classifier | All Features | IG | IG-GA | IGR | IGR-GA | CS | CS-GA |
|---|---|---|---|---|---|---|---|---|
| Brain | SVM | 5597 | 280 | 136 | 280 | 138 | 280 | 134 |
| Brain | NB | 5597 | 280 | 148 | 280 | 133 | 280 | 147 |
| Brain | kNN | 5597 | 280 | 132 | 280 | 129 | 280 | 136 |
| Brain | DT | 5597 | 280 | 121 | 280 | 131 | 280 | 122 |
| Brain | RF | 5597 | 280 | 138 | 280 | 144 | 280 | 155 |
| Brain | Average | 5597 | 280 | 135 | 280 | 135 | 280 | 139 |
| Breast | SVM | 24,481 | 1224 | 611 | 1224 | 624 | 1224 | 612 |
| Breast | NB | 24,481 | 1224 | 642 | 1224 | 604 | 1224 | 617 |
| Breast | kNN | 24,481 | 1224 | 621 | 1224 | 613 | 1224 | 641 |
| Breast | DT | 24,481 | 1224 | 602 | 1224 | 623 | 1224 | 606 |
| Breast | RF | 24,481 | 1224 | 610 | 1224 | 618 | 1224 | 639 |
| Breast | Average | 24,481 | 1224 | 617 | 1224 | 616 | 1224 | 623 |
| Lung | SVM | 12,600 | 630 | 329 | 630 | 310 | 630 | 330 |
| Lung | NB | 12,600 | 630 | 327 | 630 | 316 | 630 | 326 |
| Lung | kNN | 12,600 | 630 | 323 | 630 | 329 | 630 | 327 |
| Lung | DT | 12,600 | 630 | 313 | 630 | 323 | 630 | 296 |
| Lung | RF | 12,600 | 630 | 336 | 630 | 310 | 630 | 321 |
| Lung | Average | 12,600 | 630 | 326 | 630 | 318 | 630 | 320 |
| CNS | SVM | 7129 | 356 | 194 | 356 | 178 | 356 | 157 |
| CNS | NB | 7129 | 356 | 168 | 356 | 181 | 356 | 183 |
| CNS | kNN | 7129 | 356 | 182 | 356 | 189 | 356 | 196 |
| CNS | DT | 7129 | 356 | 182 | 356 | 189 | 356 | 159 |
| CNS | RF | 7129 | 356 | 190 | 356 | 156 | 356 | 167 |
| CNS | Average | 7129 | 356 | 183 | 356 | 179 | 356 | 172 |
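The filter-stage counts in the table above follow from keeping the top 5% of each dataset's features, as stated in Algorithm 1. The sketch below reproduces them with integer arithmetic. Rounding to the nearest whole feature is an assumption that happens to match all four reported counts; the source does not state the rounding rule, and the function name is hypothetical.

```python
def top_five_percent(n_features):
    """Number of features kept by the filter, rounded to the nearest integer."""
    return (n_features * 5 + 50) // 100

datasets = {"Brain": 5597, "Breast": 24481, "Lung": 12600, "CNS": 7129}
for name, n_features in datasets.items():
    print(name, top_five_percent(n_features))
# Brain 280
# Breast 1224
# Lung 630
# CNS 356
```

The GA stage then roughly halves these counts; for example, the Brain average of 135 features after IG-GA is about 48% of the 280 features passed on by the filter.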

| Dataset | Classifier | IG-GA (proposed) | IGR-GA (proposed) | CS-GA (proposed) | GI-SVM-RFE [37] | Fusion [36] | PCC-GA [35] | PCC-BPSO [35] |
|---|---|---|---|---|---|---|---|---|
| Brain | SVM | 85.71 | 97.62 | 97.62 | N/A | 95 | 97.62 | 97.62 |
| Brain | NB | 92.86 | 95.24 | 95.24 | 88 | N/A | 90.48 | 92.86 |
| Brain | kNN | 92.86 | 97.62 | 97.62 | 87.50 | N/A | 95.24 | 97.62 |
| Brain | DT | 85.71 | 88.1 | 85.71 | 71.50 | N/A | N/A | N/A |
| Brain | RF | 100 | 100 | 100 | 90 | 88.67 | 95.24 | 85.71 |
| Breast | SVM | 84.54 | 82.47 | 82.47 | N/A | 75.11 | 88.66 | 90.72 |
| Breast | NB | 57.73 | 62.89 | 79.38 | 90.67 | N/A | 85.57 | 88.66 |
| Breast | kNN | 89.69 | 86.6 | 84.54 | 87.67 | N/A | 86.60 | 87.63 |
| Breast | DT | 86.6 | 90.72 | 84.54 | 72.22 | N/A | N/A | N/A |
| Breast | RF | 89.69 | 93.81 | 85.57 | 88.67 | 84.65 | 84.54 | 85.57 |
| Lung | SVM | 94.09 | 94.58 | 95.07 | N/A | N/A | 97.54 | 97.04 |
| Lung | NB | 98.52 | 97.54 | 97.04 | 91.17 | N/A | 97.04 | 98.03 |
| Lung | kNN | 97.04 | 96.06 | 95.57 | 92.62 | N/A | 97.54 | 96.06 |
| Lung | DT | 96.55 | 96.06 | 96.55 | 88.71 | N/A | N/A | N/A |
| Lung | RF | 96.06 | 95.57 | 96.06 | 93.64 | N/A | 96.06 | 96.06 |
| CNS | SVM | 86.67 | 65 | 83.33 | N/A | 75.00 | 98.33 | 91.94 |
| CNS | NB | 90 | 88.33 | 83.33 | 85 | N/A | 90 | 91.94 |
| CNS | kNN | 93.33 | 83.33 | 88.33 | 81.67 | N/A | 96.67 | 93.55 |
| CNS | DT | 93.33 | 93.33 | 88.33 | 75 | N/A | N/A | N/A |
| CNS | RF | 91.67 | 90 | 88.33 | 83.33 | 76.48 | 85.00 | 91.94 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ali, W.; Saeed, F. Hybrid Filter and Genetic Algorithm-Based Feature Selection for Improving Cancer Classification in High-Dimensional Microarray Data. Processes 2023, 11, 562. https://0-doi-org.brum.beds.ac.uk/10.3390/pr11020562
