Figure 1.

Examples of close-up images (left), large field images (right) and the corresponding ROI maps (the binary images).

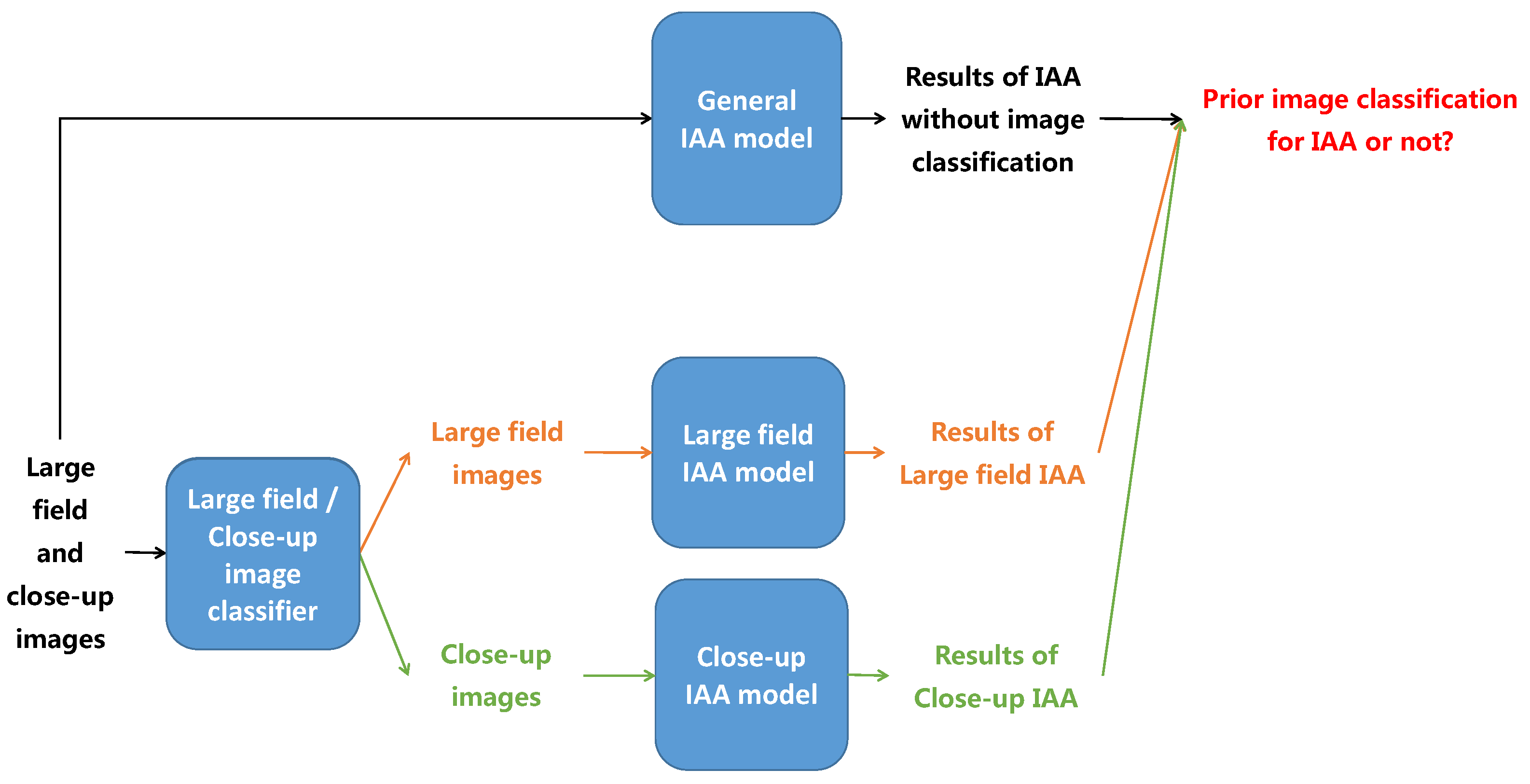

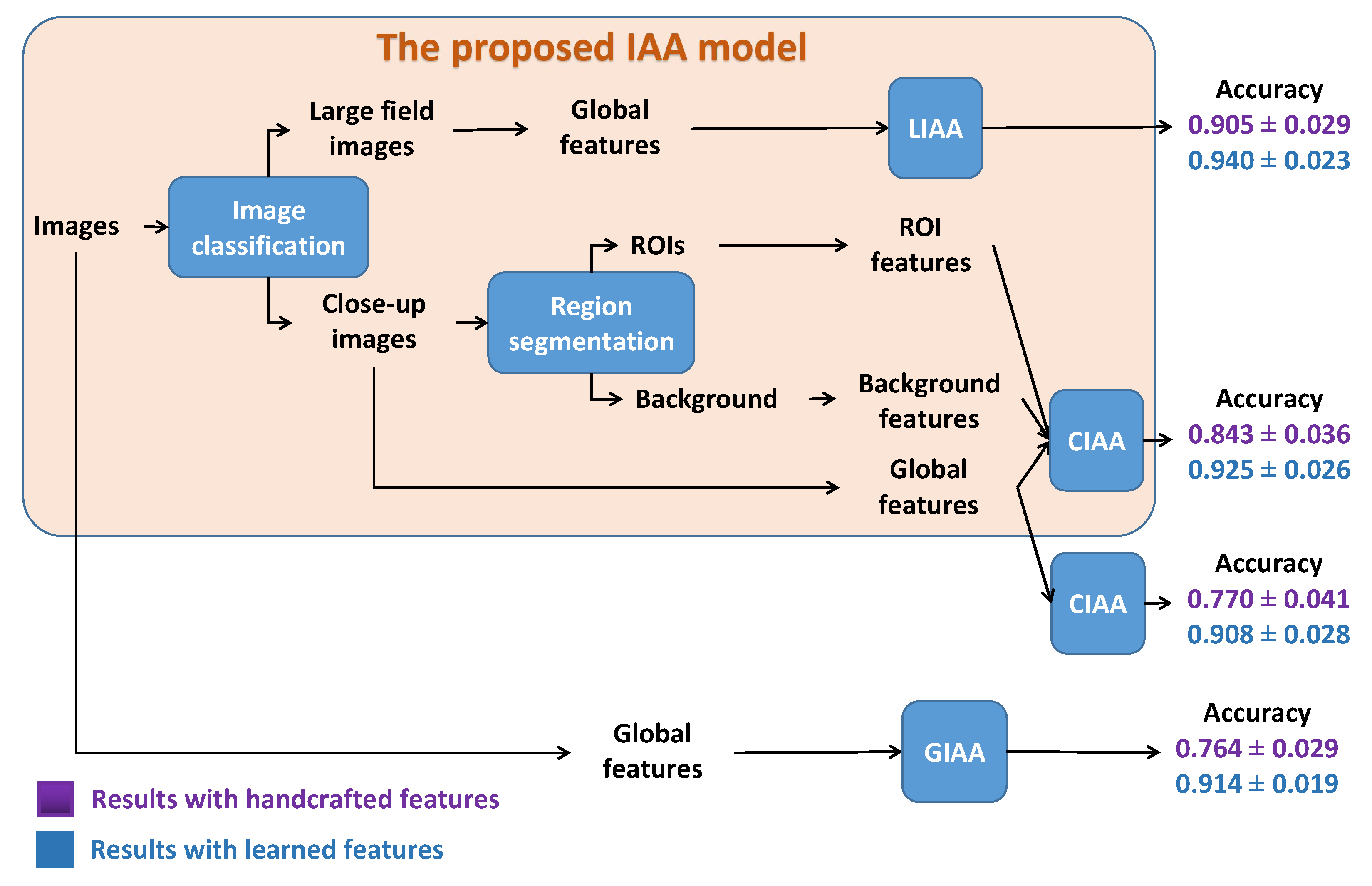

Figure 2.

The process of image aesthetic study based on LCIC results.

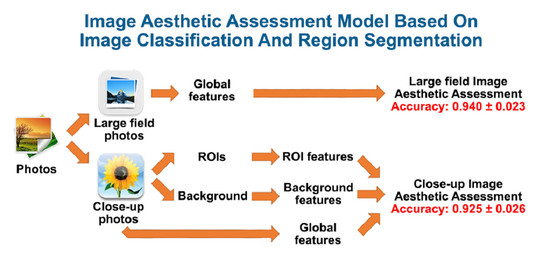

Figure 3.

The process of image aesthetic study based on ROIE results.

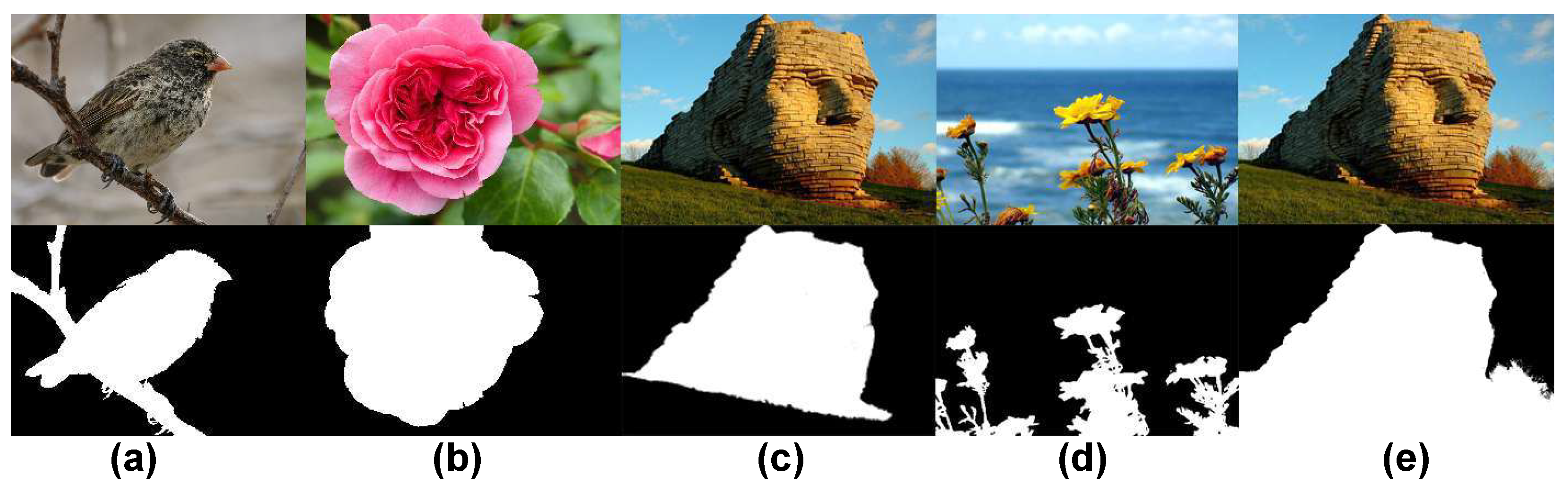

Figure 4.

Examples of different definitions of ROIs. The first row contains color images and the second row contains the corresponding ROI maps. (a) ROIs defined according to sharpness. (b) ROIs defined according to color saliency. (c) ROIs defined as object regions. (d,e) Our ROI definition based on both sharpness and color saliency.

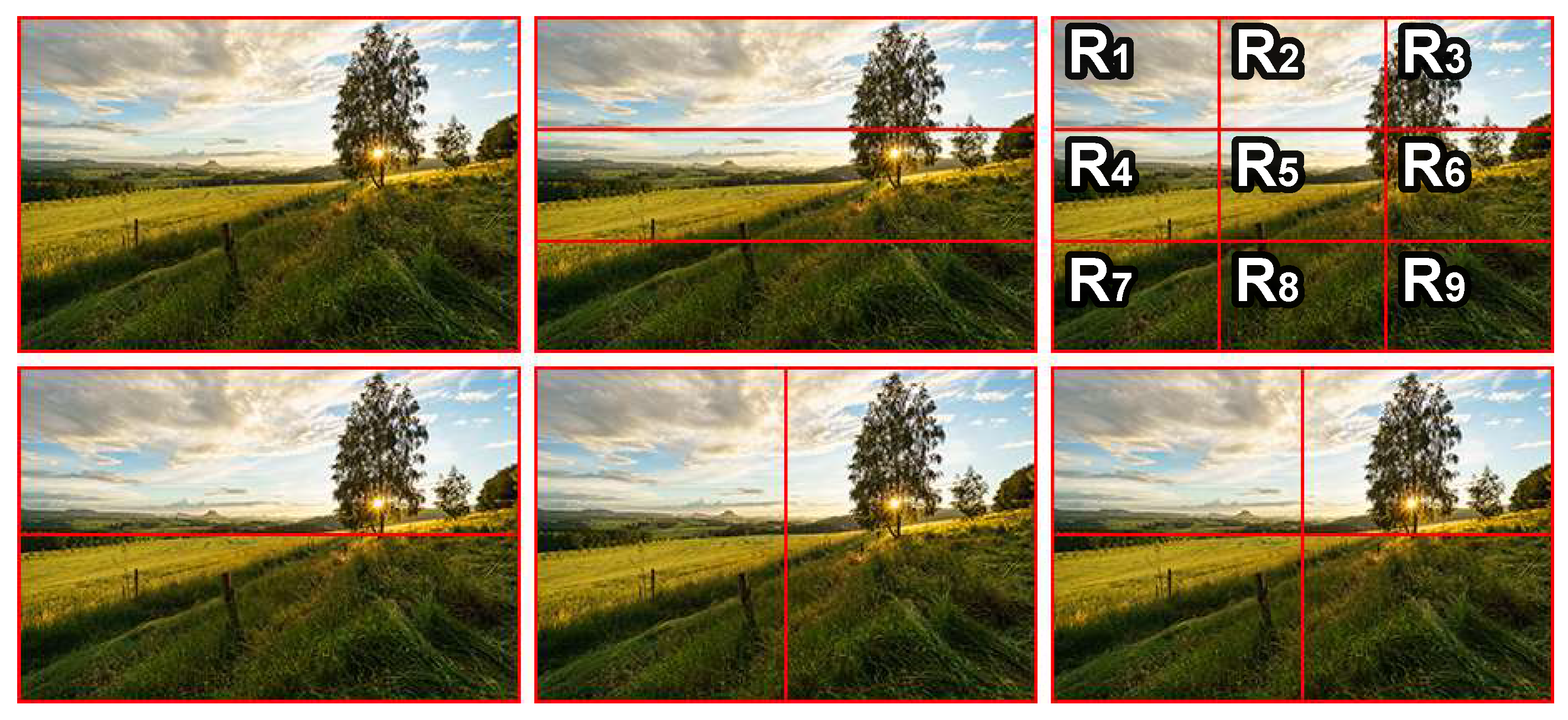

Figure 5.

Illustrations of region splits. First row: whole scene, regions split by the landscape rule and by the rule of thirds, respectively. Second row: regions split by symmetry rules.

Figure 6.

Sharpness estimation process. (a) original image, (b) Aydin’s clearness map, (c) sharpness distribution at level 2, (d) sharpness distribution at level 5, (e) sharpness map, (f) in-focus map.

Figure 7.

ROI map computation process. (a) original images, (b) sharpness maps, (c) color saliency maps, (d) ROI maps, (e) binarized ROI maps.

Figure 8.

Examples of rectangles representing the distribution of pixel values. (a,d) original images, (b,e) sharpness maps, (c,f) color saliency maps. Red rectangles represent the distributions of pixel values in those images while blue rectangles reflect the distributions for the corresponding video inverted images.

Figure 9.

Structure of the three deep models: (a) structure of the first two models, which contain three main components: an encoding component, a transformation component and a decoding component. (b) structure of a residual block. (c) structure of a convolutional block. (d) structure of the third model, which uses convolutional blocks only.

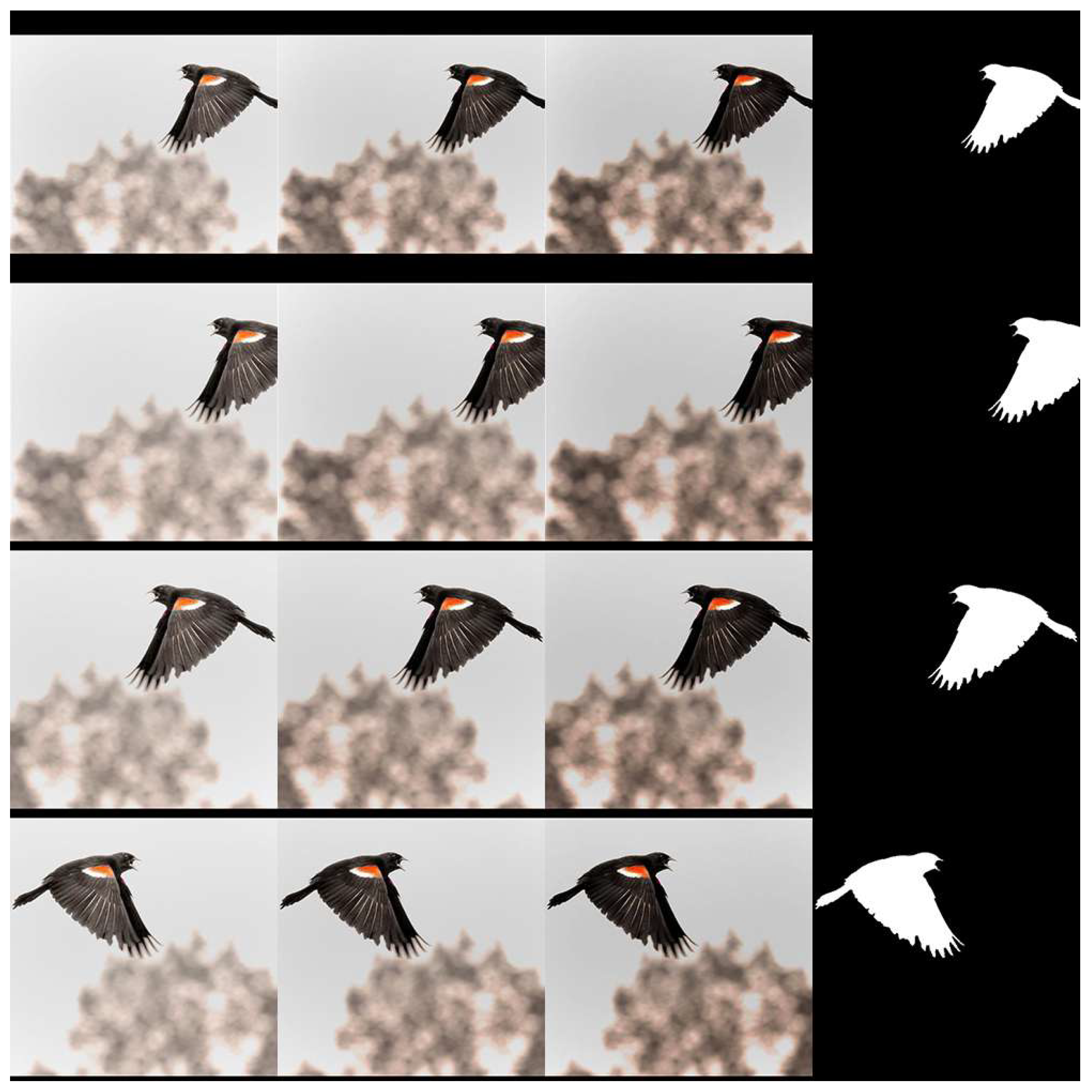

Figure 10.

Examples of data augmentation. The three left columns contain the augmented versions while the last column shows the corresponding ROI ground truth.

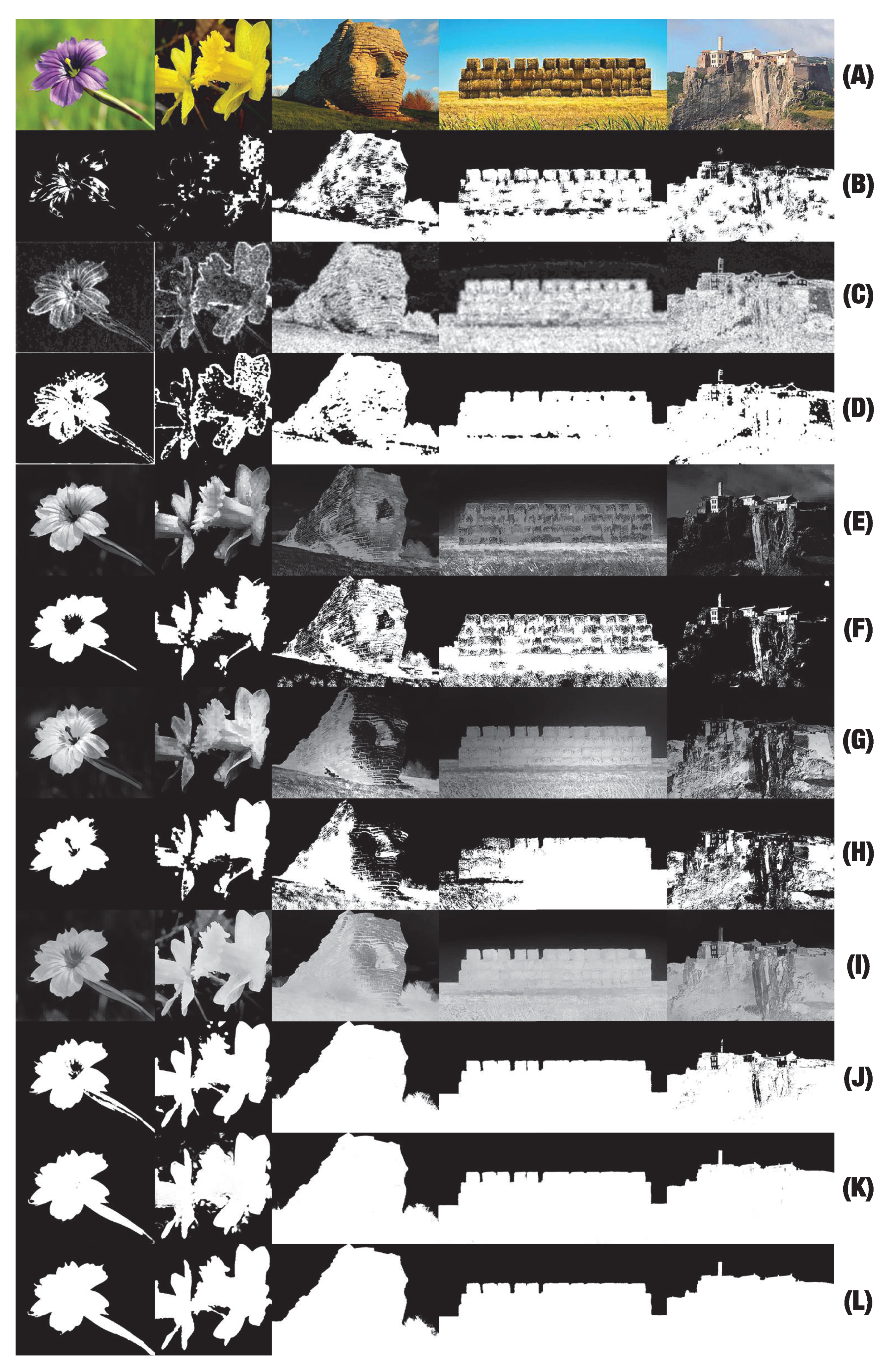

Figure 11.

Examples of ROI maps. (a) Original images. (b) Tang’s [25] sharpness maps. (c,d) Aydin’s [5] clearness maps and the binarized versions of them. (e,f) Perazzi’s [27] color saliency maps and the binarized versions of them. (g,h) Zheng’s [28] color saliency maps and the binarized versions of them. (i,j) Handcrafted ROI maps based on both sharpness and color information and the binarized versions of them. (k) ROI maps generated by the first deep model. (l) ground truth.

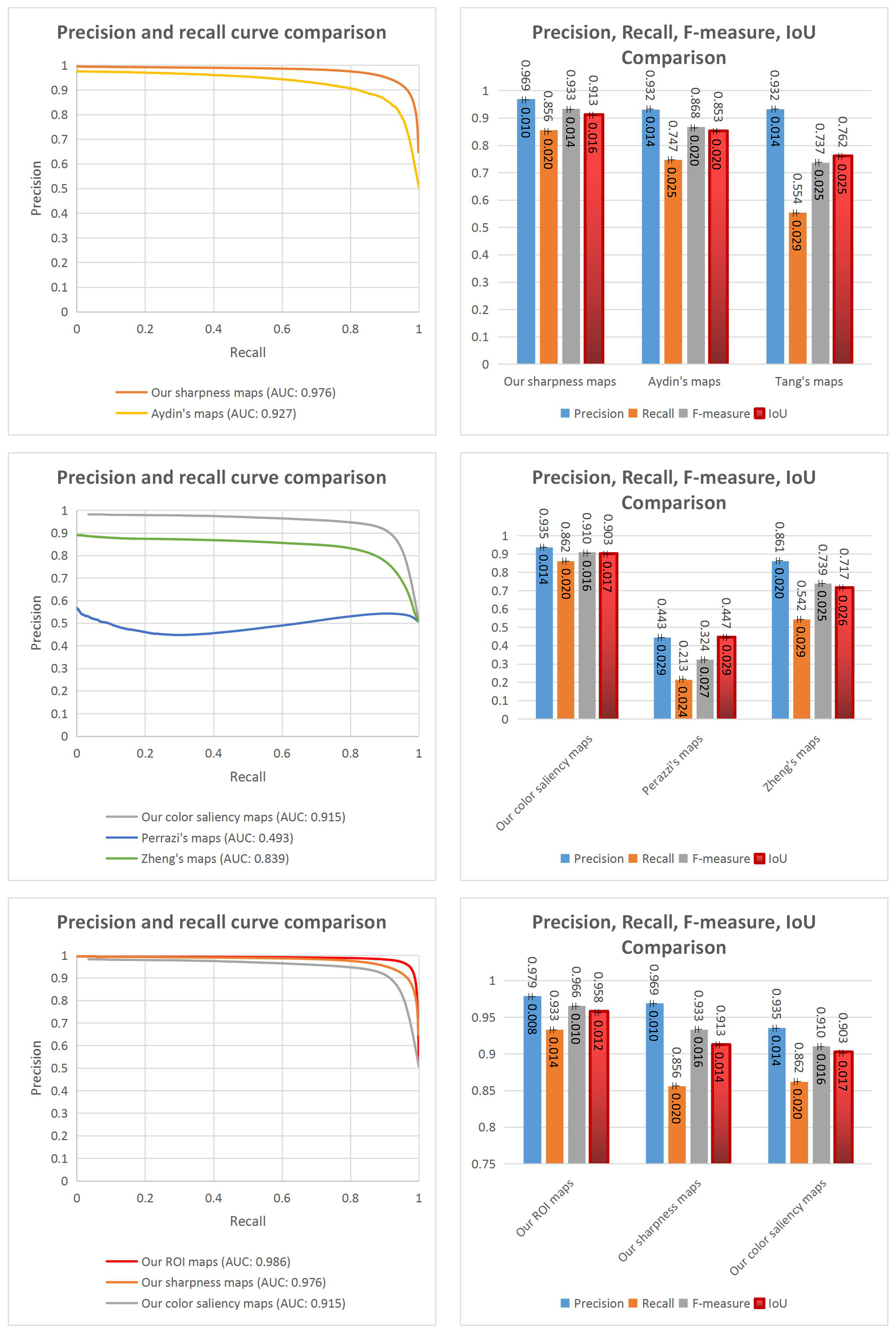

Figure 12.

Evaluations for ROI maps. First row: Evaluations for the proposed sharpness estimation method, Aydin’s method and Tang’s method (Tang’s ROI maps are binary maps so it is not necessary to consider their precision and recall curve). Second row: Evaluations for the proposed color saliency estimation method, Perazzi’s method and Zheng’s method. Third row: Evaluations for our handcrafted ROIE method, sharpness estimation method and color saliency estimation method.

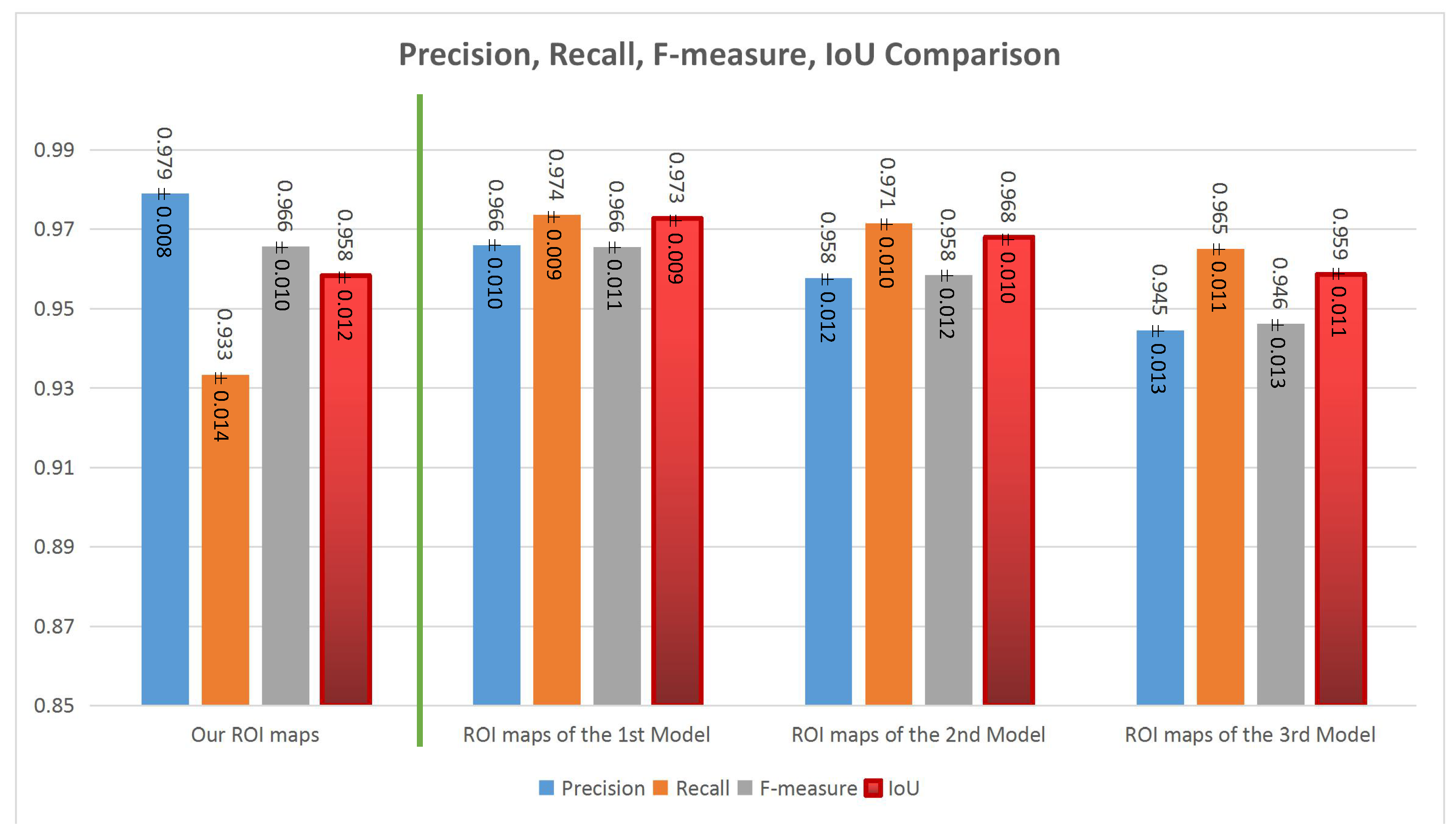

Figure 13.

Evaluations for our handcrafted ROIE method (on the left side) and our deep models (on the right side).

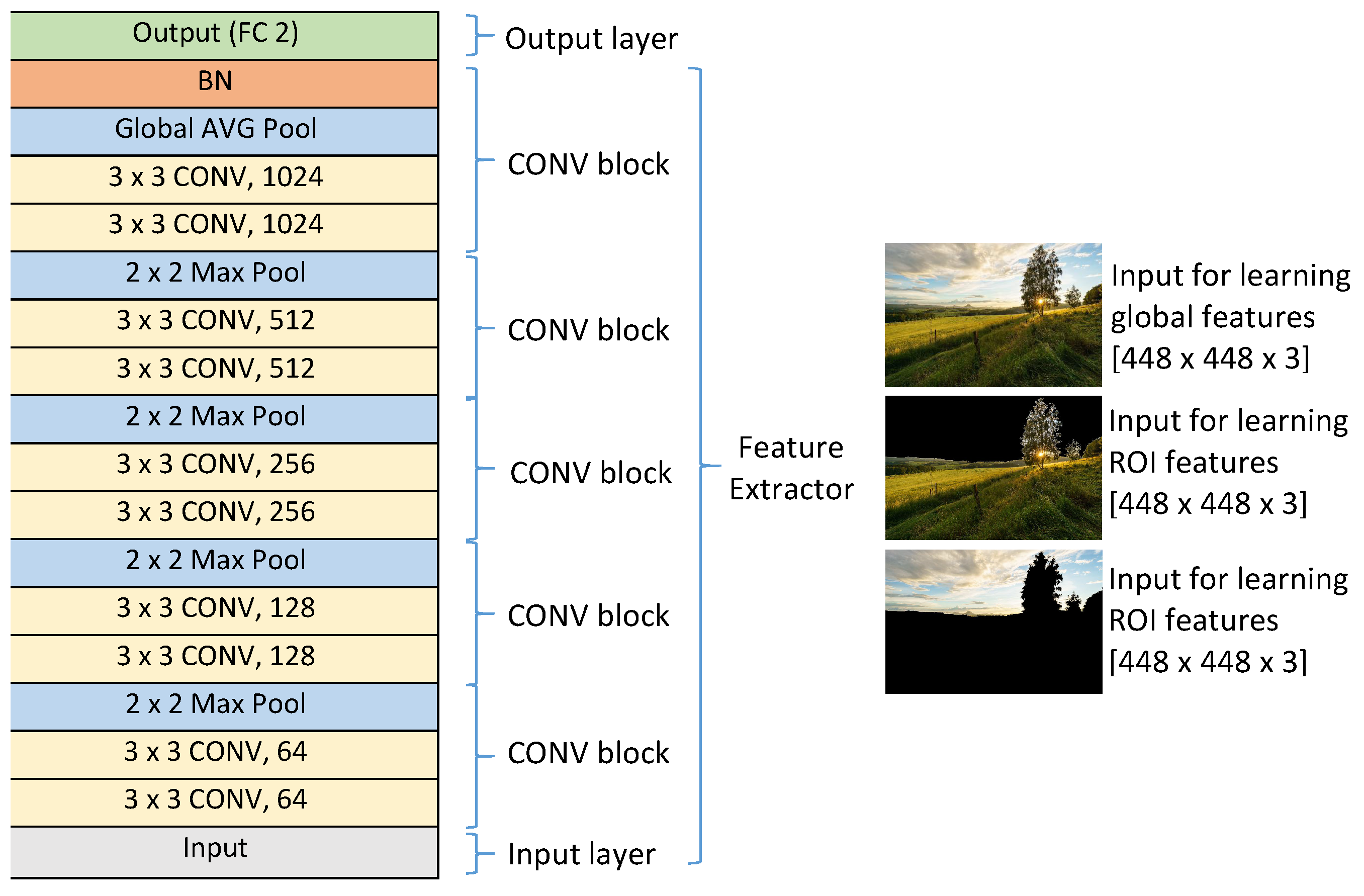

Figure 14.

General structure of the models learning aesthetic features from the whole image, ROIs and background.

Figure 15.

Examples of the two generated versions based on ROIE. (a) The original image. (b) The ROI map. (c) The first version. (d) The second version.

Figure 16.

Models using transferred features for LIAA, CIAA and GIAA.

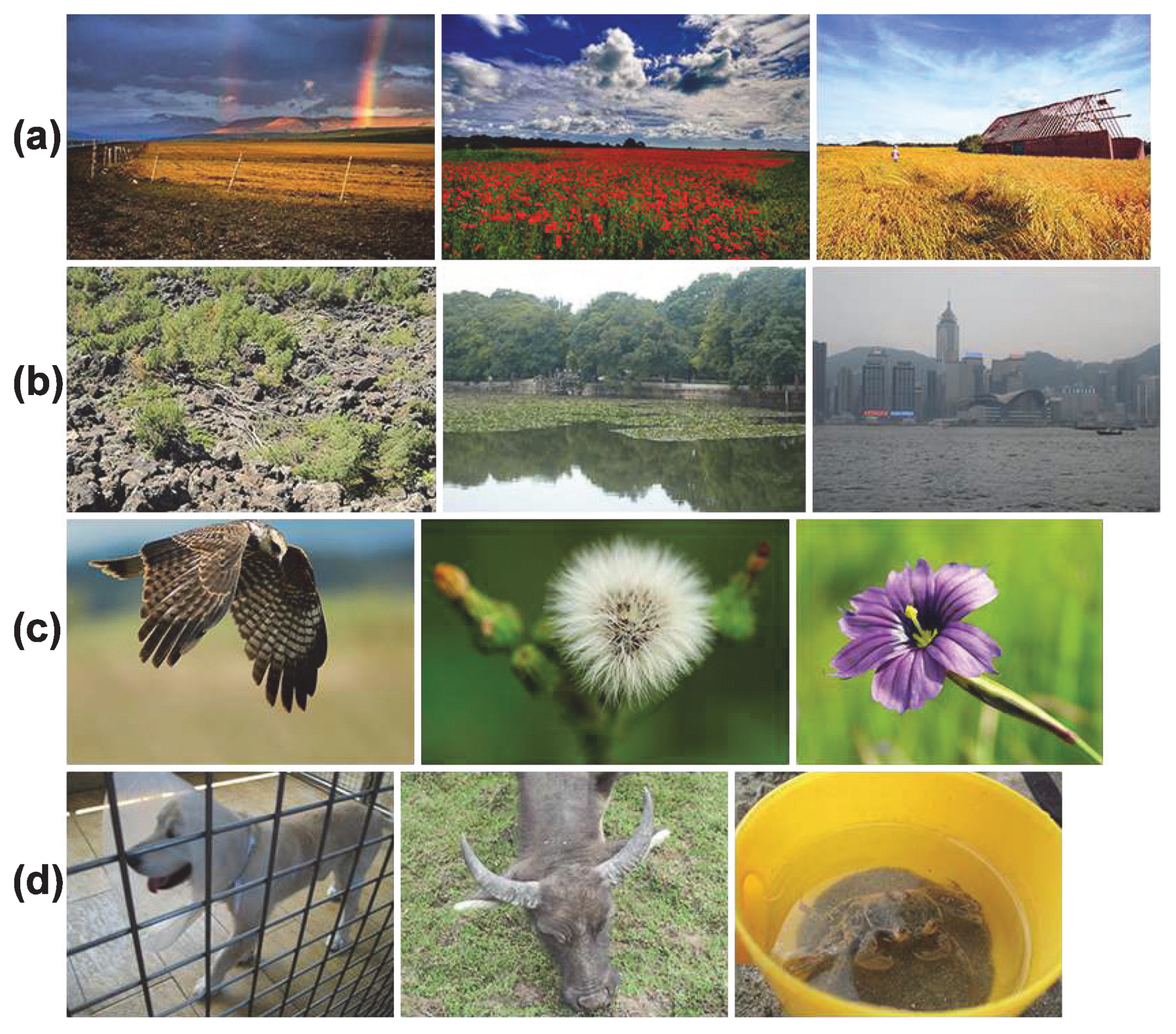

Figure 17.

Examples of high and low aesthetic images: (a) high aesthetic large field images, (b) low aesthetic large field images, (c) high aesthetic close-up images, (d) low aesthetic close-up images.

Figure 18.

Proposed algorithm for IAA.

Table 1.

Overview of the proposed handcrafted features for LCIC. The regions referred to in the formulas are the regions split by the rule of thirds (see the top right photo in Figure 5).

| Features | Formula |
|---|---|
| Sharpness features | mean of gradient values in |
| | mean of gradient values in |
| | mean of gradient values in |
| | standard deviation of gradient values in |
| | gradient contrast between and |
| | gradient contrast between and |
| | gradient contrast between and |
| | standard deviation of gradient values in the whole image |
| Color features | brightness contrast between and |
| | brightness contrast between and |
| | brightness contrast between and |
| | color contrast between and |
| | color contrast between and |
| | color contrast between and |
| ROI/background features | proportion of ROI pixels in |
| | proportion of ROI pixels in |
| | proportion of ROI pixels in |
| | mean of gradient values in ROIs |
| | relations between ROIs and background |
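
The region-based features in Table 1 can be illustrated with a minimal sketch. The region symbols did not survive in the table, so the 3x3 grid used here, the finite-difference gradient operator and the function names `thirds_regions`, `gradient_magnitude` and `region_features` are all assumptions made for illustration, not the definitions used in the paper.

```python
import numpy as np

def thirds_regions(height, width):
    """Slices of the 3x3 grid induced by the rule-of-thirds lines (assumed layout)."""
    rows = [0, height // 3, 2 * height // 3, height]
    cols = [0, width // 3, 2 * width // 3, width]
    return [
        (slice(rows[i], rows[i + 1]), slice(cols[j], cols[j + 1]))
        for i in range(3)
        for j in range(3)
    ]

def gradient_magnitude(gray):
    """Finite-difference gradient magnitude of a 2-D grayscale image."""
    gy, gx = np.gradient(np.asarray(gray, dtype=np.float64))
    return np.hypot(gx, gy)

def region_features(gray, roi_mask):
    """Mean gradient and proportion of ROI pixels for each grid cell (row-major order)."""
    grad = gradient_magnitude(gray)
    mask = np.asarray(roi_mask, dtype=bool)
    features = []
    for region in thirds_regions(*grad.shape):
        features.append({
            "mean_gradient": float(grad[region].mean()),
            "roi_proportion": float(mask[region].mean()),
        })
    return features
```

Calling `region_features(gray, roi_mask)` on a grayscale array and a binary ROI map of the same shape returns nine dictionaries, one per grid cell. Contrast features between two regions could then be derived from these per-region statistics, although the exact contrast definition is not recoverable from the table.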

Table 2.

Overview of evaluation criteria for LCIC. The confidence interval is computed at the 95% confidence level and the number of samples N is 800.

| Evaluation Criteria | Formula |
|---|---|
| Accuracy | |
| Confidence interval of accuracy | |
| Feature computational time | |
| Classification time | |
| Total computational time | |

Table 3.

LCICs using EXIF features, handcrafted features and learned features.

| LCIC Using | Accuracy | Hardware | Feature computational time | Classification time | Total computational time |
|---|---|---|---|---|---|
| The four EXIF features | | Without the GPU | 1 ms | 1 ms | 2 ms |
| The 21 handcrafted features | | Without the GPU | 30 ms | 1 ms | 31 ms |
| Wang’s feature set (105 features) | | | | | |
| Zhuang’s feature set (257 features) | | | | | |
| The 925 most relevant VGG16 features | | Without the GPU | 434 ms | 2 ms | 436 ms |
| | | With the GPU | 16 ms | 2 ms | 18 ms |
| The 21 most relevant VGG16 features | | Without the GPU | 434 ms | 1 ms | 435 ms |
| | | With the GPU | 16 ms | 1 ms | 17 ms |
| The four most relevant VGG16 features | | Without the GPU | 434 ms | 1 ms | 435 ms |
| | | With the GPU | 16 ms | 1 ms | 17 ms |

Table 4.

Evaluation criteria of ROI detection methods: Precision, Recall, F-measure and Intersection over Union. The quantities in the formulas are numbers of pixels, computed from the predicted ROIs and the ROIs according to the ground truth, and from the predicted background and the background according to the ground truth.

| Evaluation Criteria of ROI Detection Methods | Formula |
|---|---|
| Precision | |
| Recall | |
| F-measure | |
| Intersection over Union | |
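
The formulas of Table 4 did not survive, so the sketch below uses the standard pixel-wise definitions of the four criteria on binary maps. The function name `roi_metrics` and the `beta2` weight of the F-measure are assumptions; the default `beta2=1.0` gives the balanced F-measure, and the weighting actually used in the paper is not recoverable here.

```python
import numpy as np

def roi_metrics(pred, truth, beta2=1.0):
    """Pixel-wise precision, recall, F-measure and IoU for binary ROI maps."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)

    tp = np.count_nonzero(pred & truth)    # predicted ROI, ground-truth ROI
    fp = np.count_nonzero(pred & ~truth)   # predicted ROI, ground-truth background
    fn = np.count_nonzero(~pred & truth)   # predicted background, ground-truth ROI

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = beta2 * precision + recall
    f_measure = (1 + beta2) * precision * recall / denom if denom else 0.0
    union = tp + fp + fn
    iou = tp / union if union else 0.0

    return {
        "precision": precision,
        "recall": recall,
        "f_measure": f_measure,
        "iou": iou,
    }
```

For a gray-level ROI map, a precision and recall curve can be traced by binarizing the map at a range of thresholds and calling `roi_metrics` once per threshold.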

Table 5.

Overview of the proposed handcrafted features for GIAA.

| Features | Formula |
|---|---|
| Global features | mean of gradient values |
| | mean of brightness values |
| | standard deviation of brightness values |
| | number of main brightness bins (brightness range is split into 64 bins) |
| | mean of saturation values |
| | standard deviation of saturation values |
| | kurtosis of saturation values |
| | standard deviation of hue values |
| | number of main hue bins (hue range is split into 64 bins) |
| | number of main colors |
| | standard deviations of red, green and blue values |
| | coordinates of the center point determined by gradient values |
| | coordinates of the center point determined by saturation values |
| | coordinates of the center point determined by brightness values |
| ROI and background features | number of main hue bins of ROIs |
| | mean of gradient values of ROIs |
| | brightness contrast between ROIs and background |
| | mean of gradient values of background |
| | mean of brightness values of background |
| | number of main saturation bins of background |
| | number of main hue bins of background |

Table 6.

Overview of the proposed handcrafted features for LIAA.

| Features | Formula |
|---|---|
| Global features | mean of gradient values |
| | standard deviation of gradient values |
| | mean of brightness values |
| | standard deviation of brightness values |
| | mean of saturation values |
| | standard deviation of saturation values |
| | colorfulness |
| | min distance to intersection points (based on the rule of thirds) determined by sharpness values |
| | min distance to intersection points (based on the rule of thirds) determined by color saliency values |
| | min distance to intersection points (based on the rule of thirds) determined by brightness values |
| ROI and background features | mean of gradient values of ROIs |
| | mean of color saliency values of ROIs |
| | mean of saturation values of ROIs |
| | mean of brightness values of ROIs |
| | colorfulness of ROIs |
| | sharpness contrast between ROIs and background |
| | color contrast between ROIs and background |
| | brightness contrast between ROIs and background |
| | saturation contrast between ROIs and background |
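
The "min distance to intersection points (based on the rule of thirds)" features can be sketched as follows. The table does not say how the reference point is obtained from a sharpness, color saliency or brightness map, so this sketch assumes a value-weighted centroid in coordinates normalized to [0, 1]; the function names `weighted_center` and `min_distance_to_thirds` are hypothetical.

```python
import numpy as np

def weighted_center(value_map):
    """Value-weighted centroid (x, y) of a 2-D map, in coordinates normalized to [0, 1]."""
    values = np.asarray(value_map, dtype=np.float64)
    total = values.sum()
    if total == 0:
        return 0.5, 0.5  # no mass: fall back to the image center
    height, width = values.shape
    ys, xs = np.mgrid[0:height, 0:width]
    # Use pixel centers so the result does not depend on the resolution.
    cx = (((xs + 0.5) / width) * values).sum() / total
    cy = (((ys + 0.5) / height) * values).sum() / total
    return float(cx), float(cy)

def min_distance_to_thirds(value_map):
    """Distance from the weighted center to the nearest rule-of-thirds intersection."""
    cx, cy = weighted_center(value_map)
    intersections = [(x, y) for x in (1 / 3, 2 / 3) for y in (1 / 3, 2 / 3)]
    return min(float(np.hypot(cx - x, cy - y)) for x, y in intersections)
```

Under these assumptions, a map whose mass sits on one of the four intersection points yields a distance of 0, while a map centered in the image yields about 0.236.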

Table 7.

Overview of the proposed handcrafted features for CIAA.

| Features | Formula |
|---|---|
| Global features | colorfulness |
| | min distance to intersection points (based on the rule of thirds) determined by sharpness values |
| | min distance to intersection points (based on the rule of thirds) determined by color saliency values |
| | min distance to intersection points (based on the rule of thirds) determined by brightness values |
| | distribution of sharpness values |
| | distribution of color saliency values |
| ROI and background features | mean of gradient values of ROIs |
| | standard deviation of gradient values of ROIs |
| | mean of color saliency values of ROIs |
| | standard deviation of color saliency values of ROIs |
| | mean of saturation values of ROIs |
| | standard deviation of saturation values of ROIs |
| | mean of brightness values of ROIs |
| | standard deviation of brightness values of ROIs |
| | colorfulness of ROIs |
| | mean of gradient values of background |
| | colorfulness of background |
| | sharpness contrast between ROIs and background |
| | color contrast between ROIs and background |
| | brightness contrast between ROIs and background |
| | saturation contrast between ROIs and background |

Table 8.

Overview of evaluation criteria for IAA. The confidence interval is computed at the 95% confidence level and the number of samples N is 800, 400 and 400 for GIAA, LIAA and CIAA, respectively. TP, FP, TN and FN are numbers of images.

| Evaluation Criteria | Formula |
|---|---|
| Accuracy | |
| Confidence interval | |
| Lower accuracy | |
| Upper accuracy | |
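
The formulas of Table 8 are missing, so the following is only a sketch of one common way to obtain the four criteria: accuracy from TP, FP, TN and FN, and a normal-approximation interval with z = 1.96 for the 95% level. Both the interval formula and the function name `accuracy_interval` are assumptions. The approximation reproduces the reported figures closely: an accuracy of 0.800 with N = 800 gives a half-width of about 0.0277, in line with the 0.800 and 0.028 entries reported for GIAA.

```python
import math

def accuracy_interval(tp, fp, tn, fn, z=1.96):
    """Accuracy with a normal-approximation confidence interval.

    Returns (accuracy, half_width, lower_accuracy, upper_accuracy).
    z = 1.96 corresponds to a 95% confidence level (assumed formula).
    """
    n = tp + fp + tn + fn
    if n == 0:
        raise ValueError("at least one sample is required")
    accuracy = (tp + tn) / n
    half_width = z * math.sqrt(accuracy * (1.0 - accuracy) / n)
    return accuracy, half_width, accuracy - half_width, accuracy + half_width
```

The lower and upper accuracies are simply the accuracy minus and plus the half-width, which matches the arithmetic relation between the columns of the result tables.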

Table 9.

Evaluations of IAA with and without image classification using handcrafted and learned features.

| Feature Vector | A (accuracy) | Confidence interval | Lower accuracy | Upper accuracy |
|---|---|---|---|---|
| GIAA: IAA without image classification | | | | |
| | 0.785 | 0.028 | 0.757 | 0.813 |
| | 0.845 | 0.025 | 0.820 | 0.870 |
| | 0.800 | 0.028 | 0.772 | 0.828 |
| | 0.921 | 0.018 | 0.903 | 0.939 |
| LIAA: IAA for large field images only | | | | |
| | 0.913 | 0.028 | 0.885 | 0.941 |
| | 0.880 | 0.031 | 0.849 | 0.911 |
| | 0.878 | 0.032 | 0.846 | 0.910 |
| | 0.940 | 0.023 | 0.917 | 0.963 |
| CIAA: IAA for close-up images only | | | | |
| | 0.843 | 0.036 | 0.807 | 0.879 |
| | 0.860 | 0.034 | 0.826 | 0.894 |
| | 0.833 | 0.037 | 0.796 | 0.870 |
| | 0.925 | 0.026 | 0.899 | 0.951 |

Table 10.

The number of global features and RB features in the two feature sets for LIAA and CIAA, respectively.

| Feature Set | Number of Global Features | Number of RB Features |
|---|---|---|
| Feature set for LIAA | 21 | 0 |
| Feature set for CIAA | 18 | 5 |

Table 11.

Evaluations of CIAA using global features, RB features and both global features and RB features.

| Feature Vector | A (accuracy) | Confidence interval | Lower accuracy | Upper accuracy |
|---|---|---|---|---|
| CIAA: IAA for close-up images only | | | | |
| | 0.925 | 0.026 | 0.899 | 0.951 |
| | 0.908 | 0.028 | 0.880 | 0.936 |
| | 0.868 | 0.033 | 0.835 | 0.901 |

Table 12.

Evaluations of LIAA and CIAA using Suran’s and Aydin’s global features, RB features and both global features and RB features.

| Feature Vector | A (accuracy) | Confidence interval | Lower accuracy | Upper accuracy |
|---|---|---|---|---|
| LIAA using Suran’s features | | | | |
| | 0.880 | 0.031 | 0.849 | 0.911 |
| | 0.875 | 0.032 | 0.843 | 0.907 |
| | 0.848 | 0.035 | 0.813 | 0.883 |
| CIAA using Suran’s features | | | | |
| | 0.860 | 0.034 | 0.826 | 0.894 |
| | 0.853 | 0.035 | 0.818 | 0.888 |
| | 0.728 | 0.044 | 0.684 | 0.772 |
| LIAA using Aydin’s features | | | | |
| | 0.878 | 0.032 | 0.846 | 0.910 |
| | 0.888 | 0.031 | 0.857 | 0.919 |
| | 0.540 | 0.049 | 0.491 | 0.589 |
| CIAA using Aydin’s features | | | | |
| | 0.833 | 0.037 | 0.796 | 0.870 |
| | 0.740 | 0.043 | 0.697 | 0.783 |
| | 0.818 | 0.038 | 0.780 | 0.856 |