Comparison between Geostatistical Interpolation and Numerical Weather Model Predictions for Meteorological Conditions Mapping

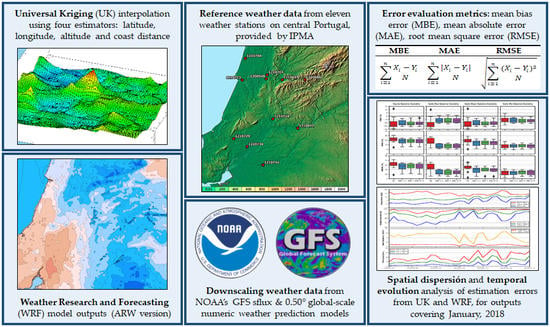

Abstract

1. Introduction

2. Materials and Methods

2.1. Downscaling Algorithms

2.2. Data Sources

2.2.1. Input Weather Data

2.2.2. Reference Weather Data

2.2.3. Auxiliary Data

2.3. Study Operational Conditions

2.3.1. Studied Locations

2.3.2. Temporal Span

2.3.3. Weather Variables

2.4. Compared Datasets

2.5. Error Comparison Metrics
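The three metrics listed under Abbreviations (MBE, MAE and RMSE) have standard definitions, which can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function name `error_metrics`, the sign convention (predicted minus observed) and the sample values are assumptions made for this example.

```python
import math


def error_metrics(predicted, observed):
    """Return (MBE, MAE, RMSE) for paired predicted and observed values."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    # Assumed sign convention: error = predicted - observed,
    # so a positive MBE means the prediction overestimates on average.
    errors = [p - o for p, o in zip(predicted, observed)]
    n = len(errors)
    mbe = sum(errors) / n                             # Mean Bias Error
    mae = sum(abs(e) for e in errors) / n             # Mean Absolute Error
    rmse = math.sqrt(sum(e * e for e in errors) / n)  # Root Mean Square Error
    return mbe, mae, rmse


# Hypothetical temperature values in degrees Celsius, not taken from the study.
mbe, mae, rmse = error_metrics([11.0, 9.0, 14.0], [10.0, 10.0, 12.0])
print(mbe, mae, rmse)
```

MBE keeps the sign of the errors and so exposes systematic over- or underestimation, whereas MAE and RMSE measure error magnitude, with RMSE weighting large deviations more heavily.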

3. Results and Discussion

3.1. Reference Ranges

3.2. Spatial Dispersion Analysis

3.3. Temporal Evolution Analysis

3.4. Further Considerations

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ARW | Advanced Research WRF |
| ECMWF | European Centre for Medium-Range Weather Forecasts |
| GFS | Global Forecast System |
| IFS | Integrated Forecast System |
| IPMA | Portuguese Meteorological Agency (Instituto Português do Mar e da Atmosfera) |
| MAE | Mean Absolute Error |
| MBE | Mean Bias Error |
| NCAR | National Center for Atmospheric Research |
| NCEP | National Centers for Environmental Prediction |
| NMM | Nonhydrostatic Mesoscale Model |
| NOAA | National Oceanic and Atmospheric Administration |
| NOMADS | National Oceanic and Atmospheric Administration Operational Model Archive and Distribution System |
| NWP | Numerical Weather Prediction |
| RMSE | Root Mean Square Error |
| UK | Universal Kriging |
| WPS | WRF Pre-processing System |
| WRF | Weather Research and Forecasting |
| WRF-1.5 | WRF dataset using a 1.5 km spaced innermost mesh |
| WRF-1 | WRF dataset using a 1 km spaced innermost mesh |
| WRF-0.75 | WRF dataset using a 0.75 km spaced innermost mesh |

| Name | Station Code | Longitude | Latitude | Elevation [m] | Coast Distance [km] |
|---|---|---|---|---|---|
| Coimbra Aeródromo | 01200548 | −8.4685 | 40.1576 | 170.08 | 33.67 |
| Pampilhosa da Serra/Fajão | 01210686 | −7.9270 | 40.1456 | 834.67 | 79.86 |
| Lousã/Aeródromo | 01210697 | −8.2442 | 40.1439 | 195.26 | 52.79 |
| Dunas de Mira | 01210704 | −8.7617 | 40.4460 | 9.47 | 3.79 |
| Coimbra/Bencanta | 01210707 | −8.4552 | 40.2135 | 26.09 | 35.54 |
| Figueira da Foz/Vila Verde | 01210713 | −8.8059 | 40.1398 | 2.50 | 4.86 |
| Tomar/Aeródromo | 01210724 | −8.3740 | 39.5921 | 74.91 | 59.70 |
| Rio Maior | 01210729 | −8.9236 | 39.3139 | 53.25 | 24.58 |
| Santarém/Fonte Boa | 01210734 | −8.7367 | 39.2013 | 70.93 | 35.92 |
| Coruche | 01210744 | −8.5133 | 38.9415 | 17.79 | 39.48 |
| Alvega | 01210812 | −8.0270 | 39.4611 | 50.35 | 91.36 |

| Mesh | Min Latitude | Max Latitude | Min Longitude | Max Longitude | Horizontal Resolution, WRF-1.5 [km] | Horizontal Resolution, WRF-1 [km] | Horizontal Resolution, WRF-0.75 [km] |
|---|---|---|---|---|---|---|---|
| D0 | 35.000000 | 45.000000 | −15.000000 | −4.000000 | 13.5 | 9 | 12 |
| D1 | 37.500000 | 42.500000 | −12.000000 | −6.000000 | 4.5 | 3 | 3 |
| D2 | 38.603340 | 40.625430 | −9.386102 | −7.270932 | 1.5 | 1 | 0.75 |

| Station Code | Distance to Nearest WRF-1.5 Point [m] | Distance to Nearest WRF-1 Point [m] | Distance to Nearest WRF-0.75 Point [m] |
|---|---|---|---|
| 01200548 | 871 | 317 | 348 |
| 01210686 | 395 | 179 | 241 |
| 01210697 | 767 | 651 | 473 |
| 01210704 | 335 | 82 | 370 |
| 01210707 | 739 | 189 | 263 |
| 01210713 | 472 | 637 | 158 |
| 01210724 | 552 | 507 | 227 |
| 01210729 | 992 | 342 | 455 |
| 01210734 | 643 | 332 | 224 |
| 01210744 | 694 | 638 | 458 |
| 01210812 | 225 | 35 | 396 |
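
The table above lists the distance from each station to its nearest WRF grid point. One way such a distance could be obtained is a great-circle (haversine) search over the grid nodes, sketched below. The source does not state how the distances were computed, so the spherical-Earth radius, the function names and the sample grid points are all assumptions for illustration; only the station coordinates come from the station table.

```python
import math

# Assumption: spherical Earth with the mean radius, in metres.
EARTH_RADIUS_M = 6_371_000.0


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearest_grid_point(station, grid_points):
    """Return (distance_m, (lat, lon)) for the grid point closest to station."""
    lat, lon = station
    return min(
        ((haversine_m(lat, lon, g_lat, g_lon), (g_lat, g_lon))
         for g_lat, g_lon in grid_points),
        key=lambda pair: pair[0],
    )


# Coimbra Aeródromo coordinates from the station table (latitude, longitude).
station = (40.1576, -8.4685)
# Hypothetical grid nodes, not the actual WRF mesh.
grid = [(40.150, -8.470), (40.160, -8.470), (40.160, -8.460)]
distance_m, point = nearest_grid_point(station, grid)
print(round(distance_m), point)
```

A brute-force search like this is adequate for a handful of stations; for a full mesh, a spatial index would be the more usual choice.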

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

López Gómez, J.; Troncoso Pastoriza, F.; Granada Álvarez, E.; Eguía Oller, P. Comparison between Geostatistical Interpolation and Numerical Weather Model Predictions for Meteorological Conditions Mapping. Infrastructures 2020, 5, 15. https://doi.org/10.3390/infrastructures5020015