1. Introduction

Since the liberalisation of the electricity market, forecasting electricity prices has been an important factor in decision making for energy suppliers and generators. Day-ahead wholesale electricity price forecasts are an essential component of the electricity market. In the wholesale market, electricity is traded between suppliers and generators, who place bids and offers, respectively, for different volumes of electricity. These are used to set the wholesale price at which day-ahead electricity is purchased. Energy suppliers generally hedge (purchase ahead) their best forecast of volumes and refine their positions closer to delivery, for example, in the day-ahead market. The overall cost of wholesale energy is combined with other cost elements in the tariffs offered to consumers, so wholesale electricity price forecasts are a fundamental input to an energy company’s decision making. Prices are relatively volatile; hence, probabilistic forecasts are more useful than point forecasts, as they describe the uncertainty associated with different outcomes. In this article, three day-ahead probabilistic electricity price forecasts are developed and tested for Great Britain’s day-ahead wholesale electricity market. One focus is a recent method developed in [1], called the X-model, which concentrates on predicting spikes in the electricity price.

Until recently, wholesale price forecasting typically focused on point forecasts. However, in the last few years, probabilistic price forecasting has been gaining interest. The comprehensive 2014 review by Weron [2] showed that very few papers at the time considered probabilistic forecasts. The 2018 review update by Nowotarski and Weron [3], however, highlighted the importance of probabilistic forecasts due to the introduction of the so-called smart grid and the increased uncertainty in supply and demand. A more recent review [4], published after the work presented in this article was developed, underlines the need for a rigorous approach and sensible benchmarking in the electricity price forecasting field.

The 2018 review showed that there was an increase in probabilistic electricity price forecasting papers, but the literature is relatively sparse, especially compared to load forecasting, with only 38 papers on probabilistic electricity price forecasting for the period of 2002–2016. There has not been a dramatic change in the number of probabilistic electricity price forecasting papers since the review by Nowotarski and Weron [3]. Using the same search terms as the authors (Scopus query used to find probabilistic electricity price forecasting publications: (TITLE((“probabilistic” AND “forecasting”) OR interval OR density) OR TITLE-ABS-KEY(“probabilistic forecast*” OR “interval forecast*” OR “density forecast*” OR “prediction interval*”)) AND (TITLE (((((“electric*” OR “energy market” OR “power price” OR “power market” OR “power system” OR pool OR “market clearing” OR “energy clearing”) AND (price OR prices OR pricing)) OR lmp OR “locational marginal price”) AND (forecast OR forecasts OR forecasting OR prediction OR predicting OR predictability OR “predictive densit*”)) OR (“price forecasting” AND “smart grid*”)) OR TITLE-ABS (“electricity price forecasting” OR “forecasting electricity price” OR “day-ahead price forecasting” OR “day-ahead mar* price forecasting” OR (gefcom2014 AND price) OR ((“electricity market” OR “electric energy market”) AND “price forecasting”) OR (“electricity price” AND (“prediction interval” OR “interval forecast” OR “density forecast” OR “probabilistic forecast”))) AND NOT TITLE (“unit commitment”)) AND (EXCLUDE (AU-ID, “[No Author ID found]” undefined))), we found 11 papers in 2018, 10 in 2019, 16 in 2020, and 8 in 2021 so far. There has therefore been only a small uptick in probabilistic price forecasting, and much more work in this area is still required. In particular, we found only one paper on probabilistic price forecasting for the day-ahead wholesale market in Great Britain [5]. In that paper, Maciejowska et al. produced prediction intervals by applying quantile regression, with several individual point forecasts as the independent variables and the spot price as the dependent variable. They also extended this model by using principal component analysis (PCA) to extract common factors, which were then used as inputs to the quantile regression. A limitation of this work is that only prediction intervals were considered, so a detailed description of the uncertainty was not produced. In addition, price spikes were not considered.

As summarized in the review by Nowotarski and Weron [3], probabilistic forecasts in the electricity price sector started to appear after 2002 and gained momentum thanks to the Global Energy Forecasting Competition of 2014. Such models can be characterized by the family of modelling technique used (neural network, traditional statistical time series, hybrid, or other) and by the method used to compute the prediction interval. The prediction interval can be computed from historical simulations (also called empirical or sample prediction intervals), from distribution-based forecasts (usually approximating the error distribution with a Gaussian distribution), from bootstrapping, or from quantile regression averaging. The bootstrapping method [6] is particularly popular for neural network methods, but is also used in statistical time-series approaches [7]. The quantile regression averaging (QRA) method proposed by Nowotarski and Weron [8] has gained popularity in the probabilistic electricity price forecasting field and was used by the top two teams in the Global Energy Forecasting Competition of 2014 [9,10]. More recently, Uniejewski et al. [11] presented a regularised form of QRA that utilises the LASSO method. A regularised approach was also considered by Banitalebi et al. [12], but in combination with a triple seasonal exponential smoothing model.

As the probabilistic electricity price forecasting field has developed, statistical time-series approaches appear to have become proportionally more popular than they are in the point forecasting literature, but neural network approaches, in particular deep learning, have gained interest in the last few years. He et al. [13] considered deep convolutional neural networks. Brusaferri et al. [14] presented a Bayesian deep learning approach to probabilistic day-ahead price forecasting and demonstrated it on both the Italian and Belgian markets.

Statistical time-series approaches, such as linear autoregressive methods and ARIMA models, are common in price forecasting and have continued to be developed. Recent work by Marcjasz et al. [15] showed that a nonlinear version of an ARX model could outperform its linear counterparts. Uniejewski et al. [16] considered a new class of probabilistic models called seasonal component autoregressive models (SCARX). Since their successful application in the Global Energy Forecasting Competition of 2014 [10], generalised additive models have become more popular and have been applied extensively in load and price forecasting. Bernardi and Lisi [17] used a generalised additive model to generate point forecasts and prediction intervals and applied it to the Italian electricity market.

There are also some newer, less conventional approaches emerging, as well as models that are common in areas such as load forecasting but have not been applied as much in price forecasting. Gaussian processes, for example, are common in load forecasting, and Mehmood et al. [18] used Gaussian process regression for interval forecasting of spot prices. An interesting paper by Taylor [19] compared the generation of prediction intervals using a quantile approach with the less common “expectile” approach. Finally, a distinctive approach was applied by Kath and Ziel [20], who used conformal prediction (CP) to estimate prediction intervals. CP computes non-conformity scores on an out-of-sample dataset and derives a threshold from them, which is then applied to the one-step-ahead forecast to produce the prediction intervals.

Of particular importance is accurately forecasting price spikes, which are large positive or negative deviations from the mean price. As discussed in [1], many researchers have considered regime-switching or jump-diffusion models to predict extreme values, and these models do not typically forecast the entire series. The authors of [3] mentioned a few forecasting studies that were designed to estimate spikes; for example, [21] considered an autoregressive conditional hazard model, [22] used a specific error metric to train and select models while accounting for spikes, and the authors of [23] used a threshold forecasting model. However, there is still unexploited potential in investigating the underlying mechanisms behind price spikes. As pointed out in [1], many studies model only the individual spike and not the entire price time series, and all of them ignore the underlying mechanism that generates the prices. Addressing some of these drawbacks, Ziel and Steinert developed the so-called X-model, a unique approach that forecasts entire supply and demand curves to produce a probabilistic price forecast [1]. By forecasting the supply and demand curves, the model can capture potential price spikes and the uncertainty around them, since the sensitivity of the price to small changes in bids can be modelled. In particular, the likelihood of price spikes is strongly related to the steepness of the supply and demand curves near the clearance price. In these cases, a small shift in the bids or offers could cause a dramatic increase or decrease in the final clearance price. Estimating the supply and demand curves therefore increases the chances that such price spikes can be anticipated. The authors compared their models with a simple weekly persistence model and a regime-switching model.

The primary aim of this paper is to bridge some of the gaps in probabilistic price forecasting for day-ahead wholesale market data from Great Britain. In particular, we apply the X-model to forecast the aggregated supply and demand curves, and we compare it with some more traditional time-series-based methods. Hence, in addition to expanding the literature on probabilistic price forecasting for the British market, we investigate the ability to predict price spikes by focusing on a real-life case study using data from the EPEX SPOT power exchange. To the authors’ knowledge, there has been no previous investigation of probabilistic price spike forecasting in the British day-ahead market.

The paper is organised as follows. In Section 2, we introduce the background of the wholesale market in Great Britain (GB). In Section 3, we describe the methods implemented in this paper. In Section 4, we introduce the data used in our experiments. In Section 5, we investigate further details of the class forecasts for the X-model, before presenting both point and probabilistic price forecasting results in Section 6 using real data from the EPEX SPOT day-ahead market. In particular, we consider a price spike that occurred in January 2019. Finally, we summarise our findings in Section 7.

2. Background of Wholesale Electricity Price Forecasting

There are two day-ahead power exchanges operating in the wholesale electricity market of Great Britain (GB): APX, which is owned by EPEX (European Power Exchange) SPOT SE, and N2EX, which is owned by Nord Pool Spot AS in cooperation with Nasdaq Commodities. The EPEX SPOT exchange is the focus of the research presented in this paper.

For each hour of the next day, offers of power (in MW) from generators are matched with bids from suppliers and large consumers within the day-ahead wholesale market auction operated by the power exchanges. Suppliers and generators place bids and offers for specific volumes, subject to restrictions that depend on the particular power exchange. For the EPEX SPOT day-ahead market (https://www.epexspot.com/sites/default/files/download_center_files/20-01-24_TradingBrochure.pdf (accessed on 26 August 2021)), the maximum/minimum bids are +3000/−500 EUR/MWh, with minimum price and volume increments of 0.1 EUR/MWh and 0.1 MW, respectively.

The auction closes at 12:00 CET on the day before delivery. Given the price limits and the 0.1 EUR/MWh tick size above, there are (3000 − (−500))/0.1 + 1 = 35,001 possible prices on the price grid (EUR), given by $\{-500, -499.9, \ldots, 2999.9, 3000\}$.

To a first approximation, GB’s wholesale electricity market clearance price is the intersection between the supply and demand curves. This is not completely accurate because, prior to Brexit (before January 2021), the EPEX and N2EX markets were coupled via the Pan-European Hybrid Electricity Market Integration Algorithm (EUPHEMIA) (https://www.n-side.com/pcr-euphemia-algorithm-european-power-exchanges-price-coupling-electricity-market/ (accessed on 10 August 2021)), which defines the market clearing price from all bids (including block bids, linked block orders, etc.) across the coupled European markets. However, the market clearing price is expected to be closely approximated by the intersection of the aggregated supply and demand curves for the individual exchange (in this case, EPEX SPOT). This was confirmed in [1] for the German and Austrian market, where 99.8% of the estimates were within 1 EUR/MWh of the true clearing price. It was also confirmed in the research presented here for GB’s EPEX SPOT market, with 97% of estimates within 2 GBP/MWh and 87% within 1 GBP/MWh (see Appendix A.1 for further details). Note that all data in this paper are from before Brexit, so the EUPHEMIA price coupling algorithm still applies.

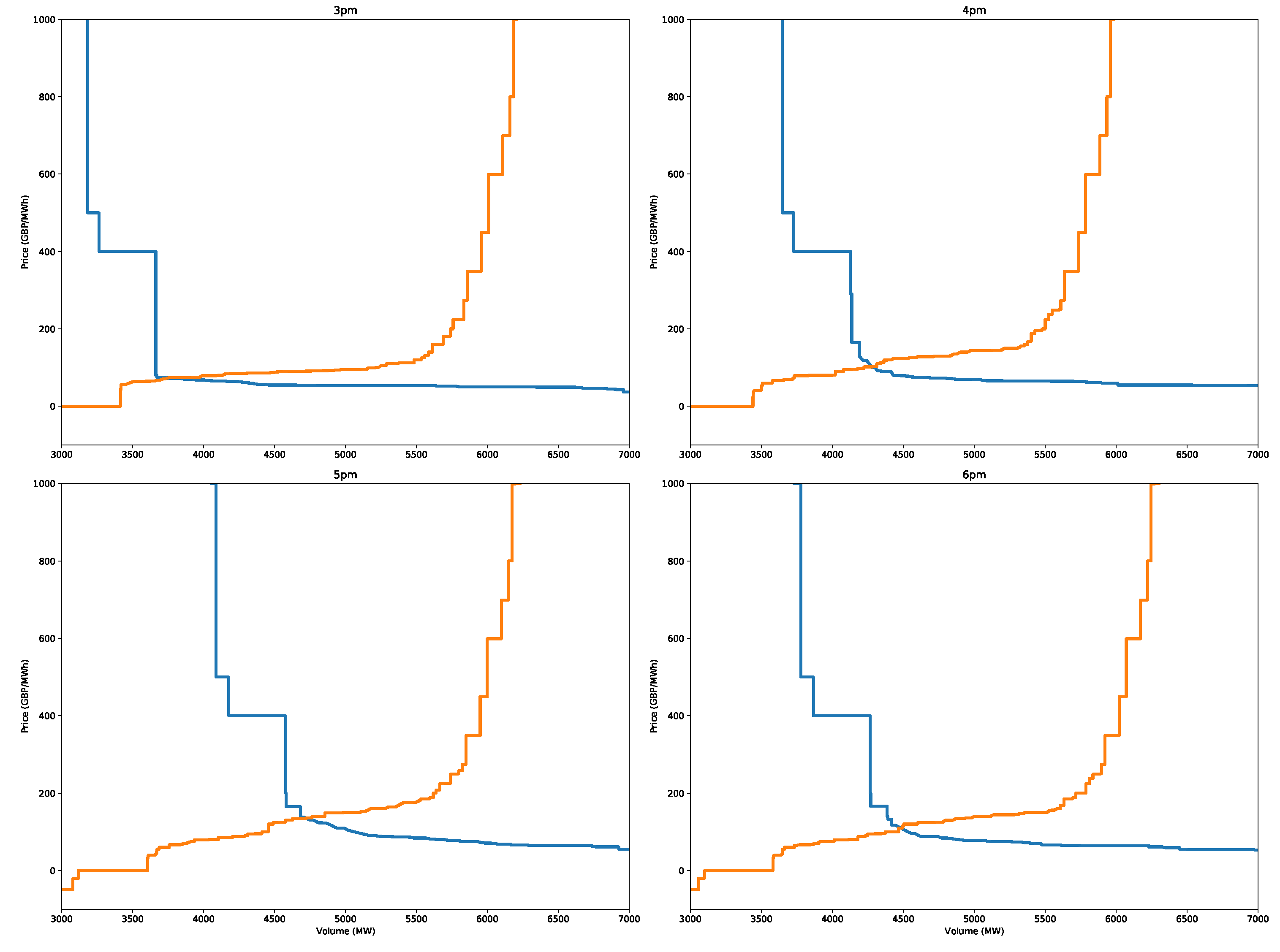

The clearing price can be calculated with the following procedure. First, for the supply curve, the offers are ordered by price from lowest to highest, and for the demand curve, the bids are ordered by price from highest to lowest. The supply and demand curves are then formed by calculating the cumulative volumes of the offers and bids, respectively, in this order. An example of the supply and demand curves and their intersection is shown in Figure 1 for one particular hour of the aggregated supply and demand data from EPEX SPOT. Note that the markets are more complicated than presented here. As in [1], all bids are treated as simple bids, and the virtual interconnector between the N2EX and EPEX SPOT markets is not considered. These simplifications are not expected to have much impact on the overall results presented.
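The ordering-and-accumulation procedure above can be sketched in Python as follows. This is a minimal illustration under the same simple-bid assumption; the function names, and the rule of returning the lowest submitted price at which cumulative supply covers cumulative demand, are our own choices for illustration and are not the EUPHEMIA algorithm or the exact procedure of [1].

```python
from typing import List, Optional, Tuple

Order = Tuple[float, float]        # (price, volume in MW)
Curve = List[Tuple[float, float]]  # (price, cumulative volume) steps


def cumulative_curve(orders: List[Order], descending: bool) -> Curve:
    """Sort orders by price and accumulate their volumes in that order."""
    curve: Curve = []
    total = 0.0
    for price, volume in sorted(orders, key=lambda o: o[0], reverse=descending):
        total += volume
        curve.append((price, total))
    return curve


def volume_at(curve: Curve, price: float, is_supply: bool) -> float:
    """Cumulative volume of a step curve evaluated at `price`.

    Supply: offers priced at or below `price` (curve sorted ascending).
    Demand: bids priced at or above `price` (curve sorted descending).
    """
    volume = 0.0
    for p, cumulative in curve:
        if (p <= price) if is_supply else (p >= price):
            volume = cumulative
        else:
            break
    return volume


def clearing_price(offers: List[Order], bids: List[Order]) -> Optional[float]:
    """Lowest submitted price at which cumulative supply covers cumulative demand."""
    supply_curve = cumulative_curve(offers, descending=False)  # cheapest offers first
    demand_curve = cumulative_curve(bids, descending=True)     # highest bids first
    for price in sorted({p for p, _ in offers} | {p for p, _ in bids}):
        supplied = volume_at(supply_curve, price, is_supply=True)
        demanded = volume_at(demand_curve, price, is_supply=False)
        if supplied >= demanded:
            return price
    return None  # the curves do not cross within the submitted prices


# Small example: the market clears at the offer priced 20.
print(clearing_price([(10, 100), (20, 100), (30, 100)],
                     [(35, 50), (25, 100), (15, 100)]))  # 20
```

The same sketch also reproduces the steep-curve effect behind price spikes: with offers [(10, 100), (20, 100), (30, 100), (500, 100)], a single price-taking bid of 290 MW at 3000 clears at 30, whereas raising it to 310 MW clears at 500.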

3. Methodology

This section describes the models that will be considered, including a recent innovative model described by Ziel and Steinert [1], the so-called X-model. The X-model is relatively unique in that it forecasts the entire supply and demand curves, from which the clearance price is calculated as their intersection, as described in Section 2. This model is compared with some simpler but common time-series models that forecast the price directly. The main focus is on generating probabilistic forecasts, but point forecasts can also be generated and are considered briefly.

3.1. X-Model—Outline

The first model considered is the X-model, as developed by Ziel and Steinert [1]. The details are not repeated here; instead, this section presents the general concepts and the adjustments made for Great Britain’s market. Some extra details are given in the Appendix.

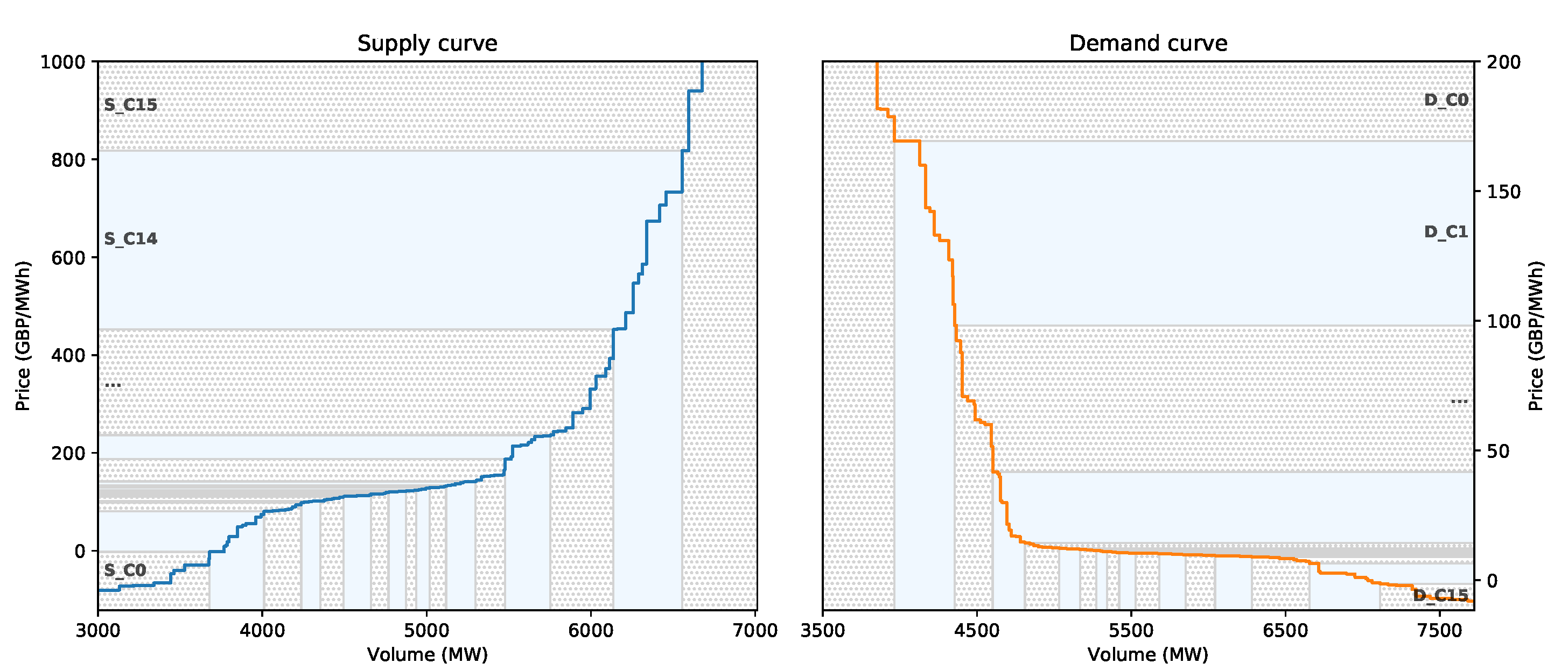

The novelty of the model is that the future supply and demand curves are estimated directly, rather than the clearance price being forecast explicitly. However, forecasting the volume at every price increment would incur an excessive computational cost and is not necessary, since the supply and demand curves are monotonic functions that can be accurately interpolated given sufficient points (Appendix A.2.2). For these reasons, the supply and demand curves for each hour of the dataset are split into a limited number of price bands, as illustrated in Figure 2.

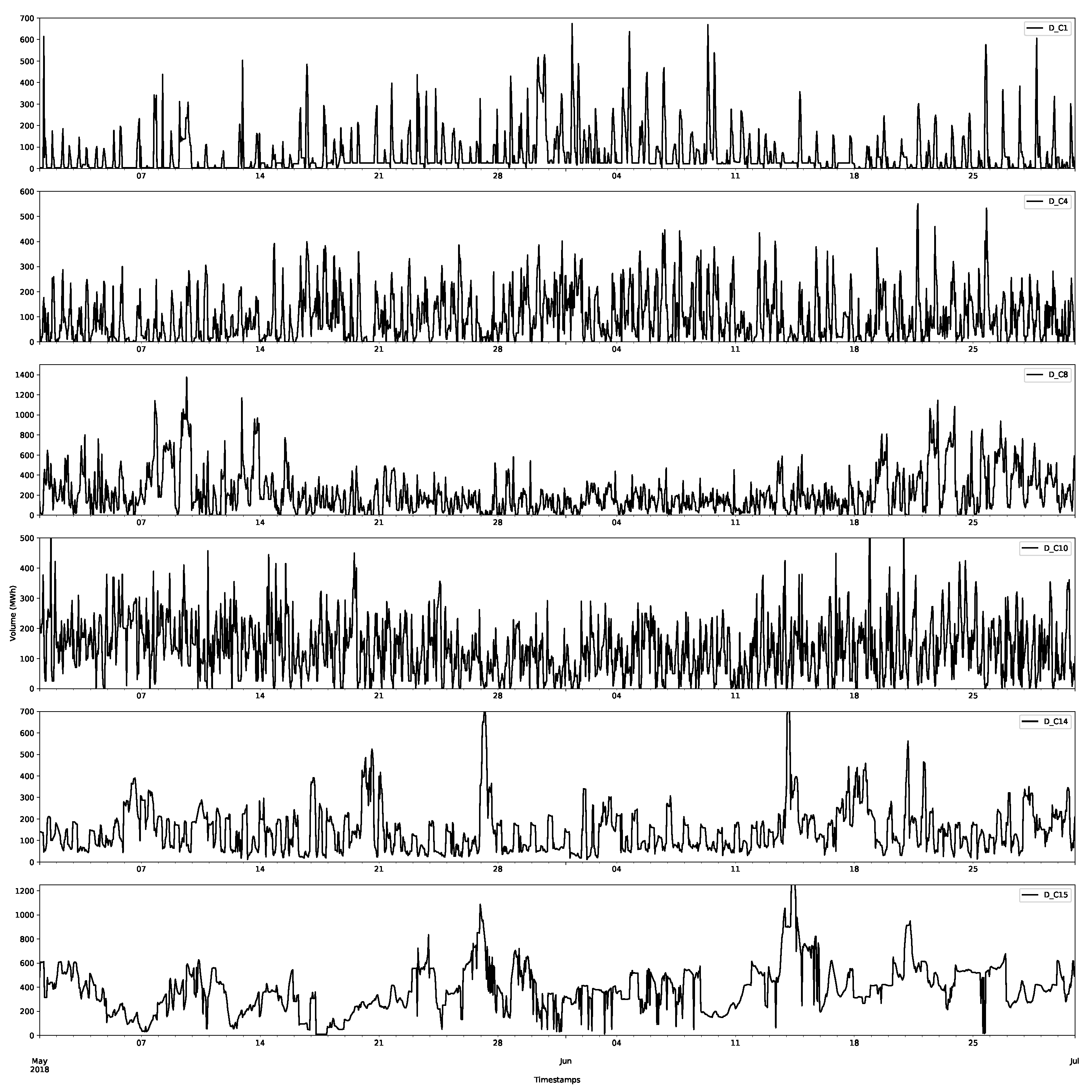

The volume of bids in each price band is aggregated for all hours in the dataset to create an individual time series for each price band, which is then forecasted using a linear regression model. Once the forecast for each individual class has been produced, the supply and demand curves are reconstructed, and their intersection and, hence, the clearing price can be calculated.

The model can be summarised in the following steps:

Split the supply and demand curves into price classes and store the volumes of bids for each price class $c$, which we label $X^S_{d,h,c}$ and $X^D_{d,h,c}$, respectively, for day $d$ and hour $h$ (see Section 3.1.1);

Model each price class using a linear regression model;

Estimate the coefficients of each model using the LASSO, which both trains the coefficients and selects the most appropriate inputs for the model;

Using the trained model, produce rolling day-ahead forecasts for each class for each day of the testing set;

Reconstruct the supply and demand curves and find their intersection to estimate the clearance price (see Section 3.1.2);

Develop a probabilistic forecast by bootstrapping the residual time series in daily blocks (see Section 3.1.3).

The possible inputs for each price class model and the reasons behind the choice are as follows:

Historical data from the same price class—past volumes in the current price class may be related;

Historical data from other price classes—other price classes are likely to be related—higher bids for low prices may mean that there are lower bids for higher price classes;

Historical clearing prices and volumes—as mentioned in

Section 2, the clearance prices and volumes are determined by the intersection of the supply and demand curves. Hence, the intersection of the curves may be related to the volumes traded in each price class;

Daily dummy variables—different days of the week have different bidding behaviours, and demand is likely to be driven by daily seasonal effects;

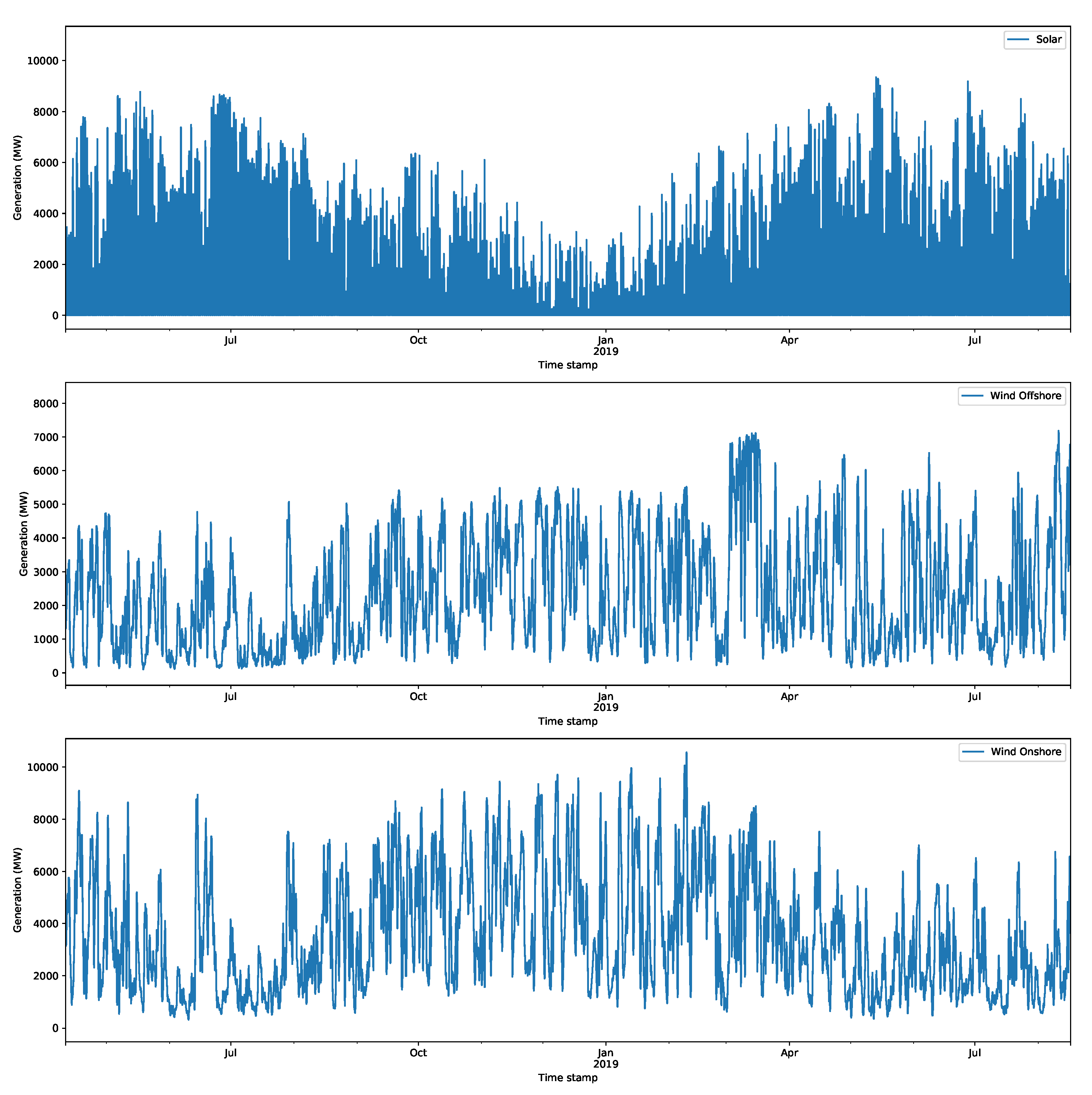

Day-ahead renewable generation forecasts—as discussed in

Section 4.2, renewable generation can have a strong effect on the spot price, particularly through the merit-order effect, especially for the lower-offer supply classes.

A LASSO model is implemented, since it simultaneously trains the coefficients and selects the most appropriate inputs. This improves the interpretability of the model, as variables that have little impact on the overall forecasts are excluded. The LASSO also acts as a regularisation method, helping to prevent the model from overfitting to the noise in the data. The LASSO model is described in detail in Appendix A.2 and follows the procedure defined by Ziel and Steinert in [1].
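To make the simultaneous shrinkage and input selection concrete, the following is a generic cyclic coordinate-descent LASSO in plain Python. It is a sketch only, not the procedure of [1]: it assumes standardised inputs and a centred response (so there is no intercept), minimises (1/(2n))·||y − Xb||² + λ·||b||₁ for a single fixed λ, and does not include the tuning of λ or the lag structure described in Appendix A.2.

```python
from typing import List


def soft_threshold(value: float, threshold: float) -> float:
    """Shrink `value` towards zero by `threshold`, returning 0 inside the band."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def lasso_cd(X: List[List[float]], y: List[float], lam: float,
             n_iter: int = 200) -> List[float]:
    """Cyclic coordinate descent for (1/(2n))||y - X b||^2 + lam * ||b||_1.

    Assumes standardised columns of X and a centred y (no intercept).
    """
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    residual = list(y)  # y - X @ beta while beta is all zeros
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) / n for j in range(p)]
    for _ in range(n_iter):
        for j in range(p):
            if col_sq[j] == 0.0:
                continue  # an all-zero column carries no information
            # Correlation of feature j with the residual that excludes feature j.
            rho = sum(X[i][j] * (residual[i] + X[i][j] * beta[j]) for i in range(n)) / n
            new_beta = soft_threshold(rho, lam) / col_sq[j]
            delta = new_beta - beta[j]
            if delta != 0.0:
                for i in range(n):
                    residual[i] -= X[i][j] * delta
                beta[j] = new_beta
    return beta
```

For example, with two orthogonal inputs, X = [[1, 1], [-1, 1], [1, -1], [-1, -1]] and y = 2·x₁ + 0.05·x₂, a penalty of λ = 0.1 shrinks the first coefficient to about 1.9 and sets the weak second coefficient exactly to zero, which is the input-selection behaviour described above.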

The core element of the probabilistic forecasts is a bootstrap of the daily residuals of the trained model. Sampling the residuals in daily blocks ensures that daily interdependencies are maintained in the errors, so the forecasts should be more realistic. This block bootstrap also ensures that each hour of the day is modelled with its own level of variation, based on the historical residuals at the same hour. The details are given in Appendix A.2.3.
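A minimal sketch of the daily block bootstrap is given below, assuming that the in-sample residuals have been arranged as one 24 × C block per training day (hours × price classes). Each simulated day reuses a single, randomly chosen historical day's residual block for every hour and class, which is what preserves the intra-day and cross-class correlations. The function name and data layout are hypothetical; the procedure actually used is described in Appendix A.2.3.

```python
import random
from typing import List, Optional

Block = List[List[float]]  # block[hour][price_class] for a single day


def bootstrap_daily_blocks(forecast: Block, residual_days: List[Block],
                           n_samples: int, seed: Optional[int] = None) -> List[Block]:
    """Add whole-day residual blocks, drawn with replacement, to a day's forecast."""
    rng = random.Random(seed)
    samples: List[Block] = []
    for _ in range(n_samples):
        # One historical day is drawn, and its residuals are used for every hour
        # and class, so hour-of-day and cross-class dependence is retained.
        day = rng.choice(residual_days)
        samples.append([
            [f + e for f, e in zip(forecast_row, residual_row)]
            for forecast_row, residual_row in zip(forecast, day)
        ])
    return samples
```

Adding residuals can produce negative class volumes; the source does not say how these are handled, so the sketch leaves them unchanged.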

3.1.1. Deriving the Price Classes

The aim of the X-model is to produce probabilistic forecasts by forecasting the supply and demand curves. However, for the EPEX SPOT day-ahead market, the maximum/minimum bids are +3000/−500 EUR/MWh, with a price tick of 0.1 EUR/MWh and a volume tick of 0.1 MW. Hence, there is a large number of possible prices at which a supplier or generator could place bids, many of which are rarely or never used. As mentioned above, to simplify the task, price classes are produced, and for each hour, the bid volumes within each class are aggregated to produce several time series.

The price classes are developed based on a consideration of all historical supply and demand curves. In [1], the price classes were chosen so that, on average, each price class contained the same volume (in this case, a volume of 1000, producing 16 classes for both supply and demand). The boundaries of the price classes could then be chosen on the 0.1 EUR/MWh grid, with clearly defined maximum and minimum bids. However, in our situation, the aggregated sale and purchase curve data for Great Britain are in GBP, which poses a problem: the prices are subject to exchange rates and thus do not align with a well-defined discrete price grid. In particular, in GBP, the maximum and minimum price bids/offers are not fixed for GB’s market data. Another difference from the original X-model paper is the size of the traded volumes. In [1], the traded volumes are of the order of 30,000 MW on average. For GB, the volumes are relatively small in comparison, of the order of 3000 MW, i.e., lower by a factor of 10.

For these reasons, rather than choosing the classes based on a uniform volume, we choose them to ensure that there is a sufficient number of bids per price band. We create price classes (to the nearest 0.1 GBP) so that there is a uniform number of bids/offers across the classes, as illustrated in Figure 2. Let $\mathcal{P}^S$ and $\mathcal{P}^D$ be the sets of prices seen in the historical data for the supply and demand bids, respectively. Suppose that there are $N$ bids over the entire training period; then, we create 16 classes (to be comparable to [1]) so that there are $N/16$ bids in each class. We denote the price classes for the supply curve as $\mathcal{C}^S_1, \ldots, \mathcal{C}^S_{16}$ and for the demand curve as $\mathcal{C}^D_1, \ldots, \mathcal{C}^D_{16}$. Notice that, for this procedure, the lowest (and similarly, the highest) price class covers any value less (greater) than a particular price value.

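The supply volume for class $c$ at day $d$ and hour $h$ of the day ($h = 1, \ldots, 24$) is given by

$$X^S_{d,h,c} = \sum_{P \in \mathcal{C}^S_c} V^S_{d,h}(P), \quad c = 1, \ldots, 16,$$

where $\mathcal{C}^S_c$ is simply all prices in $\mathcal{P}^S$ that are in class $c$ for the supply curve, and $V^S_{d,h}(P)$ is the volume bid for supplying at price $P$ at day $d$ and hour $h$. Similarly, the demand volume for class $c$ at day $d$ and hour $h$ of the day is given by

$$X^D_{d,h,c} = \sum_{P \in \mathcal{C}^D_c} V^D_{d,h}(P), \quad c = 1, \ldots, 16,$$

where $\mathcal{C}^D_c$ is simply all prices in $\mathcal{P}^D$ that are in class $c$ for the demand curve, and $V^D_{d,h}(P)$ is the volume bid for demand at price $P$ at day $d$ and hour $h$.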
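A minimal sketch of the equal-count price classes and the per-class volume aggregation defined above follows, for one side of the market (supply or demand). It assumes that the historical bid prices are pooled into a single list, that class boundaries are taken at the k/16 positions of the sorted prices, and that each class includes its lower boundary; with tied prices the counts are only approximately N/16. The function names are hypothetical.

```python
from bisect import bisect_right
from typing import List, Tuple


def equal_count_boundaries(bid_prices: List[float], n_classes: int = 16) -> List[float]:
    """Interior class boundaries giving roughly N / n_classes bids per class."""
    prices = sorted(round(p, 1) for p in bid_prices)  # nearest 0.1 GBP
    n = len(prices)
    if n < n_classes:
        raise ValueError("need at least one bid per class")
    return [prices[(k * n) // n_classes] for k in range(1, n_classes)]


def class_index(price: float, boundaries: List[float]) -> int:
    """0-based class of `price`; the first and last classes are open-ended."""
    return bisect_right(boundaries, price)


def aggregate_volumes(orders: List[Tuple[float, float]],
                      boundaries: List[float]) -> List[float]:
    """Total volume per class for one day d and hour h, from (price, volume) orders."""
    volumes = [0.0] * (len(boundaries) + 1)
    for price, volume in orders:
        volumes[class_index(round(price, 1), boundaries)] += volume
    return volumes
```

Because the outer classes are open-ended, any future bid below the lowest or above the highest historical boundary still falls into the first or last class, as noted in the text.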

3.1.2. Reconstructing the Clearance Price

Once the price classes are predicted, the intersection of the resulting supply and demand curves can be used to obtain a clearance price. However, the supply and demand curves built from the reduced price classes are not granular enough, which makes the intersection point highly sensitive [1]. Instead, the supply and demand curves must be transformed onto a higher-resolution grid. In our case, we use a finer grid with increments of 0.1 GBP. The volumes in each price class are distributed according to how often the prices on the finer grid occur in the historical data. There are two main ways to do this:

Assign a volume to a price on the finer grid if it occurs frequently enough according to some threshold.

Assign a volume to a price in a stochastic way, but with increased likelihood the more frequently it occurs.

The first approach is used when generating point forecasts and the second when generating probabilistic forecasts; the details are given in Appendix A.2.2. The stochastic approach adds more variability and takes into account uncertainty in individual bid prices.
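The two reconstruction strategies can be illustrated for a single price class with the sketch below. The specific rules are our assumptions, since the exact procedure is given in Appendix A.2.2: each fine-grid price (0.1 GBP) in the class has a historical usage frequency in [0, 1]; the threshold version keeps prices whose frequency reaches a fixed threshold; the stochastic version keeps each price with Bernoulli probability equal to its frequency; and the class volume is then split equally across the kept prices, with a hypothetical fallback to the most frequently used price if none is kept.

```python
import random
from typing import Dict, Optional


def reconstruct_class(class_volume: float, freq: Dict[float, float],
                      threshold: Optional[float] = None,
                      rng: Optional[random.Random] = None) -> Dict[float, float]:
    """Spread one class's forecast volume over fine-grid prices (0.1 GBP steps).

    freq[p] is the (assumed) share of historical hours with a bid at price p.
    With `threshold`, prices with freq >= threshold are kept (point forecasts);
    otherwise each price is kept with Bernoulli probability freq[p].
    """
    if threshold is not None:
        kept = [p for p, f in freq.items() if f >= threshold]
    else:
        rng = rng or random.Random()
        kept = [p for p, f in freq.items() if rng.random() < f]
    if not kept:
        # Hypothetical fallback: use the most frequently observed price.
        kept = [max(freq, key=freq.get)]
    share = class_volume / len(kept)
    return {p: share for p in sorted(kept)}
```

For instance, with frequencies {10.0: 0.9, 10.1: 0.2, 10.2: 0.6} and a threshold of 0.5, a class volume of 100 MW is placed as 50 MW at 10.0 and 50 MW at 10.2; the Bernoulli version may also keep 10.1 or drop 10.2 on any given draw.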

3.1.3. Generating Probabilistic Forecasts

Generating a point forecast is relatively simple: once the supply and demand curves have been reconstructed (Section 3.1.2), their intersection gives the clearance price. Generating a probabilistic forecast is slightly more complicated. The reconstruction uses the stochastic version (Section 3.1.2) to model the uncertainty when moving to a higher-resolution price grid. However, the main contribution to modelling the uncertainty comes from block bootstraps of the historical residuals, which are added to each class. To retain the interdependencies in the data, the residuals are sampled as daily blocks across the classes, preserving any temporal or cross-class correlations. The details are given in Appendix A.2.3.
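Once many bootstrap realisations of the clearing price have been produced for a given hour, quantile forecasts can be read off the simulated sample. The sketch below assumes empirical ventiles (the 5%, 10%, ..., 95% quantiles, matching the PIT discussion in Section 3.5) computed with the standard-library `statistics.quantiles` function; the choice of linear interpolation between order statistics is our assumption.

```python
import statistics
from typing import List


def ventile_forecast(simulated_prices: List[float]) -> List[float]:
    """Return the 19 empirical ventiles (5%, 10%, ..., 95%) of simulated prices."""
    if len(simulated_prices) < 2:
        raise ValueError("need at least two simulated prices")
    # method="inclusive" interpolates linearly between order statistics,
    # treating the simulated sample as the full predictive distribution.
    return statistics.quantiles(simulated_prices, n=20, method="inclusive")
```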

3.2. ARX Model

This model is similar to the X-model in that it is a linear model with similar explanatory variables and is estimated via the LASSO. The main differences are that no price classes or volume inputs are used. Instead, the clearance price is estimated directly, using the same lag template (see Appendix A.2.1) for the price, solar, offshore wind, and onshore wind variables. In other words, 8 days of lags are used for all hours of all variables, in addition to 36 days of lags from the same hour of the day. The LASSO is again used both to select the features included in the model and to train the coefficients of the linear model.

As with the X-model, the probabilistic forecasts are produced via a bootstrap procedure, which preserves the daily correlation structure of the residuals. This will be referred to as the AR or ARX model.
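To make the size of the ARX candidate input set concrete, the sketch below enumerates lagged features for one target hour. It assumes one reading of the template: all 24 hours of each of the previous 8 days, plus only the target hour on days 9 to 36 (so no feature is counted twice), for the four variables named above. The variable names are illustrative, and the exact template in Appendix A.2.1 may differ, for instance in how the day-ahead renewable forecasts for the delivery day itself enter.

```python
from typing import List, Tuple

Feature = Tuple[str, int, int]  # (variable, lag in days, hour of day 0-23)

VARIABLES = ["price", "solar", "wind_offshore", "wind_onshore"]  # illustrative names


def lag_template(target_hour: int, all_hours_days: int = 8,
                 same_hour_days: int = 36) -> List[Feature]:
    """Candidate lagged inputs for predicting `target_hour` of delivery day d."""
    features: List[Feature] = []
    for var in VARIABLES:
        # Every hour of days d-1, ..., d-8.
        for lag in range(1, all_hours_days + 1):
            for hour in range(24):
                features.append((var, lag, hour))
        # Only the target hour for days d-9, ..., d-36.
        for lag in range(all_hours_days + 1, same_hour_days + 1):
            features.append((var, lag, target_hour))
    return features
```

Under this reading, each hourly model has 4 × (8 × 24 + 28) = 880 candidate inputs, which illustrates why the LASSO's variable selection matters here.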

3.3. ARIMAX Model

We also consider a traditional time-series model. In this paper, an ARIMAX(12, 1, 4) model is used (i.e., autoregressive order p = 12, a single difference d = 1, and a moving average term of order q = 4). Lagged prices are treated as the exogenous variables, at lags of 24, 48, 196, and 336 h. Technically, using a lag of 24 h breaks the rule of not using any price data from the day of interest, but this affects only the final hour of the day, during a less volatile period (midnight), and is not expected to make much difference to the overall result. Note that no generation inputs are used in this model.

3.4. Daily Seasonal Persistence Model

There are strong daily patterns in the data, so a very basic forecast model is a daily seasonal persistence model: the forecast for each hour of the delivery day is simply the price at the same hour of the previous day. No exogenous variables, such as wind generation, are used in this model.

3.5. Error Measures

This paper focuses on probabilistic forecasts but also briefly considers point forecasts. For point forecasts, the root-mean-squared error (RMSE) is used. Consider a point forecast $\hat{y}_t$ at time steps $t = 1, \ldots, T$ and actual observations $y_t$ defined at the same time steps. Then, the RMSE is defined by

$$\mathrm{RMSE} = \sqrt{\frac{1}{T} \sum_{t=1}^{T} \left(\hat{y}_t - y_t\right)^2}.$$

The smaller the RMSE, the more accurate the forecast. RMSE was chosen over other common measures, such as the mean absolute error or the mean absolute percentage error, because these are based on the 1-norm rather than the 2-norm and are therefore less representative of errors at peaks [24].
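The peak-sensitivity argument can be checked with a small example. The sketch below compares RMSE with the mean absolute error (MAE) on two hypothetical error patterns with the same total absolute error: one spread evenly across time steps and one concentrated in a single peak.

```python
import math
from typing import Sequence


def rmse(forecast: Sequence[float], actual: Sequence[float]) -> float:
    """Root-mean-squared error between equal-length sequences."""
    if len(forecast) != len(actual) or not actual:
        raise ValueError("sequences must be non-empty and of equal length")
    return math.sqrt(sum((f - a) ** 2 for f, a in zip(forecast, actual)) / len(actual))


def mae(forecast: Sequence[float], actual: Sequence[float]) -> float:
    """Mean absolute error between equal-length sequences."""
    if len(forecast) != len(actual) or not actual:
        raise ValueError("sequences must be non-empty and of equal length")
    return sum(abs(f - a) for f, a in zip(forecast, actual)) / len(actual)


actual = [0.0, 0.0, 0.0, 0.0]
spread = [1.0, 1.0, 1.0, 1.0]  # four small errors
peak = [0.0, 0.0, 0.0, 4.0]    # one large error, e.g. a missed spike
print(mae(spread, actual), mae(peak, actual))    # 1.0 1.0
print(rmse(spread, actual), rmse(peak, actual))  # 1.0 2.0
```

Both patterns have the same MAE, but the RMSE doubles when the error is concentrated in one peak.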

Assessing a probabilistic forecast requires a proper scoring function [25], which guarantees that the expected score is minimised by the true underlying distribution. Since this paper considers quantiles, the pinball (or quantile) scoring function was deemed appropriate [26]. Given a quantile forecast $q_\tau$ for some probability value $\tau \in (0, 1)$ (called the $\tau$-quantile) and an observation $y$, the pinball loss score for the quantile $q_\tau$ is defined as

$$L_\tau(q_\tau, y) = \begin{cases} \tau \, (y - q_\tau), & \text{if } y \geq q_\tau, \\ (1 - \tau)\,(q_\tau - y), & \text{if } y < q_\tau. \end{cases}$$

The pinball score is an asymmetric function: it penalises the difference between the quantile and the observation and weights this difference according to its sign.
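A direct transcription of the pinball loss into Python is shown below, together with an average over several quantile levels and time steps, which is one common way to summarise the score. Averaging uniformly over the quantile levels is our assumption for illustration.

```python
from typing import Sequence


def pinball_loss(q: float, y: float, tau: float) -> float:
    """Pinball loss of the tau-quantile forecast q for observation y."""
    if not 0.0 < tau < 1.0:
        raise ValueError("tau must lie in (0, 1)")
    return tau * (y - q) if y >= q else (1.0 - tau) * (q - y)


def mean_pinball(quantile_forecasts: Sequence[Sequence[float]],
                 observations: Sequence[float],
                 taus: Sequence[float]) -> float:
    """Average pinball loss over all time steps and quantile levels.

    quantile_forecasts[t][k] is the taus[k]-quantile forecast for time step t.
    """
    total = 0.0
    for qs, y in zip(quantile_forecasts, observations):
        total += sum(pinball_loss(q, y, tau) for q, tau in zip(qs, taus))
    return total / (len(observations) * len(taus))
```

The asymmetry is visible directly: for the 90% quantile q = 10, an observation of 12 costs 0.9 × 2 = 1.8, whereas an observation of 8 costs only 0.1 × 2 = 0.2.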

The average pinball score measures both how well the model is calibrated (i.e., how well the quantiles match the observations) and how wide the distributions are (a narrower spread means that the estimates are more precise). However, it is limited in terms of assessing biases in the probabilistic forecasts. Here, we also consider the probability integral transform (PIT) to understand how well calibrated the forecasts are. The PIT histogram simply counts how many observations fall between consecutive quantiles. For example, for ventiles, 20 buckets are used, and hence, one would expect one in every 20 observations to fall into each bucket, unless the quantiles are not accurately estimated for the underlying data. As will be shown in the results, the PIT can also show whether the forecast is biased or whether the quantiles are too wide or too narrow in places.
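A minimal PIT histogram for quantile forecasts can be computed as below: for each time step, the sketch finds which of the len(quantiles) + 1 buckets the observation falls into (19 ventiles give 20 buckets) and returns relative frequencies. Placing an observation that exactly equals a quantile in the upper bucket is our convention.

```python
from bisect import bisect_right
from typing import List, Sequence


def pit_histogram(quantile_forecasts: Sequence[Sequence[float]],
                  observations: Sequence[float]) -> List[float]:
    """Relative frequency of observations in each inter-quantile bucket."""
    n_buckets = len(quantile_forecasts[0]) + 1
    counts = [0] * n_buckets
    for qs, y in zip(quantile_forecasts, observations):
        # The number of forecast quantiles at or below y is the bucket index.
        counts[bisect_right(sorted(qs), y)] += 1
    total = len(observations)
    return [c / total for c in counts]
```

For a well-calibrated ventile forecast, every entry should be close to 1/20 = 0.05; a slope across the buckets suggests bias, a U shape suggests intervals that are too narrow, and an inverted-U shape suggests intervals that are too wide.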

6. Results

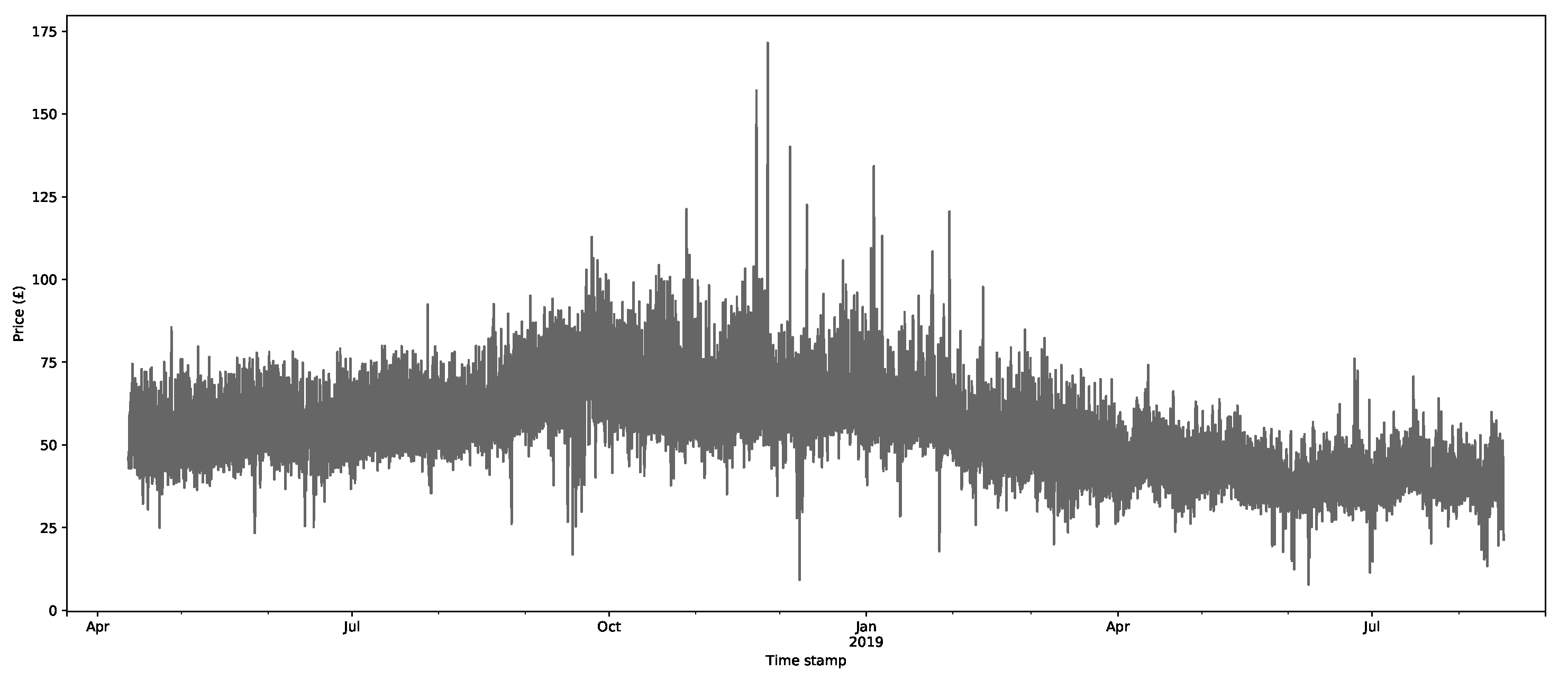

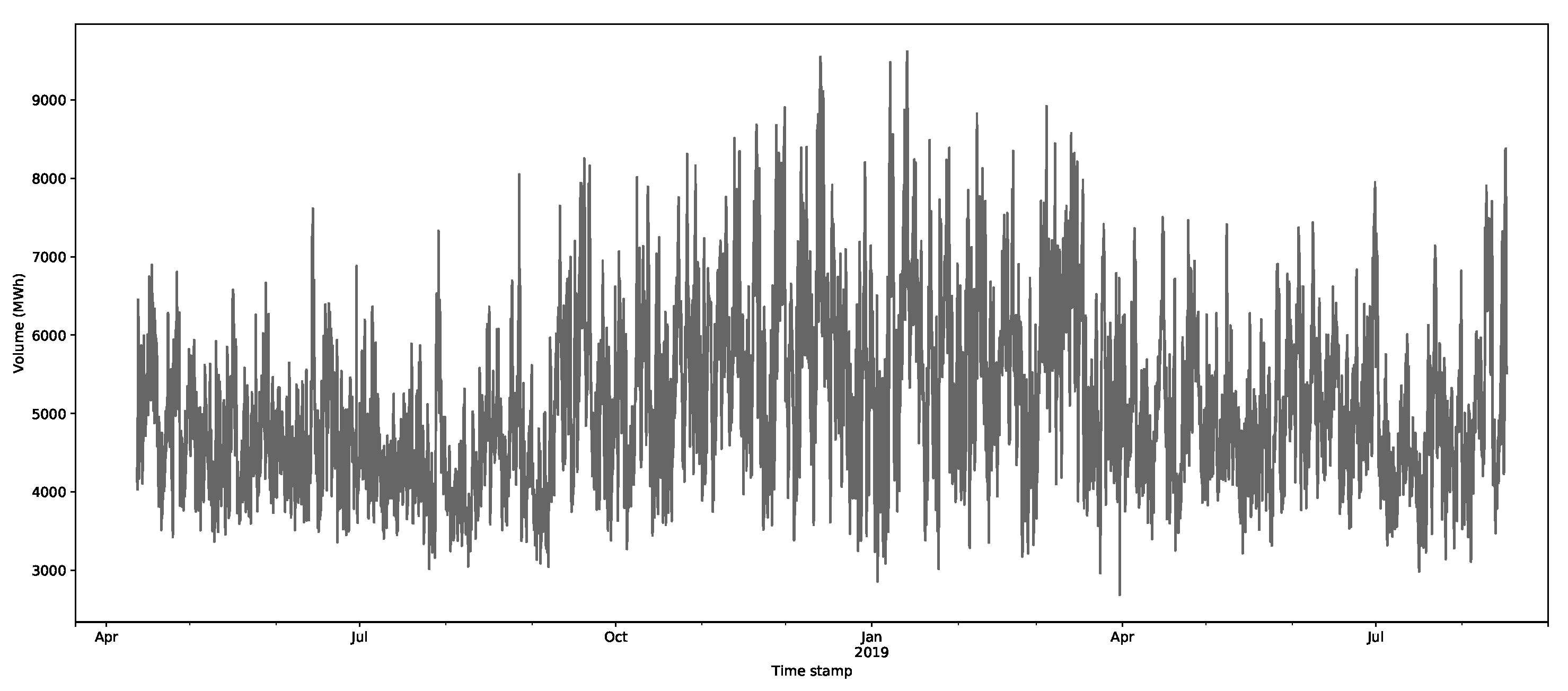

This section will briefly describe some of the results for both the point and probabilistic forecasts, as well as their comparison with the benchmarks. As mentioned in

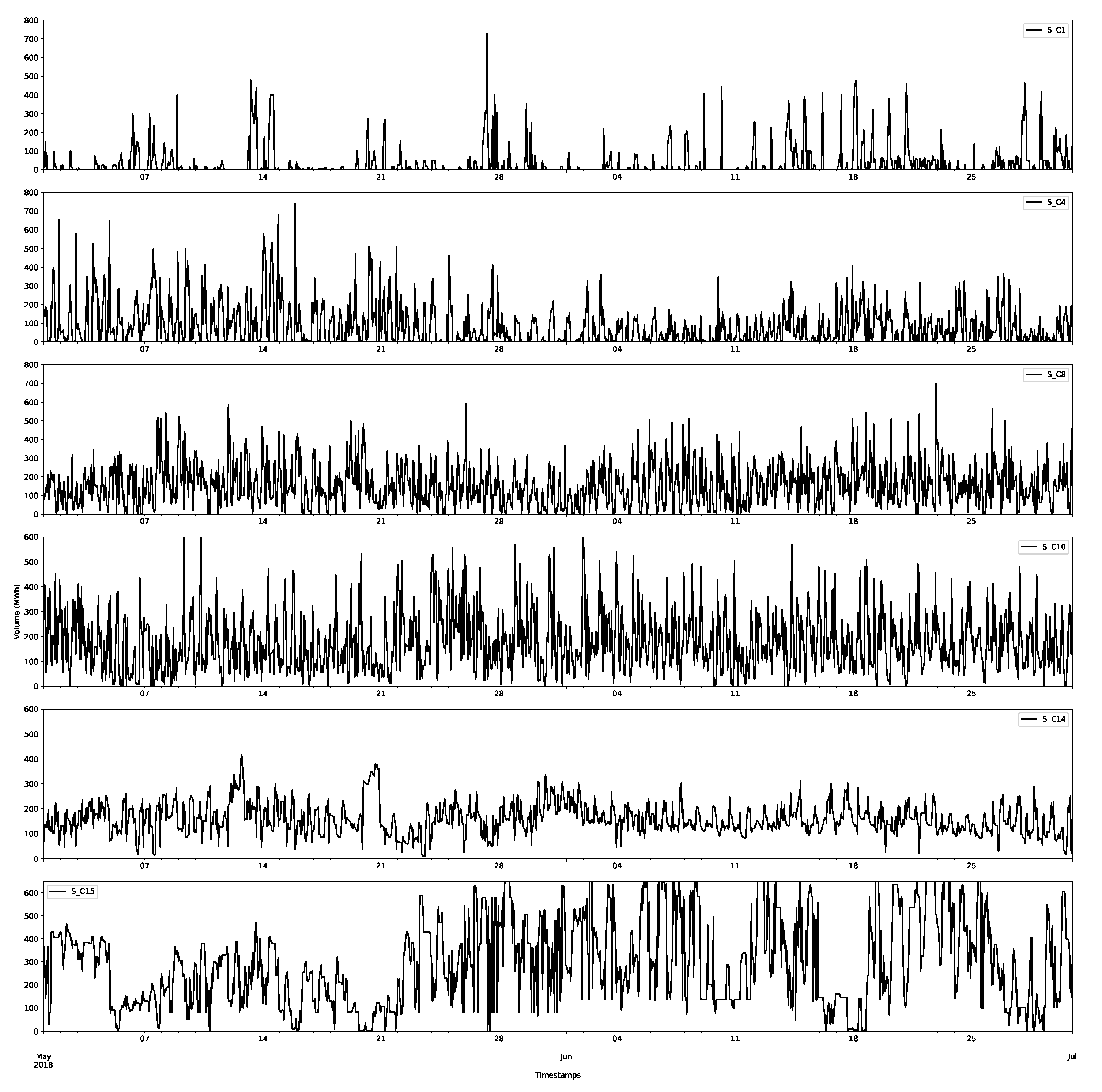

Section 5.1, for the X-model, the supply and demand volumes were split into 16 price classes each, and linear day-ahead forecast models were trained on the hourly data from 1 January 2017 to 1 January 2019; these were then applied in a rolling manner to the testing dataset from 1 January 2019 to 17 August 2019, starting at midnight on 1 January. The price classes are presented in

Section 5.1 and the methods for generating the X-model forecasts are explained in detail in

Appendix A.2. The other methods only use the historical prices and, in the case of the ARX model, wind and solar generation forecast data.

6.1. Point Forecasts

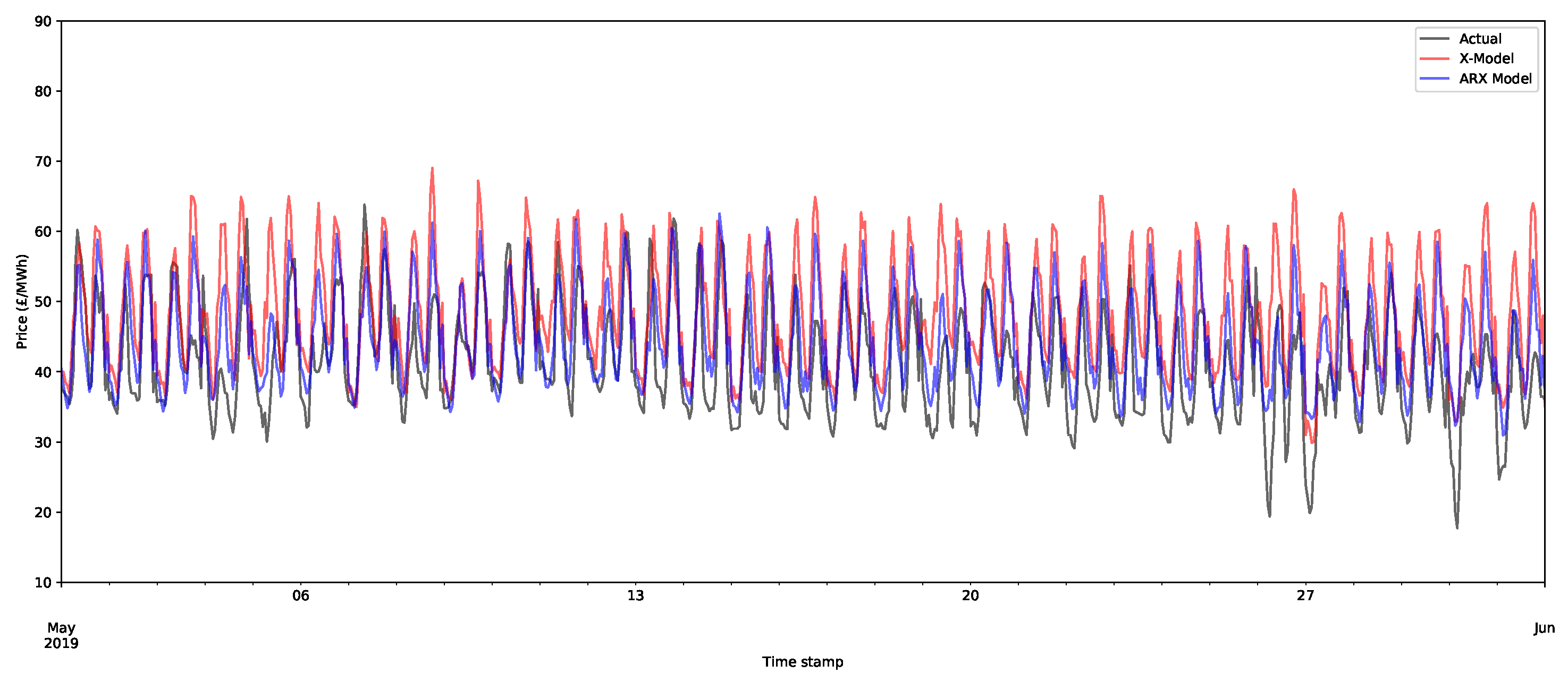

Although point forecasts are not the aim of the models, it is worth investigating them to better understand some of the features of the models. An X-model point forecast was produced by reconstructing the supply and demand curves from their class forecasts and then calculating the intersection of the two curves. An example of the day-ahead price forecasts is shown in Figure 13 for the X-model and the ARX model for the first month of the test set. As can be seen, the forecasts appear to be closely aligned with the actual data, although some of the peaks are inaccurately estimated.

For later months, the forecasts still appear to capture the main shape of the price profile reasonably well, but there is a slight deviation from the average price level. This is illustrated for the fifth month of the test set (May 2019) in Figure 14. The main cause of this is that the models need retraining. Both the X-model and the ARX model potentially use a large number of inputs, many of which can be relatively old (up to 36 days). Hence, these models could be improved by retraining them on data closer to the periods being forecast. Due to time constraints, such retraining was not applied in this test case.

The RMSE (defined in Section 3.5) of each forecast model, including the daily seasonal persistence benchmark (Section 3.4), is shown in Table 1. The ARIMAX model produces the best error measures, although it is very similar in accuracy to the ARX model. The X-model even has a larger RMSE than the daily persistence model. As will be shown later, this is due to bias in the forecasts, especially for the later months. Although the original X-model paper [1] was applied to the German and Austrian electricity market, we can compare the performance of our X-model relative to a simple persistence model with that reported there. In the original paper, the X-model outperformed the weekly persistence model, with an error 44% of that of the benchmark. In contrast, the error of our X-model is larger than that of the daily persistence model. In addition, the error of our autoregressive model is 80% of that of the persistence model, compared with 57% in the original X-model paper. One likely reason for the lower performance of both the ARX model and the X-model relative to the persistence model is that conventional generation is not used as an input. Differences in how frequently the model parameters are retrained could be another reason for the gap.

The errors broken down by month are shown in Table 2. The ARX model performs best in the earliest month, but is the second-best model for the later months of the test set. Similarly, the X-model loses accuracy in the later months, highlighting the need to retrain the model. Indeed, averaged over January and February, the X-model is at least as competitive as the persistence benchmark.

The ARIMAX model has relatively few parameters and uses only quite recent information, so it is not unduly affected by seasonal effects or old data. In contrast, the ARX model and X-model not only use generation data, but can also use large amounts of lagged data (up to 36 days in some cases). In a future study, it would be worth experimenting with fewer input variables to make these models more accurate when training data are scarce. In a further experiment, an ARX model with fewer lags did improve the accuracy of the point forecast; however, it reduced the probabilistic accuracy, likely because it reduced the sample of residuals, which is the source of variation in the bootstrap forecast method.

6.2. Probabilistic Forecasts

Probabilistic forecasts estimate the distribution of the prices rather than a single value. Two stochastic elements contribute to the probabilistic forecasts of the X-model. The first is Bernoulli random number generation in the reconstruction of the supply and demand curves. On its own, this is not expected to play a large part in modelling the uncertainty, for two reasons. First, the available data are currently relatively limited, so many price levels will have no volumes offered or bid in the auction; thus, the Bernoulli random draws may produce very few changes (see Appendix A.2.2 for details). Second, the reconstruction has a limited effect, as it only influences the classes that are close to the intersection price. To illustrate this, three reconstructions of the price forecasts over 15 days of the test set are shown in Figure 15. The actual prices are in black, the threshold reconstruction (i.e., the one used to produce the point forecasts in Section 6.1, described in Appendix A.2.2) is in blue, and two examples of reconstructed curves using Bernoulli random sampling (also described in Appendix A.2.2) are shown as well. As the figure shows, they are all quite similar, with minimal deviations from each other.
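To make the two reconstruction rules concrete, here is a simplified sketch, not the implementation of Appendix A.2.2. It assumes that each price class of a curve has an estimated probability of carrying volume and an estimated average volume (the two quantities listed among the X-model components in Section 7), that the threshold rule keeps a class when its probability is at least 0.5, and that the clearance price is the lowest grid price at which supply meets demand. All names and numbers are illustrative.

```python
import random


def reconstruct(probs, volumes, rng=None, threshold=0.5):
    """Volumes kept in each price class of one curve (supply or demand).

    probs[k]   : estimated probability that price class k carries any volume
    volumes[k] : estimated average volume of price class k when it does
    rng=None   -> threshold rule: keep class k if probs[k] >= threshold
    rng given  -> Bernoulli rule: keep class k with probability probs[k]
    """
    if rng is None:
        return [v if p >= threshold else 0.0 for p, v in zip(probs, volumes)]
    return [v if rng.random() < p else 0.0 for p, v in zip(probs, volumes)]


def clearing_price(prices, supply_vol, demand_vol):
    """Lowest grid price at which cumulative supply meets cumulative demand.

    prices is ascending; supply at price p is all volume offered at or below p,
    demand at price p is all volume bid at or above p.
    Returns None if the curves do not cross on the grid.
    """
    supply = 0.0
    demand = float(sum(demand_vol))  # all bids are at or above the lowest price
    for i, p in enumerate(prices):
        supply += supply_vol[i]
        if i > 0:
            demand -= demand_vol[i - 1]  # bids below p no longer count
        if supply >= demand:
            return p
    return None


# Toy price grid (GBP/MWh) with per-class probabilities and average volumes.
grid = [0, 10, 20, 30, 40]
s_p, s_v = [0.9, 0.9, 0.6, 0.4, 0.9], [10, 10, 10, 10, 50]
d_p, d_v = [0.3, 0.8, 0.9, 0.7, 0.95], [5, 10, 10, 10, 15]

threshold_price = clearing_price(grid, reconstruct(s_p, s_v), reconstruct(d_p, d_v))  # 30
rng = random.Random(1)
draws = [clearing_price(grid, reconstruct(s_p, s_v, rng), reconstruct(d_p, d_v, rng))
         for _ in range(5)]
```

Classes whose probabilities are close to 0 or 1 almost never change under the Bernoulli draws, and only classes near the intersection can move the clearance price, which is why the reconstructions differ so little.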

The main contribution to the probabilistic forecasts comes from bootstrapping the residuals (i.e., the deviations of the observations from the trained model) in the historical dataset. As described in Appendix A.2.2, full days of residuals are sampled (and, for the X-model, sampled jointly across classes) to retain any interdependencies in the data. The bootstraps are used to calculate the 0.05-quantiles (ventiles) for each time step in the test set, and the error score for each time step is then calculated using the pinball loss score (see Section 3.5 for details). In this example, 2000 bootstraps are used.
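A minimal sketch of the full-day residual bootstrap for one forecast day, assuming the historical residuals are stored as one list per day. For the X-model, the text also resamples across classes, which is not shown here. The order-statistic quantile rule and the function name are assumptions, not the paper's code.

```python
import random


def bootstrap_ventiles(point_forecast, residual_days, n_boot=2000, seed=0):
    """Ventile forecasts (5%, 10%, ..., 95%) for one day via a full-day bootstrap.

    point_forecast : the model's H point forecasts for the next day
    residual_days  : historical residuals (observation minus fitted value), one
                     list of H values per day; whole days are drawn so that the
                     dependence between hours within a day is kept
    Returns {level: [quantile at each of the H steps]}.
    """
    rng = random.Random(seed)
    members = []
    for _ in range(n_boot):
        day = rng.choice(residual_days)  # one complete historical day
        members.append([f + r for f, r in zip(point_forecast, day)])

    ventiles = {k / 20: [] for k in range(1, 20)}
    for h in range(len(point_forecast)):
        values = sorted(m[h] for m in members)
        for k in range(1, 20):
            # empirical quantile: order statistic at rank ceil(k * n_boot / 20)
            rank = -(-k * n_boot // 20)
            ventiles[k / 20].append(values[rank - 1])
    return ventiles
```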

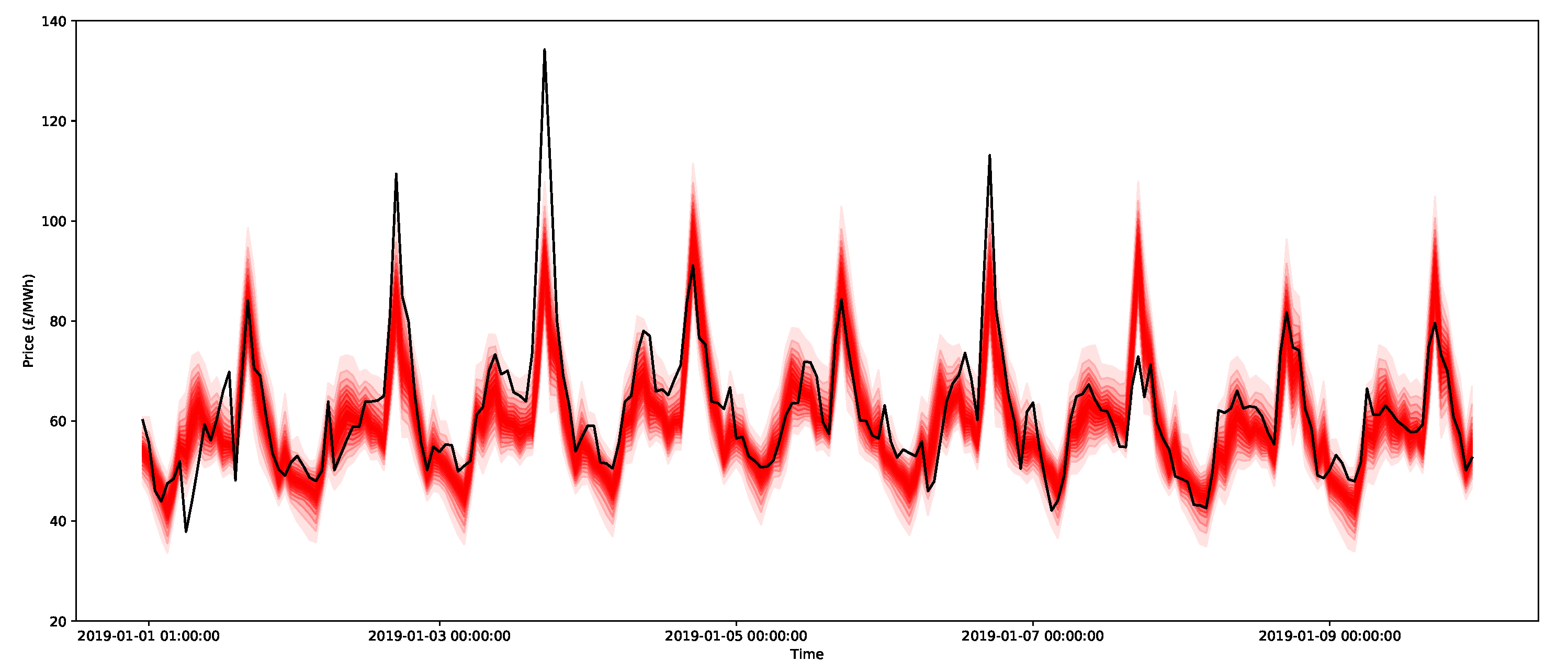

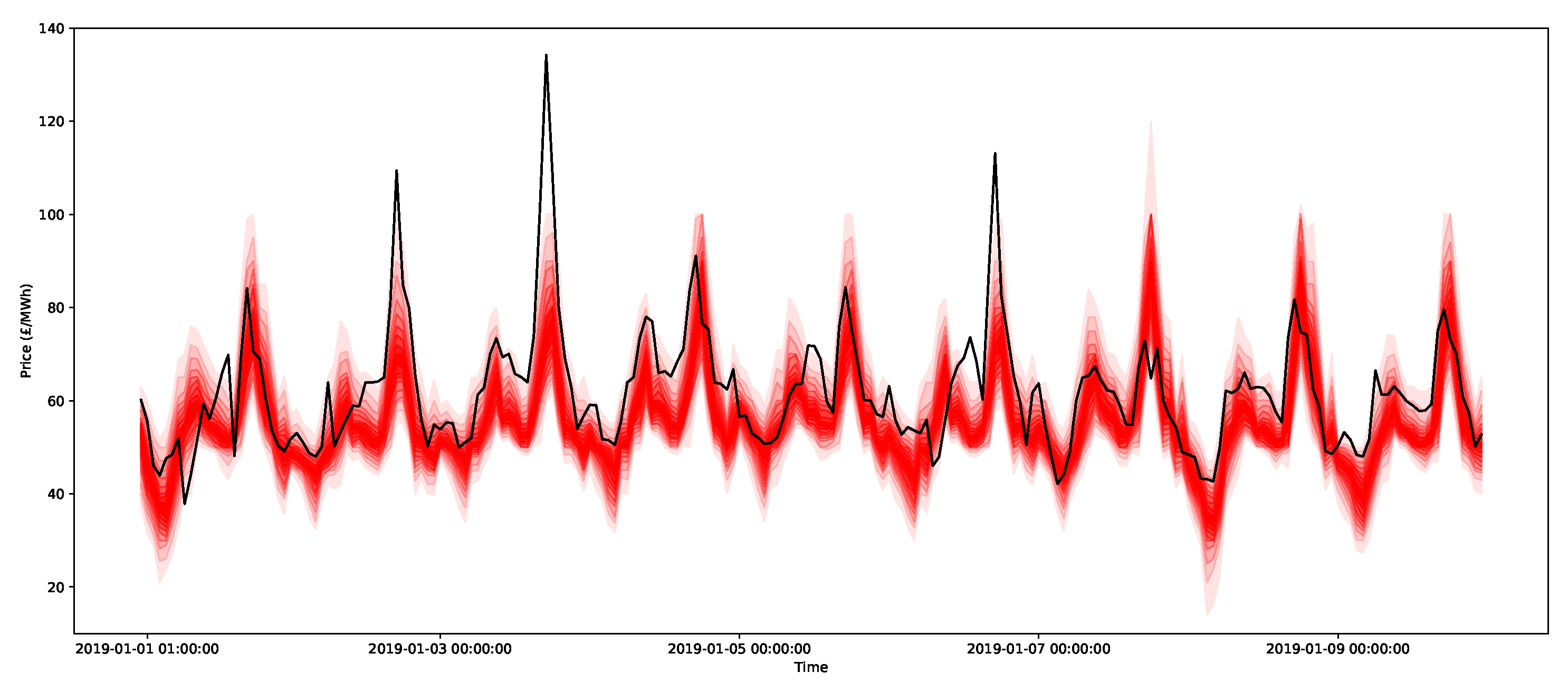

An example of the 0.05-quantiles (ventiles) of the day-ahead forecasts for nine days is presented in Figure 16, Figure 17 and Figure 18 for the ARIMAX model, ARX model, and X-model, respectively. The shades represent the predictive intervals between adjacent ventiles: the darkest shade is for the innermost intervals (45th–50th and 50th–55th percentiles), with gradually lighter shades out to the outermost intervals (5th–10th and 90th–95th percentiles). Comparing the ARIMAX and ARX models, one of the clearest differences is that the ARIMAX intervals are slightly wider, likely because they are restricted to a Gaussian distribution, which is relatively broad. These plots also highlight some interesting features. The ARIMAX and ARX models provide the most stable forecasts, with distributions that are much more regular and similar from one day to the next than those of the X-model. The X-model in Figure 18 shows much more varied distribution shapes, such as the low-price spike that occurs between 7 and 9 January. This is likely due to the incorporation of the sensitivities in the intersection point of the supply and demand curves, which will be shown in more detail in the next section.
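For comparison, ventiles under a Gaussian restriction follow directly from the predictive mean and standard deviation, whereas bootstrap ventiles are order statistics of the ensemble. The sketch assumes the ARIMAX predictive distribution at each step is N(mean, sd²); `mean` and `sd` are hypothetical inputs standing in for the model's point forecast and forecast standard deviation.

```python
from statistics import NormalDist


def gaussian_ventiles(mean, sd):
    """Ventiles of N(mean, sd**2) for one time step: {0.05: ..., ..., 0.95: ...}."""
    dist = NormalDist(mu=mean, sigma=sd)
    return {k / 20: dist.inv_cdf(k / 20) for k in range(1, 20)}


def empirical_ventiles(samples):
    """Ventiles of a sample, using the order statistic at rank ceil(k * n / 20)."""
    values = sorted(samples)
    n = len(values)
    return {k / 20: values[-(-k * n // 20) - 1] for k in range(1, 20)}
```

If the residuals are more sharply peaked than a Gaussian with the same standard deviation, the Gaussian inner intervals will typically be wider than the empirical ones, which is one way broader ARIMAX bounds could arise.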

The average pinball scores over the entire test period are shown in Table 3. The ARX model is the best-scoring method. The ARIMAX model again performs well: its score is only slightly higher than that of the ARX model, and the two are not significantly different. The X-model's pinball score is about 50% higher than that of the ARX model, so it is the least accurate model according to this measure. As we will show later, this is likely due to bias in the estimate.
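The pinball loss is defined in Section 3.5; the sketch below uses its standard form for a quantile level q and averages uniformly over all ventile levels and time steps. That averaging scheme is an assumption about how the values in Table 3 are aggregated, and the function names are hypothetical.

```python
def pinball_loss(quantile_forecast, observation, q):
    """Pinball (quantile) loss of one quantile forecast at level q in (0, 1)."""
    diff = observation - quantile_forecast
    # under-forecasts are weighted by q, over-forecasts by (1 - q)
    return q * diff if diff >= 0 else (q - 1) * diff


def average_pinball(quantile_forecasts, observations):
    """Mean pinball loss over every quantile level and every time step.

    quantile_forecasts : {q: [forecast at each step]}
    observations       : [observed price at each step]
    """
    losses = [
        pinball_loss(f, y, q)
        for q, forecasts in quantile_forecasts.items()
        for f, y in zip(forecasts, observations)
    ]
    return sum(losses) / len(losses)
```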

For completeness, the average pinball scores of the three methods for each complete month of the test set are shown in Table 4. Notice that the scores of all three methods are similar for January 2019, the month immediately after the end of the training set. Further, the X-model is relatively competitive with the other models for January and February 2019. This again suggests that a large factor in the X-model's loss of accuracy is the need for more frequent retraining on recent data. The ARX model is the most accurate model for the first four months of the test data, but then loses accuracy relative to the ARIMAX model from May 2019 onwards. This again suggests that retraining is necessary, but since the ARX model has far fewer parameters than the X-model, its loss of accuracy occurs much later in the test set.

As shown in Section 6.1, the ARX model and X-model need retraining, as the models begin to drift in later months. Hence, the results investigated here are for the first two months of the test set (January and February 2019). The results for the full dataset are given in Appendix B for completeness; they confirm the bias introduced into the X-model and ARX model for the later test data.

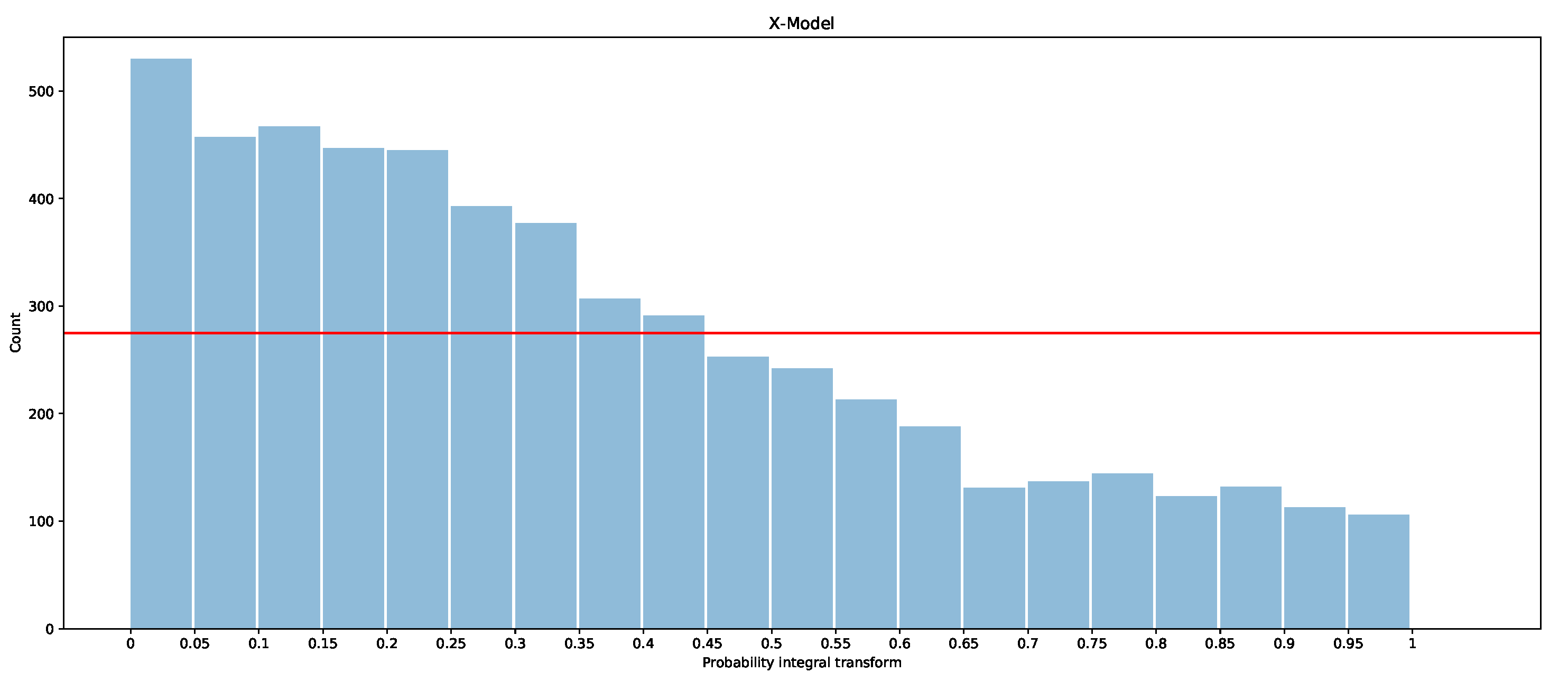

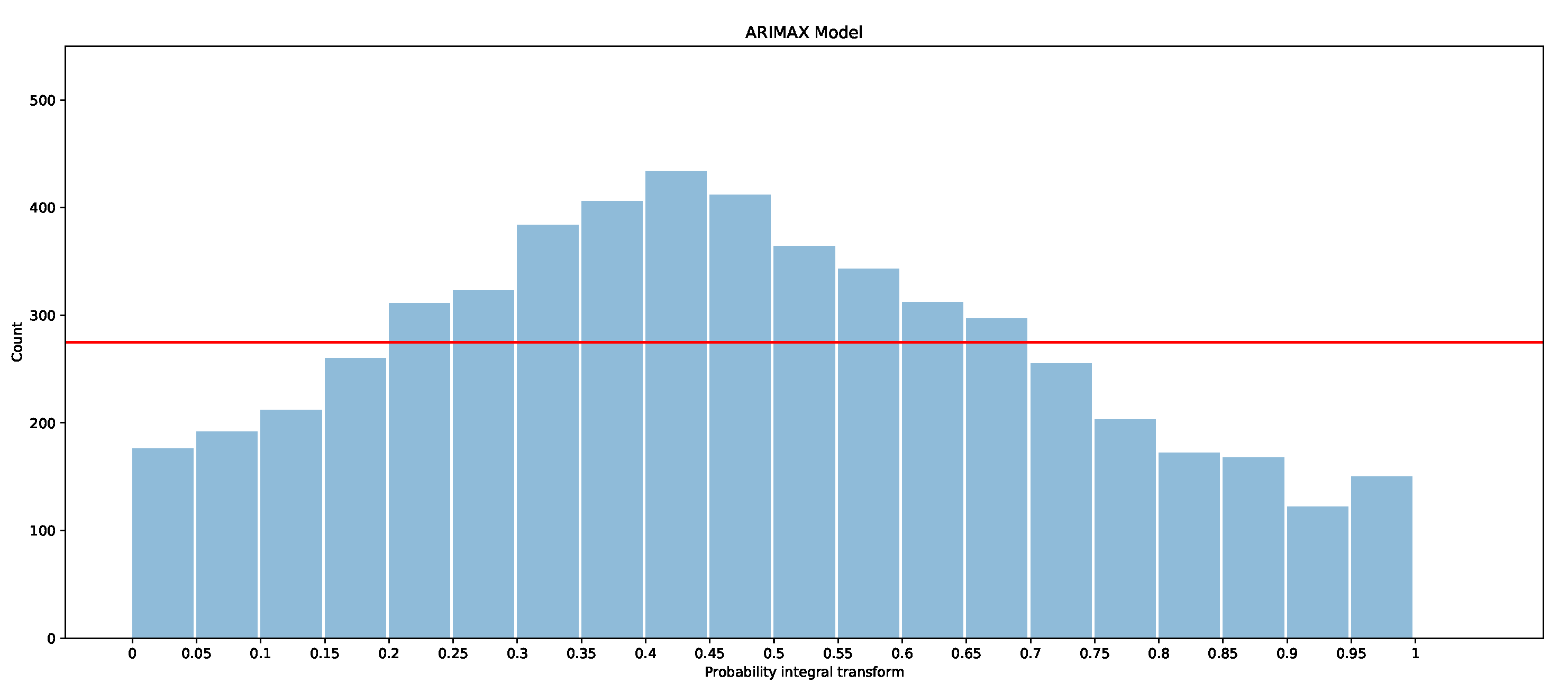

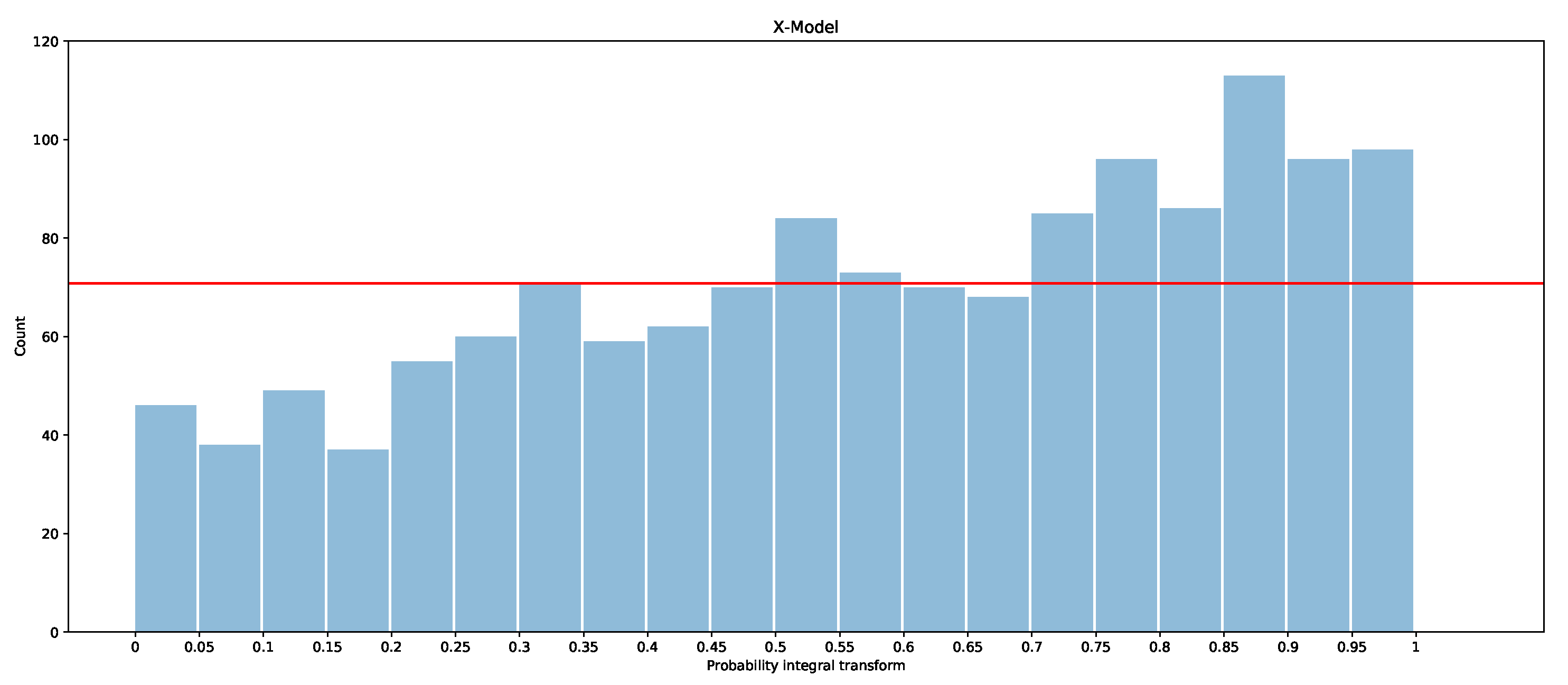

The PITs of the ARIMAX model, ARX model, and X-model for the first two months of the test dataset are shown in Figure 19, Figure 20 and Figure 21, respectively. A red line is also included, with which the bars would be level if the models were calibrated (i.e., if the observations were divided evenly among the 20 bins, giving $N/20$ observations per bin, where $N$ is the number of observations in January and February).
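A PIT histogram can be built directly from the ventile forecasts by counting how many of the 19 forecast ventiles lie below each observation, which places the observation in one of 20 bins. This is a sketch with hypothetical names; assigning an observation that equals a ventile to the lower bin is an assumption.

```python
from bisect import bisect_left


def pit_histogram(ventile_forecasts, observations):
    """Counts of observations falling in each of the 20 bins between ventiles.

    ventile_forecasts : for each time step, the 19 forecast quantiles
                        (5%, 10%, ..., 95%) in ascending order
    observations      : the observed price at each time step
    Bin 0 is below the 5% quantile and bin 19 is above the 95% quantile;
    an observation equal to a quantile is counted in the lower bin.
    """
    counts = [0] * 20
    for quantiles, y in zip(ventile_forecasts, observations):
        counts[bisect_left(quantiles, y)] += 1
    return counts


def calibrated_level(observations):
    """Height of the reference line: the count per bin if all bins were equal."""
    return len(observations) / 20
```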

The ARX model is clearly the best calibrated of the three models, as its PIT is closer to a uniform distribution than those of the other models. The ARIMAX model and X-model both show some bias, and the ARIMAX model also appears to be slightly over-dispersed, which suggests that its quantiles are more spread out than the underlying distribution [28]. The X-model, on the other hand, has more observations in the upper quantiles than in the lower quantiles. This suggests that the overall distribution estimated by the X-model is slightly shifted, so that more observations fall into the upper quantiles. One possible explanation for the bias in the X-model is its use of the LASSO regressor, which is a biased estimator. This is exacerbated in the X-model, since the biases accumulate when the class forecasts are summed to produce the supply and demand curves. A comparison with the probabilistic forecasts of the original X-model papers [1,27] is difficult, as those papers include no evaluation with a proper scoring rule. However, a qualitative comparison of the probability integral transforms is possible. The PIT in [1] shows that the X-model is under-dispersed, with over-representation in the lower quantiles. In [27], both the X-model and the autoregressive model are biased and over-represent the lower quantiles. This is also the case for our ARX model and X-model over the entire dataset (see Appendix B.2). However, for the smaller test dataset, our models actually have an over-representation of observations in the upper quantiles. The difference between the X-model in this paper and those in [1,27] could be because our models only include renewable generation as an input rather than conventional generation, hence the bias towards lower prices (e.g., through the merit-order effect). We do not compare these results with those of the only other paper that considers probabilistic forecasts for GB's market [5], since it only considers prediction intervals, whereas we are interested in a wide range of quantiles, from 5% to 95%.

The PIT suggests that the models could be improved by methods that reduce bias, for example by scaling or shifting the estimated distributions [28]. This will be explored in future work.
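One simple form of shifting and scaling is sketched below as a hypothetical illustration, not the method of [28]: the shift is estimated as the median difference between observations and median forecasts over a recent calibration window, and the quantiles are scaled about their median. How the scale factor would be chosen is left open.

```python
from statistics import median


def estimate_shift(median_forecasts, observations):
    """Bias estimate: median of (observation - median forecast) over a
    recent calibration window."""
    return median(y - m for m, y in zip(median_forecasts, observations))


def recalibrate(quantiles, shift=0.0, scale=1.0):
    """Shift and scale one time step's quantile forecasts about their median.

    quantiles : {level: value}; must contain the 0.5 level
    shift     : added to every quantile (moves the whole distribution)
    scale     : > 1 widens, < 1 narrows the spread around the median
    """
    centre = quantiles[0.5]
    return {q: centre + shift + scale * (v - centre) for q, v in quantiles.items()}
```

A positive shift would counter forecasts that sit too low, the pattern suggested by an excess of observations in the upper PIT bins.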

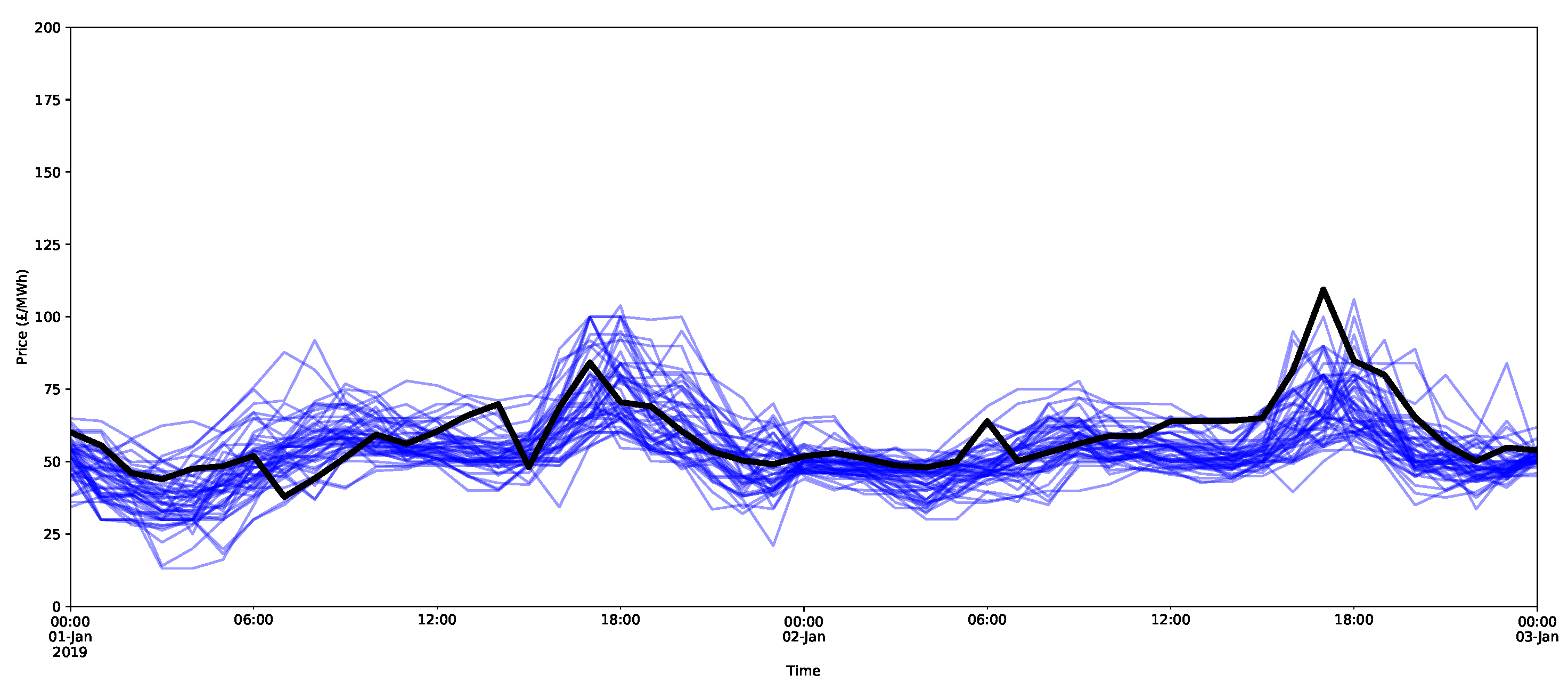

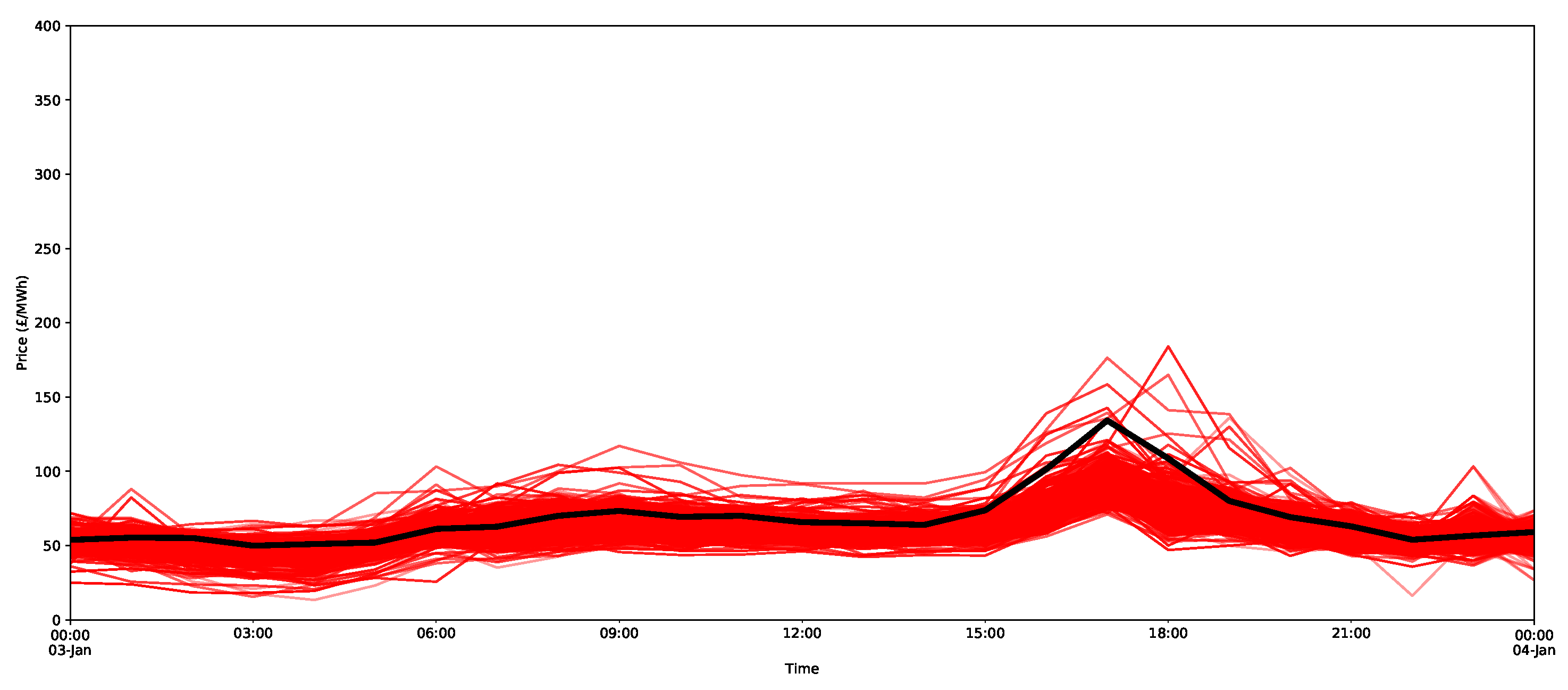

6.3. Ensembles and Peak Forecasts

The quantile forecasts are useful for showing the uncertainty around the values produced by each model. However, the forecast for each time step of the following day is a univariate distribution and does not describe the interdependencies between time steps. These interdependencies may be more useful for an aggregator who needs to understand how the prices in one part of the day may affect other periods. The ARIMAX model does not have the capability to model them; hence, only the ARX model and X-model are compared here.

Understanding interdependencies requires estimates of a multivariate distribution for the following day. In the ARX model and X-model forecasts, each individual bootstrap can be viewed as a draw from this multivariate distribution. An example for the ARX model is shown in Figure 22, in which 50 randomly selected bootstraps are shown in red. Note that the bootstraps are based on randomly sampled full days of residuals and therefore maintain the daily interdependencies. A similar example for the X-model is shown in Figure 23.
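The point about full-day sampling can be checked numerically. The sketch below (hypothetical names, toy residuals) draws ensembles either by adding whole historical residual days, as in the text, or by drawing each hour's residual from an independently chosen day, and then measures the correlation between two hours across the ensemble members.

```python
import math
import random


def sample_ensemble(point_forecast, residual_days, n_members, whole_days=True, seed=0):
    """Ensemble members for one day: point forecast plus bootstrapped residuals.

    whole_days=True  : each member adds one complete historical residual day,
                       keeping the dependence between hours
    whole_days=False : each hour's residual comes from an independently drawn day
                       (for comparison only; this breaks the dependence)
    """
    rng = random.Random(seed)
    members = []
    for _ in range(n_members):
        if whole_days:
            res = list(rng.choice(residual_days))
        else:
            res = [rng.choice(residual_days)[h] for h in range(len(point_forecast))]
        members.append([f + r for f, r in zip(point_forecast, res)])
    return members


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length samples."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Toy residual days in which the two hours always move together.
days = [[r, r] for r in (-3.0, -1.0, 1.0, 3.0)]
full = sample_ensemble([50.0, 60.0], days, 2000, whole_days=True)
indep = sample_ensemble([50.0, 60.0], days, 2000, whole_days=False)
corr_full = pearson([m[0] for m in full], [m[1] for m in full])     # 1 up to rounding
corr_indep = pearson([m[0] for m in indep], [m[1] for m in indep])  # near 0
```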

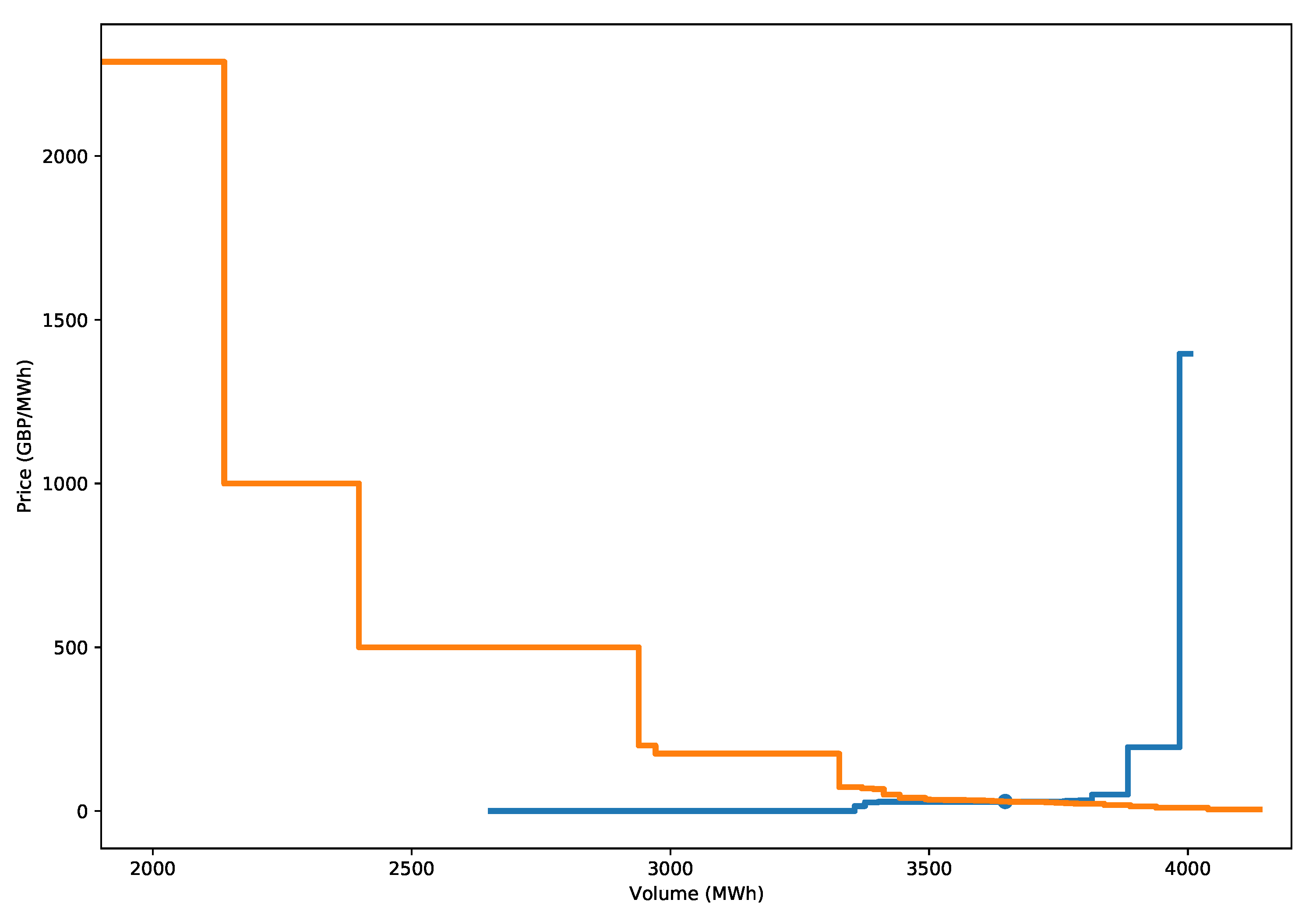

Now consider the large peak of 134.24 GBP/MWh at 5 p.m. on 3 January 2019 (visible in Figure 18). The prices in the surrounding hours (4 p.m. and 6 p.m.) were also very high, at over 100 GBP/MWh. As indicated in Figure 24, the peak is higher than the 95th percentile estimates of all the models considered; the quantile estimates do not appear to capture the potential peak, since such extreme behaviour likely lies further into the upper tail of the distribution (for example, around the 99th percentile). Using only ventiles, all the methods score relatively poorly (in terms of the pinball score) for the peak period, as shown in Table 5. However, the quantiles of the X-model do appear to encompass some of the uncertainty around the high prices in the hours surrounding the main peak.

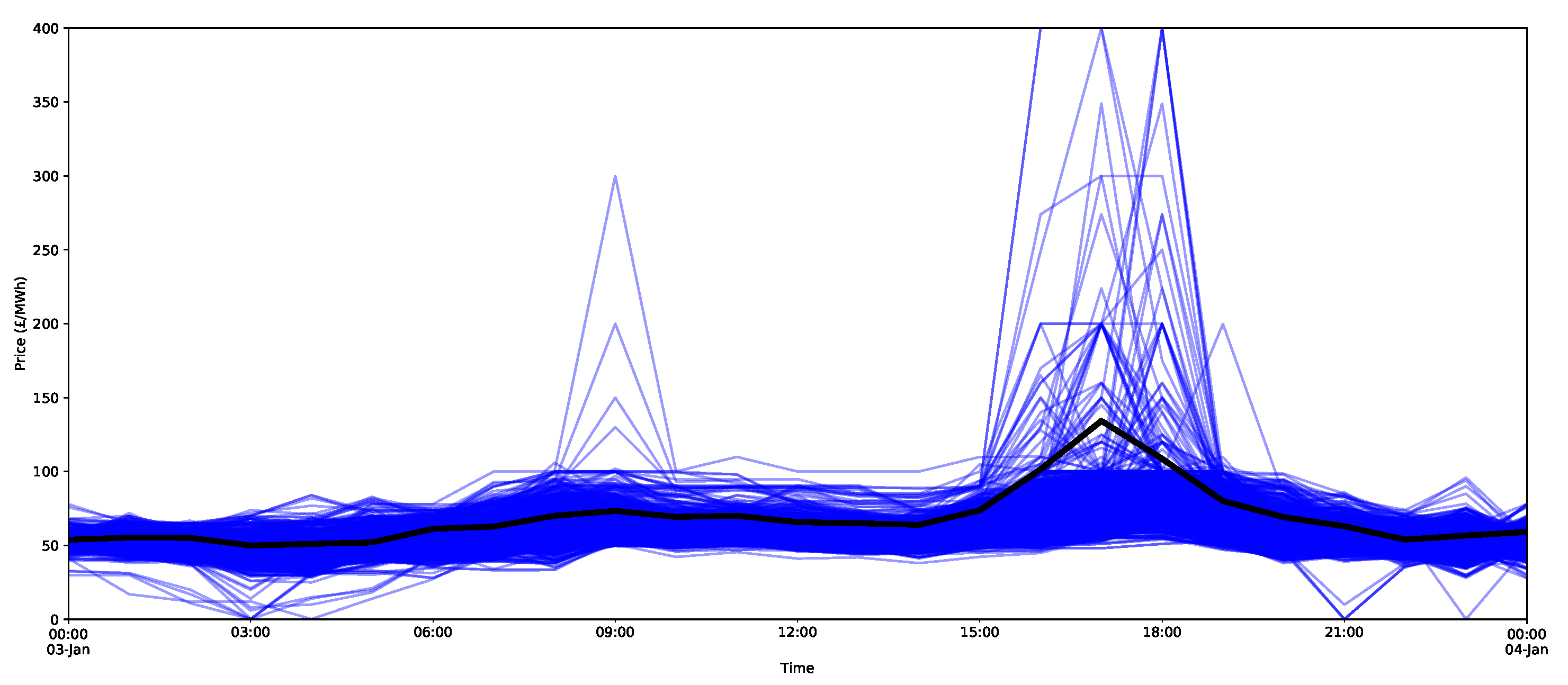

Since peaks are relatively rare, we may not expect them to be captured well, especially by the broad buckets defined by the ventiles, which are estimated from relatively few bootstraps (with 2000 bootstraps, each bucket contains only about 100 points on average). The individual ensemble members (bootstraps) themselves may give more insight into these rare events.
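With 2000 members, a ventile bucket holds about 2000 / 20 = 100 draws and only about 20 draws lie above the 99th percentile, so rare peaks may be better summarised directly from the members. One simple summary, sketched here as an illustration rather than a metric used in this paper, is the fraction of members above a chosen spike threshold at a given hour; the threshold and function names are hypothetical.

```python
def exceedance_probability(members, step, threshold):
    """Fraction of ensemble members whose price at `step` is above `threshold`."""
    return sum(m[step] > threshold for m in members) / len(members)


def members_above_quantile(n_members, level):
    """Approximate number of members above the `level` quantile of an ensemble."""
    return round(n_members * (1 - level))


# With 2000 bootstraps: 100 members above the 95th percentile, 20 above the 99th.
above_95 = members_above_quantile(2000, 0.95)
above_99 = members_above_quantile(2000, 0.99)
```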

To illustrate the peak forecasts, all 2000 bootstrap forecasts for the ARX model and the X-model are shown in Figure 25 and Figure 26, respectively. Notice that the X-model has some ensemble members with more extreme peak predictions (these were not visible in the prediction intervals in Figure 18, since they were in the top 5% of values). Notice also that the X-model gives larger estimates for the adjacent time periods.

Hence, both models make it clear that there is potential for a price spike at 5 p.m., and many of the ensemble members indicate a peak. An explanation for the peaks (especially the larger ones) in the X-model is offered in the original X-model paper [1], which suggests that a steep supply curve makes the final clearance price sensitive to small changes in the curves.

This is indeed the case for the 5 p.m. peak on 3 January 2019, as shown in Figure 27 for the peak hour and selected hours around the peak period. Notice the large rate of change in the demand curve near the intersection point. A small change in the bids and offers could shift the curves, so the intersection, and hence the clearance price, could change quite dramatically. In addition, for the same hour, the X-model predicts peaks as high as 400 GBP/MWh (Figure 26). As the supply and demand curves show, such a peak is quite possible, as a small change in the supply or demand curve could easily shift the intersection point to this extremely high price.
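The sensitivity can be reproduced with toy numbers. In this sketch (hypothetical curves, not those in Figure 27), the supply curve has very little volume offered between 60 and 400 GBP/MWh and all demand is bid at the top of the grid, so a 2% increase in demand moves the clearance price from 60 to 400 GBP/MWh.

```python
def clearing_price(prices, supply_vol, demand_vol):
    """Lowest grid price at which cumulative supply meets cumulative demand
    (supply: volume offered at or below the price; demand: volume bid at or
    above it). prices must be ascending; returns None if the curves do not cross."""
    supply = 0.0
    demand = float(sum(demand_vol))
    for i, p in enumerate(prices):
        supply += supply_vol[i]
        if i > 0:
            demand -= demand_vol[i - 1]  # bids below p no longer count
        if supply >= demand:
            return p
    return None


# Toy curves in GBP/MWh and MWh (illustrative only).
grid = [20, 40, 60, 80, 400]
supply = [1000, 1000, 1000, 50, 500]  # only 50 MWh offered above 60 and below 400

p_base = clearing_price(grid, supply, [0, 0, 0, 0, 3000])     # 60
p_shifted = clearing_price(grid, supply, [0, 0, 0, 0, 3060])  # 400: demand up by 2%
```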

The potential peak of 400 GBP/MWh was not reproduced in the ARX model ensembles. This method only uses the final clearance prices, so very large deviations are only included in the residual bootstrap if they have been observed historically as deviations from the model. This means that the ARX model captures typical behaviour, but is unlikely to include features due to sensitivities in the supply and demand curves.

In contrast, the X-model is designed to forecast the supply and demand curves. The bootstrapped residuals are added to the forecasted price classes, creating many reconstructed supply and demand curves. For some of these pairs of curves, small changes result in a high clearance price, as shown in this example.

The X-model process has both positive and negative implications for practitioners. On the one hand, it can highlight periods that are particularly sensitive to potential price spikes. On the other hand, it may give a false impression of how often such spikes occur. This suggests that the X-model is best used in conjunction with other techniques and methods. If the X-model suggests a price spike, this should prompt the practitioner to examine the supply and demand curves estimated by the method more closely. These should then be compared with the findings of other models, such as the ARX model, to establish the likely typical behaviour and better inform the business decisions to be made.

7. Conclusions and Discussion

The aim of this paper is to present the first implementation of the X-model, a relatively new day-ahead probabilistic forecasting method for electricity prices, for Great Britain's market, and to discuss its performance in comparison with two more common methods that were implemented in the same context and are also presented in this paper. The research presented here suggests that the X-model could be useful for predicting price spikes and the uncertainty around them; a particular example from January 2019 examined in this paper showed that the X-model correctly indicated a spike and highlighted the sensitivity of the prices at that time.

In addition to the X-model, two other models were introduced and tested. A simpler version of the X-model, labelled ARX here, was found to be the most accurate model, especially for probabilistic forecasting, although it may not be as useful as the X-model for predicting price spikes. An ARIMAX-type model was also introduced. This model also performed well, with a small number of parameters, and required less training. This suggests that if data are limited, this model would be the most suitable for standard probabilistic forecasts. However, if more data are available, the ARX model is preferable, as it can also produce ensemble forecasts that capture the dependencies between prices within the following day, which could help inform decision making and could be useful for other applications.

The study of data-driven probabilistic forecasts has important implications for future energy markets and the policies that support them. Firstly, an increasing number of trades are being executed algorithmically (65% of EPEX SPOT trades in 2019) [29]. Hence, there is a need for more data-driven models, such as those presented in this paper. Secondly, localised energy markets are being emphasised in many marketplaces as a potential way to coordinate an increasingly complex decentralised energy system [30]. These markets will require more advanced probabilistic modelling, such as that demonstrated in this paper, to cope with the increasing uncertainty and irregularity of pricing at the local level. They will also require greater availability of data on more granular demand and distributed generation. At a policy level, this may require changes in how markets are regulated. These will be important areas of investigation and research, but are beyond the scope of this paper.

Some limitations of this study include:

Only one specific price spike event was studied in detail; had additional price spike events been identified and studied, a more quantitative conclusion could have been reached on the benefits of the X-model for price spike events relative to more traditional modelling techniques;

Two more traditional modelling techniques were used as benchmarks. However, these techniques were not specifically designed to handle price spike events. Additional benchmarks that consider price spike events could be informative;

Only renewable energy generation (solar and wind) was considered as an exogenous variable. In other studies, such as [27], conventional energy generation was also considered as an input, and it would be informative to compare the current results with those obtained by the same techniques when these variables are included.

There are several useful extensions of the models and analyses that could be included in future work. One is to extend the model to longer-term forecasts, which would improve planning and the understanding of the impact of higher proportions of renewables on the network. A medium- and long-term extension of the X-model is described in [27].

The X-model has multiple components, and the algorithm presented here was not optimised for computational efficiency. Most of the components are relatively efficient, and many are only implemented once (for example, identifying the supply and demand classes). The LASSO models, and hence the class forecasts, are relatively quick to compute. Only two main elements are computationally expensive:

Generating the probability and average volumes for each price class.

Generating the bootstrap forecast. This is expensive because each bootstrap also includes a stochastic reconstruction of the supply and demand curves.

The first operation is not an issue, as it can be performed offline and is only updated at irregular intervals. The second, however, is computationally expensive, since it requires two costly steps for each bootstrap: first, a Bernoulli random draw for every element in the forecast vector, and second, the calculation of the intersection of the reconstructed curves. However, each bootstrap can be calculated independently, so the code can be easily parallelised. This will be considered in future work.
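Because the bootstraps are independent, they map naturally onto a worker pool. The sketch below is a pattern rather than the paper's code: `one_bootstrap` is a hypothetical stand-in for one stochastic reconstruction and intersection search, and each bootstrap gets its own seed so that the results do not depend on scheduling. A thread pool is the default so that the sketch runs anywhere; for CPU-bound pure-Python work, `concurrent.futures.ProcessPoolExecutor`, which has the same interface, would be needed to use several cores.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def one_bootstrap(seed):
    """Hypothetical stand-in for one X-model bootstrap (Bernoulli reconstruction
    plus intersection search); here it only draws a toy price."""
    rng = random.Random(seed)  # private generator: no shared random state
    return rng.gauss(50.0, 10.0)


def run_bootstraps(n_boot, base_seed=0, executor_cls=ThreadPoolExecutor, workers=4):
    """Run n_boot independent bootstraps on a worker pool.

    Bootstrap i always uses seed base_seed + i, and pool.map returns results in
    input order, so the output does not depend on how work is scheduled.
    """
    seeds = [base_seed + i for i in range(n_boot)]
    with executor_cls(max_workers=workers) as pool:
        return list(pool.map(one_bootstrap, seeds))
```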

Future work could also focus on the more recent evolution of the market in Great Britain. The current paper analysed a dataset collected prior to January 2021. From January 2021, Brexit will slightly modify Great Britain's individual exchange market, particularly the algorithm coupling GB and EU prices.