3.2. Tessellation Comparison

The density of ground points per tessellation varied from one tessellation size to the next. For example, the density of points in the 5 m

2 tessellation ranged from 0.0 to 518.4 points/m

2 for the Quanergy and 0.0 to 693.8 points/m

2 for the Velodyne and the 25 m

2 tessellation ranged from 0.0 to 485.2 points/m

2 for the Quanergy and 0.0 to 683.1 points/m

2 for the Velodyne (

Table 4). The results for mean and standard deviation also decreased with increasing tessellation size. The average point density in the 5 m

2 tessellation was 56.8 ± 78.99 points/m

2 for the Quanergy and 50.4 ± 95.5 points/m

2 for the Velodyne and the average points density in the 25 m

2 tessellation was 55.7 ± 69.6 points/m

2 for the Quanergy and 49.4 ± 87.3 points/m

2 for the Velodyne (

Table 4). These metrics differed just slightly from the average point density computed using the cloud-to-cloud comparison. The range and average of the QL2 point density were much lower than both UAS sensors we tested (as expected given the reported collection rate of 2 points/m

2), where the range was 0.0 to 7.4 points/m

2 in the 5 m

2 tessellation and 0.0 to 6.5 in the 25 m

2 tessellation and average was 1.1 ± 0.7 points/m

2 in the 5 m

2 tessellation (

Table 4). Although the Velodyne had higher maximum point densities compared to the Quanergy, the Quanergy had higher average point densities at all tessellation sizes and the Quanergy sensor also had a higher proportion of polygons with densities above the average (31.8% ± 0.4 compared to 24.8% ± 0.4 for the Velodyne). The tessellation polygons that had densities over 200 points/m

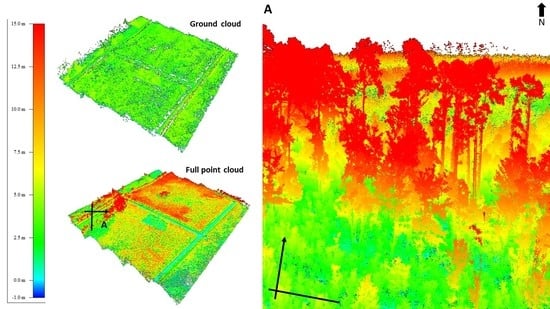

2 slightly varied by sensor where the Quanergy had an average of 5.1% ± 0.4 and Velodyne had slightly more at 5.5% ± 0.3. The areas with the lowest point density for the Quanergy and Velodyne were in the densest forest cover and the areas of greatest point collection occurred along roads and sparsely vegetated areas (

Figure 4).
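The per-polygon density statistics reported above can be illustrated with a minimal sketch. It assumes square tessellation cells whose side length is passed as `cell_size`; whether "5 m²" denotes a cell area or a side length is not stated in this section, so the value is left as a parameter. The function names and the use of the population standard deviation are illustrative choices, not the workflow used in the study.

```python
import math
from collections import Counter
from statistics import mean, pstdev


def cell_densities(points, cell_size, x_min, y_min, n_cols, n_rows):
    """Return ground points per square metre for every cell of a square grid.

    points: iterable of (x, y, z) tuples in metres (hypothetical input layout).
    Cells with no points are kept and reported as 0.0.
    """
    counts = Counter()
    for x, y, _z in points:
        col = math.floor((x - x_min) / cell_size)
        row = math.floor((y - y_min) / cell_size)
        if 0 <= col < n_cols and 0 <= row < n_rows:
            counts[(row, col)] += 1
    area = cell_size * cell_size
    return [counts[(r, c)] / area for r in range(n_rows) for c in range(n_cols)]


def summarize(densities, threshold=200.0):
    """Range, mean, SD, and share of cells above the mean and above a threshold."""
    avg = mean(densities)
    n = len(densities)
    return {
        "min": min(densities),
        "max": max(densities),
        "mean": avg,
        "sd": pstdev(densities),  # assumption: population SD
        "pct_above_mean": 100 * sum(d > avg for d in densities) / n,
        "pct_above_threshold": 100 * sum(d > threshold for d in densities) / n,
    }
```

Running `summarize` once per sensor and per tessellation size would produce the kind of range, mean ± SD, and percentage figures summarized in Table 4.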

The average lowest, or minimum, elevation increased with increasing tessellation size (Table 5). For example, the Quanergy went from 9.97 m at 5 m² to 10.11 m at 25 m² and the Velodyne from 9.95 m at 5 m² to 10.08 m at 25 m². The average minimum elevations of the two UAS sensors were lower than that of the QL2 LiDAR (differences were all negative) and statistically different from one another (p = 0.0399). Conversely, the average highest, or maximum, elevation decreased with increasing tessellation size; the sensors were also significantly different (p = 0.00001), and the differences were all positive, which indicates that the two UAS sensors had higher maximum elevations compared with the QL2 LiDAR. Average elevation did not change with increasing tessellation size; however, the sensors were again significantly different (p = 0.0004), and the average elevation of the QL2 data was higher than the Quanergy and Velodyne at all tessellation sizes. The UAS sensors had the same average mean Z difference with each other (−0.01) and similar differences when compared with the QL2: the Quanergy averaged −0.12 and the Velodyne averaged −0.13, indicating that the elevation values produced by the UAS sensors were slightly lower than QL2. The average elevation difference between the two UAS sensors, Velodyne to Quanergy, was minor (−0.01 m); however, the difference in the lowest elevation was on average −1.79 ± 0.09 m and the difference in the maximum elevation was 1.52 ± 0.11 m, which indicates that the Velodyne did not capture the lowest elevations but did capture higher maximum elevations.
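As a hedged illustration of how per-polygon minimum, maximum, and mean elevations can be differenced between two sensors, the sketch below bins points into square cells and averages the cell-wise differences. The names `cell_z_stats` and `mean_difference`, the tuple-based point layout, and the restriction to cells covered by both sensors are assumptions made for the example.

```python
import math
from statistics import mean


def cell_z_stats(points, cell_size, x_min=0.0, y_min=0.0):
    """Group (x, y, z) points by square cell and return min/max/mean Z per cell."""
    cells = {}
    for x, y, z in points:
        key = (math.floor((y - y_min) / cell_size),
               math.floor((x - x_min) / cell_size))
        cells.setdefault(key, []).append(z)
    return {k: {"min": min(v), "max": max(v), "mean": mean(v)}
            for k, v in cells.items()}


def mean_difference(stats_a, stats_b, field):
    """Average of (A - B) for one statistic over cells present in both data sets."""
    shared = stats_a.keys() & stats_b.keys()
    if not shared:
        raise ValueError("the two data sets share no cells")
    return mean(stats_a[k][field] - stats_b[k][field] for k in shared)
```

With sensor A as the Velodyne and sensor B as the Quanergy, a negative `mean_difference(..., "min")` together with a positive `mean_difference(..., "max")` corresponds to the sign pattern reported above.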

Mean Z differences across the 5 m² tessellation surface are shown in Figure 5. Most (86% ± 0.86) of the differences between Velodyne and Quanergy were minor (−0.09 to 0.10 m) (Figure 5C and Table 6). The ditches on either side of the gravel roads had the greatest differences in elevation. Notably, there was a patch in the middle with large elevation differences (positive and negative), which had higher elevations and also the lowest density of points (Figure 5). Additionally, there was clear banding/striping in the negative elevation difference class (−0.24 to −0.10 m), where the Quanergy had higher elevations than the Velodyne. In comparison with the QL2 (Figure 6C,D), the area was dominated by the largest negative difference class (−1.30 to −0.25 m), which accounted for a larger share for the Velodyne (23% ± 0.33) than for the Quanergy (19% ± 0.51). The share showing little difference (−0.09 to 0.10 m) was greater for the Quanergy (38% ± 1.04) than for the Velodyne (32% ± 1.84). Lastly, UAS elevations greater than QL2 were less prevalent across the area; here the Quanergy slightly outperformed the Velodyne, with a smaller share (2.73% ± 0.57) than the Velodyne (3.32% ± 0.60). In summary, although the elevations were very similar between Quanergy and Velodyne, both were dominated by negative differences (lower elevations) relative to QL2 (Figure 7).
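The class percentages above amount to binning each polygon's mean Z difference and counting the share per class. A minimal sketch follows, using only the three class ranges quoted in this section; the classes above 0.10 m are not listed here, so everything else is lumped into a hypothetical "other" label. Rounding to two decimals before comparison is an assumption made so that the printed class limits (for example −0.25 and −0.24) do not leave gaps.

```python
from collections import Counter

# Class limits in metres as quoted in the text; inclusive on both ends
# after rounding to two decimals.
CLASSES = [
    ("-1.30 to -0.25", -1.30, -0.25),
    ("-0.24 to -0.10", -0.24, -0.10),
    ("-0.09 to 0.10", -0.09, 0.10),
]


def classify(diff):
    """Return the label of the class containing a mean Z difference (metres)."""
    d = round(diff, 2)
    for label, lo, hi in CLASSES:
        if lo <= d <= hi:
            return label
    return "other"  # hypothetical catch-all for classes not listed in this section


def class_percentages(diffs):
    """Percentage of polygons falling in each difference class."""
    counts = Counter(classify(d) for d in diffs)
    return {label: 100 * n / len(diffs) for label, n in counts.items()}
```

These are shares of polygons; they equal shares of area only when all polygons have the same area, as in a regular tessellation.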

3.3. DTM Comparison

Elevation in the DTM surfaces ranged from 9.9 to 13.8 m, with an average of 11.6 ± 0.3 m, for the Quanergy and from 9.8 to 13.1 m, with an average of 11.6 ± 0.3 m, for the Velodyne (Table 7). The range of the QL2 was 9.9 to 13.4 m, with an average of 11.8 ± 0.3 m. Visual comparisons of the DTMs generated from the Quanergy and Velodyne are shown in Figure 7, which highlights two areas of low and high elevation difference between the two sensors, respectively, located in the center of our study area. The areas of low difference between the two DTMs are covered by sparse vegetation, while the converse is true where the canopy cover is very dense. The largest difference between the two sensors was observed in the part of the study area with the densest stand of trees (Figure 7C and insets to the right), where LiDAR penetration was the lowest, especially for the Quanergy. Noticeably, there are marked areas of high difference between the DTMs from the two sensors along the ditches immediately adjacent to roads. The vegetation on either side of the road overlying these ditches is unusually dense, pointing to the same issue with cover penetration in dense canopies highlighted above.
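A DTM comparison of this kind reduces to a cell-by-cell subtraction of two co-registered rasters. The sketch below is a simplified illustration that represents each DTM as a list of rows and marks missing cells with `None`; the function names and data layout are hypothetical, and real rasters would normally be handled with a geospatial library.

```python
def dtm_difference(dtm_a, dtm_b):
    """Cell-wise A - B for two equally sized grids; None marks no-data cells."""
    if len(dtm_a) != len(dtm_b) or any(
        len(ra) != len(rb) for ra, rb in zip(dtm_a, dtm_b)
    ):
        raise ValueError("DTMs must have the same shape")
    return [
        [None if a is None or b is None else a - b for a, b in zip(ra, rb)]
        for ra, rb in zip(dtm_a, dtm_b)
    ]


def largest_difference(diff):
    """Return (row, col, value) of the largest absolute difference, or None."""
    best = None
    for r, row in enumerate(diff):
        for c, d in enumerate(row):
            if d is not None and (best is None or abs(d) > abs(best[2])):
                best = (r, c, d)
    return best
```

Locating the cell returned by `largest_difference` on a canopy map is one way to check whether the largest disagreements coincide with dense cover, as described above.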