Investigation of Isolation Forest for Wind Turbine Pitch System Condition Monitoring Using SCADA Data

Abstract

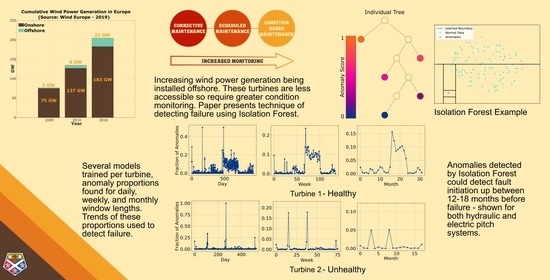

1. Introduction

Objectives and Novelty

- This paper also examines turbines with electric pitch systems to compare the model's effectiveness for different components;

- Examines different aggregation window lengths for the anomaly proportions (daily, weekly, and monthly) to assess which window length performs best;

- Compares healthy and unhealthy turbine performance to assess whether the model can differentiate between them.
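The daily, weekly, and monthly anomaly proportions mentioned above can be illustrated with a minimal sketch. It assumes each timestamped SCADA sample has already been labelled by an anomaly detector (for example, scikit-learn's `IsolationForest.predict`, which returns -1 for outliers and 1 for inliers). The function names `window_key` and `anomaly_proportions`, the 10-minute sampling interval, and the example labels are hypothetical and not taken from the paper. The sketch only shows how per-sample flags become per-window proportions, not how the paper trains the model or sets alarm thresholds.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def window_key(ts, window):
    """Map a timestamp to the aggregation window (day, ISO week, or month) it falls in."""
    if window == "daily":
        return ts.date()
    if window == "weekly":
        iso = ts.isocalendar()
        return (iso[0], iso[1])  # (ISO year, ISO week number)
    if window == "monthly":
        return (ts.year, ts.month)
    raise ValueError(f"unknown window: {window!r}")


def anomaly_proportions(timestamps, is_anomaly, window="daily"):
    """Return {window key: fraction of samples in that window flagged as anomalous}."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for ts, flag in zip(timestamps, is_anomaly):
        key = window_key(ts, window)
        totals[key] += 1
        flagged[key] += int(flag)
    return {key: flagged[key] / totals[key] for key in sorted(totals)}


# Hypothetical example: two days of 10-minute samples, one sample in every 48 flagged.
start = datetime(2021, 1, 1)
stamps = [start + timedelta(minutes=10 * i) for i in range(288)]
labels = [-1 if i % 48 == 0 else 1 for i in range(288)]  # -1 = outlier, as in IsolationForest.predict
flags = [label == -1 for label in labels]

for window in ("daily", "weekly", "monthly"):
    print(window, anomaly_proportions(stamps, flags, window))
```

In this example each day contains 3 flagged samples out of 144, so both daily proportions equal 3/144. Note that 1 and 2 January 2021 fall in ISO week 53 of 2020, so the weekly key is `(2020, 53)`: ISO weeks can straddle calendar years, which matters when weekly windows are compared across a year boundary.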

2. Literature Review

2.1. Pitch System Condition Monitoring

2.2. Anomaly Detection for Wind Turbines

3. Methodology

3.1. Data

3.1.1. Case Study 1

3.1.2. Case Study 2

3.2. Isolation Forest

3.3. Condition Monitoring Technique

4. Results

4.1. Condition Monitoring Technique

4.2. Comparison of Number of Training Months

4.3. Comparison of Window Length

4.4. Effect of Feedback Current

4.5. Validation against Case Study 2

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Case Study | Feature 1 | Feature 2 | Feature 3 | Feature 4 | Feature 5 |
|---|---|---|---|---|---|
| 1 | Average Wind Speed | Average Power Output | Blade Pitch Angle | Pitch System Motor Temperature | Pitch System Feedback Current |
| 2 | Average Wind Speed | Average Power Output | Average Pitch Angle | Average Hydraulic Pitch System Temperature | |

| Feature | Avg | Max | Min | STD |
|---|---|---|---|---|
| Generator Speed | x | | | |
| Hydraulic Pitch Oil Temperature | x | | | |
| Nacelle Temperature | x | | | |
| Wind Speed | x | x | x | x |
| Absolute Wind Direction | x | | | |
| Relative Wind Direction | x | x | x | x |
| Power | x | | | |
| Pitch Angle | x | x | x | x |
| Nacelle Direction | x | | | |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


McKinnon, C.; Carroll, J.; McDonald, A.; Koukoura, S.; Plumley, C. Investigation of Isolation Forest for Wind Turbine Pitch System Condition Monitoring Using SCADA Data. Energies 2021, 14, 6601. https://doi.org/10.3390/en14206601
