An Omnidirectional Morphological Method for Aerial Point Target Detection Based on Infrared Dual-Band Model

Abstract

1. Introduction

2. Target Detection Model

2.1. Single-Band Detection Model

2.2. Dual-Band Detection Model

2.3. Simulation and Analysis

3. Point Target Detection

3.1. Omnidirectional Multiscale Morphological Filtering

3.2. Local Difference Criterion

- When the pixel lies on a background edge, the gray-value differences in the four directions vary widely because of the edge structure: at least one difference is very small and at least one is very large. Together, they give rise to a large DR.

- When the pixel belongs to a point target, the gray-value differences in all four directions are similar, because a point target is spatially isolated. Hence, the DR of a point target is approximately 1.
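The two cases above can be illustrated with a minimal Python sketch. The exact definition of DR is not given in this excerpt, so the code assumes (hypothetically) that the four directional differences are the absolute gray-value differences between the pixel and its neighbors `d` pixels up, down, left and right, and that DR is the ratio of the largest to the smallest of them. The offset `d = 2`, the constant `eps`, and the name `direction_ratio` are illustrative choices, not the authors' parameters. Because a flat background pixel would also give DR close to 1 under this definition, the sketch is meant for candidate pixels that survive the morphological filtering.

```python
from typing import Sequence

Image = Sequence[Sequence[float]]


def direction_ratio(img: Image, row: int, col: int, d: int = 2, eps: float = 1e-6) -> float:
    """Hypothetical DR: largest / smallest of four directional gray-value differences.

    Compares the center pixel with the pixels d steps up, down, left and right.
    The caller must keep (row, col) at least d pixels away from the border.
    eps keeps the ratio finite when a difference is zero.
    """
    center = img[row][col]
    neighbors = [img[row - d][col], img[row + d][col], img[row][col - d], img[row][col + d]]
    diffs = [abs(center - v) for v in neighbors]
    return (max(diffs) + eps) / (min(diffs) + eps)


# Isolated bright pixel on a flat background: all four differences are equal.
point = [[10.0] * 9 for _ in range(9)]
point[4][4] = 100.0
print(direction_ratio(point, 4, 4))  # 1.0

# Vertical step edge at column 4: one large difference, three zero differences.
edge = [[10.0] * 4 + [100.0] * 5 for _ in range(9)]
print(direction_ratio(edge, 4, 4) > 1e6)  # True
```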

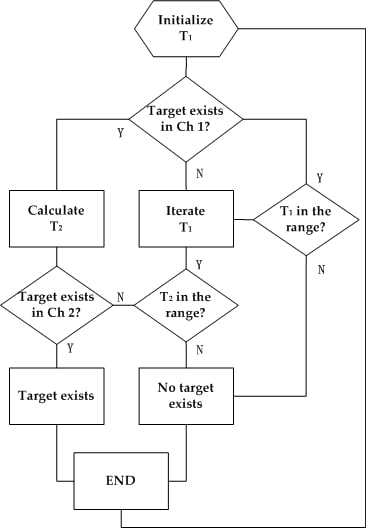

3.3. Adaptive CFAR Threshold under Dual-Band Model

- (1) The omnidirectional morphological filtering and the local difference criterion are applied to the original infrared images captured by the dual-band detectors to suppress the complex background.

- (2) Initialize TNR1 of Channel 1 (TNR1 = 2.326) according to the CFAR criterion.

- (3) Calculate TNR2 of Channel 2 by Equation (8), and obtain the threshold from Equation (24).

- (4) Use the threshold calculated by Equation (24) to segment the background-suppressed image of Channel 1.

- (5) Check whether Channel 1 contains a suspected target. If it does, continue with Step (6). If not, decrease TNR1 by 0.2 and return to Step (3), repeating until TNR1 falls out of range (a lower bound of 1.5 is assumed). Once TNR1 is out of range, the iteration ends and the next frame is processed.

- (6) Use the threshold to segment the background-suppressed image of Channel 2.

- (7) Check whether Channel 2 contains a suspected target. If it does, and its position coincides with that of the suspected target in Channel 1 (typically within a 5 × 5 pixel window), declare it a point target; otherwise, increase TNR1 by 0.2 and return to Step (3).
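The seven steps can be sketched in Python as follows. Equations (8) and (24) are not reproduced in this excerpt, so the sketch takes the TNR1-to-TNR2 mapping as a caller-supplied function and uses a plain mean + TNR × standard-deviation threshold as a stand-in for Equation (24); it also assumes that each channel is segmented with its own TNR. The names `dual_band_detect`, `cfar_threshold`, `segment`, and the `max_iter` guard are hypothetical. The default TNR1 = 2.326 matches the one-sided standard-normal quantile for a false-alarm probability of 0.01, which `NormalDist().inv_cdf(0.99)` reproduces.

```python
from statistics import NormalDist, fmean, pstdev
from typing import Callable, List, Sequence, Tuple

Image = Sequence[Sequence[float]]
Coord = Tuple[int, int]

# 2.326 is the one-sided standard-normal quantile for a false-alarm probability of 0.01.
DEFAULT_TNR1 = round(NormalDist().inv_cdf(1.0 - 0.01), 3)


def cfar_threshold(img: Image, tnr: float) -> float:
    """Stand-in for Equation (24) (assumption): mean + TNR * std over the image."""
    pixels = [v for row in img for v in row]
    return fmean(pixels) + tnr * pstdev(pixels)


def segment(img: Image, threshold: float) -> List[Coord]:
    """Return the (row, col) positions of pixels above the threshold."""
    return [(r, c) for r, row in enumerate(img) for c, v in enumerate(row) if v > threshold]


def dual_band_detect(
    ch1: Image,
    ch2: Image,
    tnr2_from_tnr1: Callable[[float], float],  # stand-in for Equation (8)
    threshold_fn: Callable[[Image, float], float] = cfar_threshold,
    tnr1: float = DEFAULT_TNR1,  # Step (2)
    step: float = 0.2,
    tnr1_min: float = 1.5,
    half_window: int = 2,  # 5 x 5 coincidence window
    max_iter: int = 50,  # hypothetical guard against endless oscillation
) -> List[Coord]:
    """Steps (2)-(7) on two background-suppressed images (Step (1) is done by the caller)."""
    for _ in range(max_iter):
        if tnr1 < tnr1_min:
            break  # TNR1 out of range: end the iteration for this frame
        tnr2 = tnr2_from_tnr1(tnr1)  # Step (3)
        cand1 = segment(ch1, threshold_fn(ch1, tnr1))  # Step (4)
        if not cand1:  # Step (5): no suspected target in Channel 1, lower TNR1
            tnr1 -= step
            continue
        cand2 = segment(ch2, threshold_fn(ch2, tnr2))  # Step (6)
        confirmed = [  # Step (7): Channel-1 candidates matched in Channel 2
            p
            for p in cand1
            if any(abs(p[0] - q[0]) <= half_window and abs(p[1] - q[1]) <= half_window for q in cand2)
        ]
        if confirmed:
            return confirmed
        tnr1 += step  # no coincidence: raise TNR1 and return to Step (3)
    return []


# Usage: one target, offset by one pixel between the channels.
ch1 = [[0.0] * 32 for _ in range(32)]
ch2 = [[0.0] * 32 for _ in range(32)]
ch1[10][12] = 10.0
ch2[11][13] = 10.0
# The identity mapping below is purely illustrative, not Equation (8).
print(dual_band_detect(ch1, ch2, tnr2_from_tnr1=lambda t: t))  # [(10, 12)]
```

The `max_iter` guard is a design choice of the sketch: Step (5) lowers TNR1 and Step (7) raises it, so without a cap the loop could alternate between the two indefinitely.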

4. Experimental Results

5. Comparison and Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Group | Channel | SNR of Channel | Single-Band Probability of Detection | Optimal Fused Probability of Detection | Probability of Detection of Each Channel after Fusion |
|---|---|---|---|---|---|
| 1 | Ch 1 | 3 | 0.2361 | 0.3553 | 0.4882 |
| 1 | Ch 2 | 2 | 0.0428 | | 0.7278 |
| 2 | Ch 1 | 3 | 0.2361 | 0.5621 | 0.7497 |
| 2 | Ch 2 | 3 | 0.2361 | | 0.7497 |
| 3 | Ch 1 | 3 | 0.2361 | 0.7931 | 0.9201 |
| 3 | Ch 2 | 4 | 0.6106 | | 0.8620 |
| 4 | Ch 1 | 3 | 0.2361 | 0.9416 | 0.9851 |
| 4 | Ch 2 | 5 | 0.8999 | | 0.9558 |
| 5 | Ch 1 | 4 | 0.6106 | 0.9740 | 0.9899 |
| 5 | Ch 2 | 5 | 0.8999 | | 0.9839 |
| 6 | Ch 1 | 4 | 0.6106 | 0.9959 | 0.9989 |
| 6 | Ch 2 | 6 | 0.9887 | | 0.9971 |
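Two relations can be read off the table values, and the short Python check below reproduces them; both are inferences from the numbers, not the paper's Equation (8). First, the single-band column is consistent with a Gaussian-noise model in which the detection probability is Pd = Q(TNR − SNR) with a fixed threshold TNR ≈ 3.719, the value that gives a false-alarm probability of 10⁻⁴. Second, the optimal fused probability in each group equals the product of the two per-channel probabilities after fusion (an AND rule), to within rounding of the listed values (about 0.0001). The helper names are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def tnr_for_pfa(pfa: float) -> float:
    """Threshold-to-noise ratio that yields false-alarm probability pfa in Gaussian noise."""
    return STD_NORMAL.inv_cdf(1.0 - pfa)


def single_band_pd(snr: float, tnr: float) -> float:
    """Pd = Q(TNR - SNR): probability that target plus Gaussian noise exceeds the threshold."""
    return 1.0 - STD_NORMAL.cdf(tnr - snr)


def fused_pd_and(pd1: float, pd2: float) -> float:
    """AND-rule fusion: both channels must detect the target."""
    return pd1 * pd2


TNR_1E4 = tnr_for_pfa(1e-4)  # about 3.719

# Single-band column: prints 0.0428, 0.2361, 0.6106, 0.8999, 0.9887 for SNR 2 to 6.
for snr in (2, 3, 4, 5, 6):
    print(snr, round(single_band_pd(snr, TNR_1E4), 4))

# Per-channel probabilities after fusion, copied from the table (Ch 1, Ch 2).
after_fusion = {1: (0.4882, 0.7278), 2: (0.7497, 0.7497), 3: (0.9201, 0.8620),
                4: (0.9851, 0.9558), 5: (0.9899, 0.9839), 6: (0.9989, 0.9971)}
for group, (p1, p2) in after_fusion.items():
    print(group, round(fused_pd_and(p1, p2), 4))
```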

| Channel | Target Coordinate | SNR | Local STD |
|---|---|---|---|
| MWIR | (255, 323) | 3.56 | 2.45 |
| LWIR | (254, 322) | 3.02 | 2.88 |

| Channel | Target Coordinate | SNR | Local STD |
|---|---|---|---|
| MWIR | (254, 205) | 3.03 | 3.21 |
| LWIR | (255, 207) | 4.12 | 2.88 |
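Step (7) of the algorithm declares a point target only when the candidates in the two channels coincide, typically within a 5 × 5 pixel window. The minimal Python check below applies that test to the MWIR/LWIR target coordinates listed in the two tables, assuming the window is centered on one candidate so that each coordinate may differ by at most 2 pixels; the function name `coincide` is hypothetical. In both tables the offsets are at most 2 pixels, so both pairs pass.

```python
from typing import Tuple

Coord = Tuple[int, int]


def coincide(p: Coord, q: Coord, window: int = 5) -> bool:
    """True if q lies inside a window x window box centered on p (window assumed odd)."""
    half = window // 2
    return abs(p[0] - q[0]) <= half and abs(p[1] - q[1]) <= half


# (MWIR, LWIR) target coordinates copied from the two tables.
measured = [((255, 323), (254, 322)), ((254, 205), (255, 207))]
for mwir, lwir in measured:
    print(mwir, lwir, coincide(mwir, lwir))  # True for both pairs
```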

| Method | /% | /% | FoM/% | Running Time/s |
|---|---|---|---|---|
| Traditional Top-hat | 83.27 | 6.77 | 69.21 | 0.39 |
| DoG | 87.27 | 5.18 | 75.53 | 0.83 |
| BM3D | 97.40 | 0.86 | 94.95 | 5.24 |
| GMM | 95.53 | 2.41 | 89.09 | 4.92 |
| Proposed method | 99.13 | 0.07 | 98.92 | 0.46 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, R.; Wang, D.; Jia, P.; Sun, H. An Omnidirectional Morphological Method for Aerial Point Target Detection Based on Infrared Dual-Band Model. Remote Sens. 2018, 10, 1054. https://doi.org/10.3390/rs10071054
