1. Introduction

Over the past 15 years, the Government of Canada, led by Agriculture and Agri-Food Canada (AAFC), the federal department responsible for Canada’s agriculture sector, has devoted considerable effort to understanding how Earth Observation (EO) technologies can operationally provide timely and repeatable observations of Canadian agriculture at a national scale. Accordingly, many studies have highlighted the utility of spectral vegetation indices (VIs) derived from optical observations (particularly red, near-infrared (NIR), and shortwave-infrared (SWIR) bands) for agricultural applications [1,2]. However, optical remote sensing is restricted to daytime monitoring under cloud-free skies [3]. This is of particular concern for the systematic operational monitoring of agricultural regions that experience frequent cloud cover, such as Canada’s east and west coasts. In contrast, Synthetic Aperture Radar (SAR) sensors can image through clouds, smoke, and haze and, as active sensors, do not depend on solar illumination, thus providing all-weather, day-and-night imaging capability. This flexibility makes SAR a popular choice of national agencies for the operational monitoring of land, coastal, and ocean environments.

The ability of SAR to distinguish between crop classes depends on the configuration of the SAR sensor itself. As a general rule, single-polarized (SP) SAR is less useful for discriminating among crop types than dual-polarized (DP) SAR, which, in turn, is generally less useful than full polarimetric (FP) SAR. FP SAR is the most useful of these modes because it collects the full suite of horizontal and vertical polarization combinations, which better allows the discrimination of crop types with similar structures. FP SAR sensors transmit a fully polarized signal toward the ground target and receive a backscattered response that contains both fully polarized and depolarized constituents [4]. These sensors collect data in four polarization channels, which together comprise the full scattering matrix for each ground resolution cell [5]. In addition, the relative phase between polarization channels is maintained, which allows SAR backscattering responses to be decomposed into various scattering mechanisms using advanced polarimetric decomposition techniques [6]. These techniques decompose the polarimetric covariance or coherency matrices into three main scattering mechanisms: (1) single/odd-bounce scattering, which represents direct scattering from the vegetation or ground surface; (2) double/even-bounce scattering, which represents scattering between, for example, a plant stalk and the ground surface; and (3) volume scattering, which represents multiple scattering within developed vegetation canopies [7,8].
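As a minimal sketch of how the full scattering matrix maps onto odd-bounce, even-bounce, and volume-like components, the example below uses the Pauli basis. This is a swapped-in, simpler coherent decomposition, not the model-based covariance/coherency decompositions (e.g., Freeman-Durden) referred to above. It assumes NumPy, reciprocity (S_HV = S_VH), and calibrated complex channel values; the function names are hypothetical.

```python
import numpy as np


def pauli_powers(s_hh, s_hv, s_vv):
    """Split a monostatic scattering matrix into three Pauli powers.

    Assumes reciprocity (S_HV == S_VH), so only three complex channels are needed.
    Returns (odd, even, cross) powers with the same shape as the inputs.
    """
    s_hh, s_hv, s_vv = (np.asarray(c, dtype=complex) for c in (s_hh, s_hv, s_vv))
    odd = np.abs(s_hh + s_vv) ** 2 / 2    # single/odd bounce (e.g., bare soil, flat plate)
    even = np.abs(s_hh - s_vv) ** 2 / 2   # double/even bounce (e.g., stalk-ground dihedral)
    cross = 2 * np.abs(s_hv) ** 2         # cross-pol power, commonly linked to volume scattering
    return odd, even, cross


def coherency_matrix(s_hh, s_hv, s_vv):
    """Average 3x3 coherency matrix T3 = <k k^H> over all supplied pixels.

    k is the Pauli scattering vector [S_HH + S_VV, S_HH - S_VV, 2 S_HV] / sqrt(2).
    """
    s_hh, s_hv, s_vv = (np.atleast_1d(np.asarray(c, dtype=complex)).ravel()
                        for c in (s_hh, s_hv, s_vv))
    k = np.stack([s_hh + s_vv, s_hh - s_vv, 2 * s_hv]) / np.sqrt(2)  # shape (3, n_pixels)
    return k @ k.conj().T / k.shape[1]
```

A flat plate (S_HH = S_VV = 1, S_HV = 0) puts all of its power in the odd-bounce term, while a dihedral (S_HH = 1, S_VV = −1) puts it in the even-bounce term. The diagonal of the averaged T3 holds the same three Pauli powers, averaged over the pixels supplied.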

Despite the promising results obtained to date from FP SAR data for land cover classification in a variety of applications [7,8], FP SAR is limited from an operational perspective for two main reasons. First, there is an inherent time constraint associated with the alternating transmission of H- and V-polarized pulses [9]. Second, the doubled pulse repetition frequency and the increased data rate relative to SP SAR systems [10] halve the image swath width of FP SAR systems, decreasing satellite coverage and increasing revisit times. This hinders the utility of FP SAR for operational applications that demand data over large geographical extents. While DP SAR partially addresses some of these limitations (e.g., the narrow swath width), its inability to maintain the relative phase between co- and cross-polarization channels remains a problem [9].

A solution to the limitations of the DP and FP SAR configurations described above may lie in compact polarimetry (CP) SAR [11]. Over the past few years, CP SAR observations (e.g., from RISAT-1 and ALOS PALSAR-2) and simulated CP observations have drawn attention within the radar remote sensing community. Similar to DP SAR, a CP sensor transmits one polarization and receives two coherent polarizations simultaneously, thus avoiding the inherent time constraint of FP SAR sensors. CP sensors collect more scattering information than SP and DP SAR sensors, and this information is comparable to that obtained from FP systems, at twice the swath width of FP sensors. Moreover, the relative phase between polarization channels is maintained in this configuration [12].

There are three CP SAR configurations in the context of EO sensors: (1) the π/4 mode [12], (2) circularly transmitting, circularly receiving (CC; [5,13]), and (3) circularly transmitting, linearly receiving (CTLR; [14]). The third configuration is of particular interest in a Canadian context because it is used by the RADARSAT Constellation Mission (RCM). A CTLR SAR sensor transmits either right or left circular polarization and coherently receives both linear polarizations (H and V).
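Before RCM imagery is available, CTLR data are commonly synthesized from FP data by applying the scattering matrix to a circular transmit vector. The sketch below assumes NumPy, reciprocity, right-circular transmission, and one particular sign convention for the circular Jones vector, (1, −j)/√2; conventions differ across the literature, so the signs should be checked against any specific simulator. The function name is hypothetical.

```python
import numpy as np


def simulate_ctlr(s_hh, s_hv, s_vv):
    """Simulate right-circular-transmit, linear-receive (RH, RV) channels from FP data.

    Received field = [S] @ (1, -1j) / sqrt(2), i.e. under the assumed convention:
        E_RH = (S_HH - 1j * S_HV) / sqrt(2)
        E_RV = (S_HV - 1j * S_VV) / sqrt(2)
    Reciprocity (S_VH == S_HV) is also assumed.
    """
    s_hh, s_hv, s_vv = (np.asarray(c, dtype=complex) for c in (s_hh, s_hv, s_vv))
    e_rh = (s_hh - 1j * s_hv) / np.sqrt(2)
    e_rv = (s_hv - 1j * s_vv) / np.sqrt(2)
    return e_rh, e_rv
```

For a flat plate (S_HH = S_VV = 1, S_HV = 0), this gives E_RH = 1/√2 and E_RV = −j/√2; a dihedral (S_VV = −1) flips the sign of E_RV, which is what later lets CP features separate odd- from even-bounce returns.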

The RCM consists of three identical C-band SAR satellites, which together shorten the revisit time [15]. The primary purposes of RCM are to ensure data continuity for RADARSAT users and to improve operational capability by collecting sub-weekly data (i.e., a four-day repeat cycle) for a variety of applications, including maritime surveillance, disaster management, and ecosystem monitoring [16]. Various polarization settings, including SP (i.e., HH, VV, and HV or VH), DP (i.e., HH-HV, VV-VH, and HH-VV), and CP (i.e., CTLR) modes, are available with RCM at varying spatial resolutions and noise floors. One major drawback, however, is the higher noise equivalent sigma zero (NESZ) of RCM compared to RADARSAT-2. In particular, NESZ values can vary between −25 and −17 dB for RCM data [16], resulting in decreased sensitivity to low backscattering values within a SAR image.
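The loss of sensitivity to weak backscatter can be illustrated with a simple additive-noise model, in which the NESZ adds to the signal power in linear units. This is a simplified sketch assuming NumPy; it ignores speckle and any noise subtraction a processor may apply, and the function names are hypothetical.

```python
import numpy as np


def db_to_linear(value_db):
    """Convert decibels to linear power units."""
    return 10.0 ** (np.asarray(value_db, dtype=float) / 10.0)


def linear_to_db(value_lin):
    """Convert linear power units to decibels."""
    return 10.0 * np.log10(np.asarray(value_lin, dtype=float))


def add_noise_floor(sigma0_db, nesz_db):
    """Expected measured backscatter (dB) when additive noise of power NESZ is present.

    Simplified model: noise power adds to signal power in linear units;
    speckle and processor-side noise subtraction are ignored.
    """
    return linear_to_db(db_to_linear(sigma0_db) + db_to_linear(nesz_db))
```

Under this model, a −20 dB target would read about −15.2 dB against a −17 dB noise floor and about −18.5 dB against a −24 dB floor, while targets well above the floor are barely affected.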

Previous studies found that cross-polarized SAR data (VH or HV) are the most useful SAR observations for crop mapping, though the inclusion of a second polarization (VV) can significantly increase classification accuracy [17,18]. For example, AAFC’s Annual Space-Based Crop Inventory uses DP SAR data (VV/VH from RADARSAT-2) along with optical observations to produce overall accuracies of 85% and above [19]. The addition of a third polarization, however, may improve the accuracies of some crop classes [18]. Although previous research has examined several aspects of SAR data, including the most useful wavelengths and polarizations, fewer investigations have identified the most effective polarimetric decomposition features for accurate crop mapping using either FP or CP SAR data [3]. For example, McNairn et al. (2009b) reported the superiority of decomposition features, such as Cloude-Pottier and Freeman-Durden, over the intensity channels for crop classification using ALOS PALSAR L-band data [18]. Charbonneau et al. (2010) also noted that Stokes parameters extracted from simulated CP data were useful for crop classification [12]. Nonetheless, no comprehensive examination of polarimetric decomposition parameters extracted from FP and CP SAR data for accurate crop mapping exists [3].
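Stokes parameters of CTLR data are computed from the two coherent receive channels. The sketch below assumes NumPy and a Raney-style sign convention, S3 = −2 Im(E_RH E_RV*); the function names are hypothetical. Because a single pixel is always fully polarized (m = 1), the Stokes vector is normally averaged over a window (e.g., a boxcar) before the degree of polarization m is computed.

```python
import numpy as np


def stokes_vector(e_rh, e_rv):
    """Per-pixel Stokes parameters (S0, S1, S2, S3) of the CTLR receive channels.

    Assumed sign convention: S3 = -2 * Im(E_RH * conj(E_RV)).
    """
    e_rh = np.asarray(e_rh, dtype=complex)
    e_rv = np.asarray(e_rv, dtype=complex)
    cross = e_rh * np.conj(e_rv)
    s0 = np.abs(e_rh) ** 2 + np.abs(e_rv) ** 2
    s1 = np.abs(e_rh) ** 2 - np.abs(e_rv) ** 2
    s2 = 2 * cross.real
    s3 = -2 * cross.imag
    return np.stack([s0, s1, s2, s3])


def degree_of_polarization(stokes):
    """m = sqrt(S1^2 + S2^2 + S3^2) / S0 for a Stokes vector that was already averaged."""
    s0, s1, s2, s3 = stokes
    return np.sqrt(s1 ** 2 + s2 ** 2 + s3 ** 2) / s0


# Usage: averaging an odd-bounce and an even-bounce return depolarizes the signal.
plate = stokes_vector(1 / np.sqrt(2), -1j / np.sqrt(2))
dihedral = stokes_vector(1 / np.sqrt(2), 1j / np.sqrt(2))
mean_stokes = (plate + dihedral) / 2
```

Each of the two pure targets is fully polarized, but their average has m = 0, which is the behavior that makes m useful as an indicator of random (volume-like) scattering.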

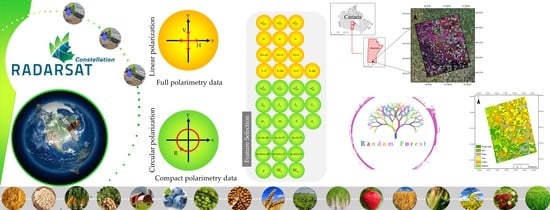

In this study, we undertake a much-needed investigation of the potential of polarimetric decomposition features extracted from FP RADARSAT-2 data and simulated CP data collected over an agricultural region near Winnipeg, Manitoba, Canada. In particular, the main purposes of this research are to:

- (1) confirm the necessity of multi-temporal SAR observations for capturing the phenological information of cropping systems;
- (2) investigate the effect of the difference in polarization between FP (RADARSAT-2) data and simulated HH/HV, VV/VH, and CP SAR data (to be collected by RCM) for complex crop classification;
- (3) examine the effect of differences in radiometry on crop mapping by simulating CP data at two NESZ levels, −19 dB and −24 dB;
- (4) identify the most useful polarimetric decomposition features that can be extracted from FP and CP SAR data; and
- (5) determine the ability of early- and mid-season PolSAR observations for crop classification.

The results of this study will advance our understanding of the use of CP SAR data to be collected by RCM for operational crop mapping, ahead of the availability of these important EO data.

5. Discussion

With only one image available, RADARSAT-2 FP data were unable to classify crops to an acceptable degree (highest overall accuracy = 56%), which is consistent with the results of previous studies [7,18]. Overall, user’s and producer’s accuracies improved when four SAR images were incorporated into the classification scheme. In particular, an overall accuracy of 80% was achieved with only 12 intensity images, an improvement of approximately 24 percentage points relative to the classification based on a single July observation (the most useful SAR observation in this study; see Table 4 and Figure 2). These results are likely due to the variation in the SAR backscattering responses of different crops caused by changes in their water content and structure from emergence through reproduction, seed development, and senescence. These results underscore the significance of exploiting crop-specific sensitivity (crop phenology) using multi-temporal SAR observations [7].
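Multi-temporal classification amounts to stacking co-registered acquisitions so that each pixel carries one feature per date and channel; four dates with three intensity channels each (e.g., HH, HV, and VV, an assumption about how the 12 images are composed) give 12 features per pixel. A minimal sketch, assuming NumPy and co-registered rasters; the function name and date labels are hypothetical.

```python
import numpy as np


def stack_multitemporal(images):
    """Stack co-registered rasters into a per-pixel feature matrix.

    `images` maps a date label to an array of shape (n_channels, rows, cols),
    e.g. HH, HV and VV intensities. Labels are sorted so that the column order
    (date-major, then channel) is reproducible.
    """
    dates = sorted(images)
    cube = np.concatenate([np.asarray(images[d], dtype=float) for d in dates], axis=0)
    return cube.reshape(cube.shape[0], -1).T   # shape (rows*cols, n_dates*n_channels)


# Four hypothetical acquisition dates x three intensity channels -> 12 features per pixel.
rng = np.random.default_rng(0)
images = {f"date{i}": rng.random((3, 2, 2)) for i in range(1, 5)}
features = stack_multitemporal(images)
```

The resulting (pixels × features) matrix is the usual input to a pixel-based classifier such as random forest; a single-date classification uses only three of its columns.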

The comparison of classifications using multi-temporal, multi-polarized data (FP SAR data) revealed the superiority of cross-polarized over co-polarized observations (see Table 5), which is supported by the RF variable importance analysis (see Figure 3). This is likely because cross-polarized observations are largely produced by volume scattering within the crop canopy and are therefore more sensitive to crop structure. Furthermore, as reported by a previous study [42], HV intensity is highly correlated with leaf area index (LAI). The HV-polarized signal is also less sensitive to the row direction of planted crops, which reduces the variation in the cross-polarized SAR backscattering signatures of the same crop planted in different row directions [7]. Regarding the CP intensity features,

was the most important feature according to the RF variable importance (see Figure 7), and it also produced the highest classification accuracies when intensity features were compared (see Table 8 and Table 9).

Notably, in the mid-to-late growing season, differences in the cross-polarized SAR observations of the various crop classes are potentially maximized by differences in both canopy structure and moisture content, thus enhancing the discrimination between these classes [7]. Some studies, such as [43], reported that late-July/early-August C-band data are the optimal SAR observations for crop mapping, as crops are then at various stages of development, such as seed development (e.g., corn and soybeans), ripening (e.g., canola), and senescence or harvest (e.g., wheat and oats) [7]. However, the phenological cycles of crops vary depending on local conditions (e.g., weather, soil water content) and management strategies (e.g., farmers’ decisions). For example, some crops may be planted much later than usual in years with extremely abnormal weather, such as a very long winter [44]. VV polarization (see Table 5, S2, and Figure 3) was found to be the second-best polarization and was approximately 14.5% more accurate in terms of overall accuracy than HH (i.e., S1) for crop mapping. These results support those of previous studies that confirmed the higher potential of HV and VV polarizations for crop mapping relative to HH polarization [7,45]. Notably, some features of the CP SAR data, such as

,

, and

, are sensitive to surface scattering [46] and were also found to be important CP SAR features (see Figure 7). This is particularly true for

, as it was among the five most important CP SAR features. This finding may be explained by the high sensitivity of S3 to crop biomass, as [26] obtained the best biomass inversion result with S3 among all CP SAR features.
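S3 enters CP decompositions directly through the ellipticity angle χ. As an illustration (not necessarily the CP feature set used in this study), the sketch below implements an m-χ decomposition from window-averaged Stokes parameters, assuming NumPy, the convention sin 2χ = −S3/(m S0) paired with S3 = −2 Im(E_RH E_RV*), and the amplitude form of the three components; some authors report the squared (power) form instead. The function name is hypothetical.

```python
import numpy as np


def m_chi(s0, s1, s2, s3):
    """m-chi decomposition of window-averaged CTLR Stokes parameters.

    Assumed convention: sin(2*chi) = -S3 / (m * S0); positive values indicate odd bounce.
    Returns (odd, even, volume) amplitudes; S0 is assumed to be strictly positive.
    """
    s0, s1, s2, s3 = (np.atleast_1d(np.asarray(v, dtype=float)) for v in (s0, s1, s2, s3))
    m = np.clip(np.sqrt(s1 ** 2 + s2 ** 2 + s3 ** 2) / s0, 0.0, 1.0)  # degree of polarization
    denom = m * s0
    # Guard against m = 0 (fully depolarized), where chi is undefined.
    sin2chi = np.divide(-s3, denom, out=np.zeros_like(s0), where=denom > 0)
    sin2chi = np.clip(sin2chi, -1.0, 1.0)
    odd = np.sqrt(m * s0 * (1 + sin2chi) / 2)
    even = np.sqrt(m * s0 * (1 - sin2chi) / 2)
    volume = np.sqrt(s0 * (1 - m))
    return odd, even, volume
```

With this convention, a flat-plate Stokes vector (1, 0, 0, −1) maps entirely to the odd-bounce component, whereas a fully depolarized vector (1, 0, 0, 0) maps entirely to the volume component.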

Our results revealed that polarimetric decomposition methods performed equally well as or slightly better than intensity channels for crop mapping with either FP or CP SAR data (e.g., see Table 9, S48 vs S51 and S52). In comparison, [18] reported the superiority of decomposition methods (e.g., the Krogager decomposition) over intensity channels for crop mapping using ALOS PALSAR L-band data (~4% to 7% improvements). Nevertheless, they noted that C-band intensity observations (i.e., ASAR and RADARSAT-1) were advantageous relative to the L-band decomposition methods for crop classification, possibly owing to the larger number of C-band than L-band acquisitions in that study. In general, our results indicate that polarimetric decompositions are useful for discriminating different crop types by characterizing the dominant scattering mechanisms at the various stages of crop development.

The results also indicated that only a marginal improvement in overall accuracy was achieved when polarimetric decomposition parameters were integrated with intensity observations. For example, S8 in Table 5 (all features extracted from the FP SAR data) was approximately 2.5% and 1.6% more accurate than S4 and S7, respectively. A similarly minimal gain was obtained by integrating intensity and decomposition features from the CP SAR data, relative to classifications based on either intensity (S37 vs S43 and S48 vs S54) or polarimetric decomposition features (S40 vs S43 and S52 vs S54). This finding is consistent with past studies that reported either a slight decline [18] or minimal improvement [44] in overall accuracy when polarimetric and intensity features from L-band SAR data were used synergistically for crop mapping.

Regarding polarimetric decomposition methods, the RF variable importance analysis indicated the highest contribution from volume scattering and the lowest from double-bounce scattering for crop mapping using both FP and CP SAR data. Previous research also found a low contribution of double-bounce scattering to crop mapping [18], which our study corroborates. Double-bounce scattering, however, is significant for monitoring flooded wetland vegetation and forests because of ground–trunk/stem interactions [47,48]. Surface/single-bounce scattering, which is produced by direct scattering from both the soil and the upper sections of crop canopies, was also found to be useful. Specifically, surface scattering is the dominant scattering mechanism during crop emergence (early in the growing season), because the ground is almost bare (very low vegetative cover) and, as such, depolarization and random scattering are negligible. By mid-season, odd-bounce scattering may still be present; however, it arises more from interaction of the incident wave with the top layers of the crop canopies than from surface scattering by the soil [43]. During the mid-season, volume scattering becomes dominant due to reproduction, stem elongation, and significant seed and leaf development, which increase the chance of multiple interactions of the SAR signal within the randomly oriented crop canopies.

The results of the H/A/alpha decomposition were well aligned with those of previous studies (e.g., [18,44]). Specifically, the RF variable importance indicated a greater contribution of the alpha angle than of entropy and anisotropy in this study (see Figure 3). McNairn et al. [18] also reported that the alpha angle had discrimination potential for all crops during the entire growing season. They highlighted that the alpha angle could distinguish crops with lower (e.g., forage crops) and higher (e.g., soybeans and corn) biomass at the beginning and end of the season. Canisius et al. (2018) [49] also found that the alpha angle was strongly correlated with the LAI of wheat and, to a lesser extent, canola. Similar to our results, recent studies found minimal discrimination potential of anisotropy for crop mapping using C-band [49] and L-band [44] SAR data. Interestingly,

was also ranked among the most important features of the CP SAR data (see Figure 7, c12). The

is similar to the alpha component of the Cloude-Pottier decomposition (i.e., an approximation to the

) and indicates the dominant scattering mechanism.
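Entropy (H), anisotropy (A), and mean alpha angle follow from the eigendecomposition of a window-averaged 3×3 coherency matrix T3. A minimal sketch, assuming NumPy, a Hermitian T3 in the Pauli basis (first element corresponding to S_HH + S_VV), and base-3 logarithms for H; the function name is hypothetical.

```python
import numpy as np


def h_a_alpha(t3):
    """Cloude-Pottier entropy, anisotropy, and mean alpha (degrees) from a 3x3 coherency matrix."""
    eigvals, eigvecs = np.linalg.eigh(t3)          # ascending eigenvalues for Hermitian input
    order = np.argsort(eigvals)[::-1]              # reorder to lambda1 >= lambda2 >= lambda3
    lam = np.clip(eigvals[order], 0.0, None)
    vecs = eigvecs[:, order]
    p = lam / lam.sum()                            # pseudo-probabilities of each mechanism
    nz = p > 0                                     # treat 0 * log(0) as 0
    entropy = -np.sum(p[nz] * np.log(p[nz]) / np.log(3))
    anisotropy = (lam[1] - lam[2]) / (lam[1] + lam[2]) if lam[1] + lam[2] > 0 else 0.0
    # alpha_i = arccos(|first Pauli component| of eigenvector i).
    alpha_i = np.degrees(np.arccos(np.clip(np.abs(vecs[0, :]), 0.0, 1.0)))
    mean_alpha = np.sum(p * alpha_i)
    return entropy, anisotropy, mean_alpha
```

A pure surface scatterer, T3 = diag(1, 0, 0), gives H = 0 and a mean alpha of 0°; adding power in the even-bounce and cross-polarized Pauli terms raises both H and alpha, which is why alpha tracks the shift from surface to volume scattering during the season.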

Comparison of classifications using simulated data at −19 and −24 dB revealed only marginal differences between the results of corresponding cases. This finding suggests that almost all crops had backscatter intensities higher than −19 dB (even at their early stages of development, for example, in May), that is, at or above the noise floor of the simulated SAR data in this study. Other studies, such as [42], also found HV backscatter intensities at or above −20 dB for similar crops (e.g., wheat and soybeans) during the early stages of crop phenology using RADARSAT-2 data. Moreover, the higher noise floor of the simulated SAR data at −19 dB is not problematic after the early stages of crop development (e.g., mid-season). The reason is that, although all crops generally produce low backscattering intensities during emergence, intensities increase significantly as vegetative density increases during the growing season [42], resulting in backscattering values considerably higher than −19 dB in all polarization channels.

Importantly, the results of the final classification scheme using object-based RF classification were promising, especially for both FP and CP SAR data (see Table 10). For example, using FP SAR data, 5 of 6 classes had producer’s accuracies approaching (i.e., wheat) or exceeding (i.e., oats, corn, canola, and soybeans) 85%. Overall, the target accuracy of 85% [7] was achieved in this classification scenario. CP SAR data were also useful, with high producer’s accuracies for most classes (e.g., corn, canola, wheat, and oats). The success of CP SAR data for crop mapping may be explained by the fact that a circularly polarized wave enhances interaction with the canopy and, as such, increases the chance of multiple scattering within crop canopies [50]. This is particularly true for crops such as canola and corn, which have randomly oriented stems, leaves, and seeds.

One possible reason for the lowest accuracy of the forage class in all three cases (see Figure 12) is the difficulty of assigning an explicit SAR backscattering signature to this class, as it includes several species. Conversely, the confusion matrices demonstrated that higher-biomass crops, such as corn and canola, were identified with producer’s accuracies above 85% using all three SAR data types (see Figure 12). This is because these crops develop a random structure after flowering, which produces a large amount of volume scattering and thus simplifies their discrimination using PolSAR imagery. Wheat and oats were also classified with accuracies exceeding 83% using both FP and CP SAR data; however, there was confusion between these two classes in some cases, because they have very similar canopy structures and similar growth stages, which result in similar SAR backscattering responses.
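Producer’s and user’s accuracies are read directly from a confusion matrix. A minimal sketch, assuming NumPy and rows as reference (ground-truth) classes with columns as mapped classes; the function name and the two-class counts in the usage note are hypothetical, not results from this study.

```python
import numpy as np


def accuracy_metrics(cm):
    """Overall, producer's, and user's accuracy from a confusion matrix.

    Assumes rows are reference (ground-truth) classes and columns are mapped classes.
    """
    cm = np.asarray(cm, dtype=float)
    correct = np.diag(cm)
    overall = correct.sum() / cm.sum()
    producers = correct / cm.sum(axis=1)   # 1 - omission error, per reference class
    users = correct / cm.sum(axis=0)       # 1 - commission error, per mapped class
    return overall, producers, users
```

For hypothetical wheat/oats counts [[8, 2], [1, 9]], overall accuracy is 0.85, producer’s accuracies are 0.8 and 0.9, and user’s accuracies are 8/9 and 9/11; the off-diagonal cells are where wheat-oats confusion would appear.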

Both wheat and oats are lower-biomass crops with less random structures, allowing deeper penetration of the SAR signal into their canopies. This enhances backscattering from the underlying soil, thus contributing to misclassification between the two classes [43]. Accordingly, these classes have been merged into a single class (cereals) in several crop classification studies (e.g., [7]). One interesting observation was the higher producer’s accuracy for wheat using CP SAR data compared to FP SAR data. Although there is no apparent reason for this observation, it agrees to some extent with [20], who found a higher correlation of wheat dry biomass with circular than with linear polarization ratios for the same test site in SMAPVEX12.

An improvement in overall accuracy could be achieved by including August SAR observations in the classification scheme. As mentioned above, the highest contrast in crop structures is expected at this time of the crop phenological cycle under normal weather conditions, given that some crops (e.g., corn and soybeans) are likely to be in their seed development stages, whereas others (e.g., wheat and oats) are in their senescence and harvest stages [7]. Further improvement in the overall accuracy of crop mapping is expected from the inclusion of multi-frequency SAR data. This is because, while C-band SAR data are useful for both lower- and higher-biomass crops, L-band data may improve the discrimination of higher-biomass crops (e.g., soybeans and corn), given the greater penetration depth of the L-band signal [18]. However, such multi-frequency SAR data must be acquired from different satellites, because currently operating SAR sensors do not collect multi-frequency data. Previous studies also reported that the synergistic use of optical and SAR data is promising, given that optical observations are sensitive to the spectral and biophysical characteristics of crops [51,52], while SAR observations are sensitive to their structural characteristics. This combination also addresses the intrinsic limitation of optical imagery (i.e., cloud and haze), which results in data gaps in classifications based only on optical data [7]. Notably, multi-source satellite imagery (optical and SAR) could be of particular use when multi-temporal data are lacking. An operational example of this approach is the annual crop inventory produced by Agriculture and Agri-Food Canada, which combines C-band RADARSAT-2 DP SAR data (VV/VH) with available optical data (depending on cloud cover; [19]). Although the results of this study suggest that the simulated CP SAR data will be of great significance for crop mapping, these results should be further validated in other case studies once real CP SAR data from the RADARSAT Constellation Mission become available.

6. Conclusions

In this study, the capability of early- to mid-season (i.e., May to July) RADARSAT-2 SAR images for crop mapping was examined in an agricultural region in Manitoba, Canada. Various classification scenarios were defined based on features extracted from full polarimetric SAR data, as well as from simulated dual and compact polarimetric SAR data. Both overall and individual class accuracies were compared for multi-temporal, multi-polarization SAR data using pixel-based and object-based random forest classification schemes.

The classification results revealed the importance of multi-temporal SAR observations for accurate crop mapping. Mid-season C-band SAR observations (late July in this study) were found to be advantageous for crop mapping, as crops have by then accumulated significant biomass and transitioned into varying developmental stages, such as reproduction, seed development, and senescence. The results demonstrated that decomposition parameters have a capability for crop mapping similar to that of intensity channels for both FP and CP SAR data. The synergistic use of polarimetric decomposition and intensity features resulted in a marginal improvement in overall accuracy relative to classifications based only on SAR intensity or only on decomposition features. The RF variable importance analysis found that, for both FP and CP SAR data, the volumetric component of the decomposition features and and intensities contribute more to crop mapping. The reason is that these features have the highest sensitivity to variations in crop structure due to crop type and changing phenology.

A Spearman correlation coefficient analysis revealed high correlations between features extracted from FP, DP, and CP SAR data. This explains the small gain in overall classification accuracy when highly correlated features were incorporated into the classification scheme. The highest classification accuracies of 88.2%, 82.1%, and 77.3% were achieved using uncorrelated features extracted from the FP, CP, and DP SAR data, respectively, with the object-based RF classification approach. Although the classification accuracy obtained from FP SAR data was higher than that of CP SAR data, the latter is more suitable for operational monitoring. The wider swath widths associated with CP will allow sub-weekly observations, thus providing data of high temporal resolution, an important factor for accurate crop mapping at a national scale.
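One common way to obtain an uncorrelated feature subset is to compute pairwise Spearman correlations and greedily drop any feature that is strongly correlated with one already kept. The sketch below assumes NumPy, no tied values (ranks come from a double argsort), and no constant-valued features; the 0.9 threshold and the function names are hypothetical placeholders, not the study’s settings.

```python
import numpy as np


def spearman_matrix(features):
    """Spearman rank correlation between columns of an (n_samples, n_features) array.

    Ranks are computed with a double argsort, which assumes no tied values.
    """
    x = np.asarray(features, dtype=float)
    ranks = np.argsort(np.argsort(x, axis=0), axis=0).astype(float)
    return np.corrcoef(ranks, rowvar=False)   # Pearson correlation of the ranks


def drop_correlated(features, threshold=0.9):
    """Greedily keep a feature only if |rho| <= threshold with every feature already kept."""
    rho = np.abs(spearman_matrix(features))
    kept = []
    for j in range(rho.shape[0]):
        if all(rho[j, k] <= threshold for k in kept):
            kept.append(j)
    return kept
```

Because Spearman correlation depends only on ranks, a feature and any monotonic transform of it (e.g., an intensity in linear units and the same intensity in dB) have ρ = 1, so only one of them is kept.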

The results of this research suggest that the CP mode available on the RADARSAT Constellation Mission will provide data of great interest for operational crop mapping. The analysis presented in this study contributes to further scientific research for agricultural applications and, importantly, demonstrates that RCM’s CP configuration will be a critical source of data to support Canada’s commitment to operational crop monitoring.