A Novel Method for Plane Extraction from Low-Resolution Inhomogeneous Point Clouds and its Application to a Customized Low-Cost Mobile Mapping System

Abstract

1. Introduction

2. Related Works

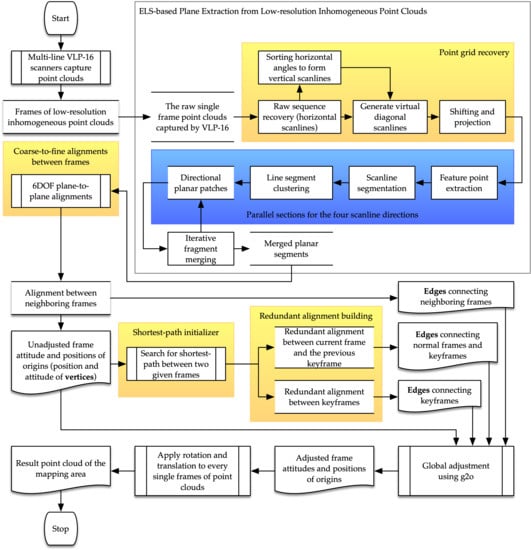

3. Plane Extraction Based on Enhanced Line Simplification Algorithm

3.1. Point Grid Recovery

3.2. Feature Point Extraction

3.3. Scanline Segment Seeking and Clustering

3.4. Multi-Direction Fragment Merging

4. Applications to Mobile Mapping

4.1. Individual Alignment between Frames

4.2. Overall Alignment Procedure

5. Sample Datasets and Results

5.1. Plane Extraction from Low-Resolution Inhomogeneous Point Clouds

5.2. A Dedicated Mobile Mapping System, S2DAS

5.3. Plane-to-Plane Alignments for IMU-free Mobile Mapping

6. Discussion

7. Conclusions

8. Patents

Author Contributions

Funding

Conflicts of Interest

| Scenario | Hallway | Laboratory | Lecture Theater | Stairwell |
|---|---|---|---|---|
| Photos |  |  |  |  |
| Raw Point Clouds |  |  |  |  |
| RANSAC 2 |  |  |  |  |
| VCCS 3 |  |  |  |  |
| Multi-scale Voxels 3 |  |  |  |  |
| Proposed Method |  |  |  |  |

| Scenario | Unclear Edges | Stairs as a Slope | Single-Line Fractions | Undivided Fractions |
|---|---|---|---|---|
| RANSAC |  |  |  |  |
| VCCS 2 |  |  |  |  |
| Multi-scale Voxels 2 |  |  |  |  |
| Proposed Method 3 |  |  |  |  |

| Error Type | Distance Measurement Error [cm] |
|---|---|
| Maximum Error | 15.58 |
| Minimum Error | −12.69 |
| Mean Error | 0.24 |
| Standard Deviation | 3.09 |
| Root-mean-square Error | 3.10 |
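
The mean, standard deviation, and root-mean-square error reported above can be cross-checked against each other. The sketch below is only an illustrative consistency check, not part of the authors' evaluation: it assumes the standard deviation is the population form, for which RMSE² = mean² + std² holds exactly (with a sample standard deviation the relation is only approximate). The variable names are hypothetical.

```python
import math

# Values copied from the error table above, in centimetres.
mean_error_cm = 0.24
std_dev_cm = 3.09
reported_rmse_cm = 3.10

# Assumption: population standard deviation, so RMSE^2 = mean^2 + std^2.
implied_rmse_cm = math.sqrt(mean_error_cm ** 2 + std_dev_cm ** 2)

# Difference between the implied and the reported RMSE.
difference_cm = abs(implied_rmse_cm - reported_rmse_cm)

print(f"implied RMSE: {implied_rmse_cm:.4f} cm")
print(f"difference from reported RMSE: {difference_cm:.4f} cm")
```

Under that assumption the implied value agrees with the reported 3.10 cm to within rounding, which suggests the three figures are mutually consistent and that the small positive mean contributes very little to the overall error.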

| Scenario | TLS Point Clouds 1 | S2DAS Point Clouds 2 |
|---|---|---|
| Lecture Theater 3 |  |  |
| Stairwell 3 |  |  |
| Terrace (outdoor) |  |  |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fan, W.; Shi, W.; Xiang, H.; Ding, K. A Novel Method for Plane Extraction from Low-Resolution Inhomogeneous Point Clouds and its Application to a Customized Low-Cost Mobile Mapping System. Remote Sens. 2019, 11, 2789. https://doi.org/10.3390/rs11232789