Measuring Change Using Quantitative Differencing of Repeat Structure-From-Motion Photogrammetry: The Effect of Storms on Coastal Boulder Deposits

Abstract

1. Introduction

2. Coastal Boulder Deposits

3. Study Area

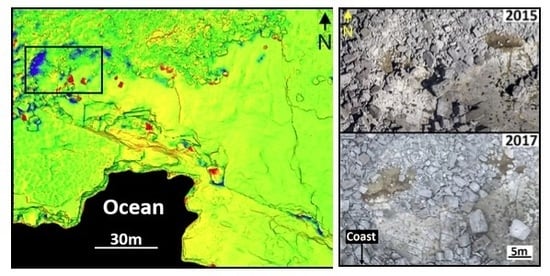

4. Materials and Methods

4.1. Image Acquisition

4.2. Image Optimisation and Distortion Correction

4.3. Model Generation Using Agisoft Photogrammetry Software

4.4. Quantitative Differencing Using CloudCompare

5. Results

5.1. Agisoft Models

5.2. Aligning Disparate Datasets with CloudCompare

5.3. Quantitative Differencing via CloudCompare

6. Discussion

6.1. Effective, Efficient Methodology for Quantitative Repeat Photogrammetric Analysis

6.2. Boulder Movement

6.3. Advantages and Disadvantages of CloudCompare

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Brunier, G.; Fleury, J.; Anthony, E.J.; Gardel, A.; Dussouillez, P. Close-range airborne Structure-from-Motion Photogrammetry for high-resolution beach morphometric surveys: Examples from an embayed rotating beach. Geomorphology 2016, 261, 76–88. [Google Scholar] [CrossRef]

- Casella, E.; Rovere, A.; Pedroncini, A.; Stark, C.P.; Casella, M.; Ferrari, M.; Firpo, M. Drones as tools for monitoring beach topography changes in the Ligurian Sea (NW Mediterranean). Geo-Mar. Lett. 2016, 36, 151–163. [Google Scholar] [CrossRef]

- Marteau, B.; Vericat, D.; Gibbins, C.; Batalla, R.J.; Green, D.R. Application of Structure-from-Motion photogrammetry to river restoration. Earth Surf. Process. Landf. 2017, 42, 503–515. [Google Scholar] [CrossRef] [Green Version]

- Woodget, A.S.; Austrums, R.; Maddock, I.P.; Habit, E. Drones and digital photogrammetry: From classifications to continuums for monitoring river habitat and hydromorphology. Wiley Interdiscip. Rev. Water 2017, 4, e1222. [Google Scholar] [CrossRef] [Green Version]

- Tamminga, A.D.; Eaton, B.C.; Hugenholtz, C.H. UAS-based remote sensing of fluvial change following an extreme flood event. Earth Surf. Process. Landf. 2015, 40, 1464–1476. [Google Scholar] [CrossRef]

- Marek, L.; Miřijovský, J.; Tuček, P. Monitoring of the Shallow Landslide Using UAV Photogrammetry and Geodetic Measurements BT. Engineering Geology for Society and Territory—Volume 2; Lollino, G., Giordan, D., Crosta, G.B., Corominas, J., Azzam, R., Wasowski, J., Sciarra, N., Eds.; Springer International Publishing: Cham, The Switzerland, 2015; pp. 113–116. [Google Scholar]

- Stumpf, A.; Malet, J.-P.; Kerle, N.; Niethammer, U.; Rothmund, S. Image-based mapping of surface fissures for the investigation of landslide dynamics. Geomorphology 2013, 186, 12–27. [Google Scholar] [CrossRef] [Green Version]

- Gomez, C.; Purdie, H. UAV-based photogrammetry and geocomputing for hazards and disaster risk monitoring–A review. Geo-Environ. Disasters 2016, 3, 23. [Google Scholar] [CrossRef] [Green Version]

- Bitelli, G.; Dubbini, M.; Zanutta, A. Terrestrial laser scanning and digital photogrammetry techniques to monitor landslide bodies. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2004, 35, 246–251. [Google Scholar]

- Lucieer, A.; Jong, S.M.; Turner, D. Mapping landslide displacements using Structure from Motion (SfM) and image correlation of multi-temporal UAV photography. Prog. Phys. Geogr. 2014, 38, 97–116. [Google Scholar] [CrossRef]

- Rossi, G.; Tanteri, L.; Tofani, V.; Vannocci, P.; Moretti, S.; Casagli, N. Multitemporal UAV surveys for landslide mapping and characterization. Landslides 2018, 15, 1045–1052. [Google Scholar] [CrossRef] [Green Version]

- Rossi, G.; Tanteri, L.; Salvatici, T.; Casagli, N. The use of multi-copter drones for landslide investigations. In Proceedings of the 3rd North American Symposium on Landslides, Roanoke, VA, USA, 4–8 June 2017; pp. 978–984. [Google Scholar]

- Suh, J.; Choi, Y. Mapping hazardous mining-induced sinkhole subsidence using unmanned aerial vehicle (drone) photogrammetry. Environ. Earth Sci. 2017, 76, 144. [Google Scholar] [CrossRef]

- Al-Halbouni, D.; Holohan, E.P.; Saberi, L.; Alrshdan, H.; Sawarieh, A.; Closson, D.; Walter, T.R.; Dahm, T. Sinkholes, subsidence and subrosion on the eastern shore of the Dead Sea as revealed by a close-range photogrammetric survey. Geomorphology 2017, 285, 305–324. [Google Scholar] [CrossRef] [Green Version]

- Gasperini, D.; Allemand, P.; Delacourt, C.; Grandjean, P. Potential and limitation of UAV for monitoring subsidence in municipal landfills. Int. J. Environ. Technol. Manag. 2014, 17, 1. [Google Scholar] [CrossRef]

- Gonçalves, J.A.; Henriques, R. UAV photogrammetry for topographic monitoring of coastal areas. ISPRS J. Photogramm. Remote Sens. 2015, 104, 101–111. [Google Scholar] [CrossRef]

- Barlow, J.; Gilham, J.; Ibarra Cofrã, I. Kinematic analysis of sea cliff stability using UAV photogrammetry. Int. J. Remote Sens. 2017, 38, 2464–2479. [Google Scholar] [CrossRef]

- Swirad, Z.M.; Rosser, N.J.; Brain, M.J. Identifying mechanisms of shore platform erosion using Structure-from-Motion (SfM) photogrammetry. Earth Surf. Process. Landf. 2019, 44, 1542–1588. [Google Scholar] [CrossRef] [Green Version]

- Bryson, M.; Johnson-Roberson, M.; Pizarro, O.; Williams, S. Automated registration for multi-year robotic surveys of marine benthic habitats. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 3344–3349. [Google Scholar]

- Bryson, M.; Johnson-Roberson, M.; Pizarro, O.; Williams, S. Repeatable robotic surveying of marine benthic habitats for monitoring long-term change. In Proceedings of the Robotics Science and Systems, Sydney, Australia, 9–13 July 2012; pp. 3–7. [Google Scholar]

- Joyce, K.E.; Duce, S.; Leahy, S.M.; Leon, J.; Maier, S.W. Principles and practice of acquiring drone-based image data in marine environments. Mar. Freshw. Res. 2019, 70, 952–963. [Google Scholar] [CrossRef]

- Leon, J.X.; Roelfsema, C.M.; Saunders, M.I.; Phinn, S.R. Measuring coral reef terrain roughness using ‘Structure-from-Motion’close-range photogrammetry. Geomorphology 2015, 242, 21–28. [Google Scholar] [CrossRef]

- Burns, J.H.R.; Delparte, D.; Gates, R.D.; Takabayashi, M. Integrating structure-from-motion photogrammetry with geospatial software as a novel technique for quantifying 3D ecological characteristics of coral reefs. Life Environ. 2015, 3, e1077. [Google Scholar] [CrossRef]

- Casella, E.; Collin, A.; Harris, D.; Ferse, S.; Bejarano, S.; Parravicini, V.; Hench, J.L.; Rovere, A. Mapping coral reefs using consumer-grade drones and structure from motion photogrammetry techniques. Coral Reefs 2017, 36, 269–275. [Google Scholar] [CrossRef]

- Grenzdörffer, G.J.; Engel, A.; Teichert, B. The photogrammetric potential of low-cost UAVs in forestry and agriculture. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 31, 1207–1214. [Google Scholar]

- Lisein, J.; Pierrot-Deseilligny, M.; Bonnet, S.; Lejeune, P. A photogrammetric workflow for the creation of a forest canopy height model from small unmanned aerial system imagery. Forests 2013, 4, 922–944. [Google Scholar] [CrossRef] [Green Version]

- Paneque-Gálvez, J.; McCall, M.K.; Napoletano, B.M.; Wich, S.A.; Koh, L.P. Small drones for community-based forest monitoring: An assessment of their feasibility and potential in tropical areas. Forests 2014, 5, 1481–1507. [Google Scholar] [CrossRef] [Green Version]

- Hixon, S.W.; Lipo, C.P.; Hunt, T.L.; Lee, C. Using Structure from Motion mapping to record and analyze details of the Colossal Hats (Pukao) of monumental statues on Rapa Nui (Easter Island). Adv. Archaeol. Pract. 2018, 6, 42–57. [Google Scholar] [CrossRef] [Green Version]

- Sapirstein, P. Accurate measurement with photogrammetry at large sites. J. Archaeol. Sci. 2016, 66, 137–145. [Google Scholar] [CrossRef]

- Meyer, D.E.; Lo, E.; Afshari, S.; Vaughan, A.; Rissolo, D.; Kuester, F. Utility of low-cost drones to generate 3D models of archaeological sites from multisensor data. SAA Archaeol. Rec. Mag. Soc. Am. Archaeol. 2016, 16, 22–24. [Google Scholar]

- Balletti, C.; Guerra, F.; Scocca, V.; Gottardi, C. 3D integrated methodologies for the documentation and the virtual reconstruction of an archaeological site. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 215–222. [Google Scholar] [CrossRef] [Green Version]

- Verhoeven, G. Taking computer vision aloft–archaeological three-dimensional reconstructions from aerial photographs with photoscan. Archaeol. Prospect. 2011, 18, 67–73. [Google Scholar] [CrossRef]

- Nikolakopoulos, K.G.; Soura, K.; Koukouvelas, I.K.; Argyropoulos, N.G. UAV vs classical aerial photogrammetry for archaeological studies. J. Archaeol. Sci. Rep. 2017, 14, 758–773. [Google Scholar] [CrossRef]

- Smith, N.G.; Passone, L.; al-Said, S.; al-Farhan, M.; Levy, T.E. Drones in Archaeology: Integrated Data Capture, Processing, and Dissemination in the al-Ula Valley, Saudi Arabia. Near East. Archaeol. 2014, 77, 176–181. [Google Scholar] [CrossRef]

- Semaan, L.; Salama, M.S. Underwater Photogrammetric Recording at the Site of Anfeh, Lebanon BT. In 3D Recording and Interpretation for Maritime Archaeology; McCarthy, J.K., Benjamin, J., Winton, T., Van Duivenvoorde, W., Eds.; Springer International Publishing: Cham, The Switzerland, 2019; pp. 67–87. ISBN 978-3-030-03635-5. [Google Scholar]

- Yamazaki, F.; Matsuda, T.; Denda, S.; Liu, W. Construction of 3D models of buildings damaged by earthquakes using UAV aerial images. In Proceedings of the Tenth Pacific Conference Earthquake Engineering Building an Earthquake-Resilient Pacific, McKinnon, Australia, 6–8 November 2015; pp. 6–8. [Google Scholar]

- Ridolfi, E.; Buffi, G.; Venturi, S.; Manciola, P. Accuracy analysis of a dam model from drone surveys. Sensors 2017, 17, 1777. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Pacifici, F.; Chini, M.; Emery, W.J. A neural network approach using multi-scale textural metrics from very high-resolution panchromatic imagery for urban land-use classification. Remote Sens. Environ. 2009, 113, 1276–1292. [Google Scholar] [CrossRef]

- Yeum, C.M.; Choi, J.; Dyke, S.J. Autonomous image localization for visual inspection of civil infrastructure. Smart Mater. Struct. 2017, 26, 35051. [Google Scholar] [CrossRef]

- Francesco, G.; Emanuele, B. Inspection of Components with the Support of the Drones. Int. Res. J. Eng. Technol. 2018, 5, 1784–1789. [Google Scholar]

- Eltner, A.; Kaiser, A.; Abellan, A.; Schindewolf, M. Time lapse structure-from-motion photogrammetry for continuous geomorphic monitoring. Earth Surf. Process. Landf. 2017, 42, 2240–2253. [Google Scholar] [CrossRef]

- Pádua, L.; Vanko, J.; Hruška, J.; Adão, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391. [Google Scholar] [CrossRef]

- Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97. [Google Scholar] [CrossRef] [Green Version]

- Suomalainen, J.; Anders, N.; Iqbal, S.; Roerink, G.; Franke, J.; Wenting, P.; Hünniger, D.; Bartholomeus, H.; Becker, R.; Kooistra, L. A Lightweight Hyperspectral Mapping System and Photogrammetric Processing Chain for Unmanned Aerial Vehicles. Remote Sens. 2014, 6, 11013–11030. [Google Scholar] [CrossRef] [Green Version]

- Nolan, M.; Larsen, C.F.; Sturm, M. Mapping snow-depth from manned-aircraft on landscape scales at centimeter resolution using Structure-from-Motion photogrammetry. Cryosph. Discuss. TCD 2015, 9, 333–381. [Google Scholar] [CrossRef]

- Gillan, K.J.; Karl, W.J.; Elaksher, A.; Duniway, C.M. Fine-Resolution Repeat Topographic Surveying of Dryland Landscapes Using UAS-Based Structure-from-Motion Photogrammetry: Assessing Accuracy and Precision against Traditional Ground-Based Erosion Measurements. Remote Sens. 2017, 9, 437. [Google Scholar] [CrossRef] [Green Version]

- Micheletti, N.; Chandler, J.H.; Lane, S.N. Structure from motion (SFM) photogrammetry. In Geomorphological Techniques, Online Edition; Clarke, L.E., Nield, J.M., Eds.; Society for Geomorphology: London, UK, 2015; Chapter 2, Section 2.2; ISSN 2047-0371. [Google Scholar]

- Girardeau-Montaut, D.C. 3D Point Cloud and Mesh Processing Software. Telecom ParisTechs 2017. Available online: https://pastel.archives-ouvertes.fr/pastel-00001745/ (accessed on 1 November 2019).

- Girardeau-Montaut, D. Cloud compare—3d point cloud and mesh processing software. Open Source Project. 2019. Available online: https://www.danielgm.net/cc/ (accessed on 1 November 2019).

- Cosso, T.; Ferrando, I.; Orlando, A. Surveying and mapping a cave using 3D Laser scanner: The open challenge with free and open source software. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 40, 181–186. [Google Scholar] [CrossRef] [Green Version]

- Będkowski, J.; Pełka, M.; Majek, K.; Fitri, T.; Naruniec, J. Open source robotic 3D mapping framework with ROS—Robot Operating System, PCL—Point Cloud Library and Cloud Compare. In Proceedings of the 2015 International Conference on Electrical Engineering and Informatics (ICEEI), Denpasar, Indonesia, 10–11 August 2015; pp. 644–649. [Google Scholar]

- Lieberwirth, U.; Metz, M.; Neteler, M.; Kühnle, K. Applying low budget equipment and open source software for high resolution documentation of archaeological stratigraphy and features. In Papers from the 41st Conference on Computer Applications and Quantitative Methods in Archaeology; Amsterdam University Press: Amsterdam, The Netherlands, 2013; pp. 55–64. [Google Scholar]

- Abed, F.M.; Mohammed, M.U.; Mohammed, M.U.; Kadhim, S.J. Architectural and Cultural Heritage conservation using low-cost cameras. Appl. Res. J. 2017, 3, 376–384. [Google Scholar]

- Stumpf, A.; Malet, J.-P.; Allemand, P.; Pierrot-Deseilligny, M.; Skupinski, G. Ground-based multi-view photogrammetry for the monitoring of landslide deformation and erosion. Geomorphology 2015, 231, 130–145. [Google Scholar] [CrossRef]

- Esposito, G.; Mastrorocco, G.; Salvini, R.; Oliveti, M.; Starita, P. Application of UAV photogrammetry for the multi-temporal estimation of surface extent and volumetric excavation in the Sa Pigada Bianca open-pit mine, Sardinia, Italy. Environ. Earth Sci. 2017, 76, 103. [Google Scholar] [CrossRef]

- Duffy, P.J.; Shutler, D.J.; Witt, J.M.; DeBell, L.; Anderson, K. Tracking Fine-Scale Structural Changes in Coastal Dune Morphology Using Kite Aerial Photography and Uncertainty-Assessed Structure-from-Motion Photogrammetry. Remote Sens. 2018, 10, 1494. [Google Scholar] [CrossRef] [Green Version]

- Tung, W.Y.; Nagendran, S.K.; Mohamad Ismail, M.A. 3D rock slope data acquisition by photogrammetry approach and extraction of geological planes using FACET plugin in CloudCompare. IOP Conf. Ser. Earth Environ. Sci. 2018, 169, 012051. [Google Scholar] [CrossRef]

- Hastedt, H.; Ekkela, T.; Luhmann, T. Evaluation of the quality of action cameras with wide-Angle lenses in uav photogrammetry. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 2016, 851–859. [Google Scholar] [CrossRef]

- Wierzbicki, D. Multi-camera imaging system for UAV photogrammetry. Sensors 2018, 18, 2433. [Google Scholar] [CrossRef] [Green Version]

- Di Franco, C.; Buttazzo, G. Coverage Path Planning for UAVs Photogrammetry with Energy and Resolution Constraints. J. Intell. Robot. Syst. Theory Appl. 2016, 83, 445–462. [Google Scholar] [CrossRef]

- Mustard, J.F.; Adler, M.; Allwood, A.; Bass, D.S.; Beaty, D.W.; Bell, J.F.; Brinckerhoff, W.B.; Carr, M.; Des Marais, D.J.; Drake, B.; et al. Report of the Mars 2020 Science Definition Team. Mars Explor. Progr. Anal. Gr. 2013, 150, 155–205. [Google Scholar]

- Zhang, H.; Aldana-Jague, E.; Clapuyt, F.; Wilken, F.; Vanacker, V.; Van Oost, K. Evaluating the potential of post-processing kinematic (PPK) georeferencing for UAV-based structure-from-motion (SfM) photogrammetry and surface change detection. Earth Surf. Dyn. 2019, 7, 827. [Google Scholar] [CrossRef] [Green Version]

- Wierzbicki, D.; Nienaltowski, M. Accuracy Analysis of a 3D Model of Excavation, Created from Images Acquired with an Action Camera from Low Altitudes. ISPRS Int. J. Geo-Inf. 2019, 8, 83. [Google Scholar] [CrossRef] [Green Version]

- Mosbrucker, A.R.; Major, J.J.; Spicer, K.R.; Pitlick, J. Camera system considerations for geomorphic applications of SfM photogrammetry. Earth Surf. Process. Landf. 2017, 42, 969–986. [Google Scholar] [CrossRef] [Green Version]

- Cox, R.; Jahn, K.L.; Watkins, O.G.; Cox, P. Extraordinary boulder transport by storm waves (west of Ireland, winter 2013–2014), and criteria for analysing coastal boulder deposits. Earth-Sci. Rev. 2018, 177, 623–636. [Google Scholar] [CrossRef]

- Nott, J. Extremely high-energy wave deposits inside the Great Barrier Reef, Australia: Determining the cause—tsunami or tropical cyclone. Mar. Geol. 1997, 141, 193–207. [Google Scholar] [CrossRef]

- Kennedy, A.B.; Mori, N.; Zhang, Y.; Yasuda, T.; Chen, S.-E.; Tajima, Y.; Pecor, W.; Toride, K. Observations and modeling of coastal boulder transport and loading during Super Typhoon Haiyan. Coast. Eng. J. 2016, 58, 1640004. [Google Scholar] [CrossRef]

- Kennedy, A.B.; Mori, N.; Yasuda, T.; Shimozono, T.; Tomiczek, T.; Donahue, A.; Shimura, T.; Imai, Y. Extreme block and boulder transport along a cliffed coastline (Calicoan Island, Philippines) during Super Typhoon Haiyan. Mar. Geol. 2017, 383, 65–77. [Google Scholar] [CrossRef] [Green Version]

- Hall, A.M.; Hansom, J.D.; Williams, D.M.; Jarvis, J. Distribution, geomorphology and lithofacies of cliff-top storm deposits: Examples from the high-energy coasts of Scotland and Ireland. Mar. Geol. 2006, 232, 131–155. [Google Scholar] [CrossRef]

- Williams, D.M.; Hall, A.M. Cliff-top megaclast deposits of Ireland, a record of extreme waves in the North Atlantic—storms or tsunamis? Mar. Geol. 2004, 206, 101–117. [Google Scholar] [CrossRef]

- Miller, S.; Rowe, D.-A.; Brown, L.; Mandal, A. Wave-emplaced boulders: Implications for development of prime real estate seafront, North Coast Jamaica. Bull. Eng. Geol. Environ. 2014, 73, 109–122. [Google Scholar] [CrossRef]

- Barbano, M.S.; Pirrotta, C.; Gerardi, F. Large boulders along the south-eastern Ionian coast of Sicily: Storm or tsunami deposits? Mar. Geol. 2010, 275, 140–154. [Google Scholar] [CrossRef]

- Biolchi, S.; Furlani, S.; Antonioli, F.; Baldassini, N.; Deguara, J.C.; Devoto, S.; Stefano, A.D.; Evans, J.; Gambin, T.; Gauci, R. Boulder accumulations related to extreme wave events on the eastern coast of Malta. Nat. Hazards Earth Syst. 2016. [Google Scholar] [CrossRef] [Green Version]

- Mastronuzzi, G.; Sansò, P. Boulders transport by catastrophic waves along the Ionian coast of Apulia (southern Italy). Mar. Geol. 2000, 170, 93–103. [Google Scholar] [CrossRef]

- Mastronuzzi, G.; Pignatelli, C.; Sansò, P.; Selleri, G. Boulder accumulations produced by the 20th of February, 1743 tsunami along the coast of southeastern Salento (Apulia region, Italy). Mar. Geol. 2007, 242, 191–205. [Google Scholar] [CrossRef]

- Cox, R.; Zentner, D.B.; Kirchner, B.J.; Cook, M.S. Boulder ridges on the Aran Islands (Ireland): Recent movements caused by storm waves, not tsunamis. J. Geol. 2012, 120, 249–272. [Google Scholar] [CrossRef] [Green Version]

- Autret, R.; Dodet, G.; Fichaut, B.; Suanez, S.; David, L.; Leckler, F.; Ardhuin, F.; Ammann, J.; Grandjean, P.; Allemand, P. A comprehensive hydro-geomorphic study of cliff-top storm deposits on Banneg Island during winter 2013–2014. Mar. Geol. 2016, 382, 37–55. [Google Scholar] [CrossRef] [Green Version]

- Etienne, S.; Paris, R. Boulder accumulations related to storms on the south coast of the Reykjanes Peninsula (Iceland). Geomorphology 2010, 114, 55–70. [Google Scholar] [CrossRef]

- Nott, J. The tsunami hypothesis—Comparisons of the field evidence against the effects, on the Western Australian coast, of some of the most powerful storms on Earth. Mar. Geol. 2004, 208, 1–12. [Google Scholar] [CrossRef]

- Shah-hosseini, M.; Morhange, C.; Beni, A.N.; Marriner, N.; Lahijani, H.; Hamzeh, M.; Sabatier, F. Coastal boulders as evidence for high-energy waves on the Iranian coast of Makran. Mar. Geol. 2011, 290, 17–28. [Google Scholar] [CrossRef]

- Hoffmann, G.; Reicherter, K.; Wiatr, T.; Grützner, C.; Rausch, T. Block and boulder accumulations along the coastline between Fins and Sur (Sultanate of Oman): Tsunamigenic remains? Nat. Hazards 2013, 65, 851–873. [Google Scholar] [CrossRef]

- Boesl, F.; Engel, M.; Eco, R.C.; Galang, J.N.B.; Gonzalo, L.A.; Llanes, F.; Quix, E.; Brückner, H. Digital mapping of coastal boulders—high-resolution data acquisition to infer past and recent transport dynamics. Sedimentology 2019. [Google Scholar] [CrossRef]

- Khan, S.; Robinson, E.; Rowe, D.-A.; Coutou, R. Size and mass of shoreline boulders moved and emplaced by recent hurricanes, Jamaica. Z. Geomorphol. Suppl. Issues 2010, 54, 281–299. [Google Scholar] [CrossRef]

- Glumac, B.; Curran, A. Documenting the Generation and Transport of Large Rock Boulders by Storm Waves Along the High-energy Southern Coast of San Salvador Island, Bahamas (EP23C-2296). In Proceedings of the 2018 AGU Fall Meeting, Washington, DC, USA, 10–14 December 2018. [Google Scholar]

- Wilson, K.; Hassenruck-Gudipati, H.J.; Mason, J.; Schroeder, C.L.; Smith, B.; Mohrig, D.C. Coastal impacts from far field storms-Evidence from Eleuthera, The Bahamas (EP13A-06). Presented at the 2018 AGU Fall Meeting, Washington, DC, USA, 10–14 December 2018. [Google Scholar]

- Cox, R.; Hearty, P.J.; Russell, D.; Edwards, K.R. Comparison of coastal boulder deposits (Holocene Age) on Eleuthera, Bahamas, with storm-transported boulders on Aran Islands, Ireland. Available online: https://gsa.confex.com/gsa/2016AM/webprogram/Paper281076.html (accessed on 30 September 2016).

- Salzmann, L.; Green, A. Boulder emplacement on a tectonically stable, wave-dominated coastline, Mission Rocks, northern KwaZulu-Natal, South Africa. Mar. Geol. 2012, 323, 95–106. [Google Scholar] [CrossRef]

- Cox, R.; O’Boyle, L.; Cytrynbaum, J. Imbricated Coastal Boulder Deposits are Formed by Storm Waves, and Can Preserve a Long-Term Storminess Record. Sci. Rep. 2019, 9, 10784. [Google Scholar] [CrossRef]

- Fichaut, B.; Suanez, S. Quarrying, transport and deposition of cliff-top storm deposits during extreme events: Banneg Island, Brittany. Mar. Geol. 2011, 283, 36–55. [Google Scholar] [CrossRef]

- Lorang, M.S. A wave-competence approach to distinguish between boulder and megaclast deposits due to storm waves versus tsunamis. Mar. Geol. 2011, 283, 90–97. [Google Scholar] [CrossRef]

- Medina, F.; Mhammdi, N.; Chiguer, A.; Akil, M.; Jaaidi, E.B. The Rabat and Larache boulder fields; new examples of high-energy deposits related to storms and tsunami waves in north-western Morocco. Nat. Hazards 2011, 59, 725. [Google Scholar] [CrossRef]

- Switzer, A.D.; Burston, J.M. Competing mechanisms for boulder deposition on the southeast Australian coast. Geomorphology 2010, 114, 42–54. [Google Scholar] [CrossRef]

- Scheffers, A.M.; Kinis, S. Stable imbrication and delicate/unstable settings in coastal boulder deposits: Indicators for tsunami dislocation? Quat. Int. 2014, 332, 73–84. [Google Scholar] [CrossRef]

- Paris, R.; Naylor, L.A.; Stephenson, W.J. Boulders as a Signature of Storms on Rock Coasts. Mar. Geogl. 2011, 283, 1–11. [Google Scholar] [CrossRef]

- Erdmann, W.; Kelletat, D.; Scheffers, A. Boulder transport by storms—Extreme-waves in the coastal zone of the Irish west coast. Mar. Geol. 2018, 399, 1–13. [Google Scholar] [CrossRef]

- Schmitt, P.; Cox, R.; Dias, F.; O’Boyle, L.; Whittaker, T. Field measurements of extreme waves in the intertidal zone (EGU2019-15785). In Proceedings of the Geophys Research Abstracts, Aran Islands, Ireland, 7–12 April 2019. [Google Scholar]

- Langridge, D. Limestone pavement patterns on the Island of Inishmore Co. Galway. Irish Geogr. 1971, 6, 282–293. [Google Scholar] [CrossRef]

- Scheffers, A.; Kelletat, D.; Haslett, S.; Scheffers, S.; Browne, T. Coastal boulder deposits in Galway Bay and the Aran Islands, western Ireland. Z. Geomorphol. Suppl. Issues 2010, 54, 247–279. [Google Scholar] [CrossRef]

- Cox, R.; Ardhuin, F.; Dias, F.; Autret, R.; Beisiegel, N.; Earlie, C.S.; Herterich, J.G.; Kennedy, A.; Paris, R.; Raby, A.; et al. Boulders deposited by storm waves can be misinterpreted as tsunami-related because commonly used hydrodynamic equations are flawed. Front. Mar. Sci. Rev. 2012, 63, 5–30. [Google Scholar]

- Cox, R. Movie Man of Aran as a documentary source for studying boulder transport by storm waves. In Proceedings of the 2013 GSA Annual Meeting in Denver, Denver, CO, USA, 27–30 October 2013. [Google Scholar]

- Mohan, M.; Silva, C.A.; Klauberg, C.; Jat, P.; Catts, G.; Cardil, A.; Hudak, A.T.; Dia, M. Individual tree detection from unmanned aerial vehicle (UAV) derived canopy height model in an open canopy mixed conifer forest. Forests 2017, 8, 340. [Google Scholar] [CrossRef] [Green Version]

- Henry, J.; Malet, J.; Maquaire, O.; Grussenmeyer, P. The use of small-format and low-altitude aerial photos for the realization of high-resolution DEMs in mountainous areas: Application to the Super-Sauze earthflow (Alpes-de-Haute-Provence, France). Earth Surf. Process. Landf. J. Br. Geomorphol. Res. Gr. 2002, 27, 1339–1350. [Google Scholar] [CrossRef] [Green Version]

- Torres-Sánchez, J.; López-Granados, F.; Borra-Serrano, I.; Peña, J.M. Assessing UAV-collected image overlap influence on computation time and digital surface model accuracy in olive orchards. Precis. Agric. 2018, 19, 115–133. [Google Scholar] [CrossRef]

- Mesas-Carrascosa, F.-J.; Notario García, M.; Meroño de Larriva, J.; García-Ferrer, A. An analysis of the influence of flight parameters in the generation of unmanned aerial vehicle (UAV) orthomosaicks to survey archaeological areas. Sensors 2016, 16, 1838. [Google Scholar] [CrossRef] [Green Version]

- Agisoft LLC Agisoft Metashape User Manual Professional Edition, Version 1.5. Available online: https://www. agisoft.com/pdf/metashape-pro_1_5_en. pdf (accessed on 2 June 2018).

- Barazzetti, L.; Scaioni, M.; Remondino, F. Orientation and 3D modelling from markerless terrestrial images: Combining accuracy with automation. Photogramm. Rec. 2010, 25, 356–381. [Google Scholar] [CrossRef]

- Nikolakopoulos, K.; Kavoura, K.; Depountis, N.; Kyriou, A.; Argyropoulos, N.; Koukouvelas, I.; Sabatakakis, N. Preliminary results from active landslide monitoring using multidisciplinary surveys. Eur. J. Remote Sens. 2017, 50, 280–299. [Google Scholar] [CrossRef] [Green Version]

- Jin, H. Metadata Based Alignment of Distorted Images. U.S. Patent US8830347B2, 9 September 2014. [Google Scholar]

- Jin, H. Metadata-Driven Method and Apparatus for Automatically Aligning Distorted Images. U.S. Patent US8368773B1, 5 February 2013. [Google Scholar]

- Chen, S.; Jin, H.; Chien, J.-C.; Goldman, D.R. Methods and Apparatus for Camera Calibration Based on Multiview Image Geometry. U.S. Patent US8368762B1, 5 February 2013. [Google Scholar]

- Jin, H. Method and Apparatus for Aligning and Unwarping Distorted Images. U.S. Patent US8391640B1, 12 December 2013. [Google Scholar]

- Paris, S.; Kee, E.R.; Chen, S.; Wang, J. Lens Modeling. U.S. Patent US9235063B2, 12 January 2016. [Google Scholar]

- Chen, S.; Chien, J.-C.; Jin, H. Method and Apparatus for Matching Image Metadata to a Profile Database to Determine Image Processing Parameters. U.S. Patent US8194993B1, 5 June 2012. [Google Scholar]

- Chen, S.; Chan, E.; Jin, H.; Chien, J.-C. Methods and Apparatus for Retargeting and Prioritized Interpolation of Lens Profiles. U.S. Patent US20130124159A1, 4 July 2013. [Google Scholar]

- Harwin, S.; Lucieer, A.; Osborn, J. The impact of the calibration method on the accuracy of point clouds derived using unmanned aerial vehicle multi-view stereopsis. Remote Sens. 2015, 7, 11933–11953. [Google Scholar] [CrossRef] [Green Version]

- Sanz-Ablanedo, E.; Chandler, J.H.; Rodríguez-Pérez, J.R.; Ordóñez, C. Accuracy of Unmanned Aerial Vehicle (UAV) and SfM photogrammetry survey as a function of the number and location of ground control points used. Remote Sens. 2018, 10, 1606. [Google Scholar] [CrossRef] [Green Version]

- Bakker, M.; Lane, S.N. Archival photogrammetric analysis of river–floodplain systems using Structure from Motion (SfM) methods. Earth Surf. Process. Landf. 2017, 42, 1274–1286. [Google Scholar] [CrossRef] [Green Version]

- James, M.R.; Robson, S.; d’Oleire-Oltmanns, S.; Niethammer, U. Optimising UAV topographic surveys processed with structure-from-motion: Ground control quality, quantity and bundle adjustment. Geomorphology 2017, 280, 51–66. [Google Scholar] [CrossRef] [Green Version]

- Terpstra, T.; Dickinson, J.; Hashemian, A. Using Multiple Photographs and USGS LiDAR to Improve Photogrammetric Accuracy. SAE Int. J. Trans. Saf. 2018, 6, 193–216. [Google Scholar] [CrossRef]

- Jeong, H.; Ahn, H.; Park, J.; Kim, H.; Kim, S.; Lee, Y.; Choi, C. Feasibility of Using an Automatic Lens Distortion Correction (ALDC) Camera in a Photogrammetric UAV System. J. Korean Soc. Surv. Geod. Photogramm. Cartogr. 2015, 33, 475–483. [Google Scholar] [CrossRef] [Green Version]

- Verma, A.K.; Bourke, M.C. A method based on structure-from-motion photogrammetry to generate sub-millimetre-resolution digital elevation models for investigating rock breakdown features. Earth Surf. Dyn. 2019, 7, 45–66. [Google Scholar] [CrossRef] [Green Version]

- Neale, W.T.; Hessel, D.; Terpstra, T. Photogrammetric measurement error associated with lens distortion. SAE Tech. Pap. 2011. [Google Scholar] [CrossRef] [Green Version]

- Barbasiewicz, A.; Widerski, T.; Daliga, K. The analysis of the accuracy of spatial models using photogrammetric software: Agisoft Photoscan and Pix4D. In Proceedings of the E3S Web of Conferences, Gdansk, Poland, 22–25 June 2017; p. 12. [Google Scholar]

- Jaud, M.; Passot, S.; Le Bivic, R.; Delacourt, C.; Grandjean, P.; Le Dantec, N. Assessing the accuracy of high resolution digital surface models computed by PhotoScan® and MicMac® in sub-optimal survey conditions. Remote Sens. 2016, 8, 465.

- Li, X.Q.; Chen, Z.A.; Zhang, L.T.; Jia, D. Construction and Accuracy Test of a 3D Model of Non-Metric Camera Images Using Agisoft PhotoScan. Procedia Environ. Sci. 2016, 36, 184–190.

- Tavani, S.; Granado, P.; Corradetti, A.; Girundo, M.; Iannace, A.; Arbués, P.; Muñoz, J.A.; Mazzoli, S. Building a virtual outcrop, extracting geological information from it, and sharing the results in Google Earth via OpenPlot and Photoscan: An example from the Khaviz Anticline (Iran). Comput. Geosci. 2014, 63, 44–53.

- Esposito, G.; Salvini, R.; Matano, F.; Sacchi, M.; Danzi, M.; Somma, R.; Troise, C. Multitemporal monitoring of a coastal landslide through SfM-derived point cloud comparison. Photogramm. Rec. 2017, 32, 459–479.

- Smith, M.W.; Vericat, D. From experimental plots to experimental landscapes: Topography, erosion and deposition in sub-humid badlands from structure-from-motion photogrammetry. Earth Surf. Process. Landf. 2015, 40, 1656–1671.

- Miles, J.; Pitts, M. Photogrammetry and RTI Survey of Hoa Hakananai’a Easter Island Statue. In Papers from the 41st Conference on Computer Applications and Quantitative Methods in Archaeology; Amsterdam University Press: Amsterdam, The Netherlands, 2013; pp. 144–156.

- Yamafune, K. Using Computer Vision Photogrammetry (Agisoft Photoscan) to Record and Analyze Underwater Shipwreck Sites. Available online: https://oaktrust.library.tamu.edu/handle/1969.1/156847 (accessed on 2 June 2016).

- Fraser, B.T.; Congalton, R.G. Issues in Unmanned Aerial Systems (UAS) data collection of complex forest environments. Remote Sens. 2018, 10, 908.

- Rush, G.P.; Clarke, L.E.; Stone, M.; Wood, M.J. Can drones count gulls? Minimal disturbance and semiautomated image processing with an unmanned aerial vehicle for colony-nesting seabirds. Ecol. Evol. 2018, 8, 12322–12334.

- Casana, J.; Wiewel, A.; Cool, A.; Hill, A.C.; Fisher, K.D.; Laugier, E.J. Archaeological aerial thermography in theory and practice. Adv. Archaeol. Pract. 2017, 5, 310–327.

- Gillan, J.K.; McClaran, M.P.; Swetnam, T.L.; Heilman, P. Estimating forage utilization with drone-based photogrammetric point clouds. Rangel. Ecol. Manag. 2019, 72, 575–585.

- Mayer, C.; Pereira, L.M.G.; Kersten, T.P. A Comprehensive Workflow to Process UAV Images for the Efficient Production of Accurate Geo-information. In Proceedings of the IX National Conference on Cartography and Geodesy, Amadora, Portugal, 25–26 October 2018.

- Girardeau-Montaut, D. CloudCompare Version 2.6.1 User Manual. Available online: http://www.danielgm.net/cc/doc/qCC/CloudCompare v2 (accessed on 1 November 2019).

- Lague, D.; Brodu, N.; Leroux, J. Accurate 3D comparison of complex topography with terrestrial laser scanner: Application to the Rangitikei canyon (NZ). ISPRS J. Photogramm. Remote Sens. 2013, 82, 10–26.

- Alouache, A.; Yao, X.; Wu, Q. Creating Textured 3D Models from Image Collections using Open Source Software. Int. J. Comput. Appl. 2017, 163, 14–19.

- Rajendra, Y.D.; Mehrotra, S.C.; Kale, K.V.; Manza, R.R.; Dhumal, R.K.; Nagne, A.D.; Vibhute, A.D. Evaluation of Partially Overlapping 3D Point Cloud’s Registration by using ICP variant and CloudCompare. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 40, 891.

- Wu, M.L.; Chien, J.C.; Wu, C.T.; Lee, J.D. An augmented reality system using improved-iterative closest point algorithm for on-patient medical image visualization. Sensors 2018, 18, 2505.

- Holst, C.; Klingbeil, L.; Esser, F.; Kuhlmann, H. Using point cloud comparisons for revealing deformations of natural and artificial objects. In Proceedings of the 7th International Conference on Engineering Surveying (INGEO 2017), Lisbon, Portugal, 18–20 October 2017; pp. 265–274.

- Chen, H.; Zhao, X.; Luo, J.; Yang, Z.; Zhao, Z.; Wan, H.; Ye, X.; Weng, G.; He, Z.; Dong, T. Towards Generation and Evaluation of Comprehensive Mapping Robot Datasets. arXiv 2019, arXiv:1905.09483.

- Vasilakos, C.; Chatzistamatis, S.; Roussou, O.; Soulakellis, N. Terrestrial photogrammetry vs Laser Scanning for rapid earthquake damage assessment. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 527–533.

- Monserrat, O.; Crosetto, M. Deformation measurement using terrestrial laser scanning data and least squares 3D surface matching. ISPRS J. Photogramm. Remote Sens. 2008, 63, 142–154.

- Benito-Calvo, A.; Arroyo, A.; Sánchez-Romero, L.; Pante, M.; De La Torre, I. Quantifying 3D Micro-Surface Changes on Experimental Stones Used to Break Bones and Their Implications for the Analysis of Early Stone Age Pounding Tools. Archaeometry 2018, 60, 419–436.

- Martínez-Espejo Zaragoza, I.; Caroti, G.; Piemonte, A.; Riedel, B.; Tengen, D.; Niemeier, W. Structure from motion (SfM) processing of UAV images and combination with terrestrial laser scanning, applied for a 3D-documentation in a hazardous situation. Geomat. Nat. Hazards Risk 2017, 8, 1492–1504.

- Chen, T.; Catrysse, P.B.; Gamal, A.E.; Wandell, B.A. How small should pixel size be? In Proceedings of the SPIE, San Jose, CA, USA, 15 May 2000; Volume 3965.

- Farrell, J.; Xiao, F.; Kavusi, S. Resolution and light sensitivity tradeoff with pixel size. In Proceedings of the SPIE, San Jose, CA, USA, 10 February 2006; Volume 6069.

- Eid, E. Study of limitations on pixel size of very high resolution image sensors. In Proceedings of the Eighteenth National Radio Science Conference, NRSC2001 (IEEE Cat. No.01EX462), Mansoura, Egypt, 27–29 March 2001; Volume 1, pp. 15–28.

- Jiang, C.; Song, J. An Ultrahigh-Resolution Digital Image Sensor with Pixel Size of 50 nm by Vertical Nanorod Arrays. Adv. Mater. 2015, 27, 4454–4460.

- Gomez, C.; Hayakawa, Y.; Obanawa, H. A study of Japanese landscapes using structure from motion derived DSMs and DEMs based on historical aerial photographs: New opportunities for vegetation monitoring and diachronic geomorphology. Geomorphology 2015, 242, 11–20.

- Rapinel, S.; Clément, B.; Dufour, S.; Hubert-Moy, L. Fine-Scale Monitoring of Long-term Wetland Loss Using LiDAR Data and Historical Aerial Photographs: The Example of the Couesnon Floodplain, France. Wetlands 2018, 38, 423–435.

- Eltner, A.; Baumgart, P.; Maas, H.G.; Faust, D. Multi-temporal UAV data for automatic measurement of rill and interrill erosion on loess soil. Earth Surf. Process. Landf. 2015, 40, 741–755.

- Rossini, M.; Di Mauro, B.; Garzonio, R.; Baccolo, G.; Cavallini, G.; Mattavelli, M.; De Amicis, M.; Colombo, R. Rapid melting dynamics of an alpine glacier with repeated UAV photogrammetry. Geomorphology 2018, 304, 159–172.

- Herterich, J.G.; Dias, F. Wave breaking and runup of long waves approaching a cliff over a variable bathymetry. Procedia IUTAM 2017, 25, 18–27.

| Survey Year | 2015 | 2017 |
|---|---|---|
| Camera Model | GoPro Hero3+ | DJI FC300X |
| Focal length (35 mm equivalent) | 17 mm | 20 mm |
| Field of view | 149° | 94° |
| Resolution (Megapixels) | 12 | 12 |
| Flight altitude (m) | 90 | 50 |
| Number of orthogonal photos | 359 | 211 |
| Ground control points (GCPs) deployed | No | Yes |
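
Flight altitude and 35 mm-equivalent focal length together set the approximate ground footprint and ground sampling distance (GSD) of each nadir photo. The sketch below estimates both for the two surveys under assumptions that do not come from the table: a pinhole camera model, a 36 mm full-frame reference width (which is what a 35 mm-equivalent focal length is defined against), and a hypothetical 4000-pixel image width for the 12 MP frames. Because the GoPro uses a fisheye lens, the 2015 value is at best a centre-of-frame approximation. This is an illustrative calculation, not part of the authors' processing.

```python
"""Rough nadir ground sampling distance (GSD) for the two surveys.

Assumptions (not stated in the survey table):
- pinhole camera model, using the 35 mm-equivalent focal length so that the
  sensor width is taken as 36 mm;
- a hypothetical 4000-pixel image width for a 4:3, 12 MP frame.
The GoPro's fisheye lens departs from the pinhole model away from the image
centre, so the 2015 estimate is a centre-of-frame approximation only.
"""

FULL_FRAME_WIDTH_MM = 36.0   # width of a 35 mm ("full-frame") sensor
IMAGE_WIDTH_PX = 4000        # assumed image width in pixels (hypothetical)


def footprint_width_m(altitude_m: float, focal_equiv_mm: float) -> float:
    """Ground width covered by one nadir image, from similar triangles."""
    return altitude_m * FULL_FRAME_WIDTH_MM / focal_equiv_mm


def gsd_cm_per_px(altitude_m: float, focal_equiv_mm: float,
                  image_width_px: int = IMAGE_WIDTH_PX) -> float:
    """Ground distance represented by one pixel, in centimetres."""
    return 100.0 * footprint_width_m(altitude_m, focal_equiv_mm) / image_width_px


# Altitudes and focal lengths transcribed from the survey table.
surveys = {
    2015: {"altitude_m": 90.0, "focal_equiv_mm": 17.0},  # GoPro Hero3+
    2017: {"altitude_m": 50.0, "focal_equiv_mm": 20.0},  # DJI FC300X
}

if __name__ == "__main__":
    for year, params in surveys.items():
        print(f"{year}: footprint ~{footprint_width_m(**params):.0f} m, "
              f"GSD ~{gsd_cm_per_px(**params):.2f} cm/px")
```

Under these assumptions the 2017 imagery resolves the ground roughly twice as finely as the 2015 imagery, reflecting the lower flight altitude and the narrower field of view.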

| Boulder ID | Estimated Mass (tonnes) | Distance Moved (m) | 2015 Elevation (m) | 2017 Elevation (m) | Elevation Change (m) |
|---|---|---|---|---|---|
| 1 | 19 | -- | -- | 11 | -- |
| 2 | 28 | -- | -- | 12.5 | -- |
| 3 | 19 | 7 | 9 | 10 | +1 |
| 4 | 15 | 10 | 10 | 10 | 0 |
| 5 | 3 | 23 | 9 | 10 | +1 |
| 6 | 7 | 15 | 9 | 11 | +2 |
| 7 | 12 | 12 | 3 | 4 | +1 |
| 8 | 8 | 6 | 9 | 11 | +2 |
| 9 | 3 | -- | -- | 11 | -- |
| 10 | 3 | -- | -- | 11 | -- |
| 11 | 3 | -- | -- | 11 | -- |
| 12 | 6 | 4 | 2 | 2 | 0 |
| 13 | 8 | 5 | 11 | 11 | 0 |
| 14 | 13 | -- | -- | 13 | -- |
| 15 | 11 | 4 | 12 | 14 | +2 |
| 16 | 3 | 7 | 12 | 14 | +2 |
| 17 | 7 | 11 | 12 | 15 | +3 |
| 18 | 6 | 10 | 12 | 15 | +3 |
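
In the boulder table, the Elevation Change column is the 2017 elevation minus the 2015 elevation, and it is defined only for boulders located in both surveys. The short sketch below transcribes the table, recomputes that difference, and prints a few summary figures. The summary statistics are illustrative only and are not reported by the authors.

```python
"""Recompute the elevation-change column of the boulder table and summarise it.

Rows are transcribed from the boulder table; None marks a "--" entry.
The summary statistics are illustrative and are not reported in the source.
"""
from statistics import mean

# (boulder ID, estimated mass t, distance moved m, 2015 elevation m, 2017 elevation m)
BOULDERS = [
    (1, 19, None, None, 11), (2, 28, None, None, 12.5),
    (3, 19, 7, 9, 10), (4, 15, 10, 10, 10), (5, 3, 23, 9, 10),
    (6, 7, 15, 9, 11), (7, 12, 12, 3, 4), (8, 8, 6, 9, 11),
    (9, 3, None, None, 11), (10, 3, None, None, 11), (11, 3, None, None, 11),
    (12, 6, 4, 2, 2), (13, 8, 5, 11, 11), (14, 13, None, None, 13),
    (15, 11, 4, 12, 14), (16, 3, 7, 12, 14), (17, 7, 11, 12, 15),
    (18, 6, 10, 12, 15),
]


def elevation_change(z2015, z2017):
    """2017 minus 2015 elevation; None if either elevation is missing."""
    if z2015 is None or z2017 is None:
        return None
    return z2017 - z2015


# Keep only boulders with elevations in both surveys:
# (ID, mass, distance moved, elevation change).
tracked = [(bid, mass, dist, elevation_change(z15, z17))
           for bid, mass, dist, z15, z17 in BOULDERS
           if elevation_change(z15, z17) is not None]

if __name__ == "__main__":
    print("boulders with both elevations:", len(tracked))
    print("mean distance moved (m):", round(mean(d for _, _, d, _ in tracked), 1))
    print("mean elevation change (m):", round(mean(dz for *_, dz in tracked), 2))
    print("boulders that gained elevation:", sum(dz > 0 for *_, dz in tracked))
```

For all twelve boulders with both elevations, the recomputed differences match the table's Elevation Change column.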

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Nagle-McNaughton, T.; Cox, R. Measuring Change Using Quantitative Differencing of Repeat Structure-From-Motion Photogrammetry: The Effect of Storms on Coastal Boulder Deposits. Remote Sens. 2020, 12, 42. https://doi.org/10.3390/rs12010042
