A Novel Classification Extension-Based Cloud Detection Method for Medium-Resolution Optical Images

Abstract

1. Introduction

2. Test Sites and Data

2.1. Study Area

2.2. Datasets and Preprocessing

2.3. Validation Dataset

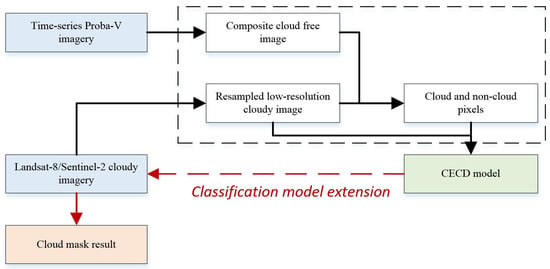

3. Method

3.1. Identifying Cloud and Non-Cloud Pixels with the Support of Reference Cloud-Free Imagery

3.2. Classification Extension-Based Cloud Detection Model

3.2.1. Collection of Training Samples

3.2.2. Modeling of Random Forest Classifier

4. Results and Accuracy Assessment

4.1. Performance of CECD Using the Landsat-8 and Sentinel-2 Imagery

4.1.1. Cloud Masking in Landsat Imagery

4.1.2. Cloud Masking in Sentinel-2 Imagery

4.2. Comparison with FMASK Cloud-Detection Algorithm

5. Discussion

5.1. Effectiveness of Classification Extension Strategy in Cloud Detection

5.2. Reliability of the Collected Training Samples

5.3. Computational Efficiency

5.4. The Importance of Input Features for Different Environments

5.5. Limitations of the Use of CECD for Cloud Detection

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Data Availability

Appendix A. Estimation of the Corrected THOT Value by Minimizing an Objective Function

| Site | | a | b | c | d | e | f |
|---|---|---|---|---|---|---|---|
| Landsat-8 | Path/row | p120r028 | p139r039 | p123r032 | p029r043 | p039r032 | p043r027 |
| | Date | 2018/5/5 | 2019/2/22 | 2019/1/21 | 2018/9/9 | 2018/10/4 | 2018/5/25 |
| | Confused types | BB, TC | SN, FW, BB | BI, FW, TC | BB | SN, FW, TC | TC |
| Sentinel-2 | Granule tile | T51TXM | T45RWN | T50TMK | T13RGH | T11TQE | T11TMN |
| | Date | 2019/5/4 | 2019/2/22 | 2019/8/13 | 2019/1/8 | 2019/1/11 | 2019/10/1 |
| | Confused types | BB, TC | SN, BB, TC | BI, TC | BB, TC | SN, BB, TC | SN, BI, TC |

| Site | | g | h | i | j | k | l |
|---|---|---|---|---|---|---|---|
| Landsat-8 | Path/row | p106r075 | p147r032 | p159r026 | p190r043 | p195r028 | p233r073 |
| | Date | 2018/12/2 | 2018/11/10 | 2018/7/24 | 2018/10/22 | 2018/10/9 | 2018/3/25 |
| | Confused types | FW, BB, TC | SN, BD, TC | BB, FW | BD, TC | SN, BB, BI | FW, TC |
| Sentinel-2 | Granule tile | T52KDB | T44TLL | T41UPP | T32RMN | T32TLS | T19KFV |
| | Date | 2019/1/20 | 2019/2/14 | 2019/12/7 | 2019/10/18 | 2019/5/30 | 2019/12/10 |
| | Confused types | BB, TC | SN, BB, TC | SN, BB, TC | BD, TC | SN, BI, TC | BB, FW, TC |

| Band Names | Proba-V Bands (μm) | Landsat-8 Bands (μm) | Sentinel-2 Bands (μm) |
|---|---|---|---|
| Coastal | \ | Band 1 (0.435–0.451) | Band 1 (0.433–0.453) |
| Blue | Blue (0.440–0.487) | Band 2 (0.452–0.512) | Band 2 (0.458–0.523) |
| Green | \ | Band 3 (0.533–0.590) | Band 3 (0.543–0.578) |
| Red | Red (0.614–0.696) | Band 4 (0.636–0.673) | Band 4 (0.650–0.680) |
| Red Edge 1 | \ | \ | Band 5 (0.698–0.713) |
| Red Edge 2 | \ | \ | Band 6 (0.733–0.748) |
| Red Edge 3 | \ | \ | Band 7 (0.765–0.785) |
| Wide NIR | NIR (0.772–0.902) | \ | Band 8 (0.785–0.900) |
| Narrow NIR | \ | Band 5 (0.851–0.879) | Band 8a (0.855–0.875) |
| Water vapor | \ | \ | Band 9 (0.930–0.950) |
| Cirrus | \ | Band 9 (1.363–1.384) | Band 10 (1.365–1.385) |
| SWIR1 | SWIR (1.570–1.635) | Band 6 (1.566–1.651) | Band 11 (1.565–1.655) |
| SWIR2 | \ | Band 7 (2.107–2.294) | Band 12 (2.100–2.280) |
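The band table lists spectral ranges only. As an illustration of how those ranges can be compared, the sketch below pairs each Proba-V band with the Landsat-8 or Sentinel-2 band whose range overlaps it most. The ranges are copied from the table; the "largest overlap" rule and the names `overlap`, `match_bands` and the band keys (`B1`, `B8A`, ...) are hypothetical choices for this example, not necessarily the band pairing used by the authors.

```python
from typing import Dict, Optional, Tuple

Range = Tuple[float, float]  # (lower, upper) band limits in micrometres

# Spectral ranges copied from the band comparison table (μm).
PROBA_V: Dict[str, Range] = {
    "Blue": (0.440, 0.487),
    "Red": (0.614, 0.696),
    "NIR": (0.772, 0.902),
    "SWIR": (1.570, 1.635),
}
LANDSAT8: Dict[str, Range] = {
    "B1": (0.435, 0.451), "B2": (0.452, 0.512), "B3": (0.533, 0.590),
    "B4": (0.636, 0.673), "B5": (0.851, 0.879), "B6": (1.566, 1.651),
    "B7": (2.107, 2.294), "B9": (1.363, 1.384),
}
SENTINEL2: Dict[str, Range] = {
    "B1": (0.433, 0.453), "B2": (0.458, 0.523), "B3": (0.543, 0.578),
    "B4": (0.650, 0.680), "B5": (0.698, 0.713), "B6": (0.733, 0.748),
    "B7": (0.765, 0.785), "B8": (0.785, 0.900), "B8A": (0.855, 0.875),
    "B9": (0.930, 0.950), "B10": (1.365, 1.385), "B11": (1.565, 1.655),
    "B12": (2.100, 2.280),
}


def overlap(a: Range, b: Range) -> float:
    """Width (μm) of the wavelength interval shared by two bands; 0 if disjoint."""
    return max(0.0, min(a[1], b[1]) - max(a[0], b[0]))


def match_bands(reference: Dict[str, Range],
                candidates: Dict[str, Range]) -> Dict[str, Optional[str]]:
    """Hypothetical rule: pair each reference band with the candidate of largest overlap."""
    result: Dict[str, Optional[str]] = {}
    for ref_name, ref_range in reference.items():
        best_name, best_width = None, 0.0
        for name, rng in candidates.items():
            width = overlap(ref_range, rng)
            if width > best_width:
                best_name, best_width = name, width
        result[ref_name] = best_name  # None if no candidate overlaps at all
    return result


if __name__ == "__main__":
    print(match_bands(PROBA_V, LANDSAT8))
    print(match_bands(PROBA_V, SENTINEL2))
```

Under this rule, Proba-V Blue, Red, NIR and SWIR pair with Landsat-8 bands 2, 4, 5 and 6 and with Sentinel-2 bands 2, 4, 8 and 11. Measuring absolute overlap width favours the broad Sentinel-2 band 8 over the narrow band 8a for the wide Proba-V NIR band.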

| Sensor | | | a | b | c | d | e | f | g | h | i | j | k | l | A. A. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Landsat-8 | Npts | C. S. | 545 | 244 | 253 | 164 | 420 | 357 | 83 | 423 | 274 | 417 | 125 | 494 | / |
| | | N. S. | 235 | 537 | 507 | 578 | 340 | 426 | 705 | 331 | 505 | 470 | 647 | 282 | / |
| | CECD | P. C. | 95.91 | 95.27 | 97.86 | 98.75 | 96.18 | 97.66 | 96.97 | 96.41 | 98.72 | 97.18 | 97.43 | 95.33 | 96.88 |
| | | U. C. | 99.51 | 96.48 | 99.59 | 95.33 | 99.75 | 98.88 | 99.98 | 98.90 | 99.53 | 98.01 | 99.63 | 99.89 | 98.64 |
| | | P. N. | 99.74 | 98.31 | 99.79 | 97.92 | 99.51 | 98.23 | 99.95 | 98.91 | 99.77 | 96.89 | 99.98 | 99.17 | 98.11 |
| | | U. N. | 98.26 | 97.21 | 97.29 | 98.55 | 93.61 | 93.28 | 98.47 | 96.23 | 97.94 | 92.59 | 98.07 | 94.05 | 96.67 |
| | | F. M. | 97.86 | 95.75 | 98.71 | 95.84 | 97.85 | 97.77 | 98.25 | 97.75 | 99.14 | 96.85 | 98.65 | 97.37 | 97.65 |
| | | K. C. | 95.75 | 93.12 | 95.69 | 92.86 | 94.64 | 92.94 | 98.31 | 95.49 | 96.56 | 91.26 | 94.55 | 91.51 | 94.33 |
| | FMASK | P. C. | 98.86 | 99.62 | 99.60 | 98.48 | 98.04 | 89.25 | 90.22 | 97.92 | 99.03 | 94.39 | 98.79 | 96.42 | 97.06 |
| | | U. C. | 97.65 | 59.18 | 78.14 | 92.06 | 93.64 | 99.99 | 99.97 | 82.38 | 99.10 | 98.58 | 60.31 | 88.71 | 87.47 |
| | | P. N. | 94.59 | 66.17 | 85.60 | 97.46 | 87.89 | 99.99 | 99.97 | 79.19 | 99.53 | 97.93 | 86.62 | 80.61 | 89.62 |
| | | U. N. | 99.99 | 99.72 | 99.76 | 99.26 | 99.29 | 90.04 | 98.22 | 97.68 | 99.07 | 92.14 | 99.29 | 93.12 | 97.29 |
| | | F. M. | 98.27 | 72.70 | 87.19 | 94.95 | 94.34 | 94.31 | 94.73 | 91.43 | 99.08 | 95.96 | 73.71 | 92.94 | 90.80 |
| | | K. C. | 96.04 | 56.06 | 76.98 | 92.04 | 90.36 | 83.15 | 88.17 | 79.34 | 97.35 | 90.90 | 65.82 | 78.52 | 82.88 |
| Sentinel-2 | Npts | C. S. | 441 | 272 | 143 | 203 | 408 | 186 | 523 | 383 | 490 | 637 | 167 | 232 | / |
| | | N. S. | 339 | 518 | 649 | 571 | 369 | 610 | 268 | 385 | 218 | 138 | 634 | 557 | / |
| | CECD | P. C. | 96.00 | 95.27 | 95.01 | 96.63 | 96.24 | 97.93 | 98.07 | 97.65 | 97.39 | 97.03 | 96.22 | 96.19 | 96.46 |
| | | U. C. | 99.76 | 96.48 | 95.00 | 98.41 | 94.25 | 99.46 | 98.28 | 95.41 | 94.38 | 99.82 | 98.59 | 99.67 | 97.46 |
| | | P. N. | 99.71 | 98.31 | 98.94 | 99.47 | 93.67 | 99.84 | 97.11 | 94.93 | 91.90 | 98.89 | 99.69 | 99.46 | 97.66 |
| | | U. N. | 95.08 | 97.21 | 98.49 | 97.09 | 95.85 | 99.67 | 96.76 | 97.40 | 99.10 | 89.03 | 99.37 | 95.9 | 96.66 |
| | | F. M. | 97.85 | 96.00 | 95.10 | 97.55 | 95.30 | 98.69 | 98.29 | 96.33 | 96.91 | 98.37 | 97.49 | 97.41 | 97.11 |
| | | K. C. | 95.15 | 93.12 | 92.71 | 97.41 | 89.99 | 98.94 | 95.11 | 92.67 | 92.19 | 92.60 | 97.41 | 92.81 | 94.18 |
| | FMASK | P. C. | 98.99 | 98.62 | 93.01 | 97.12 | 97.07 | 99.47 | 84.37 | 97.18 | 87.35 | 93.96 | 97.96 | 98.09 | 95.01 |
| | | U. C. | 92.41 | 59.18 | 74.70 | 99.47 | 82.82 | 97.82 | 99.76 | 73.54 | 73.67 | 99.64 | 61.57 | 92.13 | 83.81 |
| | | P. N. | 89.68 | 66.17 | 91.55 | 99.82 | 78.73 | 99.35 | 99.64 | 57.10 | 57.26 | 97.78 | 86.62 | 93.20 | 84.74 |
| | | U. N. | 98.86 | 98.72 | 98.42 | 97.26 | 93.67 | 99.84 | 78.39 | 92.35 | 76.78 | 83.81 | 99.48 | 96.64 | 92.36 |
| | | F. M. | 95.68 | 74.98 | 80.40 | 98.29 | 89.75 | 98.68 | 91.43 | 80.88 | 84.66 | 96.65 | 78.76 | 91.50 | 88.47 |
| | | K. C. | 90.63 | 56.06 | 72.73 | 97.80 | 74.24 | 98.26 | 79.11 | 55.32 | 43.54 | 88.55 | 67.19 | 88.97 | 76.03 |
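The accuracy table does not spell out its abbreviations. Assuming they follow common usage (C. S./N. S.: numbers of cloud and non-cloud validation points; P. C./U. C.: producer's and user's accuracy of the cloud class; P. N./U. N.: the same for the non-cloud class; F. M.: F-measure; K. C.: kappa coefficient; A. A.: average over the twelve sites), each metric can be derived from a 2 × 2 confusion matrix. The sketch below uses plain point counts and takes the F-measure of the cloud class; both are assumptions, the names `BinaryConfusion` and `accuracy_metrics` are hypothetical, and the sketch is not claimed to reproduce the tabulated values.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class BinaryConfusion:
    """2 x 2 confusion matrix with cloud as the positive class (validation point counts)."""
    tp: int  # reference cloud, mapped as cloud
    fn: int  # reference cloud, mapped as non-cloud (cloud omission)
    fp: int  # reference non-cloud, mapped as cloud (cloud commission)
    tn: int  # reference non-cloud, mapped as non-cloud


def _ratio(num: float, den: float) -> float:
    # Return NaN instead of raising when a denominator is zero (e.g. an empty class).
    return num / den if den else math.nan


def accuracy_metrics(cm: BinaryConfusion) -> Dict[str, float]:
    """Percent accuracies keyed by the assumed table abbreviations."""
    n = cm.tp + cm.fn + cm.fp + cm.tn
    pc = _ratio(cm.tp, cm.tp + cm.fn)  # producer's accuracy, cloud
    uc = _ratio(cm.tp, cm.tp + cm.fp)  # user's accuracy, cloud
    pn = _ratio(cm.tn, cm.tn + cm.fp)  # producer's accuracy, non-cloud
    un = _ratio(cm.tn, cm.tn + cm.fn)  # user's accuracy, non-cloud
    fm = _ratio(2 * pc * uc, pc + uc)  # F-measure (harmonic mean) of the cloud class
    # Cohen's kappa: observed agreement corrected for agreement expected by chance.
    po = _ratio(cm.tp + cm.tn, n)
    pe = _ratio((cm.tp + cm.fn) * (cm.tp + cm.fp) + (cm.fp + cm.tn) * (cm.fn + cm.tn), n * n)
    kappa = _ratio(po - pe, 1 - pe)
    return {
        "P. C.": 100 * pc, "U. C.": 100 * uc,
        "P. N.": 100 * pn, "U. N.": 100 * un,
        "F. M.": 100 * fm, "K. C.": 100 * kappa,
    }


if __name__ == "__main__":
    print(accuracy_metrics(BinaryConfusion(tp=90, fn=10, fp=5, tn=95)))
```

For the made-up counts tp = 90, fn = 10, fp = 5, tn = 95, this gives P. C. = 90.0 and K. C. = 85.0 (up to floating-point rounding). Producer's accuracy of cloud falls with cloud omission, whereas user's accuracy of cloud falls with cloud commission, which is why the two are reported separately.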

| Sensor | Statistic | Vegetation | Water | Barren | Impervious | Snow |
|---|---|---|---|---|---|---|
| Landsat-8 | χ² | 0.36 | 2.44 | 3.23 | 54.39 | 215.43 |
| | p-value | 0.55 | 0.11 | 0.07 | 0.00 | 0.00 |
| Sentinel-2 | χ² | 0.13 | 1.12 | 17.06 | 60.01 | 232.07 |
| | p-value | 0.72 | 0.28 | 0.00 | 0.00 | 0.00 |
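The source does not name the test behind the χ² and p-value pairs in the last table. One plausible reading, assumed here, is McNemar's test comparing CECD and FMASK on the same validation points within each land-cover type; the p-values are broadly consistent with a χ² distribution with one degree of freedom (χ² = 0.36 gives p ≈ 0.549 and χ² = 3.23 gives p ≈ 0.072, listed as 0.55 and 0.07). A minimal sketch, in which `mcnemar`, `chi2_sf_1dof` and the discordant counts `b` and `c` are hypothetical:

```python
import math
from typing import Tuple


def chi2_sf_1dof(x: float) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable with 1 degree of freedom."""
    # With 1 dof, X = Z**2 for standard normal Z, so P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(x / 2.0))


def mcnemar(b: int, c: int, correction: bool = True) -> Tuple[float, float]:
    """McNemar's test on paired classifications of the same validation points.

    b: points classified correctly by method A but not by method B (hypothetical counts)
    c: points classified correctly by method B but not by method A
    Points on which both methods agree do not enter the statistic.
    """
    if b + c == 0:
        raise ValueError("McNemar's test is undefined without discordant pairs")
    diff = abs(b - c)
    if correction:
        # Edwards' continuity correction, floored at zero so that b == c gives chi2 = 0.
        diff = max(diff - 1, 0)
    chi2 = diff ** 2 / (b + c)
    return chi2, chi2_sf_1dof(chi2)


if __name__ == "__main__":
    print(mcnemar(30, 10, correction=False))
    print(chi2_sf_1dof(0.36))
```

Only the discordant points matter: if both methods are right (or both wrong) on a point, that point carries no information about which method is better. Whether the authors applied a continuity correction is not stated, so the sketch exposes it as a flag.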

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, X.; Liu, L.; Gao, Y.; Zhang, X.; Xie, S. A Novel Classification Extension-Based Cloud Detection Method for Medium-Resolution Optical Images. Remote Sens. 2020, 12, 2365. https://doi.org/10.3390/rs12152365
