4.1. Analysis of Performance of the gbXML Generation Method

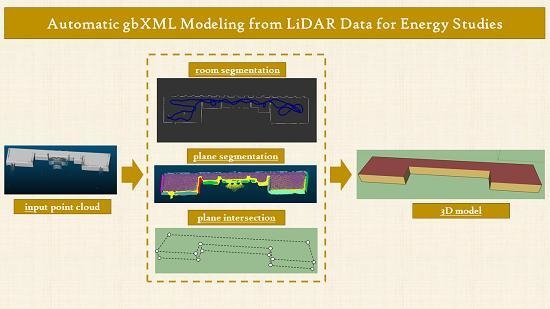

In this section, the performance of the method is evaluated for each case study. The critical points in the workflow of the system are the clustering of plane segments to obtain the walls, followed in order of importance by the room segmentation. These processes require the attention of the user, who must verify or correct the results obtained. Their successful outcome determines the success of the whole gbXML generation process.

The point clouds were surveyed with a Zeb-Revo, which has an accuracy of 0.03 m [34]; this determines the accuracy of the input point cloud. The experiments were run on Ubuntu 16.04 LTS, with an Intel Core i7 CPU and an NVIDIA GF119M GPU. The results of the three scenes are summarized in Table 3.

Case 1 is the simplest one. Therefore, there are practically no problems during the execution of the software and the user only needs to confirm each step. The point cloud of the room has 4,302,130 points and the point cloud of the trajectory has 13,100. The survey was made from inside the room. Thus, the room segmentation algorithm, which runs in 27 s, does not detect any door along the trajectory (Figure 12).

The point cloud is voxelized with a voxel side of 0.03 m. This value was obtained by experimentation to reduce the processing time without losing information. The plane segmentation algorithm is then run on the detected room. The parameters of the region growing algorithm are a minimum plane size of 200 points, a neighborhood size of 50 points and a smoothness of 1°. With these parameters, 32 planes are detected in the room in 6 s (Figure 13a). These planes are classified as XY, XZ and YZ planes and merged by proximity, as explained in the previous section, obtaining 2 XY planes, 2 XZ planes and 2 YZ planes (Figure 13b). Their intersection yields 8 points, which are used to generate the gbXML structure. The entire process lasts 94 s, but this value is only indicative, because it depends on the time the user takes to validate each step.
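The classification and merging step described above can be illustrated with a minimal sketch. It assumes each detected segment is already summarized as a unit normal and an offset, and the `max_gap` threshold, the function names and the toy input are hypothetical choices for illustration, not values or code from the system itself.

```python
from typing import Dict, List, Tuple

# A plane is (unit normal, offset): points p on it satisfy normal . p = offset.
Plane = Tuple[Tuple[float, float, float], float]

AXIS_TO_CLASS = ("YZ", "XZ", "XY")  # normal along x -> YZ plane, and so on


def classify(plane: Plane) -> Tuple[str, float]:
    """Return the plane class and the plane position along its dominant axis."""
    normal, offset = plane
    axis = max(range(3), key=lambda i: abs(normal[i]))
    return AXIS_TO_CLASS[axis], offset / normal[axis]


def merge_by_proximity(positions: List[float], max_gap: float) -> List[float]:
    """Group sorted positions whose consecutive gap is <= max_gap; return group means."""
    groups: List[List[float]] = []
    for pos in sorted(positions):
        if groups and pos - groups[-1][-1] <= max_gap:
            groups[-1].append(pos)
        else:
            groups.append([pos])
    return [sum(g) / len(g) for g in groups]


def merge_planes(planes: List[Plane], max_gap: float = 0.3) -> Dict[str, List[float]]:
    """Classify every plane, then merge nearby planes within each class."""
    by_class: Dict[str, List[float]] = {"XY": [], "XZ": [], "YZ": []}
    for plane in planes:
        label, position = classify(plane)
        by_class[label].append(position)
    return {label: merge_by_proximity(pos, max_gap) for label, pos in by_class.items()}


# Toy input (assumed): a 4 m x 3 m x 2.5 m box whose floor and one wall are fragmented.
segments = [
    ((0.0, 0.0, 1.0), 0.00), ((0.0, 0.0, 1.0), 0.02), ((0.0, 0.0, -1.0), -2.50),
    ((0.0, 1.0, 0.0), 0.00), ((0.0, 1.0, 0.0), 3.00),
    ((1.0, 0.0, 0.0), 0.00), ((1.0, 0.0, 0.0), 3.98), ((1.0, 0.0, 0.0), 4.01),
]
merged = merge_planes(segments)
print({label: len(groups) for label, groups in merged.items()})
```

In this toy case the eight fragments collapse to two planes per class, which mirrors the 2 XY, 2 XZ and 2 YZ planes reported for Case 1.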

Case 2 is more complicated, given its geometry and dimensions. The room segmentation algorithm detects only one room in 110 s (Figure 14).

Due to the larger size of the point cloud (the point cloud of the room has 13,647,747 points and the point cloud of the trajectory has 39,237), this case is voxelized with a voxel side of 0.08 m. As in the previous case, the room segmentation algorithm detects only one room, in 110 s. The plane segmentation algorithm uses the same parameters as in the previous case and detects 93 planes in 25 s (Figure 15).
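To make the effect of the voxel side concrete, the following is a minimal sketch of voxel-grid downsampling. Replacing the points of each voxel by their centroid is an assumption made here for illustration, since the text does not state which representative point the system keeps, and the function name and sample points are hypothetical.

```python
from collections import defaultdict
from math import floor


def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same cubic voxel by their centroid."""
    buckets = defaultdict(list)
    for x, y, z in points:
        # Integer voxel index along each axis.
        key = (floor(x / voxel_size), floor(y / voxel_size), floor(z / voxel_size))
        buckets[key].append((x, y, z))
    # zip(*pts) regroups the coordinates per axis so each axis can be averaged.
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts)) for pts in buckets.values()]


cloud = [(0.01, 0.01, 0.01), (0.05, 0.05, 0.05), (0.50, 0.50, 0.50)]
fine = voxel_downsample(cloud, 0.03)    # voxel side used for Cases 1 and 3
coarse = voxel_downsample(cloud, 0.08)  # voxel side used for Case 2
print(len(fine), len(coarse))
```

A larger voxel side merges more points into a single representative, which is why the larger point cloud of Case 2 is processed with 0.08 m rather than 0.03 m.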

Of these, 13 are XY planes, 41 are XZ planes and 22 are YZ planes. The plane correction, in this case, yields 2 XY planes, 15 XZ planes and 8 YZ planes. These values are unacceptable, but the correction can be repeated with different parameter values. For the XZ planes, it is repeated with a distance between groups in the same plane of 3.5 m, obtaining 8 XZ groups. All planes are then correct, but some of them are produced by the heavy noise in the point cloud. This noise can be seen in Figure 16 as the point cloud segments at the top. The user can remove the undesired groups, obtaining the 4 XZ planes needed for the desired simplified 3D model. The association of YZ planes is correct, but some of the estimated groups are produced by planes that must be omitted to obtain the simplified model required for this example. The user can correct the groups by selecting the 4 groups needed (Figure 16). The groups are then intersected, obtaining 16 intersection points, which correspond to the vertexes of the model.

After the correction made by the user, the segments shown in Figure 17 are obtained. Each color corresponds to a different wall. The system works well with the floor and ceiling in this case, but the walls need to be corrected, especially the longitudinal ones.

When the planes of the walls are intersected (2 from group XY, 4 from group XZ and 4 from group YZ), 32 intersection points are obtained. As shown in Section 2, this happens because some planes intersect mathematically although the walls they represent have no real contact. Thus, there are 32 estimated virtual intersection points, but after evaluating which of them are vertexes, 16 real vertexes remain. Figure 17 shows a schema of the problem for this scenario. Although this part of the system is supervised by the user and needs their approval, testing showed that no problem appears if the previous clustering process is correct.
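The count of 32 virtual intersection points follows from combining one plane of each class: 2 × 4 × 4 = 32. The sketch below reproduces only that enumeration, solving each three-plane system with Cramer's rule. The plane positions are invented for illustration, and the test that decides which candidates are real vertexes (described in Section 2) is deliberately not reproduced here.

```python
from itertools import product


def det3(m):
    """Determinant of a 3x3 matrix given as three rows."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def intersect(p1, p2, p3, eps=1e-9):
    """Point shared by three planes (normal, offset), or None if no unique point exists."""
    normals = [p[0] for p in (p1, p2, p3)]
    offsets = [p[1] for p in (p1, p2, p3)]
    d = det3(normals)
    if abs(d) < eps:
        return None  # at least two planes are (nearly) parallel
    point = []
    for col in range(3):
        m = [list(row) for row in normals]
        for row in range(3):
            m[row][col] = offsets[row]  # Cramer's rule: swap in the offset column
        point.append(det3(m) / d)
    return tuple(point)


# Hypothetical plane positions in metres: 2 XY, 4 XZ and 4 YZ planes.
xy_planes = [((0.0, 0.0, 1.0), z) for z in (0.0, 3.0)]
xz_planes = [((0.0, 1.0, 0.0), y) for y in (0.0, 2.0, 6.0, 8.0)]
yz_planes = [((1.0, 0.0, 0.0), x) for x in (0.0, 5.0, 15.0, 20.0)]

candidates = [intersect(a, b, c) for a, b, c in product(xy_planes, xz_planes, yz_planes)]
print(len(candidates))
```

Every combination of mutually perpendicular planes yields a point, whether or not the corresponding walls actually touch, which is why a later filtering step is needed.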

Once the real vertexes are estimated, the gbXML schema can be generated. The whole process with user interactions lasts 407 s.

Case 1 serves to study the basic performance of the algorithm. Case 2 enables the study of how the system deals with more complex geometries. Case 3 tests the performance with multiple rooms and how the system deals with errors in the acquisition of one of them. The input point cloud corresponds to two rooms and a segment of the corridor, with a size of 11,156,807 points. The trajectory point cloud has 33,265 points. Working with non-ideal scenarios helps to find ways of solving the problems inherent in data acquisition and processing, as explained below for Scenario 3, making the system more flexible. The system allows the user to select the rooms to analyze, avoiding the incomplete or corrupted ones. As shown in Figure 18, the room segmentation algorithm detects two doors along the trajectory in 86 s, splitting the input point cloud into three different rooms. It is not unusual for multiple nearby trajectory points to be identified as a door. The survey was performed with loops in the trajectory, so each door was crossed at least twice. Moreover, because of the geometry of the doors and the trajectory of the acquisition, multiple nearby points can meet the conditions of a door. For this reason, all door points within a radius of 0.8 m of a door point are treated as replicas of the same door.
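The handling of door replicas can be sketched as a simple radius-based grouping. The greedy strategy below, which compares each candidate with the first point of every existing group, is an assumption chosen for brevity; only the 0.8 m radius comes from the text, and the function name and candidate coordinates are hypothetical.

```python
from math import dist  # available from Python 3.8


def merge_door_candidates(points, radius=0.8):
    """Group door candidates lying within `radius` of a group's first point."""
    groups = []
    for p in points:
        for group in groups:
            if dist(p, group[0]) <= radius:
                group.append(p)  # replica of an already known door
                break
        else:
            groups.append([p])  # first detection of a new door
    # One representative per door: the centroid of its replicas.
    return [tuple(sum(axis) / len(g) for axis in zip(*g)) for g in groups]


# Hypothetical trajectory points flagged as doors during two loops of the survey.
door_points = [
    (2.0, 0.0, 1.0), (2.1, 0.1, 1.0), (7.0, 0.0, 1.0),
    (1.9, -0.1, 1.0), (7.2, 0.1, 1.0),
]
doors = merge_door_candidates(door_points)
print(len(doors))
```

With these inputs the five candidates reduce to two doors, analogous to the two doors detected in Case 3.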

As mentioned before, the corridor is incomplete and cannot be reconstructed. Thus, the GUI gives the user the option to select which rooms to analyze in case of issues during the acquisition. Therefore, only the two rooms adjoining the corridor are analyzed.

The room segmentation algorithm detects 3 different rooms in 86 s. The segment of the point cloud which corresponds to the corridor is incomplete and is therefore discarded for the rest of the process. The other two segments are voxelized with a voxel side of 0.03 m. Each room is segmented into planes with the same parameters as in the previous cases. One of the rooms is divided into 63 segments in 19 s (Figure 19a). Of these planes, 17 are XY segments, 11 are XZ segments and 6 are YZ segments. The plane correction algorithm obtains 2 groups of XY planes, 4 groups of XZ planes and 2 groups of YZ planes. Of the XZ groups, 2 must be discarded by the user because they correspond to reflected surfaces, as can be seen in Figure 19a. For the other room, the plane segmentation obtains 73 segments. Fifteen of them are classified as XY planes, 10 as XZ planes and 14 as YZ planes. The plane correction algorithm obtains the same results as in the other room. The correct clusters can be seen in Figure 19b.

Following the workflow described in Section 2, all adjacent planes are now corrected as shown in the schema of Figure 20. The schema is exaggerated to better visualize what happens between the two rooms. The median plane between the adjacent parallel planes is calculated to regularize the model and remove the gaps between adjacent rooms.
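For axis-aligned walls, the median plane reduces to averaging the positions of the two facing planes. The sketch below assumes that simplification; the `max_wall_thickness` threshold used to decide whether two planes belong to the same shared wall is a hypothetical parameter, not one given in the text.

```python
def regularize_pair(pos_a, pos_b, max_wall_thickness=0.5):
    """Return the shared mid-plane position, or None if the planes are too far apart."""
    if abs(pos_a - pos_b) > max_wall_thickness:
        return None  # not the two faces of one shared wall
    return (pos_a + pos_b) / 2.0


# Facing wall planes of two adjacent rooms, separated by a 0.20 m gap (assumed values).
shared = regularize_pair(3.95, 4.15)
print(shared)
```

Both rooms would then use the returned position for their common wall, so no gap remains between them in the model.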

At this point, the planes of each room are intersected. With 6 planes per room, 8 intersection points are obtained, which are the vertexes of each room. After generating the gbXML schema with these data, one last correction is needed, as explained in Section 1: if a wall shared by two rooms is duplicated, one of its polygons is deleted and the remaining polygon is used to define the wall in gbXML. This polygon is assigned to the two adjacent spaces, one for each adjacent room. The whole process with user interactions lasts 427 s.
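The removal of duplicated shared walls can be sketched as follows. Identifying duplicates by their rounded vertex set is an assumption made for this example, and the data layout (a list of space identifier and polygon pairs, and the dictionary keys) is hypothetical rather than the structure used by the system or by gbXML.

```python
def dedupe_walls(walls, ndigits=2):
    """Merge wall polygons with the same vertices, keeping one polygon per wall.

    `walls` is a list of (space_id, polygon); a polygon is a list of (x, y, z).
    """
    merged = {}
    for space_id, polygon in walls:
        # Vertex order differs between rooms, so compare vertices as a set.
        key = frozenset(tuple(round(c, ndigits) for c in v) for v in polygon)
        entry = merged.setdefault(key, {"polygon": polygon, "spaces": []})
        if space_id not in entry["spaces"]:
            entry["spaces"].append(space_id)
    return list(merged.values())


shared_wall = [(4.0, 0.0, 0.0), (4.0, 3.0, 0.0), (4.0, 3.0, 2.5), (4.0, 0.0, 2.5)]
outer_wall = [(0.0, 0.0, 0.0), (0.0, 3.0, 0.0), (0.0, 3.0, 2.5), (0.0, 0.0, 2.5)]
walls = [
    ("room_1", shared_wall),
    ("room_1", outer_wall),
    ("room_2", list(reversed(shared_wall))),  # same wall seen from the other room
]
surfaces = dedupe_walls(walls)
print([(len(s["polygon"]), s["spaces"]) for s in surfaces])
```

The shared wall survives as a single polygon that lists both rooms as adjacent spaces, while the outer wall keeps only one.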

Comparing the processing time across the three cases shows that it is highly dependent on the number of points in the point cloud of the scenario. In terms of duration, the critical step is the room segmentation, followed by the plane segmentation of each room and the plane classification and correction.

To evaluate the accuracy of the system, 3D models of the scenarios were built manually and compared with the 3D models estimated by the software. In this way, the system is compared with traditional methods. The 3D models in SketchUp can be seen in Figure 21.

Table 4 shows the error estimation of the areas, using the areas of the manually elaborated models as reference values. The best performance corresponds to Scenario 3, particularly to room 1.

Table 5 includes the volumetric comparison between the estimated models and those manually elaborated. All the estimated errors are satisfactory, with the relative error remaining below 1.3% in all cases.
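For reference, the relative error used in such comparisons is conventionally computed against the manual model. The symbols below are introduced here for illustration, and the use of the absolute value is an assumption, since the text does not state whether signed errors are reported:

$$
\varepsilon_{\mathrm{rel}} = \frac{\lvert V_{\mathrm{est}} - V_{\mathrm{ref}} \rvert}{V_{\mathrm{ref}}} \times 100\,\%
$$

where $V_{\mathrm{est}}$ is the volume (or, analogously, the area) of the estimated model and $V_{\mathrm{ref}}$ is the corresponding value of the manually elaborated model.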

The system worked well in all three scenarios with satisfactory errors. The only notable problem during execution was the reflected surfaces, which can be solved through user interaction.

The energy analyses performed in the previous section were repeated with the handmade models. The results can be seen in Table 6. They are consistent with the results for the estimated models. The biggest scenarios have practically the same maximum consumption per volume and mean consumption per volume. Moreover, these values are very similar to those obtained for the estimated models, showing the same tendency.

The consumption of the heating system over a year is compared in Figure 22 for Scenario 1, Figure 23 for Scenario 2 and Figure 24 for Scenario 3. The graphs show that the consumption behaves in the same way for the estimated models as for those used as a reference. The shape of the graphs is practically the same, with only slight variations in the consumption peaks. The months of maximum and minimum consumption are the same and their values are similar.

4.2. Comparison of the Proposed Method with Existing Methods

Compared to related work, the proposed system has the advantage of controlling the workflow of the process, which avoids the propagation of errors and makes it accessible to non-specialist users. A general comparison with the related work is difficult because of the variety of the scenarios and the purposes of each system. However, a brief comparison of the advantages and disadvantages of each system is given below, based on the available data.

Ambrus et al. [7] present an efficient and robust fully-automatic method for indoor 2D reconstruction, without prior knowledge of the scanning device poses. This method can also detect and categorize gaps in the structure, like doors. However, 2D reconstruction is not compatible with energy analysis, which is the intended use of the models of the proposed system. Moreover, their system is designed to work with rooms which tend to be convex, whereas the proposed system can work with concave rooms.

Wang et al. [8] present a framework for 3D modelling of indoor point clouds acquired with mobile laser scanners. Their system uses the 2D projection of the point cloud to detect the walls of the building and generate 2D floor plans and 3D watertight building models. Their system was tested against synthetic and real-world scenarios, presenting a maximum average error in the distance between the detected planes and their closest point of 3.528 cm. Their system is also able to reduce the effect of outliers and small structures, like irregular bumps and craters in the 2D floor plan. The performance of the system is doubtful in the case of point clouds with clutter from furniture like large wardrobes. The reason for this doubt is that the method proposed in [9] can easily detect erroneous surfaces as primitives of a wall in crowded rooms. In contrast, the method proposed in this paper allows the user to control each step of the process, allowing for the detection of irregularities that can appear in real-world scenarios.

Macer et al. [9] present a semi-automatic approach for 3D modelling, using indoor point clouds. Their chosen schema for the 3D model is IFC, and the approach can work with different floors. Their approach divides the input point cloud into different sub-spaces, with one point cloud per room, analogously to the proposed method. This strategy simplifies the logic of the 3D reconstruction. Their approach was tested against two different scenarios, resulting in the generation of 3D models with satisfactory accuracy: 4.4 cm is the maximum deviation from the control points. The operation times in [9] are 8 min for scenario 1, with ~10M points, and 19 min for scenario 2, with ~39M points. Thus, the proposed method is slightly faster.

Murali et al. [10] present an automatic system to generate BIM from indoor point clouds which is remarkable for its speed. Their system was tested with five real-world scenarios and was able to generate 3D models in less than one second. Unfortunately, the authors do not specify the number of points of the input point clouds. An error in the detection of doors is also observed in one of the scenarios. In contrast, the system presented in this paper bases the room segmentation on the detection of doors along the trajectory. This particularity shows robustness with satisfactory results, even in cluttered point clouds. Moreover, the developed interface allows the users to detect possible errors, avoiding their propagation.

Ochman et al. [11] present a fully-automatic method for 3D modelling based on the IFC schema. It is probably the most complete and best-performing method in the literature. The runtime for reconstructing the test datasets is in the range of 1 to 10 min, with the largest dataset containing 33,687,751 points. However, the processing of very large datasets may require optimizations to make them computationally feasible. The proposed system, instead, addresses the problem by dividing the point cloud into the different rooms and analyzing each of them separately, avoiding the requirement for high computational capacity.