Wildfire-Detection Method Using DenseNet and CycleGAN Data Augmentation-Based Remote Camera Imagery

Abstract

1. Introduction

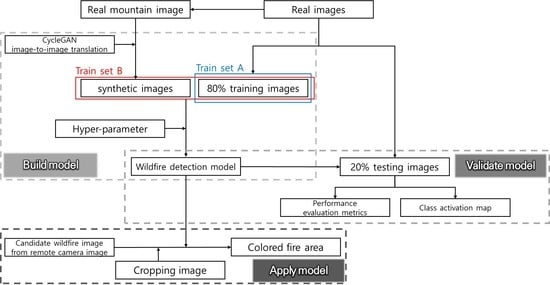

2. Materials and Methods

2.1. Data Collection

2.2. CycleGAN Image-to-Image Translation

2.3. DenseNet

2.4. Performance Evaluation Metrics

3. Experimental Results

3.1. Dataset Augmentation Using GAN

3.2. Wildfire Detection

3.2.1. Dataset Partition

3.2.2. Model Training and Comparison of the Models

3.2.3. Influence of Data Augmentation Methods

3.2.4. Visualization of the Contributed Features

3.3. Model Application

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| | Original Non-Fire Images | Original Wildfire Images | Generated Wildfire Images |
|---|---|---|---|
| Train set A [Real database] | 3165 | 2427 | |
| Train set B [Real + synthetic database] | 6309 | 2427 | 3585 |
| Test set | 545 | 486 | |

| | VGG-16 | ResNet-50 | DenseNet |
|---|---|---|---|
| Batch Size | 60 | 60 | 60 |
| Initial Learning Rate | 0.0002 | 0.0002 | 0.01 |
| Number of Training Epochs | 250 | 250 | 250 |
| Optimizer | Adam | Adam | SGD |
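
The training settings in the table above can be written down as a small machine-readable configuration. The following is a minimal sketch only: the `TrainConfig` class, the `CONFIGS` dictionary, and the `steps_per_epoch` helper are hypothetical names introduced for illustration, and the step count assumes that the last partial mini-batch of each epoch is kept, which the table does not state.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters for one model, as listed in the training-settings table."""
    batch_size: int
    initial_lr: float
    epochs: int
    optimizer: str


# Values copied from the table; the dictionary layout itself is an assumption.
CONFIGS = {
    "VGG-16": TrainConfig(batch_size=60, initial_lr=0.0002, epochs=250, optimizer="Adam"),
    "ResNet-50": TrainConfig(batch_size=60, initial_lr=0.0002, epochs=250, optimizer="Adam"),
    "DenseNet": TrainConfig(batch_size=60, initial_lr=0.01, epochs=250, optimizer="SGD"),
}


def steps_per_epoch(n_images: int, batch_size: int) -> int:
    """Mini-batches per epoch, assuming the final partial batch is not dropped."""
    return math.ceil(n_images / batch_size)


if __name__ == "__main__":
    # Train set A holds 3165 non-fire + 2427 wildfire images (dataset table).
    n_train_a = 3165 + 2427
    for name, cfg in CONFIGS.items():
        steps = steps_per_epoch(n_train_a, cfg.batch_size)
        print(f"{name}: {cfg.optimizer}, lr={cfg.initial_lr}, "
              f"{cfg.epochs} epochs x {steps} steps")
```

Only the initial learning rate and the optimizer differ between DenseNet and the two baseline models; batch size and epoch count are shared.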

| | VGG-16 (Train Set A) | VGG-16 (Train Set B) | ResNet-50 (Train Set A) | ResNet-50 (Train Set B) | Proposed Method (Train Set A) | Proposed Method (Train Set B) |
|---|---|---|---|---|---|---|
| Accuracy (%) | 93.756 | 93.276 | 96.734 | 96.926 | 96.734 | 98.271 |
| Precision (%) | 93.890 | 97.973 | 97.727 | 97.934 | 96.573 | 99.380 |
| Sensitivity (%) | 92.944 | 87.702 | 95.363 | 95.565 | 96.573 | 96.976 |
| Specificity (%) | 94.495 | 98.349 | 97.982 | 98.165 | 96.881 | 99.450 |
| F1-Score | 93.414 | 92.553 | 96.531 | 96.735 | 96.573 | 98.163 |
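
The F1-Score row of the comparison table can be cross-checked against the precision and sensitivity rows, since F1 is conventionally the harmonic mean of the two. The sketch below assumes that standard definition; the function name `f1_score` and the `REPORTED` dictionary are illustrative and not taken from the article. Small differences in the last digit are expected because the table values are already rounded.

```python
def f1_score(precision: float, sensitivity: float) -> float:
    """Harmonic mean of precision and sensitivity (recall), in the units of the inputs."""
    if precision + sensitivity == 0:
        return 0.0
    return 2 * precision * sensitivity / (precision + sensitivity)


# (precision %, sensitivity %, reported F1) copied from the comparison table.
REPORTED = {
    "VGG-16, Train Set A": (93.890, 92.944, 93.414),
    "VGG-16, Train Set B": (97.973, 87.702, 92.553),
    "ResNet-50, Train Set A": (97.727, 95.363, 96.531),
    "ResNet-50, Train Set B": (97.934, 95.565, 96.735),
    "Proposed Method, Train Set A": (96.573, 96.573, 96.573),
    "Proposed Method, Train Set B": (99.380, 96.976, 98.163),
}

if __name__ == "__main__":
    for name, (precision, sensitivity, reported) in REPORTED.items():
        computed = f1_score(precision, sensitivity)
        print(f"{name}: computed {computed:.3f}, reported {reported:.3f}")
```

With Train Set B, VGG-16 gains precision but loses sensitivity, so its F1-Score drops slightly, whereas the proposed method improves on both and its F1-Score rises.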

| Data Augmentation Method | Training Images | F1-Score |
|---|---|---|
| Original + GAN + Horizontal flip + Zoom (200) | 6312 | 98.163 |
| Original + GAN + Rotation (10 and 350) | 6312 | 97.911 |
| Original + GAN + Random brightness (from to ) | 6312 | 97.830 |
| Original + Traditional augmentation (Without GAN) | 6363 | 97.009 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Park, M.; Tran, D.Q.; Jung, D.; Park, S. Wildfire-Detection Method Using DenseNet and CycleGAN Data Augmentation-Based Remote Camera Imagery. Remote Sens. 2020, 12, 3715. https://0-doi-org.brum.beds.ac.uk/10.3390/rs12223715
