A Multi-Scale Superpixel-Guided Filter Feature Extraction and Selection Approach for Classification of Very-High-Resolution Remotely Sensed Imagery

Abstract

1. Introduction

2. Materials and Methods

2.1. VHR Remote Sensing Datasets

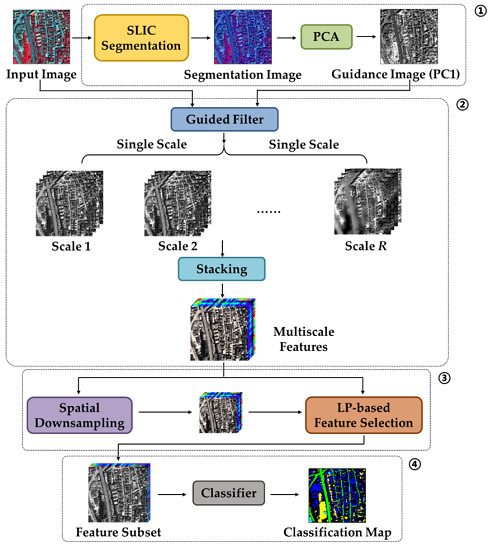

2.2. Proposed Method

2.2.1. Superpixel Segmentation for Building the Guidance Image

2.2.2. Multi-Scale Superpixel-Guided Filter Feature Generation

2.2.3. Unsupervised Feature Selection

- Step 1: Initialize the band subset Φ = {Q1, Q2} by selecting a pair of bands Q1 and Q2.

- Step 2: Find a third band Q3 that is the most dissimilar to the bands already in Φ according to the given criterion. Then, update the selected band subset as Φ = Φ ∪ {Q3}.

- Step 3: Repeat Step 2 until convergence is reached, i.e., until the number of bands in Φ equals the number pre-defined for the experiment.
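The three steps above can be sketched as a greedy loop. This is only an illustrative sketch under stated assumptions, not the exact procedure used in the experiments: the text leaves the dissimilarity criterion open, so the example assumes the absolute Pearson correlation as the similarity measure, and it assumes the initial pair in Step 1 is the least correlated pair. The function names `pearson` and `select_bands` and the toy band values are hypothetical.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / sqrt(sxx * syy)


def select_bands(bands, num_selected):
    """Greedy dissimilarity-based selection; returns the indices of the chosen bands.

    bands: list of bands (features), each a flat list of pixel values.
    num_selected: pre-defined size of the subset (at least 2).
    """
    n = len(bands)
    if not 2 <= num_selected <= n:
        raise ValueError("num_selected must be between 2 and the number of bands")

    # Assumed criterion: similarity = |Pearson correlation| between two bands.
    sim = [[abs(pearson(bands[i], bands[j])) for j in range(n)] for i in range(n)]

    # Step 1 (assumed initialization): start from the least similar pair.
    pairs = ((i, j) for i in range(n) for j in range(i + 1, n))
    q1, q2 = min(pairs, key=lambda p: sim[p[0]][p[1]])
    selected = [q1, q2]

    # Steps 2 and 3: add the band whose worst-case similarity to the current
    # subset is smallest, until the pre-defined subset size is reached.
    while len(selected) < num_selected:
        remaining = [k for k in range(n) if k not in selected]
        best = min(remaining, key=lambda k: max(sim[k][s] for s in selected))
        selected.append(best)
    return selected


# Toy data: band 1 is a scaled copy of band 0, so the two are redundant.
toy_bands = [
    [1, 2, 3, 4, 5, 6],
    [2, 4, 6, 8, 10, 12],
    [1, -1, 1, -1, 1, -1],
    [3, 1, 4, 1, 5, 9],
]
print(select_bands(toy_bands, 2))  # -> [2, 3]
```

In practice each band would be one flattened MSGF feature image, and a different criterion (for example, a linear-prediction error) could replace the correlation without changing the loop structure.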

2.2.4. Classification Relying on the Selected MSGF Feature Subset

3. Experiments

3.1. Parameter Settings

3.2. Performance Evaluation of the Proposed FS-MSGF Approach

3.2.1. Results of the MSGF

3.2.2. Role of the Feature Selection

3.2.3. Component Analysis

3.3. Results Comparison with Different State-of-the-Art Approaches

4. Discussion

5. Conclusions

- Using a superpixel-level guidance image instead of the original pixel-level guidance image significantly improved the classification performance. More accurate boundaries and homogeneous context information of local objects were well preserved in the extracted multi-scale GF features.

- Compared with the single-scale GF feature, the multi-scale stacked features modeled the image objects better spatially across different scales. Note that such features increase the land-cover class discriminability but, at the same time, inevitably increase the feature dimensionality.

- The feature subset produced by the feature selection step maintained the classification performance at a high level while using only a portion of the original features. This effectively reduces the overall computational cost without sacrificing overall accuracy, which is valuable in practical applications.
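To make the first two points concrete, the following minimal sketch builds a superpixel-level guidance signal and stacks guided filter outputs over several radii. It is a simplified illustration under several assumptions, not the implementation evaluated in this paper: it works on a 1-D signal for brevity (the image case uses 2-D windows), it assumes the superpixel labels are already available (for example, from SLIC), it builds the guidance from the same signal that is filtered, and the regularization value `eps` is arbitrary. All function names are hypothetical. The filter equations follow the standard guided filter formulation.

```python
def box_mean(values, radius):
    """Mean over a sliding window of the given radius, clipped at the borders."""
    n = len(values)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out


def superpixel_guidance(values, labels):
    """Replace every sample by the mean of its superpixel (segment)."""
    sums, counts = {}, {}
    for v, lab in zip(values, labels):
        sums[lab] = sums.get(lab, 0.0) + v
        counts[lab] = counts.get(lab, 0) + 1
    return [sums[lab] / counts[lab] for lab in labels]


def guided_filter(guide, src, radius, eps):
    """Standard guided filter: local linear model q = a * guide + b."""
    mean_i = box_mean(guide, radius)
    mean_p = box_mean(src, radius)
    corr_ii = box_mean([g * g for g in guide], radius)
    corr_ip = box_mean([g * p for g, p in zip(guide, src)], radius)
    # Per-window coefficients: a = cov(I, p) / (var(I) + eps), b = mean(p) - a * mean(I).
    a = [(cip - mi * mp) / (cii - mi * mi + eps)
         for cip, cii, mi, mp in zip(corr_ip, corr_ii, mean_i, mean_p)]
    b = [mp - ak * mi for mp, ak, mi in zip(mean_p, a, mean_i)]
    # Average the coefficients of all windows covering each sample.
    mean_a, mean_b = box_mean(a, radius), box_mean(b, radius)
    return [ma * g + mb for ma, g, mb in zip(mean_a, guide, mean_b)]


def multiscale_features(src, labels, radii, eps=1e-3):
    """Stack one guided filter output per radius (one feature per scale)."""
    guide = superpixel_guidance(src, labels)
    return [guided_filter(guide, src, r, eps) for r in radii]


# Toy noisy step signal split into two superpixels.
signal = [1.0, 1.2, 0.9, 1.1, 5.0, 5.2, 4.9, 5.1]
segments = [0, 0, 0, 0, 1, 1, 1, 1]
features = multiscale_features(signal, segments, radii=[1, 2, 3])
print(len(features), len(features[0]))  # -> 3 8
```

Each additional radius adds one feature per input band, which is why the stacked representation grows in dimensionality and motivates the subsequent feature selection.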

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Zh1: Class | Zh1: Training | Zh1: Test | Zh2: Class | Zh2: Training | Zh2: Test |
|---|---|---|---|---|---|
| Roads | 866 | 85,685 | Roads | 774 | 154,012 |
| Buildings | 1542 | 152,622 | Buildings | 753 | 149,874 |
| Grass | 72 | 6391 | Grass | 555 | 128,570 |
| Trees | 810 | 80,961 | Trees | 646 | 110,426 |
| Bare Soil | 53 | 10,566 | | | |
| Water | 45 | 8995 | | | |
| Pools | 30 | 6022 | | | |

| Category | Classification Approach | Overall Accuracy (OA) (%) | Kappa Coefficient (Kappa) | Time Cost (s) |
|---|---|---|---|---|
| Baseline | Original Bands | 85.69 | 0.7809 | 15.82 |
| | SLICRaw (when S = 15) | 88.13 | 0.8187 | 8.24 |
| Single Scale | PGF (with r = 2) | 86.72 | 0.7961 | 15.08 |
| | SGF (with r = 7) | 87.75 | 0.8126 | 12.29 |
| | GFProb (with r = 14) | 90.46 | 0.8529 | 17.72 |
| Multiple Scales | MPGF (with r = [1,30]) | 91.49 | 0.8687 | 189.45 |
| | EMPs (with r = [1,30]) | 92.25 | 0.8802 | 192.10 |
| | Proposed MSGF (with r = [1,30]) | 93.24 | 0.8960 | 160.59 |
| | Proposed FS-MSGF (with r = [1,30] and F = 40) | 93.38 | 0.8979 | 74.58 |

| Category | Classification Approach | Overall Accuracy (OA) (%) | Kappa Coefficient (Kappa) | Time Cost (s) |
|---|---|---|---|---|
| Baseline | Original Bands | 83.21 | 0.7812 | 25.67 |
| | SLICRaw (when S = 15) | 87.08 | 0.8317 | 16.13 |
| Single Scale | PGF (with r = 4) | 86.03 | 0.8180 | 23.20 |
| | SGF (with r = 5) | 87.28 | 0.8342 | 20.00 |
| | GFProb (with r = 6) | 87.58 | 0.8378 | 28.62 |
| Multiple Scales | MPGF (with r = [1,25]) | 88.28 | 0.8472 | 176.11 |
| | EMPs (with r = [1,25]) | 88.20 | 0.8460 | 262.78 |
| | Proposed MSGF (with r = [1,25]) | 89.14 | 0.8584 | 160.11 |
| | Proposed FS-MSGF (with r = [1,25] and F = 30) | 89.18 | 0.8589 | 72.34 |
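The OA and Kappa columns in the tables are standard confusion-matrix statistics. As a reminder of how they are derived, the sketch below computes both from a small confusion matrix. The matrix values are toy numbers chosen for illustration and are not taken from the experiments; the function name `overall_accuracy_and_kappa` is hypothetical.

```python
def overall_accuracy_and_kappa(confusion):
    """Return (OA, Kappa) for a square confusion matrix.

    confusion[i][j] = number of test samples of reference class i
    that were assigned to class j.
    """
    total = sum(sum(row) for row in confusion)
    if total == 0:
        raise ValueError("confusion matrix is empty")

    # Observed agreement: fraction of samples on the diagonal (this is OA).
    observed = sum(confusion[i][i] for i in range(len(confusion))) / total

    # Chance agreement: sum over classes of (row total * column total) / total^2.
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(col) for col in zip(*confusion)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2

    if expected == 1.0:
        raise ValueError("Kappa is undefined when chance agreement equals 1")
    kappa = (observed - expected) / (1.0 - expected)
    return observed, kappa


# Toy two-class example (not from the paper).
toy_confusion = [
    [40, 10],
    [5, 45],
]
oa, kappa = overall_accuracy_and_kappa(toy_confusion)
print(f"OA = {oa * 100:.2f}%, Kappa = {kappa:.4f}")  # -> OA = 85.00%, Kappa = 0.7000
```

Kappa discounts the agreement expected by chance, which is why it is reported alongside OA when class sizes are unbalanced.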

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, S.; Hu, Q.; Tong, X.; Xia, J.; Du, Q.; Samat, A.; Ma, X. A Multi-Scale Superpixel-Guided Filter Feature Extraction and Selection Approach for Classification of Very-High-Resolution Remotely Sensed Imagery. Remote Sens. 2020, 12, 862. https://0-doi-org.brum.beds.ac.uk/10.3390/rs12050862
