Forestry Remote Sensing from Unmanned Aerial Vehicles: A Review Focusing on the Data, Processing and Potentialities

Abstract

1. Introduction

2. Three-Dimensional Data Acquisition Using Unmanned Aerial Vehicles

2.1. LiDAR Sensors

2.2. Photogrammetric Processing of UAV-Based Imagery

2.3. Data Processing, Vegetation Segmentation and Classification

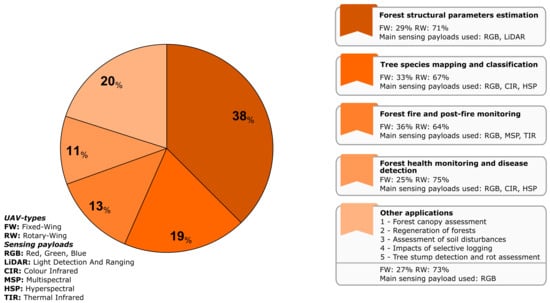

3. Applications of UAVs for Forestry Purposes

3.1. Forest Structural Parameters Estimation

3.1.1. Stand-Level Studies

3.1.2. Tree-Level Studies

3.2. Tree Species Mapping and Classification

3.3. Forest Fire and Post-Fire Monitoring

3.4. Forest Health Monitoring and Disease Detection

3.5. Other Applications

4. Discussion

4.1. Forest Structural Parameters Estimation

4.2. Tree Species Mapping and Classification

4.3. Forest Fire and Post-Fire Monitoring

4.4. Forest Health Monitoring and Disease Detection

4.5. Other Applications

4.6. Data Acquisition and Processing Optimization and Comparison between LiDAR and Photogrammetry

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Acronym | Expansion | Acronym | Expansion |
| --- | --- | --- | --- |
| ABA | Area-Based Approach | kNN | k-Nearest Neighbours |
| AGB | Above Ground Biomass | LAD | Leaf Angle Distribution |
| ALS | Airborne Laser Scanning | LAI | Leaf Area Index |
| BA | Basal Area | LiDAR | Light Detection And Ranging |
| CART | Classification And Regression Tree | MaxL | Maximum Likelihood |
| CD | Crown Diameter | ML | Machine Learning |
| CDC | Canonical Discriminant Classifier | MLP | Multi-Layer Perceptron |
| CFS | Correlation-based Feature Selection | MSI | Moisture Stress Index |
| CHM | Canopy Height Model | MSP | Multispectral |
| CIR | Colour Infrared | NB | Naive Bayes |
| CNN | Convolutional Neural Network | NDRE | Normalized Difference Red Edge |
| DBH | Diameter at Breast Height | NDVI | Normalized Difference Vegetation Index |
| DN | Digital Number | NIR | Near-Infrared |
| DSM | Digital Surface Model | OBIA | Object Based Image Analysis |
| DT | Decision Trees | RF | Random Forest |
| DTM | Digital Terrain Model | RS | Remote Sensing |
| FPI | Fabry-Pérot Interferometer | SfM | Structure from Motion |
| GIS | Geographical Information Systems | Sn | Stem Number |
| GNSS | Global Navigation Satellite Systems | Sv | Stem Volume |
| H | Height | SVM | Support Vector Machine |
| Hdom | Dominant Height | TIN | Triangulated Irregular Networks |
| HIC | Hierarchical Image Classification | TIR | Thermal Infrared |
| HL | Lorey’s Mean Height | UAS | Unmanned Aircraft System |
| HM | Maximum Height | UAV | Unmanned Aerial Vehicle |
| HSP | Hyperspectral | V | Volume |
| IDW | Inverse Distance Weighting | VSW | Variable-Sized Window |
| INS | Inertial Navigation System | VTOL | Vertical Take-Off and Landing |
| ITC | Individual Tree Crown | WD | Watershed Delineation |

References

- Joseph, G. Fundamentals of Remote Sensing; Orient Blackswan Pvt. Ltd.: Hyderabad, Telangana, India, 2005; ISBN 978-81-7371-535-8. [Google Scholar]

- Roy, P.S.; Behera, M.D.; Srivastav, S.K. Satellite Remote Sensing: Sensors, Applications and Techniques. Proc. Natl. Acad. Sci. USA India Sect. A Phys. Sci. 2017, 87, 465–472. [Google Scholar] [CrossRef] [Green Version]

- Emery, W.; Camps, A. Introduction to Satellite Remote Sensing: Atmosphere, Ocean, Land and Cryosphere Applications; Elsevier: Amsterdam, The Netherlands, 2017; ISBN 978-0-12-809259-0. [Google Scholar]

- Barrett, F.; McRoberts, R.E.; Tomppo, E.; Cienciala, E.; Waser, L.T. A questionnaire-based review of the operational use of remotely sensed data by national forest inventories. Remote Sens. Environ. 2016, 174, 279–289. [Google Scholar] [CrossRef]

- Goodbody, T.R.H.; Coops, N.C.; White, J.C. Digital Aerial Photogrammetry for Updating Area-Based Forest Inventories: A Review of Opportunities, Challenges, and Future Directions. Curr. For. Rep. 2019, 5, 55–75. [Google Scholar] [CrossRef] [Green Version]

- Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97. [Google Scholar] [CrossRef] [Green Version]

- Chianucci, F.; Disperati, L.; Guzzi, D.; Bianchini, D.; Nardino, V.; Lastri, C.; Rindinella, A.; Corona, P. Estimation of canopy attributes in beech forests using true colour digital images from a small fixed-wing UAV. Int. J. Appl. Earth Obs. Geoinf. 2016, 47, 60–68. [Google Scholar] [CrossRef] [Green Version]

- Gupta, S.G.; Ghonge, M.M.; Jawandhiya, D.P.M. Review of Unmanned Aircraft System (UAS). Int. J. Adv. Res. Comput. Eng. Technol. 2013, 2, 14. [Google Scholar] [CrossRef]

- Santamaria, E.; Barrado, C.; Pastor, E.; Royo, P.; Salami, E. Reconfigurable automated behavior for UAS applications. Aerosp. Sci. Technol. 2012, 23, 372–386. [Google Scholar] [CrossRef]

- Pádua, L.; Vanko, J.; Hruška, J.; Adão, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391. [Google Scholar] [CrossRef]

- Toth, C.; Jóźków, G. Remote sensing platforms and sensors: A survey. ISPRS J. Photogramm. Remote Sens. 2016, 115, 22–36. [Google Scholar] [CrossRef]

- Anderson, K.; Gaston, K.J. Lightweight unmanned aerial vehicles will revolutionize spatial ecology. Front. Ecol. Environ. 2013, 11, 138–146. [Google Scholar] [CrossRef] [Green Version]

- Wallace, L.O.; Lucieer, A.; Turner, D.; Watson, C.S. Error assessment and mitigation for hyper-temporal UAV-borne LiDAR surveys of forest inventory. In Proceedings of the Silvilaser, Hobart, Australia, 16–19 October 2011. [Google Scholar]

- Wargo, C.A.; Church, G.C.; Glaneueski, J.; Strout, M. Unmanned Aircraft Systems (UAS) research and future analysis. In Proceedings of the 2014 IEEE Aerospace Conference, Big Sky, MT, USA, 1–8 March 2014; pp. 1–16. [Google Scholar]

- Shakhatreh, H.; Sawalmeh, A.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles: A Survey on Civil Applications and Key Research Challenges. arXiv 2018, arXiv:1805.00881. [Google Scholar] [CrossRef]

- Dunford, R.; Michel, K.; Gagnage, M.; Piégay, H. Potential and constraints of Unmanned Aerial Vehicle technology for the characterization of Mediterranean riparian forest. Int. J. Remote Sens. 2009, 30, 4915–4935. [Google Scholar] [CrossRef]

- Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 2017, 38, 2427–2447. [Google Scholar] [CrossRef]

- Koh, L.P.; Wich, S.A. Dawn of Drone Ecology: Low-Cost Autonomous Aerial Vehicles for Conservation. Trop. Conserv. Sci. 2012, 5, 121–132. [Google Scholar] [CrossRef] [Green Version]

- Zellweger, F.; Braunisch, V.; Baltensweiler, A.; Bollmann, K. Remotely sensed forest structural complexity predicts multi species occurrence at the landscape scale. For. Ecol. Manag. 2013, 307, 303–312. [Google Scholar] [CrossRef]

- Hill, A.; Breschan, J.; Mandallaz, D. Accuracy Assessment of Timber Volume Maps Using Forest Inventory Data and LiDAR Canopy Height Models. Forests 2014, 5, 2253–2275. [Google Scholar] [CrossRef] [Green Version]

- McElhinny, C.; Gibbons, P.; Brack, C.; Bauhus, J. Forest and woodland stand structural complexity: Its definition and measurement. For. Ecol. Manag. 2005, 218, 1–24. [Google Scholar] [CrossRef]

- Wallace, L.; Lucieer, A.; Watson, C.; Turner, D. Development of a UAV-LiDAR System with Application to Forest Inventory. Remote Sens. 2012, 4, 1519–1543. [Google Scholar] [CrossRef] [Green Version]

- Zheng, G.; Moskal, L.M. Retrieving Leaf Area Index (LAI) Using Remote Sensing: Theories, Methods and Sensors. Sensors 2009, 9, 2719–2745. [Google Scholar] [CrossRef] [Green Version]

- Turner, W.; Spector, S.; Gardiner, N.; Fladeland, M.; Sterling, E.; Steininger, M. Remote sensing for biodiversity science and conservation. Trends Ecol. Evol. 2003, 18, 306–314. [Google Scholar] [CrossRef]

- Mondello, C.; Hepner, G.; Williamson, R.A. 10-Year Industry Forecast: Phases I-III - Study Documentation. Photogramm. Eng. Remote Sens. 2004, 70, 5–58. [Google Scholar]

- Andersen, H.-E.; Mcgaughey, R.J.; Reutebuch, S.E. Assessing the influence of flight parameters, interferometric processing, slope and canopy density on the accuracy of X-band IFSAR-derived forest canopy height models. Int. J. Remote Sens. 2008, 29, 1495–1510. [Google Scholar] [CrossRef] [Green Version]

- Bergen, K.M.; Goetz, S.J.; Dubayah, R.O.; Henebry, G.M.; Hunsaker, C.T.; Imhoff, M.L.; Nelson, R.F.; Parker, G.G.; Radeloff, V.C. Remote sensing of vegetation 3-D structure for biodiversity and habitat: Review and implications for lidar and radar spaceborne missions. J. Geophys. Res. Biogeosciences 2009, 114. [Google Scholar] [CrossRef] [Green Version]

- Pilarska, M.; Ostrowski, W.; Bakuła, K.; Górski, K.; Kurczyński, Z. The potential of light laser scanners developed for unmanned aerial vehicles—The review and accuracy. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 42, 87–95. [Google Scholar] [CrossRef] [Green Version]

- Vazirabad, Y.F.; Karslioglu, M.O. Lidar for Biomass Estimation. In Biomass Detection, Production and Usage; InTech: Rijeka, Croatia, 2011. [Google Scholar]

- Gordon, S.; Lichti, D.; Franke, J.; Stewart, M. Measurement of Structural Deformation using Terrestrial Laser Scanners. In Proceedings of the 1st FIG International Symposium on Engineering Surveys for Construction Works and Structural Engineering, Nottingham, UK, 28 June–1 July 2004; p. 16. [Google Scholar]

- Almeida, D.R.A.; Stark, S.C.; Chazdon, R.; Nelson, B.W.; Cesar, R.G.; Meli, P.; Gorgens, E.B.; Duarte, M.M.; Valbuena, R.; Moreno, V.S.; et al. The effectiveness of lidar remote sensing for monitoring forest cover attributes and landscape restoration. For. Ecol. Manag. 2019, 438, 34–43. [Google Scholar] [CrossRef]

- Baltsavias, E.P. A comparison between photogrammetry and laser scanning. ISPRS J. Photogramm. Remote Sens. 1999, 54, 83–94. [Google Scholar] [CrossRef]

- Habib, A.; Ghanma, M.; Tait, M. Integration of LIDAR and photogrammetry for close range applications. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2004, 35, 1045–1050. [Google Scholar]

- James, M.R.; Robson, S. Straightforward reconstruction of 3D surfaces and topography with a camera: Accuracy and geoscience application. J. Geophys. Res. Earth Surf. 2012, 117. [Google Scholar] [CrossRef] [Green Version]

- Snavely, N.; Seitz, S.M.; Szeliski, R. Modeling the World from Internet Photo Collections. Int. J. Comput. Vis. 2008, 80, 189–210. [Google Scholar] [CrossRef] [Green Version]

- Fritz, A.; Kattenborn, T.; Koch, B. UAV-based photogrammetric point clouds—Tree stem mapping in open stands in comparison to terrestrial laser scanner point clouds. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 40, 141–146. [Google Scholar] [CrossRef] [Green Version]

- Puliti, S.; Ørka, H.O.; Gobakken, T.; Næsset, E. Inventory of Small Forest Areas Using an Unmanned Aerial System. Remote Sens. 2015, 7, 9632–9654. [Google Scholar] [CrossRef] [Green Version]

- Rupnik, E.; Daakir, M.; Pierrot Deseilligny, M. MicMac—A free, open-source solution for photogrammetry. Open Geospat. Data Softw. Stand. 2017, 2, 14. [Google Scholar] [CrossRef]

- Dakota, B.; Fitzsimmons, S.; Toffanin, P. Open Drone Map. 2017. Available online: https://www.opendronemap.org/ (accessed on 10 October 2019).

- Hyyppa, J.; Kelle, O.; Lehikoinen, M.; Inkinen, M. A segmentation-based method to retrieve stem volume estimates from 3-D tree height models produced by laser scanners. IEEE Trans. Geosci. Remote Sens. 2001, 39, 969–975. [Google Scholar] [CrossRef]

- Wulder, M.A.; Hall, R.J.; Coops, N.C.; Franklin, S.E. High Spatial Resolution Remotely Sensed Data for Ecosystem Characterization. BioScience 2004, 54, 511–521. [Google Scholar] [CrossRef] [Green Version]

- Hyde, P.; Dubayah, R.; Walker, W.; Blair, J.B.; Hofton, M.; Hunsaker, C. Mapping forest structure for wildlife habitat analysis using multi-sensor (LiDAR, SAR/InSAR, ETM+, Quickbird) synergy. Remote Sens. Environ. 2006, 102, 63–73. [Google Scholar] [CrossRef]

- Van Leeuwen, M.; Nieuwenhuis, M. Retrieval of forest structural parameters using LiDAR remote sensing. Eur. J. For. Res. 2010, 129, 749–770. [Google Scholar] [CrossRef]

- Delaunay, B. Sur la sphere vide. Izv. Akad. Nauk SssrOtd. Mat. I Estestv. Nauk 1934, 7, 1–2. [Google Scholar]

- Shepard, D. A Two-dimensional Interpolation Function for Irregularly-spaced Data. In Proceedings of the 1968 23rd ACM National Conference, Las Vegas, NV, USA, 27–29 August 1968; ACM: New York, NY, USA, 1968; pp. 517–524. [Google Scholar]

- Fowler, R.J.; Little, J.J. Automatic Extraction of Irregular Network Digital Terrain Models. In Proceedings of the 6th Annual Conference on Computer Graphics and Interactive Techniques, Chicago, IL, USA, 8–10 August 1979; ACM: New York, NY, USA, 1979; pp. 199–207. [Google Scholar]

- Popescu, S.C.; Wynne, R.H.; Nelson, R.F. Measuring individual tree crown diameter with lidar and assessing its influence on estimating forest volume and biomass. Can. J. Remote Sens. 2003, 29, 564–577. [Google Scholar] [CrossRef]

- Nex, F.; Remondino, F. UAV for 3D mapping applications: A review. Appl. Geomat. 2014, 6, 1–15. [Google Scholar] [CrossRef]

- Fernández-Álvarez, M.; Armesto, J.; Picos, J. LiDAR-Based Wildfire Prevention in WUI: The Automatic Detection, Measurement and Evaluation of Forest Fuels. Forests 2019, 10, 148. [Google Scholar] [CrossRef] [Green Version]

- Mei, C.; Durrieu, S. Tree crown delineation from digital elevation models and high resolution imagery. Proc. Int. Arch. Photogramm. Remote Sens 2004, 36, 3–6. [Google Scholar]

- Ke, Y.; Quackenbush, L.J. Comparison of individual tree crown detection and delineation methods. In Proceedings of the 2008 ASPRS Annual Conference (American Society of Photogrammetry and Remote Sensing, Bethesda, Maryland), Portland, OR, USA, 28 April–2 May 2008; p. 11. [Google Scholar]

- Kaartinen, H.; Hyyppä, J.; Yu, X.; Vastaranta, M.; Hyyppä, H.; Kukko, A.; Holopainen, M.; Heipke, C.; Hirschmugl, M.; Morsdorf, F.; et al. An International Comparison of Individual Tree Detection and Extraction Using Airborne Laser Scanning. Remote Sens. 2012, 4, 950–974. [Google Scholar] [CrossRef] [Green Version]

- Vauhkonen, J.; Ene, L.; Gupta, S.; Heinzel, J.; Holmgren, J.; Pitkänen, J.; Solberg, S.; Wang, Y.; Weinacker, H.; Hauglin, K.M.; et al. Comparative testing of single-tree detection algorithms under different types of forest. Forestry 2012, 85, 27–40. [Google Scholar] [CrossRef] [Green Version]

- Ayrey, E.; Fraver, S.; Jr, J.A.K.; Kenefic, L.S.; Hayes, D.; Weiskittel, A.R.; Roth, B.E. Layer Stacking: A Novel Algorithm for Individual Forest Tree Segmentation from LiDAR Point Clouds. Can. J. Remote Sens. 2017, 43, 16–27. [Google Scholar] [CrossRef]

- Popescu, S.C.; Wynne, R.H.; Nelson, R.F. Estimating plot-level tree heights with lidar: Local filtering with a canopy-height based variable window size. Comput. Electron. Agric. 2002, 37, 71–95. [Google Scholar] [CrossRef]

- Duncanson, L.I.; Cook, B.D.; Hurtt, G.C.; Dubayah, R.O. An efficient, multi-layered crown delineation algorithm for mapping individual tree structure across multiple ecosystems. Remote Sens. Environ. 2014, 154, 378–386. [Google Scholar] [CrossRef]

- Chen, Q.; Baldocchi, D.; Gong, P.; Kelly, M. Isolating Individual Trees in a Savanna Woodland Using Small Footprint Lidar Data. Photogramm. Eng. Remote Sens. 2006, 72, 923–932. [Google Scholar] [CrossRef] [Green Version]

- Li, W.; Guo, Q.; Jakubowski, M.K.; Kelly, M. A new method for segmenting individual trees from the lidar point cloud. Photogramm. Eng. Remote Sens. 2012, 78, 75–84. [Google Scholar] [CrossRef] [Green Version]

- Nguyen, A.; Le, B. 3D point cloud segmentation: A survey. In Proceedings of the 2013 6th IEEE Conference on Robotics, Automation and Mechatronics (RAM), Manila, Philippines, 12–15 November 2013; pp. 225–230. [Google Scholar]

- Rouse, J.W., Jr.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in The Great Plains with ERTS. In Proceedings of the Third Earth Resources Technology Satellite-1 Symposium, Washington, DC, USA, 10–14 December 1973; NASA: Greenbelt, MD, USA, 1974; Volume 1, pp. 309–317. [Google Scholar]

- Salamí, E.; Barrado, C.; Pastor, E. UAV Flight Experiments Applied to the Remote Sensing of Vegetated Areas. Remote Sens. 2014, 6, 11051–11081. [Google Scholar] [CrossRef] [Green Version]

- Castilla, G.; Hay, G.J. Image objects and geographic objects. In Object-Based Image Analysis: Spatial Concepts for Knowledge-Driven Remote Sensing Applications; ; Lecture Notes in Geoinformation and Cartography; Blaschke, T., Lang, S., Hay, G.J., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 91–110. ISBN 978-3-540-77058-9. [Google Scholar]

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16. [Google Scholar] [CrossRef] [Green Version]

- Lang, S. Object-based image analysis for remote sensing applications: Modeling reality—Dealing with complexity. In Object-Based Image Analysis: Spatial Concepts for Knowledge-Driven Remote Sensing Applications; Lecture Notes in Geoinformation and Cartography; Blaschke, T., Lang, S., Hay, G.J., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 3–27. ISBN 978-3-540-77058-9. [Google Scholar]

- Gini, R.; Passoni, D.; Pinto, L.; Sona, G. Use of Unmanned Aerial Systems for multispectral survey and tree classification: A test in a park area of northern Italy. Eur. J. Remote Sens. 2014, 47, 251–269. [Google Scholar] [CrossRef]

- Lisein, J.; Michez, A.; Claessens, H.; Lejeune, P. Discrimination of Deciduous Tree Species from Time Series of Unmanned Aerial System Imagery. PLoS ONE 2015, 10, e0141006. [Google Scholar] [CrossRef] [PubMed]

- Michez, A.; Piégay, H.; Lisein, J.; Claessens, H.; Lejeune, P. Classification of riparian forest species and health condition using multi-temporal and hyperspatial imagery from unmanned aerial system. Environ. Monit. Assess. 2016, 188, 146. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Zarco-Tejada, P.J.; González-Dugo, V.; Berni, J.A.J. Fluorescence, temperature and narrow-band indices acquired from a UAV platform for water stress detection using a micro-hyperspectral imager and a thermal camera. Remote Sens. Environ. 2012, 117, 322–337. [Google Scholar] [CrossRef]

- Lucieer, A.; Malenovský, Z.; Veness, T.; Wallace, L. HyperUAS—Imaging Spectroscopy from a Multirotor Unmanned Aircraft System. J. Field Robot. 2014, 31, 571–590. [Google Scholar] [CrossRef] [Green Version]

- Suomalainen, J.; Anders, N.; Iqbal, S.; Roerink, G.; Franke, J.; Wenting, P.; Hünniger, D.; Bartholomeus, H.; Becker, R.; Kooistra, L. A Lightweight Hyperspectral Mapping System and Photogrammetric Processing Chain for Unmanned Aerial Vehicles. Remote Sens. 2014, 6, 11013–11030. [Google Scholar] [CrossRef] [Green Version]

- Nevalainen, O.; Honkavaara, E.; Tuominen, S.; Viljanen, N.; Hakala, T.; Yu, X.; Hyyppä, J.; Saari, H.; Pölönen, I.; Imai, N.N.; et al. Individual Tree Detection and Classification with UAV-Based Photogrammetric Point Clouds and Hyperspectral Imaging. Remote Sens. 2017, 9, 185. [Google Scholar] [CrossRef] [Green Version]

- Singh, A.; Ganapathysubramanian, B.; Singh, A.K.; Sarkar, S. Machine Learning for High-Throughput Stress Phenotyping in Plants. Trends Plant Sci. 2016, 21, 110–124. [Google Scholar] [CrossRef] [Green Version]

- Li, M.; Im, J.; Beier, C. Machine learning approaches for forest classification and change analysis using multi-temporal Landsat TM images over Huntington Wildlife Forest. GIScience Remote Sens. 2013, 50, 361–384. [Google Scholar] [CrossRef]

- Shang, X.; Chisholm, L.A. Classification of Australian Native Forest Species Using Hyperspectral Remote Sensing and Machine-Learning Classification Algorithms. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2481–2489. [Google Scholar] [CrossRef]

- Tang, L.; Shao, G. Drone remote sensing for forestry research and practices. J. For. Res. 2015, 26, 791–797. [Google Scholar] [CrossRef]

- Banu, T.P.; Borlea, G.F.; Banu, C. The Use of Drones in Forestry. J. Environ. Sci. Eng. B 2016, 5, 557–562. [Google Scholar]

- Ambrosia, V.G.; Wegener, S.S.; Sullivan, D.V.; Buechel, S.W.; Dunagan, S.E.; Brass, J.A.; Stoneburner, J.; Schoenung, S.M. Demonstrating UAV-Acquired Real-Time Thermal Data over Fires. Photogramm. Eng. Remote Sens. 2003, 69, 391–402. [Google Scholar] [CrossRef]

- Panagiotidis, D.; Abdollahnejad, A.; Surový, P.; Chiteculo, V. Determining tree height and crown diameter from high-resolution UAV imagery. Int. J. Remote Sens. 2017, 38, 2392–2410. [Google Scholar] [CrossRef]

- Pádua, L.; Marques, P.; Adáo, T.; Hruška, J.; Peres, E.; Morais, R.; Sousa, A.; Sousa, J.J. UAS-based Imagery and Photogrammetric Processing for Tree Height and Crown Diameter Extraction. In Proceedings of the International Conference on Geoinformatics and Data Analysis, Prague, Czech Republic, 20–22 April 2018; ACM: New York, NY, USA, 2018; pp. 87–91. [Google Scholar]

- Köhl, M. New Approaches for Multi Resource Forest Inventories. In Advances in Forest Inventory for Sustainable Forest Management and Biodiversity Monitoring; Forestry Sciences; Corona, P., Köhl, M., Marchetti, M., Eds.; Springer: Dordrecht, The Netherlands, 2003; pp. 1–16. ISBN 978-94-017-0649-0. [Google Scholar]

- Bergseng, E.; Ørka, H.O.; Næsset, E.; Gobakken, T. Assessing forest inventory information obtained from different inventory approaches and remote sensing data sources. Ann. For. Sci. 2015, 72, 33–45. [Google Scholar] [CrossRef] [Green Version]

- Yu, X.; Hyyppä, J.; Holopainen, M.; Vastaranta, M. Comparison of Area-Based and Individual Tree-Based Methods for Predicting Plot-Level Forest Attributes. Remote Sens. 2010, 2, 1481–1495. [Google Scholar] [CrossRef] [Green Version]

- Breidenbach, J.; Astrup, R. The Semi-Individual Tree Crown Approach. In Forestry Applications of Airborne Laser Scanning: Concepts and Case Studies; Managing Forest Ecosystems; Maltamo, M., Næsset, E., Vauhkonen, J., Eds.; Springer: Dordrecht, The Netherlands, 2014; pp. 113–133. ISBN 978-94-017-8663-8. [Google Scholar]

- Cao, L.; Liu, H.; Fu, X.; Zhang, Z.; Shen, X.; Ruan, H. Comparison of UAV LiDAR and Digital Aerial Photogrammetry Point Clouds for Estimating Forest Structural Attributes in Subtropical Planted Forests. Forests 2019, 10, 145. [Google Scholar] [CrossRef] [Green Version]

- Ota, T.; Ogawa, M.; Mizoue, N.; Fukumoto, K.; Yoshida, S. Forest Structure Estimation from a UAV-Based Photogrammetric Point Cloud in Managed Temperate Coniferous Forests. Forests 2017, 8, 343. [Google Scholar] [CrossRef]

- Guo, Q.; Su, Y.; Hu, T.; Zhao, X.; Wu, F.; Li, Y.; Liu, J.; Chen, L.; Xu, G.; Lin, G.; et al. An integrated UAV-borne lidar system for 3D habitat mapping in three forest ecosystems across China. Int. J. Remote Sens. 2017, 38, 2954–2972. [Google Scholar] [CrossRef]

- Gobakken, T.; Næsset, E. Estimation of diameter and basal area distributions in coniferous forest by means of airborne laser scanner data. Scand. J. For. Res. 2004, 19, 529–542. [Google Scholar] [CrossRef]

- Giannetti, F.; Chirici, G.; Gobakken, T.; Næsset, E.; Travaglini, D.; Puliti, S. A new approach with DTM-independent metrics for forest growing stock prediction using UAV photogrammetric data. Remote Sens. Environ. 2018, 213, 195–205. [Google Scholar] [CrossRef]

- Chen, S.; McDermid, G.J.; Castilla, G.; Linke, J. Measuring Vegetation Height in Linear Disturbances in the Boreal Forest with UAV Photogrammetry. Remote Sens. 2017, 9, 1257. [Google Scholar] [CrossRef] [Green Version]

- Goodbody, T.R.H.; Coops, N.C.; Tompalski, P.; Crawford, P.; Day, K.J.K. Updating residual stem volume estimates using ALS- and UAV-acquired stereo-photogrammetric point clouds. Int. J. Remote Sens. 2017, 38, 2938–2953. [Google Scholar] [CrossRef]

- Alonzo, M.; Andersen, H.-E.; Morton, D.C.; Cook, B.D. Quantifying Boreal Forest Structure and Composition Using UAV Structure from Motion. Forests 2018, 9, 119. [Google Scholar] [CrossRef] [Green Version]

- Jayathunga, S.; Owari, T.; Tsuyuki, S. The use of fixed–wing UAV photogrammetry with LiDAR DTM to estimate merchantable volume and carbon stock in living biomass over a mixed conifer–broadleaf forest. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 767–777. [Google Scholar] [CrossRef]

- Ni, W.; Liu, J.; Zhang, Z.; Sun, G.; Yang, A. Evaluation of UAV-based forest inventory system compared with LiDAR data. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 3874–3877. [Google Scholar]

- Guerra-Hernández, J.; Gonzalez-Ferreiro, E.; Sarmento, A.; Silva, J.; Nunes, A.; Correia, A.C.; Fontes, L.; Tomé, M.; Diaz-Varela, R. Using high resolution UAV imagery to estimate tree variables in Pinus pinea plantation in Portugal. For. Syst. 2016, 25, 9. [Google Scholar] [CrossRef] [Green Version]

- Guerra-Hernández, J.; González-Ferreiro, E.; Monleón, V.J.; Faias, S.P.; Tomé, M.; Díaz-Varela, R.A. Use of Multi-Temporal UAV-Derived Imagery for Estimating Individual Tree Growth in Pinus pinea Stands. Forests 2017, 8, 300. [Google Scholar] [CrossRef]

- Lin, J.; Wang, M.; Ma, M.; Lin, Y. Aboveground Tree Biomass Estimation of Sparse Subalpine Coniferous Forest with UAV Oblique Photography. Remote Sens. 2018, 10, 1849. [Google Scholar] [CrossRef] [Green Version]

- Guerra-Hernández, J.; Cosenza, D.N.; Rodriguez, L.C.E.; Silva, M.; Tomé, M.; Díaz-Varela, R.A.; González-Ferreiro, E. Comparison of ALS- and UAV(SfM)-derived high-density point clouds for individual tree detection in Eucalyptus plantations. Int. J. Remote Sens. 2018, 39, 5211–5235. [Google Scholar] [CrossRef]

- Jaakkola, A.; Hyyppä, J.; Yu, X.; Kukko, A.; Kaartinen, H.; Liang, X.; Hyyppä, H.; Wang, Y. Autonomous Collection of Forest Field Reference—The Outlook and a First Step with UAV Laser Scanning. Remote Sens. 2017, 9, 785. [Google Scholar] [CrossRef] [Green Version]

- Yin, D.; Wang, L. Individual mangrove tree measurement using UAV-based LiDAR data: Possibilities and challenges. Remote Sens. Environ. 2019, 223, 34–49. [Google Scholar] [CrossRef]

- Sankey, T.; Donager, J.; McVay, J.; Sankey, J.B. UAV lidar and hyperspectral fusion for forest monitoring in the southwestern USA. Remote Sens. Environ. 2017, 195, 30–43. [Google Scholar] [CrossRef]

- Wallace, L.; Lucieer, A.; Malenovský, Z.; Turner, D.; Vopěnka, P. Assessment of Forest Structure Using Two UAV Techniques: A Comparison of Airborne Laser Scanning and Structure from Motion (SfM) Point Clouds. Forests 2016, 7, 62. [Google Scholar] [CrossRef] [Green Version]

- Carr, J.C.; Slyder, J.B. Individual tree segmentation from a leaf-off photogrammetric point cloud. Int. J. Remote Sens. 2018, 39, 5195–5210. [Google Scholar] [CrossRef]

- Iizuka, K.; Yonehara, T.; Itoh, M.; Kosugi, Y. Estimating Tree Height and Diameter at Breast Height (DBH) from Digital Surface Models and Orthophotos Obtained with an Unmanned Aerial System for a Japanese Cypress (Chamaecyparis obtusa) Forest. Remote Sens. 2018, 10, 13. [Google Scholar] [CrossRef] [Green Version]

- Chisholm, R.A.; Cui, J.; Lum, S.K.Y.; Chen, B.M. UAV LiDAR for below-canopy forest surveys. J. Unmanned Veh. Syst. 2013, 1, 61–68. [Google Scholar] [CrossRef] [Green Version]

- Abdollahnejad, A.; Panagiotidis, D.; Surový, P. Estimation and Extrapolation of Tree Parameters Using Spectral Correlation between UAV and Pléiades Data. Forests 2018, 9, 85. [Google Scholar] [CrossRef] [Green Version]

- Otero, V.; Van De Kerchove, R.; Satyanarayana, B.; Martínez-Espinosa, C.; Fisol, M.A.B.; Ibrahim, M.R.B.; Sulong, I.; Mohd-Lokman, H.; Lucas, R.; Dahdouh-Guebas, F. Managing mangrove forests from the sky: Forest inventory using field data and Unmanned Aerial Vehicle (UAV) imagery in the Matang Mangrove Forest Reserve, peninsular Malaysia. For. Ecol. Manag. 2018, 411, 35–45. [Google Scholar] [CrossRef]

- Surový, P.; Ribeiro, N.A.; Panagiotidis, D. Estimation of positions and heights from UAV-sensed imagery in tree plantations in agrosilvopastoral systems. Int. J. Remote Sens. 2018, 39, 4786–4800. [Google Scholar] [CrossRef]

- Dandois, J.P.; Olano, M.; Ellis, E.C. Optimal Altitude, Overlap, and Weather Conditions for Computer Vision UAV Estimates of Forest Structure. Remote Sens. 2015, 7, 13895–13920. [Google Scholar] [CrossRef] [Green Version]

- Pádua, L.; Guimarães, N.; Adão, T.; Marques, P. Classification of an Agrosilvopastoral System Using RGB Imagery from an Unmanned Aerial Vehicle. In Proceedings of the EPIA Conference on Artificial Intelligence. EPIA 2019, Vila Real, Portugal, 3–6 September 2019; Moura Oliveira, P., Novais, P., Reis, L., Eds.; Springer: Cham, Switzerland, 2019. [Google Scholar]

- Goodbody, T.R.H.; Coops, N.C.; Hermosilla, T.; Tompalski, P.; Crawford, P. Assessing the status of forest regeneration using digital aerial photogrammetry and unmanned aerial systems. Int. J. Remote Sens. 2018, 39, 5246–5264. [Google Scholar] [CrossRef]

- Röder, M.; Latifi, H.; Hill, S.; Wild, J.; Svoboda, M.; Brůna, J.; Macek, M.; Nováková, M.H.; Gülch, E.; Heurich, M. Application of optical unmanned aerial vehicle-based imagery for the inventory of natural regeneration and standing deadwood in post-disturbed spruce forests. Int. J. Remote Sens. 2018, 39, 5288–5309. [Google Scholar] [CrossRef]

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150. [Google Scholar] [CrossRef] [Green Version]

- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87. [Google Scholar] [CrossRef] [Green Version]

- Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially Located Platform and Aerial Photography for Documentation of Grazing Impacts on Wheat. Geocarto Int. 2001, 16, 65–70.

- De Sá, N.C.; Castro, P.; Carvalho, S.; Marchante, E.; López-Núñez, F.A.; Marchante, H. Mapping the Flowering of an Invasive Plant Using Unmanned Aerial Vehicles: Is There Potential for Biocontrol Monitoring? Front. Plant Sci. 2018, 9, 293.

- Franklin, S.E.; Ahmed, O.S. Deciduous tree species classification using object-based analysis and machine learning with unmanned aerial vehicle multispectral data. Int. J. Remote Sens. 2018, 39, 5236–5245.

- Melville, B.; Lucieer, A.; Aryal, J. Classification of Lowland Native Grassland Communities Using Hyperspectral Unmanned Aircraft System (UAS) Imagery in the Tasmanian Midlands. Drones 2019, 3, 5.

- Cao, J.; Leng, W.; Liu, K.; Liu, L.; He, Z.; Zhu, Y. Object-Based Mangrove Species Classification Using Unmanned Aerial Vehicle Hyperspectral Images and Digital Surface Models. Remote Sens. 2018, 10, 89.

- Gini, R.; Passoni, D.; Pinto, L.; Sona, G. Aerial images from an UAV system: 3D modeling and tree species classification in a park area. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 39, 361–366.

- Sankey, T.T.; McVay, J.; Swetnam, T.L.; McClaran, M.P.; Heilman, P.; Nichols, M. UAV hyperspectral and lidar data and their fusion for arid and semi-arid land vegetation monitoring. Remote Sens. Ecol. Conserv. 2018, 4, 20–33.

- Laliberte, A.S.; Herrick, J.E.; Rango, A.; Winters, C. Acquisition, orthorectification, and object-based classification of unmanned aerial vehicle (UAV) imagery for rangeland monitoring. Photogramm. Eng. Remote Sens. 2010, 76, 661–672.

- Morales, G.; Kemper, G.; Sevillano, G.; Arteaga, D.; Ortega, I.; Telles, J. Automatic Segmentation of Mauritia flexuosa in Unmanned Aerial Vehicle (UAV) Imagery Using Deep Learning. Forests 2018, 9, 736.

- Fraser, B.T.; Congalton, R.G. Evaluating the Effectiveness of Unmanned Aerial Systems (UAS) for Collecting Thematic Map Accuracy Assessment Reference Data in New England Forests. Forests 2019, 10, 24.

- Brovkina, O.; Cienciala, E.; Surový, P.; Janata, P. Unmanned aerial vehicles (UAV) for assessment of qualitative classification of Norway spruce in temperate forest stands. Geo-Spat. Inf. Sci. 2018, 21, 12–20.

- Li, L.; Chen, J.; Mu, X.; Li, W.; Yan, G.; Xie, D.; Zhang, W. Quantifying Understory and Overstory Vegetation Cover Using UAV-Based RGB Imagery in Forest Plantation. Remote Sens. 2020, 12, 298.

- Fromm, M.; Schubert, M.; Castilla, G.; Linke, J.; McDermid, G. Automated Detection of Conifer Seedlings in Drone Imagery Using Convolutional Neural Networks. Remote Sens. 2019, 11, 2585.

- Imangholiloo, M.; Saarinen, N.; Markelin, L.; Rosnell, T.; Näsi, R.; Hakala, T.; Honkavaara, E.; Holopainen, M.; Hyyppä, J.; Vastaranta, M. Characterizing Seedling Stands Using Leaf-Off and Leaf-On Photogrammetric Point Clouds and Hyperspectral Imagery Acquired from Unmanned Aerial Vehicle. Forests 2019, 10, 415.

- Shin, J.; Seo, W.; Kim, T.; Park, J.; Woo, C. Using UAV Multispectral Images for Classification of Forest Burn Severity—A Case Study of the 2019 Gangneung Forest Fire. Forests 2019, 10, 1025.

- Martínez-de Dios, J.R.; Merino, L.; Caballero, F.; Ollero, A. Automatic forest-fire measuring using ground stations and Unmanned Aerial Systems. Sensors 2011, 11, 6328–6353.

- Merino, L.; Caballero, F.; Martínez-de-Dios, J.R.; Maza, I.; Ollero, A. An Unmanned Aircraft System for Automatic Forest Fire Monitoring and Measurement. J. Intell. Robot. Syst. 2012, 65, 533–548.

- Hristov, G.; Raychev, J.; Kinaneva, D.; Zahariev, P. Emerging Methods for Early Detection of Forest Fires Using Unmanned Aerial Vehicles and LoRaWAN Sensor Networks. In Proceedings of the 2018 28th EAEEIE Annual Conference (EAEEIE), Reykjavik, Iceland, 26–28 September 2018; pp. 1–9.

- McKenna, P.; Erskine, P.D.; Lechner, A.M.; Phinn, S. Measuring fire severity using UAV imagery in semi-arid central Queensland, Australia. Int. J. Remote Sens. 2017, 38, 4244–4264.

- Aicardi, I.; Garbarino, M.; Lingua, A.; Lingua, E.; Marzano, R.; Piras, M. Monitoring Post-Fire Forest Recovery Using Multitemporal Digital Surface Models Generated from Different Platforms. EARSeL eProceedings 2016, 15, 1–8.

- White, R.A.; Bomber, M.; Hupy, J.P.; Shortridge, A. UAS-GEOBIA Approach to Sapling Identification in Jack Pine Barrens after Fire. Drones 2018, 2, 40.

- Larrinaga, A.R.; Brotons, L. Greenness Indices from a Low-Cost UAV Imagery as Tools for Monitoring Post-Fire Forest Recovery. Drones 2019, 3, 6.

- Fernández-Guisuraga, J.M.; Sanz-Ablanedo, E.; Suárez-Seoane, S.; Calvo, L. Using Unmanned Aerial Vehicles in Postfire Vegetation Survey Campaigns through Large and Heterogeneous Areas: Opportunities and Challenges. Sensors 2018, 18, 586.

- Mayr, M.J.; Malß, S.; Ofner, E.; Samimi, C. Disturbance feedbacks on the height of woody vegetation in a savannah: A multi-plot assessment using an unmanned aerial vehicle (UAV). Int. J. Remote Sens. 2018, 39, 4761–4785.

- Shin, P.; Sankey, T.; Moore, M.M.; Thode, A.E. Evaluating Unmanned Aerial Vehicle Images for Estimating Forest Canopy Fuels in a Ponderosa Pine Stand. Remote Sens. 2018, 10, 1266.

- Dash, J.; Pearse, G.; Watt, M. UAV Multispectral Imagery Can Complement Satellite Data for Monitoring Forest Health. Remote Sens. 2018, 10, 1216.

- Näsi, R.; Honkavaara, E.; Lyytikäinen-Saarenmaa, P.; Blomqvist, M.; Litkey, P.; Hakala, T.; Viljanen, N.; Kantola, T.; Tanhuanpää, T.; Holopainen, M. Using UAV-Based Photogrammetry and Hyperspectral Imaging for Mapping Bark Beetle Damage at Tree-Level. Remote Sens. 2015, 7, 15467–15493.

- Minařík, R.; Langhammer, J. Use of a multispectral UAV photogrammetry for detection and tracking of forest disturbance dynamics. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41.

- Barnes, E.; Clarke, T.; Richards, S.; Colaizzi, P.; Haberland, J.; Kostrzewski, M.; Waller, P.; Choi, C.; Riley, E.; Thompson, T. Coincident detection of crop water stress, nitrogen status and canopy density using ground based multispectral data. In Proceedings of the Fifth International Conference on Precision Agriculture, Bloomington, MN, USA, 16–19 July 2000; Volume 1619.

- Gitelson, A.A.; Chivkunova, O.B.; Merzlyak, M.N. Nondestructive estimation of anthocyanins and chlorophylls in anthocyanic leaves. Am. J. Bot. 2009, 96, 1861–1868.

- Ju, C.-H.; Tian, Y.-C.; Yao, X.; Cao, W.-X.; Zhu, Y.; Hannaway, D. Estimating Leaf Chlorophyll Content Using Red Edge Parameters. Pedosphere 2010, 20, 633–644.

- Cardil, A.; Vepakomma, U.; Brotons, L. Assessing Pine Processionary Moth Defoliation Using Unmanned Aerial Systems. Forests 2017, 8, 402.

- Otsu, K.; Pla, M.; Vayreda, J.; Brotons, L. Calibrating the Severity of Forest Defoliation by Pine Processionary Moth with Landsat and UAV Imagery. Sensors 2018, 18, 3278.

- Lehmann, J.R.K.; Nieberding, F.; Prinz, T.; Knoth, C. Analysis of Unmanned Aerial System-Based CIR Images in Forestry—A New Perspective to Monitor Pest Infestation Levels. Forests 2015, 6, 594–612.

- Smigaj, M.; Gaulton, R.; Barr, S.L.; Suárez, J.C. UAV-borne thermal imaging for forest health monitoring: Detection of disease-induced canopy temperature increase. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 349–354.

- Näsi, R.; Honkavaara, E.; Blomqvist, M.; Lyytikäinen-Saarenmaa, P.; Hakala, T.; Viljanen, N.; Kantola, T.; Holopainen, M. Remote sensing of bark beetle damage in urban forests at individual tree level using a novel hyperspectral camera from UAV and aircraft. Urban For. Urban Green. 2018, 30, 72–83.

- Otsu, K.; Pla, M.; Brotons, L. Estimating the Severity of Defoliation Due to Pine Processionary Moth Using a Combination of Landsat and UAV Imagery. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 3979–3982.

- Wen, A.; Zheng, J.; Chen, M.; Mu, C.; Tao, M. Spatial distribution of rodent pests in desert forest based on UAV remote sensing. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 1804–1807.

- Getzin, S.; Wiegand, K.; Schöning, I. Assessing biodiversity in forests using very high-resolution images and unmanned aerial vehicles. Methods Ecol. Evol. 2012, 3, 397–404.

- Getzin, S.; Nuske, R.S.; Wiegand, K. Using Unmanned Aerial Vehicles (UAV) to Quantify Spatial Gap Patterns in Forests. Remote Sens. 2014, 6, 6988–7004.

- Bagaram, M.B.; Giuliarelli, D.; Chirici, G.; Giannetti, F.; Barbati, A. UAV Remote Sensing for Biodiversity Monitoring: Are Forest Canopy Gaps Good Covariates? Remote Sens. 2018, 10, 1397.

- Tian, J.; Wang, L.; Li, X.; Gong, H.; Shi, C.; Zhong, R.; Liu, X. Comparison of UAV and WorldView-2 imagery for mapping leaf area index of mangrove forest. Int. J. Appl. Earth Obs. Geoinf. 2017, 61, 22–31.

- McNeil, B.E.; Pisek, J.; Lepisk, H.; Flamenco, E.A. Measuring leaf angle distribution in broadleaf canopies using UAVs. Agric. For. Meteorol. 2016, 218–219, 204–208.

- Feduck, C.; McDermid, G.J.; Castilla, G. Detection of Coniferous Seedlings in UAV Imagery. Forests 2018, 9, 432.

- Puliti, S.; Solberg, S.; Granhus, A. Use of UAV Photogrammetric Data for Estimation of Biophysical Properties in Forest Stands Under Regeneration. Remote Sens. 2019, 11, 233.

- Talbot, B.; Rahlf, J.; Astrup, R. An operational UAV-based approach for stand-level assessment of soil disturbance after forest harvesting. Scand. J. For. Res. 2018, 33, 387–396.

- Pierzchała, M.; Talbot, B.; Astrup, R. Estimating Soil Displacement from Timber Extraction Trails in Steep Terrain: Application of an Unmanned Aircraft for 3D Modelling. Forests 2014, 5, 1212–1223.

- Nevalainen, P.; Salmivaara, A.; Ala-Ilomäki, J.; Launiainen, S.; Hiedanpää, J.; Finér, L.; Pahikkala, T.; Heikkonen, J. Estimating the Rut Depth by UAV Photogrammetry. Remote Sens. 2017, 9, 1279.

- Ota, T.; Ahmed, O.S.; Minn, S.T.; Khai, T.C.; Mizoue, N.; Yoshida, S. Estimating selective logging impacts on aboveground biomass in tropical forests using digital aerial photography obtained before and after a logging event from an unmanned aerial vehicle. For. Ecol. Manag. 2019, 433, 162–169.

- Puliti, S.; Talbot, B.; Astrup, R. Tree-Stump Detection, Segmentation, Classification, and Measurement Using Unmanned Aerial Vehicle (UAV) Imagery. Forests 2018, 9, 102.

- Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J.J. Hyperspectral Imaging: A Review on UAV-Based Sensors, Data Processing and Applications for Agriculture and Forestry. Remote Sens. 2017, 9, 1110.

- Floris, A.; Clementel, F.; Colle, G.; Gubert, F.; Bertoldi, L.; De Lorenzi, G. Stima di volumi legnosi forestali con dati fotogrammetrici telerilevati da UAV su piccole superfici: Un caso di studio in Trentino. In Proceedings of the Atti della “16a Conferenza Nazionale ASITA”, Vicenza, Italy, 6–9 November 2012; pp. 681–688.

- Körting, T.S.; Garcia Fonseca, L.M.; Câmara, G. GeoDMA—Geographic Data Mining Analyst. Comput. Geosci. 2013, 57, 133–145.

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

- Ambrosia, V.G.; Wegener, S.; Zajkowski, T.; Sullivan, D.V.; Buechel, S.; Enomoto, F.; Lobitz, B.; Johan, S.; Brass, J.; Hinkley, E. The Ikhana unmanned airborne system (UAS) western states fire imaging missions: From concept to reality (2006–2010). Geocarto Int. 2011, 26, 85–101.

- Lisein, J.; Pierrot-Deseilligny, M.; Bonnet, S.; Lejeune, P. A Photogrammetric Workflow for the Creation of a Forest Canopy Height Model from Small Unmanned Aerial System Imagery. Forests 2013, 4, 922–944.

- Thiel, C.; Schmullius, C. Comparison of UAV photograph-based and airborne lidar-based point clouds over forest from a forestry application perspective. Int. J. Remote Sens. 2017, 38, 2411–2426.

- Puliti, S.; Ene, L.T.; Gobakken, T.; Næsset, E. Use of partial-coverage UAV data in sampling for large scale forest inventories. Remote Sens. Environ. 2017, 194, 115–126.

| Studies | UAV Type | Sensor Type | Results (R²) | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| FW | RW | RGB | LiDAR | CIR | HL | HM | Hdom | Sn | BA | Sv | DBH | V | AGB | |

| Puliti et al. [37] | ● | ● | 0.71 | 0.97 | 0.60 | 0.60 | 0.85 | |||||||

| Giannetti et al. [88] | ● | ● | ● | 0.80–0.83 | ||||||||||

| Chen et al. [89] | ● | ● | 0.91 | |||||||||||

| Cao et al. [84] | ● | ● | 0.90 | 0.56 | 0.64 | 0.69 | 0.78 | 0.68 | ||||||

| Cao et al. [84] | ● | ● | 0.82 | 0.5 | 0.61 | 0.50 | 0.70 | 0.63 | ||||||

| Ota et al. [85] | ● | ● | 0.93 | 0.93 | 0.91 | 0.75 | ||||||||

| Alonzo et al. [91] | ● | ● | 0.79 | 0.92 | ||||||||||

| Jayathunga et al. [92] | ● | ● | 0.84 | |||||||||||

| Guo et al. [86] | ● | ● | 0.81 | 0.84 | ||||||||||

| Goodbody et al. [90] | ● | ● | 0.93 | |||||||||||

| Studies | UAV Type | Sensor Type | Results (R²; *r) | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| FW | RW | RGB | LiDAR | MSP | HSP | H | CD | DBH | Sv | AGB | |

| Ni et al. [93] | ● | ● | 0.87 | ||||||||

| Wallace et al. [101] | ● | ● | 0.84 | ||||||||

| Wallace et al. [101] | ● | ● | 0.68 | ||||||||

| Guerra-Hernández et al. [97] | ● | ● | 0.61–0.69* | ||||||||

| Chen et al. [89] | ● | ● | 0.76* | ||||||||

| Carr and Slyder [102] | ● | ● | 0.82* | ||||||||

| Surový et al. [107] | ● | ● | |||||||||

| Dandois et al. [108] | ● | ● | 0.86 | ||||||||

| Chisholm et al. [104] | ● | ● | 0.45 | ||||||||

| Sankey et al. [100] | ● | ● | ● | ● | ● | 0.90 | 0.72 | ||||

| Guerra-Hernández et al. [94] | ● | ● | 0.81 | 0.95 | |||||||

| Lin et al. [96] | ● | ● | 0.92 | 0.96 | |||||||

| Panagiotidis et al. [78] | ● | ● | 0.75–0.72 | 0.63–0.85 | |||||||

| Yin and Wang [99] | ● | ● | > 0.9 | 0.83–0.85 | |||||||

| Otero et al. [106] | ● | ● | 0.60 | 0.75 | |||||||

| Guerra-Hernández et al. [95] | ● | ● | 0.96 | 0.79 | 0.86–0.87 | ||||||

| Abdollahnejad et al. [105] | ● | ● | 0.87 | 0.78 | 0.71 | ||||||

| Jaakkola et al. [98] | ● | ● | 0.92* | 0.88* | 0.88* | 0.89* | |||||

| Studies | UAV Type | Sensors | Classification Method | |||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| FW | RW | RGB | LiDAR | CIR | MSP | HSP | k-NN | DT | NB | MLP | RF | ISO | MaxL | SVM | HIC | CNN | CDC | |

| Fraser and Congalton [123] | ● | ● | ||||||||||||||||

| Brovkina et al. [124] | ● | ● | ● | ● | ||||||||||||||

| Sankey et al. [120] | ● | ● | ● | ● | ||||||||||||||

| Nevalainen et al. [71] | ● | ● | ● | ● | ● | ● | ● | ● | ||||||||||

| Melville et al. [117] | ● | ● | ● | ● | ● | |||||||||||||

| Michez et al. [67] | ● | ● | ● | ● | ||||||||||||||

| Gini et al. [65,119] | ● | ● | ● | ● | ● | |||||||||||||

| Cao et al. [118] | ● | ● | ● | ● | ||||||||||||||

| Laliberte et al. [121] | ● | ● | ● | |||||||||||||||

| Morales et al. [122] | ● | ● | ● | |||||||||||||||

| Goodbody et al. [110] | ● | ● | ● | |||||||||||||||

| Franklin and Ahmed [116] | ● | ● | ● | |||||||||||||||

| Röder et al. [111] | ● | ● | ● | |||||||||||||||

| de Sá et al. [115] | ● | ● | ● | ● | ||||||||||||||

| Alonzo et al. [91] | ● | ● | ● | |||||||||||||||

| Jayathunga et al. [92] | ● | ● | ● | |||||||||||||||

| Li et al. [125] | ● | ● | ● | |||||||||||||||

| Fromm et al. [126] | ● | ● | ● | |||||||||||||||

| Imangholiloo et al. [127] | ● | ● | ● | ● | ||||||||||||||

| Studies | UAV Type | Sensor Type | Outcomes Used | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| FW | RW | RGB | LiDAR | MSP | TIR | PC | OM | CHM | DSM | VI | |

| Fernández-Álvarez et al. [49] | ● | ● | ● | ||||||||

| Shin et al. [138] | ● | ● | ● | ● | |||||||

| Martínez-de Dios et al. [129]; Merino et al. [130] | ● | ● | ● | ||||||||

| Hristov et al. [131] | ● | ● | ● | ● | |||||||

| McKenna et al. [132] | ● | ● | ● | ● | |||||||

| Aicardi et al. [133] | ● | ● | ● | ● | |||||||

| White et al. [134] | ● | ● | ● | ||||||||

| Larrinaga and Brotons [135] | ● | ● | ● | ● | |||||||

| Fernández-Guisuraga et al. [136] | ● | ● | ● | ● | |||||||

| Mayr et al. [137] | ● | ● | ● | ||||||||

| Studies | UAV Type | Sensor Type | |||||

|---|---|---|---|---|---|---|---|

| FW | RW | RGB | CIR | MSP | HSP | TIR | |

| Lehmann et al. [147] | ● | ● | ● | ||||

| Näsi et al. [140,149] | ● | ● | |||||

| Smigaj et al. [148] | ● | ● | ● | ● | |||

| Cardil et al.; Otsu et al. [145,146,150] | ● | ● | |||||

| Wen et al. [151] | ● | ● | |||||

| Minařík and Langhammer [141] | ● | ● | |||||

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Guimarães, N.; Pádua, L.; Marques, P.; Silva, N.; Peres, E.; Sousa, J.J. Forestry Remote Sensing from Unmanned Aerial Vehicles: A Review Focusing on the Data, Processing and Potentialities. Remote Sens. 2020, 12, 1046. https://0-doi-org.brum.beds.ac.uk/10.3390/rs12061046
