HTD-Net: A Deep Convolutional Neural Network for Target Detection in Hyperspectral Imagery

Abstract

1. Introduction

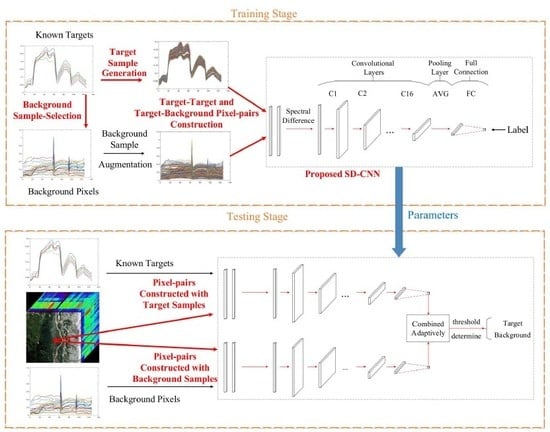

2. Proposed Target Detection Framework

Algorithm 1: The Proposed HTD-Net

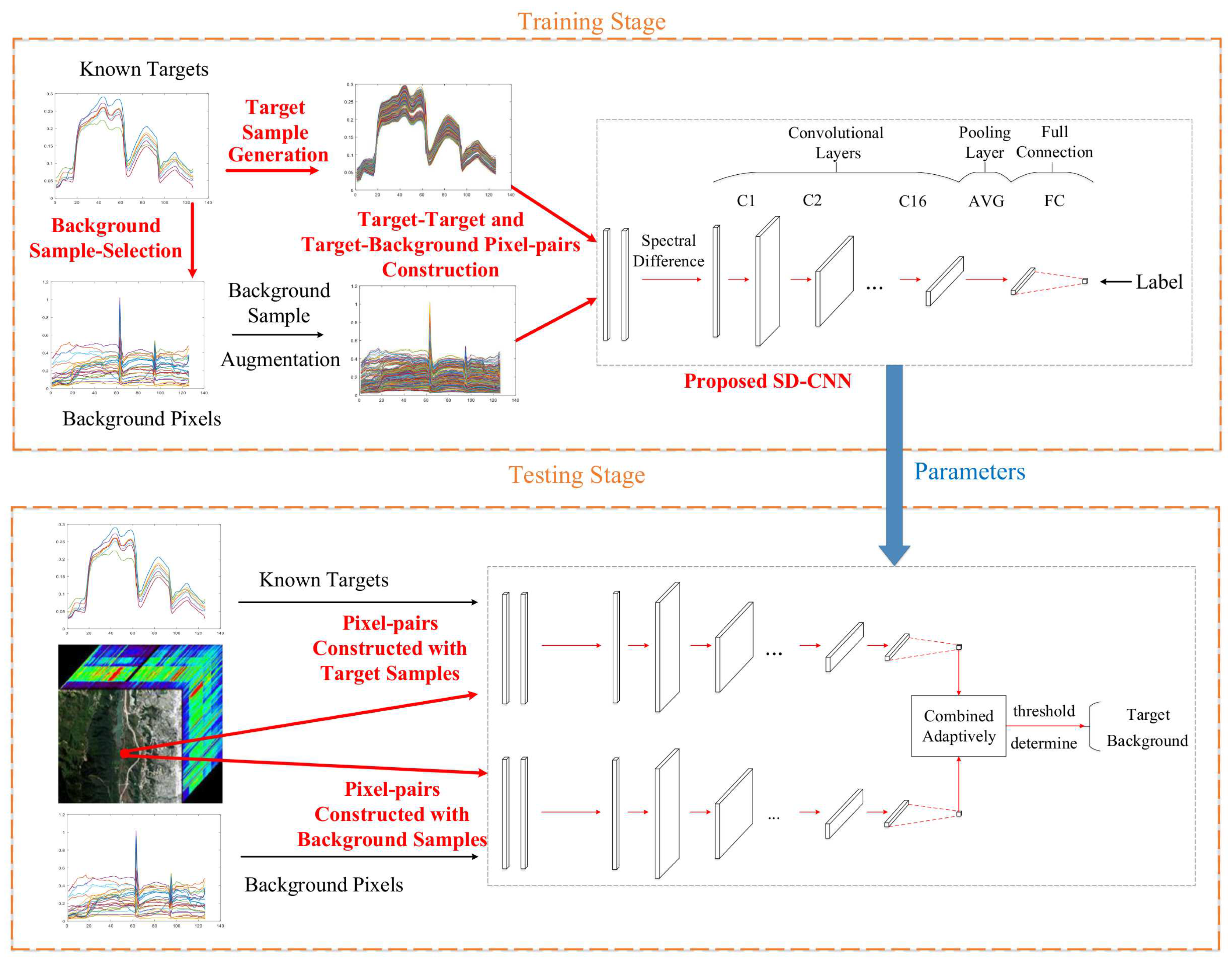

2.1. Generation of Target Samples

2.2. LP-Based Background Sample Selection
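Background samples are selected with a linear-prediction (LP) criterion. The sketch below is minimal and rests on an assumption: that LP works here as it does in similarity-based band selection, applied to pixels rather than bands. Starting from a seed pixel, each step adds the pixel that is worst predicted (largest least-squares error) from the pixels already chosen, so the selected set spreads over dissimilar background spectra. The function name `lp_select`, the seed choice, and the Euclidean error norm are assumptions. None of them come from this section.

```python
import numpy as np
from typing import List


def lp_select(pixels: np.ndarray, k: int, first: int = 0) -> List[int]:
    """Greedy linear-prediction (LP) selection of k mutually dissimilar pixels.

    pixels: (N, L) array of N candidate spectra with L bands.
    first:  index of the seed pixel (hypothetical choice; any rule could seed it).
    """
    pixels = np.asarray(pixels, dtype=float)
    n, n_bands = pixels.shape
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and the number of pixels")
    selected = [first]
    while len(selected) < k:
        # Predict every pixel as a linear combination of the selected spectra plus a constant.
        basis = np.column_stack([pixels[selected].T, np.ones(n_bands)])  # (L, m + 1)
        coef, *_ = np.linalg.lstsq(basis, pixels.T, rcond=None)          # (m + 1, N)
        error = np.linalg.norm(pixels.T - basis @ coef, axis=0)          # (N,)
        error[selected] = -np.inf  # never pick the same pixel twice
        # The worst-predicted pixel is the least similar to those already selected.
        selected.append(int(np.argmax(error)))
    return selected
```

Because of the constant column, a pixel that is only a scaled or offset copy of an already selected spectrum has near-zero prediction error and is skipped. Selection therefore favors spectrally distinct background pixels over bright or dark variants of the same material.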

2.3. Construction of Training Pixel-Pairs

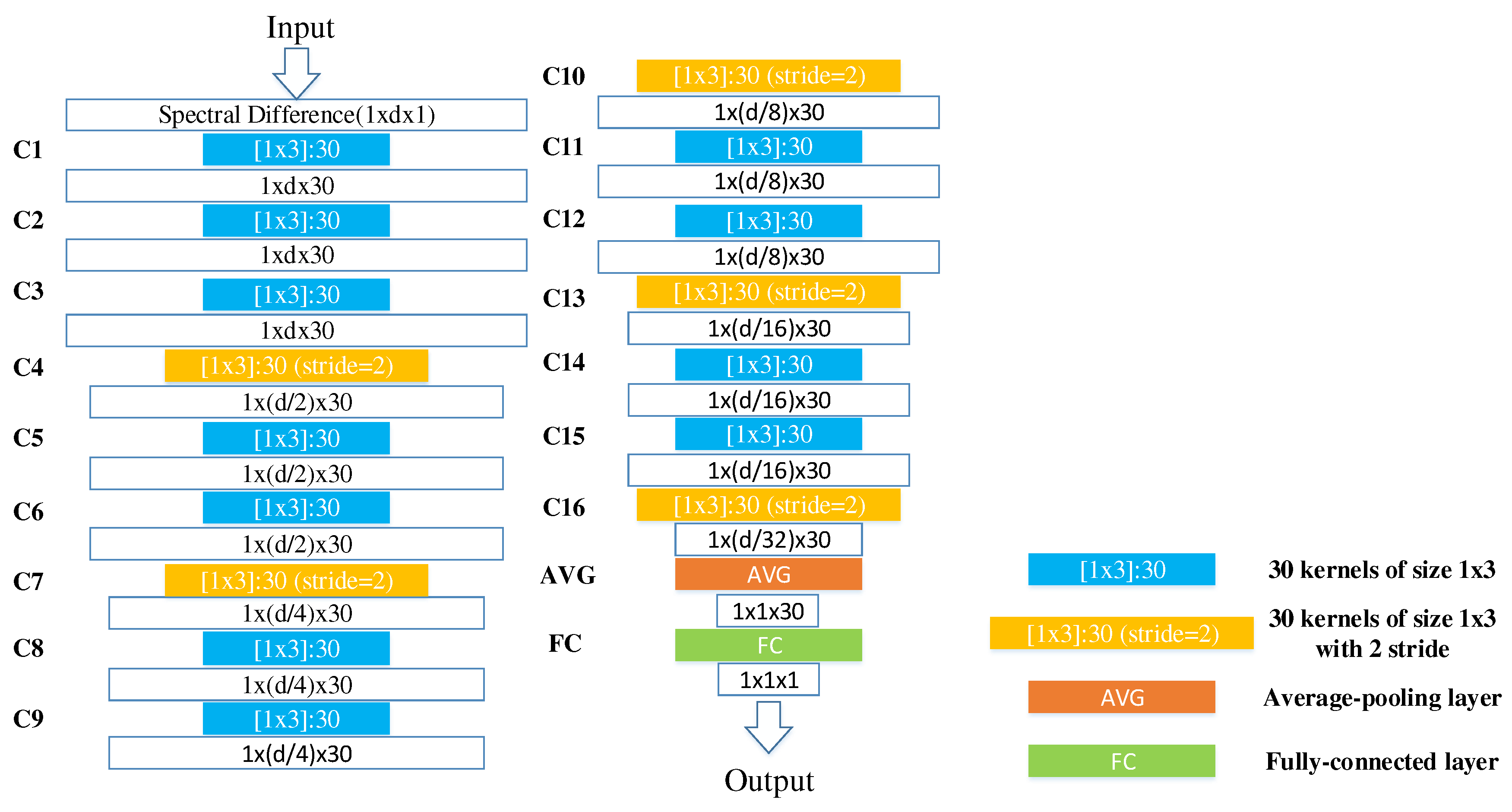
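Training pixel-pairs are built from target and background samples for the similarity-discrimination CNN. The sketch below is minimal and relies on these assumptions:

- Each pair is represented by the spectral difference of its two pixels.
- Target/target pairs are labeled 1 ("similar") and target/background pairs 0 ("dissimilar").
- The far more numerous target/background pairs are randomly subsampled to the size of the target/target class.

The difference operator, the label convention, the balancing step, and the name `build_pixel_pairs` are all illustrative choices. None of them are stated in this section.

```python
import numpy as np


def build_pixel_pairs(targets, backgrounds, balance=True, seed=0):
    """Return (X, y) for pair-based training.

    targets:     (Nt, L) target spectra.
    backgrounds: (Nb, L) selected background spectra.
    X: one row per pair, the difference of the two spectra (assumed representation).
    y: 1 for target/target pairs, 0 for target/background pairs (assumed convention).
    """
    targets = np.asarray(targets, dtype=float)
    backgrounds = np.asarray(backgrounds, dtype=float)
    # All unordered target/target pairs (i < j).
    i, j = np.triu_indices(len(targets), k=1)
    similar = targets[i] - targets[j]
    # Every target paired with every background pixel.
    ti, bj = np.meshgrid(np.arange(len(targets)), np.arange(len(backgrounds)), indexing="ij")
    dissimilar = targets[ti.ravel()] - backgrounds[bj.ravel()]
    # Optionally subsample the larger dissimilar class to counter class imbalance.
    if balance and len(dissimilar) > len(similar) > 0:
        rng = np.random.default_rng(seed)
        keep = rng.choice(len(dissimilar), size=len(similar), replace=False)
        dissimilar = dissimilar[keep]
    X = np.vstack([similar, dissimilar])
    y = np.concatenate([np.ones(len(similar), dtype=int), np.zeros(len(dissimilar), dtype=int)])
    return X, y
```

With Nt targets and Nb background samples, there are Nt(Nt - 1)/2 similar pairs and Nt·Nb dissimilar pairs. Pairing grows the training set quadratically from a small number of target samples. It also makes the two classes strongly imbalanced, which is why the sketch includes an optional balancing step.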

2.4. Similarity-Discrimination CNN

2.5. Combined Target and Background Similarity Scores

3. Analysis of the Proposed Method

3.1. Comparison with Representation-Based Detectors

3.2. Comparison with CNN-Based Anomaly Detection

4. Experimental Results

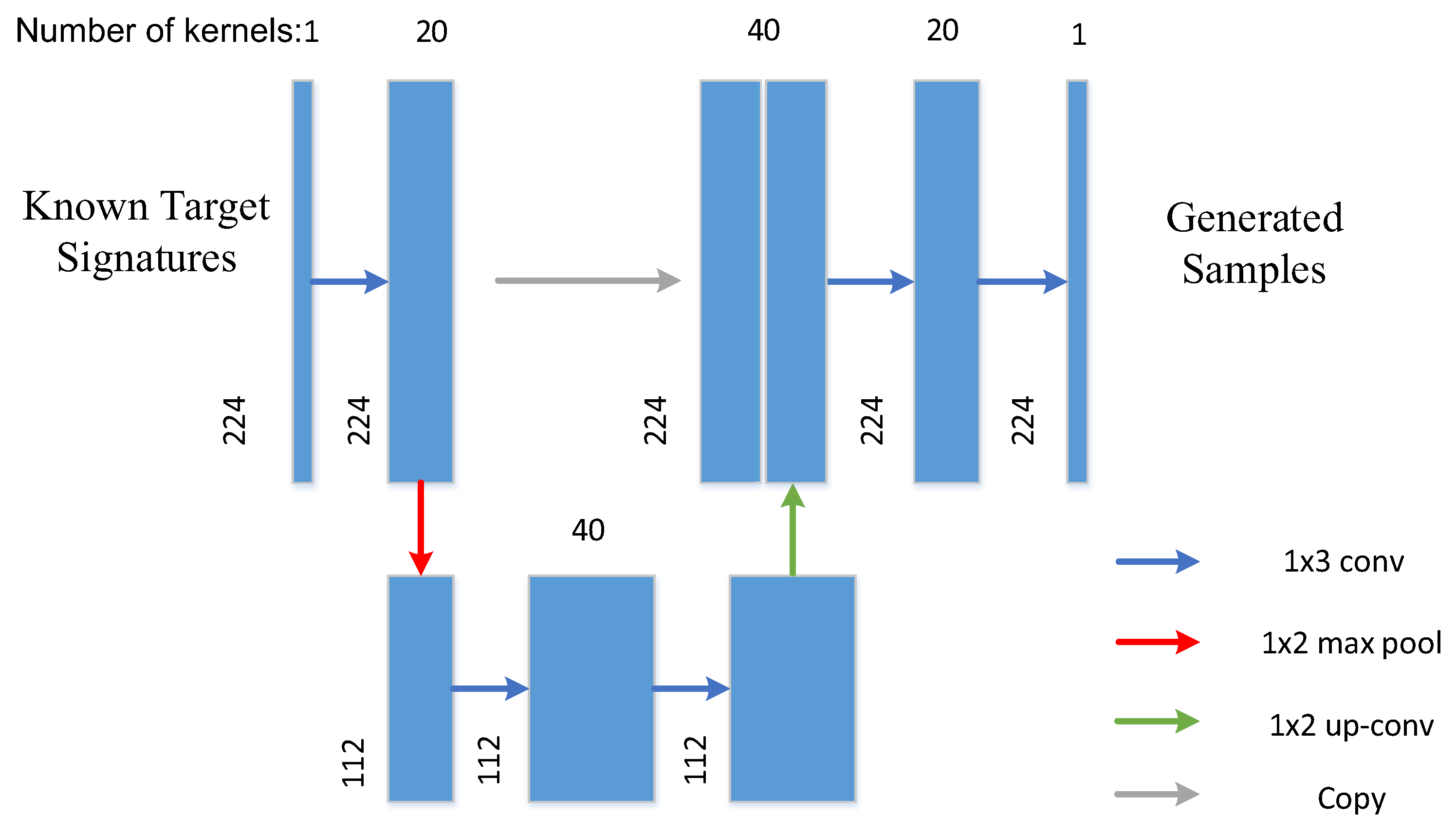

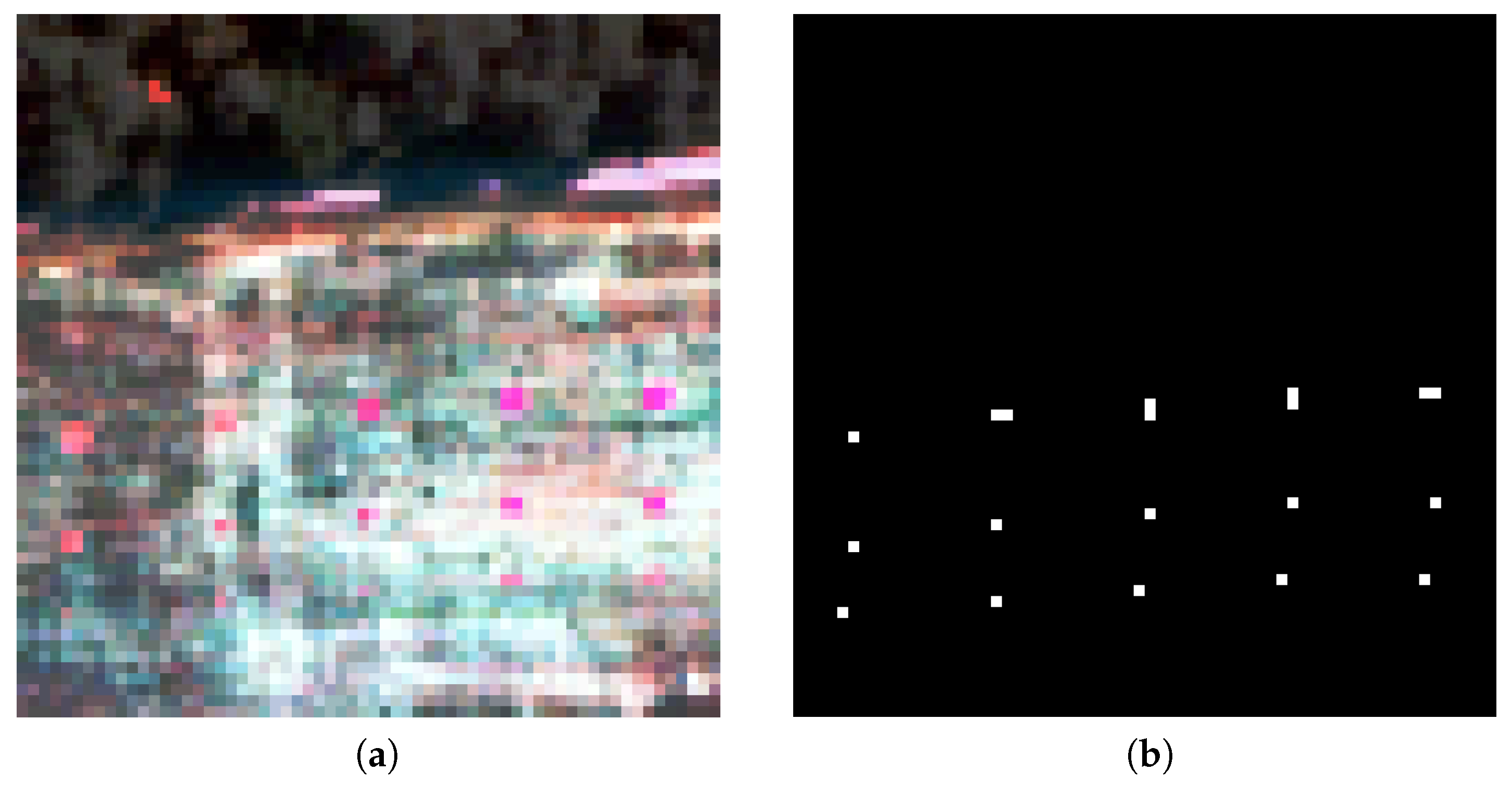

4.1. Hyperspectral Data

4.2. Parameter Settings for the Deep Network

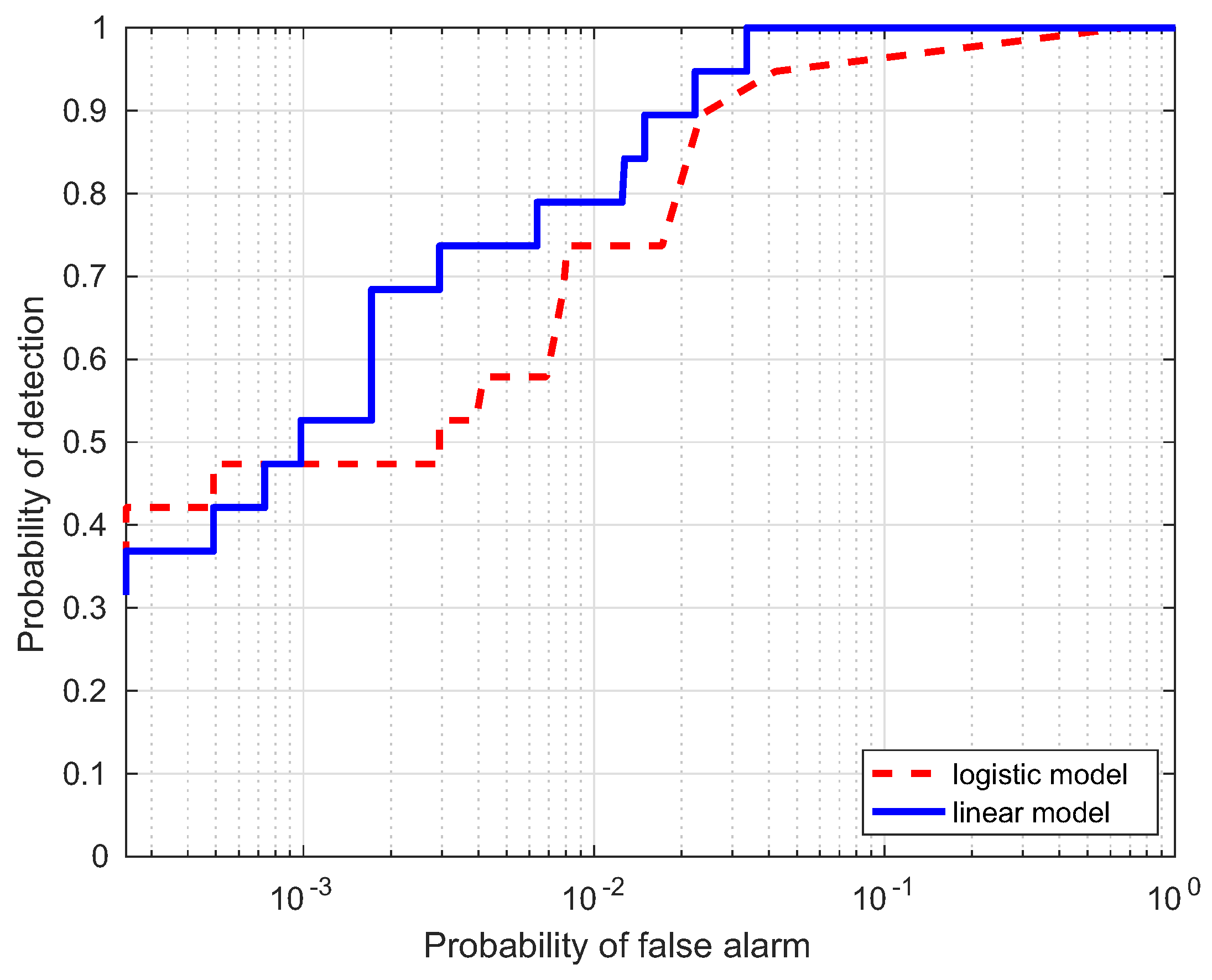

4.3. Comparison between Linear and Logistic Strategies

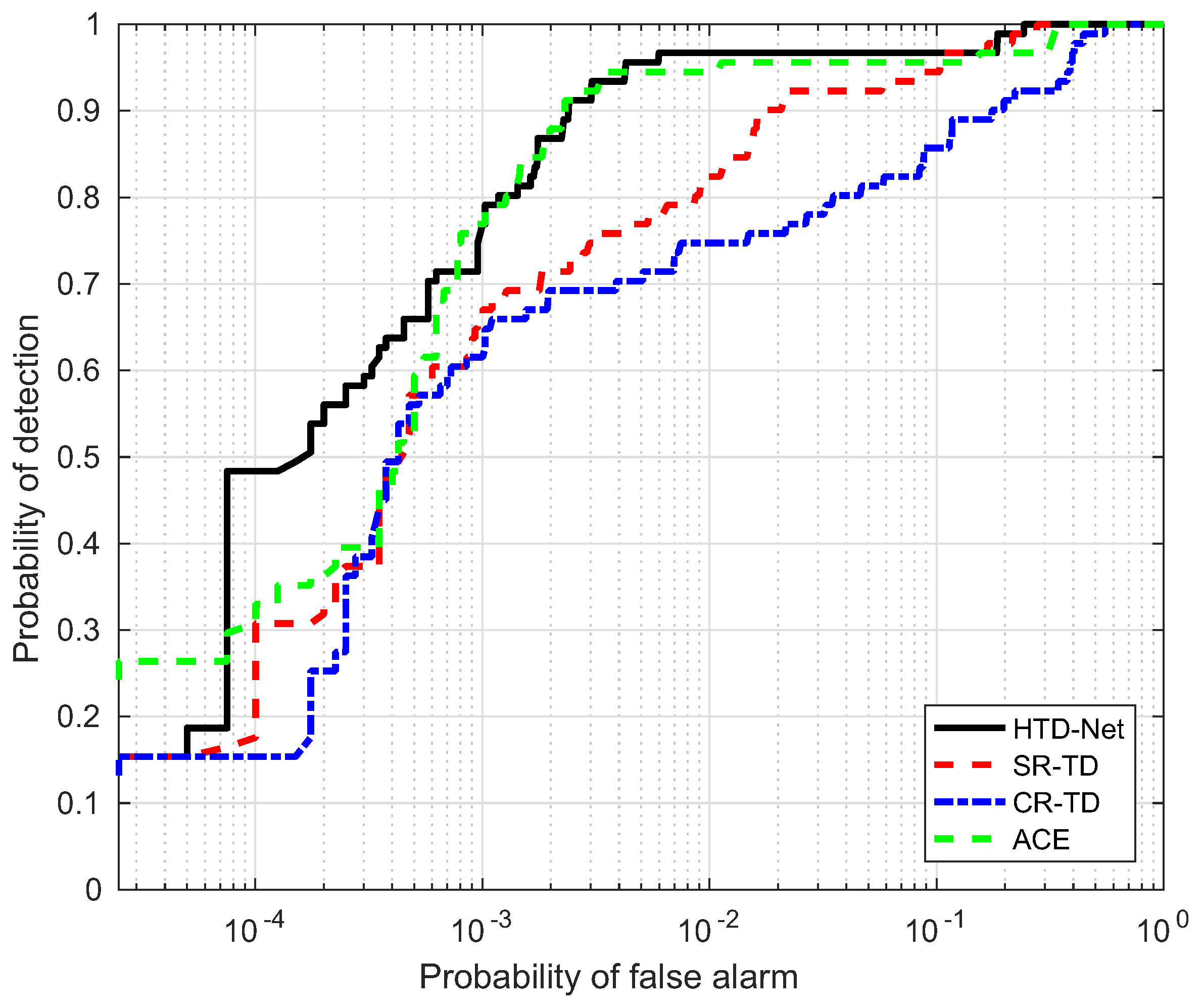

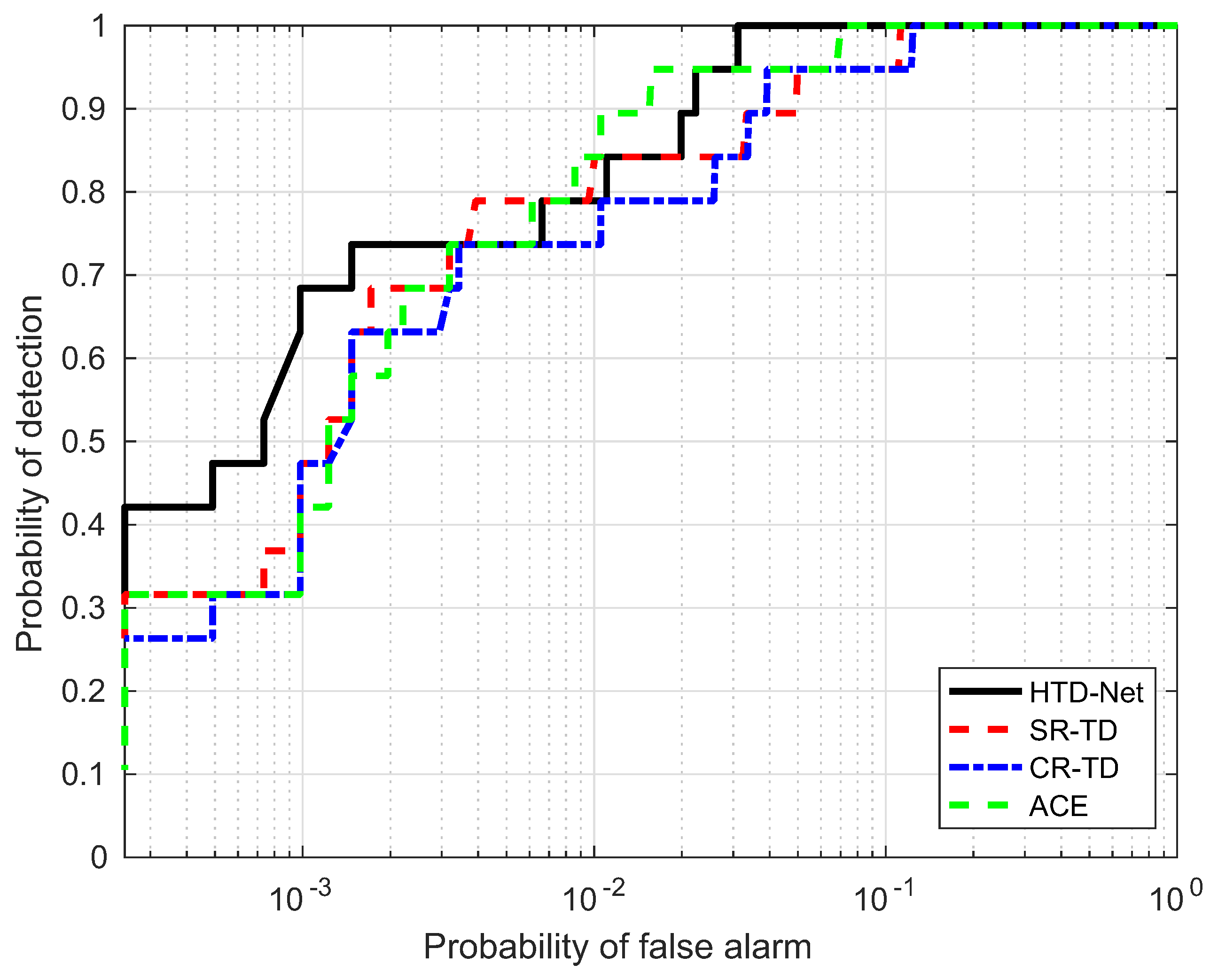

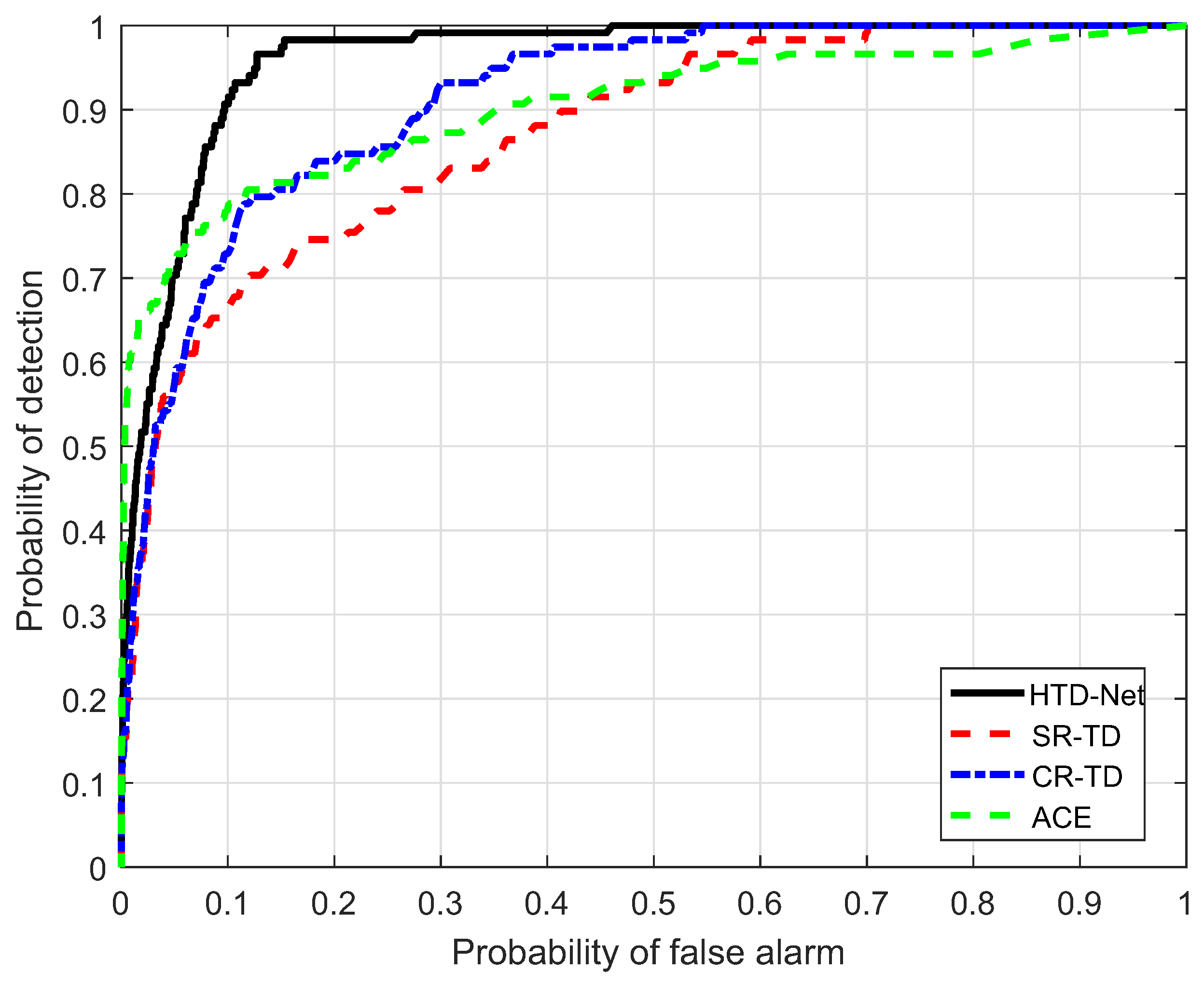

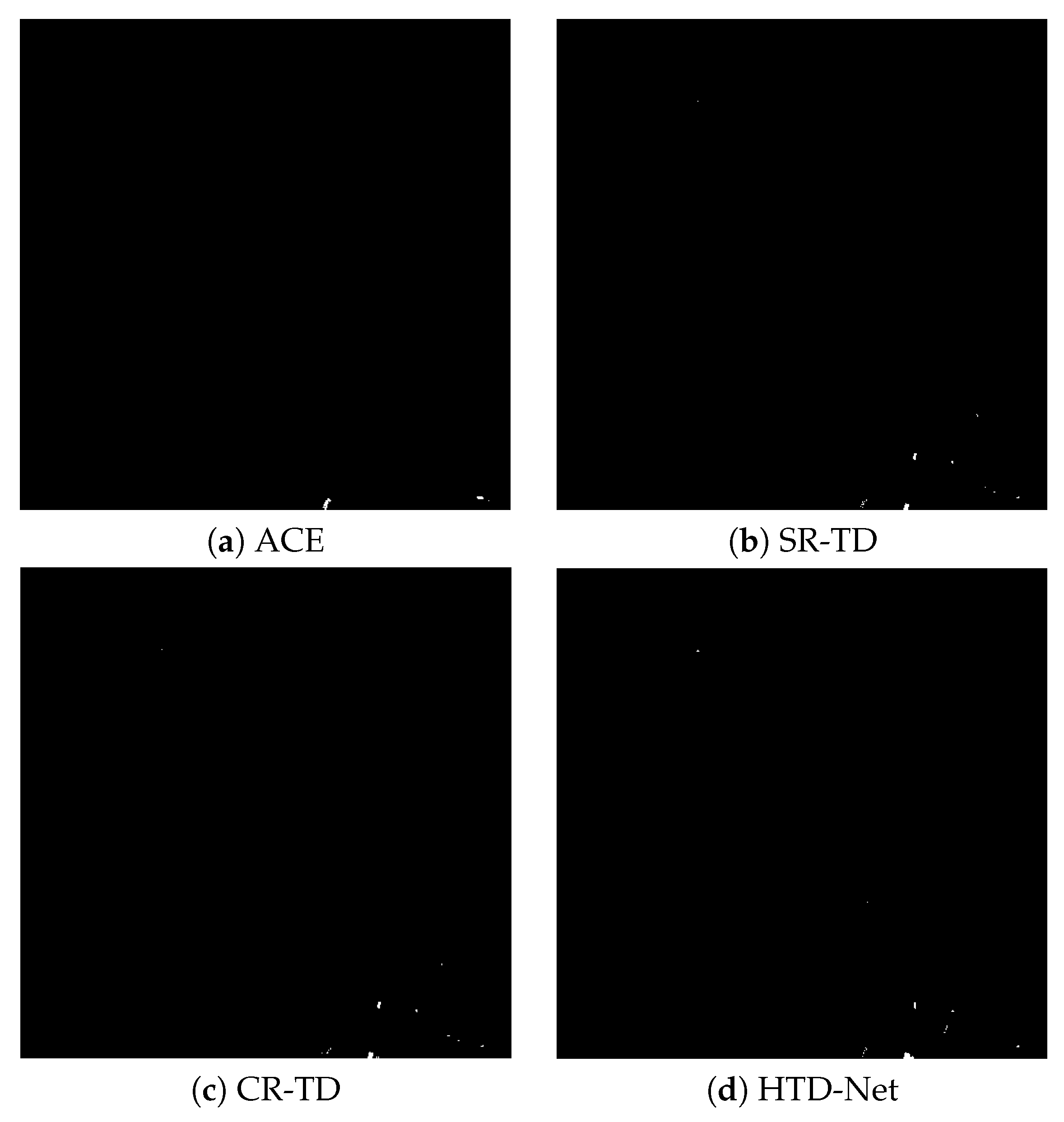

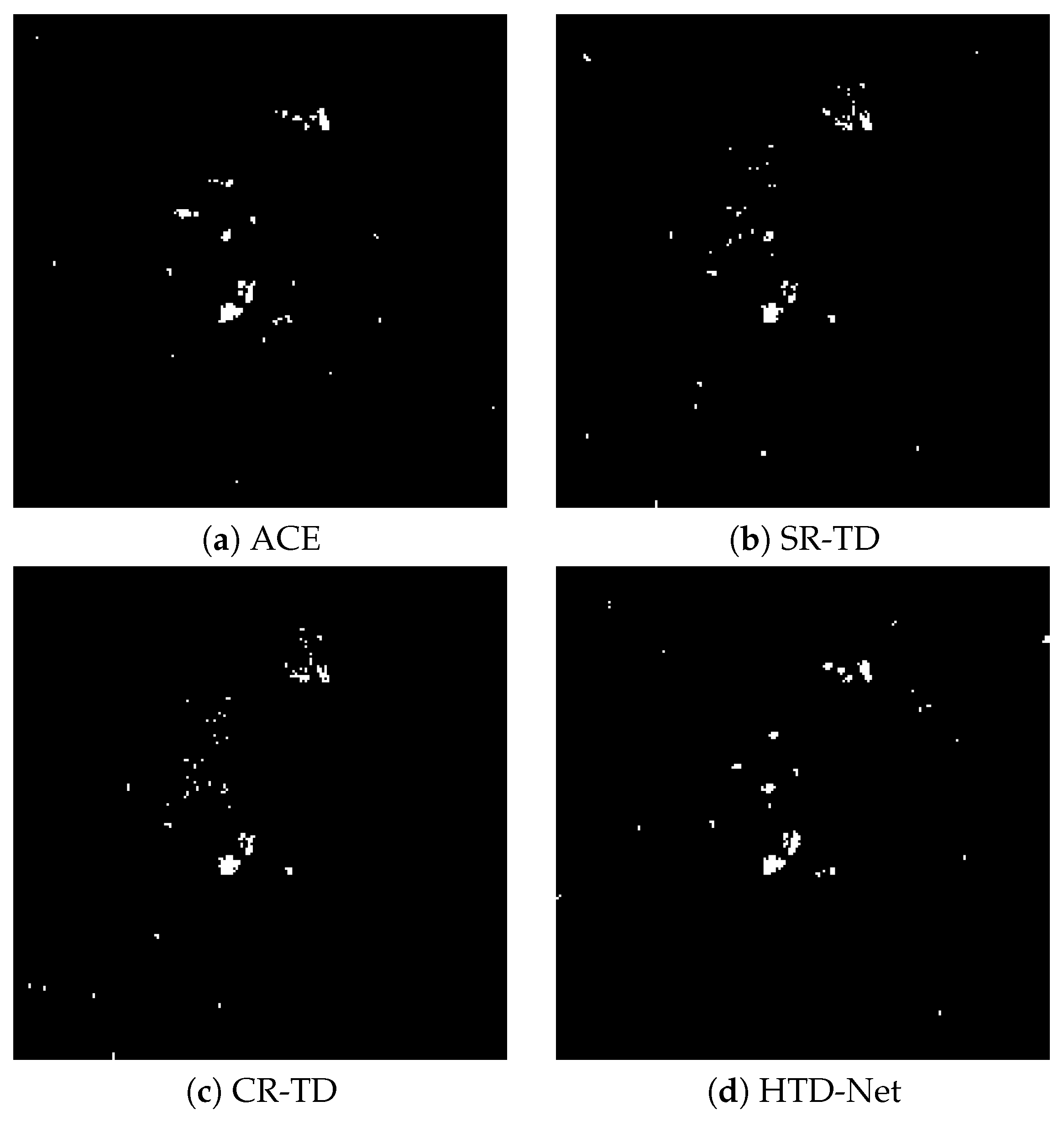

4.4. Performance Comparison with Traditional Methods

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest


| Data | Model | Target Samples | Background Samples | Combined Samples |
|---|---|---|---|---|
| Moffett Field | Logistic | 98.12 | 64.39 | 99.72 |
| | Linear | 99.8 | 72.24 | 99.91 |
| WTC | Logistic | 84.77 | 67.63 | 84.19 |
| | Linear | 85.59 | 91.57 | 99.31 |
| Hydice Forest | Logistic | 93.80 | 70.74 | 97.47 |
| | Linear | 98.50 | 86.72 | 99.49 |
| HyMap | Logistic | 72.64 | 70.60 | 80.47 |
| | Linear | 95.34 | 90.27 | 96.03 |

| Data | ACE | CR-TD | SR-TD | HTD-Net |
|---|---|---|---|---|
| Moffett Field | 97.98 | 98.25 | 98.04 | 99.91 |
| WTC | 98.69 | 95.17 | 98.65 | 99.31 |
| Hydice Forest | 99.32 | 98.68 | 98.82 | 99.49 |
| HyMap | 90.29 | 91.29 | 87.20 | 96.03 |
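The detector comparison reports accuracy on a percent scale, and the significance table labels these values AUC (%). For readers reproducing such numbers, the sketch below computes ROC AUC from a per-pixel detection map and a ground-truth mask. It uses the rank (Mann-Whitney) formulation, which equals the area under the empirical ROC curve. The function name `roc_auc` and its inputs are illustrative. This section does not state how the authors built their ROC curves.

```python
import numpy as np


def roc_auc(scores, labels) -> float:
    """Area under the ROC curve via the Mann-Whitney formulation.

    scores: detection statistic per pixel (higher = more target-like).
    labels: ground-truth mask, 1/True for target pixels, 0/False for background.
    """
    scores = np.asarray(scores, dtype=float).ravel()
    labels = np.asarray(labels, dtype=bool).ravel()
    pos, neg = scores[labels], scores[~labels]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need at least one target and one background pixel")
    neg_sorted = np.sort(neg)
    # For each target score: count background scores strictly lower / lower-or-equal.
    lower = np.searchsorted(neg_sorted, pos, side="left")
    lower_or_equal = np.searchsorted(neg_sorted, pos, side="right")
    ties = lower_or_equal - lower
    # A tie counts as half a "win" for the target pixel.
    return float((lower.sum() + 0.5 * ties.sum()) / (pos.size * neg.size))


# Toy example: multiply by 100 to match the percent scale used in the tables.
scores = np.array([0.95, 0.80, 0.40, 0.30, 0.10, 0.55])
truth = np.array([1, 1, 0, 0, 0, 1])
print(f"AUC = {100 * roc_auc(scores, truth):.2f}%")
```

Sorting the background scores once and using binary search keeps the cost at O((P + N) log N). A full image can have hundreds of thousands of background pixels, so this avoids materializing all P × N target/background comparisons.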

| HTD-Net vs. | AUC (%) Difference | Standard Error | Z Statistic | p Value | Significant? (95% Confidence) | Significant? (99% Confidence) |
|---|---|---|---|---|---|---|
| Moffett Field | | | | | | |
| ACE | 1.93 | 0.0026 | 7.415 | <0.0001 | Yes | Yes |
| CR-TD | 1.66 | 0.0022 | 7.533 | <0.0001 | Yes | Yes |
| SR-TD | 1.87 | 0.0025 | 7.471 | <0.0001 | Yes | Yes |
| WTC | | | | | | |
| ACE | 0.62 | 0.0016 | 3.873 | <0.0001 | Yes | Yes |
| CR-TD | 4.14 | 0.0049 | 8.345 | <0.0001 | Yes | Yes |
| SR-TD | 0.66 | 0.0017 | 3.908 | 0.0001 | Yes | Yes |
| Hydice Forest | | | | | | |
| ACE | 0.17 | 0.0023 | 0.745 | 0.745 | No | No |
| CR-TD | 0.81 | 0.0036 | 2.260 | 0.0238 | Yes | No |
| SR-TD | 0.67 | 0.0032 | 2.081 | 0.0375 | Yes | No |
| HyMap | | | | | | |
| ACE | 5.74 | 0.0089 | 6.410 | <0.0001 | Yes | Yes |
| CR-TD | 4.74 | 0.0082 | 5.760 | <0.0001 | Yes | Yes |
| SR-TD | 8.83 | 0.0110 | 8.023 | <0.0001 | Yes | Yes |
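The significance table follows the usual pattern: Z is the AUC difference divided by its standard error, with a two-sided standard-normal p-value. The reported rows are consistent with this. For example, Hydice Forest CR-TD with Z = 2.260 gives p ≈ 0.0238. The sketch below reproduces that arithmetic. This section does not say how the standard errors were estimated, so SE is taken as given. The function names are illustrative.

```python
import math


def z_statistic(auc_difference: float, standard_error: float) -> float:
    """Z statistic for the difference between two AUCs (both as fractions, not percent)."""
    if standard_error <= 0:
        raise ValueError("standard error must be positive")
    return auc_difference / standard_error


def two_sided_p(z: float) -> float:
    """Two-sided p-value under a standard normal null: P(|Z| >= |z|) = erfc(|z| / sqrt(2))."""
    return math.erfc(abs(z) / math.sqrt(2.0))


def is_significant(p_value: float, confidence: float) -> bool:
    """Reject the null of equal AUCs when p < 1 - confidence (e.g. 0.05 at 95%)."""
    return p_value < 1.0 - confidence


# Hydice Forest rows, using the Z statistics as reported in the table.
for detector, z in {"CR-TD": 2.260, "SR-TD": 2.081}.items():
    p = two_sided_p(z)
    print(f"{detector}: p = {p:.4f}, 95%: {is_significant(p, 0.95)}, 99%: {is_significant(p, 0.99)}")
```

Recomputing Z from the rounded table entries gives slightly different values. For Moffett Field vs. ACE, 0.0193 / 0.0026 ≈ 7.42 against the reported 7.415. For the Hydice Forest ACE row, the same formula gives p ≈ 0.46 for Z = 0.745. This leads to the same "not significant" decision at both confidence levels.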

| Data | ACE | CR-TD | SR-TD | HTD-Net |
|---|---|---|---|---|
| Moffett Field | 4.68 | 10.38 | 15.53 | 83.33 |
| WTC | 0.71 | 1.83 | 2.49 | 58.35 |
| Hydice Forest | 0.05 | 0.17 | 0.21 | 2.23 |
| HyMap | 0.31 | 1.02 | 1.29 | 28.17 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, G.; Zhao, S.; Li, W.; Du, Q.; Ran, Q.; Tao, R. HTD-Net: A Deep Convolutional Neural Network for Target Detection in Hyperspectral Imagery. Remote Sens. 2020, 12, 1489. https://doi.org/10.3390/rs12091489