The Influence of Camera Calibration on Nearshore Bathymetry Estimation from UAV Videos

Abstract

1. Introduction

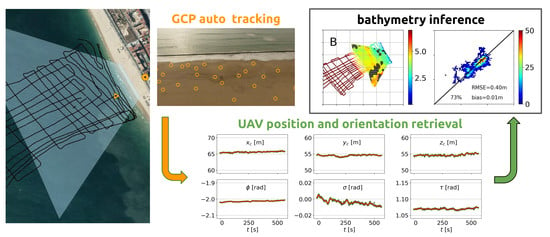

2. Methodology

2.1. Study Site: Victoria Beach

2.2. Data Collection

2.3. Video Calibration and Stabilization

2.3.1. Camera Model

2.3.2. Intrinsic Calibration

2.3.3. Extrinsic Calibration

2.4. Bathymetry Estimation

3. Results

3.1. Video Calibration and Stabilization

3.1.1. Intrinsic Calibration

3.1.2. Extrinsic Calibration

3.2. Bathymetry Estimation

3.3. Intrinsic Calibration: Laboratory Calibrations

3.4. Camera Governing Equations

- square pixels;

- square pixels + no decentering (i.e., principal point at the center of the image); and,

- square pixels + no decentering + parabolic radial distortion only.
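The three cumulative simplifications listed above can be illustrated with a minimal sketch. It assumes a common lens model with two radial coefficients (`k1`, `k2`), two tangential coefficients (`p1`, `p2`), per-axis pixel sizes, and a principal point; these names, the `Intrinsics` class, and the helper functions are hypothetical and not taken from the text. Averaging the two pixel sizes and using `(n - 1) / 2` as the image center are illustrative choices only; in an actual calibration the remaining free parameters would be re-estimated under each constraint.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Intrinsics:
    """Hypothetical intrinsic parameter set (names are assumptions)."""
    k1: float     # parabolic (lowest-order) radial distortion
    k2: float     # higher-order radial distortion
    p1: float     # tangential distortion
    p2: float     # tangential distortion
    s_col: float  # pixel size along columns
    s_row: float  # pixel size along rows
    o_col: float  # principal point, column coordinate
    o_row: float  # principal point, row coordinate


def square_pixels(cam: Intrinsics) -> Intrinsics:
    """First simplification: same pixel size in both directions."""
    s = 0.5 * (cam.s_col + cam.s_row)  # illustrative choice: use the mean
    return replace(cam, s_col=s, s_row=s)


def no_decentering(cam: Intrinsics, n_cols: int, n_rows: int) -> Intrinsics:
    """Second simplification: the first one plus a centered principal point."""
    cam = square_pixels(cam)
    return replace(cam, o_col=(n_cols - 1) / 2, o_row=(n_rows - 1) / 2)


def parabolic_only(cam: Intrinsics, n_cols: int, n_rows: int) -> Intrinsics:
    """Third simplification: the previous two plus only the k1 term kept."""
    cam = no_decentering(cam, n_cols, n_rows)
    return replace(cam, k2=0.0, p1=0.0, p2=0.0)


# Made-up values for a 3840 x 2160 frame, for illustration only.
full = Intrinsics(k1=-0.05, k2=0.01, p1=1e-4, p2=-2e-4,
                  s_col=1.0e-3, s_row=1.1e-3, o_col=1930.0, o_row=1075.0)
simple = parabolic_only(full, n_cols=3840, n_rows=2160)
```

Under these assumptions, each step removes free parameters from the intrinsic calibration: eight in the full model, seven with square pixels, five with a centered principal point, and two when only the parabolic radial term is retained.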

3.5. Extrinsic Calibration: Homographies and GCP Tracking

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| GCP | Ground Control Point |

| NAO | North Atlantic Oscillations |

| RMSE | Root Mean Square Error |

| RTK-GPS | Real-Time Kinematic Global Positioning System |

| UAV | Unmanned Aerial Vehicle |



| | | 0 s | | 5 s | | 10 s | |
|---|---|---|---|---|---|---|---|
| | | RMSE | Bias | RMSE | Bias | RMSE | Bias |
| video 1 | 1 | — | — | | | | |
| | 3 | — | — | | | | |
| | 5 | — | — | | | | |
| | 10 | — | — | | | | |
| video 2 | 1 | | | | | | |
| | 3 | | | | | | |
| | 5 | | | | | | |
| | 10 | | | | | | |

| | Simplification | RMSE [m] | Bias [m] |
|---|---|---|---|
| video 1 | F | | |
| video 2 | F | | |

| | | With Refinement | | Without Refinement | |
|---|---|---|---|---|---|
| | Method | RMSE [m] | Bias [m] | RMSE [m] | Bias [m] |
| video 1 | ORB | | | | |
| | ECC-A | | | | |
| | ECC-B | | | | |
| video 2 | ORB | | | | |
| | ECC-A | | | | |
| | ECC-B | | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Simarro, G.; Calvete, D.; Plomaritis, T.A.; Moreno-Noguer, F.; Giannoukakou-Leontsini, I.; Montes, J.; Durán, R. The Influence of Camera Calibration on Nearshore Bathymetry Estimation from UAV Videos. Remote Sens. 2021, 13, 150. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13010150
