GNSS Localization in Constraint Environment by Image Fusing Techniques

Abstract

1. Introduction

- loss of service;

- loss of accuracy;

- inability to estimate any position boundary (e.g., integrity information, also called Quality of Service, QoS, in mass market terminology).

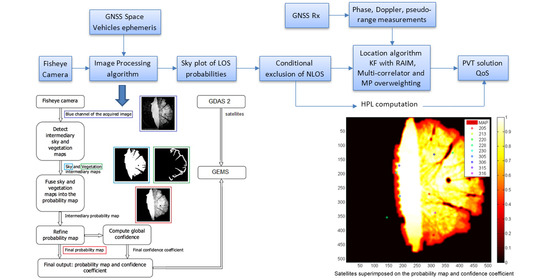

2. Materials and Methods

- Alert Limit (AL): The alert limit for a given parameter measurement is the error tolerance not to be exceeded without issuing an alert. The horizontal alert limit is the maximum allowable horizontal positioning error beyond which the system should be declared unavailable for the intended application.

- Time to Alert (TA): The maximum allowable time elapsed from the onset of the navigation system being out of tolerance until the equipment annunciates the alert.

- Integrity Risk (IR): The probability that, at any moment, the position error exceeds the Alert Limit.

- Protection Level (PL): Statistical error bound computed so as to guarantee that the probability of the absolute position error exceeding this bound is smaller than or equal to the target integrity risk. The horizontal protection level provides a bound on the horizontal positioning error with a probability derived from the integrity requirement.

- The GNSS pseudoranges and pseudorange rates are computed with the software receiver GNSS Environment Monitoring Station (GEMS) [7] using the Multi-Correlator (MC) tracking loop with the multipath detection activated.

- These measurements are fed into the Extended Kalman Filter (EKF) prediction step, and the associated Receiver Autonomous Integrity Monitoring (RAIM) can thus exclude faulty satellites.

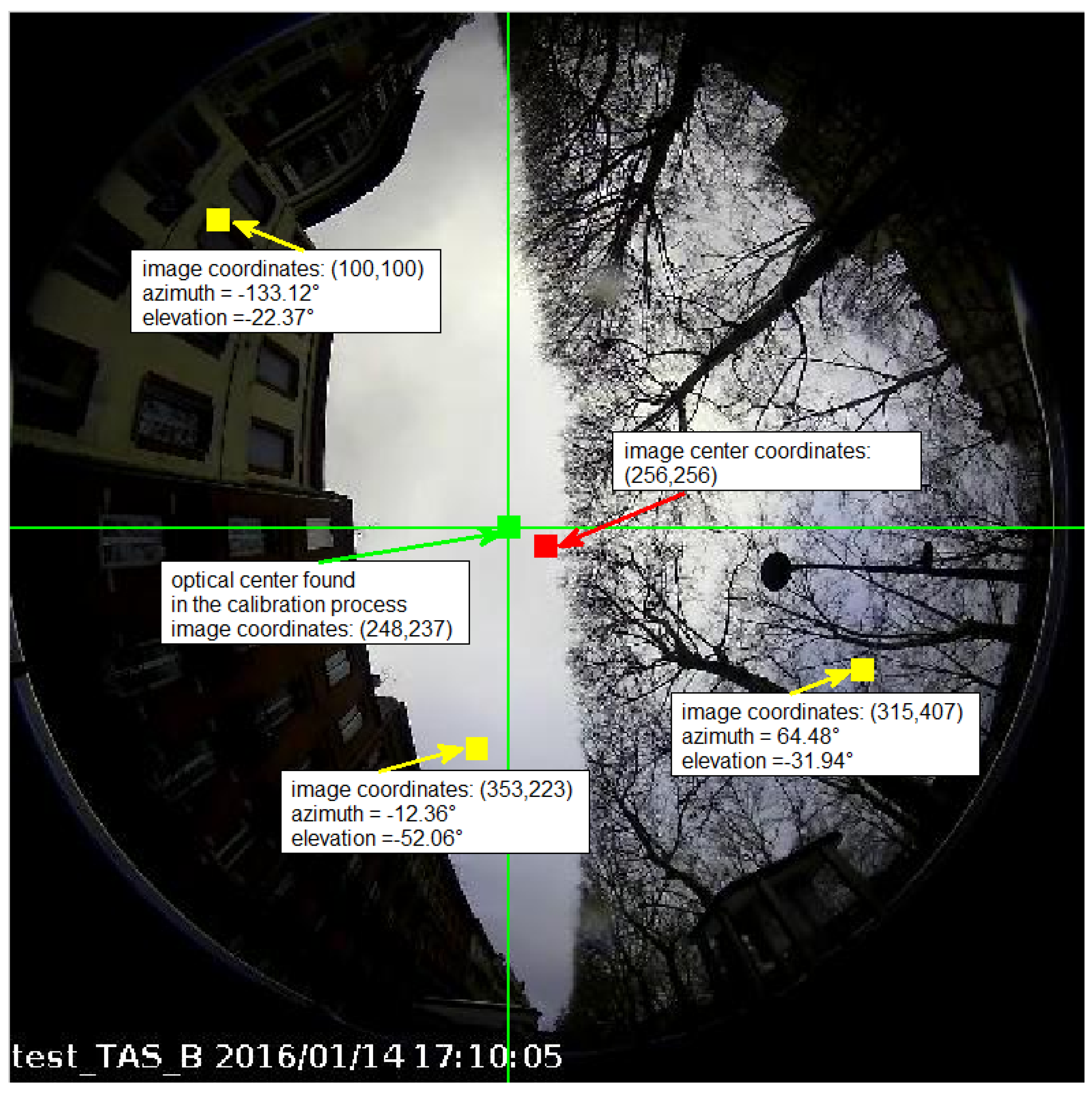

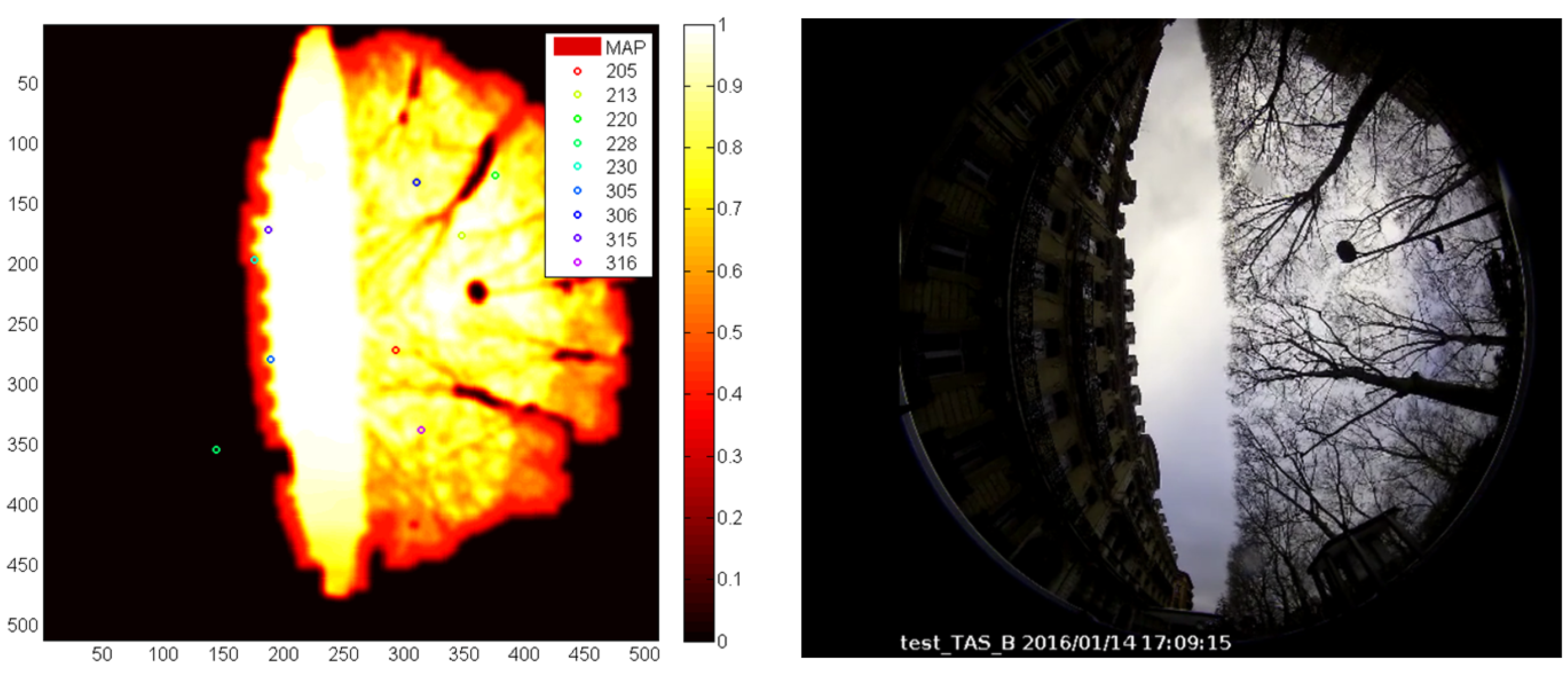

- For each remaining satellite, the elevation and the azimuth are computed and projected onto the camera reference frame using the vehicle heading. In an operational mode, the heading would be computed from the GNSS velocity. In our experiments, it is computed from the reference trajectory, so the projection of the satellites onto the camera reference frame is free of heading error and the best possible contribution of the camera aiding can be assessed.

- A three-level discretization of the LoS probability map is considered:

  - LoS level: When the probability of being in LoS (pLoS) is between 0.75 and 1, the received signal is considered LoS, and the measurements are kept unchanged.

  - Doubt level: When the pLoS is between 0.25 and 0.75 (most likely related to building edges or vegetation), there is doubt about the quality of the received signal, so an overweighting is applied to the measurements as a function of el, f and L, where el is the elevation angle, f is a factor set to 2 (corresponding to the number of extra street crossings of the multipath with respect to the LoS) and L is the average width of the street, which is set to 10 m.

  - NLoS level: Finally, when the pLoS is between 0 and 0.25, the received signal is considered NLoS and the measurements are excluded if there are enough LoS space vehicles; otherwise, they are kept and overweighted as above.

- The remaining GNSS data go through the Kalman filter correction step, which provides the final PVT solution.

- The Horizontal Protection Level (HPL) is computed from the output of the Kalman filter.

- The Horizontal Positioning Error (HPE) and Miss-Integrity (MI) are computed from the output of the Kalman filter and the reference trajectory.
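The projection step in the list above can be illustrated with a minimal Python sketch. It assumes a zenith-pointing camera with an ideal equidistant fisheye model (image radius proportional to the zenith angle); the lens model is not stated here, so this is a simplification that a calibrated omnidirectional model would replace in practice. The function names, the image-axis convention (rows decrease toward the vehicle's front, columns increase toward its right) and the nearest-pixel lookup are all assumptions made for illustration.

```python
import math


def project_satellite(el_deg, az_deg, heading_deg, cx, cy, radius_px):
    """Project a satellite direction onto a zenith-pointing fisheye image.

    Assumed (hypothetical) conventions: azimuth and heading are measured
    clockwise from north; (cx, cy) is the zenith pixel; radius_px is the
    image radius of the horizon (elevation 0).
    """
    if not 0.0 <= el_deg <= 90.0:
        raise ValueError("elevation must lie in [0, 90] degrees")
    # Bearing of the satellite relative to the vehicle's forward axis.
    rel = math.radians((az_deg - heading_deg) % 360.0)
    # Equidistant model (assumption): radius grows linearly with zenith angle.
    r = radius_px * (90.0 - el_deg) / 90.0
    col = cx + r * math.sin(rel)
    row = cy - r * math.cos(rel)
    return col, row


def lookup_p_los(p_map, col, row):
    """Return the LoS probability at the nearest pixel, or None if outside."""
    i, j = int(round(row)), int(round(col))
    if 0 <= i < len(p_map) and 0 <= j < len(p_map[0]):
        return p_map[i][j]
    return None
```

With such a helper, each tracked satellite would be mapped to one pixel of the LoS probability map, and the value read there would feed the three-level decision.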

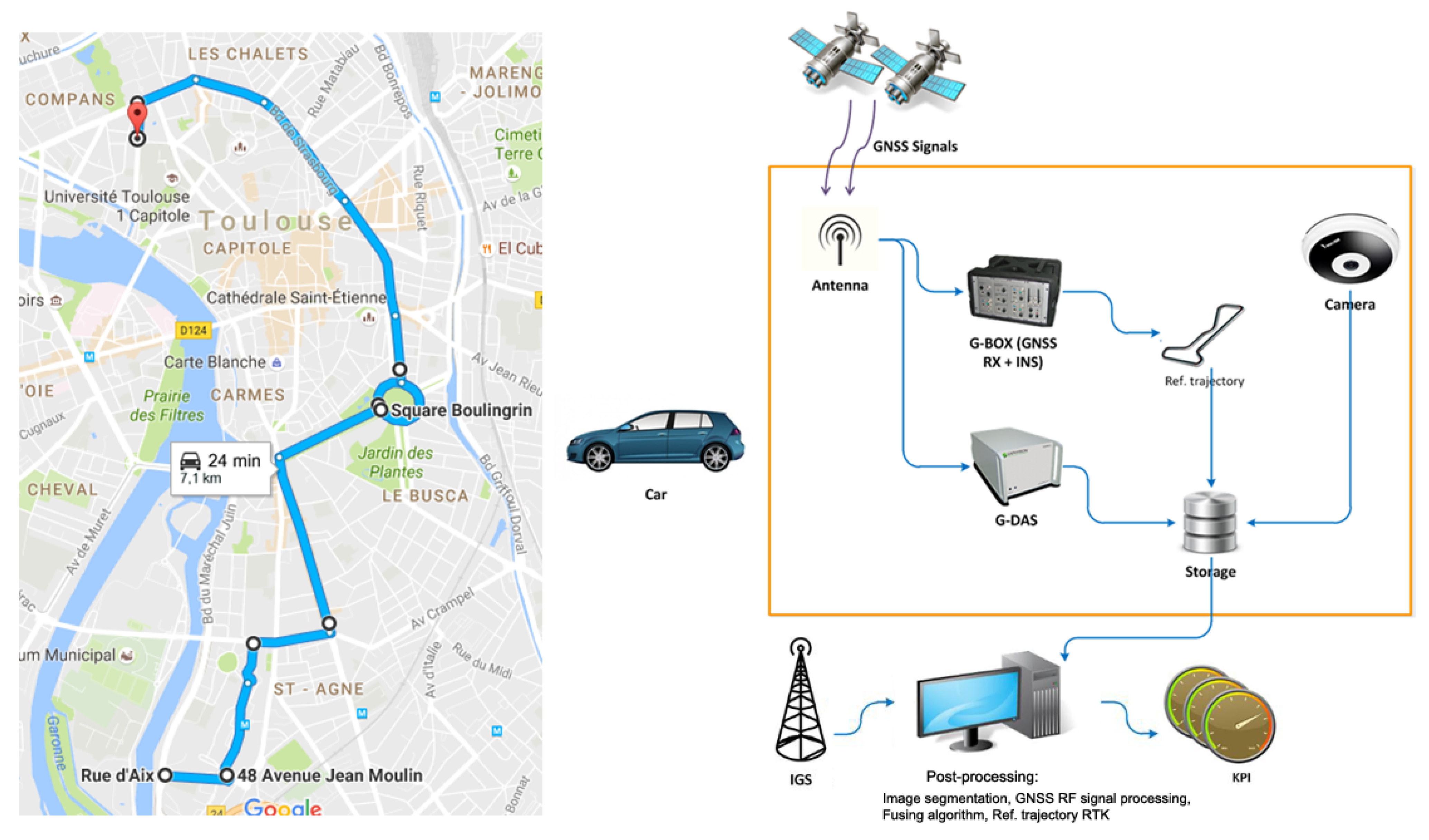
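The three-level decision described in the processing steps can be sketched as follows. The thresholds 0.25 and 0.75 come from the text; how the boundary values themselves are assigned, the `min_los` parameter (the text only says "enough LoS space vehicles") and all names are assumptions. The overweighting formula itself is not reproduced here, so the sketch only returns which action applies to each satellite.

```python
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    LOS = "LoS"
    DOUBT = "doubt"
    NLOS = "NLoS"


def classify(p_los: float) -> Level:
    """Map a LoS probability to one of the three levels."""
    if not 0.0 <= p_los <= 1.0:
        raise ValueError("p_los must lie in [0, 1]")
    # Assumption: boundary values 0.75 and 0.25 go to the upper level.
    if p_los >= 0.75:
        return Level.LOS
    if p_los >= 0.25:
        return Level.DOUBT
    return Level.NLOS


@dataclass
class Decision:
    sat_id: str
    level: Level
    action: str  # "keep", "overweight" or "exclude"


def decide(p_los_by_sat, min_los=4):
    """Choose an action per satellite; min_los is a hypothetical threshold."""
    levels = {sat: classify(p) for sat, p in p_los_by_sat.items()}
    n_los = sum(1 for lv in levels.values() if lv is Level.LOS)
    enough_los = n_los >= min_los
    decisions = []
    for sat, lv in levels.items():
        if lv is Level.LOS:
            action = "keep"
        elif lv is Level.DOUBT:
            action = "overweight"
        else:
            # NLoS: exclude only when enough LoS satellites remain.
            action = "exclude" if enough_los else "overweight"
        decisions.append(Decision(sat, lv, action))
    return decisions
```

For example, `decide({"G01": 0.9, "G02": 0.1})` keeps G01 and overweights G02 rather than excluding it, because only one LoS satellite is available.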

2.1. Data Acquisition

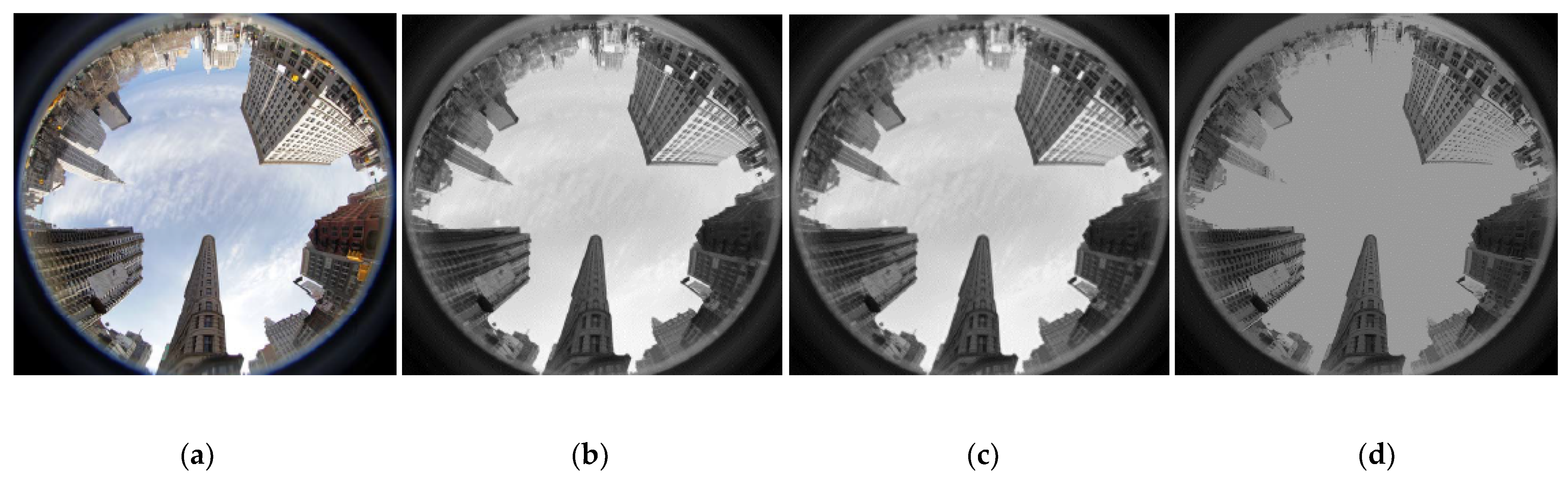

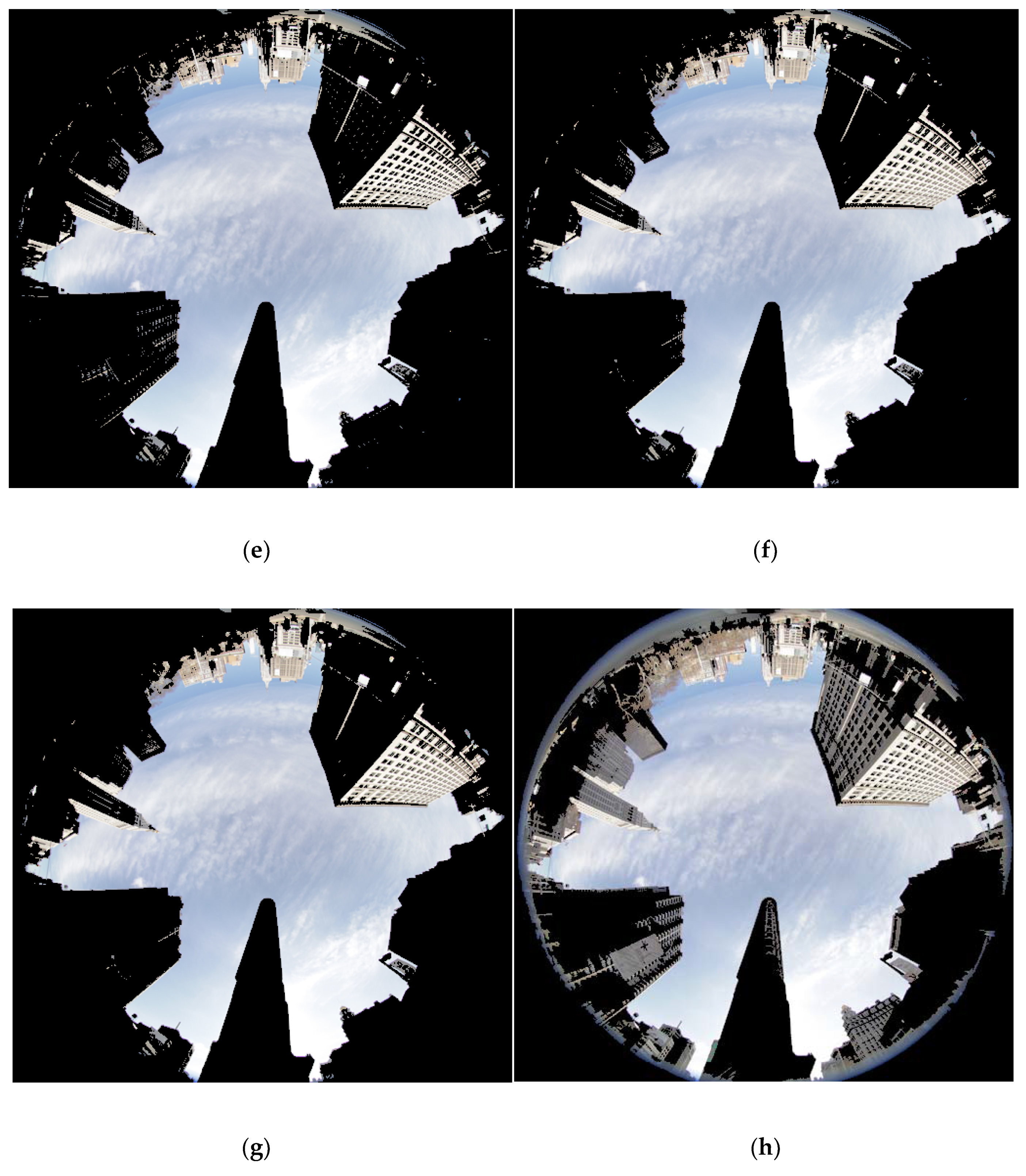

2.2. Image Processing Module

2.2.1. Image Segmentation

2.2.2. Image Processing Module Block Diagram

2.3. GNSS Signal Processing

3. Results and Discussion

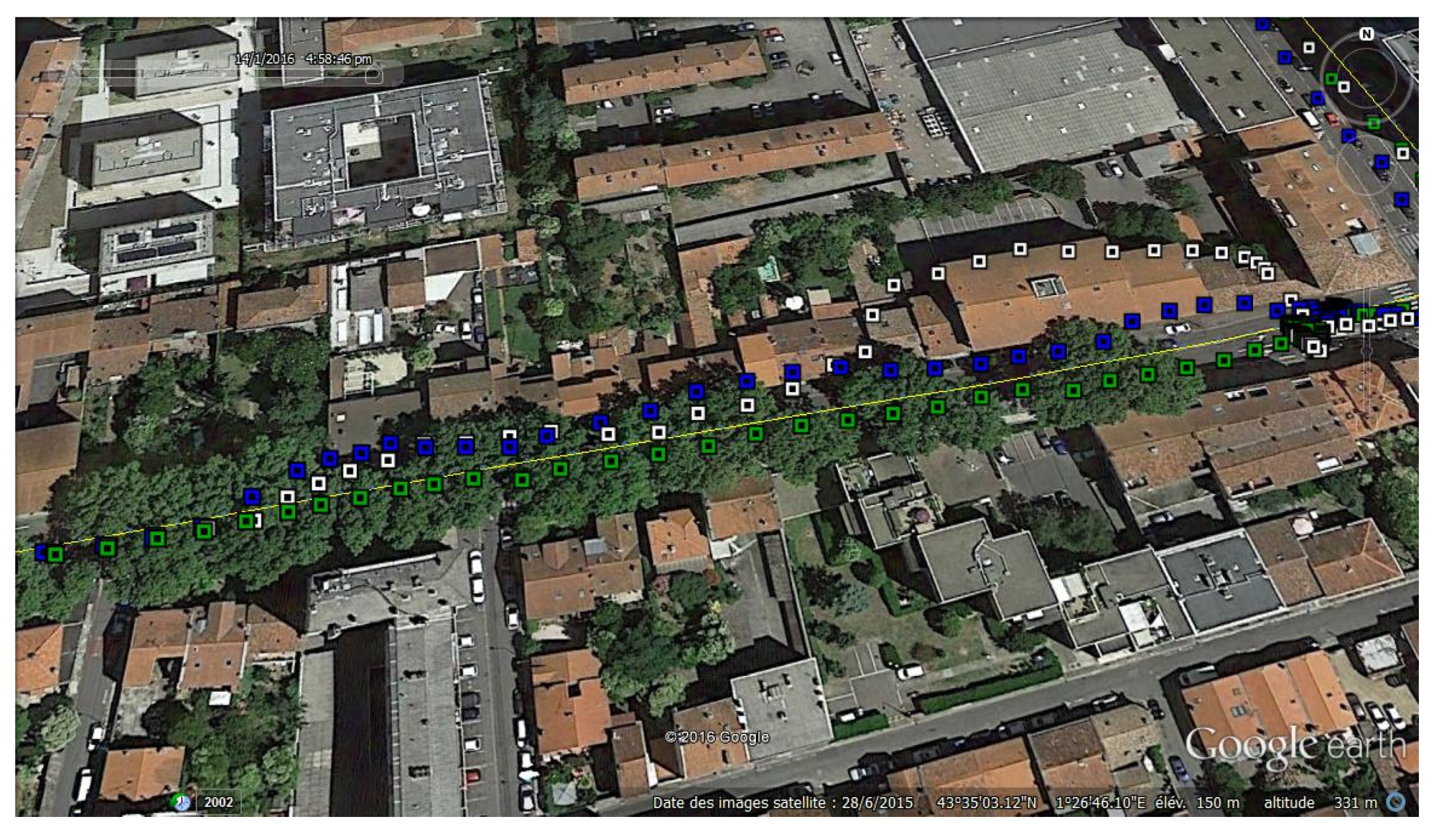

3.1. Experimental Campaign

- Fisheye camera;

- GNSS Data Acquisition System (GDAS-2) used to capture and record GNSS raw signals;

- The live-sky testing platform, including the car carrying the equipment, was provided by GNSS Usage Innovation and Development of Excellence (GUIDE), a test laboratory based in Toulouse. It embeds a GBOX that provides the reference trajectory based on a high-grade INS and GNSS differential corrections from the International GNSS Service (IGS).

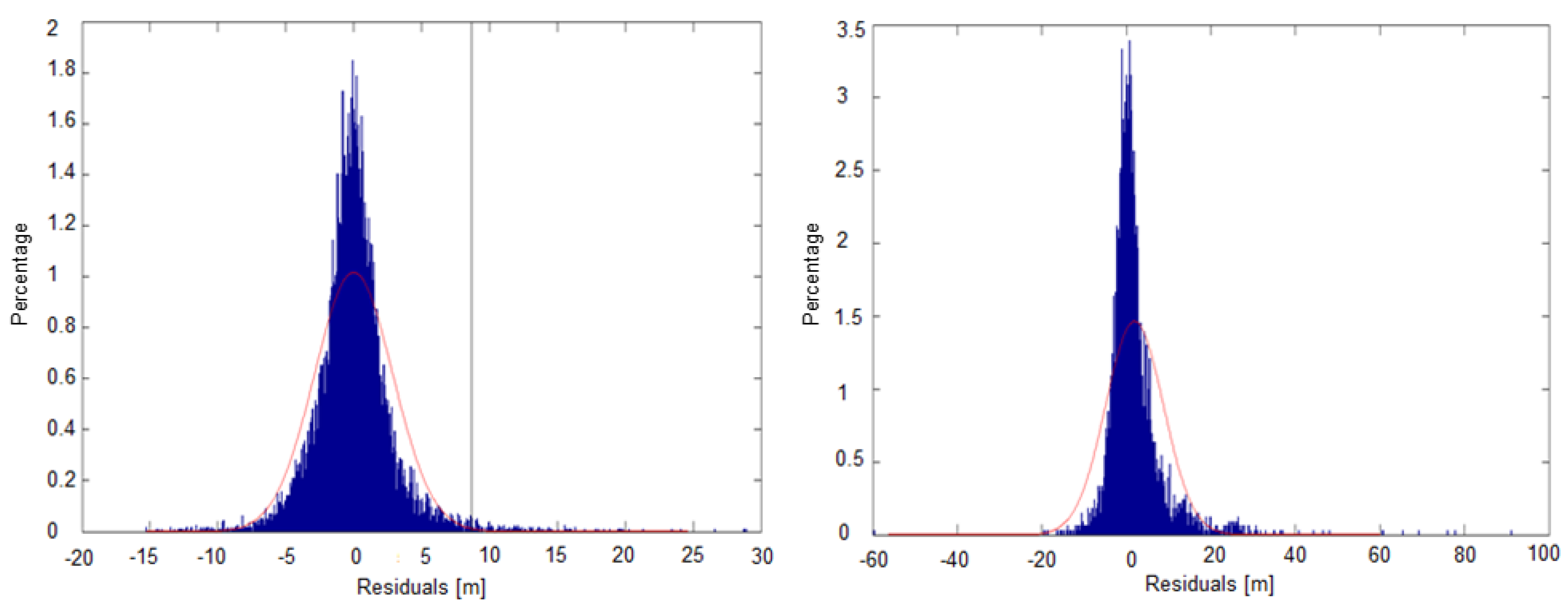

3.2. Global Results

3.3. Discussion

3.3.1. Focus on an Area Where the Camera Aiding Algorithm Improves the PVT

3.3.2. Statistic on Pseudorange Residuals

- Propose a criterion or a set of criteria in order to decide which data to exclude or to overweight in case the camera flags too many data as NLoS;

- Detect small and close “obstacles” (such as lamp poles) in order not to mark the related area as NLoS;

- Improve classification of vegetation;

- Add a feedback loop and additional criteria for adaptively tuning the image processing parameters, in particular the thresholds applied to the probability map.

4. Conclusions

- Effect of small obstacles, such as lamp poles, that trigger an NLoS flag from the camera while not significantly affecting the quality of the data;

- Difficult classification of vegetation, which can be wrongly treated as a source of NLoS, leading to the exclusion of too many measurements.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. GNSS Basics

- to detect the GNSS signals which are present;

- to make a first estimate of their frequency and delay characteristics.

- to remain locked onto the signals received from the acquisition sub-system;

- to decode the navigation message;

- to estimate the distance traveled by each signal to arrive at the receiver.

- to calculate the position of the receiver;

- to determine the bias of the receiver’s internal clock versus the reference system.
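The last two tasks, computing the receiver position and its clock bias, can be illustrated with a textbook iterative least-squares solution. This is a generic sketch, not the Kalman filter used in the processing chain: it assumes ECEF coordinates in meters, equally weighted pseudoranges already corrected for satellite clock and atmospheric effects, and a clock bias expressed in meters (bias multiplied by the speed of light). All names are hypothetical.

```python
import math


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular satellite geometry")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def solve_pvt(sat_pos, pseudoranges, iterations=20):
    """Return (x, y, z, clock_bias_m) from at least four pseudoranges."""
    if len(sat_pos) < 4 or len(sat_pos) != len(pseudoranges):
        raise ValueError("need at least four satellite/pseudorange pairs")
    est = [0.0, 0.0, 0.0, 0.0]  # start at the Earth's center, zero bias
    for _ in range(iterations):
        rows, resid = [], []
        for (sx, sy, sz), rho in zip(sat_pos, pseudoranges):
            dx, dy, dz = est[0] - sx, est[1] - sy, est[2] - sz
            rng = math.sqrt(dx * dx + dy * dy + dz * dz)
            # Jacobian row of the model rho = range + clock bias.
            rows.append([dx / rng, dy / rng, dz / rng, 1.0])
            resid.append(rho - (rng + est[3]))
        # Normal equations of the linearized problem (Gauss-Newton step).
        hth = [[sum(r[i] * r[j] for r in rows) for j in range(4)]
               for i in range(4)]
        htr = [sum(r[i] * e for r, e in zip(rows, resid)) for i in range(4)]
        delta = solve_linear(hth, htr)
        est = [e + d for e, d in zip(est, delta)]
        if max(abs(d) for d in delta) < 1e-4:
            break
    return tuple(est)
```

Four unknowns (three coordinates and the clock bias) are estimated, which is why at least four satellites are required.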

References

1. ETSI. Technical Report ETSI TR 101 593. ETSI Satellite Earth Stations and Systems (SES); Global Navigation Satellite System (GNSS) Based Location Systems, Minimum Performance and Features; V1.1.1; ETSI: Sophia Antipolis, France, 2012.

2. Zhang, H.; Li, W.; Qian, C.; Li, B. A Real Time Localization System for Vehicles Using Terrain-Based Time Series Subsequence Matching. Remote Sens. 2020, 12, 2607.

3. Li, N.; Guan, L.; Gao, Y.; Du, S.; Wu, M.; Guang, X.; Cong, X. Indoor and Outdoor Low-Cost Seamless Integrated Navigation System Based on the Integration of INS/GNSS/LIDAR System. Remote Sens. 2020, 12, 3271.

4. Bovik, A. (Ed.) Handbook of Image & Video Processing; Academic Press: San Diego, CA, USA, 2000.

5. Scaramuzza, D.; Martinelli, A.; Siegwart, R. A flexible technique for accurate omnidirectional camera calibration and structure from motion. In Proceedings of the Fourth IEEE International Conference on Computer Vision Systems (ICVS’06), New York, NY, USA, 4–7 January 2006; p. 45.

6. Scaramuzza, D.; Martinelli, A.; Siegwart, R. A Toolbox for easily calibrating omnidirectional cameras. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 5695–5701.

7. Kubrak, D.; Carrie, G.; Serant, D. GNSS Environment Monitoring Station (GEMS): A real time software multi constellation, multi frequency signal monitoring system. In Proceedings of the International Symposium on GNSS, ISGNSS, Jeju, Korea, 21–24 October 2014.

8. Béréziat, D.; Herlin, I. Solving ill-posed Image Processing problems using Data Assimilation. Numer. Algorithms 2010, 56, 219–252.

9. Zafarifar, B.; de With, P.H.N. Adaptive modeling of sky for video processing and coding applications. In Proceedings of the 27th Symposium on Information Theory in the Benelux, Noordwijk, The Netherlands, 8–9 June 2006; pp. 31–38.

10. Schmitt, F.; Priese, L. Sky detection in CSC-segmented color images. In Proceedings of the 13th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications; SCITEPRESS Science and Technology Publications: Setúbal, Portugal, 2009; pp. 101–106.

11. Rehrmann, V.; Priese, L. Fast and robust Segmentation of natural color scenes. In Proceedings of the Transactions on Petri Nets and Other Models of Concurrency XV; Springer: Berlin/Heidelberg, Germany, 1997; pp. 598–606.

12. Attia, D.; Meurie, C.; Ruichek, Y.; Marais, J. Counting of satellites with direct GNSS signals using Fisheye camera: A comparison of clustering algorithms. In Proceedings of the 2011 14th International IEEE Conference on Intelligent Transportation Systems, Washington, DC, USA, 5–7 October 2011; pp. 7–12.

13. Attia, D. Segmentation D’images par Combinaison Adaptative Couleur/Texture et Classification de Pixels. Ph.D. Thesis, Universite de Technologie de Belfort-Montbeliard, Belfort, France, 2013. (In French).

14. Nafornita, C.; David, C.; Isar, A. Preliminary results on sky segmentation. In Proceedings of the 2015 International Symposium on Signals, Circuits and Systems (ISSCS), Iasi, Romania, 9–10 July 2015; pp. 1–4.

15. Atto, A.M.; Fillatre, L.; Antonini, M.; Nikiforov, I. Simulation of image time series from dynamical fractional brownian fields. In Proceedings of the 2014 IEEE International Conference on Image Processing, Paris, France, 27–30 October 2014; pp. 6086–6090.

16. Nafornita, C.; Isar, A.; Nelson, J.D.B. Regularised, semi-local hurst estimation via generalised lasso and dual-tree complex wavelets. In Proceedings of the 2014 IEEE International Conference on Image Processing, Paris, France, 27–30 October 2014; pp. 2689–2693.

17. Nelson, J.D.B.; Kingsbury, N.G. Dual-tree wavelets for estimation of locally varying and anisotropic fractal dimension. In Proceedings of the 2010 IEEE International Conference on Image Processing, Hong Kong, China, 26–29 September 2010; pp. 341–344.

18. Selesnick, I.W.; Baraniuk, R.G.; Kingsbury, N.G. The dual-tree complex wavelet transform. IEEE Signal Process. Mag. 2005, 22, 123–151.

19. Hudson, T. The Flatiron Building Shot with a Pelang 8 mm Fisheye. Available online: http://commons.wikimedia.org/wiki/File:Flatiron_fisheye.jpg (accessed on 3 February 2021).

20. Statistical Data Analysis Based on the L1-Norm and Related Methods. In Statistical Data Analysis Based on the L1-Norm and Related Methods; Springer Science and Business Media LLC: Berlin, Germany, 2002; pp. 405–416.

21. Lee, Y. Optimization of Position Domain Relative RAIM. In Proceedings of the 21st International Technical Meeting of the Satellite Division of The Institute of Navigation (ION GNSS 2008), Savannah, GA, USA, 16–19 September 2008; pp. 1299–1314.

22. Gratton, L.; Joerger, M.; Pervan, B. Carrier Phase Relative RAIM Algorithms and Protection Level Derivation. J. Navig. 2010, 63, 215–231.

23. Ma, C.; Jee, G.-I.; MacGougan, G.; Lachapelle, G.; Bloebaum, S.; Cox, G.; Garin, L.; Shewfelt, J. GPS Signal Degradation Modeling. In Proceedings of the 14th International Technical Meeting of the Satellite Division of The Institute of Navigation ION GPS 2001, Salt Lake City, UT, USA, 12–14 September 2001; pp. 882–893.

24. Teunissen, P.J.G. An integrity and quality control procedure for use in multi sensor integration. In Proceedings of the 3rd International Technical Meeting of the Satellite Division of The Institute of Navigation ION GPS 1990, Colorado Springs, CO, USA, 19–21 September 1990; pp. 512–522.

25. Carrie, G.; Kubrak, D.; Rozo, F.; Monnerat, M.; Rougerie, S.; Ries, L. Potential Benefits of Flexible GNSS Receivers for Signal Quality Analyses. In Proceedings of the 36th European Workshop on GNSS Signals and Signal Processing, Neubiberg, Germany, 5–6 December 2013.

26. Salós Andrés, C.D. Integrity Monitoring Applied to the Reception of GNSS Signals in Urban Environments. Ph.D. Thesis, INP, Toulouse, France, 2012.

27. Carrie, G.; Kubrak, D.; Monnerat, M.; Lesouple, J. Toward a new definition of a PNT trust level in a challenged multi frequency, multi-constellation environment. In Proceedings of the NAVITEC 2014, Noordwijk, The Netherlands, 3–5 December 2014.

28. Carrie, G.; Kubrak, D.; Monnerat, M. Performances of Multicorrelator-Based Maximum Likelihood Discriminator. In Proceedings of the 27th International Technical Meeting of the Satellite Division of The Institute of Navigation ION GNSS+ 2014, Tampa, FL, USA, 8–12 September 2014; pp. 2720–2727.

29. Nafornita, C.; Isar, A.; Otesteanu, M.; Nafornita, I.; Campeanu, A. The Evaluation of the Effect of NLOS Multipath Propagation in Case of GNSS Receivers. In Proceedings of the 2018 International Symposium on Electronics and Telecommunications, Timisoara, Romania, 8–9 November 2018; pp. 1–4.

| Camera Module | HPE 67% | HPE 95% | HPE 99% | HPL 67% | HPL 95% | HPL 99% | MI |
|---|---|---|---|---|---|---|---|
| OFF | 4.27 | 8.79 | 12.17 | 31.71 | 41.56 | 58.45 | 0 |
| ON | 3.85 | 6.59 | 10.14 | 36.64 | 47.62 | 78.28 | 0 |
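Statistics of the kind reported in the table (67%, 95% and 99% percentiles of HPE and HPL, plus an MI count) could be computed along the following lines. This is a sketch under stated assumptions: positions are given as (east, north) pairs in a local frame, percentiles use linear interpolation between order statistics, and an MI event is counted whenever HPE exceeds HPL at an epoch. The exact definitions used to produce the table are not given here, and all function names are hypothetical.

```python
import math


def horizontal_error(est_en, ref_en):
    """Horizontal distance between estimated and reference (east, north)."""
    return math.hypot(est_en[0] - ref_en[0], est_en[1] - ref_en[1])


def percentile(values, q):
    """Percentile q in [0, 100] with linear interpolation (assumed method)."""
    if not values:
        raise ValueError("values must not be empty")
    data = sorted(values)
    pos = (len(data) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    frac = pos - lo
    return data[lo] * (1.0 - frac) + data[hi] * frac


def summarize(hpe, hpl, quantiles=(67, 95, 99)):
    """Summarize per-epoch HPE and HPL series of equal length."""
    if len(hpe) != len(hpl):
        raise ValueError("hpe and hpl must have the same length")
    return {
        "hpe": {q: percentile(hpe, q) for q in quantiles},
        "hpl": {q: percentile(hpl, q) for q in quantiles},
        # Assumption: an MI event is an epoch where the error exceeds its bound.
        "mi": sum(1 for e, l in zip(hpe, hpl) if e > l),
    }
```

Under this convention, an MI count of 0 means that the protection level bounded the horizontal error at every epoch of the run.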

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


David, C.; Nafornita, C.; Gui, V.; Campeanu, A.; Carrie, G.; Monnerat, M. GNSS Localization in Constraint Environment by Image Fusing Techniques. Remote Sens. 2021, 13, 2021. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13102021
