Experimental Assessment of UWB and Vision-Based Car Cooperative Positioning System

Abstract

1. Introduction

2. Related Works and Objective of the Paper

- UWB ranging performance in general, for example, the success rate of ranging measurements and the dependence of the ranging accuracy on the range.

- Positioning performance of the platforms using only infrastructure ranging; i.e., V2I-based positioning.

- Assessment of vision and UWB relative positioning, i.e., V2V-based relative positioning.

- Cooperative positioning of the platforms based on V2V and V2I ranges.

- Cooperative positioning of the platforms based on V2V and V2I ranges and partial GPS/GNSS data.

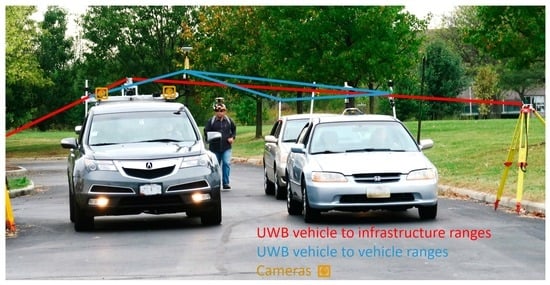

3. Experiment Setup

3.1. Setting Up the Local Positioning System

3.2. Trajectory

3.3. Platform Setup

- Sensors on the GPSVan: all UWB devices mounted on the vehicle, two GNSS receivers and one GoPro camera (GPR1).

- Sensors on other cars: GNSS receiver, two Pozyx UWB devices (on the right and on the left of each vehicle), and one TimeDomain UWB transceiver.

- Static network of 10 TimeDomain UWB transceivers.

4. Data Characterisation

4.1. TimeDomain Static UWB Network

- Average error;

- Median error;

- Standard deviation of the error;

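- Median absolute deviation (MAD) = $\operatorname{median}\left(\left|e - \operatorname{median}(e)\right|\right)$, where $e$ denotes the ranging error;

- Median absolute error = $\operatorname{median}\left(|e|\right)$;

- Root mean square (RMS) error = $\sqrt{\frac{1}{n}\sum_{i=1}^{n} e_i^2}$, where $e_i$ is the $i$-th of the $n$ error samples;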

- Percentage of errors larger than 1 m;

- Percentage of unreliable measurements according to the QR criteria mentioned above.
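The statistics listed above can be computed from an array of ranging errors as in the following minimal sketch. The function name `ranging_error_stats`, the sample values, and the use of the absolute error for the 1 m threshold are assumptions made for illustration; the QR-based reliability check is omitted because it depends on device-specific quality indicators.

```python
import numpy as np

def ranging_error_stats(errors_m, threshold_m=1.0):
    """Summary statistics for a 1-D array of ranging errors in metres."""
    e = np.asarray(errors_m, dtype=float)
    med = np.median(e)
    return {
        "mean": float(np.mean(e)),
        "median": float(med),
        "std": float(np.std(e, ddof=1)),               # sample standard deviation
        "mad": float(np.median(np.abs(e - med))),      # median absolute deviation
        "median_abs_err": float(np.median(np.abs(e))),
        "rms": float(np.sqrt(np.mean(e ** 2))),
        # Assumption: "errors larger than 1 m" refers to the absolute error.
        "pct_over_threshold": float(100.0 * np.mean(np.abs(e) > threshold_m)),
    }

# Hypothetical error samples (metres), with one outlier.
stats = ranging_error_stats([-0.2, 0.1, 0.0, 0.3, 2.0])
print(stats)
```

With a single large outlier in the sample, the median-based statistics stay close to the bulk of the errors while the RMS is dominated by the outlier, which is why both kinds of indicators are reported.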

4.2. TimeDomain V2V UWB Network

4.3. Pozyx V2V UWB Network

4.4. NLOS Detection and UWB Calibration

4.5. GoPro Video

5. Positioning Approaches

- V2I UWB positioning: vehicle position is estimated by exploiting only UWB range measurements with the static UWB infrastructure (Section 5.1).

- Vision + UWB relative positioning: positions of the other vehicles are computed by the GPSVan given the V2V UWB ranges and the visual information provided by the GoPro camera GPR1 (Section 5.2).

- Cooperative positioning: vehicle position is estimated by exploiting V2V UWB range measurements, V2I measurements, visual information, and partial GPS/GNSS data (Section 5.3).

5.1. V2I UWB Positioning

5.2. Relative Positioning with Vision and UWB

- if just one UWB range measurement is available (assume, without loss of generality, that it is provided by the right Pozyx network), then the vehicle position is estimated as the intersection between the line through the GPR1 optical centre along the observed direction of the vehicle (black solid line in Figure 15) and the circumference associated with the range measurement (light blue dashed circumference in Figure 15);

- if two UWB range measurements are available (one from each Pozyx network), then the vehicle position relative to the GPSVan is estimated together with the vehicle heading by combining the three measurements, i.e., the two ranges and the direction. Define a car local reference system from the car heading direction at the considered time and the corresponding transverse direction (see Figure 16). In the 2D space, the heading direction is uniquely identified by a single angle, and once the heading direction is known, the transverse direction is uniquely determined as well. Assume also that the positions of the two Pozyx devices are known in the car local reference system. Then, the vehicle relative position and orientation with respect to the GPSVan are determined by solving an optimisation problem that fits the three measurements to the distances between the Pozyx devices on the two cars and the orientation predicted from the estimated position. The solution of this optimisation problem can be obtained quickly with the Gauss–Newton algorithm, with a stopping condition that imposes a threshold on the variation of the computed solution between iterations (down to the millimetre level for the estimated position).
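The two cases above can be sketched as follows. This is only an illustrative sketch under stated assumptions, not the implementation used in the experiment: the device offsets (`a_left`, `a_right` on the GPSVan, `b_left`, `b_right` on the tracked car), the unweighted residuals, the angular tolerance, and all function names are hypothetical. The single-range case is a ray and circle intersection, which is then used to initialise a Gauss–Newton iteration for the two-range case.

```python
import numpy as np

def rot(theta):
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def ray_circle_intersection(origin, bearing, centre, radius):
    """Intersection (larger root) of the ray from `origin` along `bearing` with a circle."""
    d = np.array([np.cos(bearing), np.sin(bearing)])
    oc = origin - centre
    b = d @ oc
    disc = b * b - (oc @ oc - radius ** 2)
    if disc < 0:
        return None                      # the ray misses the circle
    t = -b + np.sqrt(disc)
    return origin + t * d if t >= 0 else None

def residuals_and_jacobian(x, meas, geom):
    """Residuals of the two ranges and the bearing, with their analytic Jacobian."""
    p, theta = x[:2], x[2]
    r_left, r_right, bearing = meas
    cam, a_left, a_right, b_left, b_right = geom
    R = rot(theta)
    dR = np.array([[-np.sin(theta), -np.cos(theta)],
                   [np.cos(theta), -np.sin(theta)]])   # derivative of R w.r.t. theta
    f, J = np.zeros(3), np.zeros((3, 3))
    pairs = [(a_left, b_left, r_left), (a_right, b_right, r_right)]
    for k, (a, b, r) in enumerate(pairs):
        diff = p + R @ b - a             # car device minus GPSVan device
        dist = np.linalg.norm(diff)
        u = diff / dist
        f[k] = dist - r
        J[k, :2] = u
        J[k, 2] = u @ (dR @ b)
    d = p - cam
    ang = np.arctan2(d[1], d[0]) - bearing
    f[2] = np.arctan2(np.sin(ang), np.cos(ang))        # wrap to (-pi, pi]
    J[2, :2] = np.array([-d[1], d[0]]) / (d @ d)
    return f, J

def gauss_newton(x0, meas, geom, tol_pos=1e-3, tol_ang=1e-4, max_iter=50):
    """Iterate until the position update is below 1 mm (tol_ang is an assumption)."""
    x = np.array(x0, dtype=float)
    for _ in range(max_iter):
        f, J = residuals_and_jacobian(x, meas, geom)
        step = np.linalg.lstsq(J, -f, rcond=None)[0]
        x += step
        if np.linalg.norm(step[:2]) < tol_pos and abs(step[2]) < tol_ang:
            break
    return x

# Hypothetical geometry (metres): camera at the origin, devices 0.8 m off the centreline.
cam = np.array([0.0, 0.0])
a_left, a_right = np.array([0.0, 0.8]), np.array([0.0, -0.8])
b_left, b_right = np.array([0.0, 0.8]), np.array([0.0, -0.8])
geom = (cam, a_left, a_right, b_left, b_right)

# Noise-free synthetic measurements for a car at (10, 2) m with a 0.2 rad heading.
p_true, theta_true = np.array([10.0, 2.0]), 0.2
r_left = np.linalg.norm(p_true + rot(theta_true) @ b_left - a_left)
r_right = np.linalg.norm(p_true + rot(theta_true) @ b_right - a_right)
bearing = np.arctan2(p_true[1] - cam[1], p_true[0] - cam[0])

p0 = ray_circle_intersection(cam, bearing, a_right, r_right)   # one-range estimate
estimate = gauss_newton(np.append(p0, 0.0), (r_left, r_right, bearing), geom)
print(estimate)   # position (x, y) and heading angle
```

With real, noisy data the range and bearing residuals would normally be weighted by their respective uncertainties; the unweighted form is kept here only for brevity.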

5.3. Cooperative Positioning

6. Results and Discussion

6.1. Positioning with a Static UWB Infrastructure (V2I)

6.2. Relative Positioning with Vision and UWB

6.3. Cooperative Positioning

- V2I: positioning obtained by considering only UWB V2I measurements. Since V2I measurements are available only for the GPSVan (here and in all the cases where they are used), positions of all the other cars inside the main road area are obtained just as Kalman predictions from the last available GNSS measurements, i.e., their trajectories will be straight lines until GNSS updates are available.

- V2I + V2V cooperative approach: cooperative positioning obtained by considering UWB V2I and V2V measurements.

- V2I + V2V + vision cooperative approach: cooperative positioning obtained by considering UWB V2I and V2V measurements and the information coming from the vision-based relative positioning system.

- V2I + V2V + partial random GNSS availability: cooperative positioning obtained by considering UWB V2I and V2V measurements and a certain percentage of GNSS measurements randomly available in the main road area (car and time instant of any available measurement are randomly selected). The percentage of available GNSS measurements varies from 0.5% to 4%.

- V2V + GNSS available on certain vehicles: cooperative positioning obtained by considering all UWB V2V measurements and GNSS measurements available on certain cars, varying the number of cars from 1 to 3.

- V2I + V2V: GPSVan is provided with UWB V2I measurements, whereas all the other cars can only exploit UWB V2V measurements to determine their positions.

- V2V + GNSS on one vehicle: GPSVan is provided with GNSS measurements, whereas all the other cars can only exploit UWB V2V measurements to determine their positions.

- V2V + GNSS on two vehicles: GPSVan and Honda are provided with GNSS measurements, whereas the other cars can only exploit UWB V2V measurements to determine their positions.

- V2V + GNSS on three vehicles: GPSVan, Honda, and Acura are provided with GNSS measurements, whereas Toyota can only exploit UWB V2V measurements to determine its position.
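In the V2I-only case above, cars without measurements are propagated by Kalman prediction alone, which is why their estimated tracks are straight lines. The sketch below illustrates this with a constant-velocity model; the motion model, the process noise level `q`, and the initial state and covariance are assumptions for illustration, since the filter settings are not specified here.

```python
import numpy as np

def cv_predict(x, P, dt, q=0.5):
    """Prediction step of a constant-velocity Kalman filter, state (x, y, vx, vy)."""
    F = np.eye(4)
    F[0, 2] = F[1, 3] = dt
    # Process noise of a white-acceleration model with spectral density q (assumed).
    G = np.array([[dt**3 / 3, dt**2 / 2], [dt**2 / 2, dt]])
    Q = q * np.kron(G, np.eye(2))
    return F @ x, F @ P @ F.T + Q

# Hypothetical state after the last GNSS update: position (m) and velocity (m/s).
x = np.array([0.0, 0.0, 10.0, 2.0])
P = np.diag([0.5, 0.5, 0.2, 0.2])

track, pos_sigma = [x[:2].copy()], [np.sqrt(P[0, 0])]
for _ in range(10):                       # 1 s of outage at 10 Hz
    x, P = cv_predict(x, P, dt=0.1)
    track.append(x[:2].copy())
    pos_sigma.append(np.sqrt(P[0, 0]))
track = np.array(track)
print(track[-1], pos_sigma[-1])
```

The predicted positions all lie on the line defined by the last estimated velocity, while the position uncertainty grows at every step until a new measurement arrives.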

6.4. Discussion

- First, the results reported in Table 7 show that UWB V2V measurements allow car relative distances to be assessed with an uncertainty approximately at meter level. The uncertainty drops to decimeter level when ranges from at least two other cars are available on every vehicle. Hence, in such working conditions, the relative distance between cars can be assessed quite effectively.

- The first goal of Table 8 is to evaluate the collaborative positioning performance of the V2I + V2V approach: the obtained 2D positioning error is at meter level (median = 2.4 m, MAD = 3.2 m), with a clear improvement in good V2V measurement conditions (median error = 2.0 m, MAD = 2.0 m). As expected, the positioning error increases with the outage time (see Figure 20a): the median error stays below 2 m for approximately 8 s of outage. It is worth noting that V2I ranges were available only for the GPSVan; hence, V2I enables the absolute positioning of the GPSVan, whereas the positions of the other cars can be assessed only through the V2V measurements.

- Then, Table 8 and Figure 20b show that introducing vision in the positioning algorithm reduces both the error (median = 2.3 m, MAD = 2.0 m) and its growth with the outage time (the median error stays below 2 m for approximately 12 s of outage). As in the V2I + V2V case, the positioning error is lower in good V2V measurement conditions (median error = 1.5 m, MAD = 1.6 m). Since frames are currently extracted from the video and processed at 10 Hz, processing them at the original video frame rate is expected to improve the overall positioning results.

- Figure 22 shows an example of the (b) V2I + V2V and (c) V2I + V2V + vision performance on a portion of the car tracks, to be compared with (a) the reference one. Comparing the car positions in (b) with those in (a), the relative distances between cars are, as expected, quite consistent with the correct ones when the corresponding range measurements are available. However, since only the GPSVan absolute position can be assessed (from the V2I ranges), the absolute positioning problem for the other cars is ill-posed. Consider the static positioning problem at a given time instant: any rotation of the real car configuration, pivoting on the GPSVan, is equally acceptable. Instead, since the vision-aided solution also includes some (indirect) information on the orientation of the car configuration (i.e., the angle in Figure 7), it is less prone to this ill-posedness.

- The above considerations suggest that, in order to avoid ill-posed solutions in the UWB-based cooperative positioning, either some information shall be provided by external sensors (e.g., vision), or more than one vehicle shall be provided with measurements to enable absolute positioning, e.g., V2I measurements.

- The results reported in Table 9 investigate the absolute positioning performance that can be achieved when GNSS is partially available in the main road area. In particular, the performance is evaluated while varying the percentage of available GNSS measurements. Comparing the results of Table 9 with those of Table 10 makes apparent how important a sufficient number of successful V2V range measurements is for effectively propagating to the other vehicles the information provided by the few available GNSS positions. In particular, the results obtained when sufficient V2V ranges are available (Table 10) with a given percentage of GNSS measurements are similar to those in Table 9 with double that percentage. Overall, the obtained positioning error is at meter level, reaching sub-meter level in good working conditions for the V2V communications and with 4% of the GNSS measurements (last column in Table 10). The median error is usually lower than 2 m for more than 20 s of outage when at least 2% of the GNSS measurements are available, as shown in Figure 23. These results confirm that a cooperative approach combined with an effective V2V ranging system can reduce the need for GNSS measurements when aiming at meter/sub-meter positioning of groups of vehicles.

- Finally, the last three columns of Table 11 and Table 12 evaluate the performance of the cooperative positioning (evaluated on the same car, e.g., Toyota) when varying the number of vehicles provided with GNSS measurements in the main road area. The results show a significant improvement when this number increases from 1 to 2, whereas the difference between 2 and 3 is quite modest. As in the previously considered cases, Table 12 confirms that good V2V ranging is very important for the effectiveness of the cooperative approach. Furthermore, Figure 25 shows that, although two GNSS-equipped vehicles are not theoretically sufficient to rule out ill-posedness of the positioning solution, they are enough to avoid it in the considered example and in most real-world scenarios as well.
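The ill-posedness discussed above can be checked numerically. In the sketch below, which uses hypothetical car coordinates and helper names, rotating the whole configuration about the single absolutely positioned vehicle leaves every V2V range unchanged; with two absolutely positioned vehicles, a reflection across the line joining them still preserves all ranges.

```python
import numpy as np

def pairwise_ranges(points):
    """Matrix of Euclidean distances between all pairs of 2D points."""
    diff = points[:, None, :] - points[None, :, :]
    return np.linalg.norm(diff, axis=-1)

def rotate_about(points, pivot, angle):
    c, s = np.cos(angle), np.sin(angle)
    R = np.array([[c, -s], [s, c]])
    return (points - pivot) @ R.T + pivot

def reflect_across(points, a, b):
    """Mirror the points across the line through a and b."""
    d = (b - a) / np.linalg.norm(b - a)
    rel = points - a
    return a + 2.0 * np.outer(rel @ d, d) - rel

# Hypothetical 2D car positions (metres); row 0 plays the role of the GPSVan.
cars = np.array([[0.0, 0.0], [12.0, 1.0], [25.0, -0.5], [40.0, 2.0]])

# One absolutely positioned vehicle: any rotation about it fits the ranges equally well.
rotated = rotate_about(cars, cars[0], np.deg2rad(35.0))
ranges_kept_rot = np.allclose(pairwise_ranges(cars), pairwise_ranges(rotated))
shift_rot = np.linalg.norm(rotated[1:] - cars[1:], axis=1)

# Two absolutely positioned vehicles (rows 0 and 1): only a mirror ambiguity remains.
mirrored = reflect_across(cars, cars[0], cars[1])
ranges_kept_mir = np.allclose(pairwise_ranges(cars), pairwise_ranges(mirrored))

print(ranges_kept_rot, shift_rot, ranges_kept_mir)
```

In practice the mirror solution is usually ruled out by the vehicle dynamics or by any additional directional information, which is consistent with the observation that GNSS on two vehicles was enough in the considered example.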

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

Table 1.

| Platforms | GPS | TD V2I | TD V2V | Pozyx-L | Pozyx-R | IMU | Camera |
|---|---|---|---|---|---|---|---|
| GPSVan, reference vehicle | X | X | X | X | X | X | X |
| Honda Accord | X | X | X | X | | | |
| Acura SUV | X | X | X | X | | | |
| Toyota Corolla | X | X | X | X | | | |
| Bicycle | X | X | | | | | |
| Pedestrian | X | X | X | X | | | |

Table 2.

| | V2I TD | V2V TD | V2V Pozyx R | V2V Pozyx L |
|---|---|---|---|---|
| Avg. measurement sample period [s] | 0.032 | 0.031 | 0.018 | 0.018 |
| Avg. ranging loop period [s] | 0.32 | 0.24 | 0.16 | 0.15 |
| Avg. success rate [%] | 13.4 | 34.9 | 39.0 | 31.3 |
| Max. range [m] | 96 | 168 | 139 | 73 |

Table 3.

| | V2I TD | V2V TD | V2V Pozyx R | V2V Pozyx L |
|---|---|---|---|---|
| Average [cm] | 6 | 1 | 27 | 15 |
| Median [cm] | −1 | −1 | 20 | 11 |
| Stand. dev. [cm] | 74 | 108 | 70 | 69 |
| MAD [cm] | 18 | 45 | 37 | 29 |
| Mean abs. err. [cm] | 17 | 41 | 41 | 30 |
| RMS [cm] | 74 | 109 | 75 | 71 |
| Max [m] | 13 | 21 | 39 | 46 |
| % errors > 1 m | 1.8 | 6.4 | 9.6 | 4.3 |
| % unreliable ranges (QR) | 3.1 | 6.0 | – | – |

Table 4.

| | V2I TD | V2V TD | V2V Pozyx R | V2V Pozyx L |
|---|---|---|---|---|
| Data examined by RF (%) | 60.8 | 44.7 | 89.6 | 88.3 |
| RF accuracy (%) | 99.6 | 97.6 | 94.1 | 96.7 |
| Median [cm] | 0 | 0 | 2 | 2 |
| MAD [cm] | 13 | 36 | 33 | 27 |
| Mean abs. err. [cm] | 12 | 34 | 32 | 27 |
| RMS [cm] | 61 | 88 | 67 | 68 |
| % errors > 1 m | 1.4 | 5.2 | 5.8 | 3.4 |

Table 5.

| | All Area | Main Road | Main Road Along-Track | Main Road Across-Track |
|---|---|---|---|---|
| Median [cm] | 16 | 12 | 2 | −3 |
| MAD [cm] | 268 | 31 | 15 | 21 |
| Mean abs. err. [cm] | 182 | 30 | 15 | 21 |
| RMS [cm] | 764 | 73 | 36 | 63 |
| % errors > 1 m | 13.3 | 3.1 | 2.7 | 4.6 |

Table 6.

| | 2D Error | Heading | Transverse |
|---|---|---|---|
| Median [cm] | 24 | 12 | −11 |
| MAD [cm] | 50 | 26 | 37 |
| Mean abs. err. [cm] | 53 | 31 | 39 |
| RMS [cm] | 91 | 58 | 70 |
| % errors > 1 m | 16.7 | 8.9 | 11.0 |

Table 7.

| | V2I | V2I + V2V | V2I + V2V () | V2I + V2V () |
|---|---|---|---|---|
| Median [m] | 0.0 | 0.00 | 0.00 | 0.00 |
| MAD [m] | 16.0 | 0.80 | 0.30 | 0.24 |
| Mean abs. err. [m] | 11.5 | 0.79 | 0.30 | 0.24 |
| RMS [m] | 34.2 | 2.36 | 0.52 | 0.41 |

Table 8.

| | V2I | V2I + V2V | V2I + V2V () | V2I + V2V + Vision | V2I + V2V + Vision () |
|---|---|---|---|---|---|
| Median [m] | 13.4 | 2.4 | 2.0 | 2.3 | 1.5 |
| MAD [m] | 33.6 | 3.2 | 2.0 | 2.0 | 1.6 |
| Mean abs. err. [m] | 31.2 | 4.2 | 3.0 | 3.1 | 2.4 |
| RMS [m] | 61.7 | 5.9 | 4.0 | 4.2 | 3.3 |

Table 9.

| | 0.5% | 1% | 2% | 4% |
|---|---|---|---|---|
| Median [m] | 2.1 | 1.4 | 0.9 | 0.6 |
| MAD [m] | 4.1 | 2.8 | 1.7 | 0.9 |
| Mean abs. err. [m] | 4.5 | 3.1 | 1.9 | 1.1 |
| RMS [m] | 8.1 | 5.9 | 3.7 | 1.9 |

Table 10.

| | 0.5% | 1% | 2% | 4% |
|---|---|---|---|---|
| Median [m] | 1.5 | 1.0 | 0.7 | 0.5 |
| MAD [m] | 2.4 | 1.5 | 0.8 | 0.5 |
| Mean abs. err. [m] | 2.8 | 1.8 | 1.1 | 0.7 |
| RMS [m] | 4.6 | 3.0 | 1.7 | 1.0 |

Table 11.

| | V2I + V2V | V2V + GNSS on 1 car | V2V + GNSS on 2 cars | V2V + GNSS on 3 cars |
|---|---|---|---|---|
| Median [m] | 4.0 | 3.7 | 1.2 | 1.2 |
| MAD [m] | 6.6 | 5.5 | 3.6 | 2.9 |
| Mean abs. err. [m] | 7.7 | 6.6 | 3.5 | 3.0 |
| RMS [m] | 12.8 | 11.5 | 8.5 | 5.9 |

Table 12.

| | V2I + V2V | V2V + GNSS on 1 car | V2V + GNSS on 2 cars | V2V + GNSS on 3 cars |
|---|---|---|---|---|
| | () | () | () | () |
| Median [m] | 4.0 | 3.7 | 0.8 | 0.8 |
| MAD [m] | 4.4 | 4.3 | 1.2 | 0.9 |
| Mean abs. err. [m] | 6.0 | 5.5 | 1.5 | 1.2 |
| RMS [m] | 8.3 | 7.6 | 2.7 | 2.2 |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Masiero, A.; Toth, C.; Gabela, J.; Retscher, G.; Kealy, A.; Perakis, H.; Gikas, V.; Grejner-Brzezinska, D. Experimental Assessment of UWB and Vision-Based Car Cooperative Positioning System. Remote Sens. 2021, 13, 4858. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13234858