Image-Aided LiDAR Mapping Platform and Data Processing Strategy for Stockpile Volume Estimation

Abstract

1. Introduction

2. Related Work

- Rather than relying on a system-driven approach that uses sophisticated, expensive encoders and/or inertial measurement units, the SMART system adopts a data-driven strategy for stockpile volume estimation that uses only data acquired through a simple, cost-effective procedure;

- It is easy to deploy and has the potential for permanent installation in indoor facilities (after suitable modifications of the setup) for continuous monitoring of stockpiles; and,

- Low-cost, high-precision image/LiDAR hybrid technology such as SMART can encourage system manufacturers to develop even less expensive stockpile monitoring solutions.

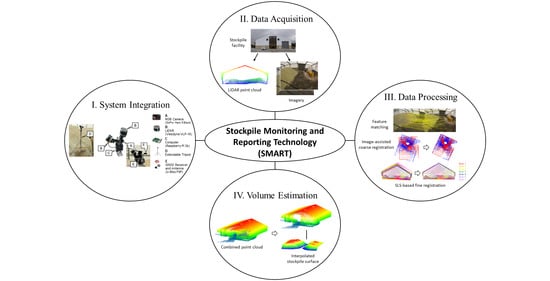

3. SMART System Integration and Field Surveys

3.1. SMART System Components

- LiDAR units: In order to derive a 3D point cloud of the stockpile in question, LiDAR data is first acquired through the VLP-16 sensors. The Velodyne VLP-16 3D LiDAR [48] has a vertical field of view (FOV) of 30° and a 360° horizontal FOV. This FOV is facilitated by the unit construction, which consists of 16 radially oriented laser rangefinders that are aligned vertically from −15° to +15° and designed for 360° internal rotation. The sensor weight is 0.83 kg and the point capture rate in a single return mode is 300,000 points per second. The range accuracy is ±3 cm with a maximum measurement range of 100 m. The vertical angular resolution is 2° and horizontal angular resolution is 0.1–0.4°. The angular resolution of the LiDAR unit enables an average point spacing within one scan line of 3 cm, and between neighboring scan lines of 30 cm at 5 m range (average distance to the salt surface). Given the sensor specifications, two LiDAR units with cross orientation are adopted to increase the area covered by the SMART system in each instance of data collection. The horizontal coverage of the SMART LiDAR units is schematically illustrated in Figure 2. As shown in this figure, two orthogonally installed LiDAR sensors simultaneously scan the environment in four directions. The 360° horizontal FOV of the VLP-16 sensors implies that the entire salt facility within the system’s vertical coverage is captured by the LiDAR units. In addition to the possibility of covering a larger area of the stockpile, this design allows for scanning surrounding structures, thereby increasing the likelihood of acquiring diverse features in all directions from a given scan. These features (linear, planar, or cylindrical) can be used for the alignment of LiDAR data collected from multiple scans to derive point clouds in a single reference frame.

- RGB camera: The SMART system uses a GoPro Hero 9 camera, which weighs 158 g. The camera has a 5184 × 3888 CMOS array with a 1.4 µm pixel size and a lens with a nominal focal length of 3 mm. A horizontal FOV of 118° and a vertical FOV of 69° enable the camera to cover roughly 460 square meters at a 10 m range. A schematic diagram of the camera coverage from the SMART system is depicted in Figure 2. In addition to providing RGB information for the stockpile, images captured by the RGB camera are used to assist the initial alignment of the LiDAR point clouds collected at a given station. This process is discussed in detail in Section 4.3.

- Computer module: A Raspberry Pi 3b computer is installed on the system body and is used for LiDAR data acquisition and storage. Both LiDAR sensors are triggered simultaneously through a physical button that has a wired connection to the computer module. Once the button is pushed, the Raspberry Pi initiates a 10 s data capture from the two LiDAR units. Meanwhile, the RGB camera is controlled wirelessly (over a Wi-Fi connection) through a mobile device, which gives the operator access to the camera’s live view. All captured images are transferred to the processing computer through a wireless network, whereas the LiDAR data is transferred from the Raspberry Pi using a USB drive. Figure 3 shows the block diagram of the system, indicating the triggering signals and the communication wires/ports between the onboard sensors and the Raspberry Pi module.

- GNSS receiver and antenna: As one potential way to enhance the SMART system capabilities, a GNSS receiver and antenna are included among the system components. The purpose of the GNSS unit is to provide location information when operating in outdoor environments. This location information serves as an additional input to aid the alignment of point clouds from multiple positions of the system. In this study, however, data collection targets a more challenging indoor environment. Therefore, the GNSS positioning capabilities of the system are not utilized.

- System body: The LiDAR sensors, RGB camera, and GNSS unit of the SMART system are mounted on a metal plate attached to an extendable tripod pole; together, the plate and pole are considered the system body. The computer module and power source are located on the tripod pole. The extendable tripod, with a maximum height of 6 m, helps the system minimize occlusions when collecting data in large salt storage facilities and/or from stockpiles with complex shapes.
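The camera coverage quoted in the component list can be checked with a few lines of arithmetic. The following is a minimal sketch, assuming an ideal distortion-free (pinhole) camera that views a flat surface perpendicular to its optical axis; the function name `planar_footprint` is hypothetical and is not part of the SMART software.

```python
import math


def planar_footprint(h_fov_deg: float, v_fov_deg: float, range_m: float):
    """Return (width, height, area) of the rectangle imaged on a plane.

    Assumption: ideal pinhole camera, plane perpendicular to the optical
    axis at distance range_m. Lens distortion is ignored.
    """
    width = 2.0 * range_m * math.tan(math.radians(h_fov_deg) / 2.0)
    height = 2.0 * range_m * math.tan(math.radians(v_fov_deg) / 2.0)
    return width, height, width * height


# FOV and range values taken from the camera description.
width, height, area = planar_footprint(118.0, 69.0, 10.0)
print(f"{width:.1f} m x {height:.1f} m = {area:.0f} m^2")
```

Under these assumptions the footprint is about 33.3 m × 13.7 m, or roughly 458 square meters, which is in line with the figure of roughly 460 square meters quoted for the camera.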

3.2. System Operation and Data Collection Strategy

3.3. Dataset Description

4. Data Processing Workflow

4.1. System Calibration

4.2. Scan Line-Based Segmentation

- (a) Scans are acquired by spinning multi-beam LiDAR unit(s), i.e., VLP-16;

- (b) LiDAR scans are acquired inside facilities bounded by planar surfaces that are sufficiently distributed in different orientations/locations, e.g., floor, walls, and ceiling;

- (c) A point cloud exhibits significant variability in point density, as shown in Figure 8.

4.3. Image-Based Coarse Registration

4.4. Feature Matching and Fine Registration of Point Clouds from a Single Station

4.5. Coarse Registration of Point Clouds from Multiple Stations

4.6. Volume Estimation

5. Experimental Results and Discussion

5.1. System Calibration Results

5.2. Results of Image-Based Coarse Registration at a Single Station

- Number of matches/projection residuals: For the automated approaches, the number of matches signifies the ability to establish enough conjugate features between two successive images. In the case of manual measurements, a few reliable conjugate points with a relatively good distribution are established. The projection residual, which is the RMSE of the differences between the coordinates of features projected from the left image to the right image and their corresponding features in the right image, can be used to infer the quality of the established matches and/or the estimated rotation angles. A large projection residual indicates a high percentage of matching outliers and, consequently, inaccurate estimates of the incremental pole rotation angles.

- Incremental pole rotation angles (Δω, Δφ, and Δκ): Taking the results from manual measurements as a reference, this criterion shows how accurately the automated approaches can estimate the incremental pole rotation between two scans. As mentioned earlier, the nominal incremental pole rotation angles between two scans (i.e., Δω, Δφ, and Δκ) are 0.0°, 0.0°, and −30.0°, respectively.

- Processing time: For the automated approaches, this refers to the processing time for the feature detection, descriptor generation, and matching steps. In the case of manual measurements, this refers to the approximate time required for manually identifying point correspondences between the two images.
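The projection residual described in the first criterion can be sketched as follows. This is only an illustration: it assumes the RMSE is taken over the Euclidean pixel distance of each matched pair (rather than over each coordinate separately), and the function name and sample coordinates are hypothetical.

```python
import math


def projection_rmse(projected, observed):
    """RMSE (pixels) between projected and observed feature locations.

    projected: (x, y) positions of left-image features projected into
               the right image.
    observed:  (x, y) positions of the matched right-image features.
    Assumption: residual per match is the Euclidean pixel distance.
    """
    if not projected or len(projected) != len(observed):
        raise ValueError("need two non-empty lists of equal length")
    squared = sum(
        (xp - xo) ** 2 + (yp - yo) ** 2
        for (xp, yp), (xo, yo) in zip(projected, observed)
    )
    return math.sqrt(squared / len(projected))


# Hypothetical coordinates, for illustration only.
projected_pts = [(100.0, 200.0), (300.0, 400.0)]
observed_pts = [(103.0, 204.0), (300.0, 400.0)]
rmse = projection_rmse(projected_pts, observed_pts)
print(f"projection residual: {rmse:.2f} px")
```

A set of matches contaminated by outliers yields a much larger value than a clean set, which is why this quantity serves as a quality indicator for the estimated rotation angles.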

5.3. Fine Registration Results

5.4. Stockpile Volume Estimation

6. Discussion

7. Conclusions and Recommendations for Future Work

- An integrated hardware system composed of an RGB camera, two LiDAR units, and an extendable tripod has been developed. This system addresses the limitations of current stockpile volume estimation techniques by providing a time-efficient, cost-effective, and scalable solution for routine monitoring of stockpiles with varying sizes and shape complexity.

- An image-aided coarse registration technique has been designed to mitigate challenges in identifying common features in sparse LiDAR scans with insufficient overlap. This new approach uses the designed system characteristics and operation to derive a reliable set of conjugate points in successive images for precise estimation of the incremental pole rotation at a given station.

- A scan line-based segmentation (SLS) approach for extracting planar features from spinning multi-beam LiDAR scans has been proposed. The SLS can handle significant variability in point density and provides a set of planar features that could be used for reliable fine registration.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Desai, J.; Mahlberg, J.; Kim, W.; Sakhare, R.; Li, H.; McGuffey, J.; Bullock, D.M. Leveraging Telematics for Winter Operations Performance Measures and Tactical Adjustment. J. Transp. Technol. 2021, 11, 611–627.

2. FHWA Road Weather Management Program. Available online: https://ops.fhwa.dot.gov/weather/weather_events/snow_ice.htm (accessed on 23 October 2021).

3. Kuemmel, D.; Hanbali, R. Accident Analysis of Ice Control Operations. In Transportation Research Center: Accident Analysis of Ice Control Operations; Marquette University: Milwaukee, WI, USA, 1992.

4. Ketcham, S.; Minsk, L.D.; Blackburn, R.R.; Fleege, E.J. Manual of Practice for an Effective Anti-Icing Program: A Guide for Highway Winter Maintenance Personnel; No. FHWA-RD-95-202; Federal Highway Administration: Washington, DC, USA, 1996.

5. State of New Hampshire Department of Transportation. Winter Maintenance Snow Removal and Ice Control Policy; New Hampshire Department of Transportation: Concord, NH, USA, 2001. Available online: https://www.nh.gov/dot/org/operations/highwaymaintenance/documents/WinterMaintSnowandIcePolicy.pdf (accessed on 19 November 2021).

6. Mayfield, M.; Narayanaswamy, K.; Jackson, D.W.; Systematics, C. Determining the State of the Practice in Data Collection and Performance Measurement of Stormwater Best Management Practices; U.S. Department of Transportation: Washington, DC, USA, 2014.

7. Steinfeld, D.E.; Riley, S.A.; Wilkinson, K.M.; Landis, T.D.; Riley, L.E. Roadside Revegetation: An Integrated Approach to Establishing Native Plants (No. FHWA-WFL/TD-07-005); United States Federal Highway Administration, U.S. Department of Transportation: Washington, DC, USA, 2007.

8. Iyer, A.V.; Dunlop, S.R.; Senicheva, O.; Thakkar, D.J.; Yan, R.; Subramanian, K.; Vasu, S.; Siddharthan, G.; Vasandani, J.; Saurabh, S. Improve and Gain Efficiency in Winter Operations; Joint Transportation Research Program Publication No. FHWA/IN/JTRP-2021/11; Purdue University: West Lafayette, IN, USA, 2021.

9. He, H.; Chen, T.; Zeng, H.; Huang, S. Ground Control Point-Free Unmanned Aerial Vehicle-Based Photogrammetry for Volume Estimation of Stockpiles Carried on Barges. Sensors 2019, 19, 3534.

10. Yilmaz, H.M. Close Range Photogrammetry in Volume Computing. Exp. Tech. 2010, 34, 48–54.

11. Berra, E.F.; Peppa, M.V. Advances and Challenges of UAV SFM MVS Photogrammetry and Remote Sensing: Short Review. In Proceedings of the IEEE Latin American GRSS & ISPRS Remote Sensing Conference (LAGIRS), Santiago, Chile, 22–26 March 2020.

12. Mora, O.E.; Chen, J.; Stoiber, P.; Koppanyi, Z.; Pluta, D.; Josenhans, R.; Okubo, M. Accuracy of Stockpile Estimates Using Low-Cost sUAS Photogrammetry. Int. J. Remote Sens. 2020, 41, 4512–4529.

13. Fay, L.; Akin, M.; Shi, X.; Veneziano, D. Revised Chapter 8, Winter Operations and Salt, Sand and Chemical Management, of the Final Report on NCHRP 25-25(04). In Western Transportation Institute; Montana State University: Bozeman, MT, USA, 2013.

14. Hugenholtz, C.H.; Walker, J.; Brown, O.; Myshak, S. Earthwork Volumetrics with an Unmanned Aerial Vehicle and Softcopy Photogrammetry. J. Surv. Eng. 2015, 141, 06014003.

15. United States Department of Labor Mine Safety and Health Administration. Sand, Gravel, and Crushed Stone on-the-Job Training Modules. MSHA IG-40. 2000. Available online: https://arlweb.msha.gov/training/part46/ig40/ig40.htm (accessed on 19 November 2021).

16. Arango, C.; Morales, C.A. Comparison between Multicopter UAV and Total Station for Estimating Stockpile Volumes. In Proceedings of the International Conference on Unmanned Aerial Vehicles in Geomatics, Toronto, ON, Canada, 30 August–2 September 2015.

17. Raeva, P.L.; Filipova, S.L.; Filipov, D.G. Volume Computation of a Stockpile—A Study Case Comparing GPS and UAV Measurements in an Open Pit Quarry. In Proceedings of the XXIII ISPRS Congress, Prague, Czech Republic, 12–19 July 2016; pp. 999–1004.

18. Khomsin; Pratomo, D.G.; Anjasmara, I.M.; Ahmad, F. Analysis of the Volume Comparation of 3′S (TS, GNSS and TLS). In Proceedings of the E3S Web of Conferences, EDP Sciences, Online, 8 May 2019; Volume 94.

19. Ajayi, O.G.; Ajulo, J. Investigating the Applicability of Unmanned Aerial Vehicles (UAV) Photogrammetry for the Estimation of the Volume of Stockpiles. Quaest. Geogr. 2021, 40, 25–38.

20. Luo, Y.; Chen, J.; Xi, W.; Zhao, P.; Qiao, X.; Deng, X.; Liu, Q. Analysis of Tunnel Displacement Accuracy with Total Station. Meas. J. Int. Meas. Confed. 2016, 83, 29–37.

21. Zhu, J.; Yang, J.; Fan, J.; Danni, A.; Jiang, Y.; Song, H.; Wang, Y. Accurate Measurement of Granary Stockpile Volume Based on Fast Registration of Multi-Station Scans. Remote Sens. Lett. 2018, 9, 569–577.

22. Little, M.J. Slope Monitoring Strategy at PPRust Open Pit Operation. In Proceedings of the International Symposium on Stability of Rock Slopes in Open Pit Mining and Civil Engineering, Cape Town, South Africa, 3–6 April 2006; The South African Institute of Mining and Metallurgy: Cape Town, South Africa, 2006; pp. 211–230.

23. Campos, M.B.; Litkey, P.; Wang, Y.; Chen, Y.; Hyyti, H.; Hyyppä, J.; Puttonen, E. A Long-Term Terrestrial Laser Scanning Measurement Station to Continuously Monitor Structural and Phenological Dynamics of Boreal Forest Canopy. Front. Plant Sci. 2021, 11, 2132.

24. Voordendag, A.B.; Goger, B.; Klug, C.; Prinz, R.; Rutzinger, M.; Kaser, G. Automated and Permanent Long-Range Terrestrial Laser Scanning in a High Mountain Environment: Setup and First Results. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 2, 153–160.

25. Christie, G.A.; Kochersberger; Abbott, B.; Westman, A.L. Image-Based 3D Reconstructions for Stockpile Volume Measurement. Min. Eng. 2015, 67, 34–37.

26. Rhodes, R.K. UAS as an Inventory Tool: A Photogrammetric Approach to Volume Estimation. Master’s Thesis, University of Arkansas, Fayetteville, AR, USA, 2017.

27. Idrees, A.; Heeto, F. Evaluation of UAV-Based DEM for Volume Calculation. J. Univ. Duhok 2020, 23, 11–24.

28. Ajayi, O.G.; Oyeboade, T.O.; Samaila-Ija, H.A.; Adewale, T.J. Development of a UAV-Based System for the Semi-Automatic Estimation of the Volume of Earthworks. Rep. Geod. Geoinform. 2020, 110, 21–28.

29. Long, N.Q.; Nam, B.X.; Cuong, C.X.; van Canh, L. An Approach of Mapping Quarries in Vietnam Using Low-Cost Unmanned Aerial Vehicles. J. Pol. Miner. Eng. Soc. 2019, 2, 248–262.

30. Liu, S.; Yu, J.; Ke, Z.; Dai, F.; Chen, Y. Aerial–Ground Collaborative 3D Reconstruction for Fast Pile Volume Estimation with Unexplored Surroundings. Int. J. Adv. Robot. Syst. 2020, 17.

31. Son, S.W.; Kim, D.W.; Sung, W.G.; Yu, J.J. Integrating UAV and TLS Approaches for Environmental Management: A Case Study of a Waste Stockpile Area. Remote Sens. 2020, 12, 1615.

32. Alsayed, A.; Yunusa-Kaltungo, A.; Quinn, M.K.; Arvin, F.; Nabawy, M.R.A. Drone-Assisted Confined Space Inspection and Stockpile Volume Estimation. Remote Sens. 2021, 13, 3356.

33. Gago, R.M.; Pereira, M.Y.A.; Pereira, G.A.S. An Aerial Robotic System for Inventory of Stockpile Warehouses. Eng. Rep. 2021, 3, e12396.

34. Schonberger, J.L.; Frahm, J.M. Structure-from-Motion Revisited. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016.

35. Hasheminasab, S.M.; Zhou, T.; Habib, A. GNSS/INS-Assisted Structure from Motion Strategies for UAV-Based Imagery over Mechanized Agricultural Fields. Remote Sens. 2020, 12, 351.

36. He, F.; Zhou, T.; Xiong, W.; Hasheminasab, S.M.; Habib, A. Automated Aerial Triangulation for UAV-Based Mapping. Remote Sens. 2018, 10, 1952.

37. Hirschmüller, H. Accurate and Efficient Stereo Processing by Semi-Global Matching and Mutual Information. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2005, San Diego, CA, USA, 20–25 June 2005; Volume 2.

38. Furukawa, Y.; Ponce, J. Accurate, Dense, and Robust Multiview Stereopsis. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1362–1376.

39. Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

40. Bay, H.; Ess, A.; Tuytelaars, T.; van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

41. Alcantarilla, P.F.; Nuevo, J.; Bartoli, A. Fast Explicit Diffusion for Accelerated Features in Nonlinear Scale Spaces. In Proceedings of the BMVC 2013—Electronic Proceedings of the British Machine Vision Conference, Bristol, UK, 9–13 September 2013.

42. Li, R.; Liu, J.; Zhang, L.; Hang, Y. LIDAR/MEMS IMU Integrated Navigation (SLAM) Method for a Small UAV in Indoor Environments. In Proceedings of the 2014 DGON Inertial Sensors and Systems, Karlsruhe, Germany, 16–17 September 2014.

43. Youn, W.; Ko, H.; Choi, H.; Choi, I.; Baek, J.H.; Myung, H. Collision-Free Autonomous Navigation of a Small UAV Using Low-Cost Sensors in GPS-Denied Environments. Int. J. Control. Autom. Syst. 2021, 19, 953–968.

44. HOVERMAP(TM). Available online: https://www.emesent.io/hovermap/ (accessed on 19 November 2021).

45. Kuhar, M.S. Technology Beyond Measure: New System for Calculating Stockpile Volume Via Smartphone May be a Game-Changer. Rock Prod. 2013, 116, 36–39. Available online: https://web.archive.org/web/20200129142605/http://www.rockproducts.com/index.php/technology/loadout-a-transportation/12343-technology-beyond-measure.html (accessed on 19 November 2021).

46. Boardman, D.; Erignac, C.; Kapaganty, S.; Frahm, J.-M.; Semerjian, B. Determining Object Volume from Mobile Device Images. U.S. Patent No. 9,196,084, 24 November 2015.

47. Stockpile Reports Inventory Measurement App Delivers Instant Results. Rock Products. 2020, Volume 123, p. 37. Available online: https://link.gale.com/apps/doc/A639274390/AONE (accessed on 19 November 2021).

48. Velodyne VLP16 Puck. Available online: https://velodynelidar.com/products/puck/ (accessed on 3 November 2021).

49. He, F.; Habib, A. Target-Based and Feature-Based Calibration of Low-Cost Digital Cameras with Large Field-of-View. In Proceedings of the Imaging and Geospatial Technology Forum, IGTF 2015—ASPRS Annual Conference and co-located JACIE Workshop, Tampa, FL, USA, 4–8 May 2015.

50. Ravi, R.; Lin, Y.J.; Elbahnasawy, M.; Shamseldin, T.; Habib, A. Simultaneous System Calibration of a Multi-LiDAR Multicamera Mobile Mapping Platform. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1694–1714.

51. Zhou, T.; Hasheminasab, S.M.; Habib, A. Tightly-Coupled Camera/LiDAR Integration for Point Cloud Generation from GNSS/INS-Assisted UAV Mapping Systems. ISPRS J. Photogramm. Remote Sens. 2021, 180, 336–356.

52. Nistér, D. An Efficient Solution to the Five-Point Relative Pose Problem. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 756–770.

53. Horn, B.K.P. Closed-Form Solution of Absolute Orientation Using Unit Quaternions. J. Opt. Soc. Am. A 1987, 4, 629–642.

54. Mikolajczyk, K.; Schmid, C. A Performance Evaluation of Local Descriptors. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1615–1630.

55. Schmid, C.; Mohr, R.; Bauckhage, C. Evaluation of Interest Point Detectors. Int. J. Comput. Vis. 2000, 37, 151–172.

56. Karami, E.; Prasad, S.; Shehata, M. Image matching using SIFT, SURF, BRIEF and ORB: Performance comparison for distorted images. arXiv 2017, arXiv:1710.02726.

57. Lin, Y.-C.; Liu, J.; Cheng, Y.-T.; Hasheminasab, S.M.; Wells, T.; Bullock, D.; Habib, A. Processing Strategy and Comparative Performance of Different Mobile LiDAR System Grades for Bridge Monitoring: A Case Study. Sensors 2021, 21, 7550.

58. Sampath, A.; Shan, J. Building Boundary Tracing and Regularization from Airborne Lidar Point Clouds. Photogramm. Eng. Remote Sens. 2007, 73, 805–812.

59. Kwak, E.; Habib, A. Automatic Representation and Reconstruction of DBM from LiDAR Data Using Recursive Minimum Bounding Rectangle. ISPRS J. Photogramm. Remote Sens. 2014, 93, 171–191.

60. OpenCV. Open-Source Computer Vision Library. 2015. Available online: https://opencv.org/ (accessed on 19 November 2021).

| | Operator’s Safety | Scalability | Featureless Surfaces | Cost-Effective | Indoor | Operator’s Skill |
|---|---|---|---|---|---|---|
| Total Station | ✕ | ✕ | ✓ | ✕ | ✓ | High |
| RTK-GNSS | ✕ | ✕ | ✓ | ✕ | ✕ | Low |
| Terrestrial Photogrammetry | ✕ | ✓ | ✕ | ✓ | ✓ | Low |
| Terrestrial LiDAR | ✕ | ✕ | ✓ | ✕ | ✓ | Low |
| UAV Photogrammetry | ✓ | ✓ | ✕ | ✓ | ✕ | Low |
| UAV LiDAR | ✓ | ✓ | ✓ | ✕ | ✕ | High |
| SMART | ✓ | ✓ | ✓ | ✓ | ✓ | Low |

| Salt Storage Facility | SMART: Number of Stations | SMART: Number of Scans Per Station | Faro Focus (TLS): Number of Stations | Size (W × L × H) |
|---|---|---|---|---|
| Lebanon unit | 2 | 7 | 2 | 26 m × 48 m × 10.5 m |
| US-231 unit | 1 | 7 | 3 | 30.5 m × 25.5 m × 10 m |

| Sensor | Lever-Arm Offset ΔX (m) | Lever-Arm Offset ΔY (m) | Lever-Arm Offset ΔZ (m) | Boresight Angle Δω (°) | Boresight Angle Δφ (°) | Boresight Angle Δκ (°) |
|---|---|---|---|---|---|---|
| LiDAR Unit 1 | 0 | −0.20 | 0 | 42 | 0 | 0 |
| LiDAR Unit 2 | −0.165 | −0.029 | −0.072 | −7.102 | −57.144 | −104.146 |
| (Std. Dev.) | ±0.001 | ±0.0004 | ±0.001 | ±0.004 | ±0.002 | ±0.004 |
| Camera | 0.017 | −0.034 | 0.024 | −14.686 | −66.394 | −103.886 |
| (Std. Dev.) | ±0.014 | ±0.015 | ±0.020 | ±0.787 | ±0.288 | ±0.801 |
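To illustrate how mounting parameters of this kind are typically applied, the sketch below transforms a point from a sensor frame into the platform frame using a lever-arm offset and three boresight angles. The rotation order R = R_x(Δω)·R_y(Δφ)·R_z(Δκ), the direction of the transformation (sensor to platform), and all function and variable names are assumptions made for illustration; the conventions actually used in the SMART calibration are not stated in this section.

```python
import math


def matmul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation_matrix(omega_deg, phi_deg, kappa_deg):
    """Assumed convention: R = Rx(omega) * Ry(phi) * Rz(kappa)."""
    w, p, k = (math.radians(a) for a in (omega_deg, phi_deg, kappa_deg))
    rx = [[1, 0, 0],
          [0, math.cos(w), -math.sin(w)],
          [0, math.sin(w), math.cos(w)]]
    ry = [[math.cos(p), 0, math.sin(p)],
          [0, 1, 0],
          [-math.sin(p), 0, math.cos(p)]]
    rz = [[math.cos(k), -math.sin(k), 0],
          [math.sin(k), math.cos(k), 0],
          [0, 0, 1]]
    return matmul(matmul(rx, ry), rz)


def sensor_to_body(point, lever_arm, angles_deg):
    """Rotate a sensor-frame point, then add the lever-arm offset."""
    r = rotation_matrix(*angles_deg)
    return tuple(
        sum(r[i][j] * point[j] for j in range(3)) + lever_arm[i]
        for i in range(3)
    )


# Mounting parameters of LiDAR Unit 2 as listed in the table (m, degrees).
LIDAR2_LEVER_ARM = (-0.165, -0.029, -0.072)
LIDAR2_BORESIGHT_DEG = (-7.102, -57.144, -104.146)

# Hypothetical point 5 m along the sensor x-axis.
print(sensor_to_body((5.0, 0.0, 0.0), LIDAR2_LEVER_ARM, LIDAR2_BORESIGHT_DEG))
```

Because the rotation is rigid, distances between points are preserved; only the frame in which they are expressed changes. A different angle convention would give different coordinates for the same parameters, so the convention should be confirmed before reusing such values.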

| Image-Based Coarse Registration Technique | Stereo (Image) ID | Number of Matches | Projection Residuals (Pixel) | Pole Δω (°) | Pole Δφ (°) | Pole Δκ (°) | Processing Time (Second) |
|---|---|---|---|---|---|---|---|
| Exhaustive | 1 (1–2) | 3466 | 348 | 1.1 | 0.1 | −22.3 | 2.06 |
| One-step constrained | 1 (1–2) | 1922 | 66 | 1.5 | 0.2 | −22.8 | 39.00 |
| Iterative constrained | 1 (1–2) | 3408 | 25 | 1.5 | 0.2 | −22.5 | 81.19 |
| Manual measurements | 1 (1–2) | 11 | 10 | 1.5 | 0.3 | −22.4 | ~600 |
| Exhaustive | 2 (2–3) | 3159 | 447 | 1.6 | −0.5 | −22.6 | 2.10 |
| One-step constrained | 2 (2–3) | 2065 | 40 | 0.8 | −0.5 | −23.6 | 33.14 |
| Iterative constrained | 2 (2–3) | 3079 | 29 | 0.9 | −0.5 | −23.6 | 72.33 |
| Manual measurements | 2 (2–3) | 10 | 8 | 0.8 | −0.5 | −23.6 | ~420 |
| Exhaustive | 3 (3–4) | 2394 | 4042 | 4.9 | −1.2 | −40.6 | 1.99 |
| One-step constrained | 3 (3–4) | 513 | 230 | −2.8 | 0.9 | −38.3 | 21.15 |
| Iterative constrained | 3 (3–4) | 2127 | 25 | 1.2 | −0.2 | −43.6 | 44.47 |
| Manual measurements | 3 (3–4) | 12 | 7 | 1.2 | −0.1 | −43.6 | ~540 |
| Exhaustive | 4 (4–5) | 2798 | 8244 | 5.1 | −2.8 | −36.6 | 2.04 |
| One-step constrained | 4 (4–5) | 1744 | 76 | 1.0 | −0.5 | −38.2 | 25.41 |
| Iterative constrained | 4 (4–5) | 2506 | 32 | 1.1 | −0.6 | −38.4 | 49.62 |
| Manual measurements | 4 (4–5) | 10 | 8 | 1.1 | −0.7 | −38.4 | ~300 |
| Exhaustive | 5 (5–6) | 4066 | 519 | 2.1 | −0.2 | −21.2 | 2.12 |
| One-step constrained | 5 (5–6) | 2398 | 47 | 0.9 | 0.1 | −22.0 | 40.26 |
| Iterative constrained | 5 (5–6) | 3914 | 34 | 1.0 | 0.1 | −21.9 | 82.80 |
| Manual measurements | 5 (5–6) | 10 | 7 | 1.2 | 0.2 | −21.9 | ~300 |
| Exhaustive | 6 (6–7) | 3680 | 1121 | 1.9 | −0.7 | −31.4 | 2.07 |
| One-step constrained | 6 (6–7) | 3495 | 27 | 1.1 | −0.4 | −32.5 | 43.83 |
| Iterative constrained | 6 (6–7) | 3495 | 27 | 1.1 | −0.4 | −32.5 | 42.83 |
| Manual measurements | 6 (6–7) | 10 | 5 | 1.3 | −0.4 | −32.5 | ~480 |
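As a small worked example of comparing automated estimates against the manual reference, the sketch below computes the absolute deviation of each automated approach from the manual measurements for stereo pair 3 (images 3–4), using the pole rotation angles listed in the table above. The variable and function names are hypothetical.

```python
# Pole rotation angles (d_omega, d_phi, d_kappa) in degrees for stereo pair 3,
# copied from the table; manual measurements serve as the reference.
MANUAL = (1.2, -0.1, -43.6)
AUTOMATED = {
    "Exhaustive": (4.9, -1.2, -40.6),
    "One-step constrained": (-2.8, 0.9, -38.3),
    "Iterative constrained": (1.2, -0.2, -43.6),
}


def angle_deviations(estimate, reference):
    """Absolute per-angle deviation, rounded to the table's precision."""
    return tuple(round(abs(e - r), 1) for e, r in zip(estimate, reference))


deviations = {
    name: angle_deviations(angles, MANUAL)
    for name, angles in AUTOMATED.items()
}
for name, dev in deviations.items():
    print(f"{name}: d_omega={dev[0]}, d_phi={dev[1]}, d_kappa={dev[2]}")
```

For this pair the iterative constrained estimate stays within 0.1° of the manual reference on all three angles, while the exhaustive and one-step constrained estimates deviate by several degrees in Δκ, consistent with their larger projection residuals in the table.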

| Dataset | Total Number of Planar Features Used | Total Number of Points | Point Density Range (Points/m²) | RMSE of Normal Distance (m) | LiDAR Ranging Noise (m) |
|---|---|---|---|---|---|
| Lebanon | 13 | 516,756 | 2–1890 * | 0.0211 | 0.03 |
| US-231 | 13 | 275,146 | 0.3–790 | 0.0212 | 0.03 |

| Dataset | Occlusion (%), SMART | Occlusion (%), TLS | SMART Volume | TLS Volume | Difference | Error (%) |
|---|---|---|---|---|---|---|
| Lebanon | 31.8 | 34.3 | 1438.9 | 1437.5 | 1.4 | ~0.0 |
| US-231 | 49.6 | 20.6 | 999.5 | 968.4 | 31.1 | 3.2 |
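The last two columns of the table above follow from the two volume columns. The sketch below shows one way to compute them, assuming the error is the absolute volume difference expressed as a percentage of the TLS (reference) volume; this normalization and the function name are assumptions, since the formula is not stated in this section.

```python
def volume_error(estimate: float, reference: float):
    """Return (absolute difference, error in percent of the reference).

    Assumption: the error is normalized by the reference (TLS) volume.
    """
    difference = abs(estimate - reference)
    return difference, 100.0 * difference / reference


# US-231 volumes from the table: SMART estimate and TLS reference.
diff, pct = volume_error(999.5, 968.4)
print(f"difference = {diff:.1f}, error = {pct:.1f} %")
```

For the US-231 dataset this reproduces the tabulated difference of 31.1 and error of 3.2%.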

| | Volume from SMART | Reference/TLS | Difference | Error (%) |
|---|---|---|---|---|
| Left big pile | 798 | 808.9 | 10.9 | 1.4 |
| Left small pile | 17.2 | 17.5 | 0.3 | 1.7 |
| Combined left | 815.2 | 826.4 | 11.2 | 1.4 |

| | Volume from SMART | Reference/TLS | Difference | Error (%) |
|---|---|---|---|---|
| Right big pile | 603.9 | 604.1 | 0.2 | ~0.0 |
| Right small pile | 17.04 | 17.88 | 0.84 | 4.7 |
| Combined right | 620.94 | 621.98 | 1.04 | 0.2 |

| | | | Difference | Error (%) |
|---|---|---|---|---|
| SMART | 819.3 | 815.2 | 4.1 | 0.5 |
| Reference/TLS | 815.1 | 826.4 | 11.3 | 1.4 |

| | | | Difference | Error (%) |
|---|---|---|---|---|
| SMART | 619.3 | 620.94 | 1.64 | 0.3 |
| Reference/TLS | 622.4 | 621.98 | 0.42 | ~0.0 |

| Platform | Approximate Cost (USD) |
|---|---|
| SMART | 8000 |
| UAV LiDAR | 30,000+ |
| Terrestrial LiDAR | 20,000–35,000+ |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Manish, R.; Hasheminasab, S.M.; Liu, J.; Koshan, Y.; Mahlberg, J.A.; Lin, Y.-C.; Ravi, R.; Zhou, T.; McGuffey, J.; Wells, T.; et al. Image-Aided LiDAR Mapping Platform and Data Processing Strategy for Stockpile Volume Estimation. Remote Sens. 2022, 14, 231. https://doi.org/10.3390/rs14010231