Suggestive Data Annotation for CNN-Based Building Footprint Mapping Based on Deep Active Learning and Landscape Metrics

Abstract

1. Introduction

2. Materials and Methods

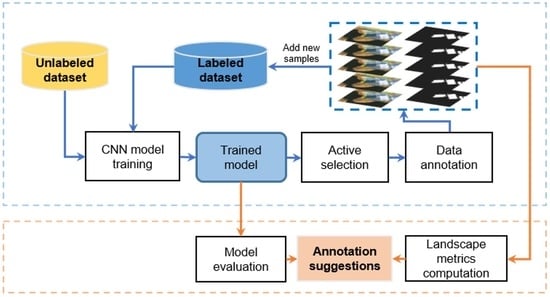

2.1. Framework of Suggestive Data Annotation for CNN-Based Building Footprint Mapping

2.2. Dataset and Pre-Processing

2.3. U-Net and DeeplabV3+ Architectures

2.4. Active Learning and Random Selection

2.5. Landscape Metrics

2.6. Selection of Best Model per Iteration

3. Results

3.1. Comparison of Active Learning Strategies and Baseline Model

3.2. Landscape Features of Selected Image Tiles Based on U-Net and DeeplabV3+

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Schneider, A.; Friedl, M.A.; Potere, D. A new map of global urban extent from MODIS satellite data. Environ. Res. Lett. 2009, 4, 044003.

2. Jochem, W.C.; Leasure, D.R.; Pannell, O.; Chamberlain, H.R.; Jones, P.; Tatem, A.J. Classifying settlement types from multi-scale spatial patterns of building footprints. Environ. Plan. B Urban Anal. City Sci. 2021, 48, 1161–1179.

3. Seto, K.C.; Sánchez-Rodríguez, R.; Fragkias, M. The New Geography of Contemporary Urbanization and the Environment. Annu. Rev. Environ. Resour. 2010, 35, 167–194.

4. Yuan, X.; Shi, J.; Gu, L. A review of deep learning methods for semantic segmentation of remote sensing imagery. Expert Syst. Appl. 2021, 169, 114417.

5. Zhao, F.; Zhang, C. Building Damage Evaluation from Satellite Imagery using Deep Learning. In Proceedings of the 2020 IEEE 21st International Conference on Information Reuse and Integration for Data Science (IRI), Las Vegas, NV, USA, 11–13 August 2020; pp. 82–89.

6. Pan, Z.; Xu, J.; Guo, Y.; Hu, Y.; Wang, G. Deep Learning Segmentation and Classification for Urban Village Using a Worldview Satellite Image Based on U-Net. Remote Sens. 2020, 12, 1574.

7. Wagner, F.H.; Dalagnol, R.; Tarabalka, Y.; Segantine, T.Y.; Thomé, R.; Hirye, M. U-net-id, an instance segmentation model for building extraction from satellite images—Case study in the Joanopolis City, Brazil. Remote Sens. 2020, 12, 1544.

8. Rastogi, K.; Bodani, P.; Sharma, S.A. Automatic building footprint extraction from very high-resolution imagery using deep learning techniques. Geocarto Int. 2020, 37, 1501–1513.

9. Li, C.; Fu, L.; Zhu, Q.; Zhu, J.; Fang, Z.; Xie, Y.; Guo, Y.; Gong, Y. Attention Enhanced U-Net for Building Extraction from Farmland Based on Google and WorldView-2 Remote Sensing Images. Remote Sens. 2021, 13, 4411.

10. Pasquali, G.; Iannelli, G.C.; Dell’Acqua, F. Building footprint extraction from multispectral, spaceborne earth observation datasets using a structurally optimized U-Net convolutional neural network. Remote Sens. 2019, 11, 2803.

11. Touzani, S.; Granderson, J. Open Data and Deep Semantic Segmentation for Automated Extraction of Building Footprints. Remote Sens. 2021, 13, 2578.

12. Yang, N.; Tang, H. GeoBoost: An Incremental Deep Learning Approach toward Global Mapping of Buildings from VHR Remote Sensing Images. Remote Sens. 2020, 12, 1794.

13. Zhou, D.; Wang, G.; He, G.; Yin, R.; Long, T.; Zhang, Z.; Chen, S.; Luo, B. A Large-Scale Mapping Scheme for Urban Building From Gaofen-2 Images Using Deep Learning and Hierarchical Approach. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 11530–11545.

14. Yang, H.L.; Yuan, J.; Lunga, D.; Laverdiere, M.; Rose, A.; Bhaduri, B. Building Extraction at Scale Using Convolutional Neural Network: Mapping of the United States. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 2600–2614.

15. Ji, S.; Wei, S.; Lu, M. Fully Convolutional Networks for Multisource Building Extraction from an Open Aerial and Satellite Imagery Data Set. IEEE Trans. Geosci. Remote Sens. 2018, 57, 574–586.

16. Chen, Q.; Wang, L.; Wu, Y.; Wu, G.; Guo, Z.; Waslander, S.L. Aerial imagery for roof segmentation: A large-scale dataset towards automatic mapping of buildings. ISPRS J. Photogramm. Remote Sens. 2019, 147, 42–55.

17. Van Etten, A.; Lindenbaum, D.; Bacastow, T.M. Spacenet: A remote sensing dataset and challenge series. arXiv 2018, arXiv:1807.01232.

18. Mace, E.; Manville, K.; Barbu-McInnis, M.; Laielli, M.; Klaric, M.K.; Dooley, S. Overhead Detection: Beyond 8-bits and RGB. arXiv 2018, arXiv:1808.02443.

19. Kang, J.; Tariq, S.; Oh, H.; Woo, S.S. A Survey of Deep Learning-Based Object Detection Methods and Datasets for Overhead Imagery. IEEE Access 2022, 10, 20118–20134.

20. Li, W.; He, C.; Fang, J.; Zheng, J.; Fu, H.; Yu, L. Semantic Segmentation-Based Building Footprint Extraction Using Very High-Resolution Satellite Images and Multi-Source GIS Data. Remote Sens. 2019, 11, 403.

21. Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Can semantic labeling methods generalize to any city? The Inria aerial image labeling benchmark. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 3226–3229.

22. Mnih, V. Machine Learning for Aerial Image Labeling; University of Toronto: Toronto, ON, Canada, 2013.

23. Chen, Q.; Zhang, Y.; Li, X.; Tao, P. Extracting Rectified Building Footprints from Traditional Orthophotos: A New Workflow. Sensors 2022, 22, 207.

24. Rahman, A.K.M.M.; Zaber, M.; Cheng, Q.; Nayem, A.B.S.; Sarker, A.; Paul, O.; Shibasaki, R. Applying State-of-the-Art Deep-Learning Methods to Classify Urban Cities of the Developing World. Sensors 2021, 21, 7469.

25. Gergelova, M.B.; Labant, S.; Kuzevic, S.; Kuzevicova, Z.; Pavolova, H. Identification of Roof Surfaces from LiDAR Cloud Points by GIS Tools: A Case Study of Lučenec, Slovakia. Sustainability 2020, 12, 6847.

26. Li, J.; Meng, L.; Yang, B.; Tao, C.; Li, L.; Zhang, W. LabelRS: An Automated Toolbox to Make Deep Learning Samples from Remote Sensing Images. Remote Sens. 2021, 13, 2064.

27. Xia, G.-S.; Wang, Z.; Xiong, C.; Zhang, L. Accurate Annotation of Remote Sensing Images via Active Spectral Clustering with Little Expert Knowledge. Remote Sens. 2015, 7, 15014–15045.

28. Ren, P.; Xiao, Y.; Chang, X.; Huang, P.-Y.; Li, Z.; Gupta, B.B.; Chen, X.; Wang, X. A survey of deep active learning. ACM Comput. Surv. (CSUR) 2021, 54, 1–40.

29. Robinson, C.; Ortiz, A.; Malkin, K.; Elias, B.; Peng, A.; Morris, D.; Dilkina, B.; Jojic, N. Human-machine collaboration for fast land cover mapping. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 2509–2517.

30. Hamrouni, Y.; Paillassa, E.; Chéret, V.; Monteil, C.; Sheeren, D. From local to global: A transfer learning-based approach for mapping poplar plantations at national scale using Sentinel-2. ISPRS J. Photogramm. Remote Sens. 2021, 171, 76–100.

31. Bi, H.; Xu, F.; Wei, Z.; Xue, Y.; Xu, Z. An active deep learning approach for minimally supervised PolSAR image classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9378–9395.

32. Xu, G.; Zhu, X.; Tapper, N. Using convolutional neural networks incorporating hierarchical active learning for target-searching in large-scale remote sensing images. Int. J. Remote Sens. 2020, 41, 4057–4079.

33. Yang, L.; Zhang, Y.; Chen, J.; Zhang, S.; Chen, D.Z. Suggestive annotation: A deep active learning framework for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Quebec City, QC, Canada, 11–13 September 2017; pp. 399–407.

34. McGarigal, K.; Cushman, S.A.; Ene, E. FRAGSTATS v4: Spatial Pattern Analysis Program for Categorical and Continuous Maps. Computer Software Program Produced by the Authors at the University of Massachusetts, Amherst. 2012, Volume 15. Available online: http://www.umass.edu/landeco/research/fragstats/fragstats.html (accessed on 1 May 2022).

35. Frazier, A.E.; Kedron, P. Landscape metrics: Past progress and future directions. Curr. Landsc. Ecol. Rep. 2017, 2, 63–72.

36. Li, Z.; Roux, E.; Dessay, N.; Girod, R.; Stefani, A.; Nacher, M.; Moiret, A.; Seyler, F. Mapping a knowledge-based malaria hazard index related to landscape using remote sensing: Application to the cross-border area between French Guiana and Brazil. Remote Sens. 2016, 8, 319.

37. Li, Z.; Feng, Y.; Dessay, N.; Delaitre, E.; Gurgel, H.; Gong, P. Continuous monitoring of the spatio-temporal patterns of surface water in response to land use and land cover types in a Mediterranean lagoon complex. Remote Sens. 2019, 11, 1425.

38. Yang, H.; Xu, M.; Chen, Y.; Wu, W.; Dong, W. A Postprocessing Method Based on Regions and Boundaries Using Convolutional Neural Networks and a New Dataset for Building Extraction. Remote Sens. 2022, 14, 647.

39. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

40. Siddique, N.; Paheding, S.; Elkin, C.P.; Devabhaktuni, V. U-Net and Its Variants for Medical Image Segmentation: A Review of Theory and Applications. IEEE Access 2021, 9, 82031–82057.

41. Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

42. Settles, B. Active Learning Literature Survey; University of Wisconsin-Madison: Madison, WI, USA, 2009.

43. Bosch, M. PyLandStats: An open-source Pythonic library to compute landscape metrics. PLoS ONE 2019, 14, e0225734.

44. Wang, X.; Blanchet, F.G.; Koper, N. Measuring habitat fragmentation: An evaluation of landscape pattern metrics. Methods Ecol. Evol. 2014, 5, 634–646.

45. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

46. Uuemaa, E.; Antrop, M.; Roosaare, J.; Marja, R.; Mander, Ü. Landscape Metrics and Indices: An Overview of Their Use in Landscape Research. Living Rev. Landsc. Res. 2009, 3, 1–28.

47. Plexida, S.G.; Sfougaris, A.I.; Ispikoudis, I.P.; Papanastasis, V.P. Selecting landscape metrics as indicators of spatial heterogeneity—A comparison among Greek landscapes. Int. J. Appl. Earth Obs. Geoinf. 2014, 26, 26–35.

48. Cushman, S.A.; McGarigal, K.; Neel, M.C. Parsimony in landscape metrics: Strength, universality, and consistency. Ecol. Indic. 2008, 8, 691–703.

49. Openshaw, S. The modifiable areal unit problem. Quant. Geogr. A Br. View 1981, 60–69. Available online: https://cir.nii.ac.jp/crid/1572824498971908736 (accessed on 1 May 2022).

50. Chen, F.; Wang, N.; Yu, B.; Wang, L. Res2-Unet, a New Deep Architecture for Building Detection from High Spatial Resolution Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 1494–1501.

51. Zhao, K.; Kang, J.; Jung, J.; Sohn, G. Building Extraction from Satellite Images Using Mask R-CNN with Building Boundary Regularization. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 242–2424.

52. Wu, X.; Chen, C.; Zhong, M.; Wang, J. HAL: Hybrid active learning for efficient labeling in medical domain. Neurocomputing 2021, 456, 563–572.

53. Jin, Q.; Yuan, M.; Qiao, Q.; Song, Z. One-shot active learning for image segmentation via contrastive learning and diversity-based sampling. Knowl. Based Syst. 2022, 241, 108278.

| Datasets | Resolution (m) | Number of Image–Label Pairs | Size of Tiles (Pixels) | Geolocation | References |
|---|---|---|---|---|---|
| WHU satellite dataset I | 0.3 to 2.5 | 204 | 512 × 512 | 10 cities worldwide | [15] |
| WHU satellite dataset II | 0.45 | 4038 | 512 × 512 | East Asia cities | [15] |
| WHU aerial dataset | 0.3 | 8189 | 512 × 512 | Christchurch, New Zealand | [15] |
| Aerial imagery for roof segmentation | 0.075 | 1047 | 10,000 × 10,000 | Christchurch, New Zealand | [16] |
| SpaceNet 1 | 0.5 | 9735 | 439 × 407 | Rio de Janeiro, Brazil | [17,18,19] |
| SpaceNet 2 | 0.3 | 10,593 | 650 × 650 | Las Vegas, Paris, Shanghai, Khartoum | [17,19,20] |
| Inria Aerial Image Labeling Dataset | 0.3 | 360 | 5000 × 5000 | 10 cities worldwide | [21] |
| Massachusetts Buildings Dataset | 1 | 151 | 1500 × 1500 | Massachusetts, USA | [22] |

| Dataset | Seed Set | Training Set | Validation Set | Tiles Selected per Iteration | Number of Iterations |
|---|---|---|---|---|---|
| WHU satellite dataset II | 160 tiles | 2868 tiles | 1010 tiles | 160 tiles | 18 |
| WHU aerial dataset | 320 tiles | 5822 tiles | 2047 tiles | 320 tiles | 19 |
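
The split sizes in the table above can be cross-checked with a few lines of arithmetic. The sketch below is a hypothetical consistency check, not the authors' code, and rests on two assumptions that the table does not state outright: that the seed, training and validation sets are disjoint and together make up the full dataset (4038 and 8189 image–label pairs, as listed in the dataset table), and that the number of iterations is the number of rounds needed to draw every training tile at the listed tiles per iteration, with the last round allowed to be partial. The names `SPLITS`, `is_disjoint_partition` and `rounds_to_exhaust` are illustrative only.

```python
import math

# Split sizes copied from the tables above; "total" is the number of
# image-label pairs listed for each dataset in the dataset table.
SPLITS = {
    "WHU satellite dataset II": {"seed": 160, "train": 2868, "val": 1010, "per_iter": 160, "total": 4038},
    "WHU aerial dataset": {"seed": 320, "train": 5822, "val": 2047, "per_iter": 320, "total": 8189},
}


def is_disjoint_partition(split):
    """Assumption: seed, training and validation sets are disjoint and cover the dataset."""
    return split["seed"] + split["train"] + split["val"] == split["total"]


def rounds_to_exhaust(split):
    """Rounds needed to draw every training tile, per_iter tiles at a time.

    Assumption: the final round may select fewer than per_iter tiles.
    """
    return math.ceil(split["train"] / split["per_iter"])


for name, split in SPLITS.items():
    print(name, is_disjoint_partition(split), rounds_to_exhaust(split))
```

Under these assumptions both rows are reproduced: the three sets sum to the dataset sizes, and the round counts come out as 18 and 19, matching the last column. Counting the seed set inside the training set instead would leave 160 and 320 tiles unaccounted for.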

| Model | Sampling Strategy | WHU Satellite Dataset II | WHU Aerial Dataset |
|---|---|---|---|
| U-Net | Active learning | 6th iteration (160 tiles × 6) | 4th iteration (320 tiles × 4) |
| U-Net | Random sampling | 12th iteration (160 tiles × 12) | 6th iteration (320 tiles × 6) |
| U-Net | Reduced number of tiles to be annotated | 960 tiles (160 tiles × 6) | 640 tiles (320 tiles × 2) |
| DeeplabV3+ | Active learning | 8th iteration (160 tiles × 8) | 8th iteration (320 tiles × 8) |
| DeeplabV3+ | Random sampling | 11th iteration (160 tiles × 11) | 10th iteration (320 tiles × 10) |
| DeeplabV3+ | Reduced number of tiles to be annotated | 480 tiles (160 tiles × 3) | 640 tiles (320 tiles × 2) |
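
The "Reduced number of tiles to be annotated" rows in the table above follow directly from the two iteration numbers reported for each model: the gap between the random-sampling iteration and the active-learning iteration, multiplied by the number of tiles selected per iteration (160 for WHU satellite dataset II, 320 for the WHU aerial dataset). A minimal sketch of that arithmetic is given below; `REPORTED`, `TILES_PER_ITER` and `tiles_saved` are hypothetical names, and the sketch only reproduces the subtraction, not how the reported iterations were chosen.

```python
# Iteration numbers copied from the table above, as
# (active learning iteration, random sampling iteration).
REPORTED = {
    ("U-Net", "WHU satellite dataset II"): (6, 12),
    ("U-Net", "WHU aerial dataset"): (4, 6),
    ("DeeplabV3+", "WHU satellite dataset II"): (8, 11),
    ("DeeplabV3+", "WHU aerial dataset"): (8, 10),
}

# Tiles selected per iteration for each dataset.
TILES_PER_ITER = {"WHU satellite dataset II": 160, "WHU aerial dataset": 320}


def tiles_saved(al_iter, rs_iter, per_iter):
    """Tiles that need not be annotated: iteration gap times tiles per iteration."""
    return (rs_iter - al_iter) * per_iter


for (model, dataset), (al_iter, rs_iter) in REPORTED.items():
    saved = tiles_saved(al_iter, rs_iter, TILES_PER_ITER[dataset])
    print(f"{model}, {dataset}: {saved} tiles")
```

The four computed values (960, 640, 480 and 640 tiles) match the table.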

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Z.; Zhang, S.; Dong, J. Suggestive Data Annotation for CNN-Based Building Footprint Mapping Based on Deep Active Learning and Landscape Metrics. Remote Sens. 2022, 14, 3147. https://doi.org/10.3390/rs14133147

Li Z, Zhang S, Dong J. Suggestive Data Annotation for CNN-Based Building Footprint Mapping Based on Deep Active Learning and Landscape Metrics. Remote Sensing. 2022; 14(13):3147. https://doi.org/10.3390/rs14133147

Chicago/Turabian Style: Li, Zhichao, Shuai Zhang, and Jinwei Dong. 2022. "Suggestive Data Annotation for CNN-Based Building Footprint Mapping Based on Deep Active Learning and Landscape Metrics" Remote Sensing 14, no. 13: 3147. https://doi.org/10.3390/rs14133147