ZY-1 02D Hyperspectral Imagery Super-Resolution via Endmember Matrix Constraint Unmixing

Abstract

1. Introduction

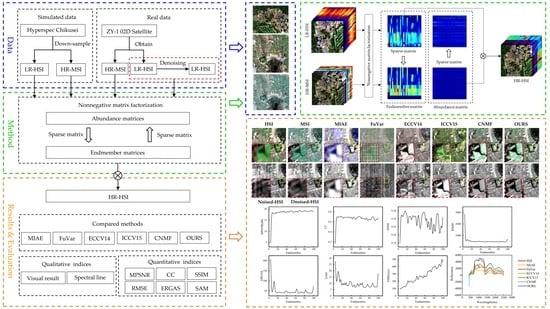

2. Materials and Methods

2.1. Data

2.2. Methods

2.2.1. HSI SRR by Endmember Matrix Constraint Unmixing

SRR Model

- The spectral signature matrix: each column vector is an endmember spectrum, and the number of columns is the number of endmembers.

- The abundance matrix: each column vector holds the abundance fractions of all endmembers at one pixel.

- The residual of the linear mixing model.

- The spatial spread transform matrix: each column vector represents the transformation of the PSF from the MSI to the HSI.

- The spectral response transform matrix: each row vector represents the transformation of the SRF from the hyperspectral sensor to the multispectral sensor for one band.

- The spatial spread transform matrix and the spectral response transform matrix are sparse matrices composed of non-negative components.

- The residuals of the spatial and spectral degradation models.

- The spatially degraded abundance matrix.

- The spectrally degraded endmember matrix.
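The quantities in the list above can be illustrated numerically. The following is a minimal sketch, not the authors' implementation: the symbol names (`W`, `H`, `S`, `R`, `Y_h`, `Y_m`), the toy sizes, the block-averaging PSF, and the band-averaging SRF are all assumptions chosen for illustration, following the usual coupled-NMF convention. Pixels are flattened to one dimension and the residual terms are omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy sizes (assumptions): HSI bands, endmembers, HR pixels, spatial ratio, MSI bands.
n_bands, n_end, n_hr, ratio, n_msi = 8, 3, 16, 4, 2
n_lr = n_hr // ratio

# W: spectral signature matrix, one endmember spectrum per column.
W = rng.random((n_bands, n_end))

# H: abundance matrix, one column of abundance fractions per pixel (columns sum to 1).
H = rng.random((n_end, n_hr))
H /= H.sum(axis=0, keepdims=True)

# Linear mixing model without the residual term.
X = W @ H

# S: spatial spread transform (n_hr x n_lr); each column averages `ratio` HR pixels.
S = np.kron(np.eye(n_lr), np.full((ratio, 1), 1.0 / ratio))

# R: spectral response transform (n_msi x n_bands); each row averages a block of HSI bands.
R = np.kron(np.eye(n_msi), np.full((1, n_bands // n_msi), n_msi / n_bands))

Y_h = X @ S  # simulated LR-HSI
Y_m = R @ X  # simulated HR-MSI

H_h = H @ S  # spatially degraded abundance matrix
W_m = R @ W  # spectrally degraded endmember matrix
```

Under these assumptions the two observations factor as `Y_h = W @ H_h` and `Y_m = W_m @ H`, which is what lets the endmember and abundance matrices be estimated jointly from both images.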

Endmember Matrix Constraint Unmixing

- and .

- $\|\cdot\|_F$ denotes the Frobenius norm.

- and are penalty terms constraining the solution of the formulas.

- and are their corresponding Lagrange multipliers, or the regularization parameters. The effectiveness of a given penalty term varies from problem to problem.
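For orientation, the unpenalized core of a Frobenius-norm unmixing problem can be sketched with the standard Lee-Seung multiplicative updates. This is a generic baseline under stated assumptions, not the constrained method of this paper: the penalty terms and their regularization parameters are left out because their exact form is not given here, and the function name `nmf_multiplicative` and all parameter names are hypothetical.

```python
import numpy as np

def nmf_multiplicative(Y, n_end, n_iter=200, eps=1e-12, seed=0):
    """Approximate a non-negative Y by W @ H, minimizing ||Y - W H||_F^2.

    Uses Lee-Seung multiplicative updates, which keep W and H non-negative
    as long as they are initialized with non-negative values.
    """
    rng = np.random.default_rng(seed)
    W = rng.random((Y.shape[0], n_end)) + eps
    H = rng.random((n_end, Y.shape[1])) + eps
    errors = []
    for _ in range(n_iter):
        # Update abundances with the endmembers fixed.
        H *= (W.T @ Y) / (W.T @ W @ H + eps)
        # Update endmembers with the abundances fixed.
        W *= (Y @ H.T) / (W @ H @ H.T + eps)
        # Track the squared Frobenius norm of the reconstruction error.
        errors.append(np.linalg.norm(Y - W @ H, "fro") ** 2)
    return W, H, errors

# Toy data (assumption): an exactly rank-3 non-negative matrix.
rng = np.random.default_rng(1)
Y = rng.random((8, 3)) @ rng.random((3, 50))
W, H, errors = nmf_multiplicative(Y, n_end=3)
```

A penalized variant would add the gradient contribution of each penalty term, weighted by its regularization parameter, to the numerators or denominators of these updates.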

2.2.2. Quality Measures
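
Three of the measures reported in the results tables (RMSE, SAM, and MPSNR) can be sketched as follows. This is a minimal illustration using common textbook definitions, which may differ in detail from the ones used in this paper. In particular, taking the per-band maximum of the reference as the PSNR peak, and arranging images as `(bands, pixels)` arrays, are assumptions.

```python
import numpy as np

def rmse(ref, est):
    """Root mean squared error over all bands and pixels."""
    return float(np.sqrt(np.mean((ref - est) ** 2)))

def sam_degrees(ref, est, eps=1e-12):
    """Mean spectral angle in degrees; inputs have shape (bands, pixels)."""
    num = np.sum(ref * est, axis=0)
    den = np.linalg.norm(ref, axis=0) * np.linalg.norm(est, axis=0) + eps
    angles = np.arccos(np.clip(num / den, -1.0, 1.0))
    return float(np.degrees(angles.mean()))

def mpsnr(ref, est):
    """Mean PSNR over bands, with each band's reference maximum as the peak (assumption)."""
    mse = np.mean((ref - est) ** 2, axis=1)
    peak = ref.max(axis=1)
    return float(np.mean(10.0 * np.log10(peak ** 2 / mse)))

# Toy data (assumption): a random reference and two noisy estimates.
rng = np.random.default_rng(0)
ref = rng.random((8, 100)) + 0.5
noise = rng.standard_normal(ref.shape)
est_good = ref + 0.01 * noise
est_bad = ref + 0.10 * noise
```

Higher MPSNR and lower RMSE and SAM indicate a reconstruction closer to the reference. SAM ignores per-pixel scaling, so it isolates spectral shape distortion from brightness error.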

3. Experiment Results

3.1. The Simulated Data

3.2. ZY-1 02D HSI

3.3. ZY-1 02D HSI SRR Results before and after Denoising

4. Discussion

4.1. SRR via Constraint Endmember Matrix Unmixing

4.2. Denoising Effect on SRR for ZY-1 02D HSI

4.3. Future Work

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| HSI | Hyperspectral Imagery |
| SRR | Super-Resolution Reconstruction |
| MSI | Multispectral Imagery |
| CS | Component Substitution |
| DL | Deep Learning |
| SR | Sparse Representation |
| PCA | Principal Component Analysis |
| PSF | Point Spread Function |
| SRF | Spectral Response Function |
| CNMF | Coupled Non-Negative Matrix Factorization |
| HR-HSI | High-Spatial Resolution HSI |
| LR-HSI | Low-Spatial Resolution HSI |
| HR-MSI | High-Spatial Resolution MSI |
| MPSNR | Mean Peak Signal-to-Noise Ratio |
| CC | Cross Correlation |
| SSIM | Structural Similarity Index |
| RMSE | Root Mean Squared Error |
| ERGAS | Relative Dimensionless Global Error in Synthesis |
| SAM | Spectral Angle Mapper |


| Method | MPSNR | CC | SSIM | RMSE | ERGAS | SAM | TIME (s) |
|---|---|---|---|---|---|---|---|
| MIAE | 31.9489 | 0.9624 | 0.8888492 | 184.37565 | 17.3296 | 2.3846 | 325.1728 |
| FuVar | 19.7156 | 0.5038 | 0.3319486 | 791.5662 | 56.8757 | 21.4455 | 1861.6585 |
| ECCV14 | 13.4956 | 0.9870 | 0.0000111 | 1680.3724 | 114.5954 | 1.4832 | 1302.0734 |
| ICCV15 | 13.4956 | 0.9868 | 0.0000111 | 1680.3726 | 114.5954 | 1.3751 | 357.9853 |
| CNMF | 47.3551 | 0.9890 | 0.9999995 | 0.0039 | 1.5966 | 1.3619 | 240.6289 |
| OURS | 47.4468 | 0.9896 | 0.9999996 | 0.0037 | 1.5117 | 1.3305 | 253.2515 |

| Values | MPSNR | CC | SSIM | RMSE | ERGAS | SAM | TIME (s) |
|---|---|---|---|---|---|---|---|
| 0.00 | 47.2463 | 0.9890 | 0.9999995 | 0.0039 | 1.5688 | 1.3580 | 273.4078 |
| 0.05 | 47.2376 | 0.9892 | 0.9999995 | 0.0039 | 1.5438 | 1.3647 | 281.8419 |
| 0.10 | 47.2711 | 0.9890 | 0.9999995 | 0.0039 | 1.5576 | 1.3695 | 280.2361 |
| 0.15 | 47.2951 | 0.9890 | 0.9999995 | 0.0039 | 1.5646 | 1.3709 | 250.1167 |
| 0.30 | 47.4468 | 0.9896 | 0.9999996 | 0.0037 | 1.5117 | 1.3305 | 253.2515 |
| 0.40 | 47.3949 | 0.9889 | 0.9999995 | 0.0039 | 1.5794 | 1.3799 | 256.5485 |
| 0.50 | 47.3993 | 0.9890 | 0.9999995 | 0.0039 | 1.5761 | 1.3732 | 257.3367 |
| 0.60 | 47.4953 | 0.9890 | 0.9999995 | 0.0039 | 1.5673 | 1.3677 | 281.2979 |

| Method | MPSNR | CC | SSIM | RMSE | ERGAS | SAM | TIME (s) |
|---|---|---|---|---|---|---|---|
| MIAE | 17.8093 | 0.7392 | 0.4168 | 476.9396 | 60.6493 | 11.4362 | 616.3177 |
| FuVar | 11.0014 | 0.2747 | 0.0811 | 1203.2809 | 84.7249 | 44.2109 | 4812.1460 |
| ECCV14 | 10.8087 | 0.5921 | 0.0843 | 1191.3784 | 87.8039 | 25.2693 | 2966.4885 |
| ICCV15 | 18.8991 | 0.6769 | 0.0903 | 468.8753 | 42.3810 | 8.3175 | 188.3746 |
| CNMF | 18.2901 | 0.6937 | 0.2494 | 489.3940 | 41.8792 | 11.1107 | 150.0200 |
| OURS | 19.1274 | 0.6839 | 0.2478 | 443.3611 | 39.4557 | 7.2542 | 153.2204 |

| Method | MPSNR | CC | SSIM | RMSE | ERGAS | SAM | TIME (s) |
|---|---|---|---|---|---|---|---|
| MIAE | 15.0415 | 0.6439 | 0.0599 | 696.3550 | 65.3466 | 10.8613 | 619.4395 |
| FuVar | 10.9691 | 0.2757 | 0.0363 | 1208.1741 | 84.0772 | 43.9273 | 4408.8130 |
| ECCV14 | 10.8002 | 0.5885 | 0.0857 | 1187.8724 | 87.6889 | 23.7784 | 2979.5627 |
| ICCV15 | 18.7260 | 0.5643 | 0.0663 | 479.8861 | 42.9734 | 8.5419 | 173.6451 |
| CNMF | 18.7657 | 0.6957 | 0.2499 | 464.5570 | 39.6662 | 9.6229 | 159.7210 |
| OURS | 18.5660 | 0.7072 | 0.2572 | 476.7362 | 40.0394 | 15.2285 | 159.2980 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, X.; Zhang, A.; Portelli, R.; Zhang, X.; Guan, H. ZY-1 02D Hyperspectral Imagery Super-Resolution via Endmember Matrix Constraint Unmixing. Remote Sens. 2022, 14, 4034. https://0-doi-org.brum.beds.ac.uk/10.3390/rs14164034
