Real-Time Application for Generating Multiple Experiences from 360° Panoramic Video by Tracking Arbitrary Objects and Viewer’s Orientations

Abstract

Featured Application

1. Introduction

- The sharing of users’ personal experiences of 360° videos with one another in VR.

- An authoring system for generating multiple experiences of a single 360° visual content source.

- A real-time object tracker for 360° panoramic videos.

- The provision of adaptive focus assistance for the efficient transfer of 360° experiences in VR.

2. Related Work

3. Research Questions and Research Method

- RQ1: Is it possible to generate multiple experiences from a single 360° video?

- RQ2: Does the provision of multiple experiences help viewers experience various interesting content in a single 360° video?

- RQ3: Does sharing users’ personal experiences with one another help them easily find the regions of interest (ROIs) while watching a 360° video?

- RQ4: Do existing simple object trackers perform well on panoramic 360° videos?

- RQ5: Does object tracking make authoring easy and more productive for the 360° video content providers?

- RQ6: Does user-adaptive focus assistance minimize the loss of visual information and efficiently direct the viewer towards an ROI in 360° VR?

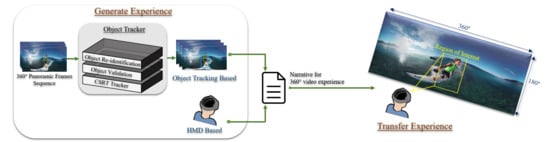

4. Proposed Authoring System

4.1. Creator Part

4.1.1. HMD Based

4.1.2. Object Tracker

4.1.2.1. DCF-CSR Tracker

4.1.2.2. Tracking Validation

4.1.2.3. Object Re-Identification

4.1.2.4. Viewing Angle Generator for 360° VR

4.2. Viewer Part

4.3. Multiple Experiences from Single 360° Video

5. Experimental Results

5.1. User Survey

5.1.1. Usability

5.1.2. Efficiency

5.1.3. Satisfaction

5.2. Object Tracker Performance

5.3. Speed Evaluation

6. Discussion

7. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

| Statement | Strongly Disagree (1) | Disagree (2) | Neutral (3) | Agree (4) | Strongly Agree (5) | Average Score |
|---|---|---|---|---|---|---|
| Usability | | | | | | |
| The interface of the system to parse video is easy to understand | 0 | 0 | 1 | 13 | 11 | 4.4 |
| Tasks such as previewing and editing the recorded experience are easy to perform | 0 | 0 | 1 | 11 | 13 | 4.48 |
| Convenient to use | 0 | 0 | 2 | 11 | 12 | 4.4 |
| It does not take a very long time to learn the system and perform operations | 0 | 0 | 2 | 6 | 17 | 4.6 |
| Overall Results for Usability | 0% | 0% | 6% | 41% | 53% | - |
| Efficiency | | | | | | |
| When you previewed your experience, the recorded experience was similar to the actual one | 0 | 0 | 0 | 7 | 18 | 4.72 |
| Knowing that your experience is being recorded while watching a 360° video does not restrict enjoyment | 0 | 0 | 5 | 4 | 16 | 4.44 |
| The experience with the system was smooth overall and performing operations such as parsing a video did not cause long delays in watching the video | 0 | 0 | 1 | 7 | 17 | 4.64 |
| Overall Results for Efficiency | 0% | 0% | 8% | 24% | 68% | - |
| Satisfaction | | | | | | |
| You can record your 360° video experience with very little knowledge about creating 360° video experiences | 0 | 0 | 0 | 4 | 21 | 4.84 |
| You will recommend this system to others | 0 | 0 | 0 | 7 | 18 | 4.72 |
| Overall Results for Satisfaction | 0% | 0% | 0% | 22% | 78% | - |
| Overall Result | 4.58 (91.6%) | | | | | |
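The average scores and per-category percentages in the survey table appear to follow from the response counts by a weighted mean over the 1 to 5 scale. The following is a minimal sketch of that arithmetic, not the authors' analysis code. The function names `likert_mean` and `category_shares` are hypothetical, and the sketch assumes each row lists counts for scores 1 through 5 in order.

```python
from typing import List, Sequence


def likert_mean(counts: Sequence[int]) -> float:
    """Weighted mean of one 5-point Likert item.

    counts[i] is the number of respondents who chose score i + 1.
    """
    total = sum(counts)
    if total == 0:
        raise ValueError("no responses recorded for this item")
    return sum(score * n for score, n in enumerate(counts, start=1)) / total


def category_shares(rows: Sequence[Sequence[int]]) -> List[float]:
    """Percentage of all responses in a category that fall on each score."""
    per_score = [sum(column) for column in zip(*rows)]
    total = sum(per_score)
    return [100.0 * n / total for n in per_score]


# Response counts copied from the survey table (scores 1..5, left to right).
usability = [
    [0, 0, 1, 13, 11],
    [0, 0, 1, 11, 13],
    [0, 0, 2, 11, 12],
    [0, 0, 2, 6, 17],
]
efficiency = [
    [0, 0, 0, 7, 18],
    [0, 0, 5, 4, 16],
    [0, 0, 1, 7, 17],
]
satisfaction = [
    [0, 0, 0, 4, 21],
    [0, 0, 0, 7, 18],
]

# Overall result: mean of the nine per-statement averages, also expressed
# as a percentage of the maximum score of 5.
all_items = usability + efficiency + satisfaction
overall = sum(likert_mean(row) for row in all_items) / len(all_items)
overall_percent = 100.0 * overall / 5.0
```

Under these assumptions, `likert_mean(usability[0])` gives the 4.4 reported for the first usability statement, and `overall` rounds to the reported 4.58.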

| Video | Desired Object | Frames | Recall | Precision | F-Measure |
|---|---|---|---|---|---|
| Street1 | Pedestrian | 500 | 0.94 | 1.0 | 0.97 |
| Snorkeling | Snorkeler | 962 | 1.0 | 1.0 | 1.0 |
| Waterskating1 | Front water skater | 944 | 1.0 | 1.0 | 1.0 |
| Waterskating2 | Water skater in background | 1092 | 0.92 | 0.94 | 0.93 |
| Cartoon | Doll | 2122 | 0.89 | 0.91 | 0.9 |
| Snowboarding | Snowboarder | 4796 | 0.85 | 0.88 | 0.86 |
| Airplane Flight | Airplane cockpit | 4598 | 0.79 | 0.9 | 0.84 |
| Street2 | Playing child | 389 | 1.0 | 1.0 | 1.0 |
| Mean | - | - | 0.92 | 0.95 | 0.93 |
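The F-Measure column in the tracker table is consistent with the standard harmonic mean of precision and recall. The sketch below illustrates that relationship on the tabulated values. It assumes the standard definition and is not taken from the authors' evaluation code. The function name `f_measure` and the `results` dictionary are hypothetical.

```python
def f_measure(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (the standard F1 score)."""
    if precision + recall == 0:
        return 0.0  # convention when both precision and recall are zero
    return 2.0 * precision * recall / (precision + recall)


# (recall, precision) pairs copied from the tracker performance table.
results = {
    "Street1": (0.94, 1.0),
    "Snorkeling": (1.0, 1.0),
    "Waterskating1": (1.0, 1.0),
    "Waterskating2": (0.92, 0.94),
    "Cartoon": (0.89, 0.91),
    "Snowboarding": (0.85, 0.88),
    "Airplane Flight": (0.79, 0.9),
    "Street2": (1.0, 1.0),
}

# Recompute the F-measure per video, rounded to two decimals as in the table.
f_scores = {
    video: round(f_measure(precision, recall), 2)
    for video, (recall, precision) in results.items()
}

# Column means over the eight videos.
mean_recall = sum(r for r, _ in results.values()) / len(results)
mean_precision = sum(p for _, p in results.values()) / len(results)
```

Because the table reports recall and precision already rounded to two decimals, a recomputed value can differ from the published one in the last digit.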

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shah, S.H.H.; Han, K.; Lee, J.W. Real-Time Application for Generating Multiple Experiences from 360° Panoramic Video by Tracking Arbitrary Objects and Viewer’s Orientations. Appl. Sci. 2020, 10, 2248. https://0-doi-org.brum.beds.ac.uk/10.3390/app10072248