Provider Fairness for Diversity and Coverage in Multi-Stakeholder Recommender Systems

Abstract

1. Introduction

1.1. Objectives

1.2. Research Questions

1.3. Contribution

- Review of the recent literature about provider fairness, coverage, and diversity.

- Formalization of the problem at hand, as provided in the literature.

- Application and extension of the proposed methodology to a public and well-known dataset.

- Presentation of a concrete heuristic approach for solving the diversity constraints, because the solution proposed in the literature is vague on this point.

- Evaluation of the results and discussion on the proposed solution.

- Introduction of new definitions for quantifying diversity, because the definition proposed in the literature has significant limitations.

- Introduction of new high-level heuristic approaches for solving the newly defined diversity constraints, because the diversity problem remains NP-hard.

2. Background and Related Work

2.1. Multi-Stakeholder Recommender Systems (MSRS)

2.2. Coverage

2.3. Diversity

2.4. Fairness

2.5. Problem Formalization

3. Heuristic Solution Approach

3.1. Baseline Solution (Unconstrained Problem)

3.2. Addressing Coverage Constraints

3.3. Addressing Diversity Constraints

| Algorithm 1: Heuristic algorithm for addressing diversity constraints |
|---|
| `1: Xcov = calculate_solution_for_Coverage(A_pred, Kc) // as a linear programming problem through cvxpy` |
| `2: Xnew = Xcov // copy the Coverage solution as the starting point for the final solution` |
| `3: foreach category c:` |
| `4:     Div[c] = calculate_Diversity_for_category(Xnew, c) // as described in Formula (11)` |
| `5: categories_ordered_by_Diversity = argsort(Div)` |
| `6: categories_ordered_by_Diversity_desc = reverse(categories_ordered_by_Diversity)` |
| `7: categories_to_change, changeable_categories = [], categories_ordered_by_Diversity` |
| `8: foreach category c in categories_ordered_by_Diversity:` |
| `9:     if Div[c] < D:` |
| `10:         categories_to_change.add(c)` |
| `11:         changeable_categories.remove(c)` |
| `12: foreach category c in categories_to_change:` |
| `13:     most_recommended_item[c] = find_most_recommended_item_of_category(c)` |
| `14:     users_to_recommend = argsort(A_pred[most_recommended_item[c]])` |
| `15:     users_to_recommend_except_recommended = users in users_to_recommend not yet recommended most_recommended_item[c]` |
| `16:     foreach user u in users_to_recommend_except_recommended:` |
| `17:         foreach category c2 in changeable_categories:` |
| `18:             rec_items_of_categ_to_usr = find_rec_items_of_category_to_user(c2, u)` |
| `19:             foreach item i in rec_items_of_categ_to_usr:` |
| `20:                 Xnew[u, i] = 0` |
| `21:                 Xnew[u, most_recommended_item[c]] = 1` |
| `                    // Calculate new Coverage for category c2 according to Formula (9)` |
| `22:                 Cov_xnew[c2] = calculate_Coverage_for_category(Xnew, c2)` |
| `                    // Calculate new Diversity for categories c2 and c according to Formula (11)` |
| `23:                 Div_xnew[c2] = calculate_Diversity_for_category(Xnew, c2)` |
| `24:                 Div_xnew[c] = calculate_Diversity_for_category(Xnew, c)` |
| `                    // If the Coverage or Diversity constraint for c2 is violated, roll back` |
| `25:                 if Cov_xnew[c2] < K[c2] or Div_xnew[c2] < D:` |
| `26:                     Xnew[u, i] = 1` |
| `27:                     Xnew[u, most_recommended_item[c]] = 0` |
| `28:                 else if Div_xnew[c] > D:` |
| `29:                     break // continue with the next category at line 12` |
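The swap-and-rollback idea of Algorithm 1 can be illustrated with a minimal Python sketch. It is not the authors' implementation, and it rests on several assumptions. The names `repair_diversity` and `_try_swap` are hypothetical. `coverage` and `diversity` are caller-supplied callables that stand in for Formulas (9) and (11), which are not reproduced in this section. `A_pred` and `X` are taken to be users × items matrices. Candidate users are visited by descending predicted rating. A category counts as repaired once its diversity is no longer below `D`. Unlike the pseudocode, the sketch moves to the next user after one accepted swap, so each user's list keeps its length.

```python
import numpy as np

def _try_swap(X, u, top, donors, item_cat, coverage, diversity, K, D):
    """Give user u the item `top` in place of one donor-category item.
    The swap is undone if it breaks a donor constraint."""
    for c2 in donors:
        for i in np.flatnonzero((item_cat == c2) & (X[u] == 1)):
            X[u, i], X[u, top] = 0, 1
            if coverage(X, c2) >= K[c2] and diversity(X, c2) >= D:
                return True                 # swap accepted
            X[u, i], X[u, top] = 1, 0       # rollback
    return False

def repair_diversity(X_cov, A_pred, item_cat, coverage, diversity, K, D):
    """Sketch of the heuristic: start from the coverage solution and swap
    items until every low-diversity category reaches D, where possible."""
    X = X_cov.copy()
    cats = [int(c) for c in np.unique(item_cat)]
    low = [c for c in cats if diversity(X, c) < D]   # categories to change
    donors = [c for c in cats if c not in low]       # changeable categories
    for c in low:
        items_c = np.flatnonzero(item_cat == c)
        # most recommended item of category c
        top = int(items_c[np.argmax(X[:, items_c].sum(axis=0))])
        for u in np.argsort(-A_pred[:, top]):        # best predicted rating first
            if diversity(X, c) >= D:
                break                                # category c is repaired
            if X[u, top] == 1:
                continue                             # user already has the item
            _try_swap(X, u, top, donors, item_cat, coverage, diversity, K, D)
    return X

# Toy data: 3 users, 4 items, 2 categories, 2 recommendations per user.
item_cat = np.array([0, 0, 1, 1])
X_cov = np.array([[1, 1, 0, 0], [1, 1, 0, 0], [1, 0, 1, 0]])
A_pred = np.array([[5, 4, 3, 2], [5, 4, 2, 3], [5, 3, 4, 2]], dtype=float)

# Placeholder metrics (assumptions, NOT Formulas (9) and (11)).
def coverage(X, c):
    return int(X[:, item_cat == c].sum())            # recommendations given to c

def diversity(X, c):
    return float((X[:, item_cat == c].sum(axis=1) > 0).mean())  # share of users reached

K, D = {0: 2, 1: 1}, 0.6
X_new = repair_diversity(X_cov, A_pred, item_cat, coverage, diversity, K, D)
print(X_new)
```

In this toy run, category 1 starts below the threshold and is repaired by a single swap for one user. The placeholder metrics would need to be replaced by the paper's definitions before any numbers are compared with the reported results.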

4. Experiment Details and Results

4.1. Dataset Overview and Preprocessing

4.2. Baseline (Unconstrained) Solution

4.3. Item Providers or Categories

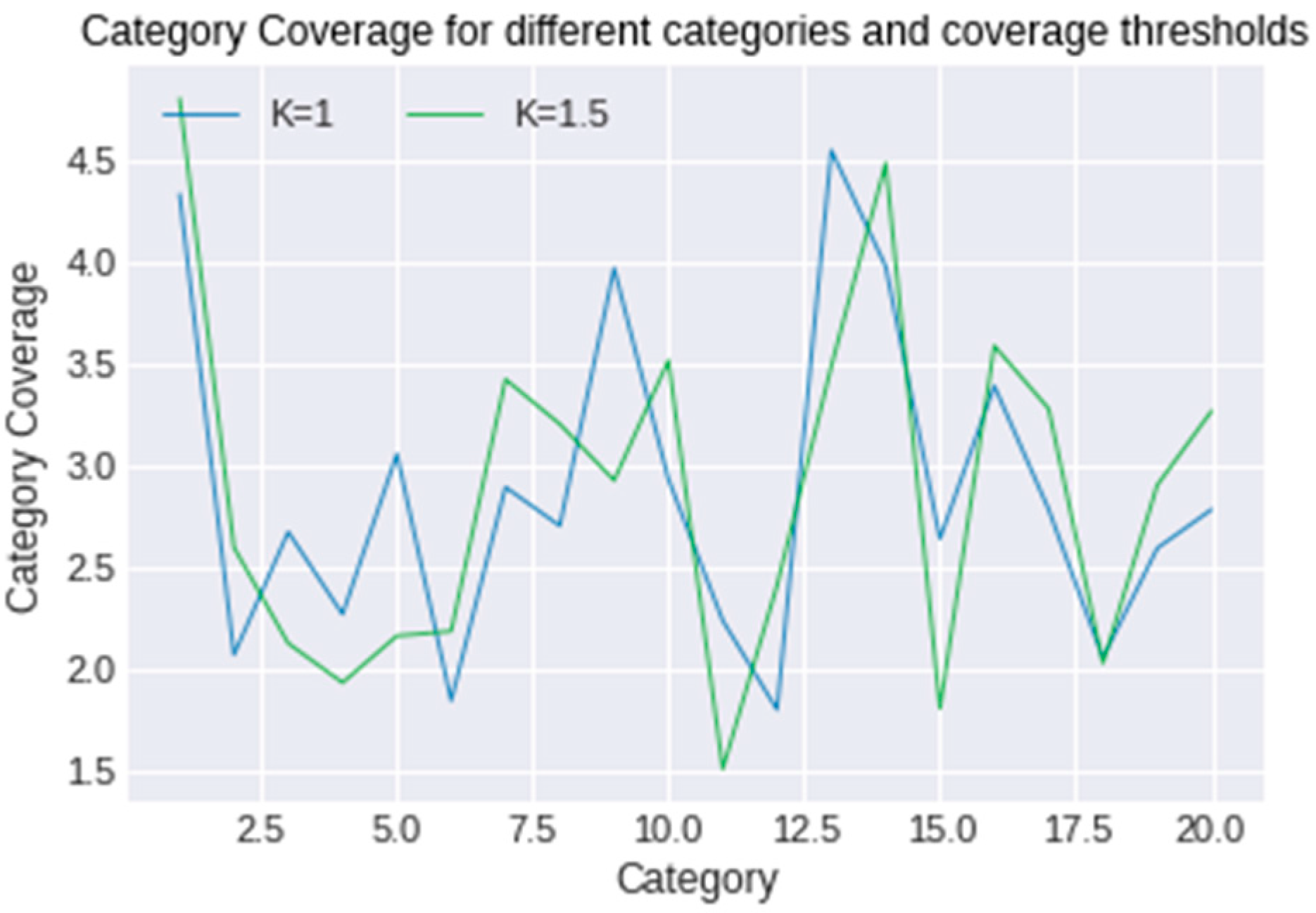

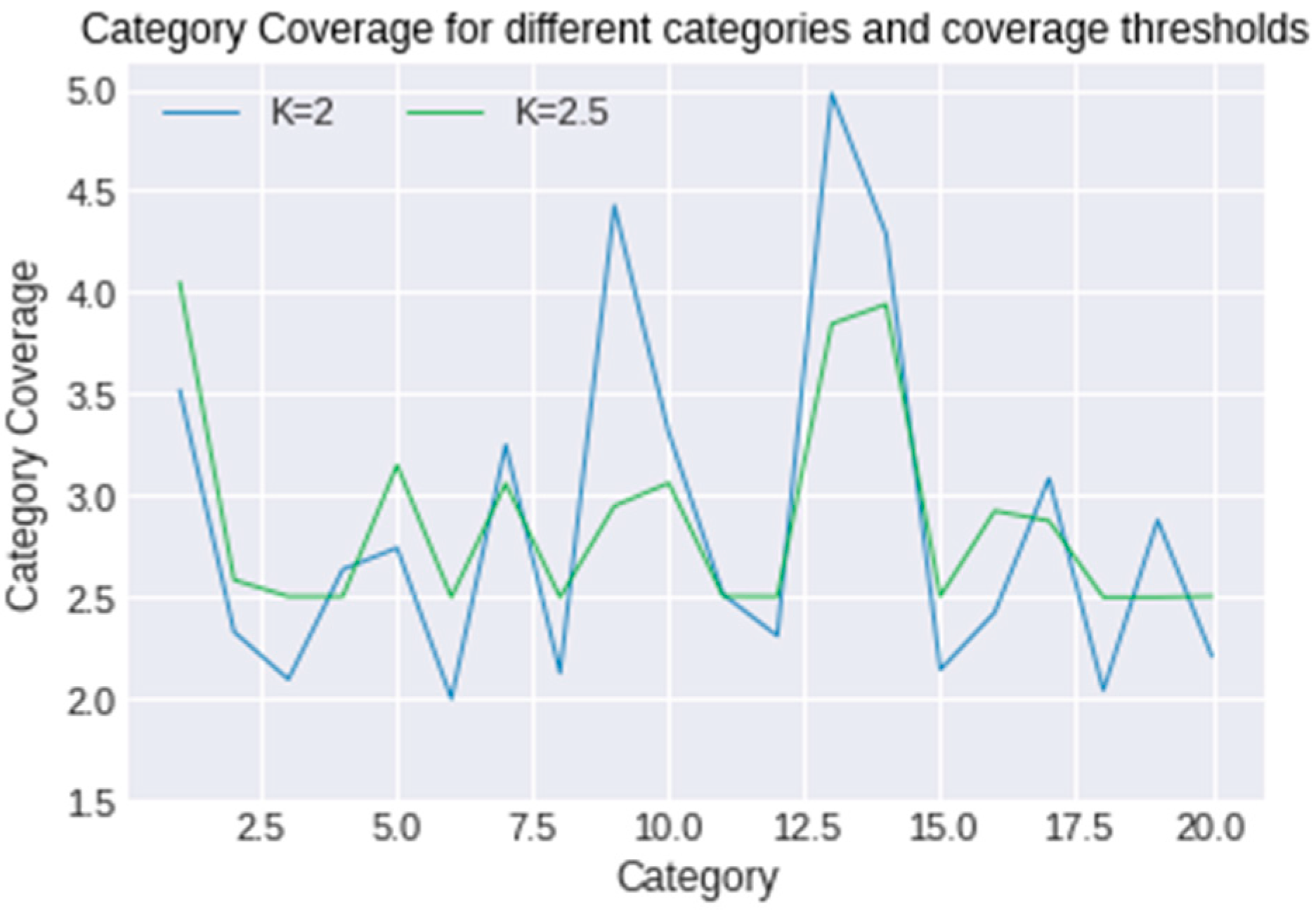

4.4. Coverage Solution

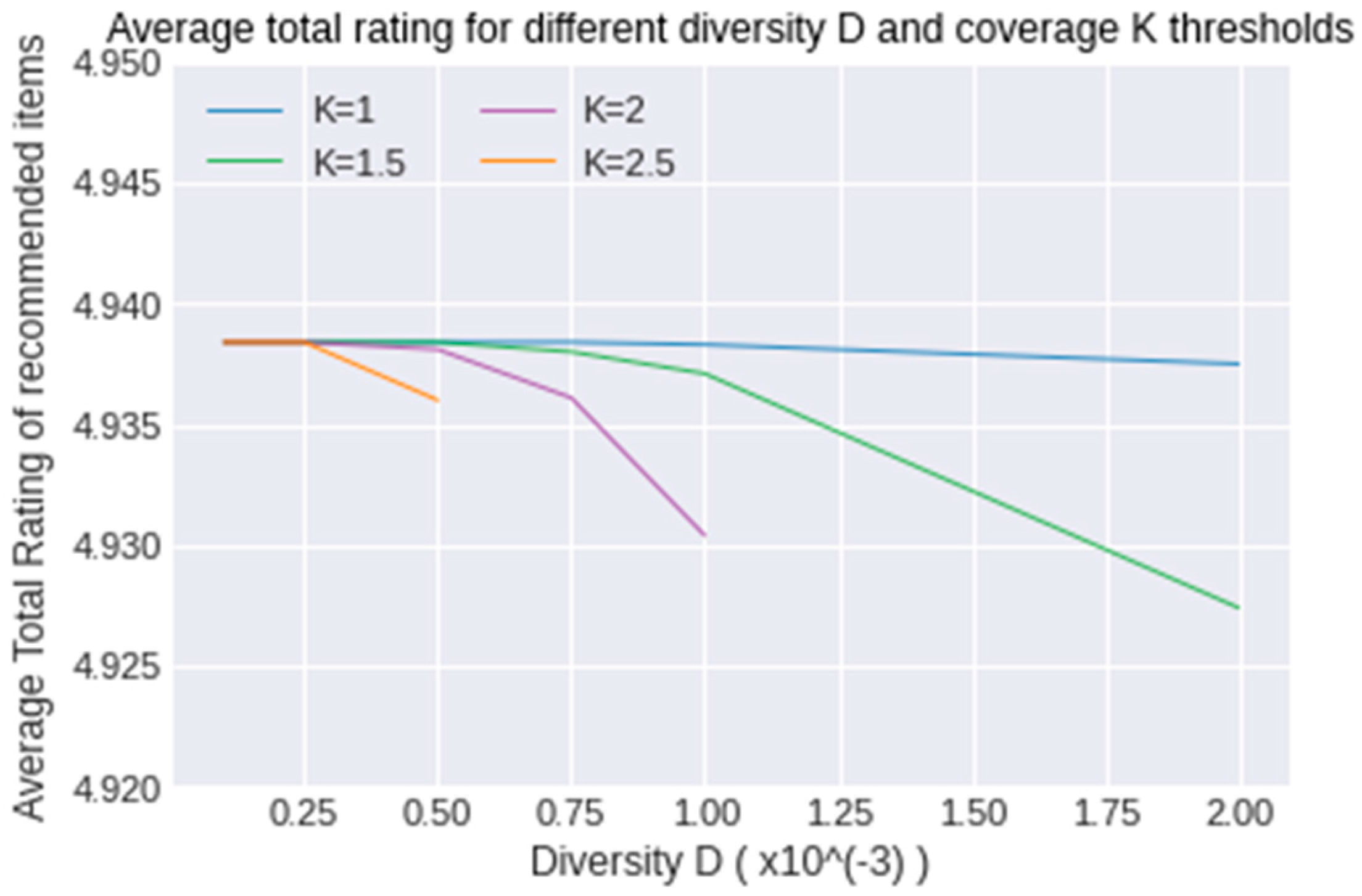

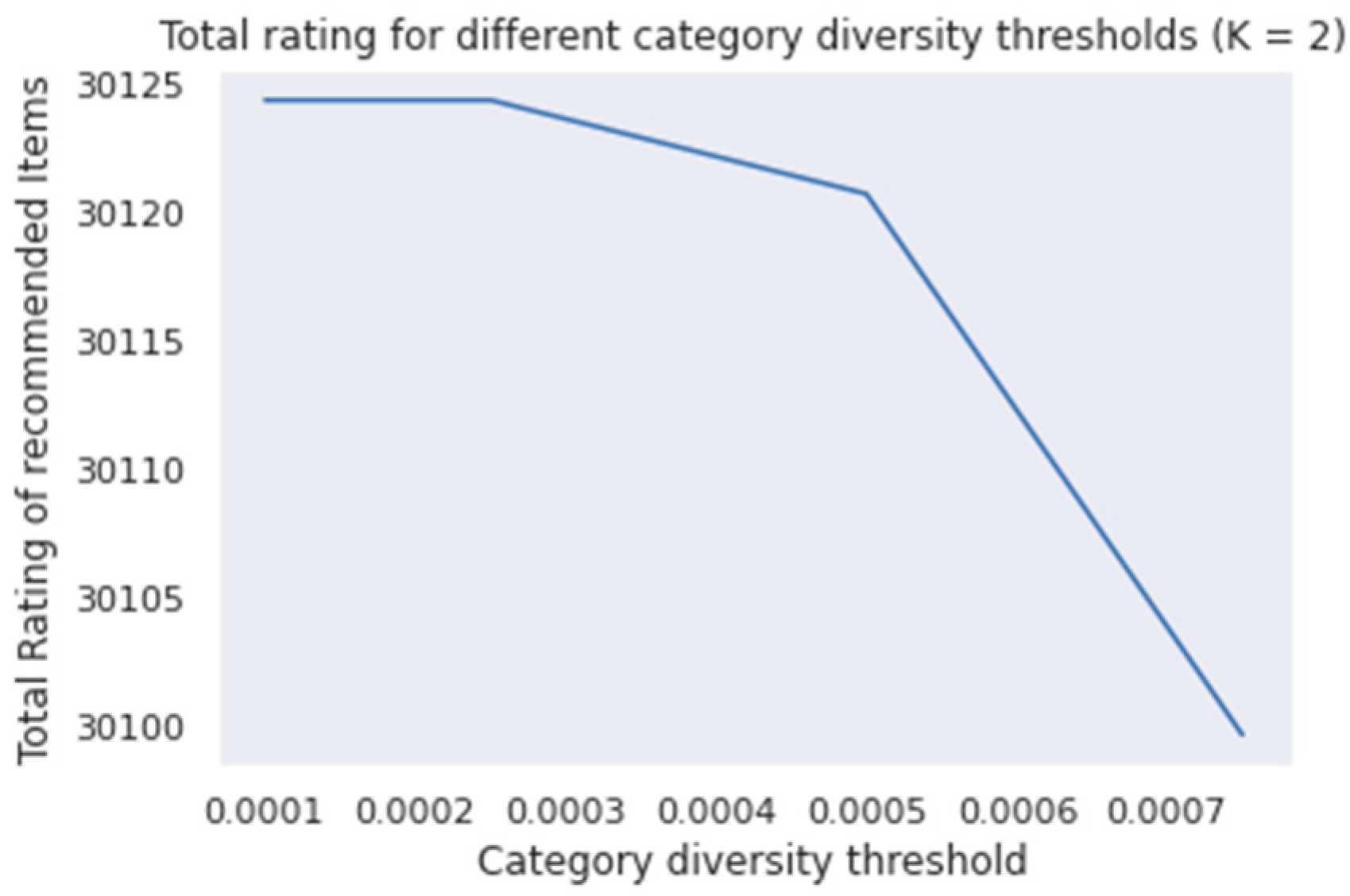

4.5. Final Solution

5. Discussion

5.1. Evaluation

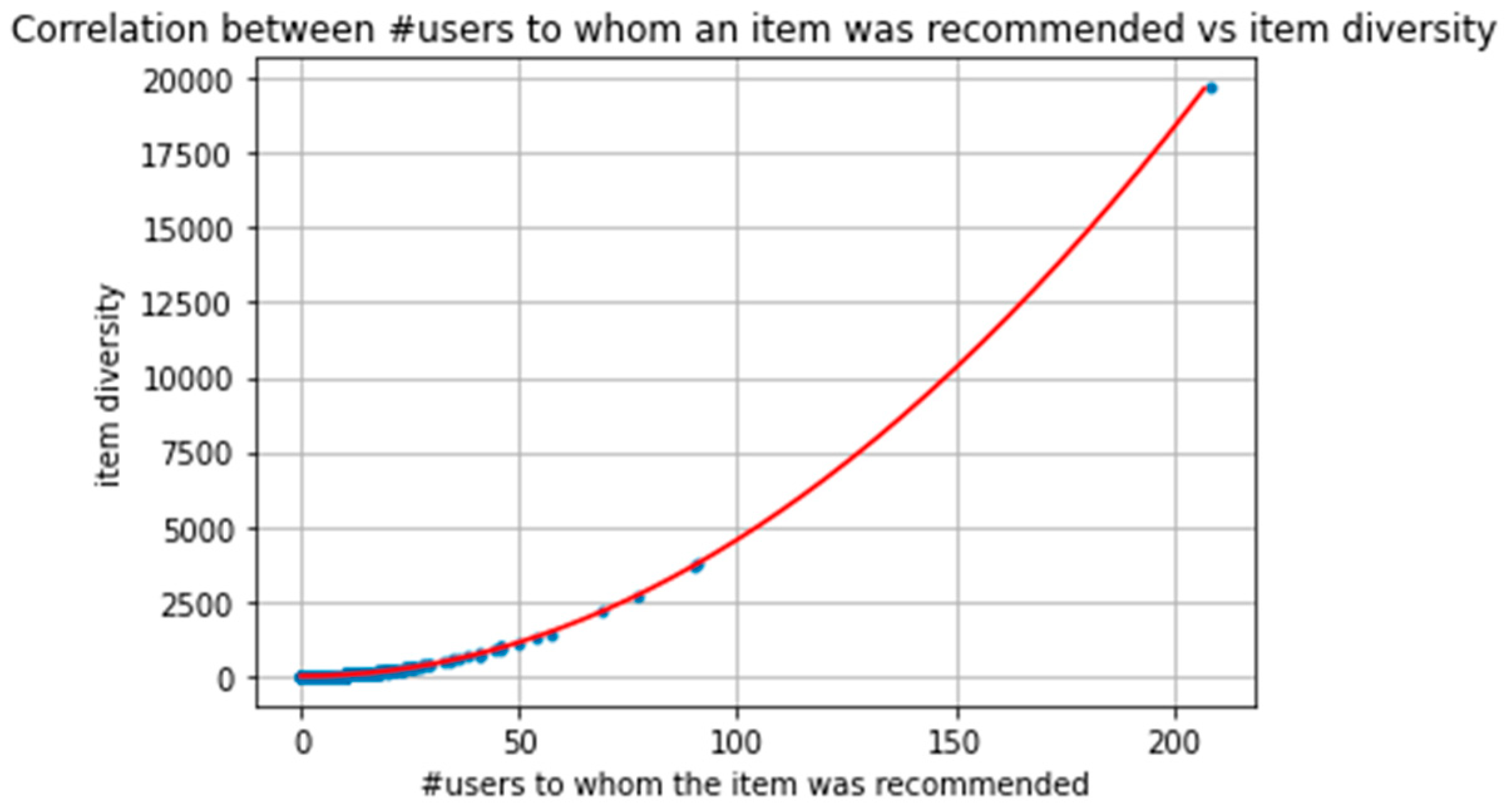

5.2. Redefining User Diversity and a New Heuristic Solution Approach

5.3. Answers to Research Questions

6. Conclusions and Future Extensions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Category | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Items | 101 | 91 | 105 | 115 | 105 | 107 | 90 | 121 | 101 | 101 | 90 | 114 | 94 | 120 | 115 | 104 | 114 | 108 | 107 | 118 |

| Diversity Threshold D | Total Rating (Kc = 1) | Average Rating (Kc = 1) | Total Rating (Kc = 2.5) | Average Rating (Kc = 2.5) |
|---|---|---|---|---|
| 0.0001 | 30,124.411 | 4.9384 | 30,124.411 | 4.9384 |
| 0.0002 | 30,124.411 | 4.9384 | 30,124.411 | 4.9384 |
| 0.00025 | 30,124.411 | 4.9384 | 30,124.411 | 4.9384 |
| 0.0005 | 30,124.411 | 4.9384 | 30,110.984 | 4.9362 |
| 0.00075 | 30,124.411 | 4.9384 | No solution | No solution |
| 0.001 | 30,124.120 | 4.9383 | No solution | No solution |
| 0.002 | 30,119.159 | 4.9375 | No solution | No solution |
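The figures in the table above can be put in perspective with a little arithmetic. The sketch below takes the D = 0.0001 total as the reference value, which is an assumption because this section does not state the unconstrained total. It computes the relative loss in total rating for the rows where the diversity constraint changes the solution, and the number of recommendations implied by dividing total by average rating. The helper names `relative_loss` and `implied_recommendations` are illustrative only.

```python
# Values copied from the results table above.
REFERENCE_TOTAL = 30124.411  # D = 0.0001 row, used as the reference (assumption)

rows = [
    # (label, total rating, average rating)
    ("Kc = 1, D = 0.001", 30124.120, 4.9383),
    ("Kc = 1, D = 0.002", 30119.159, 4.9375),
    ("Kc = 2.5, D = 0.0005", 30110.984, 4.9362),
]

def relative_loss(total, reference=REFERENCE_TOTAL):
    """Fraction of the reference total rating lost under the constraint."""
    return (reference - total) / reference

def implied_recommendations(total, average):
    """Number of recommendations implied by total rating / average rating."""
    return round(total / average)

for label, total, average in rows:
    print(f"{label}: loss = {relative_loss(total):.4%}, "
          f"recommendations = {implied_recommendations(total, average)}")
```

Under this reading, every feasible row loses less than 0.05% of the reference total rating, and all rows imply the same number of recommendations (about 6100).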


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Karakolis, E.; Kokkinakos, P.; Askounis, D. Provider Fairness for Diversity and Coverage in Multi-Stakeholder Recommender Systems. Appl. Sci. 2022, 12, 4984. https://0-doi-org.brum.beds.ac.uk/10.3390/app12104984
