3.1. Case Study on Measured Data

To verify the feasibility of the developed methodology, some experiments were performed and evaluated. In the experiments, the point cloud of a hammer head were acquired from the NTU-developed optical 3D measuring probe being developed by using a random speckle pattern projection and triangulation measurement principle [

12,

15]. The dimensions of the hammer head are approximately 110 × 35.5 × 21 mm

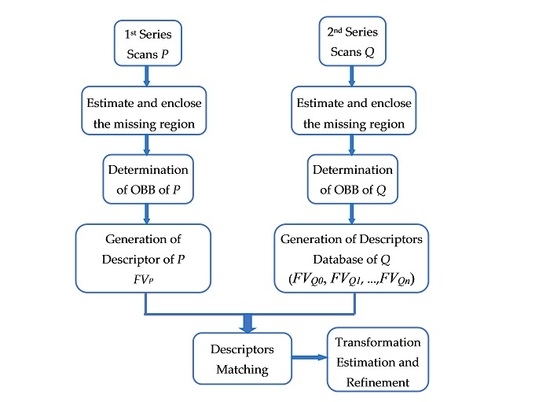

3. Firstly, the hammer head was placed on a fixed table, allowing for viewing the object from several positions around the object. However, the whole object surface could not be detected due to optical occlusion. Therefore, after the first series of automated scans to obtain point cloud

P, the object was reoriented manually and the second series of automated scans was continuously carried out to acquire point cloud

Q.

Figure 8 illustrates the coarse registration process between the two series of scans. The width of the normalized cross-correlation peak depends on the number of iterations and Δθ, as shown in Figure 9. Meanwhile, the height of the normalized cross-correlation peak depends on the number of OBB segments k₁ × k₂ × k₃ and Δθ.
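To make the correlation score concrete, the following is a minimal sketch of a zero-mean normalized cross-correlation between two occupancy vectors, with one entry per OBB cell. The function name `normalized_cross_correlation` and the example cell counts are illustrative assumptions, and the exact normalization used by the method may differ.

```python
import math


def normalized_cross_correlation(a, b):
    """Zero-mean normalized cross-correlation of two equal-length sequences."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    numerator = sum(x * y for x, y in zip(da, db))
    denominator = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denominator == 0.0:
        return 0.0  # a constant vector carries no shape information
    return numerator / denominator


# Hypothetical point counts per OBB cell for two scans (assumed values).
cells_p = [12, 0, 7, 3, 9, 0, 4, 15]
cells_q = [11, 1, 6, 4, 9, 0, 5, 14]
score_same = normalized_cross_correlation(cells_p, cells_p)
score_near = normalized_cross_correlation(cells_p, cells_q)
```

In such a scheme, the score would be evaluated for each candidate rotation and the rotation giving the highest peak retained.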

The performance of the registration can be estimated from the distance between each overlapping point qᵢ in point cloud Q and its fitting control point pᵢ, which is the projection of qᵢ onto the triangle mesh of P. If dᵢ denotes the distance between a point pᵢ in P and its closest neighbor point qᵢ in Q, the mean distance μ and standard deviation σ, which are used to evaluate the performance of the object registration, are computed as follows:

$$\mu = \frac{1}{N}\sum_{i=1}^{N} d_i, \qquad \sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(d_i - \mu\right)^{2}},$$

where N is the number of overlapping point pairs.
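A minimal sketch of this evaluation is given here, assuming the distances dᵢ have already been computed and that the population (1/N) form of the standard deviation is intended. The function name `registration_statistics` and the sample distances are hypothetical.

```python
import statistics


def registration_statistics(distances):
    """Return (mean, population standard deviation) of point-to-mesh distances."""
    mu = statistics.fmean(distances)
    sigma = statistics.pstdev(distances, mu)  # divides by N, not N - 1
    return mu, sigma


# Hypothetical distances in mm, for illustration only.
example_distances = [0.02, 0.05, 0.03, 0.01, 0.04]
mu, sigma = registration_statistics(example_distances)
```

If the sample form is preferred, `statistics.stdev` can be substituted; for the large point counts typical of scans, the two differ only slightly.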

In this experiment, the mean distance is 0.0347 mm and the standard deviation is 0.0235 mm. Figure 10 shows further examples obtained with the developed method.

3.2. Case Study on Synthetic Data

In this section, we discuss the influence of the parameters on the performance of the proposed method. In addition, the method is compared against several published local feature descriptor methods: Spin Images (SI), Point Signatures (PS), Point Feature Histograms (PFH), and the Signature of Histograms of OrienTations (SHOT). All methods were implemented in C++ using the open source Point Cloud Library (PCL). To estimate the computation cost of the registration process, the experiments were run on a computer with an Intel Core i7 processor at 3.40 GHz and 8 GB of RAM.

In real applications, it is very difficult to separate the different error sources, such as shape measurement error (noise, surface sampling, etc.), correspondence error (occlusion, outliers, etc.), and registration error. To evaluate the coarse registration error, we generated synthetic data from models provided by the Industrial Technology Research Institute (ITRI), as shown in Figure 11. The dimensions of the socket model, connector model, cylinder model, and Brazo Control model are 45 × 25 × 25 mm³, 41 × 33 × 25 mm³, 35 × 35 × 35 mm³, and 86 × 53 × 30 mm³, respectively. The point clouds corresponding to different views of the models were then extracted with the NTU-developed virtual camera software (Precision Metrology (PM) lab, National Taiwan University, Taipei, Taiwan). The motion between the green and red point clouds is a displacement of 50 mm along each axis and a rotation of π/4 around a fixed axis. We therefore have precise knowledge of the motion parameters, the rotation matrix R and the translation vector t, which serve as a reference for error estimation and validation of the coarse registration methods. Accuracy is measured by the rotation error and the translation error, obtained by comparing the estimated transformation with the expected one.
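As an illustration of how such a comparison could be carried out, the sketch below takes the rotation error as the angle of the residual rotation between the estimated and reference matrices, and the translation error as the Euclidean distance between the two translation vectors. These definitions, the choice of the z axis for the π/4 rotation, the estimated values, and all function names are assumptions made for the example; the source does not spell out the exact error formulas.

```python
import math


def rotation_z(angle):
    """Rotation matrix for a rotation of `angle` radians about the z axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def rotation_error(r_est, r_ref):
    """Angle (rad) of the residual rotation R_ref^T R_est."""
    # trace(R_ref^T R_est) equals the sum of element-wise products.
    trace = sum(r_ref[i][j] * r_est[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # guard rounding
    return math.acos(cos_angle)


def translation_error(t_est, t_ref):
    """Euclidean distance between estimated and reference translations."""
    return math.dist(t_est, t_ref)


# Reference motion: 50 mm along each axis, pi/4 about an assumed z axis.
r_ref = rotation_z(math.pi / 4)
t_ref = (50.0, 50.0, 50.0)

# Hypothetical estimate returned by a coarse registration.
r_est = rotation_z(math.pi / 4 + 0.02)
t_est = (50.5, 49.0, 50.2)

rot_err = rotation_error(r_est, r_ref)       # in rad
trans_err = translation_error(t_est, t_ref)  # in mm
```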

The results show that sampling does not considerably affect the accuracy of the registration. Thus, low-resolution surfaces can be used in the proposed approach to reduce the computation time. Meanwhile, as shown in Table 1, the errors of the proposed registration algorithm depend strongly on the rotation increment Δθ (rad). To improve the accuracy, the rotation increment should be made smaller. However, the increment also has a significant impact on the runtime of the proposed coarse registration process. For example, in the experiment with 1000 points in Table 1, the computation time for Δθ = 0.01 rad (126.439 s) was much higher than that for Δθ = 0.05 rad (only 1.063 s).
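As a rough reading of these two timings, the sketch below estimates an empirical scaling exponent under the assumption that the runtime follows a power law in 1/Δθ. The power-law model and the function name `scaling_exponent` are assumptions for illustration only, and two data points cannot confirm the model.

```python
import math


def scaling_exponent(t_fine, t_coarse, dtheta_fine, dtheta_coarse):
    """Exponent p in t ~ (1 / dtheta)^p, estimated from two measurements."""
    return math.log(t_fine / t_coarse) / math.log(dtheta_coarse / dtheta_fine)


# Timings quoted for the 1000-point experiment.
slowdown = 126.439 / 1.063
exponent = scaling_exponent(126.439, 1.063, 0.01, 0.05)
```

An exponent close to three would be compatible with a search over three rotation angles, each sampled with step Δθ, although that interpretation is itself an assumption.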

Another important parameter of the developed method is the total number of subdivided boxes in the OBB, n = k₁ × k₂ × k₃. Table 2 shows that the more subdivided boxes the OBB contains, the more expensive it is to find the best rotation. For a fast registration procedure, fewer subdivided boxes are preferable. However, the number of subdivided boxes must also be large enough to achieve the expected rotation accuracy. For instance, in the experiment using the connector model with 1000 points, the rotation error was 0.057 rad for an OBB with n = k₁ × k₂ × k₃ = 4 × 5 × 6, whereas it was only 0.017 rad for an OBB with n = k₁ × k₂ × k₃ = 7 × 8 × 9.
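To illustrate how the subdivision count drives the cost, the sketch below bins points into a k₁ × k₂ × k₃ grid and counts the points per cell. It assumes the points are already expressed in the OBB's own axis-aligned frame and that the box has non-zero extent along every axis. The names `cell_index` and `occupancy` and the sample points are hypothetical; only the box size is taken from the connector model dimensions.

```python
def cell_index(point, box_min, box_max, k):
    """Flat index of the grid cell containing a point given in the OBB frame."""
    idx = []
    for axis in range(3):
        extent = box_max[axis] - box_min[axis]  # assumed non-zero
        frac = (point[axis] - box_min[axis]) / extent
        i = min(int(frac * k[axis]), k[axis] - 1)  # clamp the upper face
        idx.append(max(i, 0))                      # clamp the lower face
    return (idx[0] * k[1] + idx[1]) * k[2] + idx[2]


def occupancy(points, box_min, box_max, k):
    """Number of points falling in each of the k1 * k2 * k3 cells."""
    counts = [0] * (k[0] * k[1] * k[2])
    for p in points:
        counts[cell_index(p, box_min, box_max, k)] += 1
    return counts


# Box sized like the connector model (41 x 33 x 25 mm); points are made up.
box_min, box_max = (0.0, 0.0, 0.0), (41.0, 33.0, 25.0)
points = [(1.0, 2.0, 3.0), (40.0, 32.0, 24.0), (20.5, 16.5, 12.5)]

coarse = occupancy(points, box_min, box_max, (4, 5, 6))  # n = 120 cells
fine = occupancy(points, box_min, box_max, (7, 8, 9))    # n = 504 cells
```

Any per-cell comparison between two scans then costs at least n operations per candidate rotation, which matches the trade-off described above: 504 cells give a finer shape signature than 120 but take longer to evaluate.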

In addition, the ratio of the overlapped area is especially crucial. As shown in Table 3, the translation and rotation errors of the proposed approach increase with the percentage of the non-overlapping region. The increase is especially significant when outliers exceed 40%, because it is difficult to estimate the translation exactly without a high level of overlap. Excellent results are obtained when the shape overlap exceeds 80%; in this case, the translation and rotation errors for the tested models were under 1.5 mm and 0.02 rad, respectively. As described in the Introduction, the proposed registration method is designed to register two series of surface scans, each of which suffers from a certain degree of optical occlusion from the individual viewpoints of the scanning probe. To register two series of scans accurately under such circumstances, it is important that they overlap as much as possible. In practice, however, the overlap ratio depends on the complexity of the scanned object's geometry and on how the object is placed on the rotation table. In general, the higher the overlap ratio between two series of scans, the more accurate the registration that can be achieved.
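One simple way to quantify overlap between two aligned point clouds is sketched below: the fraction of points in one cloud that have a neighbor in the other within a distance tolerance. This definition, the brute-force search, the function name `overlap_ratio`, and the toy data are assumptions for illustration; the source does not state how the overlap percentage was computed.

```python
import math


def overlap_ratio(source, target, tolerance):
    """Fraction of source points with a target point within `tolerance`."""
    if not source:
        return 0.0
    hits = sum(
        1
        for q in source
        if any(math.dist(q, p) <= tolerance for p in target)
    )
    return hits / len(source)


# Toy clouds on a line: only the source points near x = 6..9 have a match.
target = [(float(x), 0.0, 0.0) for x in range(10)]
source = [(x + 0.01, 0.0, 0.0) for x in range(6, 16)]
ratio = overlap_ratio(source, target, tolerance=0.05)
```

The brute-force search is quadratic in the number of points; for real scans a spatial index such as a k-d tree would normally replace the inner loop.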

Table 4 compares the performance of the proposed approach with that of the 3D keypoint descriptors. It shows that the developed method achieves higher registration accuracy with reasonable computational efficiency. A well-known common problem with feature descriptors is that they usually fail on object geometry that has symmetrical or repeated surface contours. We evaluated the developed approach on four test cases comprising two regular shapes, a Brazo and a connector, and two featureless models, a cylinder and a socket, with 80% shape similarity; the models were provided by the Industrial Technology Research Institute (ITRI) (as shown in Figure 11). Given the reported results, the proposed approach is more accurate than the other methods on all the tested data. The computational efficiency of the proposed method is approximately half that of the fastest method, the Signature of Histograms of OrienTations (SHOT); nevertheless, its runtime remains within a reasonable range.

To test the influence of noise, the synthetic experiments were repeated with three levels of Gaussian noise, with standard deviations σ = 0.01, 0.02, and 0.03. The coarse registration results on the connector point sets are shown in Table 5. We found that the proposed approach achieves acceptable results in the presence of a certain level of noise.
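A noise test of this kind can be reproduced along the following lines. The sketch assumes zero-mean Gaussian noise added independently to each coordinate, in the same units as the coordinates; the function name `add_gaussian_noise`, the fixed seed, and the straight-line test cloud are illustrative choices rather than details taken from the experiments.

```python
import random


def add_gaussian_noise(points, sigma, seed=0):
    """Return a copy of `points` with N(0, sigma^2) noise on every coordinate."""
    rng = random.Random(seed)  # fixed seed keeps the experiment repeatable
    return [tuple(c + rng.gauss(0.0, sigma) for c in p) for p in points]


# Hypothetical clean cloud of 1000 points along a line.
clean = [(float(i), 0.0, 0.0) for i in range(1000)]
noisy = add_gaussian_noise(clean, sigma=0.02)
```

Running the registration on `noisy` for each σ and comparing the resulting rotation and translation errors against the noise-free case gives a table in the spirit of Table 5.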