Author Contributions

Conceptualization, Zejun Xiang and Ronghua Yang; methodology, Zejun Xiang, Ronghua Yang, Chang Deng and Mingxing Teng; software, Mingxing Teng and Chang Deng; validation, Zejun Xiang, Ronghua Yang, Chang Deng, Mingxing Teng and Mengkun She; formal analysis, Zejun Xiang, Ronghua Yang and Degui Teng; investigation, Chang Deng, Mingxing Teng and Mengkun She; resources, Zejun Xiang, Ronghua Yang, Chang Deng and Mingxing Teng; data curation, Chang Deng, Mingxing Teng and Mengkun She; writing—original draft preparation, Chang Deng and Mingxing Teng; writing—review and editing, Zejun Xiang, Ronghua Yang, Chang Deng, Degui Teng and Mengkun She; visualization, Zejun Xiang, Ronghua Yang, Chang Deng and Mingxing Teng; supervision, Zejun Xiang, Ronghua Yang and Degui Teng; project administration, Zejun Xiang and Ronghua Yang; funding acquisition, Zejun Xiang. All authors have read and agreed to the published version of the manuscript.

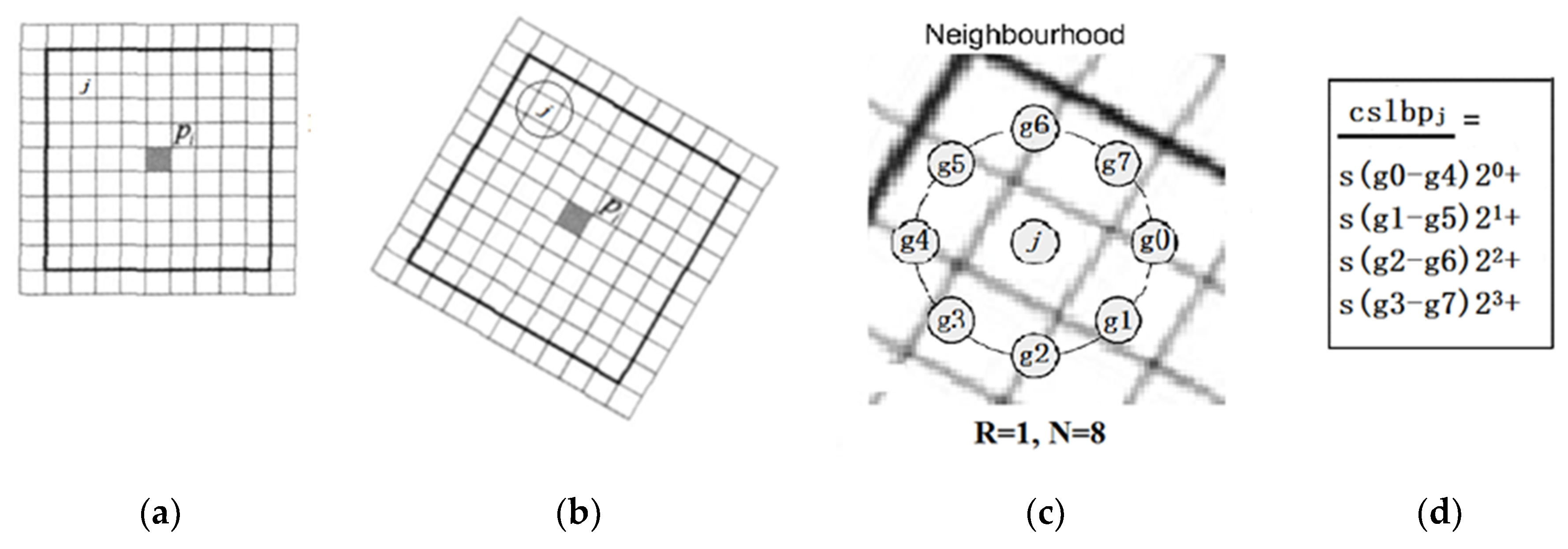

Figure 1.

Steps to compute center-symmetric local binary pattern (CS-LBP) features. (a) Area to compute the CS-LBP features centered around the keypoint; (b) the area after rotation; (c) CS-LBP feature for a neighborhood of 8 pixels of , when and ; (d) binary pattern for CS-LBP feature of .
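The thresholding in panels (c) and (d) can be illustrated with a minimal sketch, assuming the usual CS-LBP formulation for 8 neighbors: the four center-symmetric pixel pairs around a pixel are compared, and each pair contributes one bit. The function name `cs_lbp`, the radius of 1, the neighbor ordering, and the threshold value are illustrative assumptions, not values taken from the paper.

```python
import numpy as np

def cs_lbp(patch, threshold=0.01):
    """Return CS-LBP codes (0..15) for the interior pixels of a 2-D patch.

    Assumes 8 neighbors at radius 1 and intensities scaled to [0, 1];
    the threshold is a hypothetical choice for illustration.
    """
    p = np.asarray(patch, dtype=np.float64)
    h, w = p.shape
    # One offset per center-symmetric pair; the partner pixel is at (-dy, -dx).
    offsets = [(0, 1), (-1, 1), (-1, 0), (-1, -1)]
    codes = np.zeros((h - 2, w - 2), dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        a = p[1 + dy : h - 1 + dy, 1 + dx : w - 1 + dx]
        b = p[1 - dy : h - 1 - dy, 1 - dx : w - 1 - dx]
        # Set this bit where the pair difference exceeds the threshold.
        codes += ((a - b) > threshold).astype(np.int64) * (1 << bit)
    return codes

patch = np.array([[0.0, 0.0, 1.0],
                  [0.0, 0.5, 1.0],
                  [0.0, 0.0, 1.0]])
print(cs_lbp(patch))  # single interior pixel
```

Because only four pairs are compared, each code fits in 4 bits, so a histogram of codes over a region has 16 bins.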

Figure 2.

Flow chart for the CS-LBP description vector.

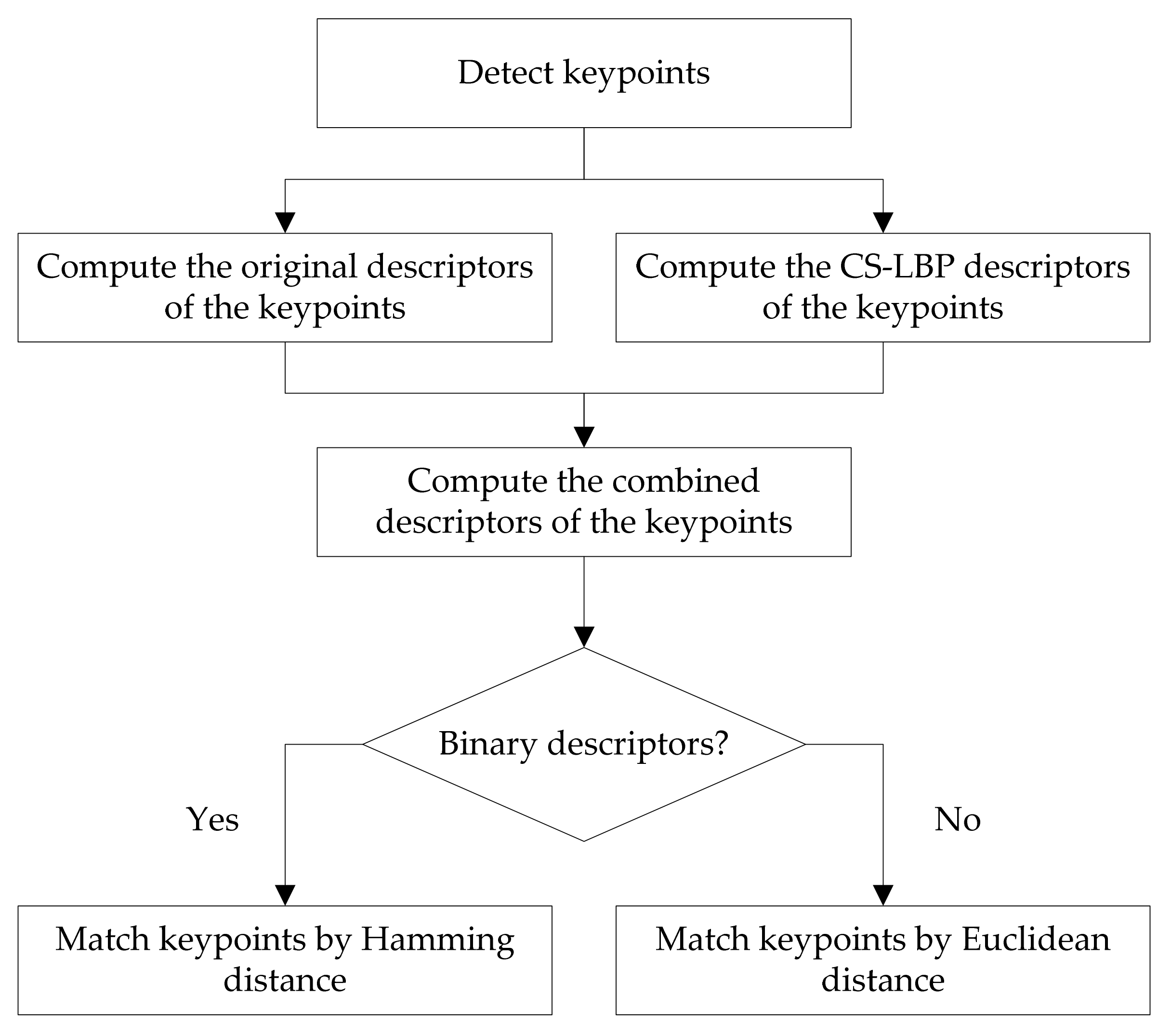

Figure 3.

Flow chart for calculating the combined descriptors and matching the keypoints.

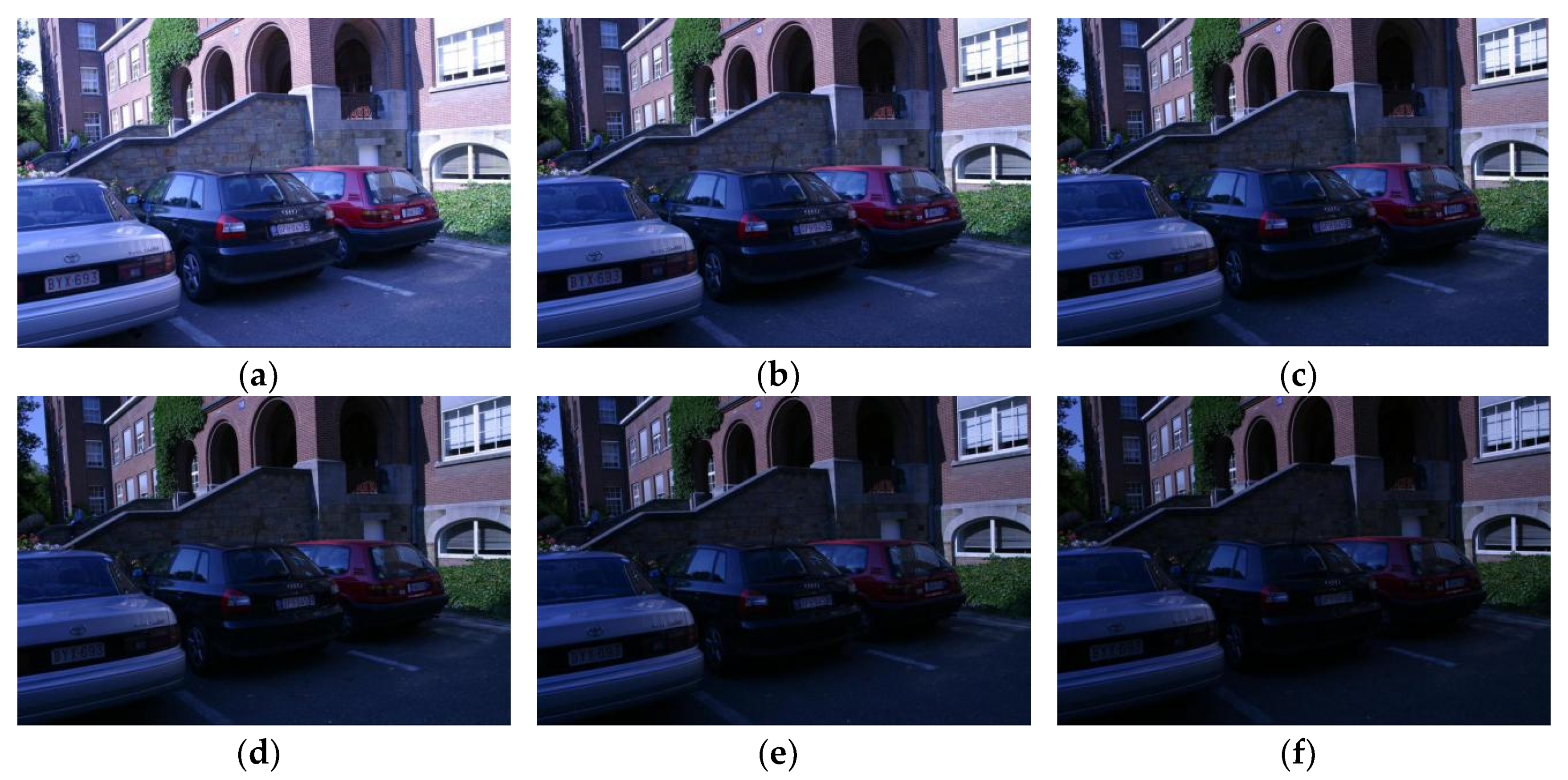

Figure 4.

Leuven sequence. (a) Leuven 1; (b) Leuven 2; (c) Leuven 3; (d) Leuven 4; (e) Leuven 5; and (f) Leuven 6.

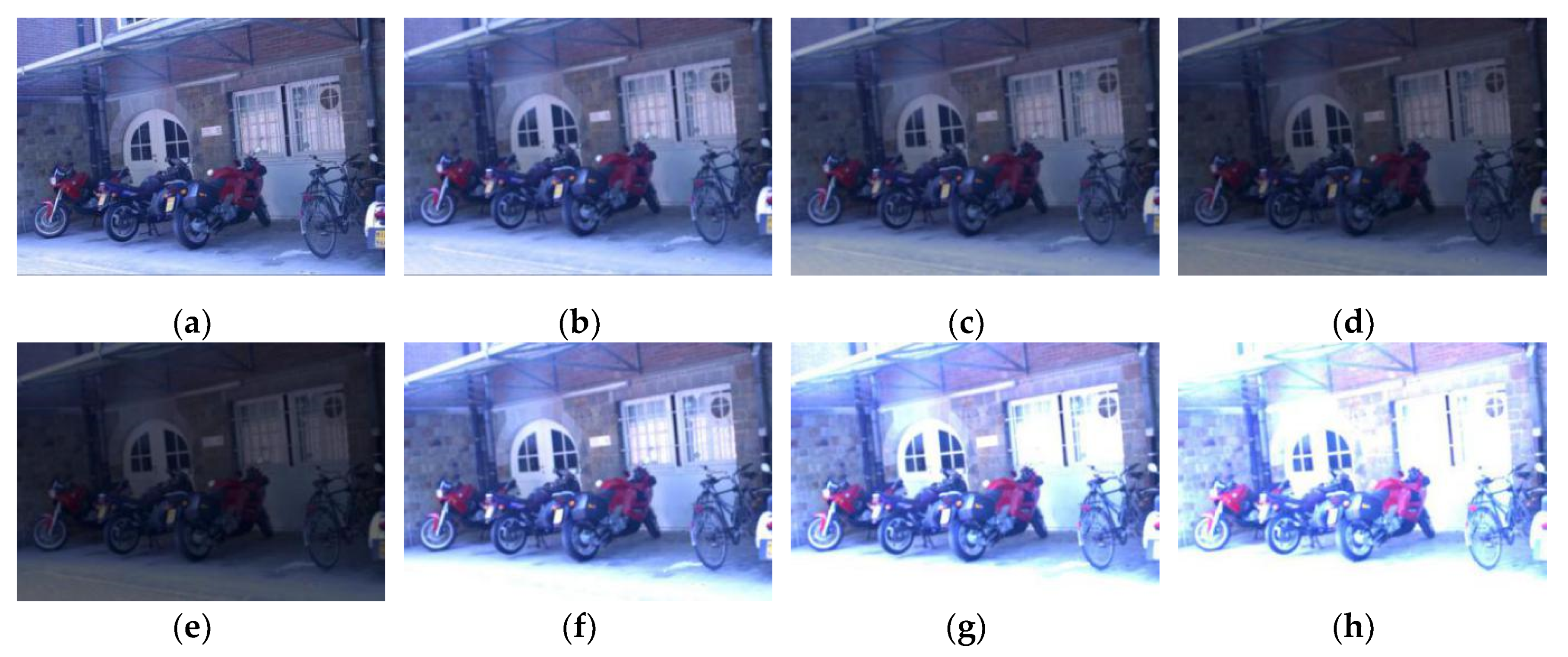

Figure 5.

Bikes sequence. (a) Normal Bikes; (b) New Bikes with increasing blur; (c) New Bikes in an underexposure of −1; (d) New Bikes in an underexposure of −2; (e) New Bikes in an underexposure of −3; (f) New Bikes in an overexposure of +1; (g) New Bikes in an overexposure of +2; and (h) New Bikes in an overexposure of +3.

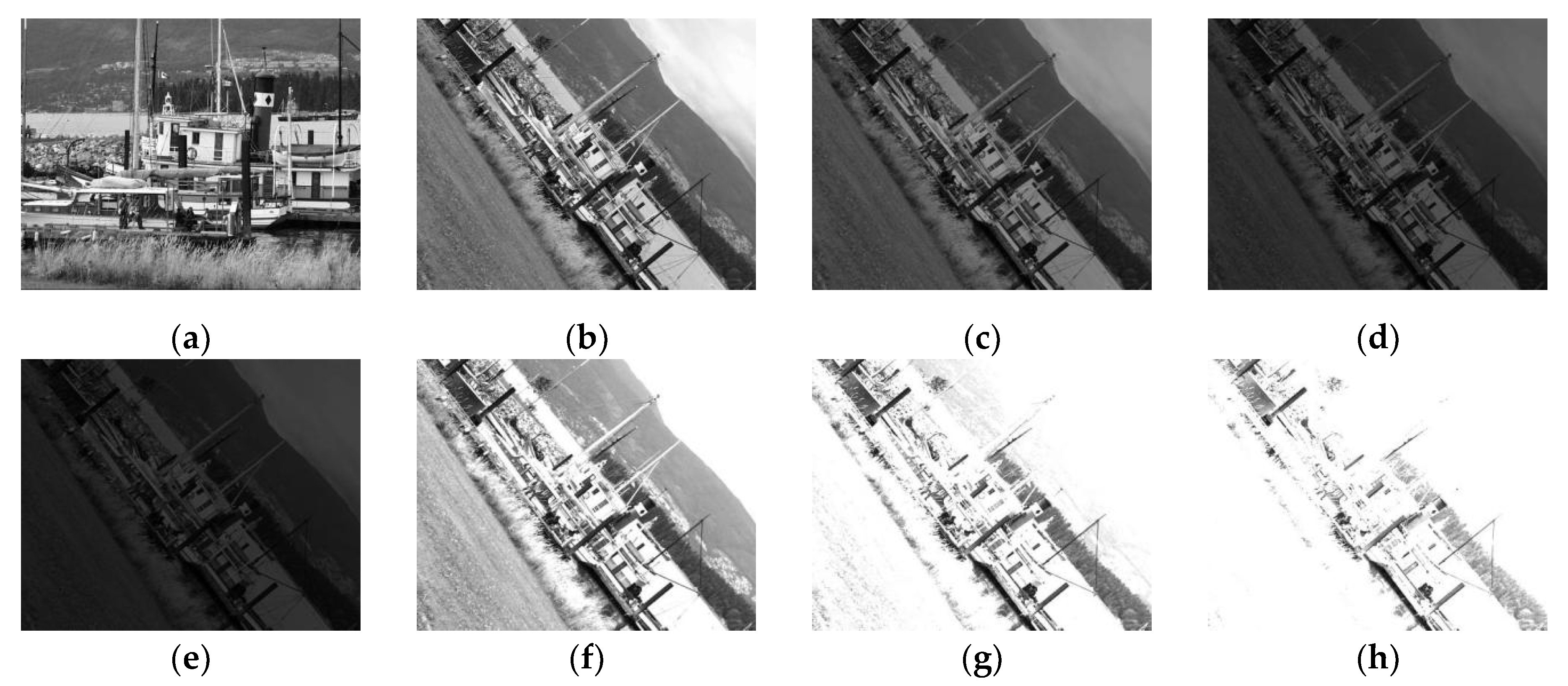

Figure 6.

Boat sequence. (a) Normal Boat; (b) New Boat with a scale reduction factor of 1.9 and a rotation angle of 45 degrees; (c) New Boat in an underexposure of −1; (d) New Boat in an underexposure of −2; (e) New Boat in an underexposure of −3; (f) New Boat in an overexposure of +1; (g) New Boat in an overexposure of +2; (h) New Boat in an overexposure of +3.

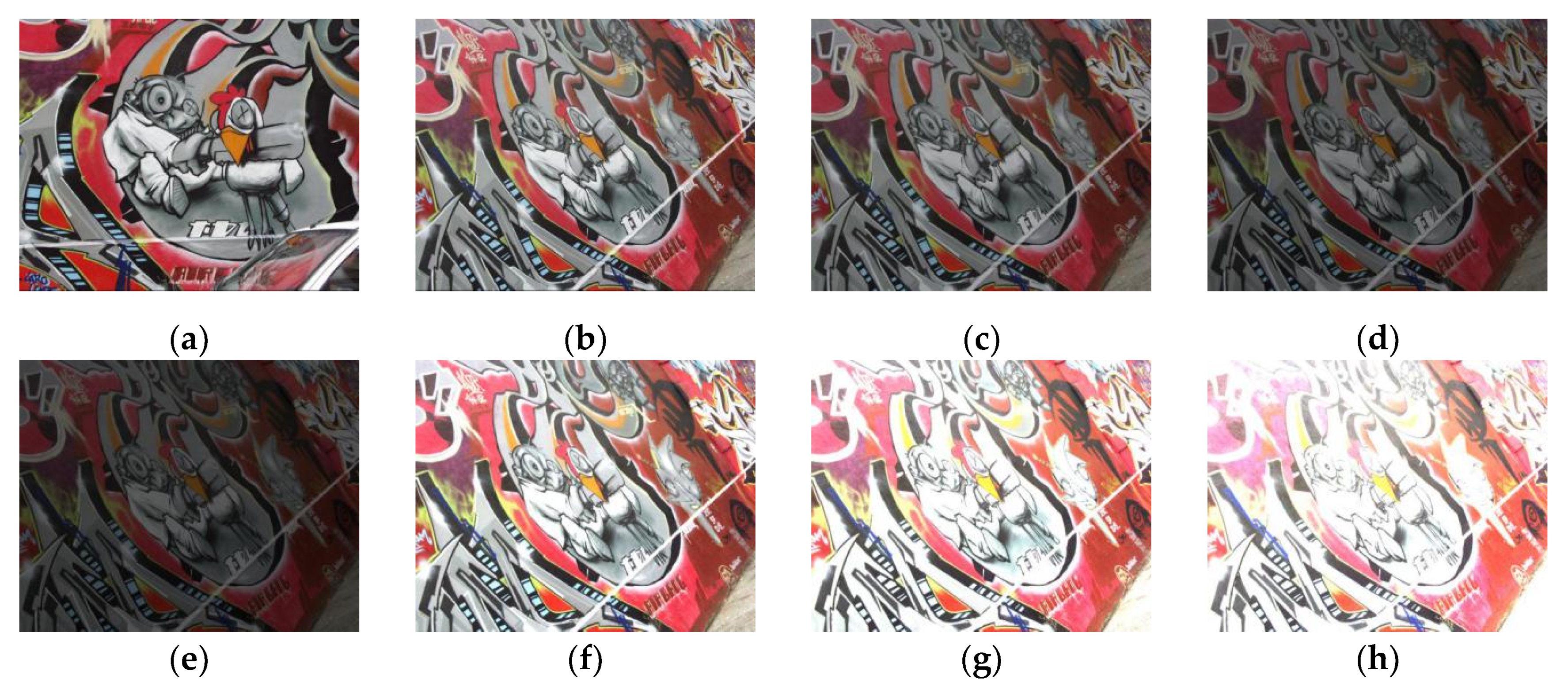

Figure 7.

Graffiti sequence. (a) Normal Graffiti; (b) New Graffiti with a viewpoint changing angle of 40 degrees; (c) New Graffiti in an underexposure of −1; (d) New Graffiti in an underexposure of −2; (e) New Graffiti in an underexposure of −3; (f) New Graffiti in an overexposure of +1; (g) New Graffiti in an overexposure of +2; (h) New Graffiti in an overexposure of +3.

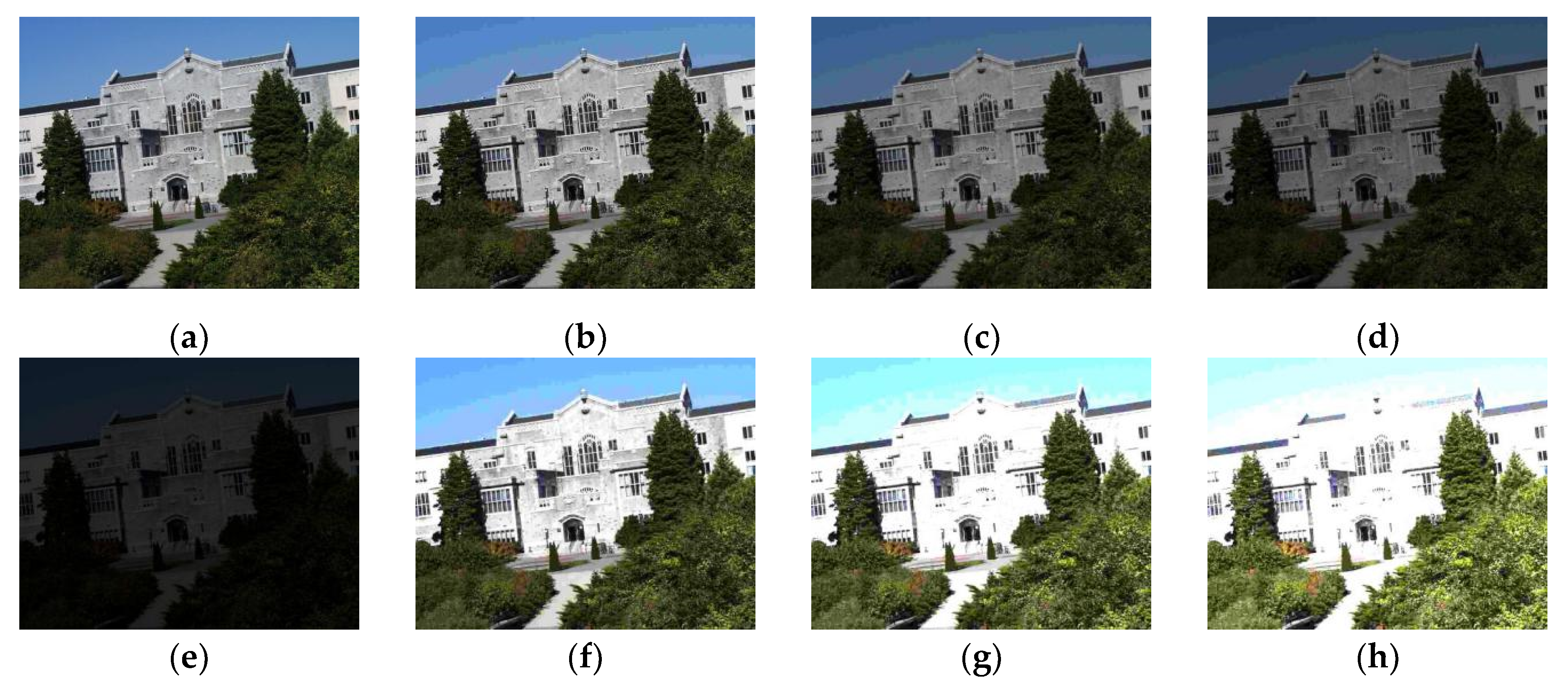

Figure 8.

Ubc sequence. (a) Normal Ubc; (b) New Ubc with a JPEG compression of 90%; (c) New Ubc in an underexposure of −1; (d) New Ubc in an underexposure of −2; (e) New Ubc in an underexposure of −3; (f) New Ubc in an overexposure of +1; (g) New Ubc in an overexposure of +2; (h) New Ubc in an overexposure of +3.

Figure 9.

Recall on the Leuven sequence for the eight original, the CSLBP, and the combined descriptors. The x-axis shows the matched images of the Leuven sequence, and the y-axis shows the recall of each descriptor. (a) SIFT features; (b) SURF features; (c) DAISY features; (d) BRISK features; (e) ORB features; (f) FREAK features; (g) KAZE features; (h) AKAZE features.
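This section does not restate how recall and precision are computed. The sketch below assumes the definitions commonly used in descriptor evaluation: recall is the number of correct matches divided by the number of ground-truth correspondences, and precision is the number of correct matches divided by the number of all returned matches. The function name and arguments are hypothetical.

```python
def recall_precision(num_correct, num_matches, num_correspondences):
    """Return (recall, precision) under the assumed standard definitions."""
    # Guard against empty denominators, e.g. when no matches are returned.
    recall = num_correct / num_correspondences if num_correspondences else 0.0
    precision = num_correct / num_matches if num_matches else 0.0
    return recall, precision

# Hypothetical counts: 30 correct out of 40 matches, 60 true correspondences.
print(recall_precision(30, 40, 60))
```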

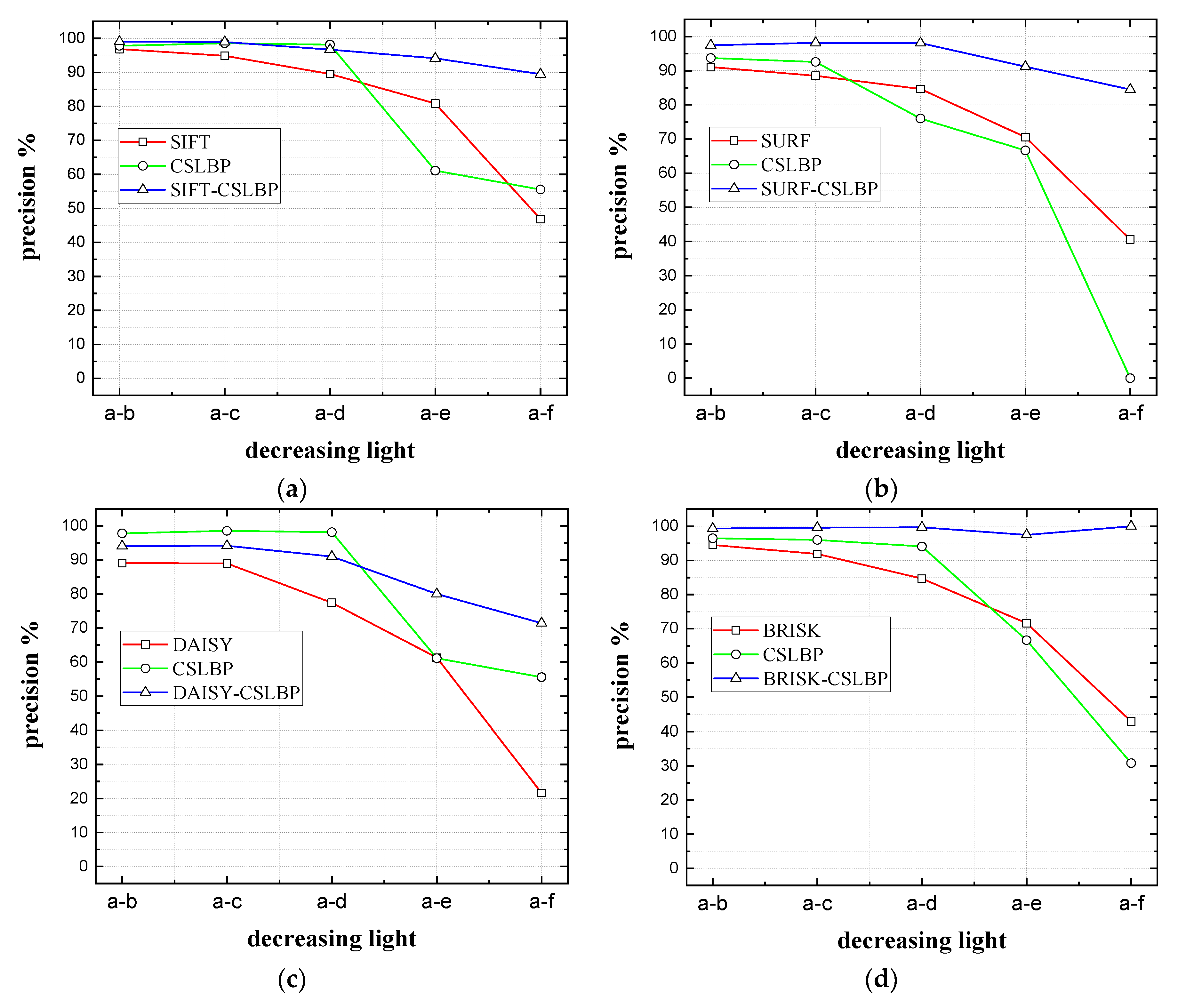

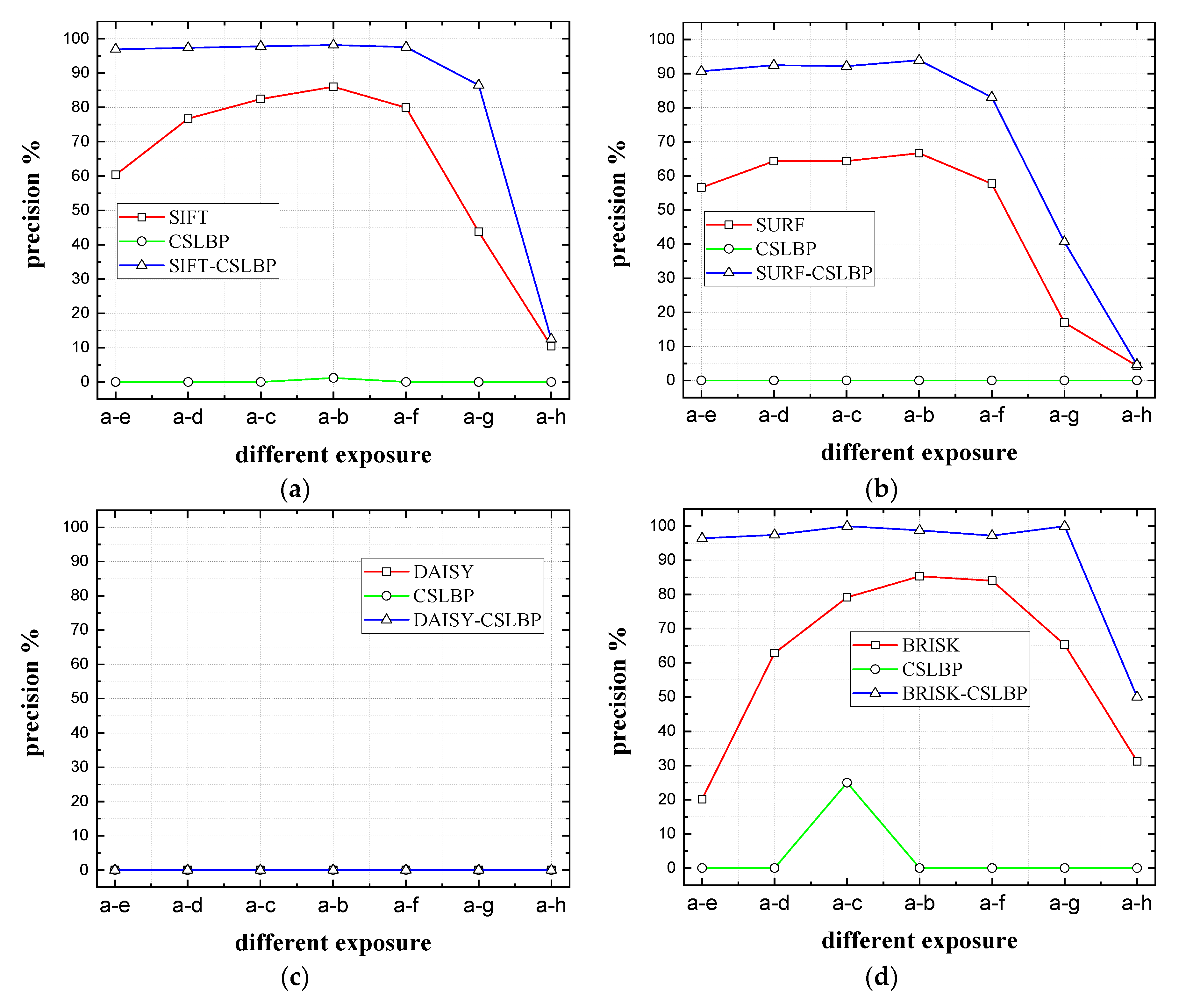

Figure 10.

Precision on the Leuven sequence for the eight original, the CSLBP, and the improved descriptors. The x-axis shows the matched images of the Leuven sequence, and the y-axis shows the precision of each descriptor. (a) SIFT features; (b) SURF features; (c) DAISY features; (d) BRISK features; (e) ORB features; (f) FREAK features; (g) KAZE features; and (h) AKAZE features.

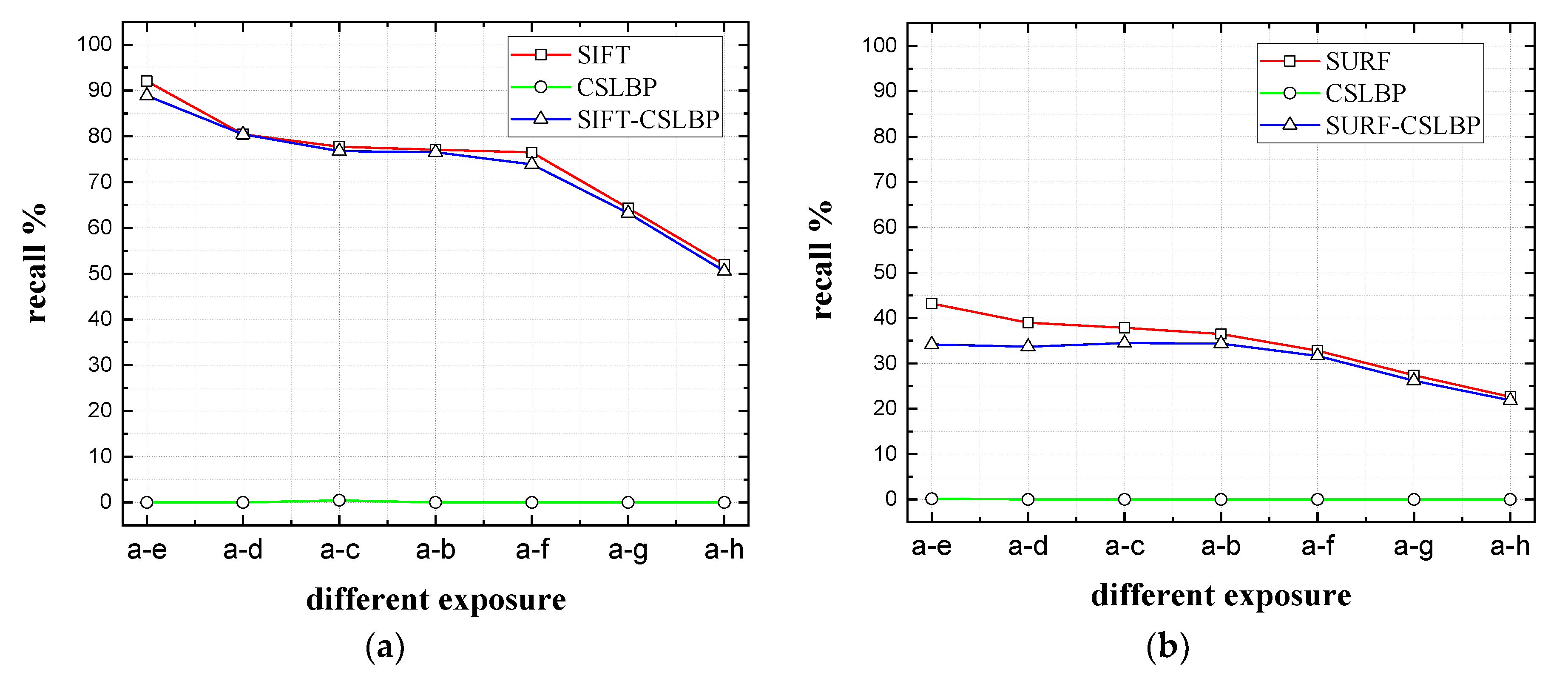

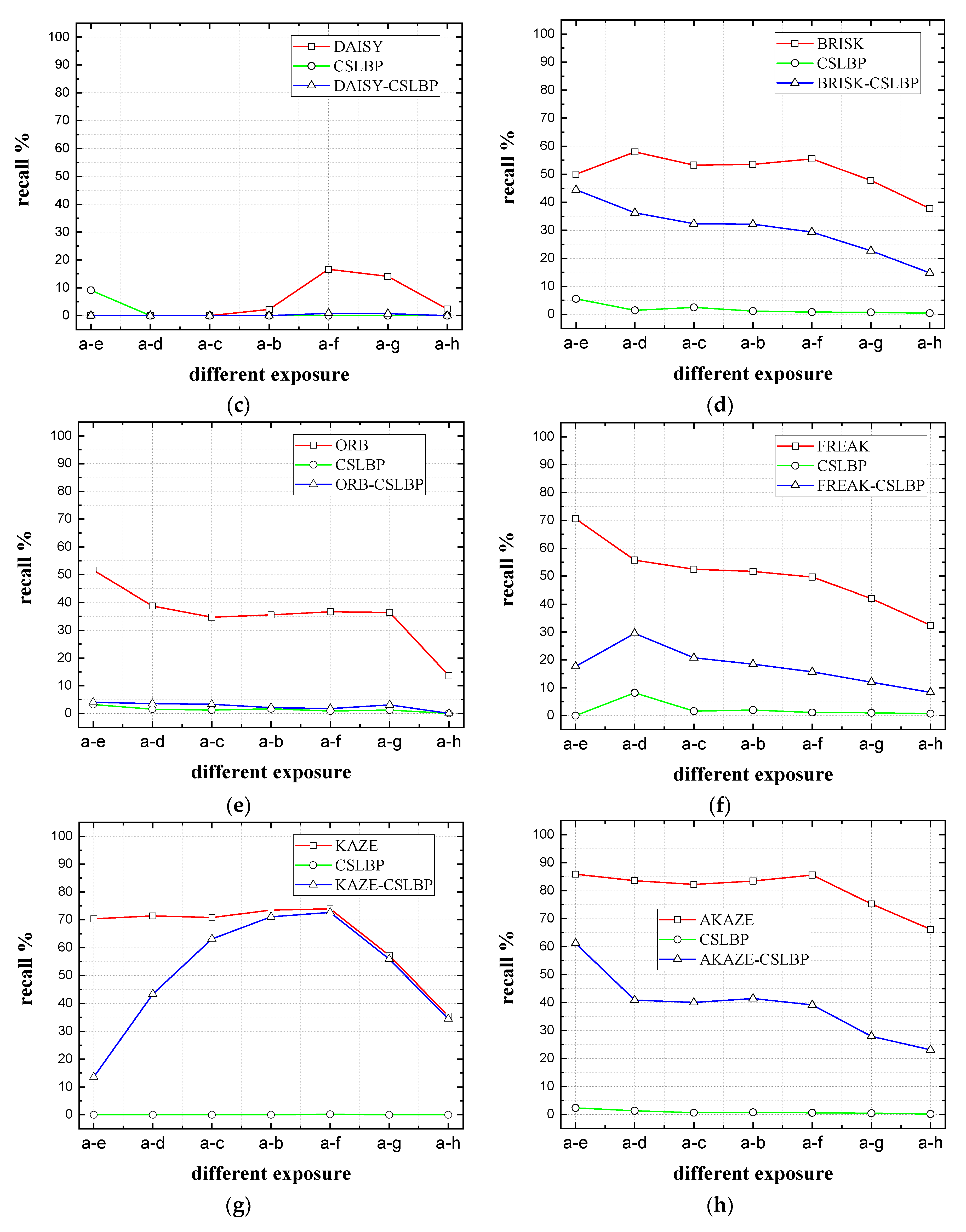

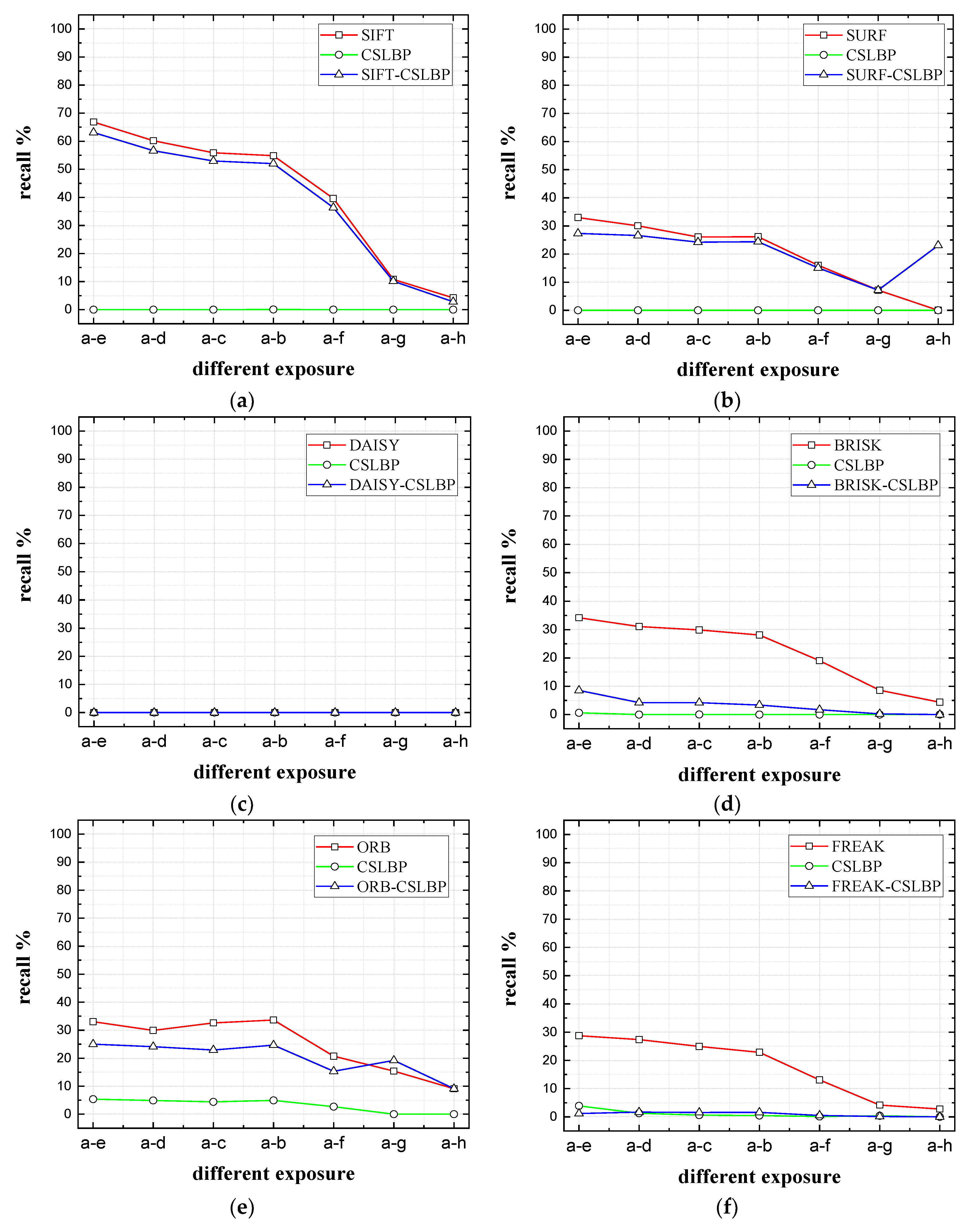

Figure 11.

Recall on the Bikes sequence for the eight original, the CSLBP, and the improved descriptors. The x-axis shows the matched images of the Bikes sequence, and the y-axis shows the recall of each descriptor. (a) SIFT features; (b) SURF features; (c) DAISY features; (d) BRISK features; (e) ORB features; (f) FREAK features; (g) KAZE features; (h) AKAZE features.

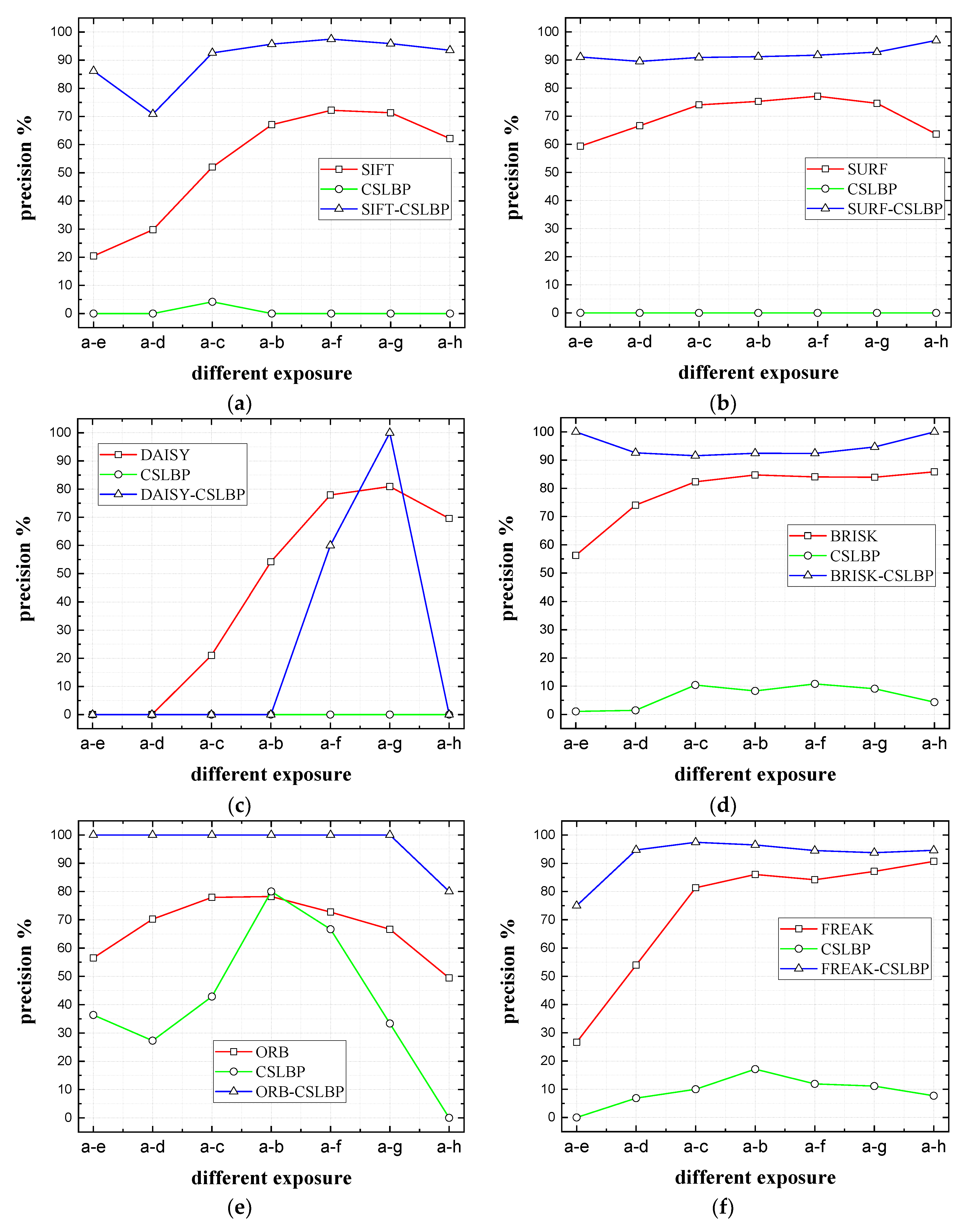

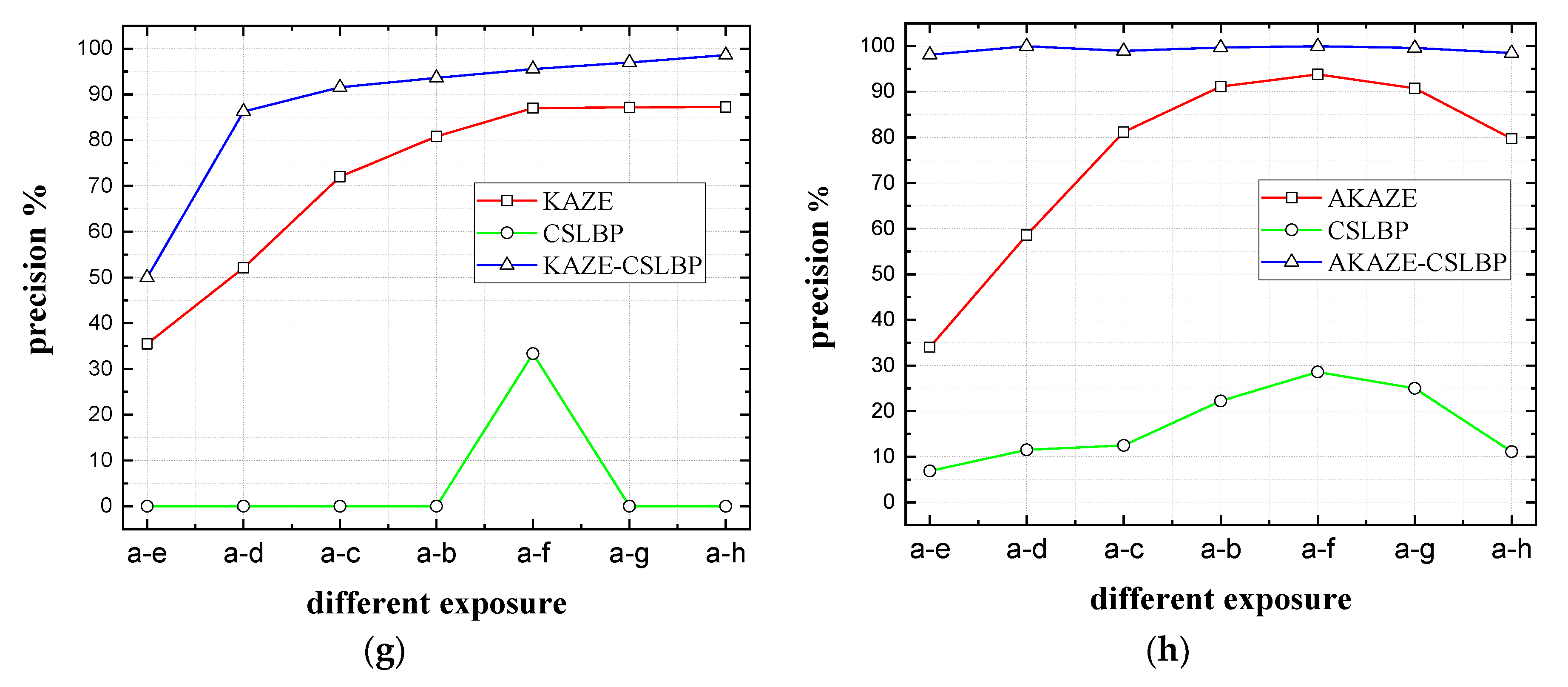

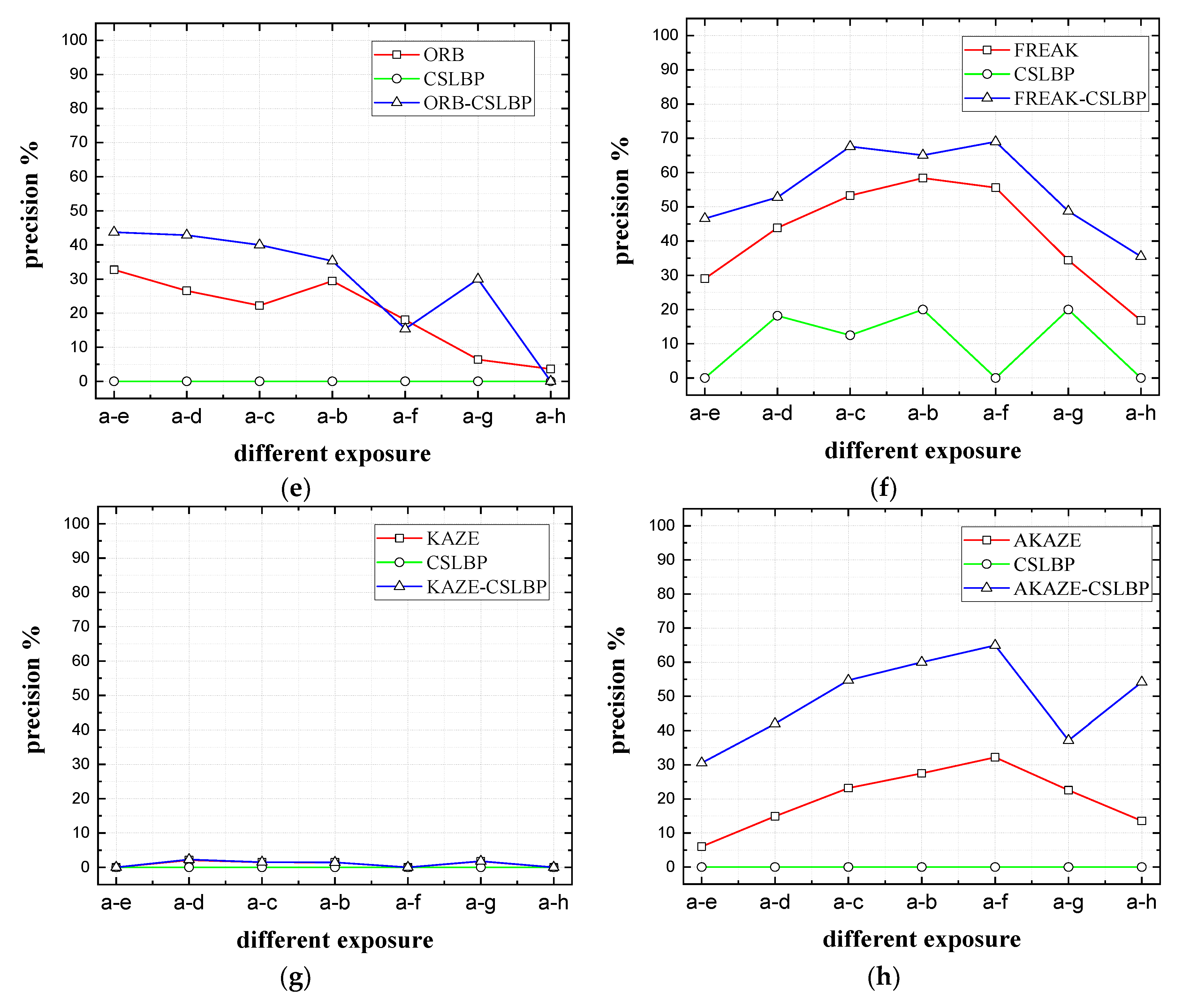

Figure 12.

Precision on the Bikes sequence for the eight original, the CSLBP, and the improved descriptors. The x-axis shows the matched images of the Bikes sequence, and the y-axis shows the precision of each descriptor. (a) SIFT features; (b) SURF features; (c) DAISY features; (d) BRISK features; (e) ORB features; (f) FREAK features; (g) KAZE features; (h) AKAZE features.

Figure 13.

Recall on the Boat sequence for the eight original, the CSLBP, and the combined descriptors. The x-axis shows the matched images of the Boat sequence, and the y-axis shows the recall of each descriptor. (a) SIFT features; (b) SURF features; (c) DAISY features; (d) BRISK features; (e) ORB features; (f) FREAK features; (g) KAZE features; (h) AKAZE features.

Figure 14.

Precision on the Boat sequence for the eight original, the CSLBP, and the combined descriptors. The x-axis shows the matched images of the Boat sequence, and the y-axis shows the precision of each descriptor. (a) SIFT features; (b) SURF features; (c) DAISY features; (d) BRISK features; (e) ORB features; (f) FREAK features; (g) KAZE features; and (h) AKAZE features.

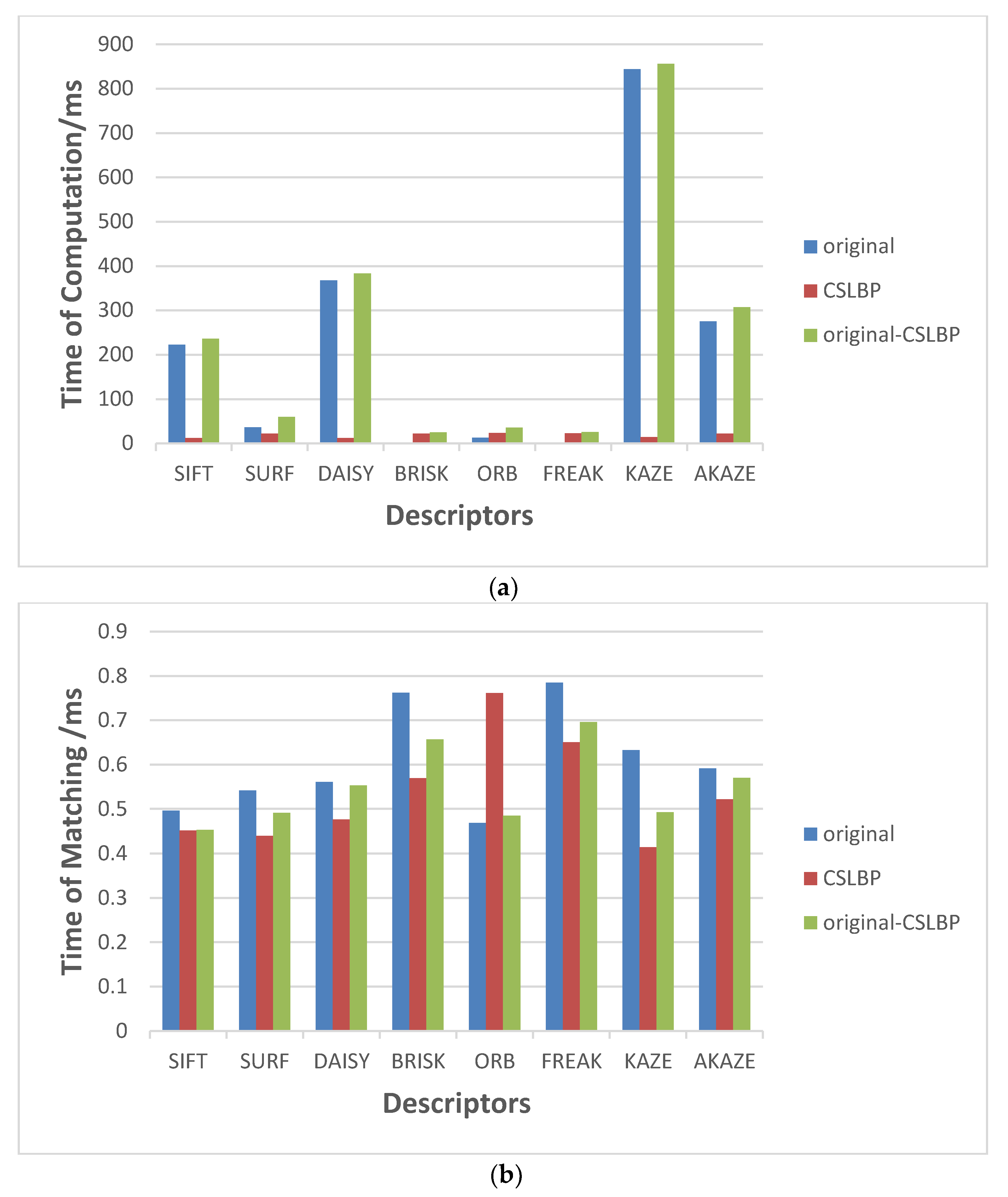

Figure 15.

Recall on the Graffiti sequence for the eight original, the CSLBP, and the combined descriptors. The x-axis shows the matched images of the Graffiti sequence, and the y-axis shows the recall of each descriptor. (a) SIFT features; (b) SURF features; (c) DAISY features; (d) BRISK features; (e) ORB features; (f) FREAK features; (g) KAZE features; and (h) AKAZE features.

Figure 16.

Precision on the Graffiti sequence for the eight original, the CSLBP, and the combined descriptors. The x-axis shows the matched images of the Graffiti sequence, and the y-axis shows the precision of each descriptor. (a) SIFT features; (b) SURF features; (c) DAISY features; (d) BRISK features; (e) ORB features; (f) FREAK features; (g) KAZE features; (h) AKAZE features.

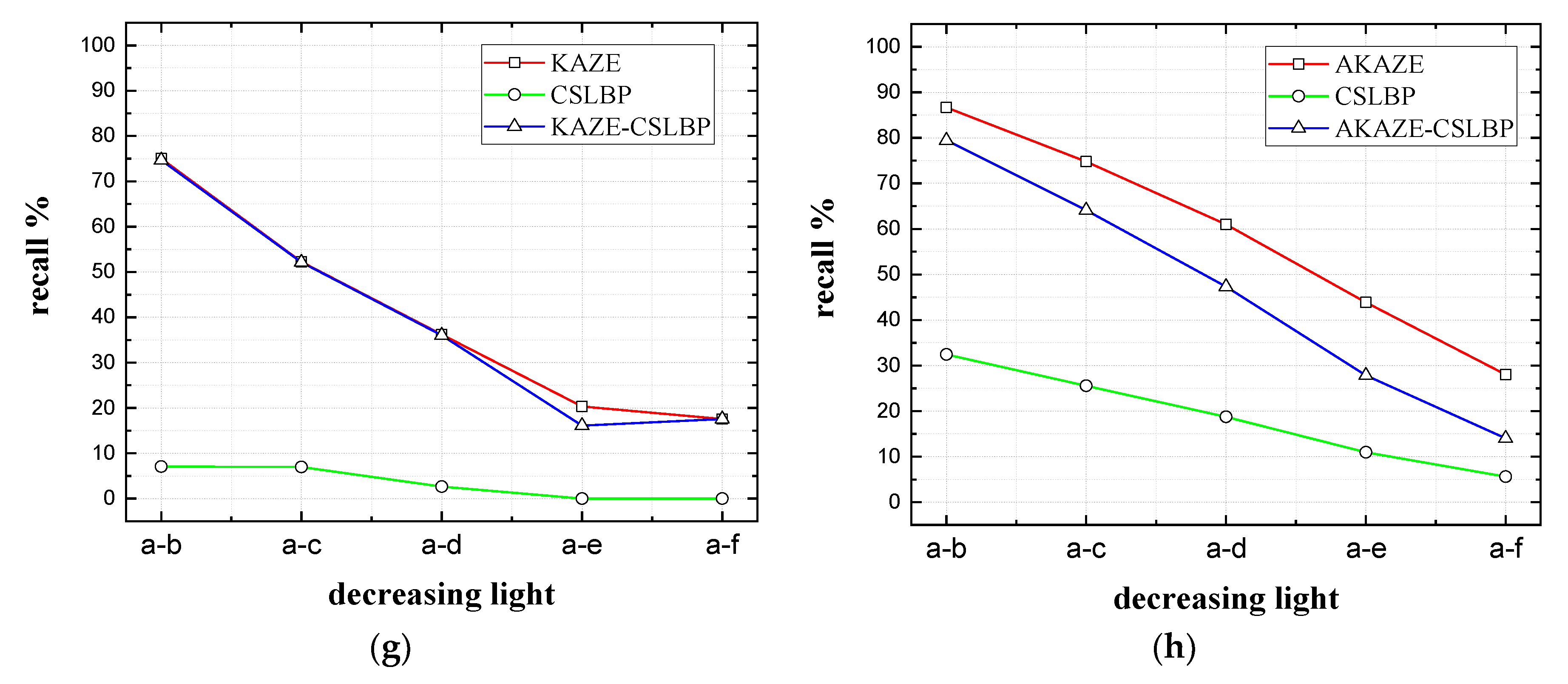

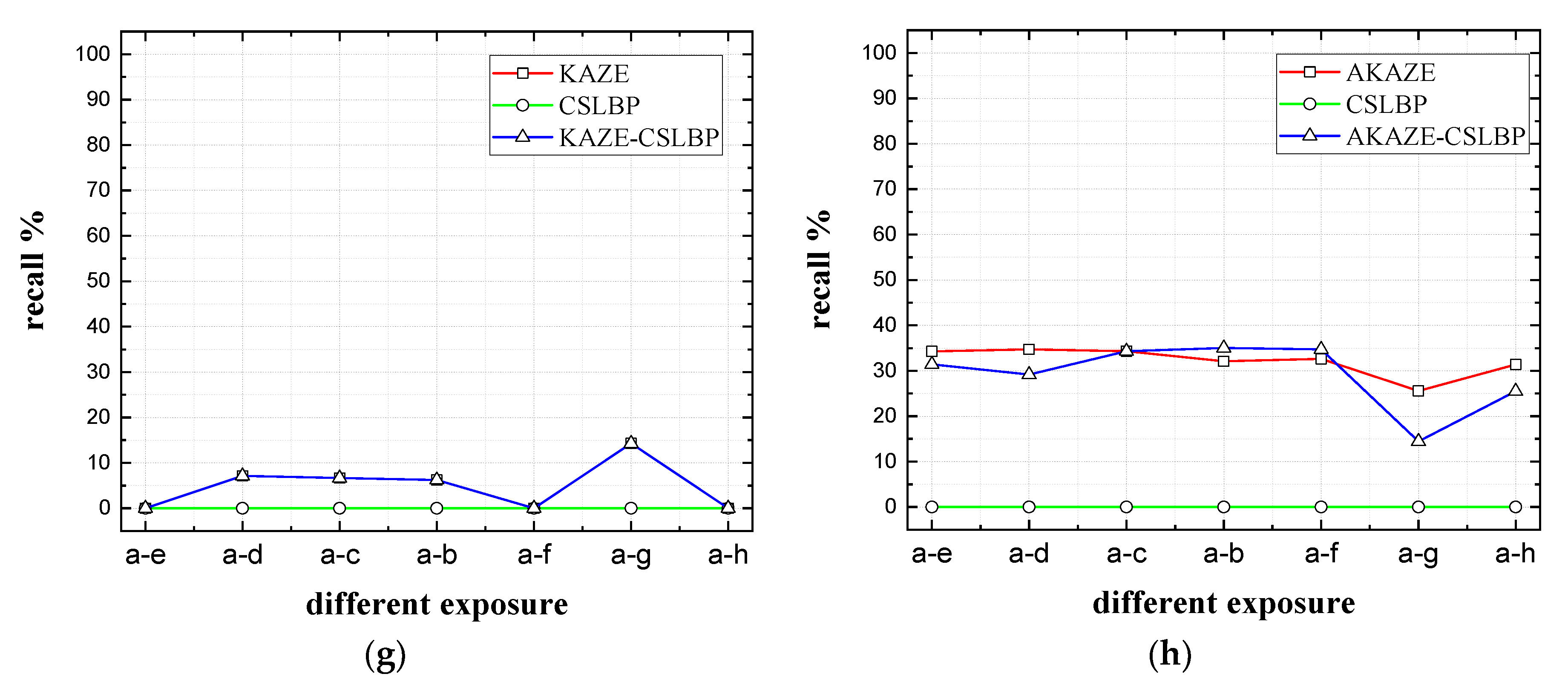

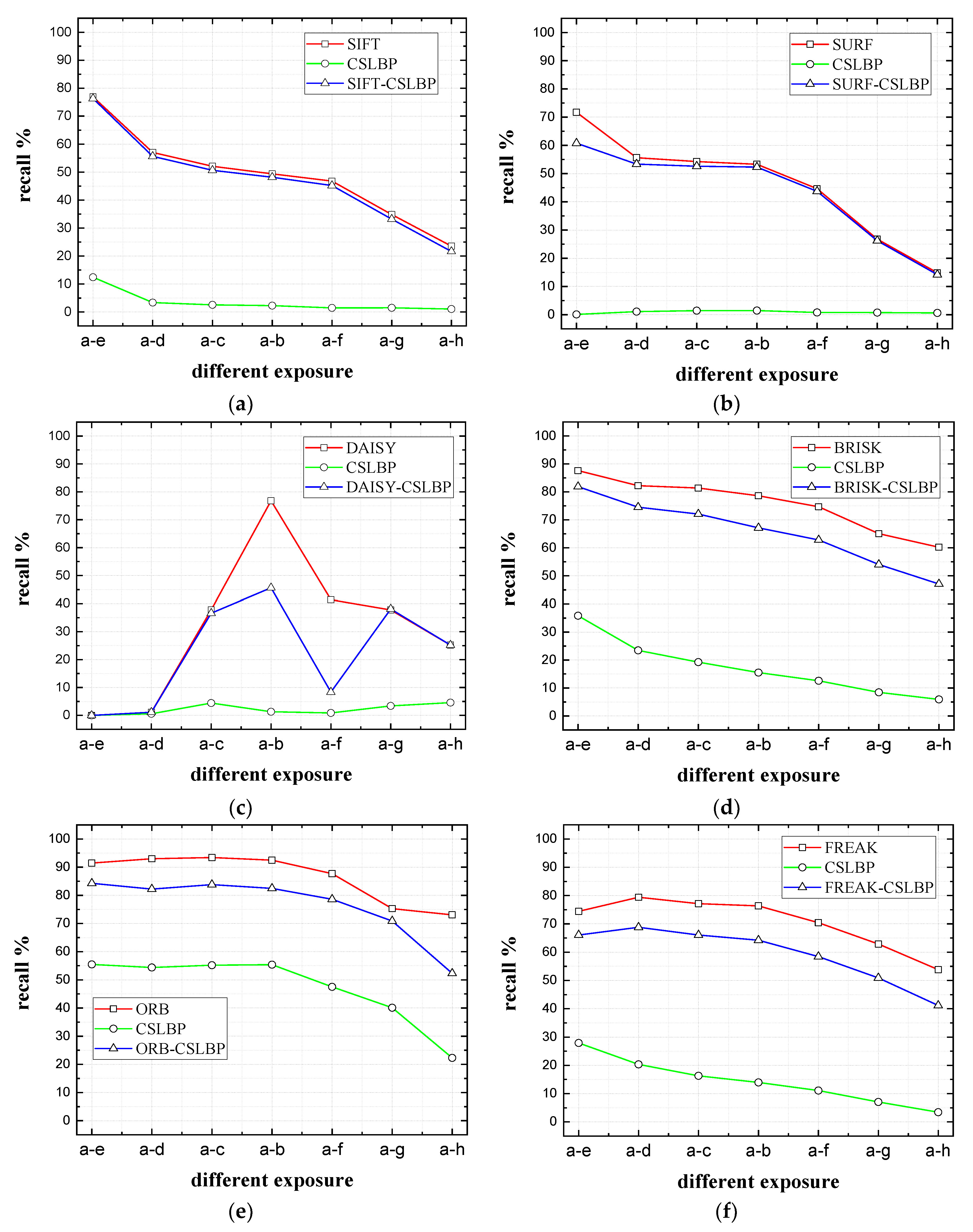

Figure 17.

Recall on the Ubc sequence for the eight original, the CSLBP, and the combined descriptors. The x-axis shows the matched images of the Ubc sequence, and the y-axis shows the recall of each descriptor. (a) SIFT features; (b) SURF features; (c) DAISY features; (d) BRISK features; (e) ORB features; (f) FREAK features; (g) KAZE features; (h) AKAZE features.

Figure 18.

Precision on the Ubc sequence for the eight original, the CSLBP, and the combined descriptors. The x-axis shows the matched images of the Ubc sequence, and the y-axis shows the precision of each descriptor. (a) SIFT features; (b) SURF features; (c) DAISY features; (d) BRISK features; (e) ORB features; (f) FREAK features; (g) KAZE features; (h) AKAZE features.

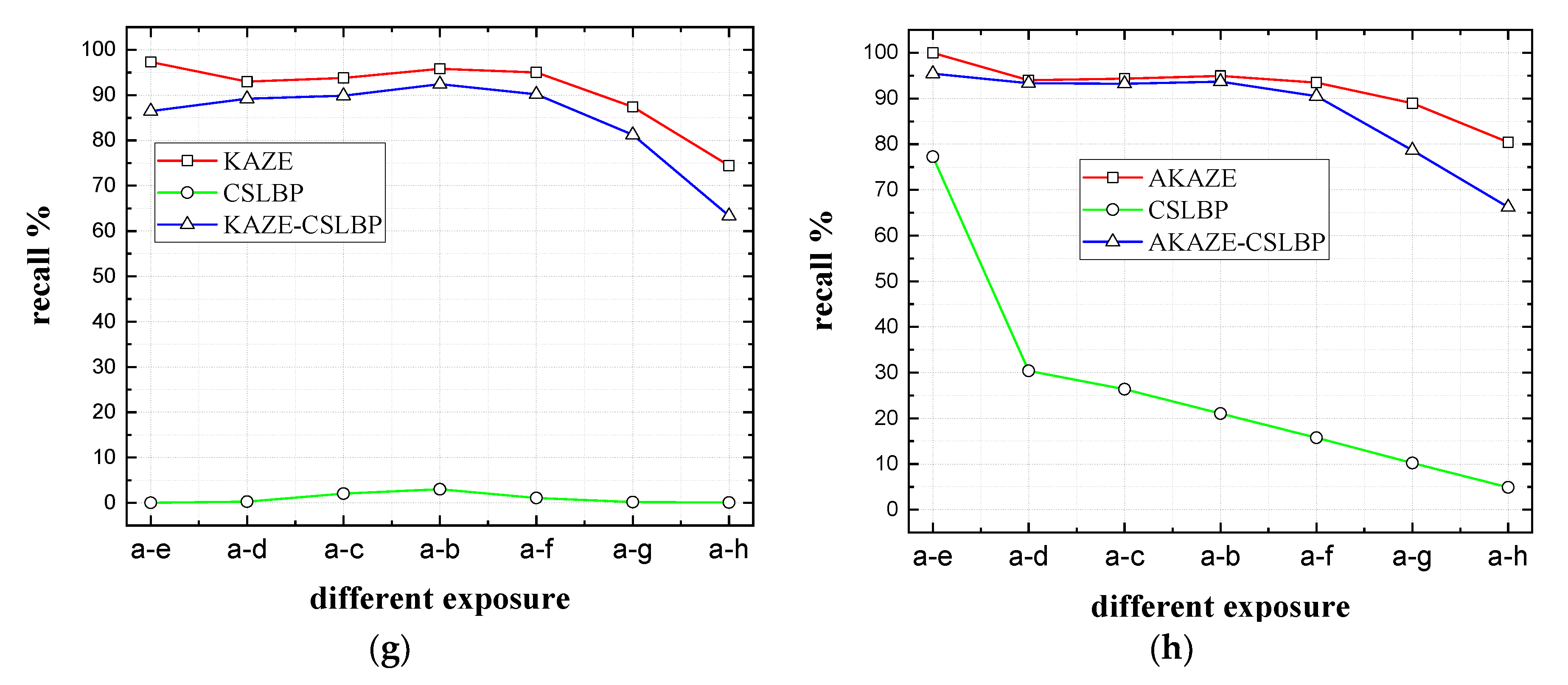

Figure 19.

Comparison results of computational and matching time consumption for the eight original, the CSLBP and the improved descriptors. The blue histograms represent the time consumption of the original descriptors, the red histograms denote the time consumption of the CSLBP descriptors and the green histograms indicate the time consumption of the improved descriptors. (a) Computational time consumption of 30 descriptors; and (b) matching time consumption of 30 descriptors against 30 descriptors.

Table 1.

The reduced values of recall for the CSLBP and the combined descriptors compared to the original descriptors on the Leuven sequence.

| Features | Descriptors | a-b | a-c | a-d | a-e | a-f |
|---|---|---|---|---|---|---|
| SIFT | CSLBP | −73.6% | −61.0% | −49.1% | −34.3% | −23.2% |
| | SIFT-CSLBP | −0.2% | −1.1% | −0.9% | −1.0% | 1.7% |
| SURF | CSLBP | −55.0% | −45.0% | −31.5% | −22.6% | −18.5% |
| | SURF-CSLBP | −1.1% | −0.7% | −0.2% | −0.5% | −2.3% |
| DAISY | CSLBP | −68.7% | −43.1% | −19.4% | −19.6% | −10.0% |
| | DAISY-CSLBP | 16.6% | 15.4% | 14.5% | 1.4% | −10.0% |
| BRISK | CSLBP | −37.7% | −31.9% | −31.2% | −35.9% | −43.1% |
| | BRISK-CSLBP | −4.6% | −2.8% | −4.0% | −12.7% | −13.8% |
| ORB | CSLBP | −22.1% | −13.1% | 6.9% | 0 | 22.2% |
| | ORB-CSLBP | −12.6% | −7.9% | −3.4% | 0 | 0 |
| FREAK | CSLBP | −34.7% | −27.2% | −22.6% | −21.4% | −15.6% |
| | FREAK-CSLBP | −5.9% | −2.4% | −0.5% | 3.1% | −5.2% |
| KAZE | CSLBP | −67.9% | −45.3% | −33.6% | −20.3% | −17.6% |
| | KAZE-CSLBP | −0.3% | −0.1% | −0.1% | −4.2% | 0 |
| AKAZE | CSLBP | −54.2% | −49.2% | −42.2% | −32.9% | −22.4% |
| | AKAZE-CSLBP | −7.2% | −10.7% | −13.6% | −16.0% | −14.0% |

Table 2.

The improved values of precision for the CSLBP and the combined descriptors compared to the original descriptors on the Leuven sequence.

| Features | Descriptors | a-b | a-c | a-d | a-e | a-f |
|---|---|---|---|---|---|---|
| SIFT | CSLBP | 1.0% | 3.7% | 8.6% | −19.7% | 8.7% |
| | SIFT-CSLBP | 2.2% | 4.0% | 7.2% | 13.3% | 42.6% |
| SURF | CSLBP | 2.6% | 4.1% | −8.6% | −3.9% | −40.6% |
| | SURF-CSLBP | 6.4% | 9.7% | 13.4% | 20.6% | 43.9% |
| DAISY | CSLBP | 8.7% | 9.6% | 20.7% | −0.2% | 33.9% |
| | DAISY-CSLBP | 5.0% | 5.2% | 13.6% | 18.7% | 49.8% |
| BRISK | CSLBP | 2.0% | 4.1% | 9.4% | −5.0% | −12.1% |
| | BRISK-CSLBP | 4.8% | 7.7% | 15.0% | 25.8% | 57.1% |
| ORB | CSLBP | −0.5% | 4.0% | −1.5% | 0 | 50.0% |
| | ORB-CSLBP | 12.6% | 12.2% | 16.7% | 50% | 75.0% |
| FREAK | CSLBP | 5.4% | 10.7% | 12.2% | 18.3% | 19.0% |
| | FREAK-CSLBP | 5.8% | 11.0% | 17.8% | 36.8% | 63.5% |
| KAZE | CSLBP | 7.1% | 9.7% | 24.5% | −64.1% | −43.4% |
| | KAZE-CSLBP | 5.8% | 13.0% | 20.1% | 7.6% | 37.9% |
| AKAZE | CSLBP | 0.1% | 0.1% | 1.3% | −4.0% | −22.4% |
| | AKAZE-CSLBP | 0.1% | 1.8% | 4.7% | 10.4% | 16.8% |

Table 3.

The reduced values of recall for the CSLBP and the combined descriptors compared to the original descriptors on the Bikes sequence.

| Features | Descriptors | a-e | a-d | a-c | a-b | a-f | a-g | a-h |
|---|---|---|---|---|---|---|---|---|
| SIFT | CSLBP | −92.1% | −80.5% | −77.3% | −77.1% | −76.5% | −64.3% | −51.9% |
| | SIFT-CSLBP | −3.2% | 0 | −0.9% | −0.6% | −2.6% | −1.1% | −1.4% |
| SURF | CSLBP | −43.1% | −39.0% | −37.9% | −36.5% | −32.8% | −27.4% | −22.7% |
| | SURF-CSLBP | −9.0% | −5.3% | −3.4% | −2.1% | −1.1% | −1.2% | −0.8% |
| DAISY | CSLBP | 9.1% | 0 | 0 | −2.2% | −16.7% | −14.1% | −2.4% |
| | DAISY-CSLBP | 0 | 0 | 0 | −2.2% | −15.8% | −13.4% | −2.4% |
| BRISK | CSLBP | −44.4% | −56.5% | −50.7% | −52.3% | −54.7% | −47.1% | −37.3% |
| | BRISK-CSLBP | −5.6% | −21.7% | −20.9% | −21.3% | −26.1% | −25.1% | −23.0% |
| ORB | CSLBP | −48.4% | −37.2% | −33.5% | −33.9% | −35.8% | −35.2% | −13.6% |
| | ORB-CSLBP | −47.6% | −35.2% | −31.4% | −33.5% | −34.9% | −33.3% | −13.6% |
| FREAK | CSLBP | −70.6% | −47.5% | −50.8% | −49.7% | −48.5% | −41.0% | −31.7% |
| | FREAK-CSLBP | −52.9% | −26.2% | −31.7% | −33.2% | −33.9% | −30.0% | −24.1% |
| KAZE | CSLBP | −70.4% | −71.4% | −70.8% | −73.5% | −73.8% | −57.3% | −35.4% |
| | KAZE-CSLBP | −56.8% | −28.1% | −7.7% | −2.4% | −1.3% | −1.3% | −1.0% |
| AKAZE | CSLBP | −83.5% | −82.2% | −81.6% | −82.7% | −85.0% | −74.8% | −66.0% |
| | AKAZE-CSLBP | −24.7% | −42.7% | −42.2% | −42.0% | −46.4% | −47.3% | −43.1% |

Table 4.

The improved values of precision for the CSLBP and the combined descriptors compared to the original descriptors on the Bikes sequence.

| Features | Descriptors | a-e | a-d | a-c | a-b | a-f | a-g | a-h |
|---|---|---|---|---|---|---|---|---|
| SIFT | CSLBP | −20.4% | −29.8% | −47.9% | −67.1% | −72.2% | −71.3% | −62.2% |
| | SIFT-CSLBP | 65.7% | 41.1% | 40.5% | 28.6% | 25.3% | 24.5% | 31.4% |
| SURF | CSLBP | −59.3% | −66.6% | −74.0% | −75.3% | −77.1% | −74.6% | −63.6% |
| | SURF-CSLBP | 31.7% | 22.9% | 16.9% | 15.9% | 14.6% | 18.2% | 33.3% |
| DAISY | CSLBP | 0.1% | 0 | −21.1% | −54.2% | −77.9% | −80.9% | −69.6% |
| | DAISY-CSLBP | 0 | 0 | −21.1% | −54.2% | −17.9% | 19.1% | −69.6% |
| BRISK | CSLBP | −55.2% | −72.6% | −71.9% | −76.4% | −73.2% | −74.8% | −81.5% |
| | BRISK-CSLBP | 43.8% | 18.5% | 9.2% | 7.7% | 8.3% | 10.7% | 14.1% |
| ORB | CSLBP | −20.2% | −43.0% | −35.1% | 1.8% | −6.1% | −33.3% | −49.5% |
| | ORB-CSLBP | 43.5% | 29.8% | 22.1% | 21.8% | 27.3% | 33.3% | 30.5% |
| FREAK | CSLBP | −26.7% | −47.1% | −71.4% | −68.9% | −72.3% | −76.0% | −83.0% |
| | FREAK-CSLBP | 48.3% | 40.8% | 16.1% | 10.5% | 10.4% | 6.6% | 3.9% |
| KAZE | CSLBP | −35.5% | −52.1% | −72.0% | −80.8% | −53.7% | −87.2% | −87.2% |
| | KAZE-CSLBP | 14.5% | 34.2% | 19.5% | 12.8% | 8.5% | 9.8% | 11.3% |
| AKAZE | CSLBP | −27.1% | −47.1% | −68.7% | −68.9% | −65.3% | −65.8% | −68.6% |
| | AKAZE-CSLBP | 64.1% | 41.4% | 17.7% | 8.5% | 6.2% | 8.8% | 18.8% |

Table 5.

The reduced values of recall for the CSLBP and the combined descriptors compared to the original descriptors on the Boat sequence.

| Features | Descriptors | a-e | a-d | a-c | a-b | a-f | a-g | a-h |
|---|---|---|---|---|---|---|---|---|
| SIFT | CSLBP | −66.8% | −60.2% | −55.9% | −54.8% | −39.6% | −10.8% | −4.2% |
| | SIFT-CSLBP | −3.7% | −3.6% | −2.9% | −2.8% | −3.2% | −0.6% | −1.4% |
| SURF | CSLBP | −33.0% | −30.1% | −26.1% | −26.2% | −16.0% | −7.2% | 0 |
| | SURF-CSLBP | −5.6% | −3.5% | −1.8% | −1.8% | −0.9% | 0 | 23.1% |
| DAISY | CSLBP | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | DAISY-CSLBP | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| BRISK | CSLBP | −33.5% | −31.1% | −29.8% | −28.1% | −19.0% | −8.6% | −4.4% |
| | BRISK-CSLBP | −25.6% | −26.8% | −25.7% | −24.7% | −17.3% | −8.4% | −4.4% |
| ORB | CSLBP | −27.7% | −25.0% | −28.2% | −28.7% | −18.0% | −15.4% | −9.1% |
| | ORB-CSLBP | −8.0% | −5.8% | −9.7% | −9.0% | −5.4% | 3.8% | 0 |
| FREAK | CSLBP | −24.9% | −26.1% | −24.3% | −22.4% | −12.9% | −3.8% | −2.8% |
| | FREAK-CSLBP | −27.5% | −25.7% | −23.4% | −21.3% | −12.6% | −4.0% | −2.8% |
| KAZE | CSLBP | −20.0% | −13.3% | −15.8% | −19.2% | −27.8% | 0 | 0 |
| | KAZE-CSLBP | −20.0% | 0 | 0 | 0 | 0 | 0 | 0 |
| AKAZE | CSLBP | −48.0% | −45.4% | −42.1% | −43.5% | −32.8% | −10.8% | −3.8% |
| | AKAZE-CSLBP | −40.0% | −37.5% | −33.6% | −34.9% | −29.5% | −10.1% | 1.5% |

Table 6.

The improved values of precision for the CSLBP and the combined descriptors compared to the original descriptors on the Boat sequence.

| Features | Descriptors | a-e | a-d | a-c | a-b | a-f | a-g | a-h |
|---|---|---|---|---|---|---|---|---|
| SIFT | CSLBP | −60.3% | −76.7% | −82.4% | −84.8% | −79.9% | −43.8% | −10.5% |
| | SIFT-CSLBP | 36.6% | 20.6% | 15.3% | 12.1% | 17.6% | 42.7% | 2.0% |
| SURF | CSLBP | −56.6% | −64.3% | −64.3% | −66.7% | −57.7% | −17.0% | −4.3% |
| | SURF-CSLBP | 34.1% | 28.1% | 27.8% | 27.3% | 25.4% | 23.6% | 0.3% |
| DAISY | CSLBP | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | DAISY-CSLBP | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| BRISK | CSLBP | −20.2% | −62.8% | −54.2% | −85.3% | −84.0% | −65.3% | −31.3% |
| | BRISK-CSLBP | 76.2% | 34.6% | 20.8% | 13.4% | 13.2% | 34.7% | 18.8% |
| ORB | CSLBP | −43.2% | −40.8% | −47.6% | −48.1% | −46.0% | −26.5% | −6.8% |
| | ORB-CSLBP | 10.3% | 8.5% | 3.6% | 4.2% | 19.1% | 18.9% | 43.2% |
| FREAK | CSLBP | −15.5% | −53.0% | −68.7% | −75.6% | −77.3% | −41.9% | −14.8% |
| | FREAK-CSLBP | 81.3% | 37.8% | 24.4% | 18.1% | 21.5% | 57.0% | 35.2% |
| KAZE | CSLBP | −7.1% | −6.0% | −9.2% | −12.3% | −27.3% | −5.6% | 0 |
| | KAZE-CSLBP | −7.1% | 34.0% | 50.8% | 37.7% | 63.6% | −5.6% | 0 |
| AKAZE | CSLBP | −11.1% | −43.8% | −72.3% | −77.5% | −77.0% | −60.9% | −23.9% |
| | AKAZE-CSLBP | 87.2% | 52.6% | 25.6% | 16.7% | 19.6% | 38.1% | 14.7% |

Table 7.

The reduced values of recall for the CSLBP and the combined descriptors compared to the original descriptors on the Graffiti sequence.

| Features | Descriptors | a-e | a-d | a-c | a-b | a-f | a-g | a-h |
|---|---|---|---|---|---|---|---|---|
| SIFT | CSLBP | −35.0% | −37.1% | −37.8% | −32.1% | −28.5% | −24.3% | −18.9% |
| | SIFT-CSLBP | 0 | −0.6% | −2.7% | −0.5% | −1.7% | −0.9% | 0 |
| SURF | CSLBP | −23.6% | −21.4% | −21.3% | −22.2% | −23.6% | −9.1% | −22.7% |
| | SURF-CSLBP | −0.9% | −0.7% | −0.7% | −1.2% | −0.7% | 0 | −4.5% |
| DAISY | CSLBP | 0 | 0 | 0 | −10.8% | 0 | 0 | 0 |
| | DAISY-CSLBP | 0 | 0 | 0 | −2.7% | 0 | 0 | 0 |
| BRISK | CSLBP | −20.5% | −25.7% | −26.8% | −24.4% | −23.5% | −16.9% | −13.4% |
| | BRISK-CSLBP | 3.1% | −4.9% | −0.4% | −7.8% | −5.3% | −8.2% | −4.9% |
| ORB | CSLBP | −36.2% | −32.1% | −26.9% | −41.7% | −30.6% | −21.4% | −40.0% |
| | ORB-CSLBP | −23.4% | −15.1% | −15.4% | −29.2% | −25.0% | 0 | −40.0% |
| FREAK | CSLBP | −32.0% | −28.4% | −28.7% | −28.2% | −23.6% | −16.8% | −14.3% |
| | FREAK-CSLBP | −12.6% | −9.8% | −4.9% | −8.0% | −7.5% | −9.6% | −6.4% |
| KAZE | CSLBP | 0 | −7.1% | −6.7% | −6.3% | 0 | −14.3% | 0 |
| | KAZE-CSLBP | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| AKAZE | CSLBP | −34.3% | −34.7% | −34.3% | −32.1% | −32.6% | −25.6% | −31.4% |
| | AKAZE-CSLBP | −2.9% | −5.6% | 0 | 2.9% | 2.1% | −11.1% | −5.9% |

Table 8.

The improved values of precision for the CSLBP and the combined descriptors compared to the original descriptors on the Graffiti sequence.

| Features | Descriptors | a-e | a-d | a-c | a-b | a-f | a-g | a-h |
|---|---|---|---|---|---|---|---|---|
| SIFT | CSLBP | −16.4% | −27.2% | −31.4% | −29.9% | −23.2% | −14.1% | −4.7% |
| | SIFT-CSLBP | 1.7% | 4.7% | 4.8% | 5.7% | 2.9% | 2.8% | 1.2% |
| SURF | CSLBP | −7.1% | −8.5% | −11.6% | −13.1% | −11.7% | −2.8% | −4.5% |
| | SURF-CSLBP | 0.4% | 0.7% | 0.8% | 0.4% | 0.3% | 0.3% | −0.7% |
| DAISY | CSLBP | 0 | 0 | 0 | −5.1% | 0 | 0 | 0 |
| | DAISY-CSLBP | 0 | 0 | 0 | 1.1% | 0 | 0 | 0 |
| BRISK | CSLBP | −8.6% | −8.5% | −21.5% | −22.2% | −8.7% | −30.4% | −15.7% |
| | BRISK-CSLBP | 21.2% | 22.1% | 18.0% | −3.6% | 10.7% | 3.7% | 5.5% |
| ORB | CSLBP | −32.7% | −26.6% | −22.2% | −29.4% | −18.0% | −6.4% | −3.6% |
| | ORB-CSLBP | 11.0% | 16.3% | 17.8% | 5.9% | −2.6% | 23.6% | −3.6% |
| FREAK | CSLBP | −29.0% | −25.7% | −40.8% | −38.4% | −55.6% | −14.4% | −16.8% |
| | FREAK-CSLBP | 17.6% | 8.9% | 14.3% | 6.7% | 13.4% | 14.3% | 18.7% |
| KAZE | CSLBP | 0 | −2.1% | −1.5% | −1.4% | 0 | −1.8% | 0 |
| | KAZE-CSLBP | 0 | 0.2% | 0.1% | 0.1% | 0 | 0.1% | 0 |
| AKAZE | CSLBP | −6.0% | −14.9% | −23.2% | −27.5% | −32.2% | −22.5% | −13.6% |
| | AKAZE-CSLBP | 24.5% | 27.1% | 31.5% | 32.5% | 32.7% | 14.6% | 40.6% |

Table 9.

The reduced values of recall for the CSLBP and the combined descriptors compared to the original descriptors on the Ubc sequence.

| Features | Descriptors | a-e | a-d | a-c | a-b | a-f | a-g | a-h |
|---|---|---|---|---|---|---|---|---|
| SIFT | CSLBP | −64.5% | −53.7% | −49.5% | −47.1% | −45.3% | −33.3% | −22.5% |
| | SIFT-CSLBP | −0.6% | −1.4% | −1.4% | −1.2% | −1.6% | −1.6% | −1.9% |
| SURF | CSLBP | −71.6% | −54.5% | −52.8% | −51.8% | −43.9% | −26.0% | −14.2% |
| | SURF-CSLBP | −10.9% | −2.3% | −1.6% | −1.0% | −0.9% | −0.6% | −0.6% |
| DAISY | CSLBP | 0 | −0.6% | −33.4% | −75.5% | −40.5% | −34.4% | −20.6% |
| | DAISY-CSLBP | 0 | 0 | −1.2% | −31.1% | −33.1% | 0.3% | 0 |
| BRISK | CSLBP | −51.8% | −58.8% | −62.1% | −63.1% | −62.1% | −56.6% | −54.3% |
| | BRISK-CSLBP | −5.8% | −7.7% | −9.3% | −11.5% | −11.9% | −11.0% | −13.1% |
| ORB | CSLBP | −36.0% | −38.6% | −38.2% | −37.1% | −40.2% | −35.2% | −50.8% |
| | ORB-CSLBP | −7.2% | −10.8% | −9.6% | −10.0% | −9.1% | −4.4% | −20.8% |
| FREAK | CSLBP | −46.5% | −59.1% | −60.9% | −62.4% | −59.3% | −55.8% | −50.4% |
| | FREAK-CSLBP | −8.4% | −10.6% | −11.1% | −12.1% | −12.0% | −12.0% | −12.7% |
| KAZE | CSLBP | −97.3% | −92.7% | −91.8% | −92.8% | −93.9% | −87.3% | −74.4% |
| | KAZE-CSLBP | −10.8% | −3.8% | −3.9% | −3.4% | −4.8% | −6.2% | −11.1% |
| AKAZE | CSLBP | −22.7% | −63.6% | −68.0% | −73.9% | −77.7% | −78.7% | −75.6% |
| | AKAZE-CSLBP | −4.5% | −0.6% | −1.1% | −1.2% | −3.0% | −10.3% | −14.2% |

Table 10.

The improved values of precision for the CSLBP and the combined descriptors compared to the original descriptors on the Ubc sequence.

| Features | Descriptors | a-e | a-d | a-c | a-b | a-f | a-g | a-h |
|---|---|---|---|---|---|---|---|---|
| SIFT | CSLBP | −27.0% | −29.2% | −36.7% | −37.7% | −47.0% | −46.5% | −56.0% |
| | SIFT-CSLBP | 27.2% | 10.5% | 8.5% | 8.0% | 7.0% | 10.7% | 13.7% |
| SURF | CSLBP | −71.6% | −35.6% | −32.2% | −35.2% | −52.3% | −59.4% | −51.8% |
| | SURF-CSLBP | 22.5% | 9.3% | 8.7% | 7.7% | 8.9% | 12.5% | 17.9% |
| DAISY | CSLBP | 0 | 8.9% | −28.4% | −35.1% | −21.2% | −23.4% | −23.9% |
| | DAISY-CSLBP | 0 | 11.7% | 4.5% | 11.1% | 9.8% | 4.5% | 16.0% |
| BRISK | CSLBP | −22.3% | −11.0% | −11.6% | −10.1% | −11.1% | −18.8% | −26.0% |
| | BRISK-CSLBP | 20.8% | 5.3% | 3.0% | 2.2% | 2.1% | 2.9% | 4.4% |
| ORB | CSLBP | −2.8% | −2.4% | −1.4% | −2.2% | −2.9% | −1.2% | −2.9% |
| | ORB-CSLBP | 2.7% | 1.5% | 2.6% | 2.4% | 3.6% | 15.7% | 22.8% |
| FREAK | CSLBP | −7.4% | −10.8% | −8.1% | −7.7% | −11.0% | −19.6% | −32.1% |
| | FREAK-CSLBP | 35.6% | 6.2% | 4.2% | 2.9% | 3.2% | 4.1% | 4.9% |
| KAZE | CSLBP | −18.5% | −53.9% | −5.3% | −9.3% | −4.9% | 8.8% | −38.6% |
| | KAZE-CSLBP | 0.6% | 3.2% | 0.6% | 1.3% | 1.2% | 1.3% | 0.3% |
| AKAZE | CSLBP | −1.5% | −8.2% | −4.8% | −7.3% | −7.1% | −14.2% | −38.8% |
| | AKAZE-CSLBP | 24.6% | 5.3% | 3.0% | 1.7% | 2.1% | 5.0% | 7.3% |

Table 11.

The ratios of the CSLBP and combined descriptors to the original descriptors in computational and matching speed.

| Features | Descriptors | Computation Speed | Matching Speed |
|---|---|---|---|
| SIFT | CSLBP | 17.81 | 1.10 |
| | SIFT-CSLBP | 0.94 | 1.09 |
| SURF | CSLBP | 1.66 | 1.23 |
| | SURF-CSLBP | 0.61 | 1.10 |
| DAISY | CSLBP | 29.25 | 1.18 |
| | DAISY-CSLBP | 0.96 | 1.02 |
| BRISK | CSLBP | 0.10 | 1.34 |
| | BRISK-CSLBP | 0.09 | 1.16 |
| ORB | CSLBP | 0.53 | 0.62 |
| | ORB-CSLBP | 0.36 | 0.97 |
| FREAK | CSLBP | 0.11 | 1.21 |
| | FREAK-CSLBP | 0.10 | 1.13 |
| KAZE | CSLBP | 58.92 | 1.53 |
| | KAZE-CSLBP | 0.99 | 1.28 |
| AKAZE | CSLBP | 12.32 | 1.13 |
| | AKAZE-CSLBP | 0.89 | 1.04 |