A Review on Measuring Affect with Practical Sensors to Monitor Driver Behavior

Abstract

1. Introduction

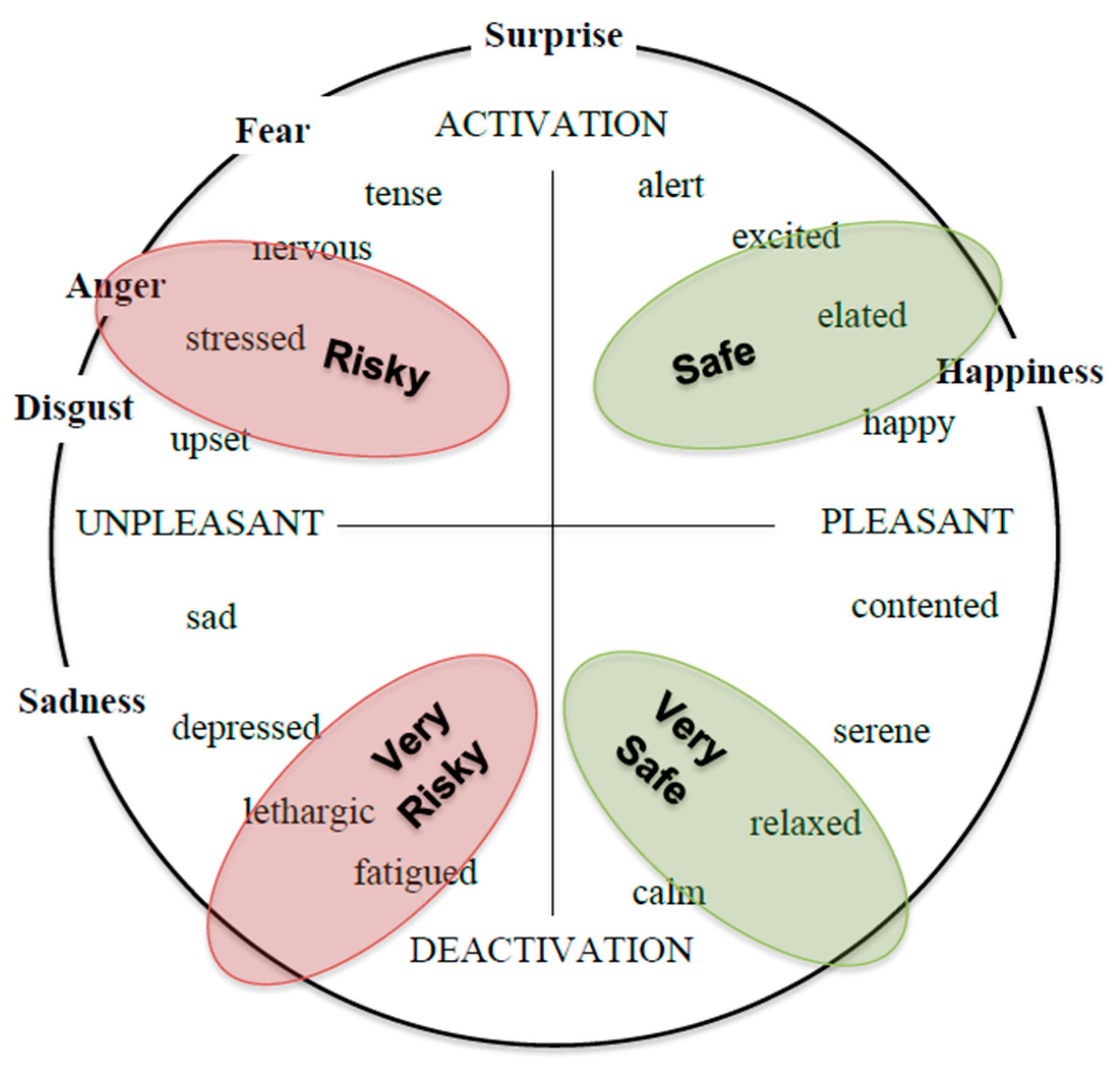

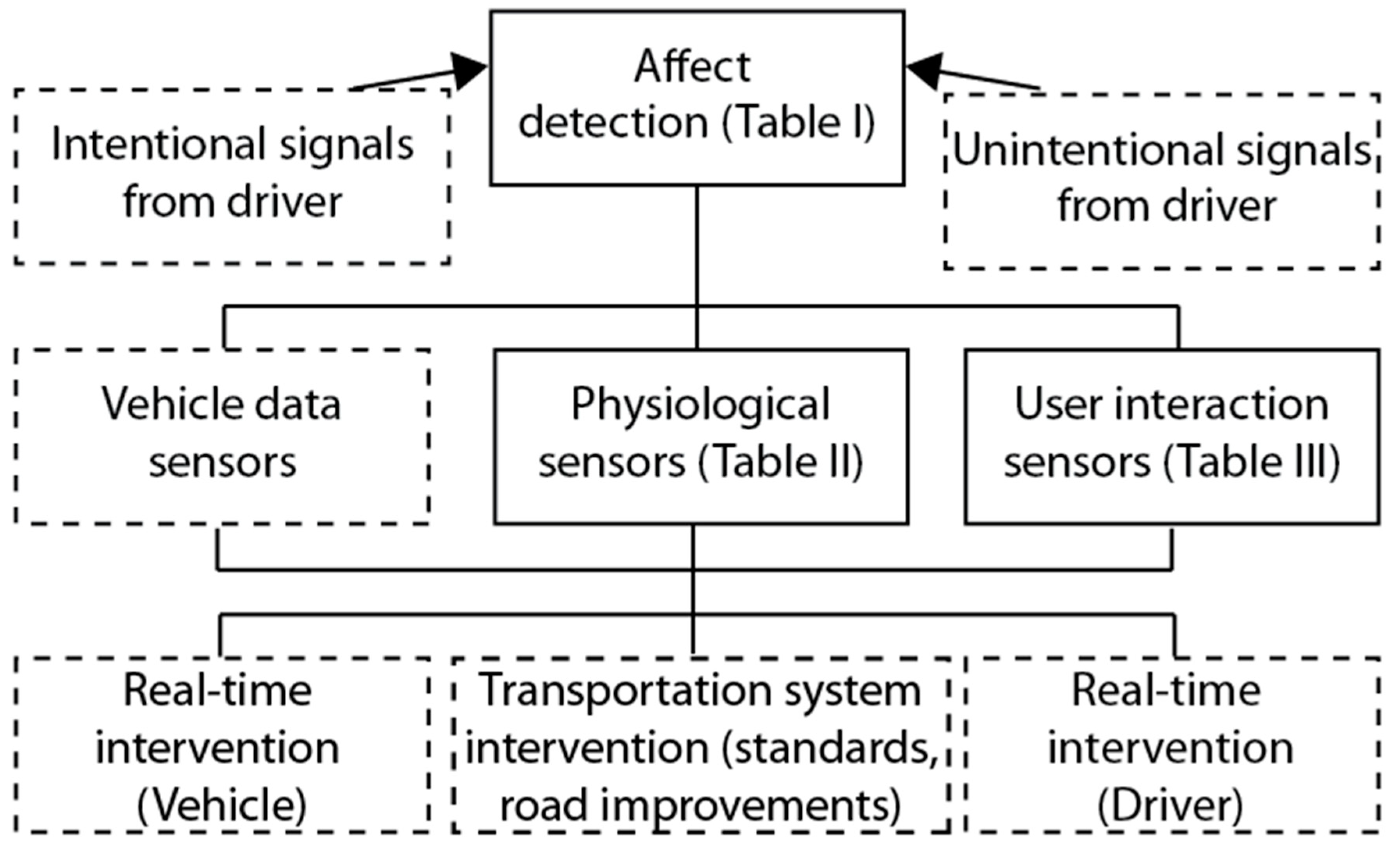

1.1. Affective States and Affect Detection

“Frustration was defined (for participants) as dissatisfaction or annoyance. Confusion was defined as a noticeable lack of understanding, whereas engaged concentration was a state of interest that results from involvement in an activity. Delight was defined as a high degree of satisfaction. Surprise was defined as wonder or amazement, especially from the unexpected. Boredom was defined as being weary or restless due to lack of interest. Participants were given the option of making a neutral judgment to indicate a lack of distinguishable affect. Neutral was defined as no apparent emotion or feeling.”
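The quoted protocol uses a fixed set of categorical judgments plus an explicit neutral option, so it maps naturally onto an enumerated type. The sketch below is a hypothetical encoding for an annotation tool. The names `AffectLabel` and `parse_judgment` are illustrative assumptions and do not come from the cited studies.

```python
from enum import Enum


class AffectLabel(Enum):
    """Categorical affect labels, paraphrasing the quoted definitions.

    Hypothetical encoding for an annotation tool; not taken from the
    cited studies.
    """

    FRUSTRATION = "dissatisfaction or annoyance"
    CONFUSION = "noticeable lack of understanding"
    ENGAGED_CONCENTRATION = "state of interest resulting from involvement in an activity"
    DELIGHT = "high degree of satisfaction"
    SURPRISE = "wonder or amazement, especially from the unexpected"
    BOREDOM = "weary or restless due to lack of interest"
    NEUTRAL = "no apparent emotion or feeling"


def parse_judgment(raw: str) -> AffectLabel:
    """Map a typed judgment such as 'engaged concentration' to a label.

    Raises ValueError for anything outside the fixed label set, so every
    stored judgment is one of the seven permitted options.
    """
    key = raw.strip().upper().replace(" ", "_")
    try:
        return AffectLabel[key]
    except KeyError:
        raise ValueError(f"unknown affect label: {raw!r}") from None
```

Keeping `NEUTRAL` as an ordinary member, rather than as a missing value, mirrors the protocol's explicit option to report no distinguishable affect.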

1.2. Methods

2. Previous Work Relating Affect to Driver Behaviors/Physiological Signals

2.1. Measuring Affect Based on Physiological Signals

2.2. Measuring Affect to Improve Driver–Vehicle Interactions

3. Soft and Wearable Sensor Technologies Applicable to Monitoring Driver Behavior

3.1. Sensor Technologies for Affect Detection Based on Physiological Signals

3.2. Measuring User Activity Based on Driver–Vehicle Interaction

4. Relationship between Driver Behavior and Roadway Safety

5. At-Risk Example Population: Drivers with Autism Spectrum Disorders

6. Conclusions and Future Directions

Author Contributions

Funding

Conflicts of Interest

References

1. Fernández, A.; Usamentiaga, R.; Carús, J.L.; Casado, R. Driver Distraction Using Visual-Based Sensors and Algorithms. Sensors 2016, 16, 1805.

2. Merickel, J.; High, R.; Smith, L.; Wichman, C.; Frankel, E.; Smits, K.; Drincic, A.; Desouza, C.; Gunaratne, P.; Ebe, K.; et al. Driving Safety and Real-Time Glucose Monitoring in Insulin-Dependent Diabetes. Int. J. Automot. Eng. 2019, 10, 34–40.

3. Liu, L.; Karatas, C.; Li, H.; Tan, S.; Gruteser, M.; Yang, J.; Chen, Y.; Martin, R.P. Toward Detection of Unsafe Driving with Wearables. In Proceedings of the 2015 Workshop on Wearable Systems and Applications, Florence, Italy, 19–22 May 2015; ACM: New York, NY, USA, 2015; pp. 27–32.

4. Lisetti, C.L.; Nasoz, F. Using Noninvasive Wearable Computers to Recognize Human Emotions from Physiological Signals. Eurasip J. Appl. Signal Process. 2004, 1672–1687.

5. Shu, L.; Xie, J.; Yang, M.; Li, Z.; Li, Z.; Liao, D.; Xu, X.; Yang, X. A Review of Emotion Recognition Using Physiological Signals. Sensors 2018, 18, 2074.

6. Sahayadhas, A.; Sundaraj, K.; Murugappan, M. Detecting driver drowsiness based on sensors: A review. Sensors 2012, 12, 16937–16953.

7. Kang, H.-B. Various approaches for driver and driving behavior monitoring: A review. In Proceedings of the 2013 IEEE International Conference on Computer Vision Workshops, Sydney, Australia, 1–8 December 2013; pp. 616–623.

8. Lechner, G.; Fellmann, M.; Festl, A.; Kaiser, C.; Kalayci, T.E.; Spitzer, M.; Stocker, A. A Lightweight Framework for Multi-device Integration and Multi-sensor Fusion to Explore Driver Distraction. In Proceedings of the Advanced Information Systems Engineering, Rome, Italy, 3–7 June 2019; pp. 80–95.

9. Calvo, R.A.; D’Mello, S. Affect Detection: An Interdisciplinary Review of Models, Methods, and Their Applications. IEEE Trans. Affect. Comput. 2010, 1, 18–37.

10. Lazarus, R.S. From psychological stress to the emotions: A history of changing outlooks. Annu. Rev. Psychol. 1993, 44, 1–21.

11. Russell, J.A. Core affect and the psychological construction of emotion. Psychol. Rev. 2003, 110, 145–172.

12. Picard, R.W. Affective Computing; M.I.T. Media Laboratory Perceptual Computing Section Technical Report No. 321; MIT Media Laboratory: Cambridge, MA, USA, 1995. Available online: https://affect.media.mit.edu/pdfs/95.picard.pdf (accessed on 19 September 2019).

13. James, W. What is an Emotion? Mind 1884, 9, 188–205.

14. Lange, C.G.; James, W. The Emotions; Williams & Wilkins: Philadelphia, PA, USA, 1922.

15. Lang, P.J. The varieties of emotional experience: A meditation on James-Lange theory. Psychol. Rev. 1994, 101, 211–221.

16. Ekman, P.; Levenson, R.W.; Friesen, W.V. Autonomic nervous system activity distinguishes among emotions. Science 1983, 221, 1208–1210.

17. Critchley, H.D.; Rotshtein, P.; Nagai, Y.; O’Doherty, J.; Mathias, C.J.; Dolan, R.J. Activity in the human brain predicting differential heart rate responses to emotional facial expressions. Neuroimage 2005, 24, 751–762.

18. AlZoubi, O.; D’Mello, S.K.; Calvo, R.A. Detecting Naturalistic Expressions of Nonbasic Affect Using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 298–310.

19. Baker, R.S.; D’Mello, S.K.; Rodrigo, M.M.T.; Graesser, A.C. Better to be frustrated than bored: The incidence, persistence, and impact of learners’ cognitive–affective states during interactions with three different computer-based learning environments. Int. J. Hum. Comput. Stud. 2010, 68, 223–241.

20. Smith, C.A.; Lazarus, R.S. Emotion and adaptation. Handb. Personal. Theory Res. 1990, 609–637.

21. Darwin, C. The Expression of the Emotions in Man and Animals; Oxford University Press: Oxford, UK, 1872.

22. Dalgleish, T. The emotional brain. Nat. Rev. Neurosci. 2004, 5, 583–589.

23. Russell, J.A. A circumplex model of affect. J. Pers. Soc. Psychol. 1980, 39, 1161–1178.

24. Reeves, B.; Nass, C.I. The Media Equation: How People Treat Computers, Television, and New Media Like Real People and Places; Cambridge University Press: Cambridge, UK, 1996.

25. Bradley, M.M.; Lang, P.J. Measuring emotion: Behavior, feeling, and physiology. Cogn. Neurosci. Emot. 2000, 431, 242–276.

26. Cowie, R. Emotion-Oriented Computing: State of the Art and Key Challenges. Humaine Network of Excellence 2005. Available online: https://pdfs.semanticscholar.org/a393/9cfe4757380cde173aba0e6b558ddb60884f.pdf (accessed on 27 August 2019).

27. Picard, R.W. Affective Computing; MIT Press: Cambridge, MA, USA, 2000; ISBN 9780262661157.

28. Bogdan, S.R.; Măirean, C.; Havârneanu, C.-E. A meta-analysis of the association between anger and aggressive driving. Transp. Res. Part F Traffic Psychol. Behav. 2016, 42, 350–364.

29. Deffenbacher, J.L.; Lynch, R.S.; Filetti, L.B.; Dahlen, E.R.; Oetting, E.R. Anger, aggression, risky behavior, and crash-related outcomes in three groups of drivers. Behav. Res. Ther. 2003, 41, 333–349.

30. Lajunen, T.; Parker, D. Are aggressive people aggressive drivers? A study of the relationship between self-reported general aggressiveness, driver anger and aggressive driving. Accid. Anal. Prev. 2001, 33, 243–255.

31. Underwood, G. Traffic and Transport Psychology: Theory and Application; Elsevier: Amsterdam, The Netherlands, 2005; ISBN 9780080550794.

32. Roidl, E.; Frehse, B.; Höger, R. Emotional states of drivers and the impact on speed, acceleration and traffic violations—A simulator study. Accid. Anal. Prev. 2014, 70, 282–292.

33. Kuppens, P.; Van Mechelen, I.; Smits, D.J.M.; De Boeck, P.; Ceulemans, E. Individual differences in patterns of appraisal and anger experience. Cogn. Emot. 2007, 21, 689–713.

34. Scherer, K.R.; Schorr, A.; Johnstone, T. Appraisal Processes in Emotion: Theory, Methods, Research; Oxford University Press: Oxford, UK, 2001; ISBN 9780195351545.

35. Mesken, J.; Hagenzieker, M.P.; Rothengatter, T.; de Waard, D. Frequency, determinants, and consequences of different drivers’ emotions: An on-the-road study using self-reports, (observed) behaviour, and physiology. Transp. Res. Part F Traffic Psychol. Behav. 2007, 10, 458–475.

36. Shinar, D.; Compton, R. Aggressive driving: An observational study of driver, vehicle, and situational variables. Accid. Anal. Prev. 2004, 36, 429–437.

37. Li, S.; Zhang, T.; Sawyer, B.D.; Zhang, W.; Hancock, P.A. Angry Drivers Take Risky Decisions: Evidence from Neurophysiological Assessment. Int. J. Environ. Res. Public Health 2019, 16, 1701.

38. Precht, L.; Keinath, A.; Krems, J.F. Effects of driving anger on driver behavior–Results from naturalistic driving data. Transp. Res. Part F Traffic Psychol. Behav. 2017, 45, 75–92.

39. Zhang, T.; Chan, A.H.S. The association between driving anger and driving outcomes: A meta-analysis of evidence from the past twenty years. Accid. Anal. Prev. 2016, 90, 50–62.

40. Deffenbacher, J.L.; Stephens, A.N.; Sullman, M.J.M. Driving anger as a psychological construct: Twenty years of research using the Driving Anger Scale. Transp. Res. Part F Traffic Psychol. Behav. 2016, 42, 236–247.

41. Klauer, S.G.; Dingus, T.A.; Neale, V.L.; Sudweeks, J.D.; Ramsey, D.J. The Impact of Driver Inattention on Near-Crash/Crash Risk: An Analysis Using the 100-Car Naturalistic Driving Study Data; Report No. DOT HS 810 594; National Highway Traffic Safety Administration: Washington, DC, USA, 2006.

42. Matthews, G.; Dorn, L.; Ian Glendon, A. Personality correlates of driver stress. Pers. Individ. Dif. 1991, 12, 535–549.

43. Westerman, S.J.; Haigney, D. Individual differences in driver stress, error and violation. Pers. Individ. Dif. 2000, 29, 981–998.

44. Steinhauser, K.; Leist, F.; Maier, K.; Michel, V.; Pärsch, N.; Rigley, P.; Wurm, F.; Steinhauser, M. Effects of emotions on driving behavior. Transp. Res. Part F Traffic Psychol. Behav. 2018, 59, 150–163.

45. Jefferies, L.N.; Smilek, D.; Eich, E.; Enns, J.T. Emotional valence and arousal interact in attentional control. Psychol. Sci. 2008, 19, 290–295.

46. Philip, P.; Sagaspe, P.; Moore, N.; Taillard, J.; Charles, A.; Guilleminault, C.; Bioulac, B. Fatigue, sleep restriction and driving performance. Accid. Anal. Prev. 2005, 37, 473–478.

47. Grandjean, E. Fatigue in industry. Br. J. Ind. Med. 1979, 36, 175–186.

48. Lee, M.L.; Howard, M.E.; Horrey, W.J.; Liang, Y.; Anderson, C.; Shreeve, M.S.; O’Brien, C.S.; Czeisler, C.A. High risk of near-crash driving events following night-shift work. Proc. Natl. Acad. Sci. USA 2016, 113, 176–181.

49. Healey, J.; Picard, R.W. Detecting stress during real-world driving tasks using physiological sensors. IEEE Trans. Intell. Transp. Syst. 2005, 6, 156–166.

50. Picard, R.W.; Vyzas, E.; Healey, J. Toward machine emotional intelligence: Analysis of affective physiological state. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 1175–1191.

51. Itoh, K.; Miwa, H.; Nukariya, Y.; Zecca, M.; Takanobu, H.; Roccella, S.; Carrozza, M.C.; Dario, P.; Takanishi, A. Development of a Bioinstrumentation System in the Interaction between a Human and a Robot. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 2620–2625.

52. Liu, C.; Conn, K.; Sarkar, N.; Stone, W. Physiology-based affect recognition for computer-assisted intervention of children with Autism Spectrum Disorder. Int. J. Hum. Comput. Stud. 2008, 66, 662–677.

53. Bethel, C.L.; Salomon, K.; Murphy, R.R.; Burke, J.L. Survey of Psychophysiology Measurements Applied to Human-Robot Interaction. In Proceedings of the RO-MAN 2007—The 16th IEEE International Symposium on Robot and Human Interactive Communication, Jeju, Korea, 26–29 August 2007; pp. 732–737.

54. Kreibig, S.D. Autonomic nervous system activity in emotion: A review. Biol. Psychol. 2010, 84, 394–421.

55. Ho, C.; Spence, C. Affective multisensory driver interface design. Int. J. Veh. Noise Vib. 2013, 9, 61–74.

56. Cunningham, M.L.; Regan, M.A. The impact of emotion, life stress and mental health issues on driving performance and safety. Road Transp. Res. A J. Aust. New Zealand Res. Pract. 2016, 25, 40.

57. Emo, A.K.; Matthews, G.; Funke, G.J. The slow and the furious: Anger, stress and risky passing in simulated traffic congestion. Transp. Res. Part F Traffic Psychol. Behav. 2016, 42, 1–14.

58. Maxwell, J.P.; Grant, S.; Lipkin, S. Further validation of the propensity for angry driving scale in British drivers. Pers. Individ. Dif. 2005, 38, 213–224.

59. Tawari, A.; Trivedi, M. Speech based emotion classification framework for driver assistance system. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, La Jolla, CA, USA, 21–24 June 2010; pp. 174–178.

60. Nass, C.; Jonsson, I.-M.; Harris, H.; Reaves, B.; Endo, J.; Brave, S.; Takayama, L. Improving Automotive Safety by Pairing Driver Emotion and Car Voice Emotion. In Proceedings of the CHI ’05 Extended Abstracts on Human Factors in Computing Systems, Portland, OR, USA, 2–7 April 2005; ACM: New York, NY, USA, 2005; pp. 1973–1976.

61. Eyben, F.; Wöllmer, M.; Poitschke, T.; Schuller, B.; Blaschke, C.; Färber, B.; Nguyen-Thien, N. Emotion on the Road—Necessity, Acceptance, and Feasibility of Affective Computing in the Car. Adv. Hum.-Comput. Interact. 2010, 2010.

62. Braun, M.; Pfleging, B.; Alt, F. A Survey to Understand Emotional Situations on the Road and What They Mean for Affective Automotive UIs. Multimodal Technol. Interact. 2018, 2, 75.

63. Braun, M.; Schubert, J.; Pfleging, B.; Alt, F. Improving Driver Emotions with Affective Strategies. Multimodal Technol. Interact. 2019, 3, 21.

64. Wioleta, S. Using physiological signals for emotion recognition. In Proceedings of the 2013 6th International Conference on Human System Interactions (HSI), Sopot, Poland, 6–8 June 2013; pp. 556–561.

65. Healey, J.A. Wearable and Automotive Systems for Affect Recognition from Physiology. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2000.

66. Ranjan Singh, R.; Banerjee, R. Multi-parametric analysis of sensory data collected from automotive drivers for building a safety-critical wearable computing system. In Proceedings of the 2010 2nd International Conference on Computer Engineering and Technology, Chengdu, China, 16–18 April 2010; Volume 1, pp. V1-355–V1-360.

67. Saikalis, C.T.; Lee, Y.-C. An investigation of measuring driver anger with electromyography. In Proceedings of the 10th International Driving Symposium on Human Factors in Driver Assessment, Training and Vehicle Design, Santa Fe, NM, USA, 24–27 June 2019.

68. MacLean, D.; Roseway, A.; Czerwinski, M. MoodWings: A Wearable Biofeedback Device for Real-time Stress Intervention. In Proceedings of the 6th International Conference on PErvasive Technologies Related to Assistive Environments, Rhodes Island, Greece, 29–31 May 2013; ACM: New York, NY, USA, 2013; pp. 66:1–66:8.

69. Nasoz, F.; Lisetti, C.L.; Vasilakos, A.V. Affectively intelligent and adaptive car interfaces. Inf. Sci. 2010, 180, 3817–3836.

70. Welch, K.C.; Kulkarni, A.S.; Jimenez, A.M. Wearable sensing devices for human-machine interaction systems. In Proceedings of the United States National Committee of URSI National Radio Science Meeting (USNC-URSI NRSM), Boulder, CO, USA, 4–7 January 2018; pp. 1–2.

71. Saadatzi, M.N.; Tafazzoli, F.; Welch, K.C.; Graham, J.H. EmotiGO: Bluetooth-enabled eyewear for unobtrusive physiology-based emotion recognition. In Proceedings of the 2016 IEEE International Conference on Automation Science and Engineering (CASE), Fort Worth, TX, USA, 21–24 August 2016; pp. 903–909.

72. He, J.; Choi, W.; Yang, Y.; Lu, J.; Wu, X.; Peng, K. Detection of driver drowsiness using wearable devices: A feasibility study of the proximity sensor. Appl. Ergon. 2017, 65, 473–480.

73. Yeo, J.C.; Lim, C.T. Emerging flexible and wearable physical sensing platforms for healthcare and biomedical applications. Microsyst. Nanoeng. 2016, 2, 16043.

74. Huang, X.; Liu, Y.; Chen, K.; Shin, W.-J.; Lu, C.-J.; Kong, G.-W.; Patnaik, D.; Lee, S.-H.; Cortes, J.F.; Rogers, J.A. Stretchable, wireless sensors and functional substrates for epidermal characterization of sweat. Small 2014, 10, 3083–3090.

75. Yu, X. Real-Time Nonintrusive Detection of Driver Drowsiness; Technical Report CTS 09-15; University of Minnesota Center for Transportation Studies: Minneapolis, MN, USA, 2009.

76. Lourenço, A.; Alves, A.P.; Carreiras, C.; Duarte, R.P.; Fred, A. CardioWheel: ECG Biometrics on the Steering Wheel. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Porto, Portugal, 7–11 September 2015; pp. 267–270.

77. Pinto, J.R.; Cardoso, J.S.; Lourenço, A.; Carreiras, C. Towards a Continuous Biometric System Based on ECG Signals Acquired on the Steering Wheel. Sensors 2017, 17, 2228.

78. Granado, M.R.; Anta, L.M.G. Textile Piezoresistive Sensor and Heartbeat and/or Respiratory Rate Detection System. U.S. Patent 15/022,306, 29 September 2016.

79. Baek, H.J.; Lee, H.B.; Kim, J.S.; Choi, J.M.; Kim, K.K.; Park, K.S. Nonintrusive biological signal monitoring in a car to evaluate a driver’s stress and health state. Telemed. J. e-Health 2009, 15, 182–189.

80. Bhardwaj, R.; Balasubramanian, V. Viability of Cardiac Parameters Measured Unobtrusively Using Capacitive Coupled Electrocardiography (cECG) to Estimate Driver Performance. IEEE Sens. J. 2019, 19, 4321–4330.

81. Oliveira, L.; Cardoso, J.S.; Lourenço, A.; Ahlström, C. Driver drowsiness detection: A comparison between intrusive and non-intrusive signal acquisition methods. In Proceedings of the 2018 7th European Workshop on Visual Information Processing (EUVIP), Tampere, Finland, 26–28 November 2018; pp. 1–6.

82. Hassib, M.; Braun, M.; Pfleging, B.; Alt, F. Detecting and Influencing Driver Emotions using Psycho-physiological Sensors and Ambient Light. In Proceedings of the 17th IFIP TC. 13 International Conference on Human-Computer Interaction (INTERACT2019), Paphos, Cyprus, 2–6 September 2019.

83. Bi, C.; Huang, J.; Xing, G.; Jiang, L.; Liu, X.; Chen, M. SafeWatch: A Wearable Hand Motion Tracking System for Improving Driving Safety. In Proceedings of the Second International Conference on Internet-of-Things Design and Implementation, Pittsburgh, PA, USA, 18–21 April 2017; ACM: New York, NY, USA, 2017; pp. 223–232.

84. McMillen, K.A.; Lacy, C.; Allen, B.; Lobedan, K.; Wille, G. Sensor Systems Integrated with Steering Wheels. U.S. Patent 9,827,996, 28 November 2017.

85. Durgam, K.K.; Sundaram, G.A. Behavior of 3-axis conformal proximity sensor arrays for restraint-free, in-vehicle, deployable safety assistance. J. Intell. Fuzzy Syst. 2019, 36, 2085–2094.

86. Ziraknejad, N.; Lawrence, P.D.; Romilly, D.P. Vehicle Occupant Head Position Quantification Using an Array of Capacitive Proximity Sensors. IEEE Trans. Veh. Technol. 2015, 64, 2274–2287.

87. Pouryazdan, A.; Prance, R.J.; Prance, H.; Roggen, D. Wearable Electric Potential Sensing: A New Modality Sensing Hair Touch and Restless Leg Movement. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct, Heidelberg, Germany, 12–16 September 2016; ACM: New York, NY, USA, 2016; pp. 846–850.

88. Harnett, C.K.; Zhao, H.; Shepherd, R.F. Stretchable Optical Fibers: Threads for Strain-Sensitive Textiles. Adv. Mater. Technol. 2017, 2, 1700087.

89. Leber, A.; Cholst, B.; Sandt, J.; Vogel, N.; Kolle, M. Stretchable Thermoplastic Elastomer Optical Fibers for Sensing of Extreme Deformations. Adv. Funct. Mater. 2018, 29, 1802629.

90. Tian, R.; Ruan, K.; Li, L.; Le, J.; Greenberg, J.; Barbat, S. Standardized evaluation of camera-based driver state monitoring systems. IEEE/CAA J. Autom. Sin. 2019, 6, 716–732.

91. Skach, S.; Stewart, R.; Healey, P.G.T. Smart Arse: Posture Classification with Textile Sensors in Trousers. In Proceedings of the 20th ACM International Conference on Multimodal Interaction, Boulder, CO, USA, 16–20 October 2018; ACM: New York, NY, USA, 2018; pp. 116–124.

92. Braun, A.; Rus, S.; Majewski, M. Invisible Human Sensing in Smart Living Environments Using Capacitive Sensors. In Ambient Assisted Living: 9. AAL-Kongress, Frankfurt/M, Germany, 20–21 April 2016; Wichert, R., Mand, B., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 43–53. ISBN 9783319523224.

93. Rus, S.; Braun, A.; Kuijper, A. E-Textile Couch: Towards Smart Garments Integrated Furniture. In Proceedings of the Ambient Intelligence, Porto, Portugal, 21–23 June 2017; pp. 214–224.

94. Skach, S.; Healey, P.G.T.; Stewart, R. Talking Through Your Arse: Sensing Conversation with Seat Covers. In Proceedings of the 39th Annual Meeting of the Cognitive Science Society, CogSci 2017, London, UK, 26–29 July 2017.

95. Zhang, Y.; Laput, G.; Harrison, C. Electrick: Low-Cost Touch Sensing Using Electric Field Tomography. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; ACM: New York, NY, USA, 2017; pp. 1–14.

96. Zhang, Y.; Xiao, R.; Harrison, C. Advancing Hand Gesture Recognition with High Resolution Electrical Impedance Tomography. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology, Tokyo, Japan, 16–19 October 2016; ACM: New York, NY, USA, 2016; pp. 843–850.

97. Rodrigues, J.G.P.; Vieira, F.; Vinhoza, T.T.V.; Barros, J.; Cunha, J.P.S. A non-intrusive multi-sensor system for characterizing driver behavior. In Proceedings of the 13th International IEEE Conference on Intelligent Transportation Systems, Funchal, Portugal, 19–22 September 2010; pp. 1620–1624.

98. Mimbela, L.E.Y.; Klein, L.A. Summary of Vehicle Detection and Surveillance Technologies Used in Intelligent Transportation Systems; Technical Report; Joint Program Office for Intelligent Transportation Systems: Washington, DC, USA, 2007. Available online: https://www.fhwa.dot.gov/policyinformation/pubs/vdstits2007/vdstits2007.pdf (accessed on 27 August 2019).

99. Wood, J.S.; Zhang, S. Identification and Calculation of Horizontal Curves for Low-Volume Roadways Using Smartphone Sensors. Transp. Res. Rec. 2018, 2672, 1–10.

100. Lee, J.D.; See, K.A. Trust in automation: Designing for appropriate reliance. Hum. Factors 2004, 46, 50–80.

101. Ghazizadeh, M.; Lee, J.D.; Boyle, L.N. Extending the Technology Acceptance Model to assess automation. Cogn. Technol. Work 2012, 14, 39–49.

102. Ben Shalom, D.; Mostofsky, S.H.; Hazlett, R.L.; Goldberg, M.C.; Landa, R.J.; Faran, Y.; McLeod, D.R.; Hoehn-Saric, R. Normal Physiological Emotions but Differences in Expression of Conscious Feelings in Children with High-Functioning Autism. J. Autism Dev. Disord. 2006, 36, 395–400.

103. Groden, J.; Goodwin, M.S.; Baron, M.G.; Groden, G.; Velicer, W.F.; Lipsitt, L.P.; Hofmann, S.G.; Plummer, B. Assessing Cardiovascular Responses to Stressors in Individuals With Autism Spectrum Disorders. Focus Autism Other Dev. Disabl. 2005, 20, 244–252.

104. Welch, K.C. Physiological signals of autistic children can be useful. IEEE Instrum. Meas. Mag. 2012, 15, 28–32.

105. Green, D.; Baird, G.; Barnett, A.L.; Henderson, L.; Huber, J.; Henderson, S.E. The severity and nature of motor impairment in Asperger’s syndrome: A comparison with specific developmental disorder of motor function. J. Child Psychol. Psychiatry 2002, 43, 655–668.

106. Schultz, R.T. Developmental deficits in social perception in autism: The role of the amygdala and fusiform face area. Int. J. Dev. Neurosci. 2005, 23, 125–141.

107. Langdell, T. Face Perception: An Approach to the Study of Autism. Ph.D. Thesis, University of London, London, UK, 1981.

108. Schopler, E.; Mesibov, G.B. Social Behavior in Autism; Springer Science & Business Media, Plenum Press: New York, NY, USA, 1986; ISBN 9780306421631.

109. Baio, J.A. Prevalence of autism spectrum disorder among children aged 8 years—Autism and developmental disabilities monitoring network, 11 sites, United States, 2010. MMWR Surveill. Summ. 2014, 63, 1–21.

110. American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders (DSM-5®); American Psychiatric Pub.: Washington, DC, USA, 2013; ISBN 9780890425572.

111. Prendinger, H.; Mori, J.; Ishizuka, M. Using human physiology to evaluate subtle expressivity of a virtual quizmaster in a mathematical game. Int. J. Hum. Comput. Stud. 2005, 62, 231–245.

112. Ernsperger, L. Keys to Success for Teaching Students with Autism; Future Horizons: Arlington, TX, USA, 2002; ISBN 9781885477927.

113. Seip, J.-A. Teaching the Autistic and Developmentally Delayed: A Guide for Staff Training and Development; Gateway Press: Delta, BC, Canada, 1992.

114. Wieder, S.; Greenspan, S. Can children with autism master the core deficits and become empathetic, creative, and reflective? J. Dev. Learn. Disord. 2005, 9, 39–61.

115. Liu, C.; Conn, K.; Sarkar, N.; Stone, W. Online Affect Detection and Robot Behavior Adaptation for Intervention of Children with Autism. IEEE Trans. Rob. 2008, 24, 883–896.

| Affective State | Reference(s) | Effect on Driver Behavior |
|---|---|---|
| Anger and anxiety (vs. contempt and fright) | Roidl et al. (2014) [32] | Higher driving speed, stronger acceleration, and longer time spent exceeding the speed limit |
| Stress | Westerman and Haigney (2000) [43] | More self-reported lapses, errors, and violations |
| Happiness and calmness (vs. anger) | Steinhauser et al. (2018) [44] | Lower driving speed and speed variability, and a longer distance to the lead car |
| Fatigue | Philip et al. (2005) [46] | More inappropriate line crossings |
| Drowsiness | Lee et al. (2016) [48] | More near-crash events and lane excursions |
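Several outcomes in the table above (driving speed, speed variability, acceleration, and time spent over the speed limit) can be derived from a logged speed trace. The sketch below assumes a uniformly sampled speed signal in m/s and a known posted limit. The helper `summarize_speed` and its `SpeedSummary` result are hypothetical; they are not the processing pipelines used in the cited studies, and distance to the lead car would need a separate range sensor.

```python
import statistics
from dataclasses import dataclass
from typing import Sequence


@dataclass
class SpeedSummary:
    mean_speed: float         # m/s, same units as the input trace
    speed_sd: float           # sample standard deviation of speed, m/s
    max_accel: float          # largest sample-to-sample acceleration, m/s^2 (may be negative)
    time_over_limit_s: float  # approximate seconds spent above the limit


def summarize_speed(speeds: Sequence[float], dt_s: float, limit: float) -> SpeedSummary:
    """Summarize a speed trace sampled every dt_s seconds (hypothetical helper)."""
    if len(speeds) < 2:
        raise ValueError("need at least two speed samples")
    if dt_s <= 0:
        raise ValueError("sample period must be positive")
    # Finite-difference acceleration between consecutive samples.
    accels = [(b - a) / dt_s for a, b in zip(speeds, speeds[1:])]
    # Each sample above the limit counts as one full sample period.
    over_s = dt_s * sum(1 for v in speeds if v > limit)
    return SpeedSummary(
        mean_speed=statistics.mean(speeds),
        speed_sd=statistics.stdev(speeds),
        max_accel=max(accels),
        time_over_limit_s=over_s,
    )
```

For example, `summarize_speed([10.0, 12.0, 14.0, 14.0, 12.0], dt_s=1.0, limit=13.0)` gives a mean speed of 12.4 m/s, a peak acceleration of 2.0 m/s², and 2.0 s above the limit. Counting whole sample periods is a coarse approximation that improves with higher sampling rates.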

| Physiological Sensor | Reference | Affective State(s) Sensed | Scope and Context (Driving Only) |
|---|---|---|---|
| Heart rate and galvanic skin response (GSR) wearable biomedical sensors | Healey and Picard (2005) [49] | Stress | Driving test on roads: 24 subjects, route of at least 50 min |
| GSR, SpO2, respiration, and electrocardiogram (ECG) wearable biomedical sensors | Ranjan Singh and Banerjee (2010) [66] | Fatigue, stress | Driving test on roads: 14 subjects, including taxi drivers |
| Heart rate and GSR wearable biomedical sensors, plus wearable biofeedback using a visible indicator | MacLean et al. (2013) [68] | Stress, emotional regulation | Simulated driving test: 11 subjects with driving experience and no history of epilepsy or autism |
| Heart rate, GSR, and temperature from an armband; Polar heart monitor chest strap | Nasoz et al. (2010) [69] | Fear, frustration, boredom | Simulated driving test: 41 subjects |
| Eye blink rate from smart glasses, correlated with braking response time and lane deviation | He et al. (2017) [72] | Drowsiness | Simulated driving test: 23 subjects |
| Heart rate variability from conductive-fabric ECG electrodes on the steering wheel | Yu (2009) [75] | Fatigue, drowsiness | Simulated driving test: 2 subjects |
| Heart rate from on-body ECG electrodes, and eye movement | Oliveira et al. (2018) [81] | Drowsiness | Driving test on roads: 20 subjects |
| GSR, SpO2, respiration, and ECG sensors embedded in the vehicle seat | Baek et al. (2009) [79] | Task-induced stress | Driving test on roads: 4 subjects with at least 5 years of driving experience |
| Facial electromyography (EMG) | Saikalis and Lee (2019) [67] | Anger | Simulated driving test: 11 subjects |
| Electroencephalography (EEG) and heart rate | Hassib et al. (2019) [82] | Negative emotions induced by music | Simulated driving test: 12 subjects |
| Capacitively coupled ECG (cECG) embedded in the back support of the vehicle seat | Bhardwaj and Balasubramanian (2019) [80] | Fatigue | Simulated driving test: 20 male subjects |
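Several entries in the table above use heart rate or heart rate variability (HRV) to sense fatigue, drowsiness, or stress. As an illustration, the standard time-domain HRV measures SDNN (standard deviation of R-R intervals) and RMSSD (root mean square of successive differences) can be computed once R-peaks have been detected in the ECG. The sketch assumes artifact-free R-R intervals in milliseconds; the function name `hrv_time_domain` is hypothetical, and R-peak detection, artifact correction, and any classification step used in the cited studies are out of scope.

```python
import math
from typing import Dict, Sequence


def hrv_time_domain(rr_ms: Sequence[float]) -> Dict[str, float]:
    """Standard time-domain HRV measures from successive R-R intervals (ms).

    Assumes R-peaks were already detected and artifacts removed.
    """
    if len(rr_ms) < 3:
        raise ValueError("need at least three R-R intervals")
    n = len(rr_ms)
    mean_rr = sum(rr_ms) / n
    # SDNN: sample standard deviation of all R-R intervals.
    sdnn = math.sqrt(sum((x - mean_rr) ** 2 for x in rr_ms) / (n - 1))
    # RMSSD: root mean square of differences between successive intervals.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return {
        "mean_hr_bpm": 60_000.0 / mean_rr,  # 60,000 ms per minute
        "sdnn_ms": sdnn,
        "rmssd_ms": rmssd,
    }
```

For R-R intervals of `[800, 810, 790, 800]` ms, the mean heart rate is 75 bpm and RMSSD is about 14.1 ms. In practice these measures are computed over sliding windows of a minute or more, and how they map to fatigue or stress depends on the study and the classifier.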

| Interaction Sensor Format | Reference | Interaction Category and Possible Affective State(s) | Context of Study (Driving/Other) |
|---|---|---|---|
| Wrist-worn accelerometry on both driving hands | Bi et al. (2017) [83] | Handling secondary tasks (texting, eating), distraction, drowsiness | Driving: road tests with 6 subjects, 75 different trips |
| Capacitive proximity sensors in vehicle seats | Durgam and Sundaram (2019) [85] | Driver posture, sudden braking, panic | Other: validating occupant position in video vs. sensor data |
| Capacitive proximity sensors in vehicle headrests | Ziraknejad et al. (2015) [86] | Head position, drowsiness | Other: validating head position detection in lab tests |
| Wearable capacitive pressure sensor | Pouryazdan et al. (2016) [87] | Fidgeting, inattention | Other: detecting restless leg motion |
| Stretchable optical strain sensors in athletic tape | Harnett et al. (2017) [88] | Muscle tension, stress | Other: detecting weight-bearing activity in lab tests, proof of concept |
| Stretchable optical strain sensors in gloves | Leber et al. (2018) [89] | Hand motion, distraction | Other: detecting hand configuration in lab tests, proof of concept |
| Resistive textile pressure sensors in trousers | Skach et al. (2018) [91] | Body posture, social behavior, engagement | Other: classification of 19 different postures and gestures, 36 subjects |
| Resistive foam pressure sensors in an office chair back and seat | Skach et al. (2017) [94] | Seated body position, social behavior, engagement | Other: correlation of body position and speaking role during conversation, 27 subjects |
| Electric field tomography touch detection on 3D objects | Zhang et al. (2017) [95] | Hand motion/grip shape, coordination level related to alertness | Other: user interface for computers, games, and toys; demonstration |
| Wrist-worn electrical impedance tomography sensor | Zhang et al. (2016) [96] | Hand positions, excitement/hostility | Other: classifying hand gestures |
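For interaction sensors such as the wrist-worn accelerometers in the table above, a common first processing step is to reduce each 3-axis sample to an orientation-independent magnitude and then summarize its variability over short windows. The sketch below flags windows of high movement variability. The function name `flag_movement_windows`, the window length, and the threshold are hypothetical and would need calibration per device and driver; this is not the method of Bi et al. [83] or of the other cited works.

```python
import math
from typing import List, Sequence, Tuple


def flag_movement_windows(
    samples: Sequence[Tuple[float, float, float]],
    window: int,
    threshold: float,
) -> List[bool]:
    """Flag non-overlapping windows with high acceleration-magnitude variability.

    samples   -- (x, y, z) accelerometer readings, e.g. in m/s^2
    window    -- samples per window (hypothetical; depends on sampling rate)
    threshold -- cutoff on the window's standard deviation (hypothetical)
    Trailing samples that do not fill a whole window are ignored.
    """
    if window <= 0:
        raise ValueError("window must be a positive number of samples")
    # The vector magnitude does not depend on how the device is oriented.
    mags = [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]
    flags = []
    for start in range(0, len(mags) - window + 1, window):
        chunk = mags[start:start + window]
        mean = sum(chunk) / window
        # Population standard deviation: while the hand is still, the
        # magnitude stays near gravity, so the spread is close to zero.
        sd = math.sqrt(sum((m - mean) ** 2 for m in chunk) / window)
        flags.append(sd > threshold)
    return flags
```

A flagged window only indicates that the hand was moving; deciding whether that movement reflects texting, eating, or fidgeting would require a trained classifier on richer features.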

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Welch, K.C.; Harnett, C.; Lee, Y.-C. A Review on Measuring Affect with Practical Sensors to Monitor Driver Behavior. Safety 2019, 5, 72. https://doi.org/10.3390/safety5040072
