End-to-End Change Detection for High Resolution Satellite Images Using Improved UNet++

Abstract

1. Introduction

- (1) To the best of our knowledge, this is the first comprehensive summary of DLCD techniques, which is useful for understanding the development and trends of DLCD.

- (2) An end-to-end CNN architecture is proposed for CD of VHR satellite images, in which an improved UNet++ model with a novel DS strategy is used to capture subtle changes in challenging scenes.

- (3) A comprehensive comparison of the existing FCN-based end-to-end CD methods is conducted.

2. Background

3. Methodology

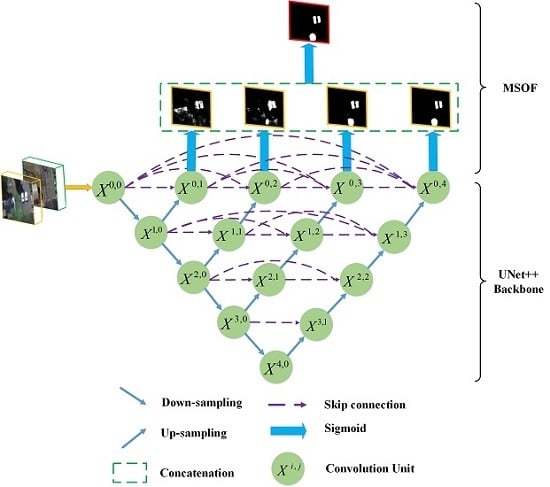

3.1. Proposed Network Architecture

3.1.1. Backbone of UNet++

3.1.2. Deep Supervision by Multiple Side-Outputs Fusion

3.2. Loss Function Formulation

3.2.1. Balanced Binary Cross-Entropy Loss

3.2.2. Dice Coefficient Loss

3.3. Training and Prediction

4. Experiments and Results

4.1. Datasets and Evaluation Metrics

4.2. Comparison Methods

- (1) Change detection network (CDNet) [56] was proposed for pixel-wise CD in street view scenes. It consists of contraction blocks and expansion blocks, and the final CM is generated by a soft-max layer.

- (2) Fully convolutional-early fusion (FC-EF) [53] was proposed for CD of satellite images. The image pairs are stacked to form the input. Skip connections complement the less localized information with spatial details, thereby producing CMs with precise boundaries.

- (3) Fully convolutional Siamese-concatenation (FC-Siam-conc) [53] is a Siamese extension of the FC-EF model. The encoding layers are separated into two streams of equal structure with shared weights, and the skip connections from both streams are concatenated in the decoder.

- (4) Fully convolutional Siamese-difference (FC-Siam-diff) [53] is another Siamese extension of the FC-EF model. Instead of concatenating both skip connections from the encoding streams, the decoder concatenates the absolute value of their difference.

- (5) FC-EF with residual blocks (FC-EF-Res) [51] is employed for CD in high-resolution satellite images. It extends the FC-EF architecture with residual blocks and skip connections to improve the spatial accuracy of the CM.

- (6) Fully convolutional network with pyramid pooling (FCN-PP) [52] is applied for landslide inventory mapping. A U-shaped architecture is used to construct the FCN. Additionally, pyramid pooling is utilized to capture a wider receptive field and overcome the drawbacks of global pooling.
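The three FC variants above differ mainly in where the two dates are fused. The following is a minimal sketch of that difference, assuming each feature map is reduced to a single per-pixel channel vector represented as a Python list; the function names are hypothetical and are not taken from the cited implementations, which operate on full image tensors inside a network.

```python
from typing import List

# One feature vector per pixel (channels only); a simplification for illustration.
Feature = List[float]


def early_fusion(img_t1: Feature, img_t2: Feature) -> Feature:
    """FC-EF style: stack the two dates along the channel axis before encoding."""
    return img_t1 + img_t2


def siam_conc_skip(decoder: Feature, skip_t1: Feature, skip_t2: Feature) -> Feature:
    """FC-Siam-conc style: concatenate both encoder skips onto the decoder features."""
    return decoder + skip_t1 + skip_t2


def siam_diff_skip(decoder: Feature, skip_t1: Feature, skip_t2: Feature) -> Feature:
    """FC-Siam-diff style: concatenate the absolute difference of the two skips."""
    if len(skip_t1) != len(skip_t2):
        raise ValueError("both encoder streams must produce the same channel count")
    diff = [abs(a - b) for a, b in zip(skip_t1, skip_t2)]
    return decoder + diff


if __name__ == "__main__":
    t1, t2 = [1.0, 4.0], [3.0, 1.0]
    print(early_fusion(t1, t2))            # 4 channels: both dates stacked
    print(siam_conc_skip([0.5], t1, t2))   # decoder + both skips
    print(siam_diff_skip([0.5], t1, t2))   # decoder + |t1 - t2|
```

In this sketch the difference variant yields fewer channels in the decoder than the concatenation variant, because it merges the two skips into one before concatenation.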

4.3. Parameter Setting

4.3.1. Effect of Loss Function

4.3.2. Effect of Data Augmentation

4.3.3. Effect of MSOF

4.4. Results Comparisons

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CD | change detection |
| PBCD | pixel-based change detection |
| OBCD | object-based change detection |
| DI | difference image |
| CM | change map |
| VHR | very-high-resolution |
| DL | deep learning |
| RS | remote sensing |
| FB-DLCD | feature-based deep learning change detection |
| PB-DLCD | patch-based deep learning change detection |
| IB-DLCD | image-based deep learning change detection |
| CNN | convolutional neural network |
| DBN | deep belief network |
| GAN | generative adversarial network |
| cGAN | conditional generative adversarial network |
| IFMN | iterative feature mapping network |
| SDAE | sparse denoising autoencoder |
| RNN | recurrent neural network |
| GDCN | generative discriminatory classified network |
| FCN | fully convolutional network |
| BN | batch normalization |
| DS | deep supervision |
| MSOF | multiple side-outputs fusion |
| SOTA | state-of-the-art |
| OA | overall accuracy |
| FC-EF | fully convolutional-early fusion |
| FC-Siam-conc | fully convolutional Siamese-concatenation |
| FC-Siam-diff | fully convolutional Siamese-difference |
| FC-EF-Res | FC-EF with residual blocks |
| FCN-PP | fully convolutional network with pyramid pooling |

References

- Singh, A. Review Article Digital change detection techniques using remotely-sensed data. Int. J. Remote Sens. 2010, 10, 989–1003.

- Tewkesbury, A.P.; Comber, A.J.; Tate, N.J.; Lamb, A.; Fisher, P.F. A critical synthesis of remotely sensed optical image change detection techniques. Remote Sens. Environ. 2015, 160, 1–14.

- Demir, B.; Bovolo, F.; Bruzzone, L. Updating land-cover maps by classification of image time series: A novel change-detection-driven transfer learning approach. IEEE Trans. Geosci. Remote Sens. 2013, 51, 300–312.

- Jin, S.; Yang, L.; Danielson, P.; Homer, C.; Fry, J.; Xian, G. A comprehensive change detection method for updating the National Land Cover Database to circa 2011. Remote Sens. Environ. 2013, 132, 159–175.

- Le Hégarat-Mascle, S.; Ottlé, C.; Guerin, C. Land cover change detection at coarse spatial scales based on iterative estimation and previous state information. Remote Sens. Environ. 2005, 95, 464–479.

- Hussain, M.; Chen, D.; Cheng, A.; Wei, H.; Stanley, D. Change detection from remotely sensed images: From pixel-based to object-based approaches. ISPRS J. Photogramm. Remote Sens. 2013, 80, 91–106.

- Bruzzone, L.; Prieto, D.F. Automatic analysis of the difference image for unsupervised change detection. IEEE Trans. Geosci. Remote Sens. 2000, 38, 1171–1182.

- Celik, T. Unsupervised change detection in satellite images using principal component analysis and k-means clustering. IEEE Geosci. Remote Sens. Lett. 2009, 6, 772–776.

- Deng, J.; Wang, K.; Deng, Y.; Qi, G. PCA-based land-use change detection and analysis using multitemporal and multisensor satellite data. Int. J. Remote Sens. 2008, 29, 4823–4838.

- Wu, C.; Du, B.; Cui, X.; Zhang, L. A post-classification change detection method based on iterative slow feature analysis and Bayesian soft fusion. Remote Sens. Environ. 2017, 199, 241–255.

- Huang, C.; Song, K.; Kim, S.; Townshend, J.R.; Davis, P.; Masek, J.G.; Goward, S.N. Use of a dark object concept and support vector machines to automate forest cover change analysis. Remote Sens. Environ. 2008, 112, 970–985.

- Cao, G.; Li, Y.; Liu, Y.; Shang, Y. Automatic change detection in high-resolution remote-sensing images by means of level set evolution and support vector machine classification. Int. J. Remote Sens. 2014, 35, 6255–6270.

- Volpi, M.; Tuia, D.; Bovolo, F.; Kanevski, M.; Bruzzone, L. Supervised change detection in VHR images using contextual information and support vector machines. Int. J. Appl. Earth Obs. Geoinform. 2013, 20, 77–85.

- Benedek, C.; Szirányi, T. Change detection in optical aerial images by a multilayer conditional mixed Markov model. IEEE Trans. Geosci. Remote Sens. 2009, 47, 3416–3430.

- Cao, G.; Zhou, L.; Li, Y. A new change detection method in high-resolution remote sensing images based on a conditional random field model. Int. J. Remote Sens. 2016, 37, 1173–1189.

- Lv, P.; Zhong, Y.; Zhao, J.; Zhang, L. Unsupervised change detection based on hybrid conditional random field model for high spatial resolution remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4002–4015.

- Jian, P.; Chen, K.; Zhang, C. A hypergraph-based context-sensitive representation technique for VHR remote-sensing image change detection. Int. J. Remote Sens. 2016, 37, 1814–1825.

- Bazi, Y.; Melgani, F.; Al-Sharari, H.D. Unsupervised change detection in multispectral remotely sensed imagery with level set methods. IEEE Trans. Geosci. Remote Sens. 2010, 48, 3178–3187.

- Chen, G.; Hay, G.J.; Carvalho, L.M.; Wulder, M.A. Object-based change detection. Int. J. Remote Sens. 2012, 33, 4434–4457.

- Ma, L.; Li, M.; Blaschke, T.; Ma, X.; Tiede, D.; Cheng, L.; Chen, Z.; Chen, D. Object-based change detection in urban areas: The effects of segmentation strategy, scale, and feature space on unsupervised methods. Remote Sens. 2016, 8, 761.

- Zhang, Y.; Peng, D.; Huang, X. Object-based change detection for VHR images based on multiscale uncertainty analysis. IEEE Geosci. Remote Sens. Lett. 2018, 15, 13–17.

- Gil-Yepes, J.L.; Ruiz, L.A.; Recio, J.A.; Balaguer-Beser, Á.; Hermosilla, T. Description and validation of a new set of object-based temporal geostatistical features for land-use/land-cover change detection. ISPRS J. Photogramm. Remote Sens. 2016, 121, 77–91.

- Qin, Y.; Niu, Z.; Chen, F.; Li, B.; Ban, Y. Object-based land cover change detection for cross-sensor images. Int. J. Remote Sens. 2013, 34, 6723–6737.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep learning in remote sensing: A comprehensive review and list of resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Zhang, L.; Zhang, L.; Du, B. Deep learning for remote sensing data: A technical tutorial on the state of the art. IEEE Geosci. Remote Sens. Mag. 2016, 4, 22–40.

- Sakurada, K.; Okatani, T. Change Detection from a Street Image Pair using CNN Features and Superpixel Segmentation. In Proceedings of the British Machine Vision Conference BMVC, Swansea, UK, 7–10 September 2015; pp. 61.1–61.12.

- Saha, S.; Bovolo, F.; Bruzzone, L. Unsupervised Deep Change Vector Analysis for Multiple-Change Detection in VHR Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 3677–3693.

- Hou, B.; Wang, Y.; Liu, Q. Change Detection Based on Deep Features and Low Rank. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2418–2422.

- El Amin, A.M.; Liu, Q.; Wang, Y. Zoom out cnns features for optical remote sensing change detection. In Proceedings of the 2017 2nd International Conference on Image, Vision and Computing (ICIVC), Chengdu, China, 2–4 June 2017; pp. 812–817.

- Zhang, H.; Gong, M.; Zhang, P.; Su, L.; Shi, J. Feature-level change detection using deep representation and feature change analysis for multispectral imagery. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1666–1670.

- Zhan, Y.; Fu, K.; Yan, M.; Sun, X.; Wang, H.; Qiu, X. Change detection based on deep siamese convolutional network for optical aerial images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1845–1849.

- Zhang, M.; Xu, G.; Chen, K.; Yan, M.; Sun, X. Triplet-Based Semantic Relation Learning for Aerial Remote Sensing Image Change Detection. IEEE Geosci. Remote Sens. Lett. 2019, 16, 266–270.

- Niu, X.; Gong, M.; Zhan, T.; Yang, Y. A Conditional Adversarial Network for Change Detection in Heterogeneous Images. IEEE Geosci. Remote Sens. Lett. 2019, 16, 45–49.

- Zhan, T.; Gong, M.; Liu, J.; Zhang, P. Iterative feature mapping network for detecting multiple changes in multi-source remote sensing images. ISPRS J. Photogramm. Remote Sens. 2018, 146, 38–51.

- Lei, Y.; Liu, X.; Shi, J.; Lei, C.; Wang, J. Multiscale Superpixel Segmentation with Deep Features for Change Detection. IEEE Access 2019, 7, 36600–36616.

- Gong, M.; Zhan, T.; Zhang, P.; Miao, Q. Superpixel-based difference representation learning for change detection in multispectral remote sensing images. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2658–2673.

- Gong, M.; Niu, X.; Zhang, P.; Li, Z. Generative adversarial networks for change detection in multispectral imagery. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2310–2314.

- Arabi, M.E.A.; Karoui, M.S.; Djerriri, K. Optical Remote Sensing Change Detection Through Deep Siamese Network. In Proceedings of the IGARSS 2018 - 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 5041–5044.

- Dong, H.; Ma, W.; Wu, Y.; Gong, M.; Jiao, L. Local Descriptor Learning for Change Detection in Synthetic Aperture Radar Images via Convolutional Neural Networks. IEEE Access 2019, 7, 15389–15403.

- Ma, W.; Xiong, Y.; Wu, Y.; Yang, H.; Zhang, X.; Jiao, L. Change Detection in Remote Sensing Images Based on Image Mapping and a Deep Capsule Network. Remote Sens. 2019, 11, 626.

- Zhang, Z.; Vosselman, G.; Gerke, M.; Tuia, D.; Yang, M.Y. Change Detection between Multimodal Remote Sensing Data Using Siamese CNN. arXiv 2018, arXiv:1807.09562.

- Khan, S.H.; He, X.; Porikli, F.; Bennamoun, M. Forest change detection in incomplete satellite images with deep neural networks. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5407–5423.

- Gong, M.; Zhao, J.; Liu, J.; Miao, Q.; Jiao, L. Change detection in synthetic aperture radar images based on deep neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 125–138.

- Daudt, R.C.; Le Saux, B.; Boulch, A.; Gousseau, Y. Urban change detection for multispectral earth observation using convolutional neural networks. arXiv 2018, arXiv:1810.08468.

- Wang, Q.; Yuan, Z.; Du, Q.; Li, X. GETNET: A General End-to-End 2-D CNN Framework for Hyperspectral Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2018, 57, 3–13.

- Wiratama, W.; Lee, J.; Park, S.E.; Sim, D. Dual-Dense Convolution Network for Change Detection of High-Resolution Panchromatic Imagery. Appl. Sci. 2018, 8, 1785.

- Zhang, W.; Lu, X. The Spectral-Spatial Joint Learning for Change Detection in Multispectral Imagery. Remote Sens. 2019, 11, 240.

- Lyu, H.; Lu, H.; Mou, L. Learning a transferable change rule from a recurrent neural network for land cover change detection. Remote Sens. 2016, 8, 506.

- Mou, L.; Bruzzone, L.; Zhu, X.X. Learning spectral-spatial-temporal features via a recurrent convolutional neural network for change detection in multispectral imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 924–935.

- Gong, M.; Yang, Y.; Zhan, T.; Niu, X.; Li, S. A Generative Discriminatory Classified Network for Change Detection in Multispectral Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 321–333.

- Daudt, R.C.; Le Saux, B.; Boulch, A.; Gousseau, Y. High Resolution Semantic Change Detection. arXiv 2018, arXiv:1810.08452v1.

- Lei, T.; Zhang, Y.; Lv, Z.; Li, S.; Liu, S.; Nandi, A.K. Landslide Inventory Mapping from Bi-temporal Images Using Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2019, 16, 982–986.

- Daudt, R.C.; Le Saux, B.; Boulch, A. Fully convolutional siamese networks for change detection. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 4063–4067.

- Lebedev, M.; Vizilter, Y.V.; Vygolov, O.; Knyaz, V.; Rubis, A.Y. Change detection in remote sensing images using conditional adversarial networks. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 565–571.

- Guo, E.; Fu, X.; Zhu, J.; Deng, M.; Liu, Y.; Zhu, Q.; Li, H. Learning to Measure Change: Fully Convolutional Siamese Metric Networks for Scene Change Detection. arXiv 2018, arXiv:1810.09111.

- Alcantarilla, P.F.; Stent, S.; Ros, G.; Arroyo, R.; Gherardi, R. Streetview change detection with deconvolutional networks. Auton. Robots 2018, 42, 1301–1322.

- Li, X.; Yuan, Z.; Wang, Q. Unsupervised Deep Noise Modeling for Hyperspectral Image Change Detection. Remote Sens. 2019, 11, 258.

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. Unet++: A nested u-net architecture for medical image segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Springer: Cham, Switzerland, 2018; pp. 3–11.

- Längkvist, M.; Kiselev, A.; Alirezaie, M.; Loutfi, A. Classification and segmentation of satellite orthoimagery using convolutional neural networks. Remote Sens. 2016, 8, 329.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Badrinarayanan, V.; Handa, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for robust semantic pixel-wise labelling. arXiv 2015, arXiv:1505.07293.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Kim, J.H.; Lee, H.; Hong, S.J.; Kim, S.; Park, J.; Hwang, J.Y.; Choi, J.P. Objects Segmentation from High-Resolution Aerial Images Using U-Net With Pyramid Pooling Layers. IEEE Geosci. Remote Sens. Lett. 2019, 16, 115–119.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Klambauer, G.; Unterthiner, T.; Mayr, A.; Hochreiter, S. Self-normalizing neural networks. Adv. Neural Inf. Process. Syst. 2017, 30, 971–980.

- Lee, C.Y.; Xie, S.; Gallagher, P.; Zhang, Z.; Tu, Z. Deeply-supervised nets. arXiv 2015, arXiv:1409.5185.

- Xie, S.; Tu, Z. Holistically-nested edge detection. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1395–1403.

| Methods | Category | Example Studies |
|---|---|---|
| Traditional CD methods | PBCD | Bruzzone et al. [7], Celik [8], Deng et al. [9], Wu et al. [10], Huang et al. [11], Benedek et al. [14], and Bazi et al. [18] |
| Traditional CD methods | OBCD | Ma et al. [20], Zhang et al. [21], Gil-Yepes et al. [22], and Qin et al. [23] |
| Deep learning CD methods | FB-DLCD | Sakurada et al. [26], Saha et al. [27], Hou et al. [28], El Amin et al. [29], Zhan et al. [31], Zhang et al. [32], Niu et al. [33], and Zhan et al. [34] |
| Deep learning CD methods | PB-DLCD | Gong et al. [36], Arabi et al. [38], Ma et al. [40], Zhang et al. [41], Khan et al. [42], Daudt et al. [44], Wang et al. [45], Wiratama et al. [46], Zhang et al. [47], Mou et al. [49], and Gong et al. [50] |
| Deep learning CD methods | IB-DLCD | Lei et al. [52], Daudt et al. [53], Lebedev et al. [54], and Guo et al. [55] |

| Methods | Precision | Recall | F1-Score | OA |
|---|---|---|---|---|
| CDNet | 0.7395 | 0.6797 | 0.6882 | 0.9105 |
| FC-EF | 0.8156 | 0.7613 | 0.7711 | 0.9413 |
| FC-Siam-conc | 0.8441 | 0.8250 | 0.8250 | 0.9572 |
| FC-Siam-diff | 0.8578 | 0.8364 | 0.8373 | 0.9575 |
| FC-EF-Res | 0.8093 | 0.7881 | 0.7861 | 0.9436 |
| FCN-PP | 0.8264 | 0.8060 | 0.8047 | 0.9536 |
| Proposed method | 0.8954 | 0.8711 | 0.8756 | 0.9673 |
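For readers who want to compute the same four columns on their own change maps, here is a minimal sketch using the standard confusion-matrix definitions of precision, recall, F1-score, and overall accuracy. It assumes binary maps flattened to sequences of 0/1 values (1 = changed); the function name `binary_cd_metrics` is hypothetical. Applying the harmonic-mean formula to the Precision and Recall columns above does not reproduce the F1-Score column exactly, so the reported values were presumably aggregated in some other way (for example, averaged over images); that is an assumption, as the aggregation is not stated here.

```python
from typing import Dict, Sequence


def binary_cd_metrics(pred: Sequence[int], truth: Sequence[int]) -> Dict[str, float]:
    """Precision, recall, F1 and OA for flattened binary change maps (1 = changed)."""
    if len(pred) != len(truth):
        raise ValueError("prediction and ground truth must have the same length")

    # Confusion-matrix counts over all pixels.
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    tn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 0)

    # Guard against empty denominators (e.g. no predicted change at all).
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    total = tp + fp + fn + tn
    oa = (tp + tn) / total if total else 0.0

    return {"precision": precision, "recall": recall, "f1": f1, "oa": oa}


if __name__ == "__main__":
    predicted = [1, 1, 0, 0, 1, 0, 0, 0]
    reference = [1, 0, 0, 0, 1, 1, 0, 0]
    print(binary_cd_metrics(predicted, reference))
```

Because unchanged pixels usually dominate a change map, OA tends to be high for every method, which is why precision, recall, and F1 on the changed class are reported alongside it.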

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Peng, D.; Zhang, Y.; Guan, H. End-to-End Change Detection for High Resolution Satellite Images Using Improved UNet++. Remote Sens. 2019, 11, 1382. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11111382