1. Introduction

Underwater imaging has found important applications in diverse research areas such as marine biology and archaeology [1,2], underwater surveying and mapping [3], and underwater target detection [4,5]. However, captured underwater images are generally degraded by scattering and absorption. Scattering is the change in the direction of light after it collides with suspended particles in water, which causes blurring and low contrast in images. Absorption is the loss of light energy to suspended particles, and its strength depends on the wavelength of the light [6]. Because light with shorter wavelengths (i.e., green and blue light) travels farther in water, underwater images generally have a predominantly green-blue hue. Contrast loss and color deviation are the main consequences of underwater degradation processes (e.g., Figure 1), which may cause difficulties for further processing; it is therefore of considerable interest to remove such distortions.

The goal of underwater image processing is to enhance visibility and rectify the color deviation. In general, underwater image processing techniques can be divided into two categories, namely, underwater image enhancement and restoration [7,8]. Underwater image enhancement methods, such as histogram equalization [9], white balance [10], pixel stretching [11,12], retinex-based methods [13,14], and fusion-based methods [15,16,17], generally improve the visual effect by modifying image pixels without considering the physical degradation mechanism of underwater images. Because these enhancement-based methods do not exploit physical imaging models, they are often inadequate for restoring original scene features, especially color features [8].

On the other hand, underwater image restoration methods aim to recover clear images by exploiting the optical imaging model. The most important task for restoration is to estimate two key model parameters, i.e., transmission and ambient light, which are usually estimated either by prior-based approaches [6,18,19,20,21,22,23,24,25] or by learning-based approaches [26,27,28,29,30]. The prior-based approaches depend heavily on the reliability of certain prior information, such as the dark channel prior [18,19,20,21], the red channel prior [6], and the haze-line prior [25]. However, each prior has its own limitations and may not hold under some conditions. Thus, a mismatch between the adopted prior and the target scene may incur significant estimation error and consequently produce distorted results [23]. By contrast, the learning-based approaches [26,27,28,29,30] aim to obtain more robust and accurate estimates by exploring the relations between underwater images and the corresponding parameters in a data-driven manner. To this end, it is essential to have a suitable training dataset and an efficient neural network that can be trained to learn such relations. Unfortunately, the existing networks [26,27,28,29,30] are not capable of estimating the parameters accurately enough, and the resulting restored images often suffer from various artifacts; it is also difficult to create a dataset capturing complex and varying underwater environments.

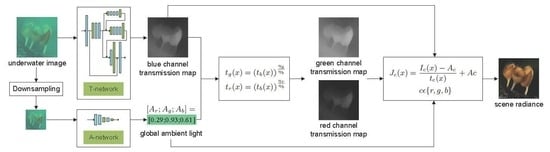

On the basis of the well-known model for underwater physical imaging, we propose a deep convolutional neural network (CNN) model with two parallel branches for underwater image restoration, accompanied by a training dataset in this paper. By exploring the inherent relations between the degraded underwater images and the associated blue channel transmission map as well as global ambient light in a data-driven manner, the proposed CNN can perform more accurate and robust parameter estimation; as a consequence, the restored images exhibit better visibility and more natural color compared to those produced by the state-of-the-art methods.

The contributions of this work are summarized as follows:

(1) We propose a new end-to-end underwater image restoration algorithm using a deep CNN model to improve the contrast and correct the color cast of the recovered images. The whole network consists of two parallel branches: a transmission estimation sub-network (T-network) and a global ambient light estimation sub-network (A-network), by which the transmission map and the ambient light can be estimated simultaneously. Since no prior information is used to estimate the transmission and ambient light, the method avoids the aforementioned mismatch issue commonly encountered in prior-based methods, which helps to improve the accuracy and generality of the estimation.

(2) The proposed T-network is inspired by the U-shaped structure [31], but also has some additional features. In particular, it uses cross-layer connection and multi-scale estimation to preserve delicate spatial structures and edge features. Specifically, the cross-layer connection compensates for information loss, especially of edge information, while the multi-scale estimation helps incorporate local image details from different scales. These two structures help to produce transmission maps with better edge features and prevent halo artifacts in the restored images. Indeed, good restoration results can be obtained from the estimated transmission maps without any refinement.

(3) We design a new underwater image synthesizing method, based on the underwater image degradation model and existing depth map datasets, which can produce synthetic images with different blue-green color casts and different degrees of clarity, effectively simulating images captured in different underwater environments. This method enables us to create a rich dataset of underwater images for network training.

The rest of this paper is organized as follows: Section 2 reviews the related work. Section 3 describes the proposed method in detail. Section 4 presents the experimental settings and results. Section 5 discusses the design idea underlying the proposed method. Lastly, Section 6 concludes this paper.

3. Proposed Method

Aiming to improve the image contrast and color cast, an underwater image restoration approach based on a parallel CNN and the underwater optical model is proposed in this paper. We first briefly review the underwater optical imaging model, then present a detailed description of our proposed two-branch CNN framework and the loss functions used in the optimization. Next, we explain how to use the estimated transmission and global ambient light to restore the underwater images. Lastly, we propose a new underwater image synthesizing method that can be used to build the training datasets.

3.1. Underwater Optical Imaging Model

Figure 2 shows a schematic diagram of underwater optical imaging. In underwater photography, the light captured by the camera is mainly composed of two parts. One part is the scene radiance, which is attenuated by absorption in water and scattering by suspended particles. The other part is ambient light reflected into the camera by suspended particles. Following previous research [19], the simplified underwater optical imaging model can be described as:

$$I_c(x) = J_c(x)\,t_c(x) + A_c\,\bigl(1 - t_c(x)\bigr), \quad c \in \{r, g, b\} \qquad (1)$$

where $x$ denotes a pixel in the underwater image and $c$ denotes the color channel, $I_c(x)$ is the image captured by the camera, $J_c(x)$ is the scene radiance, $A_c$ is the global ambient light, and $t_c(x)$ is the transmission map, which represents the residual energy ratio of the scene radiance reaching the camera.

According to Schechner et al. [32], $t_c(x)$ can be further expressed as:

$$t_c(x) = e^{-\beta_c d(x)} \qquad (2)$$

where $c$ denotes the color channel, $d(x)$ is the object-camera distance, and $\beta_c$ is the attenuation coefficient, which is the sum of the absorption coefficient $a_c$ and the scattering coefficient $b_c$, i.e., $\beta_c = a_c + b_c$.
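As a minimal illustration of the imaging model, the following NumPy sketch evaluates the standard forms $t_c(x) = e^{-\beta_c d(x)}$ and $I_c(x) = J_c(x)\,t_c(x) + A_c\,(1 - t_c(x))$. The function names `transmission` and `degrade`, the array layout (height, width, channel), and the assumption that intensities lie in [0, 1] are illustrative choices, not part of the method described here.

```python
import numpy as np

def transmission(depth, beta):
    """Per-channel transmission t_c(x) = exp(-beta_c * d(x)).

    depth: (H, W) object-camera distance map.
    beta:  (3,) attenuation coefficients, one per color channel.
    Returns an (H, W, 3) array with values in (0, 1].
    """
    depth = np.asarray(depth, dtype=np.float64)
    beta = np.asarray(beta, dtype=np.float64)
    # Broadcast the depth map against the three channel coefficients.
    return np.exp(-depth[..., None] * beta)

def degrade(scene, t, ambient):
    """Apply I_c(x) = J_c(x) * t_c(x) + A_c * (1 - t_c(x)).

    scene:   (H, W, 3) scene radiance J.
    t:       (H, W, 3) transmission map.
    ambient: (3,) global ambient light A.
    """
    scene = np.asarray(scene, dtype=np.float64)
    ambient = np.asarray(ambient, dtype=np.float64)
    return scene * t + ambient * (1.0 - t)
```

At zero distance the transmission equals one and the captured image equals the scene radiance; at very large distances the transmission vanishes and every pixel tends to the ambient light, which matches the two limiting cases of the model.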

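Underwater image restoration aims to recover the scene radiance $J_c(x)$ from the captured image $I_c(x)$. To this end, the ambient light $A_c$ and the transmission $t_c(x)$ need to be estimated first. Li et al. [24] found that the ratios of the total attenuation coefficients between different color channels in water can be expressed as:

$$\frac{\beta_r}{\beta_b} = \frac{A_b\,(m\lambda_r + i)}{A_r\,(m\lambda_b + i)}, \qquad \frac{\beta_g}{\beta_b} = \frac{A_b\,(m\lambda_g + i)}{A_g\,(m\lambda_b + i)} \qquad (3)$$

where $\beta_r/\beta_b$ and $\beta_g/\beta_b$ are the red-blue and green-blue total attenuation coefficient ratios, respectively, and $m$ and $i$ are the constants given in [24].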

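$\lambda_c$ is the wavelength of color channel $c$, with $\lambda_r$, $\lambda_g$, and $\lambda_b$ equal to 620 nm, 540 nm, and 450 nm, respectively. Note that the transmission maps of the green and red channels, $t_g(x)$ and $t_r(x)$, are determined by the blue channel transmission map, $t_b(x)$, as follows:

$$t_g(x) = t_b(x)^{\beta_g/\beta_b}, \qquad t_r(x) = t_b(x)^{\beta_r/\beta_b} \qquad (4)$$

Therefore, the key issue of underwater image restoration is to estimate the blue channel transmission map $t_b(x)$ and the global ambient light $A_c$ accurately.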
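Because each channel's transmission is an exponential of the same distance map, the red and green transmissions follow from the blue one by raising it to the corresponding attenuation ratio, $t_r = t_b^{\beta_r/\beta_b}$ and $t_g = t_b^{\beta_g/\beta_b}$. The sketch below shows this step in NumPy; the function name `transmissions_from_blue` and the choice to pass the two ratios as plain arguments are assumptions made for illustration.

```python
import numpy as np

def transmissions_from_blue(t_blue, ratio_rb, ratio_gb):
    """Derive red and green transmission maps from the blue one.

    t_blue:   (H, W) blue channel transmission, values in (0, 1].
    ratio_rb: red-blue attenuation ratio beta_r / beta_b.
    ratio_gb: green-blue attenuation ratio beta_g / beta_b.
    Returns an (H, W, 3) array ordered as (red, green, blue).
    """
    t_blue = np.asarray(t_blue, dtype=np.float64)
    t_red = t_blue ** ratio_rb      # exp(-beta_b d)^(beta_r/beta_b) = exp(-beta_r d)
    t_green = t_blue ** ratio_gb    # exp(-beta_b d)^(beta_g/beta_b) = exp(-beta_g d)
    return np.stack([t_red, t_green, t_blue], axis=-1)
```

Since red light attenuates fastest, the red-blue ratio is typically greater than one, so the red transmission decays more quickly with distance than the blue one.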

3.2. Proposed CNN Framework

Our approach is to estimate the blue channel transmission and the ambient light using two CNN subnetworks, respectively, and then leverage them to restore the clear images. The architecture of our approach is shown in Figure 3. Specifically, the T-network is used to estimate the blue channel transmission map of the underwater image, and the A-network is used to estimate the global ambient light. The transmission maps of the red and green channels are then computed using Equation (4). Finally, the clear image is restored by substituting the obtained parameters into the underwater optical imaging model. The details of the A-network and the T-network are introduced as follows:

A-network: As mentioned in Section 2, most conventional methods select the pixels with infinite depth to estimate ambient light. However, the accuracy of this selection is often limited by the camera angle and jeopardized by anomalous pixels. To address these issues and improve the robustness of the estimation, we propose a new CNN-based global ambient light estimation method that learns the mapping between underwater images and their corresponding ambient light.

Considering that the estimation of global ambient light is much easier than that of transmission, we design a lightweight CNN model called A-network to predict the global ambient light. The A-network architecture is illustrated in Figure 4.

The input of A-network is a down-sampled underwater image, and the corresponding output is the global ambient light, which is a single pixel vector with three color channels, i.e., one value each for the red, green, and blue channels. Since the ambient light value is highly related to global illumination features rather than local image details, it is sensible to estimate it from a global perspective. To avoid interference from local details when extracting the global illumination features, the original image is down-sampled before being fed to A-network. Specifically, it is down-sampled to a small fixed size that removes most details but preserves the major global information. Moreover, using a down-sampled input greatly reduces the number of A-network parameters and makes the global image information easier to learn.

As shown in Figure 4, the A-network consists mainly of two operations: convolution and max-pooling. We use three convolutional layers to extract features and reduce the dimension of the feature maps, and two max-pooling layers to overcome local sensitivity and further reduce the resolution of the feature maps. The last layer, which is also a convolutional layer, is used for nonlinear regression. We adopt a larger convolution kernel in the first layer and then gradually reduce the kernel size in the later layers as the feature maps become smaller. In this way, a larger receptive field is obtained and the global information related to ambient light estimation is learned more effectively. In addition, we add a ReLU layer after each convolutional layer to avoid the problems of slow convergence and local minima during the training phase.

T-network: The existing CNN-based methods for transmission map estimation commonly assume that the transmission maps of the three color channels are the same. However, this assumption does not hold for underwater images. In general, blue light has the best transmission performance in water, and its transmission distribution is more uniform and wider. Based on the existing study of the three-channel transmission relationship [24], the proposed method estimates only the blue channel transmission map, which is then used to compute the other two transmission maps through the deterministic relationship among them. Such a two-stage estimation strategy simplifies the network structure and reduces the training complexity. In addition, existing single-channel transmission estimation networks can be used for reference.

Single-channel transmission estimation has been studied extensively. In [28], Zhao et al. proposed an end-to-end network, named Deep Fully Convolutional Regression Network (DFCRN), which achieves high accuracy in transmission estimation. The network uses a U-shaped structure similar to an encoder-decoder. In the encoding part, features are extracted. In the decoding part, features are preserved and re-extracted, and the output size is restored. With this U-shaped structure, the network can expand the receptive field and reduce the number of parameters while retaining good nonlinear learning ability. However, this U-shaped network loses some detail information, such as edge information, when the pooling layers in the encoding part reduce the size of the feature maps. As a result, the estimated transmission map cannot accurately reflect edge shapes, which produces halos in the subsequently restored image. This phenomenon is common in the existing transmission estimation methods. Therefore, an edge-preserving filter is often applied to refine the transmission map after estimation.

Building upon DFCRN, we design a new network (i.e., T-network) with enhanced edge-preserving capability to estimate the blue channel transmission map; its architecture is given in Figure 5. The proposed T-network augments the basic U-shaped structure with additional features, namely cross-layer connection and multi-scale estimation. The connection between the first convolutional layer and the penultimate convolutional layer compensates for information loss, especially of edge information, because the early layer still retains abundant detail and edge information. Moreover, we adopt a multi-level pyramid pooling as the second pooling layer, which helps to estimate the transmission at different scales. Inspired by Ren et al. [33], we fuse multi-scale transmission maps after the multi-level pyramid pooling, which helps to integrate features from different scales into the final result [34]. There is an up-sampling layer after the output of every scale, and the output of each smaller scale is added to the next scale as a feature map. The multi-scale approach provides a convenient way to aggregate local image details associated with different resolutions [33], which helps to preserve edge features and prevent halo artifacts.

3.3. Loss Function

For regression tasks, most of the learning-based methods employ Euclidean loss, i.e., L2-norm loss, for network optimization. In this work, the L2-norm of the difference between the predicted and ground truth ambient light is used as the loss function to optimize the proposed A-network. Specifically, we define

$$L_A = \sum_{i \in \{r, g, b\}} \bigl\| \hat{A}_i - A_i \bigr\|_2^2$$

where $\|\cdot\|_2$ is the L2-norm operation, $\hat{A}_i$ is the output of the A-network, and $A_i$ is the corresponding ground truth ambient light, with $i$ denoting the color channel.

The transmission map estimated using only the L2-norm loss tends to be blurred and lacks high-frequency details, resulting in the loss of edge details. The L1-norm loss helps to preserve the sharpness of edges and details. Thus, we optimize the T-network by minimizing a weighted combination of the pixel-wise L2-norm loss and L1-norm loss between the predicted transmission map and the corresponding ground truth. Based on our experiments, the weight of the L2-norm loss is set to 0.7 and the weight of the L1-norm loss to 0.3. In addition, we adopt multi-scale estimation in our T-network, so the output of the T-network has three different scales. The final loss function of our T-network can be expressed as the total loss over these three scales:

$$L_T = \frac{1}{N}\Bigl(0.7\,\bigl\|\hat{t}_b - t_b\bigr\|_2^2 + 0.3\,\bigl\|\hat{t}_b - t_b\bigr\|_1\Bigr) + \frac{16}{N}\Bigl(0.7\,\bigl\|\hat{t}_b^{(1)} - t_b^{(1)}\bigr\|_2^2 + 0.3\,\bigl\|\hat{t}_b^{(1)} - t_b^{(1)}\bigr\|_1\Bigr) + \frac{64}{N}\Bigl(0.7\,\bigl\|\hat{t}_b^{(2)} - t_b^{(2)}\bigr\|_2^2 + 0.3\,\bigl\|\hat{t}_b^{(2)} - t_b^{(2)}\bigr\|_1\Bigr)$$

where $\|\cdot\|_2$ is the L2-norm operation, $\|\cdot\|_1$ is the L1-norm operation, $N$ is the number of pixels in the input image, $\hat{t}_b$ is the output of the T-network, $\hat{t}_b^{(1)}$ and $\hat{t}_b^{(2)}$ are the small-scale outputs, $t_b$ is the corresponding ground truth transmission map, and $t_b^{(1)}$ and $t_b^{(2)}$ are the corresponding small-scale ground truth transmission maps. $t_b^{(1)}$ is 16 times smaller than $t_b$, and $t_b^{(2)}$ is 64 times smaller than $t_b$.
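The weighted combination of L2 and L1 terms summed over three output scales can be sketched in NumPy as follows. This is only an illustration under stated assumptions: the function names are hypothetical, each scale is normalized by its own pixel count (mean squared and mean absolute error), and the three scales contribute with equal weight; the actual normalization used for training may differ.

```python
import numpy as np

def weighted_l2_l1(pred, target, w_l2=0.7, w_l1=0.3):
    """Weighted sum of mean squared error and mean absolute error.

    The 0.7 / 0.3 weights follow the text; averaging over pixels
    is an assumption of this sketch.
    """
    diff = np.asarray(pred, dtype=np.float64) - np.asarray(target, dtype=np.float64)
    return w_l2 * np.mean(diff ** 2) + w_l1 * np.mean(np.abs(diff))

def multiscale_loss(preds, targets):
    """Total loss over all scales (full-size output plus smaller ones).

    preds, targets: sequences of arrays, one pair per scale, where
    each prediction has the same shape as its ground truth.
    """
    return sum(weighted_l2_l1(p, t) for p, t in zip(preds, targets))
```

For example, with a full-size map of 64 pixels, the two smaller scales described above would contain 4 pixels and 1 pixel, respectively; each pair is compared at its own resolution before the three terms are added.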

3.4. Image Restoration

Once the global ambient light and the blue channel transmission map are estimated by the A-network and the T-network, we can compute the green channel transmission map and the red channel transmission map using Equation (4). Finally, according to Equation (1), the scene radiance can be restored as follows:

$$J_c(x) = \frac{I_c(x) - A_c}{t_c(x)} + A_c \qquad (5)$$

where $I_c(x)$ is the image captured by the camera, $J_c(x)$ is the scene radiance, $A_c$ is the global ambient light, and $t_c(x)$ is the estimated transmission map, with $x$ denoting the pixel index and $c$ denoting the color channel.
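Inverting the imaging model $I_c = J_c\,t_c + A_c\,(1 - t_c)$ for the scene radiance gives $J_c = (I_c - A_c)/t_c + A_c$. A minimal NumPy sketch of this step is shown below. The lower bound `t_min` on the transmission and the final clipping to [0, 1] are safeguards added for this illustration (they avoid division by values near zero and out-of-range intensities) and are not stated in the text; the function name `restore` is likewise hypothetical.

```python
import numpy as np

def restore(image, t, ambient, t_min=0.1):
    """Recover scene radiance J from a degraded image I.

    image:   (H, W, 3) degraded image with values in [0, 1].
    t:       (H, W, 3) per-channel transmission maps.
    ambient: (3,) global ambient light.
    t_min:   assumed lower bound on t to keep the division stable.
    """
    image = np.asarray(image, dtype=np.float64)
    ambient = np.asarray(ambient, dtype=np.float64)
    t = np.maximum(np.asarray(t, dtype=np.float64), t_min)
    scene = (image - ambient) / t + ambient
    # Keep the result in the valid intensity range.
    return np.clip(scene, 0.0, 1.0)
```

When the transmission and ambient light are exact and the transmission stays above `t_min`, this inversion reproduces the original scene radiance up to floating-point error.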

It is worth noting that many underwater image enhancement or restoration methods are patch-based, and consequently the resulting estimated transmission maps often suffer from serious block effects. To prevent halo artifacts in the restored images, guided image filtering [35] is often used to refine the transmission map while preserving edge features. In contrast to those existing methods, the proposed method directly estimates whole transmission maps using our carefully designed T-network, which can effectively prevent halos owing to its good edge-preserving capability. Indeed, our method is able to produce more accurate transmission maps with clear edges, and consequently better restored images, even without using guided filtering for post-refinement.

3.5. Synthesizing Underwater Dataset

The quality of the training data plays an important role in deep-learning-based methods. Training of CNN requires a large set of degraded underwater images and the corresponding clean images as well as the associated parameters (e.g., transmission map and global ambient light). It is very difficult, if not impossible, to obtain such a training dataset via experiments. Therefore, we choose to synthesize training images using the underwater optical model and the publicly available depth image datasets. Since underwater images generally have predominantly green-blue hue, we propose a method that can produce synthetic images with similar effects, effectively simulating images captured in various underwater environments. The detailed method is described as follows.

First, we collect clean images $J(x)$ and the corresponding depth maps $d(x)$ from the existing indoor and outdoor depth image datasets. It is preferable to have clean images with abundant colors and depth maps with clear edges. Next, we generate a random blue channel attenuation coefficient $\beta_b$ and global ambient light $A$, and then synthesize images using the physical model described in Section 3.1. The relationship among the transmissions of the three channels in [24] is used to reduce the number of unknown parameters. Specifically, we first generate the blue channel attenuation coefficient and calculate the blue channel transmission via Equation (2); the other two channels can then be calculated via Equation (4), and the underwater image can be generated via Equation (1). Because the red channel attenuates much faster in water, underwater images generally have a predominantly green-blue hue. Thus, we assume that $A_r$ is smaller than $A_g$ and $A_b$, and generate random $A_r$, $A_g$, and $A_b$. The dataset generated in this way can be used to train the network to estimate the transmission and ambient light more accurately for underwater images with different green-blue color casts.
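The synthesizing procedure can be sketched as follows: draw a random blue attenuation coefficient and a random ambient light whose red component is the smallest, compute the blue transmission from the depth map, derive the other two channels from the attenuation ratios, and apply the imaging model. All numeric sampling ranges below are hypothetical placeholders chosen only so the example runs, and the ratios `ratio_rb` and `ratio_gb` are passed in as arguments rather than computed; the function name `synthesize` is also an assumption.

```python
import numpy as np

def synthesize(scene, depth, ratio_rb, ratio_gb, rng):
    """Create one synthetic underwater image from a clean image.

    scene:    (H, W, 3) clean image with values in [0, 1], RGB order.
    depth:    (H, W) depth map.
    ratio_rb: red-blue attenuation ratio beta_r / beta_b.
    ratio_gb: green-blue attenuation ratio beta_g / beta_b.
    rng:      numpy.random.Generator used for sampling.
    Returns (image, t_blue, ambient).
    """
    # Hypothetical sampling ranges, for illustration only.
    beta_b = rng.uniform(0.5, 2.0)
    a_g, a_b = rng.uniform(0.3, 1.0, size=2)
    a_r = rng.uniform(0.0, min(a_g, a_b))  # red component kept smallest
    ambient = np.array([a_r, a_g, a_b])

    # Blue transmission from depth, then red and green via the ratios.
    t_blue = np.exp(-beta_b * np.asarray(depth, dtype=np.float64))
    t = np.stack([t_blue ** ratio_rb, t_blue ** ratio_gb, t_blue], axis=-1)

    # Imaging model: I = J * t + A * (1 - t).
    image = np.asarray(scene, dtype=np.float64) * t + ambient * (1.0 - t)
    return image, t_blue, ambient
```

Calling `synthesize` repeatedly on the same clean image with a shared `numpy.random.default_rng()` generator yields several degraded versions with different color casts and clarity, together with the labels (blue transmission map and ambient light) needed for training.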

4. Experiments

In this section, we first further illustrate the proposed dataset synthesizing method, then describe the experimental settings, and finally compare the proposed method with several state-of-the-art methods for single underwater image recovery, including enhancement-based methods (Zhang et al. [12], Fu et al. [13]), prior-based methods (Drews et al. [20], Li et al. [24], Berman et al. [25]), and CNN-based methods (Shin et al. [29]), on synthetic and real-world underwater images.

4.1. Dataset

Training datasets play an important role in deep-learning-based methods as they are used to learn the mapping between the data and the corresponding labels. Unfortunately, for the underwater image restoration problem, there is not a sufficient amount of labelled data for network training, especially considering the diversity of underwater environments. Therefore, we propose a method to generate synthetic images that can effectively simulate those captured in various underwater environments. The proposed method makes use of the existing depth image datasets, the underwater optical model, and the prior that the red component of ambient light is smaller than the blue and green components for underwater images.

We choose the Middlebury Stereo dataset [36,37] as the indoor depth dataset. In addition, we use clear outdoor images from the Internet and the depth map estimation model of Liu et al. [38] to generate the outdoor depth dataset. We crop the images into smaller fixed-size patches. Following the steps in Section 3.5, we use 477 clean images and the corresponding depth maps to generate 13,780 underwater images and their corresponding transmission maps to train the T-network, and 20,670 underwater images and their corresponding ambient light to train the A-network. Note that the training images for the A-network are resized to the A-network input size by nearest-neighbor interpolation.
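Nearest-neighbor down-sampling simply picks, for each output pixel, one source pixel without any averaging. The sketch below uses one common index convention (the floor of the scaled coordinate); the function name `resize_nearest` and the output sizes in the usage note are illustrative and do not correspond to the sizes used in the experiments.

```python
import numpy as np

def resize_nearest(image, out_h, out_w):
    """Resize an (H, W) or (H, W, C) array by nearest-neighbor sampling.

    Each output pixel (r, c) copies the source pixel at
    (floor(r * H / out_h), floor(c * W / out_w)).
    """
    image = np.asarray(image)
    rows = (np.arange(out_h) * image.shape[0]) // out_h
    cols = (np.arange(out_w) * image.shape[1]) // out_w
    # Advanced indexing selects the chosen rows and columns at once.
    return image[rows[:, None], cols]
```

For instance, `resize_nearest(img, 32, 32)` would reduce a larger RGB image to a hypothetical 32 by 32 input while leaving the channel axis untouched.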

A good underwater dataset needs to cover a wide range of scenes, and to do so in a uniform manner, to avoid overfitting. Since the diversity of underwater environments is reflected, to a significant extent, in the ambient light, we carefully control the statistics of the hue and brightness of the ambient light generated by our synthesizing method. First, consider using a uniform distribution defined over a certain range to generate ambient light. As shown in Figure 6a, ambient light generated in this way has an even distribution of green-blue hue but an uneven distribution of brightness. Thus, we modify the distribution by increasing the proportion of dark ambient light; the final sampling distribution is given in Table 1, and the resulting distribution of ambient light is shown in Figure 6b. It can be seen that our modification yields even distributions of both hue and brightness.

To demonstrate that datasets with evenly distributed hue and brightness improve the training performance, we compare the restoration results based on the datasets generated using the two aforementioned ambient light distributions. The comparison results are shown in Figure 7. It can be seen that the network trained with the dataset generated using the modified distribution performs better, especially on dark underwater images.

4.2. Experimental Settings

The back-propagation algorithm and the stochastic gradient descent (SGD) algorithm are used to train our models.

For the T-network, all the training samples are resized to a common input size. The batch size is set to 8. The initial learning rate is 0.001 and decreases by 10% after every 1 k iterations. The optimization is stopped at 20 k iterations. In addition, we set the weight decay and momentum to 0.0001 and 0.9, respectively.

For the A-network, all the training samples are resized to the down-sampled input size. The batch size is set to 128. The initial learning rate is 0.001 and decreases by 10% after every 1 k iterations. The optimization is stopped at 20 k iterations. In addition, we set the weight decay and momentum to 0.005 and 0.9, respectively.
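The step-wise learning rate schedule can be written compactly. The sketch below assumes that "decreases by 10%" means the rate is multiplied by 0.9 at every 1 k-iteration boundary; if it instead meant a reduction to 10% of the previous value, `decay` would be 0.1. The function name `learning_rate` is illustrative.

```python
def learning_rate(iteration, base_lr=0.001, decay=0.9, step=1000):
    """Step decay: multiply the rate by `decay` every `step` iterations.

    iteration: zero-based training iteration.
    """
    return base_lr * decay ** (iteration // step)
```

Under this reading, the rate stays at 0.001 for the first 1000 iterations, drops to 0.0009 for the next 1000, and has been reduced 19 times by the last iteration before the 20 k stopping point.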

The training and validation losses of the A-network and the T-network over the training process are shown in Figure 8a,b, respectively. Note that training the T-network requires more memory than training the A-network because the input/output size of the T-network is much larger. Due to the memory limits of the device, a much smaller batch size is used in T-network training, which leads to continuous fluctuation in the training loss curve of the T-network, as depicted in Figure 8b. Since the validation loss curve of the T-network converges after 15 k iterations, we stop the training process at 20 k iterations.

4.3. Comparisons on Synthetic Images

To evaluate performance, we construct a testing dataset of synthesized underwater images. We select 50 images and their depth maps from the NYU Depth dataset [39] and the RESIDE dataset [40] (different from those used for training) to synthesize 50 underwater images with different scenes, color deviation, and contrast loss. Then, we evaluate the performance of the proposed method on the testing dataset and compare it with several state-of-the-art methods.

Figure 9 shows visual comparisons of different approaches on the testing dataset. As observed from Figure 9, the method of Drews et al. [20] enhances image contrast to a certain extent, but sometimes fails to rectify color deviation. The method of Li et al. [24] improves the visibility, but tends to produce over-enhanced results compared to the ground truth. The method of Berman et al. [25] produces images with better clarity; nevertheless, the results still suffer from some color deviation. Like our method, that of Shin et al. [29] also uses a CNN to restore underwater images, but the results often exhibit low contrast, fewer details, and color shift. By contrast, our method not only improves the contrast of the degraded underwater images, but also corrects the color deviation properly. Moreover, the images recovered using our method are visually closer to the ground truth clear images than those produced by the competing methods.

It should be noted that, since [20,24,29] are all patch-based, they have to use some edge-preserving filters, such as guided filters, to refine the estimated local transmission in order to suppress the block artifacts. In [25], guided filtering is also adopted to regularize the transmission map because a binary classification of the pixels often results in abrupt discontinuities in the transmission map.

In contrast to these state-of-the-art approaches, we carefully design a CNN structure (namely, the T-network) with multi-scale estimation and cross-layer connection for transmission map estimation, which preserves the edge features of the transmission map well. Moreover, our method is designed for full-size input images, not just local patches. Our network also benefits from the training dataset produced by the new synthesizing method, which can effectively simulate images captured in various underwater environments. As a consequence, our method obtains a more accurate estimate of the transmission map and thereby restores images with clear edges. As shown in Figure 9f–h, the difference between our recovered images obtained with and without guided filtering is scarcely perceptible in most cases, and both are very close to the ground truth. Therefore, guided filtering is inessential for our method, as its functionality has been implicitly realized by the proposed T-network.

Furthermore, we perform a quantitative comparison against the above-mentioned state-of-the-art restoration methods [20,24,25,29] using the Peak Signal-to-Noise Ratio (PSNR), Structural Similarity (SSIM) [41], and the color difference formula CIEDE2000 [42] as evaluation metrics. Specifically, a larger value of PSNR or SSIM, or a smaller value of CIEDE2000, indicates that the recovered image is closer to the corresponding ground truth in terms of statistical error, image structure, and color information, respectively.
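As a concrete reference for the first of these metrics, PSNR is defined from the mean squared error (MSE) between the restored image and the ground truth as $10 \log_{10}(\text{peak}^2 / \text{MSE})$. The NumPy sketch below assumes images scaled to [0, 1], so the peak value is 1; the function name `psnr` is illustrative. SSIM and CIEDE2000 are more involved and are not reproduced here.

```python
import numpy as np

def psnr(restored, reference, peak=1.0):
    """Peak Signal-to-Noise Ratio in decibels.

    restored, reference: arrays of the same shape.
    peak: maximum possible intensity (1.0 for images in [0, 1]).
    """
    restored = np.asarray(restored, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    mse = np.mean((restored - reference) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(peak ** 2 / mse)
```

A uniform error of 0.1 on every pixel gives an MSE of 0.01 and therefore a PSNR of 20 dB; halving the error raises the PSNR by about 6 dB.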

Table 2 summarizes the average values of SSIM, PSNR, and CIEDE2000 for the restored images obtained by different methods. It can be seen that our method, without and with guided filtering (see Ours and Ours+GF in Table 2), outperforms the competing methods on all three metrics by a wide margin. It also shows that guided filtering is inessential for our method.

Finally, we compare the aforementioned restoration methods in terms of the accuracy of the estimated transmission and global ambient light. Figure 10 illustrates the restored images and the corresponding estimated blue-channel transmission and ambient light for the different methods. For convenience of comparison, we visualize each ambient light as a colored bar. Note that [24,25] and our method first estimate a single-channel transmission and then compute the others; on the other hand, the authors in [20,29] do not take into account the difference between the transmissions of the three channels. As such, in Figure 10, the comparison is made only regarding the blue channel transmission.

The accuracy of transmission and ambient light estimation determines the quality of image restoration. In Figure 10b–d, it can be observed that the results of the prior-based restoration methods [20,24,25] depend critically on the validity of the priors. Specifically, the methods of Drews et al. [20], Li et al. [24], and Berman et al. [25] produce estimation errors in some areas of the transmission map due to the failure of their adopted priors, which are based on color information. In addition, their estimated ambient light is inaccurate when no pixel in the underwater scene has the same value as the actual ambient light, or when the ambient light is estimated at a wrong location due to the failure of the adopted priors. The deep-learning-based method proposed by Shin et al. [29] uses small local patches instead of full-size images as training samples; as such, it exploits only local information and ignores the global structure. Owing to the limitations of the training data as well as the network architecture, this method produces relatively large estimation errors, especially regarding the ambient light, as illustrated in Figure 10e. Compared with the above-mentioned methods, our method produces more accurate estimation results. In particular, our estimated transmission maps reflect the depth relationship between objects more correctly, and our estimated ambient light has less color distortion, as shown in Figure 10f–g.

For further comparison, we compute the Mean Squared Error (MSE) of the estimated global ambient light and the SSIM of the estimated transmission maps produced by different methods. It can be seen from the quantitative results shown in Figure 10 that our method gives the most accurate estimation of both the transmission map and the ambient light.

4.4. Comparisons on Real-World Images

In this part, we conduct several comparisons on real-world underwater images in order to verify the effectiveness of the proposed method. We choose a number of challenging real-world images with diverse underwater scenes and color shift that are commonly used for qualitative comparisons. Figure 11 compares the recovered results of our method against several state-of-the-art methods on real-world images, including the four restoration-based methods [20,24,25,29] mentioned in the previous subsection and two representative enhancement-based methods [12,13].

As shown in Figure 11b,c,e, the methods in [12,13,24] succeed in enhancing the contrast of the recovered images and enriching the details, but they tend to over-enhance and produce reddish results. The method in [20] can only increase the contrast of images but fails to correct color deviation, as depicted in Figure 11d, because it assumes that the attenuations of the three color channels are the same, which is invalid in underwater environments. According to Figure 11f, the method in [25] performs well on contrast enhancement and color correction, but it generates over-enhanced and over-saturated results when the ambient light is significantly brighter than the scene. It can be seen from Figure 11g that the results of [29] tend to have poor visibility with low contrast and hue distortion due to the inaccurately estimated transmission and ambient light. In contrast, our method produces images with natural color, enhanced contrast, and visually pleasing visibility, as illustrated in Figure 11h. Therefore, although the proposed network is trained using synthetic underwater images, it is capable of delivering more satisfactory restoration results on real-world degraded underwater images than the state-of-the-art methods.

In addition, we use two no-reference image quality metrics to objectively evaluate the recovered underwater images obtained by different methods. One is the Blind/Referenceless Image Spatial Quality Evaluator (BRISQUE) [43], a metric for evaluating possible losses of naturalness in an image caused by distortions. BRISQUE scores range from 0 to 100, and a score closer to 0 represents better quality. The other is the Underwater Color Image Quality Evaluation (UCIQE) [44], a metric that quantifies the nonuniform color cast and low contrast that characterize underwater images. A higher UCIQE score represents better image quality. We use 50 real-world images from diverse underwater environments as a test dataset. Table 3 lists the average BRISQUE scores and UCIQE values for the different methods. As shown in Table 3, the proposed method outperforms the others.