Using Digital Cameras on an Unmanned Aerial Vehicle to Derive Optimum Color Vegetation Indices for Leaf Nitrogen Concentration Monitoring in Winter Wheat

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Site and Experimental Design

2.2. Data Acquisition and Processing

2.2.1. Color Images from Unmanned Aerial Vehicle (UAV)

2.2.2. Determination of Leaf N Concentration (LNC)

2.2.3. Derivation of Vegetation Indices (VIs)

2.3. Data Analysis and Evaluation

3. Results

3.1. Changes of Digital Number (DN) Values in Different Channels

3.2. Leaf N Concentration (LNC) Estimation Model Construction and Validation

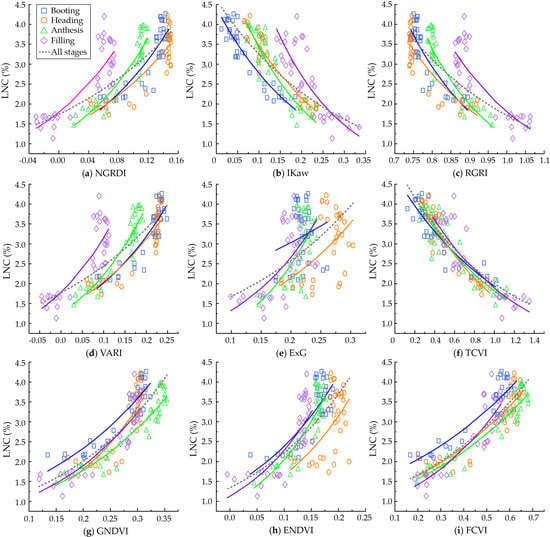

3.2.1. Quantitative Relationships between Leaf N Concentration (LNC) and Vegetation Indices (VIs) at Different Growth Stages

3.2.2. Validation of the Leaf N Concentration (LNC) Models for Wheat

3.3. Saturation Sensitivity of Vegetation Indices (VIs) at Different Leaf N Concentrations (LNCs)

3.4. Applicability of the Leaf N Concentration (LNC) Models under Different Treatments

4. Discussion

4.1. Performance of the Vegetation Indices (VIs) Derived from Digital Imagery in Estimating Wheat Leaf N Concentration (LNC)

4.2. Accuracy and Universality of Leaf N Concentration (LNC) Estimation Models in Wheat

4.3. Capability of Commercial Digital Cameras for Wheat Leaf N Concentration (LNC) Estimation

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Experiment | Year | Wheat Varieties | N Application Rates (kg/ha) | Plot Area (m²) | Planting Density (plants/ha) | Sampling Dates |
|---|---|---|---|---|---|---|
| Exp. 1 | 2013–2014 | V1: Yangmai 8; V2: Shengxuan 6 | N0: 0; N1: 150; N2: 300 | 7 × 5 | D1: 3.0 × 10⁶; D2: 1.5 × 10⁶ | 9, 15, 23 April; 6 May |
| Exp. 2 | 2014–2015 | V1: Yangmai 8; V2: Shengxuan 6 | N0: 0; N1: 150; N2: 300 | 7 × 5 | D1: 2.4 × 10⁶; D2: 1.5 × 10⁶ | 8, 17, 25 April; 6 May |
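The design table lists two varieties, three N rates and two planting densities per experiment. Assuming these factors were fully crossed (the plot layout and number of replicates are not given in this excerpt), each experiment comprises 2 × 3 × 2 = 12 treatment combinations. A minimal Python sketch that enumerates them; the constant and function names are illustrative, and the densities shown are those of Exp. 1:

```python
from itertools import product

# Factor levels transcribed from the design table (planting densities of Exp. 1).
VARIETIES = ["Yangmai 8", "Shengxuan 6"]
N_RATES_KG_HA = [0, 150, 300]
DENSITIES_PLANTS_HA = [3.0e6, 1.5e6]


def treatment_combinations(varieties, n_rates, densities):
    """Return every (variety, N rate, density) tuple, assuming a full factorial layout."""
    return list(product(varieties, n_rates, densities))


combos = treatment_combinations(VARIETIES, N_RATES_KG_HA, DENSITIES_PLANTS_HA)
for variety, n_rate, density in combos:
    print(f"{variety:12s} N={n_rate:3d} kg/ha  density={density:.1e} plants/ha")
```

For Exp. 2, the first density level would be 2.4 × 10⁶ plants/ha instead.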

| Parameter | Value |
|---|---|
| Weight (without batteries) | 2050 g |
| Size | 73 (width) × 73 (length) × 36 (height) cm |
| Battery Weight (4S/5000) | 520 g |
| Maximum payload | 2500 g |
| Flight duration | 8–41 min |
| Temperature range | −5 °C to 35 °C |

| Parameter | RGB Camera | CIR Camera |
|---|---|---|
| Blue Channel | Visible blue light | Visible blue light |
| Green Channel | Visible green light | Visible green light |
| Red Channel | Visible red light | n/a |
| NIR Channel | n/a | 670–770 nm |
| Geometric Resolution | 5760 × 3840 pixels | 4000 × 3000 pixels |
| Focal Length | 24 mm | 4 mm |

| Experiment | Date | Growth Stage | RGB Imagery | CIR Imagery |
|---|---|---|---|---|
| Exp. 1 (2014) | 9 April | Booting | ✘ | ✔ |
| | 15 April | Heading | ✘ | ✔ |
| | 23 April | Anthesis | ✘ | ✔ |
| | 6 May | Filling | ✘ | ✔ |
| Exp. 2 (2015) | 8 April | Booting | ✔ | ✔ |
| | 17 April | Heading | ✔ | ✔ |
| | 25 April | Anthesis | ✔ | ✔ |
| | 6 May | Filling | ✔ | ✔ |

| Camera | VI | Name | Formula |
|---|---|---|---|
| RGB | NGRDI | Normalized green red difference index | (G − R)/(G + R) |
| | IKaw | Kawashima index | (R − B)/(R + B) |
| | RGRI | Red green ratio index | R/G |
| | VARI | Visible atmospherically resistant index | (G − R)/(G + R − B) |
| | ExG | Excess green vegetation index | (2G − R − B)/(G + R + B) |
| | TCVI ¹ | True color vegetation index | 1.4 × (2R − 2B)/(2R − G − 2B + 255 × 0.4) |
| CIR | GNDVI | Green normalized difference vegetation index | (NIR − G)/(NIR + G) |
| | ENDVI | Enhanced normalized difference vegetation index | (NIR + G − 2B)/(NIR + G + 2B) |
| | FCVI ² | False color vegetation index | 1.5 × (2NIR + B − 2G)/(2G + 2B − 2NIR + 255 × 0.5) |
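The formulas in the vegetation index table operate on per-channel digital numbers (DN). The following Python sketch evaluates all nine indices for one plot, assuming the inputs are mean 8-bit DN values (0–255, as the 255 constant in TCVI and FCVI suggests) already extracted from the plot region; how plots were segmented is not described in this excerpt. The function names are hypothetical, and the formulas are transcribed exactly as tabulated.

```python
def rgb_indices(r: float, g: float, b: float) -> dict:
    """Color indices from mean red, green and blue DN values of the RGB camera."""
    return {
        "NGRDI": (g - r) / (g + r),
        "IKaw": (r - b) / (r + b),
        "RGRI": r / g,
        "VARI": (g - r) / (g + r - b),
        "ExG": (2 * g - r - b) / (g + r + b),
        # TCVI: adjustment constant L = 0.4, scaled by 255 for 8-bit DN values
        "TCVI": 1.4 * (2 * r - 2 * b) / (2 * r - g - 2 * b + 255 * 0.4),
    }


def cir_indices(nir: float, g: float, b: float) -> dict:
    """Indices from mean NIR, green and blue DN values of the CIR camera."""
    return {
        "GNDVI": (nir - g) / (nir + g),
        "ENDVI": (nir + g - 2 * b) / (nir + g + 2 * b),
        # FCVI as listed in the formula table, adjustment constant L = 0.5
        "FCVI": 1.5 * (2 * nir + b - 2 * g) / (2 * g + 2 * b - 2 * nir + 255 * 0.5),
    }


if __name__ == "__main__":
    # Illustrative DN values, not measurements from the study.
    print(rgb_indices(60, 90, 50))
    print(cir_indices(120, 90, 50))
```

Indices whose denominators contain a difference (VARI, TCVI, FCVI) can become unstable when that denominator approaches zero, so a guard against near-zero denominators may be worth adding in practice.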

| Growth Stage | Statistic | NGRDI (RGB) | IKaw (RGB) | RGRI (RGB) | VARI (RGB) | ExG (RGB) | TCVI (RGB) | GNDVI (CIR) | ENDVI (CIR) | FCVI (CIR) |
|---|---|---|---|---|---|---|---|---|---|---|
| Booting | Function | y = 1.19 exp(8x) | y = 4.42 exp(−4.68x) | y = 141 exp(−4.84x) | y = 1.28 exp(4.57x) | y = 1.81 exp(2.58x) | y = 4.73 exp(−0.89x) | y = 0.99 exp(4.3x) | y = 1.36 exp(5.6x) | y = 1.5 exp(1.56x) |
| | R² | 0.752 | 0.880 | 0.743 | 0.818 | 0.032 | 0.854 | 0.853 | 0.664 | 0.866 |
| | RRMSE (%) | 11.7 | 8.3 | 11.9 | 10.1 | 22.5 | 9.1 | 9.1 | 13.8 | 9.5 |
| Heading | Function | y = 1.31 exp(6.56x) | y = 5.6 exp(−4.79x) | y = 64.7 exp(−3.95x) | y = 1.35 exp(4.18x) | y = 0.79 exp(5.01x) | y = 5.62 exp(−1.21x) | y = 0.52 exp(6.25x) | y = 0.87 exp(6.42x) | y = 1.15 exp(1.81x) |
| | R² | 0.792 | 0.918 | 0.782 | 0.841 | 0.284 | 0.911 | 0.706 | 0.313 | 0.822 |
| | RRMSE (%) | 12.9 | 8.6 | 13.1 | 11.2 | 23.8 | 8.9 | 14.1 | 22.9 | 11.5 |
| Anthesis | Function | y = 1.21 exp(9.26x) | y = 6.19 exp(−5.95x) | y = 237 exp(−5.31x) | y = 1.25 exp(5.73x) | y = 0.4 exp(9.17x) | y = 5.11 exp(−1.04x) | y = 0.71 exp(4.63x) | y = 0.95 exp(7.67x) | y = 1.1 exp(1.79x) |
| | R² | 0.875 | 0.825 | 0.868 | 0.892 | 0.458 | 0.855 | 0.889 | 0.811 | 0.911 |
| | RRMSE (%) | 10.6 | 11.8 | 10.8 | 10.0 | 21.8 | 11.5 | 9.7 | 12.9 | 9.0 |
| Filling | Function | y = 1.79 exp(8.34x) | y = 9.21 exp(−6.11x) | y = 143 exp(−4.37x) | y = 1.78 exp(5.71x) | y = 0.66 exp(6.98x) | y = 5.56 exp(−1.08x) | y = 0.65 exp(5.34x) | y = 1.13 exp(7.02x) | y = 0.87 exp(2.45x) |
| | R² | 0.771 | 0.746 | 0.769 | 0.779 | 0.426 | 0.813 | 0.821 | 0.648 | 0.849 |
| | RRMSE (%) | 18.5 | 18.6 | 18.4 | 18.1 | 27.5 | 16.3 | 15.2 | 20.0 | 15.1 |
| All | Function | y = 1.74 exp(5.21x) | y = 4.61 exp(−3.52x) | y = 34.4 exp(−3x) | y = 1.76 exp(3.27x) | y = 1.1 exp(4.27x) | y = 5.09 exp(−1.02x) | y = 0.77 exp(4.77x) | y = 1.34 exp(5.08x) | y = 1.15 exp(1.85x) |
| | R² | 0.631 | 0.659 | 0.634 | 0.651 | 0.252 | 0.852 | 0.744 | 0.507 | 0.792 |
| | RRMSE (%) | 18.7 | 17.7 | 18.6 | 18.1 | 26.4 | 12.1 | 15.8 | 21.7 | 14.0 |
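Each entry in the regression table has the form y = a·exp(bx). Assuming, as the section headings indicate, that y is LNC and x is the VI value, the sketch below shows how such a model can be applied and how its two coefficients might be estimated. The fitting method is not stated in this excerpt; the sketch uses ordinary least squares on ln(y), which is one common choice but can yield slightly different coefficients than a direct nonlinear least-squares fit. All names are illustrative.

```python
import math


def fit_exponential(x: list, y: list) -> tuple:
    """Fit y = a * exp(b * x) by ordinary least squares on ln(y) = ln(a) + b * x."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two paired observations")
    if any(v <= 0 for v in y):
        raise ValueError("y must be positive to take its logarithm")
    ln_y = [math.log(v) for v in y]
    n = len(x)
    mean_x = sum(x) / n
    mean_ln_y = sum(ln_y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((xi - mean_x) * (li - mean_ln_y) for xi, li in zip(x, ln_y))
    b = sxy / sxx                            # slope in log space
    a = math.exp(mean_ln_y - b * mean_x)     # back-transformed intercept
    return a, b


def predict(a: float, b: float, x: float) -> float:
    """Evaluate y = a * exp(b * x)."""
    return a * math.exp(b * x)


# All-stage coefficients (a, b) copied from the "All" row of the table.
ALL_STAGE_MODELS = {
    "TCVI": (5.09, -1.02),
    "GNDVI": (0.77, 4.77),
    "FCVI": (1.15, 1.85),
}

if __name__ == "__main__":
    a, b = ALL_STAGE_MODELS["TCVI"]
    print(predict(a, b, 0.5))  # estimated LNC for a hypothetical TCVI value of 0.5
```

Stage-specific models, as in the table rows, would come from calling `fit_exponential` separately on each growth stage's subset of plots.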

| Camera | VI | Cross-Validation R² | Cross-Validation RRMSE (%) | Independent Validation R² | Independent Validation RRMSE (%) |
|---|---|---|---|---|---|
| RGB | NGRDI | 0.591 | 18.24 | n/a | n/a |
| | IKaw | 0.618 | 17.79 | n/a | n/a |
| | RGRI | 0.608 | 18.66 | n/a | n/a |
| | VARI | 0.603 | 18.37 | n/a | n/a |
| | ExG | 0.150 | 27.23 | n/a | n/a |
| | TCVI | 0.848 | 11.47 | n/a | n/a |
| CIR | GNDVI | 0.720 | 16.13 | 0.523 | 23.66 |
| | ENDVI | 0.492 | 20.62 | 0.207 | 37.99 |
| | FCVI | 0.756 | 14.18 | 0.627 | 13.61 |
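The validation table reports R² and RRMSE, but their exact definitions are not given in this excerpt. The sketch below assumes the conventional ones: RRMSE as the root-mean-square error divided by the mean observed LNC, in percent, and R² as the coefficient of determination 1 − SS_res/SS_tot. Some studies instead report the squared Pearson correlation between observed and predicted values, which can differ from this. Function names are illustrative.

```python
import math


def rrmse_percent(observed: list, predicted: list) -> float:
    """Relative RMSE in percent: RMSE divided by the mean observed value, times 100."""
    n = len(observed)
    if n == 0 or n != len(predicted):
        raise ValueError("observed and predicted must be non-empty and equal length")
    rmse = math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n)
    return 100.0 * rmse / (sum(observed) / n)


def r_squared(observed: list, predicted: list) -> float:
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    n = len(observed)
    if n == 0 or n != len(predicted):
        raise ValueError("observed and predicted must be non-empty and equal length")
    mean_obs = sum(observed) / n
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    if ss_tot == 0:
        raise ValueError("observed values must not all be equal")
    return 1.0 - ss_res / ss_tot
```

For cross-validation, one option is to apply both functions to the pooled out-of-fold predictions; the fold scheme used in the study is not specified here.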

| Treatment | Level | RGB Imagery | CIR Imagery |
|---|---|---|---|
| Variety | Yangmai 8 | 13.95 | 14.69 |
| | Shengxuan 6 | 9.88 | 13.34 |
| N rate (kg/ha) | 0 | 13.01 | 16.41 |
| | 150 | 11.23 | 12.61 |
| | 300 | 11.64 | 13.35 |
| Planting density (plants/ha) | 3.0 × 10⁶ | 9.01 | 14.17 |
| | 1.5 × 10⁶ | 14.49 | 13.87 |

| Camera | VI | a1 | a2 | a3 | a4 | a5 | a6 | R channel | G channel | B channel | L |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Normal color (RGB) | NGRDI | −1 | 1 | 0 | 1 | 1 | 0 | DNred | DNgreen | n/a | 0 |
| | IKaw | 1 | 0 | −1 | 1 | 0 | 1 | DNred | n/a | DNblue | 0 |
| | RGRI | 1 | 0 | 0 | 0 | 1 | 0 | DNred | DNgreen | n/a | 0 |
| | VARI | −1 | 1 | 0 | 1 | 1 | −1 | DNred | DNgreen | DNblue | 0 |
| | ExG | −1 | 2 | −1 | 1 | 1 | 1 | DNred | DNgreen | DNblue | 0 |
| | TCVI | 2 | 0 | −2 | 2 | −1 | −2 | DNred | DNgreen | DNblue | 0.4 |
| Color near-infrared (CIR) | GNDVI | 1 | −1 | 0 | 1 | 1 | 0 | DNnir | DNgreen | n/a | 0 |
| | ENDVI | 1 | 1 | −2 | 1 | 1 | 2 | DNnir | DNgreen | DNblue | 0 |
| | FCVI | 1 | −2 | 1 | −2 | 2 | 2 | DNnir | DNgreen | DNblue | 0.5 |
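Read against the formula table (multipliers 1.4 = 1 + 0.4 and 1.5 = 1 + 0.5, offsets 255 × L), the coefficient table appears to encode all nine indices in one template. This is a reconstruction from the two tables, not an equation quoted from the paper:

$$\mathrm{VI} = (1 + L)\,\frac{a_1 R + a_2 G + a_3 B}{a_4 R + a_5 G + a_6 B + 255\,L}$$

Here R, G and B are the DN values listed in the channel columns, with DNnir occupying the R slot for the CIR camera. The two tables disagree on the FCVI numerator (a1 = 1 here, versus 2NIR in the formula table), so the sketch below follows the coefficient rows and its checks cover only the eight indices on which both tables agree. The names `COEFFS` and `generalized_vi` are hypothetical.

```python
# Coefficient rows (a1, a2, a3, a4, a5, a6, L) transcribed from the coefficient table.
COEFFS = {
    "NGRDI": (-1, 1, 0, 1, 1, 0, 0.0),
    "IKaw": (1, 0, -1, 1, 0, 1, 0.0),
    "RGRI": (1, 0, 0, 0, 1, 0, 0.0),
    "VARI": (-1, 1, 0, 1, 1, -1, 0.0),
    "ExG": (-1, 2, -1, 1, 1, 1, 0.0),
    "TCVI": (2, 0, -2, 2, -1, -2, 0.4),
    "GNDVI": (1, -1, 0, 1, 1, 0, 0.0),
    "ENDVI": (1, 1, -2, 1, 1, 2, 0.0),
    "FCVI": (1, -2, 1, -2, 2, 2, 0.5),
}


def generalized_vi(coeffs: tuple, r: float, g: float, b: float) -> float:
    """Evaluate (1 + L)(a1 R + a2 G + a3 B) / (a4 R + a5 G + a6 B + 255 L).

    For CIR indices, pass the NIR DN value as `r`.
    """
    a1, a2, a3, a4, a5, a6, l_adj = coeffs
    numerator = a1 * r + a2 * g + a3 * b
    denominator = a4 * r + a5 * g + a6 * b + 255.0 * l_adj
    return (1.0 + l_adj) * numerator / denominator


if __name__ == "__main__":
    # Illustrative DN values, not measurements from the study.
    for name in ("NGRDI", "IKaw", "RGRI", "VARI", "ExG", "TCVI"):
        print(name, generalized_vi(COEFFS[name], 60, 90, 50))
    for name in ("GNDVI", "ENDVI", "FCVI"):
        print(name, generalized_vi(COEFFS[name], 120, 90, 50))
```

A single template like this makes it straightforward to screen new coefficient combinations without writing a separate function for each index.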

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jiang, J.; Cai, W.; Zheng, H.; Cheng, T.; Tian, Y.; Zhu, Y.; Ehsani, R.; Hu, Y.; Niu, Q.; Gui, L.; et al. Using Digital Cameras on an Unmanned Aerial Vehicle to Derive Optimum Color Vegetation Indices for Leaf Nitrogen Concentration Monitoring in Winter Wheat. Remote Sens. 2019, 11, 2667. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11222667


Chicago/Turabian Style: Jiang, Jiale, Weidi Cai, Hengbiao Zheng, Tao Cheng, Yongchao Tian, Yan Zhu, Reza Ehsani, Yongqiang Hu, Qingsong Niu, Lijuan Gui, et al. 2019. "Using Digital Cameras on an Unmanned Aerial Vehicle to Derive Optimum Color Vegetation Indices for Leaf Nitrogen Concentration Monitoring in Winter Wheat." Remote Sensing 11, no. 22: 2667. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11222667