Inundation Extent Mapping by Synthetic Aperture Radar: A Review

Abstract

1. Introduction

2. Techniques to Produce SAR Inundation Maps

2.1. Principles

2.2. Error Sources

3. Relative Strengths and Limitations of Existing Techniques

3.1. Approaches

3.1.1. Supervised Versus Unsupervised Methods

3.1.2. Threshold Determination

3.1.3. Segmentation

3.1.4. Change Detection

3.1.5. Visual Inspection/Manual Editing Versus Automated Process

3.1.6. Unobstructed, Beneath-Vegetation Flood Versus Urban Flood

3.2. Selected Studies of Combined Approaches

4. Summary

Author Contributions

Funding

Conflicts of Interest

References

- Merwade, V.; Rajib, A.; Liu, Z. An integrated approach for flood inundation modeling on large scales. In Bridging Science and Policy Implication for Managing Climate Extremes; Jung, H.-S., Wang, B., Eds.; World Scientific Publication Company: Singapore, 2018; pp. 133–155. [Google Scholar]

- Wing, O.E.; Bates, P.D.; Smith, A.M.; Sampson, C.C.; Johnson, K.A.; Fargione, J.; Morefield, P. Estimates of present and future flood risk in the conterminous united states. Environ. Res. Lett. 2018, 13, 1748–9326. [Google Scholar] [CrossRef]

- Yamazaki, D.; Kanae, S.; Kim, H.; Oki, T. A physically based description of floodplain inundation dynamics in a global river routing model. Water Resour. Res. 2011, 47. [Google Scholar] [CrossRef] [Green Version]

- Hardesty, S.; Shen, X.; Nikolopoulos, E.; Anagnostou, E. A numerical framework for evaluating flood inundation risk under different dam operation scenarios. Water 2018, 10, 1798. [Google Scholar] [CrossRef]

- Shen, X.; Hong, Y.; Zhang, K.; Hao, Z. Refining a distributed linear reservoir routing method to improve performance of the crest model. J. Hydrol. Eng. 2016, 22. [Google Scholar] [CrossRef]

- Shen, X.; Hong, Y.; Anagnostou, E.N.; Zhang, K.; Hao, Z. Chapter 7 an advanced distributed hydrologic framework-the development of crest. In Hydrologic Remote Sensing and Capacity Building, Chapter; Hong, Y., Zhang, Y., Khan, S.I., Eds.; CRC Press: Boca Raton, FL, USA, 2016; pp. 127–138. [Google Scholar]

- Shen, X.; Anagnostou, E.N. A framework to improve hyper-resolution hydrologic simulation in snow-affected regions. J. Hydrol. 2017, 552, 1–12. [Google Scholar] [CrossRef]

- Afshari, S.; Tavakoly, A.A.; Rajib, M.A.; Zheng, X.; Follum, M.L.; Omranian, E.; Fekete, B.M. Comparison of new generation low-complexity flood inundation mapping tools with a hydrodynamic model. J. Hydrol. 2018, 556, 539–556. [Google Scholar] [CrossRef]

- Horritt, M.; Bates, P. Evaluation of 1d and 2d numerical models for predicting river flood inundation. J. Hydrol. 2002, 268, 87–99. [Google Scholar] [CrossRef]

- Liu, Z.; Merwade, V.; Jafarzadegan, K. Investigating the role of model structure and surface roughness in generating flood inundation extents using one-and two-dimensional hydraulic models. J. Flood Risk Manag. 2018, 12, e12347. [Google Scholar] [CrossRef]

- Zheng, X.; Lin, P.; Keane, S.; Kesler, C.; Rajib, A. Nhdplus-Hand Evaluation; Consortium of Universities for the Advancement of Hydrologic Science, Inc.: Boston, MA, USA, 2016; p. 122. [Google Scholar]

- Dodov, B.; Foufoula-Georgiou, E. Floodplain morphometry extraction from a high-resolution digital elevation model: A simple algorithm for regional analysis studies. Geosci. Remote Sens. Lett. IEEE 2006, 3, 410–413. [Google Scholar] [CrossRef]

- Nardi, F.; Biscarini, C.; Di Francesco, S.; Manciola, P.; Ubertini, L. Comparing a large-scale dem-based floodplain delineation algorithm with standard flood maps: The tiber river basin case study. Irrig. Drain. 2013, 62, 11–19. [Google Scholar] [CrossRef]

- Shen, X.; Vergara, H.J.; Nikolopoulos, E.I.; Anagnostou, E.N.; Hong, Y.; Hao, Z.; Zhang, K.; Mao, K. Gdbc: A tool for generating global-scale distributed basin morphometry. Environ. Model. Softw. 2016, 83, 212–223. [Google Scholar] [CrossRef]

- Shen, X.; Anagnostou, E.N.; Mei, Y.; Hong, Y. A global distributed basin morphometric dataset. Sci. Data 2017, 4, 160124. [Google Scholar] [CrossRef] [Green Version]

- Shen, X.; Mei, Y.; Anagnostou, E.N. A comprehensive database of flood events in the contiguous united states from 2002 to 2013. Bull. Am. Meteorol. Soc. 2017, 98, 1493–1502. [Google Scholar] [CrossRef]

- Cohen, S.; Brakenridge, G.R.; Kettner, A.; Bates, B.; Nelson, J.; McDonald, R.; Huang, Y.F.; Munasinghe, D.; Zhang, J. Estimating floodwater depths from flood inundation maps and topography. JAWRA J. Am. Water Resour. Assoc. 2017, 54, 847–858. [Google Scholar] [CrossRef]

- Nguyen, N.Y.; Ichikawa, Y.; Ishidaira, H. Estimation of inundation depth using flood extent information and hydrodynamic simulations. Hydrol. Res. Lett. 2016, 10, 39–44. [Google Scholar] [CrossRef]

- Cian, F.; Marconcini, M.; Ceccato, P.; Giupponi, C.J.N.H.; Sciences, E.S. Flood depth estimation by means of high-resolution sar images and lidar data. Nat. Hazards Earth Syst. Sci. 2018, 18, 3063–3084. [Google Scholar] [CrossRef]

- Shen, X.; Anagnostou, E. Rapid sar-based flood-inundation extent/depth estimation. In Proceedings of the AGU Fall Meeting 2018, Washington, DC, USA, 11–15 December 2018. [Google Scholar]

- Jones, J. The us geological survey dynamic surface water extent product evaluation strategy. In Proceedings of the EGU General Assembly Conference Abstracts, Vienna, Austria, 17–22 April 2016; Volume 18, p. 8197. [Google Scholar]

- Jones, J.W. Efficient wetland surface water detection and monitoring via landsat: Comparison with in situ data from the everglades depth estimation network. Remote Sens. 2015, 7, 12503–12538. [Google Scholar] [CrossRef]

- Heimhuber, V.; Tulbure, M.G.; Broich, M. Modeling multidecadal surface water inundation dynamics and key drivers on large river basin scale using multiple time series of earth-observation and river flow data. Water Resour. Res. 2017, 53, 1251–1269. [Google Scholar] [CrossRef]

- Jones, J.W. Improved automated detection of subpixel-scale inundation—Revised dynamic surface water extent (dswe) partial surface water tests. Remote Sens. 2019, 11, 374. [Google Scholar] [CrossRef]

- Papa, F.; Prigent, C.; Aires, F.; Jimenez, C.; Rossow, W.; Matthews, E. Interannual variability of surface water extent at the global scale, 1993–2004. J. Geophys. Res. Atmos. 2010, 115. [Google Scholar] [CrossRef] [Green Version]

- Prigent, C.; Papa, F.; Aires, F.; Rossow, W.B.; Matthews, E. Global inundation dynamics inferred from multiple satellite observations, 1993–2000. J. Geophys. Res. Atmos. 2007, 112. [Google Scholar] [CrossRef] [Green Version]

- Aires, F.; Papa, F.; Prigent, C.; Crétaux, J.-F.; Berge-Nguyen, M. Characterization and space–time downscaling of the inundation extent over the inner niger delta using giems and modis data. J. Hydrometeorol. 2014, 15, 171–192. [Google Scholar] [CrossRef]

- Takbiri, Z.; Ebtehaj, A.M.; Foufoula-Georgiou, E.J. A multi-sensor data-driven methodology for all-sky passive microwave inundation retrieval. arXiv, 2018; arXiv:1807.03803. [Google Scholar]

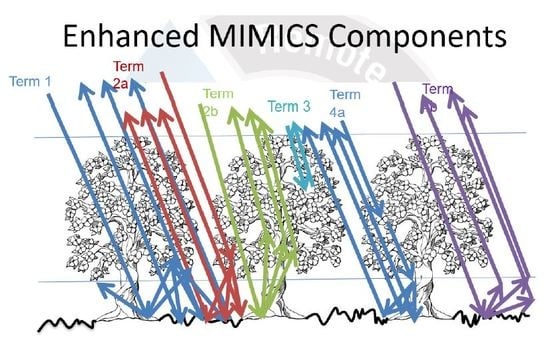

- Shen, X.; Hong, Y.; Qin, Q.; Chen, S.; Grout, T. A backscattering enhanced canopy scattering model based on mimics. In Proceedings of the American Geophysical Union (AGU) 2010 Fall Meeting, San Francisco, CA, USA, 13–17 December 2010. [Google Scholar]

- Ulaby, F.T.; Moore, R.K.; Fung, A.K. Microwave Remote Sensing: Active and Passive; Artech House Inc.: London, UK, 1986; Volume 3, p. 1848. [Google Scholar]

- Fung, A.K. Microwave Scattering and Emission Models and Their Applications; Artech House: Cambridge, UK; New York, NY, USA, 1994. [Google Scholar]

- Refice, A.; Capolongo, D.; Pasquariello, G.; D’Addabbo, A.; Bovenga, F.; Nutricato, R.; Lovergine, F.P.; Pietranera, L. Sar and insar for flood monitoring: Examples with cosmo-skymed data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2711–2722. [Google Scholar] [CrossRef]

- Pulvirenti, L.; Chini, M.; Pierdicca, N.; Boni, G. Use of sar data for detecting floodwater in urban and agricultural areas: The role of the interferometric coherence. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1532–1544. [Google Scholar] [CrossRef]

- Chini, M.; Papastergios, A.; Pulvirenti, L.; Pierdicca, N.; Matgen, P.; Parcharidis, I. Sar coherence and polarimetric information for improving flood mapping. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 7577–7580. [Google Scholar]

- Chini, M.; Pelich, R.; Pulvirenti, L.; Pierdicca, N.; Hostache, R.; Matgen, P. Sentinel-1 insar coherence to detect floodwater in urban areas: Houston and hurricane harvey as a test case. Remote Sens. 2019, 11, 107. [Google Scholar] [CrossRef]

- Schumann, G.J.-P.; Moller, D.K. Microwave remote sensing of flood inundation. Phys. Chem. Earthparts A/B/C 2015, 83, 84–95. [Google Scholar] [CrossRef]

- Gray, A.L.; Vachon, P.W.; Livingstone, C.E.; Lukowski, T.I. Synthetic aperture radar calibration using reference reflectors. IEEE Trans. Geosci. Remote Sens. 1990, 28, 374–383. [Google Scholar] [CrossRef]

- Lee, J.S.; Pottier, E. Polarimetric Radar Imaging: From Basics to Applications; CRC: Boca Raton, FL, USA, 2009. [Google Scholar]

- Gomez, L.; Ospina, R.; Frery, A.C. Statistical properties of an unassisted image quality index for sar imagery. Remote Sens. 2019, 11, 385. [Google Scholar] [CrossRef]

- Borghys, D.; Yvinec, Y.; Perneel, C.; Pizurica, A.; Philips, W. Supervised feature-based classification of multi-channel sar images. Pattern Recognit. Lett. 2006, 27, 252–258. [Google Scholar] [CrossRef]

- Kussul, N.; Shelestov, A.; Skakun, S. Grid system for flood extent extraction from satellite images. Earth Sci. Inform. 2008, 1, 105. [Google Scholar] [CrossRef]

- Pulvirenti, L.; Pierdicca, N.; Chini, M. Analysis of cosmo-skymed observations of the 2008 flood in myanmar. Ital. J. Remote Sens. 2010, 42, 79–90. [Google Scholar] [CrossRef]

- Song, Y.-S.; Sohn, H.-G.; Park, C.-H. Efficient water area classification using radarsat-1 sar imagery in a high relief mountainous environment. Photogramm. Eng. Remote Sens. 2007, 73, 285–296. [Google Scholar] [CrossRef]

- Townsend, P.A. Mapping seasonal flooding in forested wetlands using multi-temporal radarsat sar. Photogramm. Eng. Remote Sens. 2001, 67, 857–864. [Google Scholar]

- Töyrä, J.; Pietroniro, A.; Martz, L.W.; Prowse, T.D. A multi-sensor approach to wetland flood monitoring. Hydrol. Process. 2002, 16, 1569–1581. [Google Scholar] [CrossRef]

- Zhou, C.; Luo, J.; Yang, C.; Li, B.; Wang, S. Flood monitoring using multi-temporal avhrr and radarsat imagery. Photogramm. Eng. Remote Sens. 2000, 66, 633–638. [Google Scholar]

- Pulvirenti, L.; Pierdicca, N.; Chini, M.; Guerriero, L. An algorithm for operational flood mapping from synthetic aperture radar (sar) data using fuzzy logic. Nat. Hazards Earth Syst. Sci. 2011, 11, 529. [Google Scholar] [CrossRef]

- Yamada, Y. Detection of flood-inundated area and relation between the area and micro-geomorphology using sar and gis. In Proceedings of the IGARSS’01, IEEE 2001 International Conference on Geoscience and Remote Sensing Symposium, Sydney, NSW, Australia, 9–13 July 2001; pp. 3282–3284. [Google Scholar]

- Giustarini, L.; Hostache, R.; Matgen, P.; Schumann, G.J.-P.; Bates, P.D.; Mason, D.C. A change detection approach to flood mapping in urban areas using terrasar-x. Geosci. Remote Sens. IEEE Trans. 2013, 51, 2417–2430. [Google Scholar] [CrossRef]

- Hirose, K.; Maruyama, Y.; Do Van, Q.; Tsukada, M.; Shiokawa, Y. Visualization of flood monitoring in the lower reaches of the mekong river. In Proceedings of the 22nd Asian Conference on Remote Sensing, Singapore, 5–9 Novembers 2001; p. 9. [Google Scholar]

- Matgen, P.; Hostache, R.; Schumann, G.; Pfister, L.; Hoffmann, L.; Savenije, H. Towards an automated sar-based flood monitoring system: Lessons learned from two case studies. Phys. Chem. Earthparts A/B/C 2011, 36, 241–252. [Google Scholar] [CrossRef]

- Tan, Q.; Bi, S.; Hu, J.; Liu, Z. Measuring lake water level using multi-source remote sensing images combined with hydrological statistical data. In Proceedings of the IGARSS’04, 2004 IEEE International Conference on Geoscience and Remote Sensing Symposium, Anchorage, AK, USA, 20–24 September 2004; pp. 4885–4888. [Google Scholar]

- Martinis, S.; Twele, A.; Voigt, S. Towards operational near real-time flood detection using a split-based automatic thresholding procedure on high resolution terrasar-x data. Nat. Hazards Earth Syst. Sci. 2009, 9, 303–314. [Google Scholar] [CrossRef]

- Martinis, S.; Rieke, C. Backscatter analysis using multi-temporal and multi-frequency sar data in the context of flood mapping at river saale, germany. Remote Sens. 2015, 7, 7732–7752. [Google Scholar] [CrossRef]

- Bovolo, F.; Bruzzone, L. A split-based approach to unsupervised change detection in large-size multitemporal images: Application to tsunami-damage assessment. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1658–1670. [Google Scholar] [CrossRef]

- Baatz, M. Object-oriented and multi-scale image analysis in semantic networks. In Proceedings of the the 2nd International Symposium on Operationalization of Remote Sensing, Enschede, The Netherlands, 16–20 August 1999. [Google Scholar]

- Lu, J.; Giustarini, L.; Xiong, B.; Zhao, L.; Jiang, Y.; Kuang, G. Automated flood detection with improved robustness and efficiency using multi-temporal sar data. Remote Sens. Lett. 2014, 5, 240–248. [Google Scholar] [CrossRef]

- Pekel, J.-F.; Cottam, A.; Gorelick, N.; Belward, A.S. High-resolution mapping of global surface water and its long-term changes. Nature 2016, 540, 418–422. [Google Scholar] [CrossRef]

- Shen, X.; Anagnostou, E.N.; Allen, G.H.; Brakenridge, G.R.; Kettner, A.J. Near real-time nonobstructed flood inundation mapping by synthetic aperture radar. Remote Sens. Environ. 2019, 221, 302–335. [Google Scholar] [CrossRef]

- Horritt, M. A statistical active contour model for sar image segmentation. Image Vis. Comput. 1999, 17, 213–224. [Google Scholar] [CrossRef]

- Horritt, M.S.; Mason, D.C.; Luckman, A.J. Flood boundary delineation from synthetic aperture radar imagery using a statistical active contour model. Int. J. Remote Sens. 2001, 22, 2489–2507. [Google Scholar] [CrossRef]

- Heremans, R.; Willekens, A.; Borghys, D.; Verbeeck, B.; Valckenborgh, J.; Acheroy, M.; Perneel, C. Automatic detection of flooded areas on envisat/asar images using an object-oriented classification technique and an active contour algorithm. In Proceedings of the RAST’03, International Conference on Recent Advances in Space Technologies, Istanbul, Turkey, 20–22 November 2003; pp. 311–316. [Google Scholar]

- Pulvirenti, L.; Chini, M.; Pierdicca, N.; Guerriero, L.; Ferrazzoli, P. Flood monitoring using multi-temporal cosmo-skymed data: Image segmentation and signature interpretation. Remote Sens. Environ. 2011, 115, 990–1002. [Google Scholar] [CrossRef]

- Santoro, M.; Wegmüller, U. Multi-temporal sar metrics applied to map water bodies. In Proceedings of the 2012 IEEE International Conference on Geoscience and Remote Sensing Symposium (IGARSS), Munich, Germany, 22–27 July 2012; pp. 5230–5233. [Google Scholar]

- Bazi, Y.; Bruzzone, L.; Melgani, F. An unsupervised approach based on the generalized gaussian model to automatic change detection in multitemporal sar images. IEEE Trans. Geosci. Remote Sens. 2005, 43, 874–887. [Google Scholar] [CrossRef]

- Landuyt, L.; Van Wesemael, A.; Schumann, G.J.; Hostache, R.; Verhoest, N.E.; Van Coillie, F.M. Flood mapping based on synthetic aperture radar: An assessment of established approaches. IEEE Trans. Geosci. Remote 2018, 57, 1–18. [Google Scholar] [CrossRef]

- Cian, F.; Marconcini, M.; Ceccato, P. Normalized difference flood index for rapid flood mapping: Taking advantage of eo big data. Remote Sens. Environ. 2018, 209, 712–730. [Google Scholar] [CrossRef]

- Horritt, M.S.; Mason, D.C.; Cobby, D.M.; Davenport, I.J.; Bates, P.D. Waterline mapping in flooded vegetation from airborne sar imagery. Remote Sens. Environ. 2003, 85, 271–281. [Google Scholar] [CrossRef]

- Pulvirenti, L.; Pierdicca, N.; Chini, M.; Guerriero, L. Monitoring flood evolution in vegetated areas using cosmo-skymed data: The tuscany 2009 case study. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 1807–1816. [Google Scholar] [CrossRef]

- Ormsby, J.P.; Blanchard, B.J.; Blanchard, A.J. Detection of lowland flooding using active microwave systems. Photogramm. Eng. Remote Sens. 1985, 51, 317–328. [Google Scholar]

- Mason, D.C.; Davenport, I.J.; Neal, J.C.; Schumann, G.J.-P.; Bates, P.D. Near real-time flood detection in urban and rural areas using high-resolution synthetic aperture radar images. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3041–3052. [Google Scholar] [CrossRef]

- Mason, D.C.; Speck, R.; Devereux, B.; Schumann, G.J.-P.; Neal, J.C.; Bates, P.D. Flood detection in urban areas using terrasar-x. IEEE Trans. Geosci. Remote Sens. 2010, 48, 882–894. [Google Scholar] [CrossRef]

- Mason, D.C.; Giustarini, L.; Garcia-Pintado, J.; Cloke, H.L. Detection of flooded urban areas in high resolution synthetic aperture radar images using double scattering. Int. J. Appl. Earth Obs. Geoinf. 2014, 28, 150–159. [Google Scholar] [CrossRef]

- Ferro, A.; Brunner, D.; Bruzzone, L.; Lemoine, G. On the relationship between double bounce and the orientation of buildings in vhr sar images. IEEE Geosci. Remote Sens. Lett. 2011, 8, 612–616. [Google Scholar] [CrossRef]

- Kittler, J.; Illingworth, J. Minimum error thresholding. Pattern Recognit. 1986, 19, 41–47. [Google Scholar] [CrossRef]

- Martinis, S.; Kersten, J.; Twele, A. A fully automated terrasar-x based flood service. Isprs J. Photogramm. Remote Sens. 2015, 104, 203–212. [Google Scholar] [CrossRef]

- Chini, M.; Hostache, R.; Giustarini, L.; Matgen, P. A hierarchical split-based approach for parametric thresholding of sar images: Flood inundation as a test case. IEEE Trans. Geosci. Remote Sens. 2017, 55, 6975–6988. [Google Scholar] [CrossRef]

- Bracaglia, M.; Ferrazzoli, P.; Guerriero, L. A fully polarimetric multiple scattering model for crops. Remote Sens. Environ. 1995, 54, 170–179. [Google Scholar] [CrossRef]

- Fung, A.K.; Shah, M.R.; Tjuatja, S. Numerical simulation of scattering from three-dimensional randomly rough surfaces. Geosci. Remote Sens. IEEE Trans. 1994, 32, 986–994. [Google Scholar] [CrossRef]

- Chini, M.; Pelich, R.; Hostache, R.; Matgen, P.; Lopez-Martinez, C. Towards a 20 m global building map from sentinel-1 sar data. Remote Sens. 2018, 10, 1833. [Google Scholar] [CrossRef]

- Freeman, A.; Durden, S.L. A three-component scattering model for polarimetric sar data. IEEE Trans. Geosci. Remote Sens. 1998, 36, 963–973. [Google Scholar] [CrossRef]

- Yamaguchi, Y.; Moriyama, T.; Ishido, M.; Yamada, H. Four-component scattering model for polarimetric sar image decomposition. IEEE Trans. Geosci. Remote Sens. 2005, 43, 1699–1706. [Google Scholar] [CrossRef]

- Giustarini, L.; Hostache, R.; Kavetski, D.; Chini, M.; Corato, G.; Schlaffer, S.; Matgen, P. Probabilistic flood mapping using synthetic aperture radar data. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6958–6969. [Google Scholar] [CrossRef]

- Fry, J.A.; Xian, G.; Jin, S.; Dewitz, J.A.; Homer, C.G.; Limin, Y.; Barnes, C.A.; Herold, N.D.; Wickham, J.D. Completion of the 2006 national land cover database for the conterminous united states. Photogramm. Eng. Remote Sens. 2011, 77, 858–864. [Google Scholar]

- Gong, P.; Wang, J.; Yu, L.; Zhao, Y.; Zhao, Y.; Liang, L.; Niu, Z.; Huang, X.; Fu, H.; Liu, S. Finer resolution observation and monitoring of global land cover: First mapping results with landsat tm and etm+ data. Int. J. Remote Sens. 2013, 34, 2607–2654. [Google Scholar] [CrossRef]

- Yamazaki, D.; O’Loughlin, F.; Trigg, M.A.; Miller, Z.F.; Pavelsky, T.M.; Bates, P.D. Development of the global width database for large rivers. Water Resour. Res. 2014, 50, 3467–3480. [Google Scholar] [CrossRef]

- Allen, G.H.; Pavelsky, T.M. Global extent of rivers and streams. Science 2018, 361, 585–588. [Google Scholar] [CrossRef]

- Simley, J.D.; Carswell, W.J., Jr. The National Map—Hydrography; U.S. Geological Survey: Reston, VA, USA, 2009.

- Yamazaki, D.; Ikeshima, D.; Tawatari, R.; Yamaguchi, T.; O’Loughlin, F.; Neal, J.C.; Sampson, C.C.; Kanae, S.; Bates, P.D. A high-accuracy map of global terrain elevations. Geophys. Res. Lett. 2017, 44, 5844–5853. [Google Scholar] [CrossRef] [Green Version]

- Twele, A.; Cao, W.; Plank, S.; Martinis, S. Sentinel-1-based flood mapping: A fully automated processing chain. Int. J. Remote Sens. 2016, 37, 2990–3004. [Google Scholar] [CrossRef]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shen, X.; Wang, D.; Mao, K.; Anagnostou, E.; Hong, Y. Inundation Extent Mapping by Synthetic Aperture Radar: A Review. Remote Sens. 2019, 11, 879. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11070879
