Evaluating Thermal Attribute Mapping Strategies for Oblique Airborne Photogrammetric System AOS-Tx8

Abstract

1. Introduction

1.1. Related Work

1.1.1. Laser Scanning Point Cloud

1.1.2. Photogrammetric Point Cloud

1.1.3. Polyhedral Model

1.2. Contributions and Paper Structure

- Potential thermal leakage detection: The oblique airborne photogrammetric system used in this study offers a way to detect potential thermal leakages automatically over large areas. RGB cameras viewing from different perspectives provide accurate and detailed 3D reference models, while thermal cameras provide evidence of temperature anomalies that may indicate thermal leakages.

- Building facade information retrieval: Because multiple RGB and thermal cameras observe the scene from different viewpoints, both rooftop and facade information can be acquired during the measurements. The oblique airborne system used in this study therefore provides new insight into building condition (e.g., solar energy utilization).

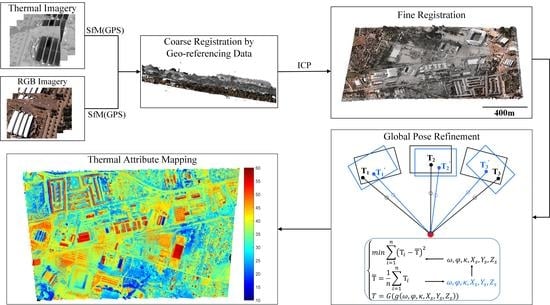

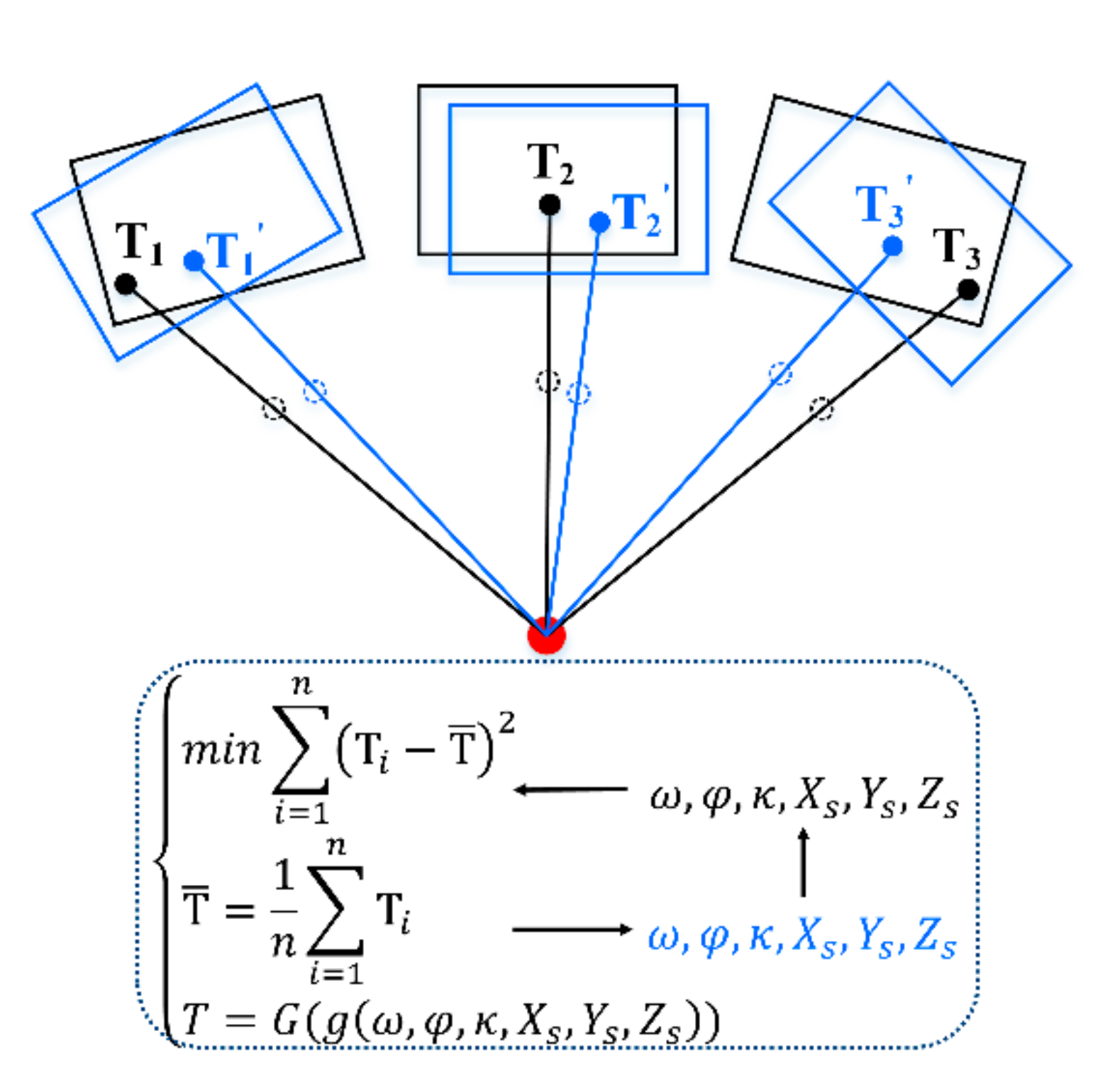

- Registration and thermal attribute mapping: A novel workflow is proposed to process the data collected by an integrated RGB and thermal airborne camera system. First, the thermal imagery is registered to the RGB photogrammetric point clouds in two steps: a coarse point cloud registration supported by global positioning system (GPS) data, followed by a fine point cloud registration based on the iterative closest point (ICP) algorithm. Then, to achieve high photogrammetric consistency of the thermal attribute mapping results, three strategies (texture selection based on thermal radiant characteristics [29], mean temperature computation over overlapping images [18], and global image pose refinement [23]) are compared and tested on three different areas (built-up area, water area, bare soil). The texture mapping results are evaluated by the relative registration accuracy between the sensors rather than by absolute geometric accuracy.
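The two-stage registration described in the last contribution can be illustrated with a minimal point-to-point ICP in Python with NumPy. This is a sketch under stated assumptions, not the authors' implementation: the coarse step simply moves the centroid of the thermal cloud onto that of the RGB cloud as a stand-in for the GPS-supported coarse registration, correspondences come from brute-force nearest-neighbour search (only practical for small clouds), and the helper names `best_fit_transform`, `nearest_neighbours` and `icp` are hypothetical. Each iteration pairs every moving point with its nearest reference point and solves for the least-squares rigid transform with the SVD-based (Kabsch) method.

```python
import numpy as np


def best_fit_transform(src, dst):
    """Least-squares rigid transform (R, t) with dst ≈ R @ src + t, via SVD (Kabsch).

    src and dst are N x 3 arrays whose rows are already paired.
    """
    c_src, c_dst = src.mean(axis=0), dst.mean(axis=0)
    H = (src - c_src).T @ (dst - c_dst)      # 3 x 3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:                 # avoid returning a reflection
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    t = c_dst - R @ c_src
    return R, t


def nearest_neighbours(src, dst):
    """Index of and distance to the nearest dst point for every src point (brute force)."""
    d2 = ((src[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
    idx = d2.argmin(axis=1)
    return idx, np.sqrt(d2[np.arange(len(src)), idx])


def icp(moving, reference, max_iter=50, tol=1e-10):
    """Point-to-point ICP.

    Returns R, t mapping `moving` onto `reference` and the RMS
    nearest-neighbour distance of the last evaluated iteration.
    """
    # Coarse step: centroid alignment, a stand-in for the GPS-supported coarse registration.
    R_total = np.eye(3)
    t_total = reference.mean(axis=0) - moving.mean(axis=0)
    prev_rms = np.inf
    for _ in range(max_iter):
        current = moving @ R_total.T + t_total
        idx, dist = nearest_neighbours(current, reference)
        rms = np.sqrt(np.mean(dist ** 2))    # RMS of the current correspondences
        if abs(prev_rms - rms) < tol:        # no further improvement: stop
            break
        prev_rms = rms
        R, t = best_fit_transform(current, reference[idx])
        # Compose the increment with the accumulated transform.
        R_total = R @ R_total
        t_total = R @ t_total + t
    return R_total, t_total, rms
```

For real photogrammetric clouds a spatial index (e.g. a k-d tree) and outlier rejection would be needed; the reference list cites the Point Cloud Library and Open3D, both of which provide ICP implementations.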

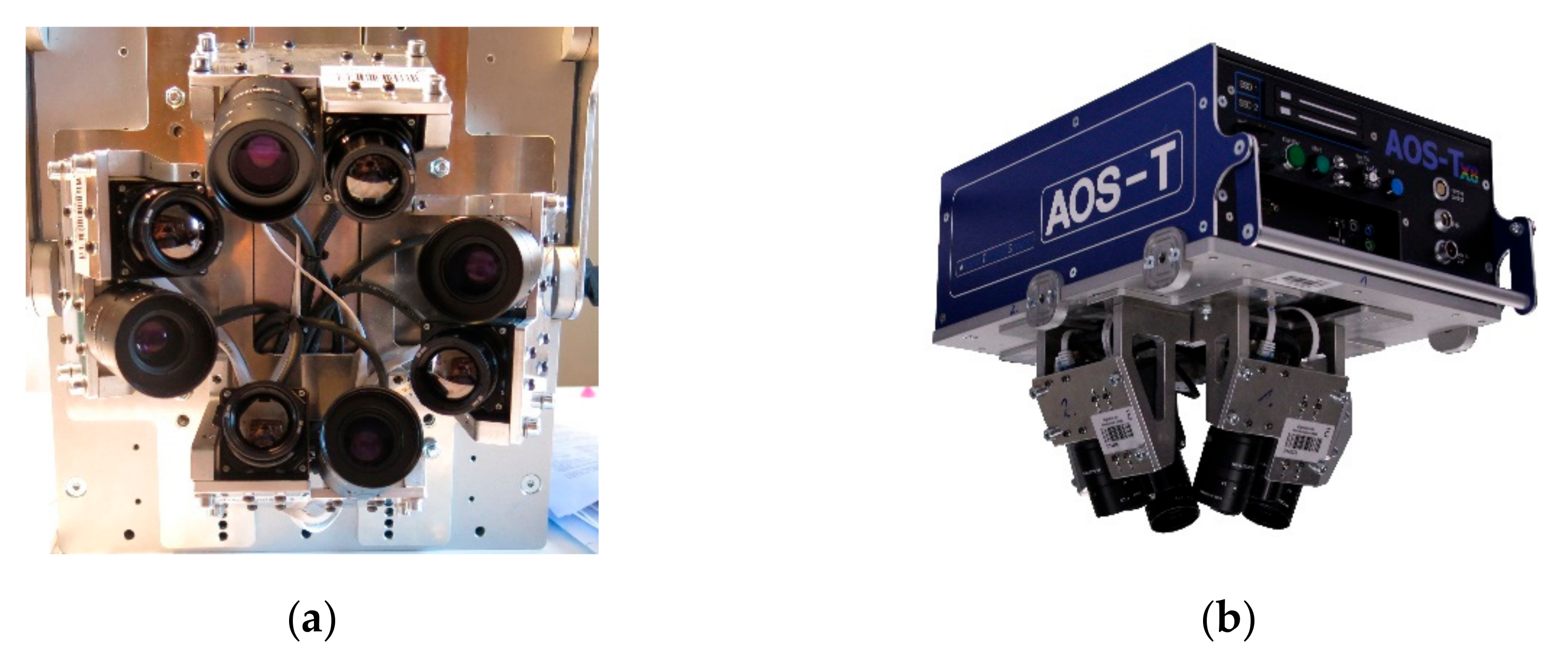
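The second of the compared strategies, averaging the temperatures of all overlapping thermal images that observe a point [18], can be sketched as follows. This is a generic illustration, not the procedure of [18] or of this study: it assumes an ideal pinhole camera per image (intrinsic matrix `K`, world-to-camera rotation `R`, projection centre `C`), ignores lens distortion and occlusion, samples the nearest pixel, and uses the hypothetical helper names `project` and `mean_temperature`.

```python
import numpy as np


def project(point, K, R, C):
    """Pinhole projection of a 3D world point to pixel (u, v); None if behind the camera."""
    x_cam = R @ (point - C)          # world -> camera coordinates
    if x_cam[2] <= 0:
        return None
    uvw = K @ x_cam
    return uvw[0] / uvw[2], uvw[1] / uvw[2]


def mean_temperature(point, cameras):
    """Average the nearest-pixel temperatures of every thermal image the point projects into.

    cameras: list of (K, R, C, image) tuples; image is a 2D array of temperatures
    indexed as image[row, col] = image[v, u]. Occlusion is not checked.
    Returns NaN if no image observes the point.
    """
    samples = []
    for K, R, C, image in cameras:
        uv = project(point, K, R, C)
        if uv is None:
            continue
        col, row = int(np.rint(uv[0])), int(np.rint(uv[1]))
        h, w = image.shape
        if 0 <= row < h and 0 <= col < w:
            samples.append(image[row, col])
    return float(np.mean(samples)) if samples else float("nan")
```

Plain averaging reduces random noise, but it also blends in the value from any image that is poorly registered or observes the surface at an unfavourable angle.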

2. Description of AOS-Tx8

3. Methodology

3.1. Camera Calibration

3.2. Registration and Texture Mapping

3.2.1. Point Cloud Generation

3.2.2. Registration

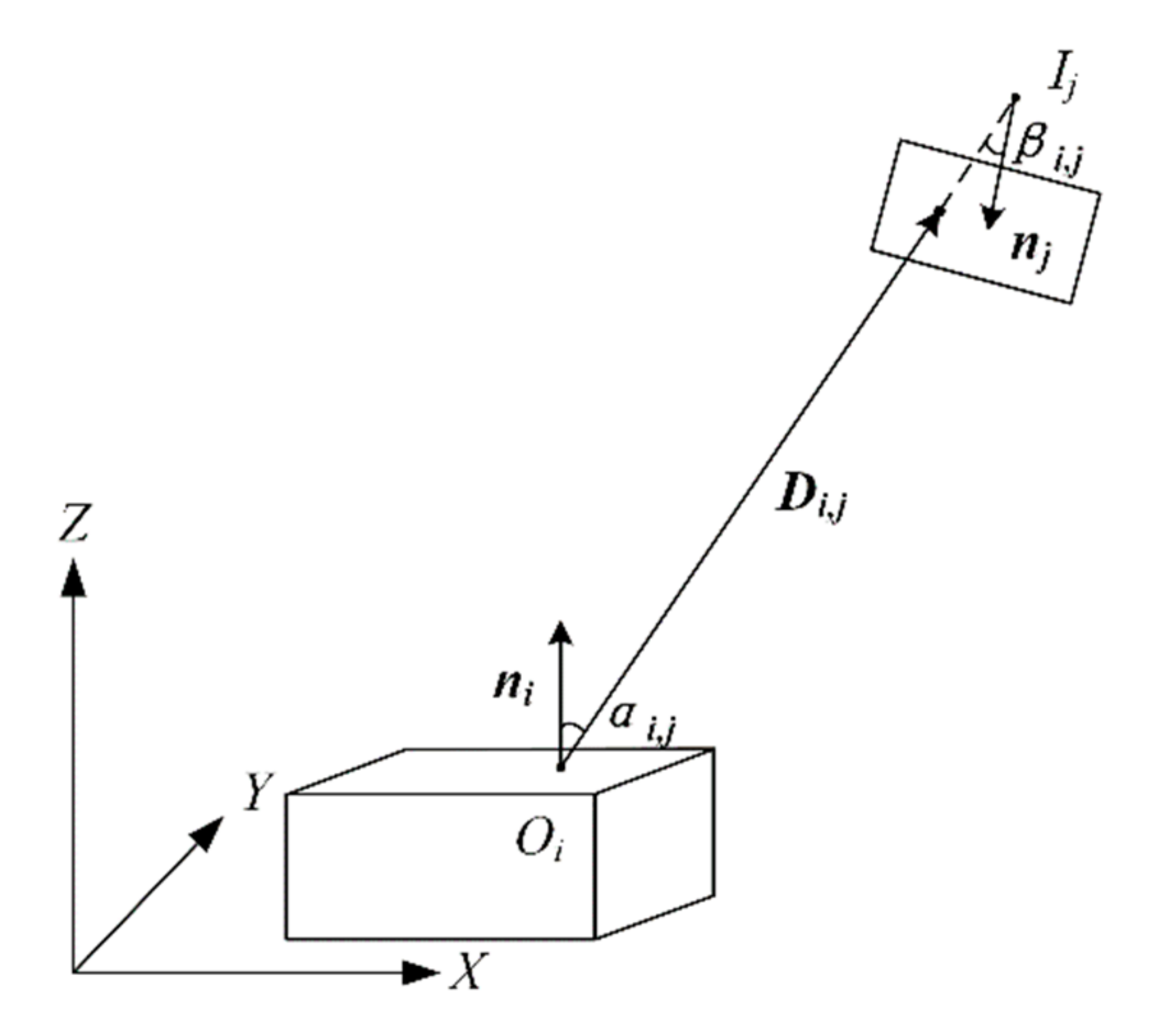

3.2.3. Thermal Texture Mapping

3.3. Evaluation

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Westfeld, P.; Mader, D.; Maas, H.-G. Generation of TIR-attributed 3d point clouds from UAV-based thermal imagery. Photogramm. Fernerkund. Geoinf. 2015, 5, 381–393.

2. Brooke, C. Thermal Imaging for the Archaeological Investigation of Historic Buildings. Remote Sens. 2018, 10, 1401.

3. Ambrosia, V.G.; Wegener, S.S.; Sullivan, D.V.; Buechel, S.W.; Dunagan, S.E.; Brass, J.A.; Stoneburner, J.; Schoenung, S.M. Demonstrating UAV-acquired real-time thermal data over fires. Photogramm. Eng. Remote Sens. 2003, 69, 391–402.

4. Friman, O.; Follo, P.; Ahlberg, J.; Sjökvist, S. Methods for large-scale monitoring of district heating systems using airborne thermography. IEEE Trans. Geosci. Remote Sens. 2014, 52, 5175–5182.

5. Lin, D.; Grundmann, J.; Eltner, A. Evaluating Image Tracking Approaches for Surface Velocimetry with Thermal Tracers. Water Resour. Res. 2019, 55, 3122–3136.

6. Nguyen, D.T.; Kim, K.W.; Hong, H.G.; Koo, J.H.; Kim, M.C.; Park, K.R. Gender recognition from human-body images using visible-light and thermal camera videos based on a convolutional neural network for image feature extraction. Sensors 2017, 17, 637.

7. Liu, Z.; Blasch, E.; Xue, Z.; Zhao, J.; Laganiere, R.; Wu, W. Objective assessment of multiresolution image fusion algorithms for context enhancement in night vision: A comparative study. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 94–109.

8. Khanal, S.; Fulton, J.; Shearer, S. An overview of current and potential applications of thermal remote sensing in precision agriculture. Comput. Electron. Agric. 2017, 139, 22–32.

9. Smith, H.K.; Clarkson, G.J.; Taylor, G.; Thompson, A.J.; Clarkson, J.; Rajpoot, N.M. Automatic detection of regions in spinach canopies responding to soil moisture deficit using combined visible and thermal imagery. PLoS ONE 2014, 9, e97612.

10. Hare, D.K.; Briggs, M.A.; Rosenberry, D.O.; Boutt, D.F.; Lane, J.W. A comparison of thermal infrared to fiber-optic distributed temperature sensing for evaluation of groundwater discharge to surface water. J. Hydrol. 2015, 530, 153–166.

11. Cho, Y.K.; Ham, Y.; Golparvar-Fard, M. 3D as-is building energy modeling and diagnosis: A review of the state-of-the-art. Adv. Eng. Inform. 2015, 29, 184–195.

12. Lin, D.; Dong, Z.; Zhang, X.; Maas, H.-G. Unsupervised window extraction from photogrammetric point clouds with thermal attributes. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 4, 45–51.

13. Bannehr, L.; Pohl, H.; Ulrich, C.; Hermann, K. AOS-Tx8, ein neues Thermal- und RGB Oblique Kamera System [AOS-Tx8, a new thermal and RGB oblique camera system]. In Proceedings of the Photogrammetrie, Laserscanning, Optische 3D-Messtechnik, Beiträge der Oldenburger 3D-Tage, Oldenburg, Germany, 31 January–1 February 2018; pp. 1–9.

14. Alba, M.I.; Barazzetti, L.; Scaioni, M.; Rosina, E.; Previtali, M. Mapping infrared data on terrestrial laser scanning 3D models of buildings. Remote Sens. 2011, 3, 1847–1870.

15. Borrmann, D.; Nüchter, A.; Ðakulović, M.; Maurović, I.; Petrović, I.; Osmanković, D.; Velagić, J. A mobile robot based system for fully automated thermal 3D mapping. Adv. Eng. Inform. 2014, 28, 425–440.

16. González-Aguilera, D.; Rodríguez-Gonzálvez, P.; Armesto, J.; Lagüela, S. Novel approach to 3D thermography and energy efficiency evaluation. Energy Build. 2012, 54, 436–443.

17. Ham, Y.; Golparvar-Fard, M. An automated vision-based method for rapid 3D energy performance modeling of existing buildings using thermal and digital imagery. Adv. Eng. Inform. 2013, 27, 395–409.

18. Javadnejad, F. Small Unmanned Aircraft Systems (UAS) for Engineering Inspections and Geospatial Mapping. Ph.D. Thesis, Oregon State University, Corvallis, OR, USA, November 2017.

19. Vidas, S.; Moghadam, P. HeatWave: A handheld 3D thermography system for energy auditing. Energy Build. 2013, 66, 445–460.

20. Vidas, S.; Moghadam, P.; Sridharan, S. Real-time mobile 3D temperature mapping. IEEE Sens. J. 2015, 15, 1145–1152.

21. Yang, M.D.; Su, T.C.; Lin, H.Y. Fusion of Infrared Thermal Image and Visible Image for 3D Thermal Model Reconstruction Using Smartphone Sensors. Sensors 2018, 18, 2003.

22. Maes, W.H.; Huete, A.R.; Steppe, K. Optimizing the processing of UAV-based thermal imagery. Remote Sens. 2017, 9, 476.

23. Lin, D.; Jarzabek-Rychard, M.; Tong, X.; Maas, H.-G. Fusion of thermal imagery with point clouds for building façade thermal attribute mapping. ISPRS J. Photogramm. Remote Sens. 2019, 151, 162–175.

24. Hoegner, L.; Stilla, U. Thermal leakage detection on building facades using infrared textures generated by mobile mapping. In Proceedings of the IEEE Joint Urban Remote Sensing Event, Shanghai, China, 20–22 May 2009; pp. 1–6.

25. Hoegner, L.; Stilla, U. Building facade object detection from terrestrial thermal infrared image sequences combining different views. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 2, 55.

26. Hoegner, L.; Stilla, U. Mobile thermal mapping for matching of infrared images with 3D building models and 3D point clouds. Quant. InfraRed Thermogr. J. 2018, 15, 252–270.

27. Iwaszczuk, D.; Stilla, U. Quality assessment of building textures extracted from oblique airborne thermal imagery. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 3, 3–8.

28. Iwaszczuk, D.; Stilla, U. Camera pose refinement by matching uncertain 3D building models with thermal infrared image sequences for high quality texture extraction. ISPRS J. Photogramm. Remote Sens. 2017, 132, 33–47.

29. Lin, D.; Jarzabek-Rychard, M.; Schneider, D.; Maas, H.-G. Thermal texture selection and correction for building facade inspection based on thermal radiant characteristics. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 585–591.

30. Lin, D.; Maas, H.-G.; Westfeld, P.; Budzier, H.; Gerlach, G. An advanced radiometric calibration approach for uncooled thermal cameras. Photogramm. Rec. 2018, 33, 30–48.

31. Tempelhahn, A.; Budzier, H.; Krause, V.; Gerlach, G. Shutter-less calibration of uncooled infrared cameras. J. Sens. Sens. Syst. 2016, 5, 9–16.

32. El-Hakim, S. A real-time system for object measurement with CCD cameras. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 1986, 26, 363–373.

33. Ribeiro-Gomes, K.; Hernández-López, D.; Ortega, J.F.; Ballesteros, R.; Poblete, T.; Moreno, M.A. Uncooled thermal camera calibration and optimization of the photogrammetry process for UAV applications in agriculture. Sensors 2017, 17, 2173.

34. Conte, P.; Girelli, V.A.; Mandanici, E. Structure from Motion for aerial thermal imagery at city scale: Pre-processing, camera calibration, accuracy assessment. ISPRS J. Photogramm. Remote Sens. 2018, 146, 320–333.

35. Rusu, R.B.; Cousins, S. 3D is here: Point cloud library (pcl). In Proceedings of the IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 1–4.

36. Lague, D.; Brodu, N.; Leroux, J. Accurate 3D comparison of complex topography with terrestrial laser scanner: Application to the Rangitikei canyon (NZ). ISPRS J. Photogramm. Remote Sens. 2013, 82, 10–26.

37. Vollmer, M.; Möllmann, K.P. Infrared Thermal Imaging: Fundamentals, Research and Applications; John Wiley and Sons: Brandenburg, Germany, 2017; p. 612.

38. Zhou, Q.Y.; Park, J.; Koltun, V. Open3D: A modern library for 3D data processing. arXiv 2018, arXiv:1801.09847.

| Specifications | Baumer VCXG-53c | FLIR A65sc |
|---|---|---|
| Megapixels (resolution, px) | 5.308 (2592 × 2048) | 0.328 (640 × 512) |
| Frame rate (Hz) | 23 | 30 |
| Detector pitch (μm) | 4.8 | 17 |
| Field of view, FOV (°) | 28.8 | 25 |
| Focal length (mm) | 25 | 25 |
| Measuring range | RGB (24 bit) | From −25 °C to 135 °C |
| Dimensions (mm) | 40 × 29 × 29 | 106 × 40 × 43 |
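The specification table is enough to compare the ground resolution of the two cameras. For a nadir-looking pinhole camera the ground sampling distance is GSD = pixel pitch × flying height / focal length. The flying height of 400 m below is a hypothetical value, not one taken from this paper, and for the oblique views of AOS-Tx8 the GSD is larger and varies across the image, so the numbers are only a nadir approximation.

```python
def ground_sampling_distance(pixel_pitch_um, focal_mm, height_m):
    """Nadir GSD in metres per pixel for a pinhole camera: pitch * height / focal length."""
    return (pixel_pitch_um * 1e-6) * height_m / (focal_mm * 1e-3)


H = 400.0  # hypothetical flying height in metres (assumption, not from the paper)
gsd_rgb = ground_sampling_distance(4.8, 25.0, H)   # Baumer VCXG-53c
gsd_tir = ground_sampling_distance(17.0, 25.0, H)  # FLIR A65sc
print(f"RGB: {gsd_rgb:.3f} m, thermal: {gsd_tir:.3f} m, ratio {gsd_tir / gsd_rgb:.2f}")
# prints "RGB: 0.077 m, thermal: 0.272 m, ratio 3.54"
```

Because both cameras use a 25 mm lens, the ratio depends only on the detector pitch (17 / 4.8 ≈ 3.5) and is independent of the assumed height.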

| c (px) | x0 (px) | y0 (px) | K1 | K2 | K3 | P1 | P2 | B1 | B2 |
|---|---|---|---|---|---|---|---|---|---|
| 1500.64 | 335.10 | 254.76 | 4.62 × 10⁻⁴ | 4.14 × 10⁻⁶ | 1.52 × 10⁻⁸ | 1.26 × 10⁻⁴ | 1.11 × 10⁻⁴ | 3.85 × 10⁻³ | 1.65 |
| 0.44 | 0.13 | 0.31 | 1.01 × 10⁻⁶ | 2.16 × 10⁻⁸ | 3.15 × 10⁻¹⁰ | 2.37 × 10⁻⁶ | 6.93 × 10⁻⁶ | 5.38 × 10⁻⁵ | 2.28 × 10⁻⁵ |
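The calibration table can be cross-checked against the camera specifications. Its first row appears to describe the FLIR A65sc; this is an assumption, based on the principal point (335.10, 254.76) px lying close to the centre of a 640 × 512 image. Converting the principal distance c from pixels to millimetres with the 17 μm detector pitch and evaluating the pinhole field of view (distortion parameters ignored) gives values close to the nominal 25 mm focal length and 25° FOV.

```python
import math

PITCH_MM = 0.017            # FLIR A65sc detector pitch (17 μm, specification table)
WIDTH_PX, HEIGHT_PX = 640, 512
C_PX = 1500.64              # calibrated principal distance c (px), assumed to be the FLIR A65sc

c_mm = C_PX * PITCH_MM      # principal distance in millimetres


def fov_deg(n_px, pitch_mm, focal_mm):
    """Full field of view in degrees across n_px pixels for an ideal pinhole camera."""
    return math.degrees(2 * math.atan(n_px * pitch_mm / 2 / focal_mm))


hfov = fov_deg(WIDTH_PX, PITCH_MM, c_mm)
vfov = fov_deg(HEIGHT_PX, PITCH_MM, c_mm)
print(f"c = {c_mm:.2f} mm, HFOV = {hfov:.1f} deg, VFOV = {vfov:.1f} deg")
# prints "c = 25.51 mm, HFOV = 24.1 deg, VFOV = 19.4 deg"
```

The calibrated principal distance is about 0.5 mm longer than the nominal focal length, so the computed horizontal FOV comes out slightly below the listed 25°.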

| Test Area | Coarse Registration (Mean/Std.) (m) | Fine Registration (Mean/Std.) (m) |

|---|---|---|

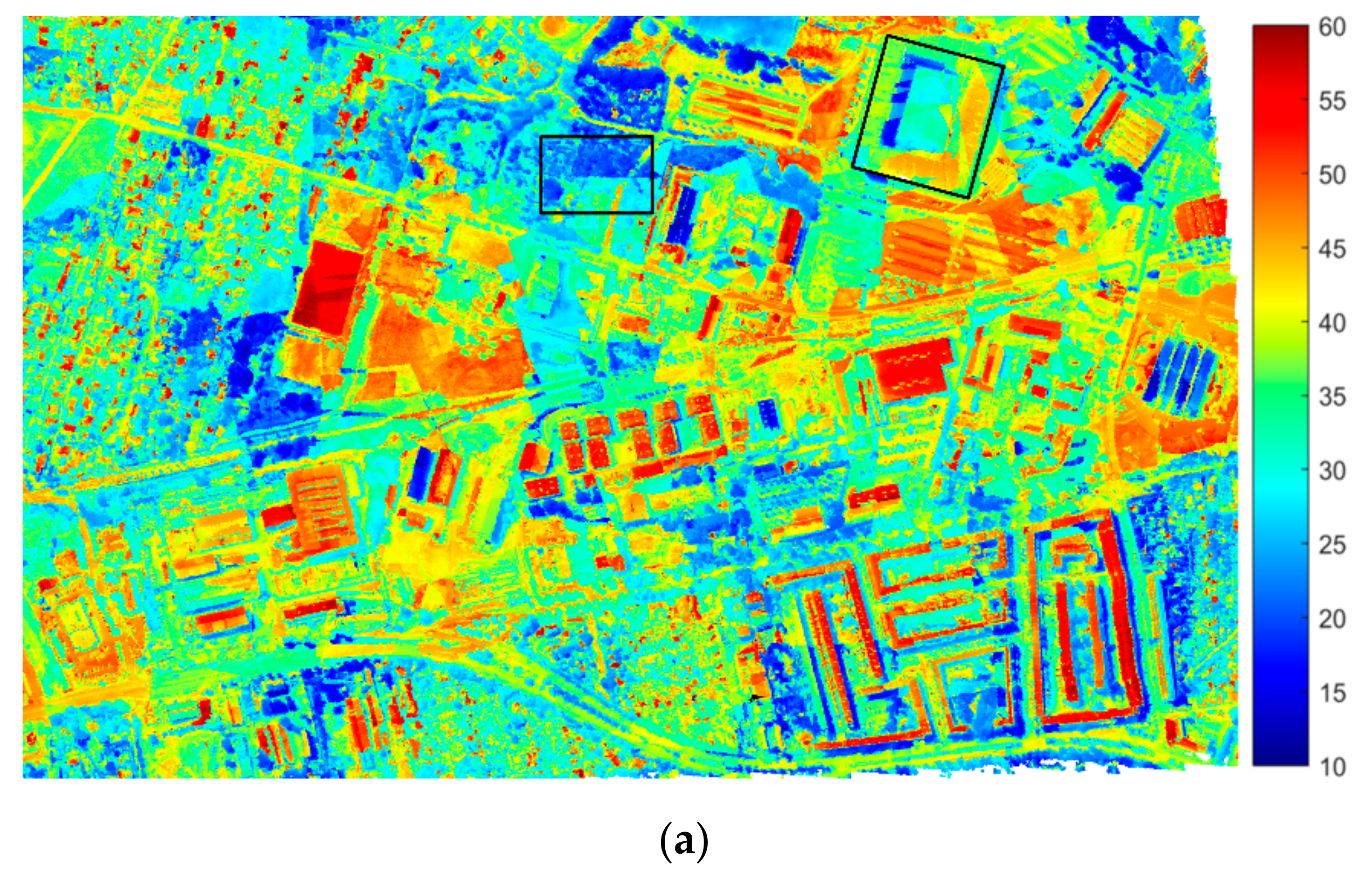

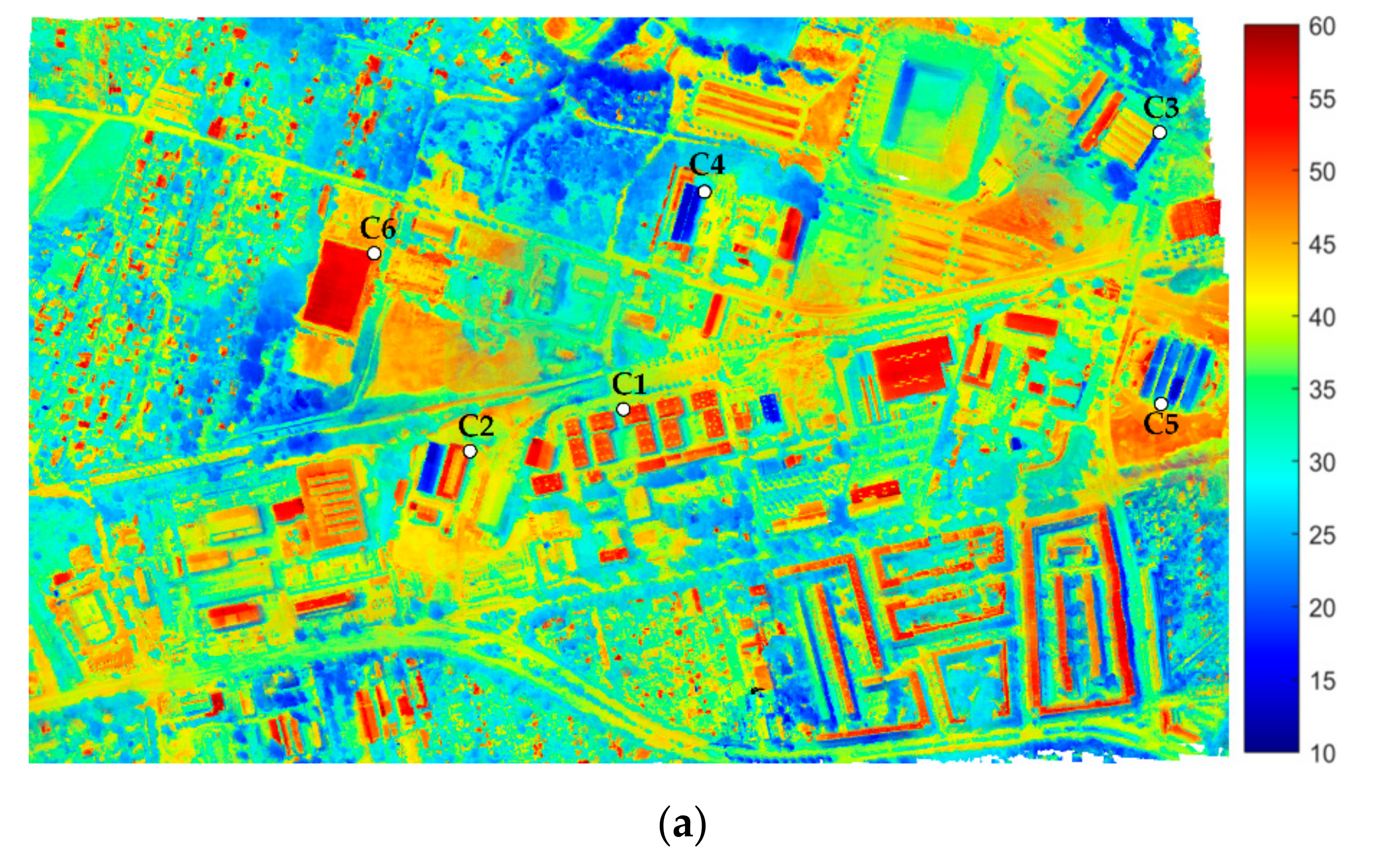

| Building area | 15.7/6.3 | 4.1/2.9 |

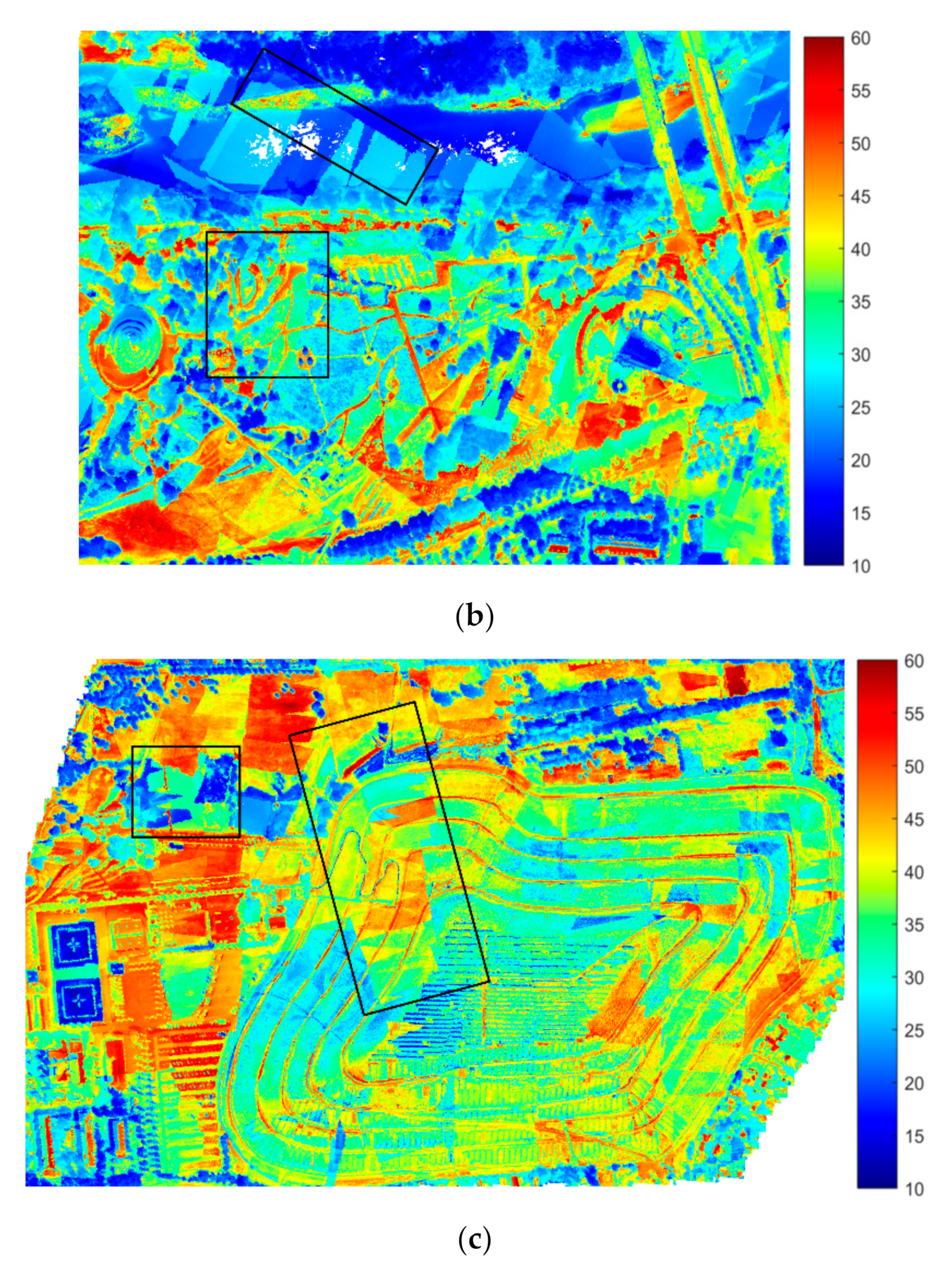

| River ecosystem | 11.1/8.7 | 2.1/1.7 |

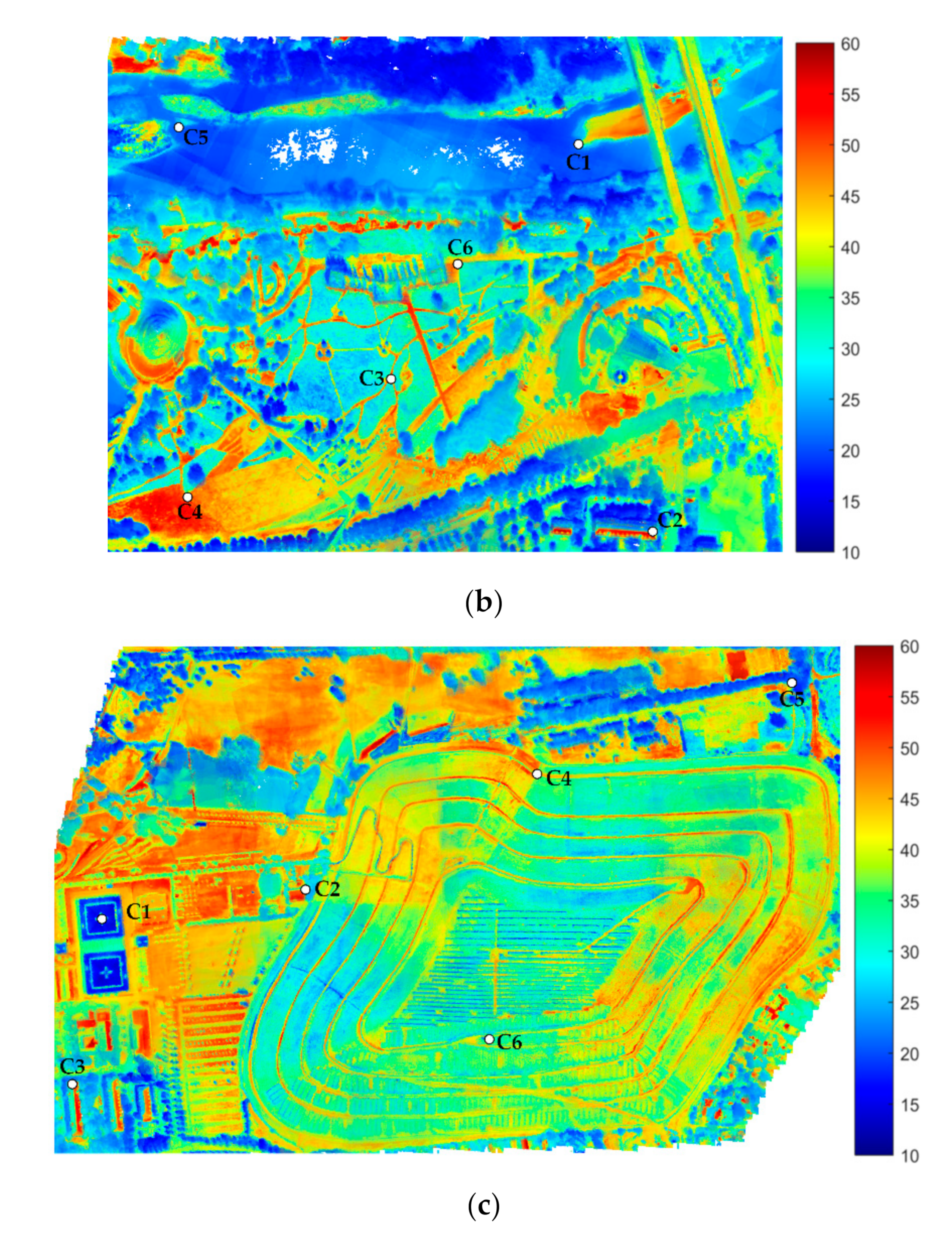

| Soil area | 10.5/6.6 | 4.2/3.0 |
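This excerpt does not state how the mean and standard deviation of the coarse and fine registration results were computed. One common choice is the distribution of nearest-neighbour (cloud-to-cloud) distances from the registered thermal cloud to the RGB reference cloud, sketched below with the hypothetical helper `cloud_to_cloud_stats`. The reference list also includes the M3C2 method of Lague et al., so the authors' actual metric may differ.

```python
import numpy as np


def cloud_to_cloud_stats(moving, reference):
    """Mean and standard deviation of nearest-neighbour distances from moving to reference.

    Brute-force search with O(N * M) memory: only suitable for small illustrative clouds.
    """
    d2 = ((moving[:, None, :] - reference[None, :, :]) ** 2).sum(axis=2)
    dist = np.sqrt(d2.min(axis=1))   # distance of each moving point to its nearest reference point
    return float(dist.mean()), float(dist.std())


# Example: a 10 x 10 planar grid (1 m spacing) offset by 0.5 m along z.
xs, ys = np.meshgrid(np.arange(10.0), np.arange(10.0))
reference = np.column_stack([xs.ravel(), ys.ravel(), np.zeros(xs.size)])
moving = reference + np.array([0.0, 0.0, 0.5])
mean, std = cloud_to_cloud_stats(moving, reference)
```

A pure offset shows up entirely in the mean with zero spread; residual rotation or noise after registration would widen the standard deviation.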

(a)

| Point Index | C1 | C2 | C3 | C4 | C5 | C6 |
|---|---|---|---|---|---|---|
| Strategy 1 | 3.6 | 4.5 | 4.1 | 4.6 | 3.9 | 3.5 |
| Strategy 2 | 3.5 | 4.3 | 4.2 | 3.6 | 3.6 | 3.4 |
| Strategy 3 | 2.6 | 2.8 | 2.9 | 2.5 | 2.8 | 2.0 |

(b)

| Point Index | C1 | C2 | C3 | C4 | C5 | C6 |
|---|---|---|---|---|---|---|
| Strategy 1 | 2.8 | 3.0 | 1.9 | 2.2 | 2.1 | 2.4 |
| Strategy 2 | 2.6 | 2.5 | 2.1 | 1.8 | 2.0 | 2.3 |
| Strategy 3 | 2.3 | 2.5 | 1.8 | 1.7 | 1.9 | 2.1 |

(c)

| Point Index | C1 | C2 | C3 | C4 | C5 | C6 |
|---|---|---|---|---|---|---|
| Strategy 1 | 3.8 | 4.2 | 3.4 | 4.7 | 4.6 | 3.7 |
| Strategy 2 | 3.3 | 4.2 | 3.8 | 4.4 | 4.4 | 3.1 |
| Strategy 3 | 2.4 | 3.5 | 3.4 | 4.3 | 3.9 | 3.0 |

(d)

| RMSE | Building Area | River Ecosystem | Soil Area |
|---|---|---|---|
| Strategy 1 | 4.1 | 2.4 | 4.1 |
| Strategy 2 | 3.8 | 2.2 | 3.9 |
| Strategy 3 | 2.6 | 2.1 | 3.4 |
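Sub-table (d) appears to summarise (a) to (c) as root mean square errors over the six checkpoints C1 to C6. Recomputing the RMSE from the rounded per-point values of (a) gives 4.1, 3.8 and 2.6, the Building Area column of (d); (b) likewise reproduces the River Ecosystem column (2.4, 2.2, 2.1). For (c), Strategies 1 and 2 reproduce 4.1 and 3.9, while Strategy 3 gives about 3.47 against the tabulated 3.4, a small gap that may stem from rounding of the per-point values. The helper name `rmse` is illustrative.

```python
import math


def rmse(errors):
    """Root mean square of per-checkpoint errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


# Per-point values of sub-table (a), checkpoints C1 to C6
table_a = {
    "Strategy 1": [3.6, 4.5, 4.1, 4.6, 3.9, 3.5],
    "Strategy 2": [3.5, 4.3, 4.2, 3.6, 3.6, 3.4],
    "Strategy 3": [2.6, 2.8, 2.9, 2.5, 2.8, 2.0],
}
for name, errs in table_a.items():
    print(name, round(rmse(errs), 1))
# Strategy 1 4.1
# Strategy 2 3.8
# Strategy 3 2.6
```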

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lin, D.; Bannehr, L.; Ulrich, C.; Maas, H.-G. Evaluating Thermal Attribute Mapping Strategies for Oblique Airborne Photogrammetric System AOS-Tx8. Remote Sens. 2020, 12, 112. https://doi.org/10.3390/rs12010112
