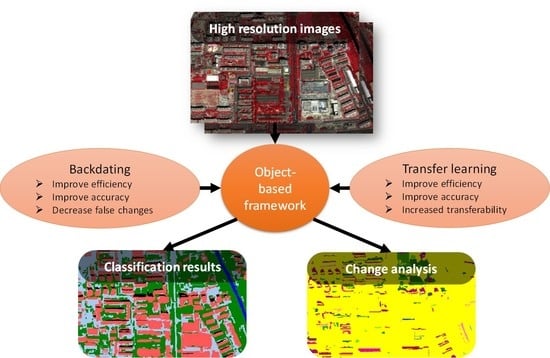

Integrating Backdating and Transfer Learning in an Object-Based Framework for High Resolution Image Classification and Change Analysis

Abstract

1. Introduction

2. Study Area and Data Preprocessing

2.1. Study Area

2.2. Data Preprocessing

3. Methods

3.1. Method 1: Classification and Change Analysis using Backdating

3.1.1. Image Segmentation

3.1.2. Backdating

- (1) Change detection

- (2) Rule-based classification

3.2. Method 2: Classification and Change Analysis using Transfer Learning

3.2.1. Image Segmentation

3.2.2. Transfer Learning

- (1) Change detection

- (2) Supervised classification

3.3. Method 3: Classification and Change Analysis Integrating Backdating and Transfer Learning

3.3.1. Image Segmentation

3.3.2. Backdating & Transfer Learning

- (1) Change detection

- (2) Supervised classification

3.4. Accuracy Assessment

4. Results

4.1. Comparison of the Classification Accuracy

4.2. Comparison of the Change Detection Accuracy

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

| | GeoEye-1 | Pleiades |
|---|---|---|
| Acquisition Date/Time | 2009-06-28 03:04 GMT | 2015-09-19 03:06 GMT |
| Solar azimuth | 132.0320 degrees | 155.5987 degrees |
| Solar elevation | 67.3074 degrees | 49.3733 degrees |

| Object Features | Description |

|---|---|

| Mean value of blue | Mean value of the blue band of an image object |

| Mean value of green | Mean value of the green band of an image object |

| Mean value of red | Mean value of the red band of an image object |

| Mean value of near-infrared | Mean value of the near-infrared band of an image object |

| Mean value of panchromatic | Mean value of the panchromatic band of an image object |

| Brightness | Mean value of the 5 original bands |

| NDVI | (near infrared − red)/(near infrared + red) |

| NDWI | (green − near infrared)/(green + near infrared) |
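The spectral features in the table above are simple functions of the per-object band means. The following is a minimal Python sketch of the three derived features (Brightness, NDVI, NDWI), assuming the five band means have already been extracted for an image object. The `ObjectBandMeans` container, the function names, and the choice to return 0.0 when a denominator is zero are illustrative assumptions, not part of the original workflow.

```python
from dataclasses import dataclass


@dataclass
class ObjectBandMeans:
    """Mean band values of one image object (hypothetical container)."""
    blue: float
    green: float
    red: float
    nir: float
    pan: float


def brightness(obj: ObjectBandMeans) -> float:
    """Mean value of the 5 original bands."""
    return (obj.blue + obj.green + obj.red + obj.nir + obj.pan) / 5.0


def normalized_difference(a: float, b: float) -> float:
    """(a - b) / (a + b); returns 0.0 for a zero denominator (assumption)."""
    total = a + b
    return 0.0 if total == 0 else (a - b) / total


def ndvi(obj: ObjectBandMeans) -> float:
    """(near infrared - red) / (near infrared + red)."""
    return normalized_difference(obj.nir, obj.red)


def ndwi(obj: ObjectBandMeans) -> float:
    """(green - near infrared) / (green + near infrared)."""
    return normalized_difference(obj.green, obj.nir)


# Made-up band means for a vegetated object, for illustration only.
example = ObjectBandMeans(blue=10.0, green=20.0, red=30.0, nir=70.0, pan=20.0)
print(brightness(example), ndvi(example), ndwi(example))
```

With these made-up values, NDVI is positive and NDWI is negative, the pattern one would expect for vegetation rather than water.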

| | | Method 1 | Method 2 | Method 3 |
|---|---|---|---|---|
| Overall Acc. (%) | | 83.33 | 76 | 85.33 |
| Kappa Coefficient | | 0.79 | 0.70 | 0.82 |
| Producer’s Acc. (%) | Greenspace | 90.48 | 93.65 | 92.06 |
| | Water | 76.79 | 80.36 | 80.36 |
| | Building | 95.08 | 75.41 | 93.44 |
| | Pavement | 63.33 | 33.33 | 70.00 |
| | Shadow | 90.00 | 96.67 | 90.00 |
| User’s Acc. (%) | Greenspace | 77.03 | 95.16 | 76.32 |
| | Water | 100.00 | 93.75 | 97.83 |
| | Building | 75.32 | 59.74 | 83.82 |
| | Pavement | 74.50 | 58.82 | 76.36 |
| | Shadow | 98.18 | 73.42 | 98.18 |

| Reference Data | ||||||

|---|---|---|---|---|---|---|

| Classes | Greenspace | Water | Building | Pavement | Shadow | Sum |

| Greenspace | 57 | 5 | 0 | 10 | 2 | 74 |

| Water | 0 | 43 | 0 | 0 | 0 | 43 |

| Building | 1 | 2 | 58 | 12 | 4 | 77 |

| Pavement | 5 | 5 | 3 | 38 | 0 | 51 |

| Shadow | 0 | 1 | 0 | 0 | 54 | 55 |

| Sum | 63 | 56 | 61 | 60 | 60 | |

| Producer’s Acc. (%) | 90.48 | 76.79 | 95.08 | 63.33 | 90 | |

| User’s Acc. (%) | 77.03 | 100 | 75.32 | 74.5 | 98.18 | |

| Overall Acc. (%) | 83.33 | |||||

| Kappa Coefficient | 0.79 | |||||
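The accuracy figures in the confusion matrix above follow directly from its cell counts. The sketch below, in plain Python, recomputes overall accuracy, the kappa coefficient, and the producer's and user's accuracies. It assumes rows are classified classes and columns are reference classes, as in the table, and that every row and column sum is non-zero. The function name `accuracy_metrics` is a hypothetical choice for illustration.

```python
def accuracy_metrics(matrix):
    """Accuracy metrics for a square confusion matrix.

    Rows are classified classes, columns are reference classes
    (assumed layout). Returns fractions in the range 0..1.
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_sums = [sum(row) for row in matrix]
    col_sums = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    diagonal = [matrix[i][i] for i in range(n)]

    # Observed agreement (overall accuracy) and chance agreement.
    observed = sum(diagonal) / total
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (observed - expected) / (1 - expected)

    # Producer's accuracy: correct / reference total (column sum).
    producers = [d / c for d, c in zip(diagonal, col_sums)]
    # User's accuracy: correct / classified total (row sum).
    users = [d / r for d, r in zip(diagonal, row_sums)]
    return observed, kappa, producers, users


# Cell counts copied from the confusion matrix above
# (Greenspace, Water, Building, Pavement, Shadow).
counts = [
    [57, 5, 0, 10, 2],
    [0, 43, 0, 0, 0],
    [1, 2, 58, 12, 4],
    [5, 5, 3, 38, 0],
    [0, 1, 0, 0, 54],
]

overall, kappa, producers, users = accuracy_metrics(counts)
print(f"Overall accuracy: {overall * 100:.2f}%")  # 83.33%
print(f"Kappa: {kappa:.2f}")                      # 0.79
```

The same function applies to the other confusion matrices in this section, provided the rows and columns list the same classes in the same order.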

| Reference Data | ||||||

|---|---|---|---|---|---|---|

| Classes | Greenspace | Water | Building | Pavement | Shadow | Sum |

| Greenspace | 59 | 1 | 1 | 1 | 0 | 62 |

| Water | 0 | 45 | 0 | 2 | 1 | 48 |

| Building | 0 | 0 | 46 | 31 | 0 | 77 |

| Pavement | 4 | 0 | 9 | 20 | 1 | 34 |

| Shadow | 0 | 10 | 5 | 6 | 58 | 79 |

| Sum | 63 | 56 | 61 | 60 | 60 | |

| Producer’s Acc. (%) | 93.65 | 80.36 | 75.4 | 33.33 | 96.67 | |

| User’s Acc. (%) | 95.16 | 93.75 | 59.74 | 58.82 | 73.42 | |

| Overall Acc. (%) | 76 | |||||

| Kappa Coefficient | 0.7 | |||||

| Reference Data | ||||||

|---|---|---|---|---|---|---|

| Classes | Greenspace | Water | Building | Pavement | Shadow | Sum |

| Greenspace | 58 | 5 | 1 | 10 | 2 | 76 |

| Water | 0 | 45 | 0 | 0 | 1 | 46 |

| Building | 0 | 0 | 57 | 8 | 3 | 68 |

| Pavement | 5 | 5 | 3 | 42 | 0 | 55 |

| Shadow | 0 | 1 | 0 | 0 | 54 | 55 |

| Sum | 63 | 56 | 61 | 60 | 60 | |

| Producer’s Acc. (%) | 92.06 | 80.36 | 93.44 | 70 | 90 | |

| User’s Acc. (%) | 76.32 | 97.83 | 83.82 | 76.36 | 98.18 | |

| Overall Acc. (%) | 85.33 | |||||

| Kappa Coefficient | 0.82 | |||||

| Reference Data | |||

|---|---|---|---|

| Classes | Possible Change | No Change | Sum |

| Possible change | 124 | 11 | 135 |

| No change | 26 | 139 | 165 |

| Sum | 150 | 150 | |

| Producer’s Acc. (%) | 82.67 | 92.67 | |

| User’s Acc. (%) | 91.85 | 84.24 | |

| Overall Acc. (%) | 87.67 | ||

| Kappa Coefficient | 0.75 | ||

| Reference Data | |||

|---|---|---|---|

| Classified Data | Possible Change | No Change | Sum |

| Possible Change | 133 | 24 | 157 |

| No change | 17 | 126 | 143 |

| Sum | 150 | 150 | |

| Producer’s Acc. (%) | 88.67 | 84.00 | |

| User’s Acc. (%) | 84.71 | 88.11 | |

| Overall Acc. (%) | 86.33 | ||

| Kappa Coefficient | 0.73 | ||

| | | Method 1 | Method 2 | Method 3 |
|---|---|---|---|---|
| Overall Acc. (%) | | 84.67 | 79.67 | 88.67 |
| Kappa Coefficient | | 0.83 | 0.77 | 0.87 |
| Producer’s Acc. (%) | No Change | 91.84 | 71.43 | 91.84 |
| | 09GS to 15SD | 66.67 | 81.25 | 93.75 |
| | 09GS to 15BLD | 100 | 96.67 | 96.67 |
| | 09GS to 15PA | 100 | 86.67 | 96.67 |
| | 09BLD to 15SD | 100 | 63.33 | 63.33 |
| | 09PA to 15BLD | 100 | 96.67 | 100 |
| | 09PA to 15GS | 100 | 85.3 | 100 |
| | 09PA to 15SD | 46.94 | 67.35 | 71.43 |
| User’s Acc. (%) | No Change | 100 | 100 | 100 |
| | 09GS to 15SD | 100 | 100 | 100 |
| | 09GS to 15BLD | 100 | 90.63 | 96.67 |
| | 09GS to 15PA | 100 | 92.86 | 100 |
| | 09BLD to 15SD | 46.15 | 57.58 | 57.58 |
| | 09PA to 15BLD | 96.77 | 87.88 | 93.75 |
| | 09PA to 15GS | 100 | 85.3 | 94.44 |
| | 09PA to 15SD | 82.14 | 66 | 71.43 |

| Method 1 | Reference Data | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| Classes | No Change | 09GS to 15SD | 09GS to 15BLD | 09GS to 15PA | 09BLD to 15SD | 09PA to 15BLD | 09PA to 15GS | 09PA to 15SD | Sum |

| No change | 43 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 43 |

| 09GS to 15SD | 0 | 32 | 0 | 0 | 0 | 0 | 0 | 0 | 32 |

| 09GS to 15BLD | 2 | 0 | 30 | 0 | 0 | 0 | 0 | 0 | 32 |

| 09GS to 15PA | 0 | 0 | 0 | 30 | 0 | 0 | 0 | 0 | 30 |

| 09BLD to 15SD | 0 | 9 | 0 | 0 | 30 | 0 | 0 | 26 | 65 |

| 09PA to 15BLD | 1 | 0 | 0 | 0 | 0 | 30 | 0 | 0 | 31 |

| 09PA to 15GS | 1 | 0 | 0 | 0 | 0 | 0 | 34 | 0 | 35 |

| 09PA to 15SD | 0 | 5 | 0 | 0 | 0 | 0 | 0 | 22 | 27 |

| False Change | 3 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 5 |

| Sum | 50 | 48 | 30 | 30 | 30 | 30 | 34 | 48 | |

| Producer’s Acc. (%) | 91.84 | 66.67 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 46.94 | |

| User’s Acc. (%) | 100.00 | 100.00 | 100.00 | 100.00 | 46.15 | 96.77 | 100.00 | 82.14 | |

| Overall Acc. (%) | 84.67 | ||||||||

| Kappa Coefficient | 0.83 | ||||||||

| Method 2 | Reference Data | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| Classes | No Change | 09GS to 15SD | 09GS to 15BLD | 09GS to 15PA | 09BLD to 15SD | 09PA to 15BLD | 09PA to 15GS | 09PA to 15SD | Sum |

| No change | 33 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 33 |

| 09GS to 15SD | 0 | 39 | 0 | 0 | 0 | 0 | 0 | 0 | 39 |

| 09GS to 15BLD | 0 | 1 | 29 | 2 | 0 | 0 | 0 | 0 | 32 |

| 09GS to 15PA | 1 | 1 | 0 | 26 | 0 | 0 | 0 | 0 | 28 |

| 09BLD to 15SD | 0 | 0 | 0 | 0 | 33 | 0 | 0 | 0 | 33 |

| 09PA to 15BLD | 5 | 0 | 1 | 0 | 0 | 29 | 0 | 0 | 35 |

| 09PA to 15GS | 3 | 1 | 0 | 0 | 0 | 0 | 29 | 2 | 35 |

| 09PA to 15SD | 0 | 6 | 0 | 0 | 11 | 0 | 0 | 32 | 49 |

| False Change | 8 | 0 | 0 | 2 | 0 | 1 | 5 | 0 | 16 |

| Sum | 50 | 48 | 30 | 30 | 44 | 30 | 34 | 34 | |

| Producer’s Acc. (%) | 71.43 | 81.25 | 96.67 | 86.67 | 63.33 | 96.67 | 85.30 | 67.35 | |

| User’s Acc. (%) | 100.00 | 100.00 | 90.63 | 92.86 | 57.58 | 87.88 | 85.30 | 66.00 | |

| Overall Acc. (%) | 79.67 | ||||||||

| Kappa Coefficient | 0.77 | ||||||||

| Method 3 | Reference Data | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| Classes | No Change | 09GS to 15SD | 09GS to 15BLD | 09GS to 15PA | 09BLD to 15SD | 09PA to 15BLD | 09PA to 15GS | 09PA to 15SD | Sum |

| No change | 45 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 45 |

| 09GS to 15SD | 0 | 45 | 0 | 0 | 0 | 0 | 0 | 0 | 45 |

| 09GS to 15BLD | 0 | 0 | 29 | 1 | 0 | 0 | 0 | 0 | 30 |

| 09GS to 15PA | 0 | 0 | 0 | 29 | 0 | 0 | 0 | 0 | 29 |

| 09BLD to 15SD | 0 | 0 | 0 | 0 | 33 | 0 | 0 | 0 | 33 |

| 09PA to 15BLD | 1 | 0 | 1 | 0 | 0 | 30 | 0 | 0 | 32 |

| 09PA to 15GS | 3 | 0 | 0 | 0 | 0 | 0 | 34 | 0 | 37 |

| 09PA to 15SD | 0 | 3 | 0 | 0 | 11 | 0 | 0 | 34 | 48 |

| False Change | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |

| Sum | 50 | 48 | 30 | 30 | 44 | 30 | 34 | 34 | |

| Producer’s Acc. (%) | 91.84 | 93.75 | 96.67 | 96.67 | 63.33 | 100.00 | 100.00 | 71.43 | |

| User’s Acc. (%) | 100.00 | 100.00 | 96.67 | 100.00 | 57.58 | 93.75 | 94.44 | 71.43 | |

| Overall Acc. (%) | 88.67 | ||||||||

| Kappa Coefficient | 0.87 | ||||||||

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Qian, Y.; Zhou, W.; Yu, W.; Han, L.; Li, W.; Zhao, W. Integrating Backdating and Transfer Learning in an Object-Based Framework for High Resolution Image Classification and Change Analysis. Remote Sens. 2020, 12, 4094. https://0-doi-org.brum.beds.ac.uk/10.3390/rs12244094
