Sentinel-2 Cloud Removal Considering Ground Changes by Fusing Multitemporal SAR and Optical Images

Abstract

1. Introduction

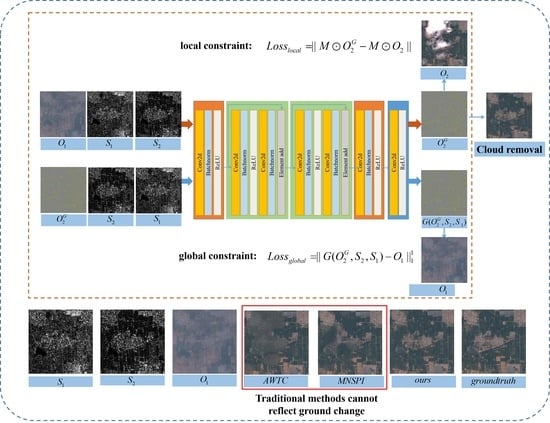

- Based on a deep neural network, we propose a novel method that removes clouds from Sentinel-2 images using multitemporal images from the Sentinel-1 and Sentinel-2 satellites. The cloud removal results reflect the ground changes in the reconstructed areas between the two periods.

- Unlike existing SAR-based methods, which need large training datasets, the proposed method acts directly on a given corrupted optical image without a training dataset, showing that a large training dataset is not required for the cloud removal task.

- Extensive simulated and real-data experiments are conducted with multitemporal optical and SAR images from different scenes. The experimental results show that the proposed method outperforms many other cloud removal methods and adapts well to different scenes.
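One way to see how a single corrupted image can supervise its own reconstruction is to restrict the fitting error to cloud-free pixels and take predictions only under the cloud mask. The sketch below illustrates that general idea and is not the authors' loss function or network. The function names, the flat-list pixel layout, and the mask convention (1 = cloud, 0 = clear) are all assumptions made for illustration.

```python
def masked_l1_loss(prediction, observed, cloud_mask):
    """Mean absolute error over cloud-free pixels only.

    Hypothetical helper: cloud_mask uses 1 for cloudy and 0 for clear pixels.
    """
    clear = [(p, o) for p, o, m in zip(prediction, observed, cloud_mask) if m == 0]
    if not clear:
        raise ValueError("no cloud-free pixels available for supervision")
    return sum(abs(p - o) for p, o in clear) / len(clear)


def composite(prediction, observed, cloud_mask):
    """Keep observed values where the scene is clear, predicted values under clouds."""
    return [p if m == 1 else o for p, o, m in zip(prediction, observed, cloud_mask)]


# Toy example: four pixels, the last two covered by cloud.
observed = [0.2, 0.4, 0.9, 0.9]
cloud_mask = [0, 0, 1, 1]
prediction = [0.25, 0.35, 0.5, 0.6]

loss = masked_l1_loss(prediction, observed, cloud_mask)
restored = composite(prediction, observed, cloud_mask)
```

In this toy case the loss only sees the two clear pixels, so the bright cloud values never pull the prediction toward them.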

2. Methods

2.1. Problem Formulation

2.2. Method for Cloud Removal

2.3. Network Structure

3. Experiments

3.1. Experiment Settings

3.1.1. Data Introduction

3.1.2. Mask Production

3.1.3. Evaluation Methods

3.1.4. Implementation Details

3.1.5. Comparison Methods

3.2. Simulation Experiment Results

3.3. Real Experiment Results

4. Discussion

4.1. Ablation Study about Loss Function

4.2. Ablation Study about Reference Data

4.3. Time Cost

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Simulation exp | | | | |
|---|---|---|---|---|
| 1 | 22 March 2020 | 8 July 2020 | 23 March 2020 | 6 July 2020 |
| 2 | 16 November 2019 | 2 May 2020 | 14 November 2019 | 2 May 2020 |
| 3 | 20 October 2019 | 23 May 2020 | 19 October 2019 | 21 May 2020 |
| 4 | 1 November 2019 | 13 December 2019 | 31 October 2019 | 10 December 2019 |
| 5 | 18 October 2019 | 5 December 2019 | 19 October 2019 | 8 December 2019 |
| 6 | 24 March 2020 | 5 April 2020 | 26 March 2020 | 5 April 2020 |
| 7 | 27 August 2019 | 9 August 2020 | 23 August 2019 | 7 August 2020 |
| 8 | 31 August 2019 | 2 July 2020 | 5 September 2019 | 1 July 2020 |
| Real data exp | | | | |
| 1 | 9 April 2021 | 3 May 2021 | 8 April 2021 | 3 May 2021 |
| 2 | 20 April 2021 | 14 April 2021 | 23 April 2021 | 15 April 2021 |

| Method | PSNR | SSIM | CC | SAM |
|---|---|---|---|---|
| WLR | 35.9515 * | 0.9679 * | 0.9782 * | 0.2894 * |
| MNSPI | 34.9646 | 0.9661 | 0.9721 | 0.3617 |
| AWTC | 32.6143 | 0.9487 | 0.9596 | 0.4800 |
| Ours | 37.3142 * | 0.9748 * | 0.9842 * | 0.2586 * |
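For readers unfamiliar with the metrics in the table above, the following is a minimal sketch of how PSNR, CC, and SAM are commonly computed. It uses the standard textbook definitions, which may differ in detail from the evaluation code behind the reported numbers. The function names, the flat-list pixel layout, the `peak` value of 1.0, and the use of radians for SAM are assumptions. SSIM is omitted because it needs windowed local statistics.

```python
import math


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB for two equally long flat sequences."""
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs
    return 10.0 * math.log10(peak ** 2 / mse)


def correlation_coefficient(reference, estimate):
    """Pearson correlation coefficient (CC) between two flat sequences."""
    n = len(reference)
    mean_r = sum(reference) / n
    mean_e = sum(estimate) / n
    cov = sum((r - mean_r) * (e - mean_e) for r, e in zip(reference, estimate))
    var_r = sum((r - mean_r) ** 2 for r in reference)
    var_e = sum((e - mean_e) ** 2 for e in estimate)
    return cov / math.sqrt(var_r * var_e)


def spectral_angle(ref_pixel, est_pixel):
    """Angle in radians between the band vectors of one pixel."""
    dot = sum(r * e for r, e in zip(ref_pixel, est_pixel))
    norm = math.sqrt(sum(r * r for r in ref_pixel)) * math.sqrt(sum(e * e for e in est_pixel))
    # Clamp to [-1, 1] to guard against floating-point overshoot before acos.
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def mean_sam(ref_pixels, est_pixels):
    """Spectral angle mapper: average spectral angle over all pixels."""
    angles = [spectral_angle(r, e) for r, e in zip(ref_pixels, est_pixels)]
    return sum(angles) / len(angles)
```

Higher PSNR and CC indicate a closer match to the reference, while a lower SAM indicates less spectral distortion.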

| Variant | PSNR | SSIM | CC | SAM |
|---|---|---|---|---|
| W/O(TV) | 37.2542 | 0.9747 | 0.9841 | 0.2591 |
| W/O(global) | 37.2075 | 0.9746 | 0.9841 | 0.2592 |
| Baseline | 37.3142 | 0.9748 | 0.9842 | 0.2586 |
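The W/O(TV) row above refers to removing a total-variation term from the loss. As a rough illustration of what such a term measures, the sketch below computes an anisotropic total variation on a small 2-D grid. The exact TV form, its weighting, and how it is combined with the other loss terms in the paper are not given here, so the function name and the anisotropic (absolute-difference) form are assumptions.

```python
def total_variation(image):
    """Anisotropic total variation of a 2-D image given as a list of rows.

    Sums absolute differences between vertically and horizontally adjacent pixels.
    """
    rows, cols = len(image), len(image[0])
    tv = 0.0
    for i in range(rows):
        for j in range(cols):
            if i + 1 < rows:  # vertical neighbour
                tv += abs(image[i + 1][j] - image[i][j])
            if j + 1 < cols:  # horizontal neighbour
                tv += abs(image[i][j + 1] - image[i][j])
    return tv


flat = [[1.0, 1.0], [1.0, 1.0]]   # constant image, no variation
edge = [[0.0, 1.0], [0.0, 1.0]]   # one vertical edge crossing two rows

tv_flat = total_variation(flat)
tv_edge = total_variation(edge)
```

A term of this kind penalizes pixel-to-pixel jumps, which is why it is typically used to discourage noisy reconstructions.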

| Variant | PSNR | SSIM | CC | SAM |
|---|---|---|---|---|
| W/O( and ) | 36.3542 | 0.9724 | 0.9805 | 0.2803 |
| W/O() | 37.1386 | 0.9743 | 0.9839 | 0.2598 |
| W/O() | 36.4235 | 0.9725 | 0.9808 | 0.2745 |
| Ours | 37.1953 | 0.9746 | 0.9841 | 0.2593 |

| | WLR | MNSPI | AWTC | Ours |
|---|---|---|---|---|
| Time | <4 s | 26 s | 183 s | 842 s |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gao, J.; Yi, Y.; Wei, T.; Zhang, G. Sentinel-2 Cloud Removal Considering Ground Changes by Fusing Multitemporal SAR and Optical Images. Remote Sens. 2021, 13, 3998. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13193998
