1. Introduction

The river cross-section is an essential parameter in water resources planning, hydraulic engineering, flow discharge modeling, ecological assessment, and river management [1,2,3,4,5,6,7,8,9,10]. In a typical survey, the cross-section is covered by water, so investigators must measure the distance from the water surface to the riverbed, i.e., the bathymetry. Bathymetry is usually measured directly with water-contact equipment, such as sounding ropes [3], lead fish [11], real-time kinematic (RTK) positioning [12], and acoustic Doppler current profilers (ADCPs) [7]. These methods map cross-sections with very high accuracy, but investigators must carry heavy, expensive equipment into the field and spend several hours or even days collecting data [13]. As a result, surveys are rarely updated or are conducted only at sites of special interest [14]. Therefore, many researchers have recently used remote sensing (RS) techniques, e.g., satellites or unmanned aerial vehicles (UAVs), to retrieve river bathymetry [8,15,16,17,18,19].

Among recent RS-based bathymetry retrieval studies, Hernandez et al. [15] used light detection and ranging (LiDAR) in 2016 to measure the seabed topography of the La Parguera Nature Reserve, and retrieved water depth from WorldView-2 (WV2) satellite imagery using an algorithm based on simple linear regression (REG) of spectral band ratios. The results showed a root mean square error (RMSE) of 1.260 m for depths of 1 to 10 m, and indicated that atmospheric conditions, water quality, and turbidity affect the accuracy of water depth retrieval.
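The band-ratio regression idea can be illustrated with a short sketch. It assumes the widely used log-ratio form, depth ≈ m1·ln(n·B_blue)/ln(n·B_green) + c, fitted by ordinary least squares. The constant `n = 1000`, the choice of blue and green bands, the helper names, and the toy reflectances are all assumptions made for illustration, not the configuration used in [15].

```python
import math

def log_ratio(blue: float, green: float, n: float = 1000.0) -> float:
    """Log band ratio ln(n*blue) / ln(n*green); n is an assumed constant keeping both logs positive."""
    return math.log(n * blue) / math.log(n * green)

def fit_linear(x, y):
    """Ordinary least squares for y = m1 * x + c; returns (m1, c)."""
    k = len(x)
    mx = sum(x) / k
    my = sum(y) / k
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    m1 = sxy / sxx
    return m1, my - m1 * mx

def rmse(observed, predicted):
    """Root mean square error between observed and predicted depths."""
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / len(observed))

# Hypothetical calibration pixels: (blue, green) reflectance pairs
samples = [(0.08, 0.06), (0.07, 0.06), (0.06, 0.06), (0.05, 0.06)]
ratios = [log_ratio(b, g) for b, g in samples]
# Synthetic "surveyed" depths (m), constructed to be exactly linear in the ratio
depths = [5.0 * r - 4.0 for r in ratios]

m1, c = fit_linear(ratios, depths)
fitted = [m1 * r + c for r in ratios]
print(m1, c, rmse(depths, fitted))
```

Because the synthetic depths are exactly linear in the ratio, the fit recovers the slope and intercept with near-zero RMSE. With real imagery, the scatter around the line is what produces errors such as the 1.260 m RMSE reported above.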

Kim et al. [17] conducted a UAV-based water depth survey in 2019. They used principal component analysis (PCA) to select the most influential spectral bands and then developed water depth retrieval models with REG, artificial neural network (ANN), and geographically weighted regression (GWR) algorithms. The coefficients of determination (R²) of REG, ANN, and GWR were 0.587, 0.595, and 0.851, respectively, indicating that GWR gave the best fit of the three and that the choice of spectral bands influences the retrieval results.
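GWR differs from a global REG because its coefficients are re-estimated at every location, with each sample weighted by its distance from that location. The single-predictor sketch below makes that difference concrete. It assumes a fixed Gaussian kernel with a hypothetical bandwidth of 5 m; in practice the bandwidth is usually chosen by cross-validation or AICc. The helper names and toy data are illustrative and are not taken from [17].

```python
import math

def gaussian_weight(distance: float, bandwidth: float) -> float:
    """Fixed Gaussian kernel: weight decays with distance from the regression point."""
    return math.exp(-0.5 * (distance / bandwidth) ** 2)

def gwr_local_fit(coords, band, depth, target, bandwidth):
    """Weighted least squares depth = b0 + b1 * band, centred on `target` (x, y)."""
    w = [gaussian_weight(math.dist(c, target), bandwidth) for c in coords]
    sw = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, band)) / sw
    my = sum(wi * yi for wi, yi in zip(w, depth)) / sw
    sxx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, band))
    sxy = sum(wi * (xi - mx) * (yi - my) for wi, xi, yi in zip(w, band, depth))
    b1 = sxy / sxx
    return my - b1 * mx, b1

# Hypothetical data: two reaches 100 m apart with different band-depth relations
coords = [(0, 0), (1, 0), (0, 1), (1, 1), (100, 0), (101, 0), (100, 1), (101, 1)]
band = [0.1, 0.2, 0.3, 0.4, 0.1, 0.2, 0.3, 0.4]
depth = [2.0 * b + 0.1 for b in band[:4]] + [0.5 * b + 1.0 for b in band[4:]]

upstream = gwr_local_fit(coords, band, depth, (0.5, 0.5), bandwidth=5.0)
downstream = gwr_local_fit(coords, band, depth, (100.5, 0.5), bandwidth=5.0)
print(upstream, downstream)
```

A single global regression would average the two reaches into one compromise line. The local fits instead recover each reach's own intercept and slope, which is one plausible reason GWR can reach a higher R² when the band-depth relation varies in space.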

Kasvi et al. [16] conducted a water depth retrieval study of the Pulmanki River, Finland, in 2019, comparing ADCP measurements, REG, and structure from motion (SfM) applied to UAV aerial photographs. ADCP measured water depth most accurately, with a mean absolute error (MAE) of 0.030 to 0.070 m, followed by REG (0.050–0.170 m) and SfM (0.180–2.980 m). The mean errors (ME) followed the same order: ADCP (−0.030 m to 0.000 m), REG (−0.170 m to 0.020 m), and SfM (−0.440 m to 3.200 m). The authors also pointed out that the error of image-based retrieval is similar to that of ADCP measurements. They gave three reasons to prefer image-based retrieval where conditions are suitable: ADCP cannot measure shallow depths of 0 to 0.2 m, image-based retrieval costs considerably less than ADCP, and field data are often lacking.
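The error statistics compared in these studies follow standard definitions, and a short sketch shows how they relate. It assumes that ME is computed as predicted minus observed (sign conventions differ between studies) and that R² is 1 − SS_res/SS_tot (some studies instead report the squared Pearson correlation). The depth values are hypothetical.

```python
import math

def error_metrics(observed, predicted):
    """MAE, ME, RMSE and R² for paired depth observations (m)."""
    n = len(observed)
    # Residual convention assumed here: predicted minus observed
    residuals = [p - o for o, p in zip(observed, predicted)]
    mae = sum(abs(r) for r in residuals) / n
    me = sum(residuals) / n  # positive means overestimation on average
    ss_res = sum(r * r for r in residuals)
    rmse = math.sqrt(ss_res / n)
    mean_obs = sum(observed) / n
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    r2 = 1.0 - ss_res / ss_tot
    return {"MAE": mae, "ME": me, "RMSE": rmse, "R2": r2}

# Hypothetical surveyed vs. retrieved depths (m), for illustration only
observed = [0.20, 0.50, 0.80, 1.10]
retrieved = [0.25, 0.45, 0.90, 1.10]
metrics = error_metrics(observed, retrieved)
print(metrics)
```

Positive and negative residuals cancel in ME but not in MAE. This is why a method can show a near-zero ME together with a larger MAE, and why the two are reported side by side.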

In 2022, Janowski et al. [18] developed a methodological approach to assess the suitability of airborne LiDAR bathymetry for automated seafloor classification and mapping with machine learning (ML) classifiers. The random forest algorithm performed best, and the overall accuracy across all scenarios ranged from over 75% to 91%, with a median of 84%.

Although the state-of-the-art techniques above model bathymetry reasonably and reliably, they were mainly developed for large areas and scales, e.g., wide rivers [9,16,20,21], lakes [5], or oceans [10,18,19,22]. In addition, because satellite spatial and temporal resolution is limited and cloud cover interferes with observations, most of these results apply only to large cross-sections and deep-water areas [23]. As a result, satellite imagery cannot reliably retrieve the riverbed cross-sections of the many small, shallow rivers. Existing bathymetry retrieval models are therefore difficult to apply to narrow, shallow rivers whose morphology changes rapidly [7], such as the mountainous rivers of Taiwan.

Taiwan lies in a typhoon-prone region with steep topography. Rainfall is unevenly distributed between high- and low-flow periods, and discharge varies considerably. River cross-sections therefore change often, and cross-section survey data need frequent updating [2,6]. However, it is not easy to survey river cross-sections directly, in particular their bathymetry (i.e., water depth). Moreover, most of Taiwan's rivers lie in mountainous areas. Besides its 131 named rivers, Taiwan has more than 1000 submerged and wild streams, which are narrow and shallow (Figure 1). For bathymetric surveys of such rivers, the rapid recent development of UAVs and advanced machine learning (ML) algorithms may compensate for the shortcomings of satellite-based water depth retrieval. Samboko et al. [9] reviewed studies on the use of UAVs for river surveys. They noted that UAVs can carry sensors into dangerous or inaccessible areas, and that calibrating the method with suitable models can improve its applicability. Ashphaq et al. [19] reviewed over 100 articles on satellite-derived bathymetry (SDB) retrieval models published over the past 50 years. They found that ML algorithms perform better where water depth is less than 20 m, although more studies are needed to evaluate their applicability.

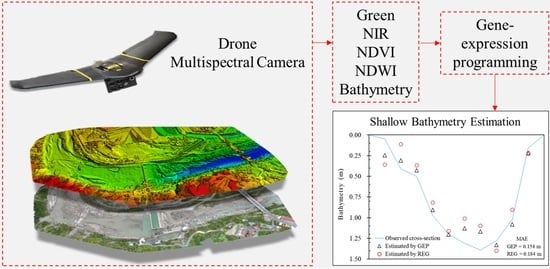

Taiwan's mountainous areas contain many narrow, shallow rivers whose riverbeds change frequently, so manual cross-section surveys are difficult. It also remains unknown whether existing RS-based bathymetry retrieval models apply to shallow, narrow areas. This study therefore aimed to bridge the gap between practical needs and the shortcomings of existing bathymetry retrieval models through the following objectives:

- (1) using a UAV with a multispectral camera to capture images and surveying cross-sections under shallow bathymetry conditions;
- (2) applying an ML algorithm to establish a water depth retrieval model for the rivers of Taiwan's mountainous areas, which has not yet been reported in related studies;
- (3) encapsulating the established model in a Python-based program for further application. With this program, a multispectral sensor mounted on a UAV can be used to estimate water depth in shallow rivers or near-shore areas that are difficult to measure, under conditions similar to those of this study.
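Objective (3) amounts to wrapping a fitted model as a function that runs pixel by pixel over a co-registered multispectral image. The sketch below shows one possible structure for that step. The function names, the band keys `blue` and `red`, and the linear expression in `placeholder_depth_model` are all hypothetical. The expression is not the retrieval model developed in this study, and the water mask is assumed to be supplied from elsewhere.

```python
from typing import Callable, Dict, List, Optional

Raster = List[List[float]]

def placeholder_depth_model(pixel: Dict[str, float]) -> float:
    """Hypothetical stand-in for a fitted retrieval expression (NOT the study's model)."""
    return 0.5 + 2.0 * (pixel["blue"] - pixel["red"])

def retrieve_depth_map(stack: Dict[str, Raster],
                       model: Callable[[Dict[str, float]], float],
                       water_mask: List[List[bool]]) -> List[List[Optional[float]]]:
    """Apply `model` to every water pixel of co-registered band rasters; dry pixels become None."""
    depth_map: List[List[Optional[float]]] = []
    for i, mask_row in enumerate(water_mask):
        row: List[Optional[float]] = []
        for j, is_water in enumerate(mask_row):
            if not is_water:
                row.append(None)
                continue
            pixel = {name: band[i][j] for name, band in stack.items()}
            # Negative estimates are physically meaningless, so clamp them to zero
            row.append(max(0.0, model(pixel)))
        depth_map.append(row)
    return depth_map

# Hypothetical 2 x 2 reflectance rasters and water mask
stack = {"blue": [[0.10, 0.12], [0.08, 0.05]],
         "red": [[0.05, 0.04], [0.40, 0.30]]}
mask = [[True, True], [True, False]]
depth = retrieve_depth_map(stack, placeholder_depth_model, mask)
print(depth)
```

Passing the model in as a callable means a different retrieval expression can be swapped in without touching the raster-handling code.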

4. Discussion

In this study, a water depth retrieval model was developed for the mountainous rivers of southern Taiwan. The results show that large absolute errors occur in turbid water, which agrees with the findings of [5,16,38]. This study also compares its modeled results and errors with those of related studies. The study areas, data sources, and data ranges differ among these studies, so their results cannot be compared directly. Even so, they give a rough indication of how accurately different models retrieve water depth.

As shown in Table 6, the GEP model developed in this study has a higher MAE (0.154 m) than the REG model of [16] (0.112 m). This MAE is also higher than that of ADCP contact measurements at water depths of 0.20 to 1.50 m (0.053 m), but lower than that of water depths obtained with the SfM method (0.740 m). In terms of ME, the retrieval errors of this study (REG = −0.008 m, GEP = 0.012 m) are similar to those of the ADCP contact measurements. They are also smaller than those of water depths obtained by remote sensing combined with deep learning or random forest algorithms [16,21,39,40]. Finally, in terms of RMSE, GEP outperformed water depth retrieval models developed in previous studies with machine learning [23,34,40,41] or linear algorithms [15,23,34]. These comparisons indicate that the GEP water depth retrieval model developed in this study is reasonably accurate. A UAV can quickly survey a large area as needed, and the model's MAE is of the same order of magnitude as that of ADCP at only 10% of the cost of an ADCP. The method should therefore be able to meet the shallow water depth survey requirements of mountainous rivers in Taiwan.

The established bathymetry model achieved reasonable retrieval accuracy in the study area. Nevertheless, water depth retrieval models are influenced by many factors. These include light sources, turbidity, and the penetration range [18,39]; water composition, underwater vegetation cover, and riverbed composition [16]; and parameters that may affect acoustic wave propagation, such as salinity and temperature [19]. Depths estimated near the water surface or near the bed may therefore be less accurate, although the error distributions show that GEP avoids large errors. In addition, this study used higher-resolution multispectral images than other studies did. Differences in image resolution, aircraft type, and multispectral camera bands may therefore affect the retrieval results. In practice, a model should be selected according to how it was developed, the accuracy requirements, and the results of current field surveys.

5. Conclusions

Rivers in Taiwan's mountainous areas are often narrow and shallow. Under the extreme rainfall associated with climate change, riverbed scour and deposition change rapidly, so obtaining reasonable riverbed cross-sections quickly has become an important issue in river engineering. In this study, a UAV equipped with a multispectral camera captured high-resolution multispectral images of mountain streams in Taiwan to obtain river cross-sections rapidly. Bathymetry retrieval models were then developed with the gene expression programming (GEP) and REG algorithms. The results show that GEP improved the MAE by 16.3% compared with the REG model. Compared with related image-based studies of water depth retrieval, the GEP model achieved similar or better accuracy and avoided large errors. GEP is therefore suitable for retrieving water depth in Taiwan's mountain rivers and can support river management planning and disaster prevention assessments. It can also be used in shallow rivers or near-shore areas that are difficult to measure, under conditions similar to those of this study.
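In GEP, each candidate formula is encoded as a fixed-length string (a K-expression), and evolution mutates and recombines these strings. Each string is decoded breadth-first into an expression tree before it is evaluated. The sketch below shows only this decoding and evaluation step. It assumes a single gene, the function set {+, −, ×, protected ÷}, and hypothetical terminals `b` and `g` standing for two band reflectances. It does not reproduce the gene structure, settings, or evolved model of this study.

```python
from typing import Dict, List

# Function set with arities; any other symbol is treated as a terminal (arity 0)
ARITY = {"+": 2, "-": 2, "*": 2, "/": 2}

def protected_div(a: float, b: float) -> float:
    """Division returning 1.0 for a near-zero divisor, a common genetic programming convention."""
    return a / b if abs(b) > 1e-12 else 1.0

OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": protected_div,
}

def decode_karva(gene: str) -> List[List[int]]:
    """Breadth-first (Karva) decoding: children[i] lists the child positions of symbol i.

    Only the coding region is decoded; trailing unused symbols are ignored.
    A gene built with the head/tail rule (tail = head * (max_arity - 1) + 1)
    never runs past its end.
    """
    children: List[List[int]] = [[]]
    next_free = 1
    i = 0
    while i < next_free:
        k = ARITY.get(gene[i], 0)
        children[i] = list(range(next_free, next_free + k))
        children.extend([] for _ in range(k))
        next_free += k
        i += 1
    return children

def evaluate(gene: str, terminals: Dict[str, float]) -> float:
    """Evaluate the expression tree encoded by `gene` for the given terminal values."""
    children = decode_karva(gene)

    def node_value(idx: int) -> float:
        sym = gene[idx]
        if sym in OPS:
            left, right = (node_value(c) for c in children[idx])
            return OPS[sym](left, right)
        return terminals[sym]

    return node_value(0)

# Hypothetical terminals: b and g stand for two band reflectances
values = {"b": 0.3, "g": 0.5}
print(evaluate("+*-bggb", values))  # gene decodes to (b * g) + (g - b)
print(evaluate("*b+gbgg", values))  # gene decodes to b * (g + b); last two symbols unused
```

The second gene shows why GEP strings always decode to valid expressions: the decoder stops when every function node has its children, so any leftover tail symbols are simply ignored.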

That said, a suitable model for practical applications should still be chosen by considering how the model was developed, the accuracy requirements, and field survey results. This study examined only the capability of the GEP algorithm for shallow rivers in the southern mountainous region of Taiwan. Its retrieval accuracy was sufficient for evaluating water depth in the study area, so it can serve as an alternative when on-site water depth data are unavailable, but the results cannot be fully compared with other case studies. Future research could conduct more extensive case studies or meta-analyses of RS water depth retrieval techniques, e.g., deep learning algorithms, generalized additive models (GAMs), and decision trees.