A Feasibility Study of Map-Based Dashboard for Spatiotemporal Knowledge Acquisition and Analysis

Abstract

1. Introduction

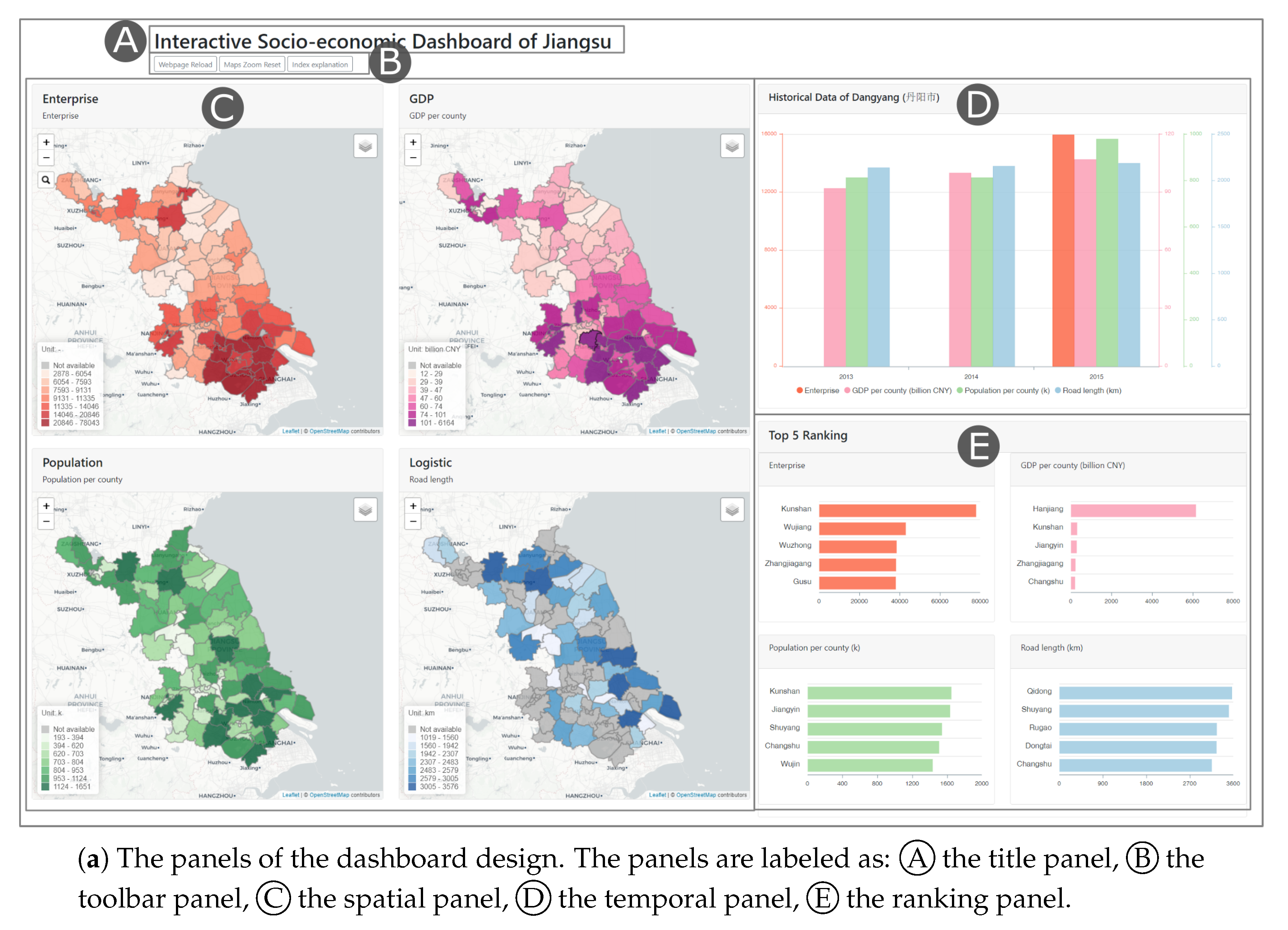

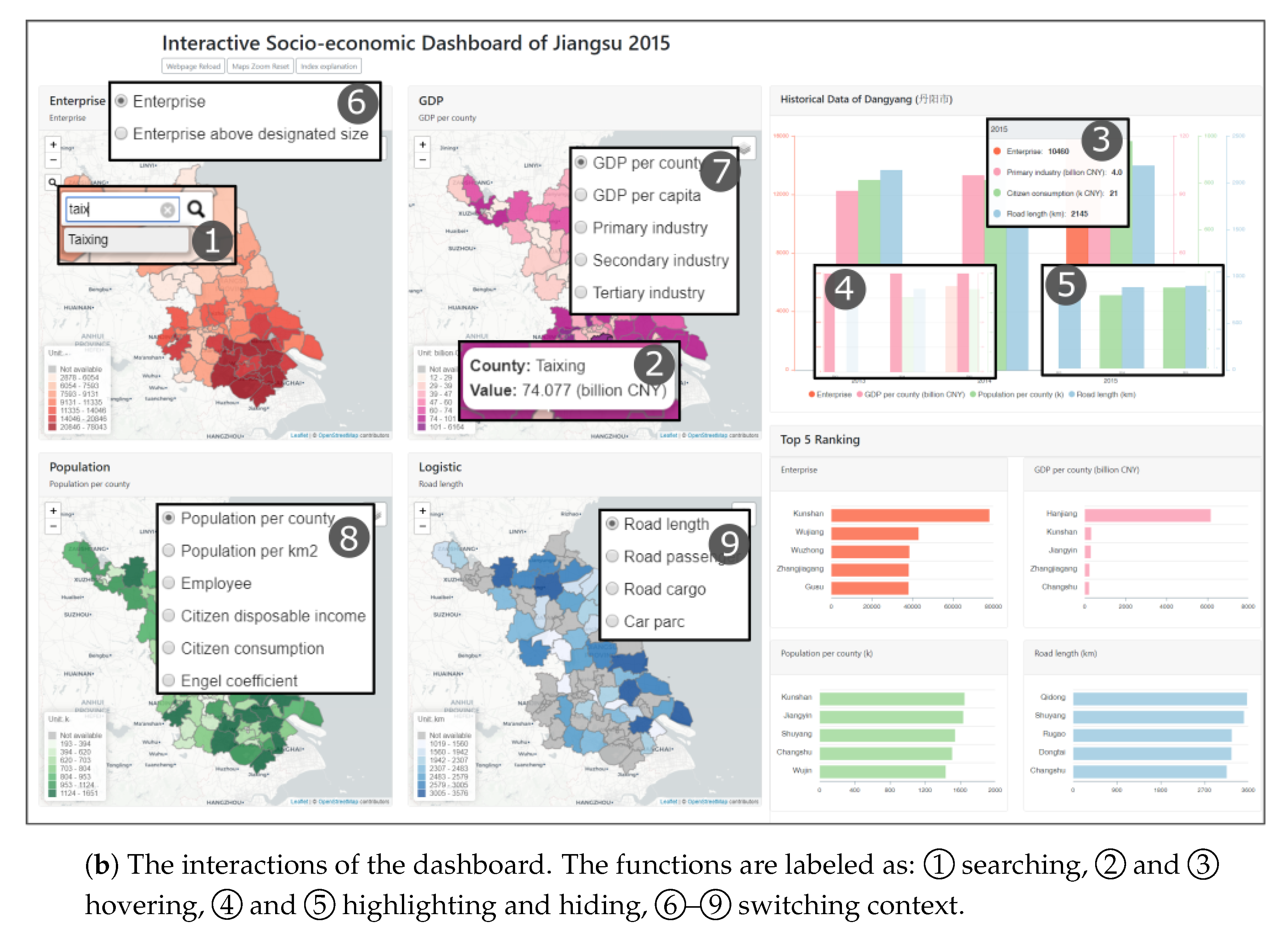

2. Design of the Dashboard

2.1. Background

2.2. Test Data

2.3. User Interface

3. Design of the Evaluation Experiment

3.1. Participants

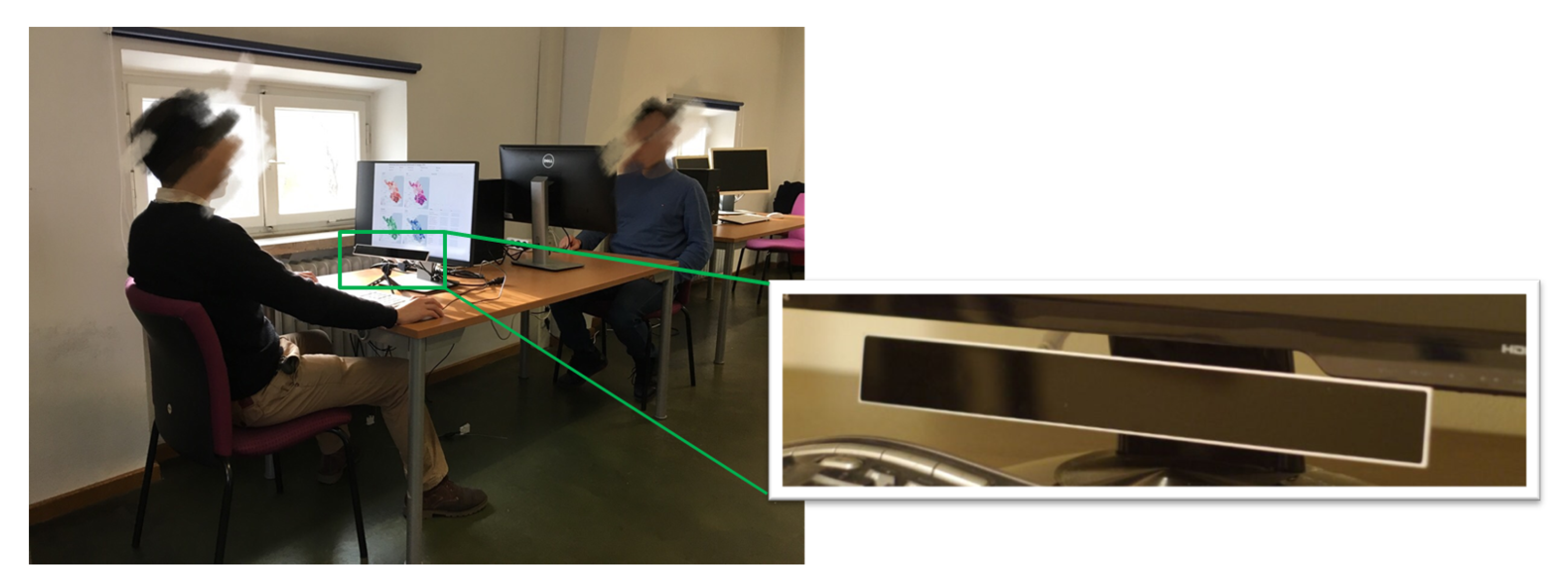

3.2. Apparatus

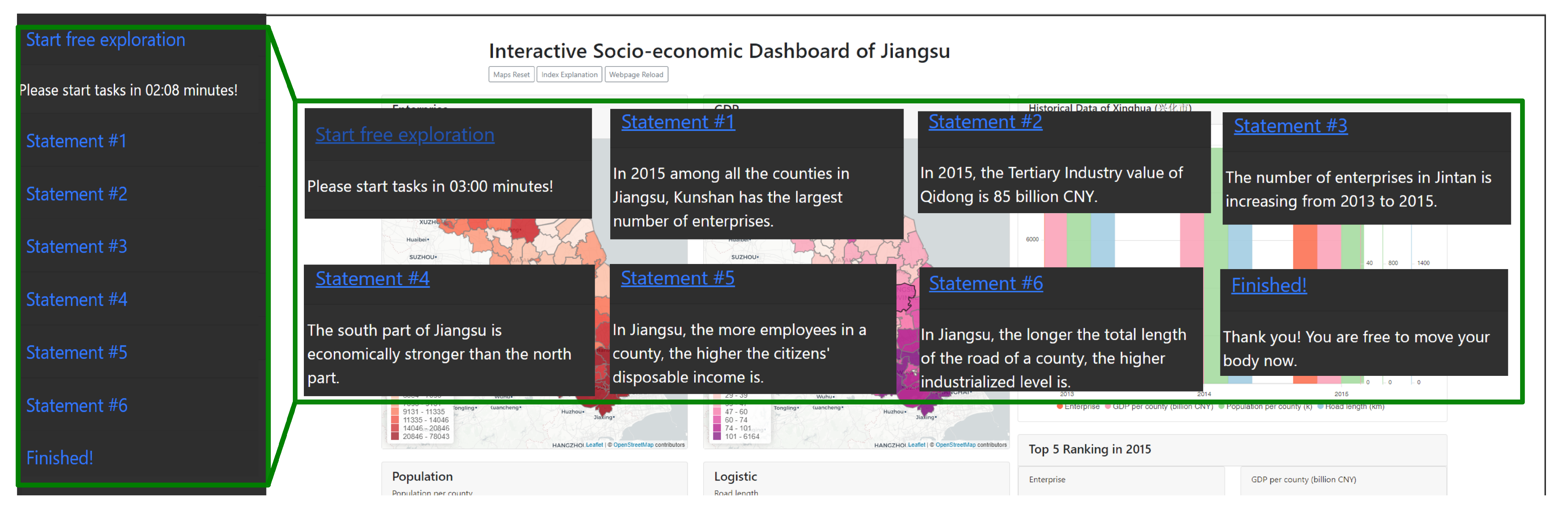

3.3. Benchmark Tasks

3.4. Experiment Tool

3.5. Procedure

3.6. Methods of Analysis

4. Evaluation Results

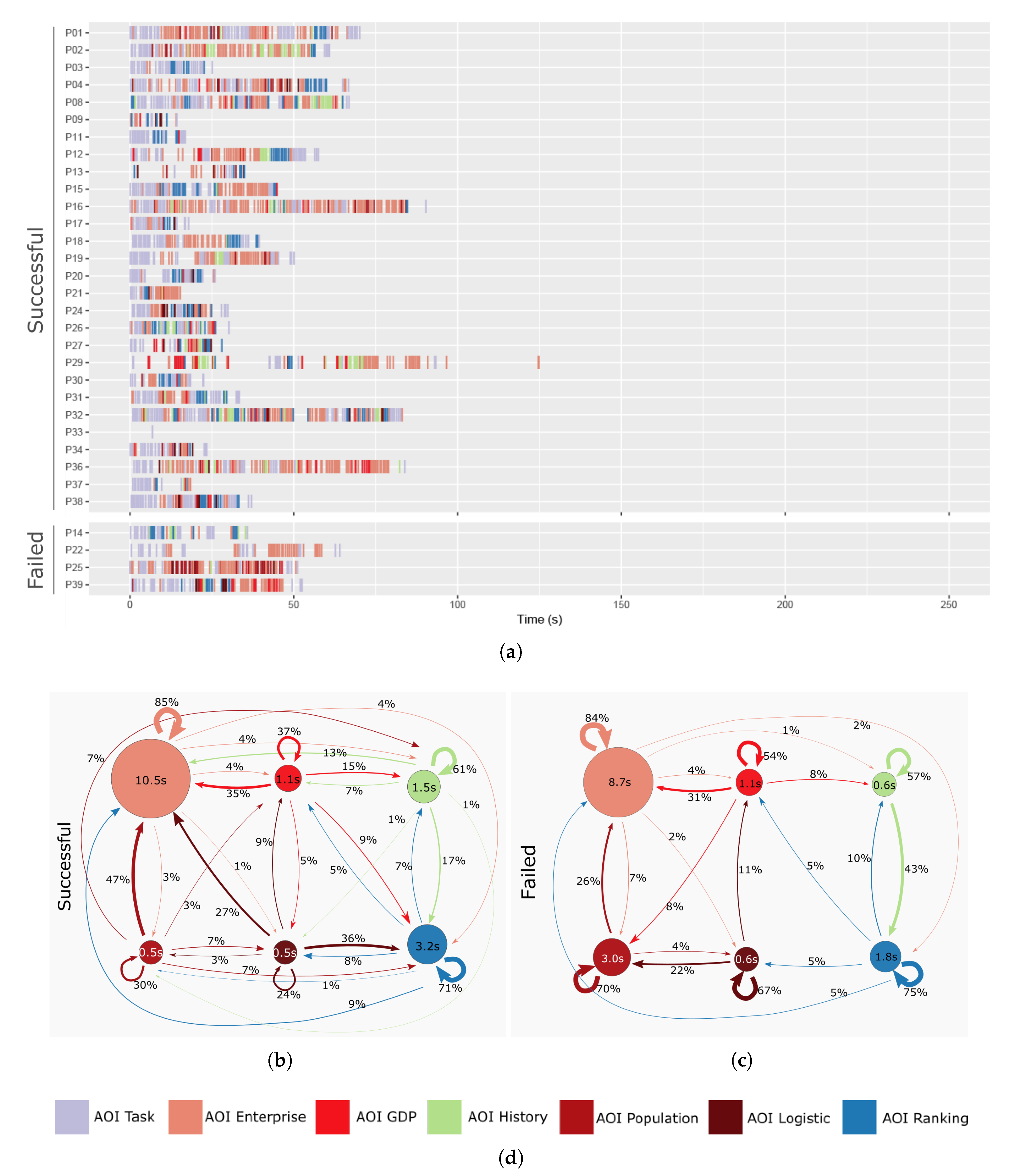

4.1. Fixation in Free Exploration

4.2. Success Rate

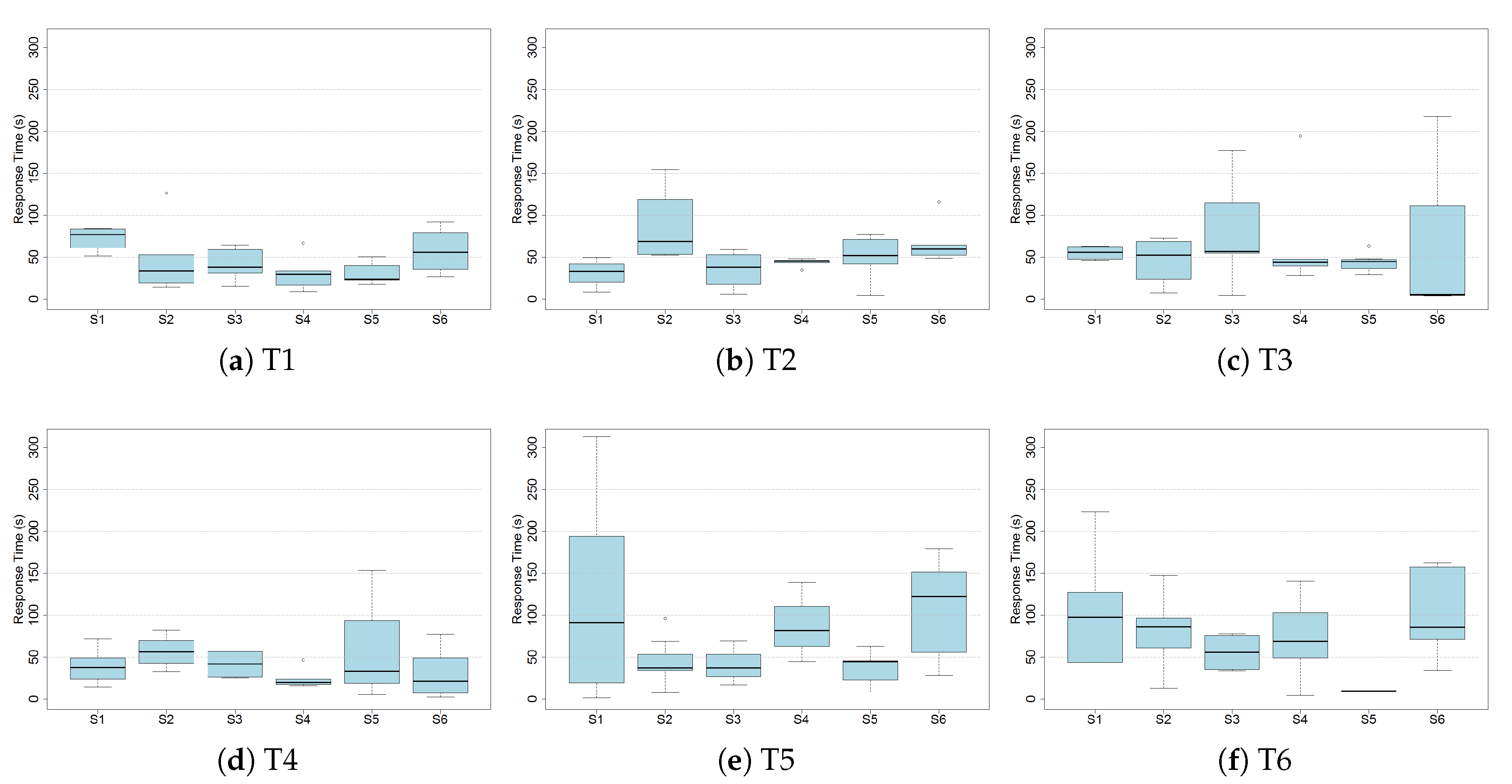

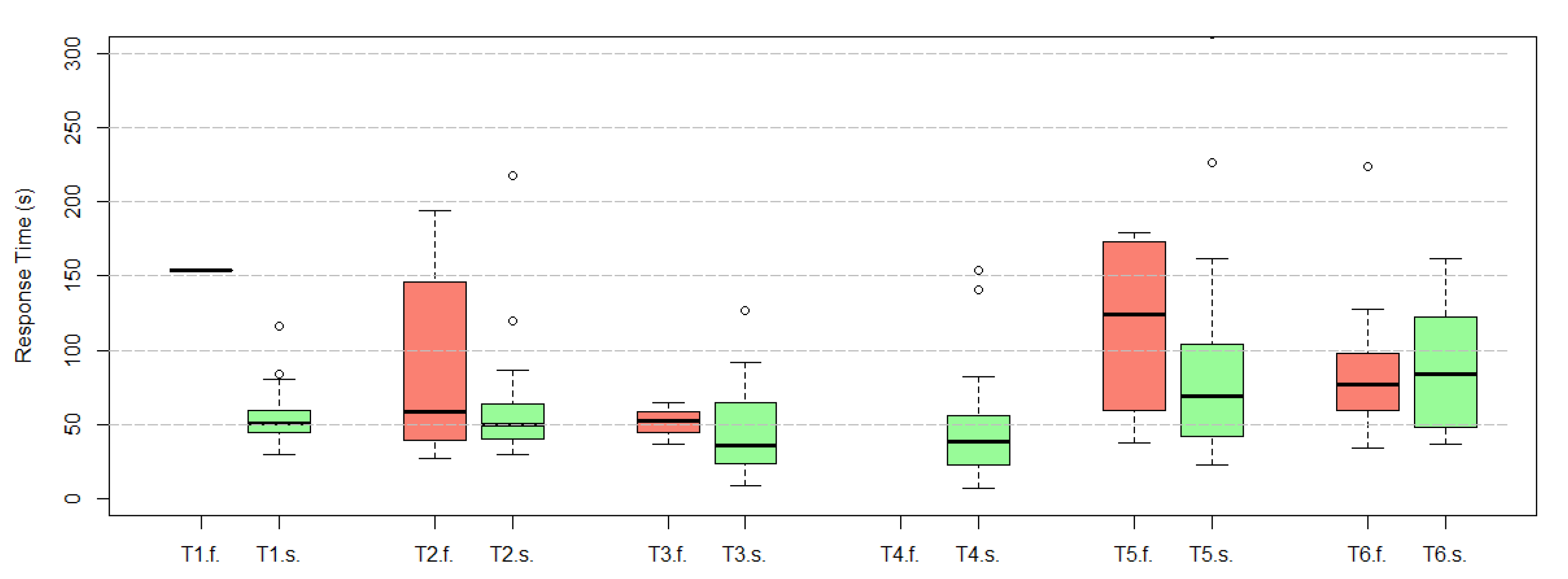

4.3. Response Time

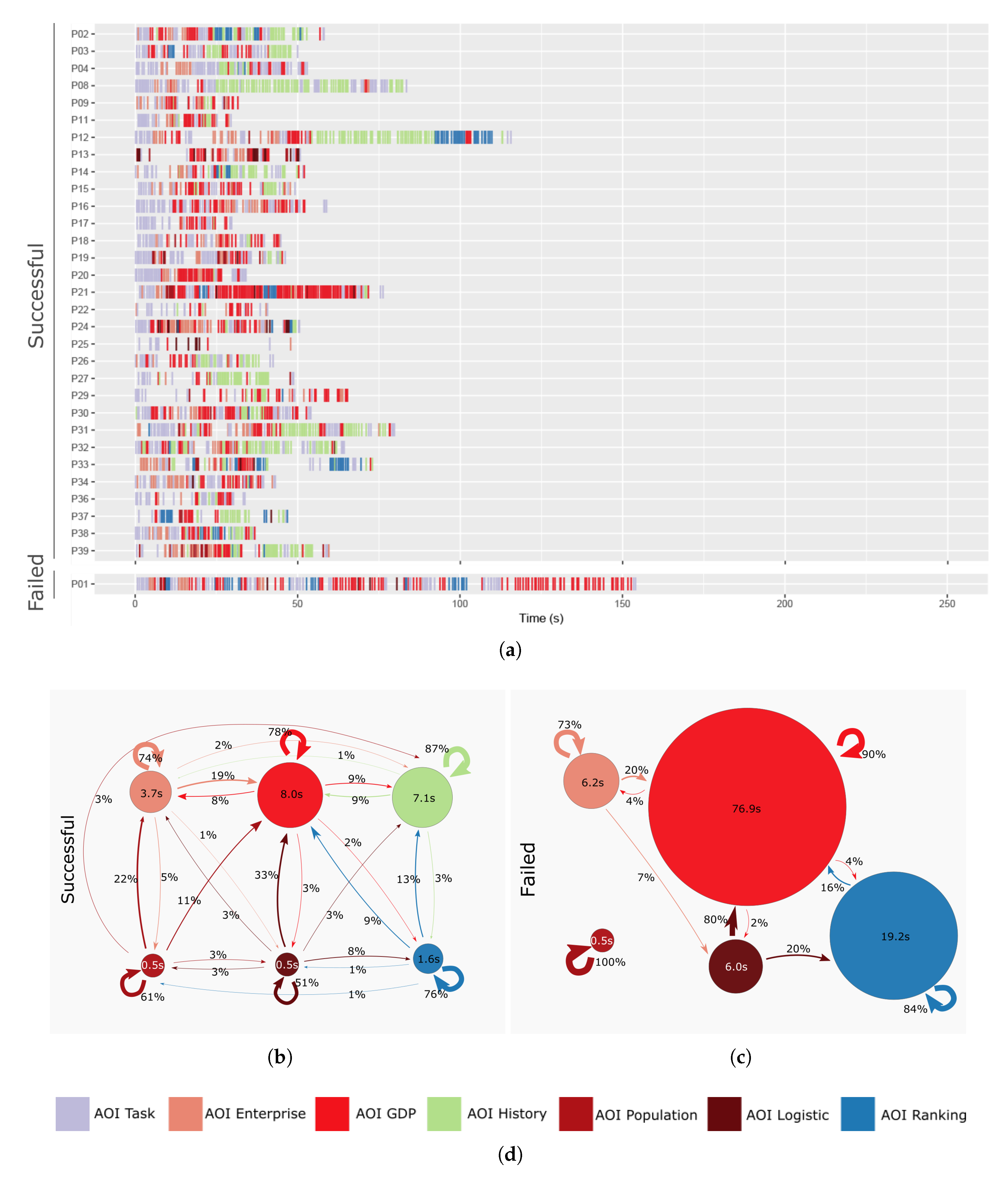

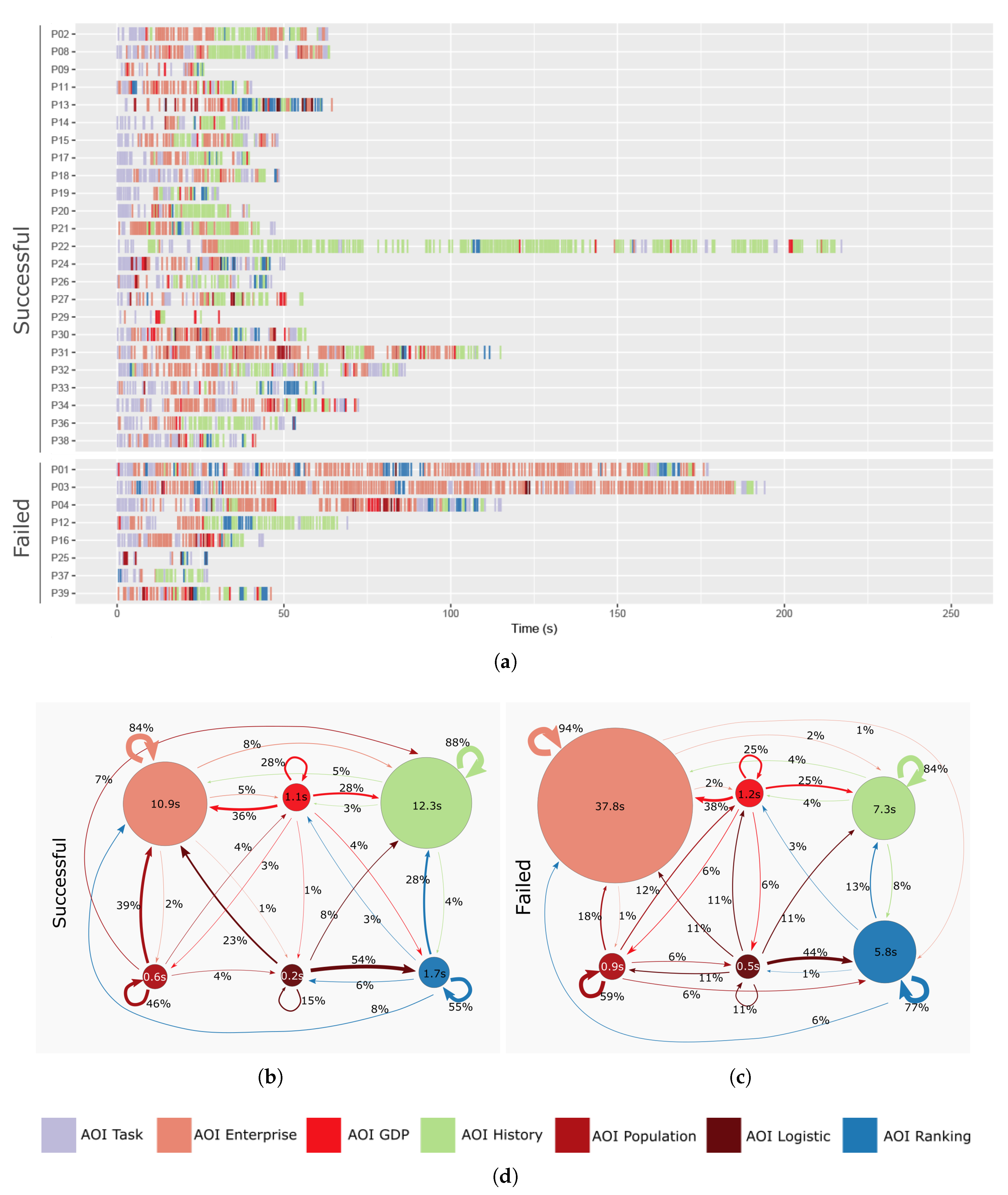

4.4. Dwell and Transition during Tasks

4.5. Feedback

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AOI | area of interest |
| CEO | chief executive officer |
| SME | small and medium-sized enterprise |
| GDP | gross domestic product |
| CNY | Chinese Yuan |

Appendix A

| Category | Factor | Explanation |
|---|---|---|
| Enterprise | Enterprise | The total number of enterprises in a county |
| Enterprise | Enterprise above designated size | The number of enterprises with annual main business revenue of 20 million CNY or more |
| GDP | GDP per county | The total gross domestic product of one county |
| GDP | GDP per capita | The average GDP per person |
| GDP | Primary industry | The GDP value of the county from natural raw materials, such as mining, agriculture, or forestry |
| GDP | Secondary industry | The GDP value of industries that convert the raw materials provided by the primary industry, such as manufacturing |
| GDP | Tertiary industry | The GDP value from the provision of services |
| Population | Population per county | The total population of one county |
| Population | Population per km² | The population density of one county |
| Population | Employee | The total number of employees in one county |
| Population | Citizen disposable income | The average citizen disposable income of one county |
| Population | Citizen consumption | The average citizen consumption of one county |
| Population | Engel coefficient | The proportion of income spent on food |
| Logistic | Road length | The total road length in one county |
| Logistic | Road passenger | The total number of passengers transported in one county in one year |
| Logistic | Road cargo | The total weight of the cargo transported in one county in one year |
| Logistic | Car parc | The number of cars and other vehicles in a region or market |

| Task Number | Statement | Answer |
|---|---|---|
| Task 1 | In 2015, the Tertiary Industry value of Qidong is 85 billion Chinese Yuan (CNY). | Wrong |
| Task 2 | The number of enterprises in Jintan increases from 2013 to 2015. | Unknown |
| Task 3 | In 2015, among all the counties in Jiangsu, Kunshan has the largest number of enterprises. | Correct |
| Task 4 | The south part of Jiangsu is economically stronger than the north part. | Correct |
| Task 5 | In Jiangsu, the more employees in a county, the higher the citizens’ disposable income is. | Wrong |
| Task 6 | In Jiangsu, the longer the total length of the road of a county, the higher the industrialized level is. | Wrong |
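As a minimal sketch of how answers to these statements could be turned into per-task success rates, the snippet below scores responses against the answer key from the task table. The participant responses and the function name `success_rates` are hypothetical and serve only to illustrate the calculation; they are not data or tooling from the study.

```python
# Answer key taken from the benchmark task table (T1..T6).
ANSWER_KEY = {
    "T1": "Wrong",
    "T2": "Unknown",
    "T3": "Correct",
    "T4": "Correct",
    "T5": "Wrong",
    "T6": "Wrong",
}

# Hypothetical responses from four participants (illustrative, not study data).
responses = [
    {"T1": "Wrong", "T2": "Unknown", "T3": "Correct", "T4": "Correct", "T5": "Wrong", "T6": "Wrong"},
    {"T1": "Wrong", "T2": "Correct", "T3": "Correct", "T4": "Correct", "T5": "Wrong", "T6": "Correct"},
    {"T1": "Wrong", "T2": "Unknown", "T3": "Correct", "T4": "Correct", "T5": "Wrong", "T6": "Unknown"},
    {"T1": "Wrong", "T2": "Unknown", "T3": "Correct", "T4": "Correct", "T5": "Wrong", "T6": "Wrong"},
]


def success_rates(responses, key):
    """Return the percentage of participants whose answer matches the key, per task."""
    n = len(responses)
    return {
        task: 100.0 * sum(r.get(task) == answer for r in responses) / n
        for task, answer in key.items()
    }


rates = success_rates(responses, ANSWER_KEY)
for task, rate in rates.items():
    print(f"{task}: {rate:.1f}%")
```

Treating "Unknown" as a third answer option rather than a missing value keeps Task 2 scorable in the same way as the other tasks.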

| Tasks | T1 | T2 | T3 | T4 | T5 | T6 |
|---|---|---|---|---|---|---|
| Description | Find an attribute of a place. | Find the attribute temporal trend of a place. | Identify a place with the highest attribute value. | Summarize the spatial distribution of an attribute. | Compare the spatial distribution of two attributes. | Abstract the attributes. |
| Search Area | Single | Single | Multiple | Multiple | Multiple | Multiple |
| Search Time | Single | Multiple | Single | Single | Single | Single |
| Search Attribute | Single | Single | Single | Single | Multiple | Multiple |
| Query Type | State | Change | Order | State | State | State |
| Cognitive Operation | Identification | Comparison | Identification, Comparison | Comparison, Summary | Comparison, Summary | Comparison, Summary, Deduction |
| Dashboard Interaction | Query, Switch content | Query, Highlight * | Switch content * | Switch content * | Switch content | Switch content * |
| Data Availability | High | High | High | High | High | Middle |

| Metric | Description |
|---|---|
| Sequence | The order of fixations within the AOIs. |
| Dwell time | The summed duration of all fixations and saccades within an AOI. |
| Transition | The movement from one AOI to another. |
| Return | A transition that returns to the same AOI, also known as a revisit. |
| Transition probability | The probability of the fixation moving from one AOI to another AOI in a sequence. |
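The metrics above can be sketched on a small fixation log. This is an illustrative sketch under stated assumptions, not the analysis pipeline used in the study: the AOI labels, the durations, and the function name `aoi_metrics` are hypothetical; dwell time is approximated by summed fixation durations only (saccades are ignored); and a return is read as re-entering an AOI that was visited earlier in the sequence.

```python
from collections import Counter
from itertools import groupby

# Hypothetical fixation log in temporal order: (AOI label, duration in ms).
# AOI names and durations are illustrative only, not data from the study.
fixations = [
    ("spatial", 400),
    ("spatial", 250),
    ("temporal", 300),
    ("spatial", 200),
    ("ranking", 150),
    ("spatial", 350),
]


def aoi_metrics(fixations):
    """Derive sequence, dwell time, transitions, revisits and transition probabilities."""
    # Sequence: order of AOIs visited, with consecutive repeats collapsed into one visit.
    sequence = [aoi for aoi, _ in groupby(label for label, _ in fixations)]

    # Dwell time: summed fixation duration per AOI (saccade time is ignored here).
    dwell_ms = Counter()
    for label, duration in fixations:
        dwell_ms[label] += duration

    # Transition: a move from one AOI to a different AOI.
    transitions = Counter(zip(sequence, sequence[1:]))

    # Return (assumed reading): entering an AOI that was already visited earlier.
    revisits = Counter()
    seen = set()
    for label in sequence:
        if label in seen:
            revisits[label] += 1
        seen.add(label)

    # Transition probability: share of moves out of a source AOI that reach each target.
    outgoing = Counter()
    for (source, _), count in transitions.items():
        outgoing[source] += count
    transition_prob = {
        (source, target): count / outgoing[source]
        for (source, target), count in transitions.items()
    }

    return {
        "sequence": sequence,
        "dwell_ms": dict(dwell_ms),
        "transitions": dict(transitions),
        "revisits": dict(revisits),
        "transition_prob": transition_prob,
    }


metrics = aoi_metrics(fixations)
print(metrics["sequence"])
print(metrics["transition_prob"])
```

Normalizing by the outgoing count of each source AOI makes every row of the resulting transition matrix sum to one, which is one common convention; normalizing by the total number of transitions would be an equally defensible alternative.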

| Category | Group | Number of Participants | T1 | T2 | T3 | T4 | T5 | T6 | Overall |
|---|---|---|---|---|---|---|---|---|---|
| Overall | Overall | 32 | 96.9 | 75.0 | 87.5 | 100.0 | 87.5 | 62.5 | 84.9 |
| Gender | Female | 17 | 94.1 | 64.7 | 94.1 | 100.0 | 94.1 | 58.8 | 84.3 |
| Gender | Male | 15 | 100.0 | 86.7 | 80.0 | 100.0 | 80.0 | 66.7 | 85.6 |
| Education | Master | 14 | 92.9 | 64.3 | 85.7 | 100.0 | 85.7 | 78.6 | 84.5 |
| Education | Bachelor | 17 | 100.0 | 82.4 | 94.1 | 100.0 | 88.2 | 52.9 | 86.3 |
| Education | High School | 1 | 100.0 | 100.0 | 0.0 | 100.0 | 100.0 | 0.0 | 66.7 |
| Previous Dashboard Usage Experience | Used > 5 times | 12 | 100.0 | 83.3 | 91.7 | 100.0 | 83.3 | 75.0 | 88.9 |
| Previous Dashboard Usage Experience | Used ≤ 5 times | 5 | 100.0 | 60.0 | 80.0 | 100.0 | 60.0 | 40.0 | 73.3 |
| Previous Dashboard Usage Experience | Heard only | 8 | 100.0 | 87.5 | 87.5 | 100.0 | 100.0 | 50.0 | 87.5 |
| Previous Dashboard Usage Experience | Never heard | 7 | 85.7 | 57.1 | 85.7 | 100.0 | 100.0 | 71.4 | 83.3 |
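The Overall column of this success-rate table appears consistent with an unweighted mean of the six per-task values in each row. The short check below recomputes it for three rows copied from the table. This is an observation drawn from the reported numbers, not a method stated in the source, and the helper name `mean` is only illustrative.

```python
# Per-task success rates (T1..T6) and the reported Overall value, copied from the table.
rows = {
    "Overall": ([96.9, 75.0, 87.5, 100.0, 87.5, 62.5], 84.9),
    "Female": ([94.1, 64.7, 94.1, 100.0, 94.1, 58.8], 84.3),
    "Male": ([100.0, 86.7, 80.0, 100.0, 80.0, 66.7], 85.6),
}


def mean(values):
    """Unweighted arithmetic mean."""
    return sum(values) / len(values)


# Compare the recomputed mean with the reported Overall value for each group.
for group, (task_rates, reported) in rows.items():
    print(f"{group}: recomputed {mean(task_rates):.1f}, reported {reported}")
```

The same relation holds for the remaining rows when checked by hand, so the Overall figure weights every task equally regardless of task difficulty.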

| Category | Group | Number of Participants | T1 | T2 | T3 | T4 | T5 | T6 | Overall |
|---|---|---|---|---|---|---|---|---|---|
| Overall | Overall | 32 | 37.7 | 51.2 | 49.8 | 38.3 | 70.0 | 78.8 | 357.6 |
| Gender | Female | 17 | 40.1 | 52.6 | 56.9 | 32.3 | 53.8 | 87.3 | 348.4 |
| Gender | Male | 15 | 36.7 | 49.4 | 47.9 | 43.1 | 81.9 | 74.8 | 377.4 |
| Education | Master | 14 | 37.7 | 49.1 | 48.8 | 48.7 | 89.2 | 88.7 | 386.0 |
| Education | Bachelor | 17 | 36.7 | 52.6 | 50.3 | 25.1 | 53.8 | 75.6 | 339.7 |
| Education | High School | 1 | 64.3 | 41.7 | 217.8 | 32.9 | 91.4 | 48.7 | 496.8 |
| Previous Dashboard Usage Experience | Used > 5 times | 12 | 32.2 | 52.8 | 59.5 | 38.0 | 86.2 | 74.2 | 388.3 |
| Previous Dashboard Usage Experience | Used ≤ 5 times | 5 | 40.1 | 45.2 | 43.9 | 56.0 | 96.5 | 97.9 | 445.9 |
| Previous Dashboard Usage Experience | Heard only | 8 | 31.6 | 50.7 | 44.0 | 33.3 | 67.3 | 94.3 | 315.1 |
| Previous Dashboard Usage Experience | Never heard | 7 | 53.0 | 51.4 | 63.2 | 46.7 | 57.8 | 75.6 | 348.4 |

| Group | Item | Frequency |
|---|---|---|
| Views | The spatial panel is helpful | 9 |
| Views | The temporal panel is helpful | 6 |
| Views | The ranking panel is helpful | 2 |
| Layout | The color scheme helped in organizing information | 13 |
| Layout | The grouped layers helped in factor finding | 4 |
| Layout | The juxtaposition benefits comparison | 3 |
| Layout | The structured design gives a good overview | 2 |
| Interaction | The search function is useful in finding places | 15 |
| Interaction | The interactions of the temporal panel helped in finding data quickly | 6 |
| Interaction | Mouse hovering and clicking are helpful | 5 |
| Interaction | Layer switching is efficient | 2 |
| Other | Natural to use | 1 |

| Group | Item | Frequency |
|---|---|---|
| Panel | The top margin of the bars in the temporal panel is sometimes too narrow | 5 |
| Panel | The maps shift when the mouse moves close to their boundaries | 5 |
| Panel | The temporal panel is too informative | 3 |
| Panel | The legend intervals are confusing | 3 |
| Panel | The axes in the temporal panel change their ranges | 1 |
| Panel | The axes in the temporal panel are not necessary | 1 |
| Panel | The temporal panel should be split into four charts like the other panels | 1 |
| Panel | Mark the important places in the spatial panel | 1 |
| Layout | Hard to compare two layers in one map | 3 |
| Layout | The color scheme is not good for color-blind people | 3 |
| Layout | Only one map in the spatial panel is preferred | 2 |
| Layout | The color hue should be increased in the temporal panel and ranking panel | 2 |
| Layout | The color of the unavailable data should be lighter | 1 |
| Interaction | The search bar should be in each map / outside the spatial panel | 5 |
| Interaction | The ranking panel should be clickable | 4 |
| Interaction | The selected place should be highlighted on all the maps | 2 |
| Interaction | The map legends should be clickable | 2 |
| Other | The font size is too small | 5 |
| Other | The unavailable data increases the difficulty | 3 |
| Other | No idea where to look on the dashboard | 2 |
| Other | The dashboard is too informative | 2 |
| Other | The listing of the top five municipalities is not of interest to the participant | 1 |
| Other | A learning time is required | 1 |
| Other | Lack of economic background information | 1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zuo, C.; Ding, L.; Meng, L. A Feasibility Study of Map-Based Dashboard for Spatiotemporal Knowledge Acquisition and Analysis. ISPRS Int. J. Geo-Inf. 2020, 9, 636. https://0-doi-org.brum.beds.ac.uk/10.3390/ijgi9110636
