Global and Local Tensor Sparse Approximation Models for Hyperspectral Image Destriping

Abstract

1. Introduction

- (1)

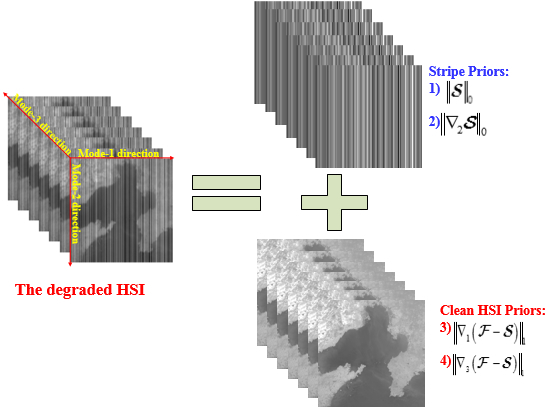

- We transformed an HSI destriping model to tensor framework, to better exploit high correlation between adjacent bands. In the tensor framework, a non-convex optimization model was constructed to estimate the stripes and underlying stripe-free image simultaneously for higher spectral fidelity.

- (2)

- The proposed method fully considers the discriminative prior of the stripes and the stripe-free image in tensor framework. We represent and analyze intrinsic characteristics of the stripes and stripe-free images, using and , respectively. Experiment results show that the proposed method outperforms existing state-of-the-art methods, especially when the stripes are non-periodic.

- (3)

- We used the PADMM to solve a non-convex optimization model effectively. Extensive experiments were conducted on simulated data and real-world data. The proposed scheme achieved superior performance in both quantitative evaluation and visual comparison compared with existing approaches.

2. Intrinsic Statistical Property Analysis of Stripe and Clean Images

2.1. Notations and Preliminaries
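The notation of this subsection did not survive extraction. Tensor destriping models of this kind usually build on the mode-n unfolding defined by Kolda and Bader [37]; assuming that convention, a minimal NumPy sketch of unfolding and its inverse follows (the helper names `unfold` and `fold` are illustrative, not taken from the paper).

```python
import numpy as np


def unfold(tensor: np.ndarray, mode: int) -> np.ndarray:
    """Mode-n unfolding: the mode-`mode` fibers become the columns.

    Remaining indices vary first-index-fastest (Fortran order), following
    the column ordering of Kolda and Bader (2009).
    """
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1, order="F")


def fold(matrix: np.ndarray, mode: int, shape: tuple) -> np.ndarray:
    """Inverse of `unfold`: rebuild a tensor of `shape` from its unfolding."""
    moved_shape = [shape[mode]] + [s for k, s in enumerate(shape) if k != mode]
    return np.moveaxis(matrix.reshape(moved_shape, order="F"), 0, mode)


# 3 x 4 x 2 toy tensor with entries 1..24 laid out first-index-fastest.
X = np.arange(1, 25).reshape((3, 4, 2), order="F")
X1 = unfold(X, 0)  # 3 x 8; first row is 1, 4, 7, ..., 22
X3 = unfold(X, 2)  # 2 x 12: one row per slice along the third (e.g. spectral) mode
```

With the spectral axis last, `unfold(X, 2)` turns each band into one row, which is a convenient way to view the between-band structure that a tensor model can exploit.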

2.2. Priors and Regularizers

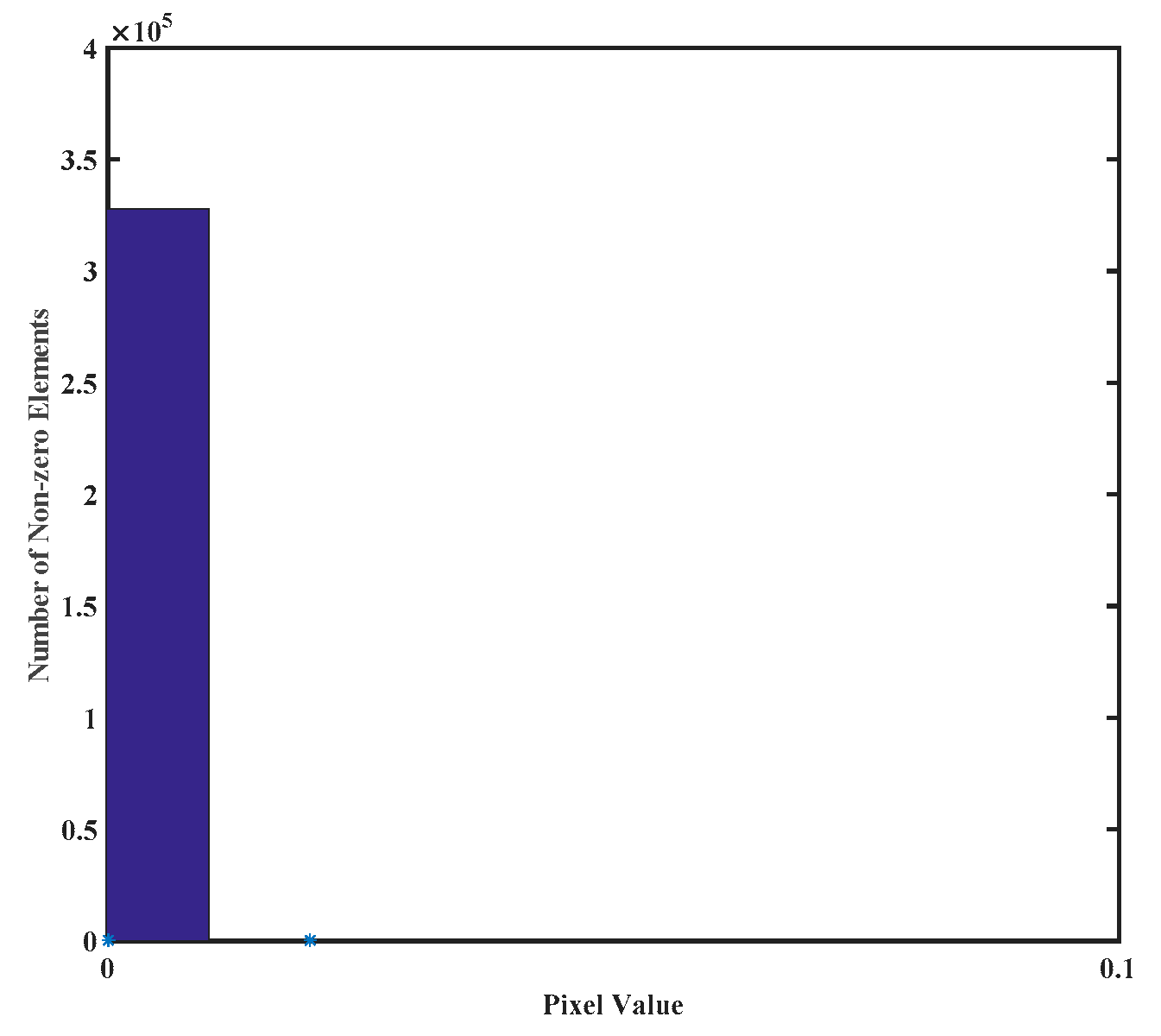

2.2.1. Global Sparsity of Stripes
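The text of this subsection is missing. Sparsity priors on a stripe component are typically enforced with an ℓ1 or ℓ0 penalty, whose proximal operators are soft thresholding (Donoho [42]) and hard thresholding (cf. Blumensath and Davies [40]). Which penalty GLTSA uses for this term is not recoverable from this extract, so the sketch below simply shows both operators; the function names are illustrative.

```python
import numpy as np


def soft_threshold(x: np.ndarray, tau: float) -> np.ndarray:
    """Proximal operator of tau * ||x||_1: shrink every entry toward zero by tau."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)


def hard_threshold(x: np.ndarray, tau: float) -> np.ndarray:
    """Proximal operator of tau * ||x||_0: keep an entry only if x**2 > 2 * tau."""
    return np.where(x**2 > 2.0 * tau, x, 0.0)


x = np.array([-3.0, -0.5, 0.0, 0.2, 1.5])
s = soft_threshold(x, 1.0)  # large entries shrink by 1, small ones vanish
h = hard_threshold(x, 1.0)  # entries with |x| > sqrt(2) survive unchanged
```

Soft thresholding biases the surviving entries toward zero; hard thresholding keeps them intact but makes the overall problem non-convex.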

2.2.2. Local Smoothness along Stripe Direction
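Assuming vertical stripes that are constant down each column (an idealization; taking axis 0 as the stripe direction is also an assumption), the difference of the stripe component along the stripe direction vanishes while the difference across the stripes does not. A directional smoothness prior on the stripes exploits exactly this asymmetry. A toy check:

```python
import numpy as np


def diff_along(x: np.ndarray, axis: int) -> np.ndarray:
    """Periodic forward difference along `axis`: x[i + 1] - x[i]."""
    return np.roll(x, -1, axis=axis) - x


rng = np.random.default_rng(0)
rows, cols, bands = 64, 48, 10
# Idealized vertical stripes: one random offset per striped column and band,
# constant down the column (axis 0 is taken to be the stripe direction).
stripes = np.zeros((rows, cols, bands))
stripe_cols = rng.choice(cols, size=cols // 4, replace=False)
stripes[:, stripe_cols, :] = rng.uniform(-20.0, 20.0, size=(1, stripe_cols.size, bands))

along = diff_along(stripes, axis=0)   # along the stripes
across = diff_along(stripes, axis=1)  # across the stripes
print(np.abs(along).sum(), np.abs(across).sum())  # exactly 0.0, then a large value
```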

2.2.3. Continuity of HSIs along Spatial Domain

2.2.4. Continuity of HSIs along Spectral Domain
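For the stripe-free image, the spatial and spectral continuity priors amount to small differences across the stripe direction and between adjacent bands. The sketch below uses a synthetic smooth cube and hypothetical stripes (neither comes from the paper) to show that adding stripes inflates both ℓ1 difference penalties; the `l1_diff` helper is illustrative.

```python
import numpy as np


def l1_diff(x: np.ndarray, axis: int) -> float:
    """Sum of absolute forward differences along `axis` (anisotropic TV term)."""
    return float(np.abs(np.diff(x, axis=axis)).sum())


rows, cols, bands = 64, 48, 10
i = np.arange(rows)[:, None, None]
j = np.arange(cols)[None, :, None]
b = np.arange(bands)[None, None, :]
# Synthetic stripe-free cube that varies slowly in space and across bands.
clean = 100.0 + 10.0 * np.sin(2 * np.pi * i / rows) * np.cos(2 * np.pi * j / cols) * (1.0 + 0.05 * b)

# Hypothetical stripes: a different random set of columns in every band.
rng = np.random.default_rng(0)
stripes = np.zeros_like(clean)
for band in range(bands):
    idx = rng.choice(cols, size=cols // 4, replace=False)
    stripes[:, idx, band] = rng.uniform(-20.0, 20.0, size=idx.size)
observed = clean + stripes

spatial = (l1_diff(clean, 1), l1_diff(observed, 1))   # across the stripes
spectral = (l1_diff(clean, 2), l1_diff(observed, 2))  # between adjacent bands
```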

3. The GLTSA Destriping Method

3.1. Stripe-Degraded Model
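The degradation model itself was lost in extraction. Destriping models of this kind commonly assume additive stripes, O = U + S, where O is the observed cube, U the stripe-free image and S the stripes. Under that assumption, a sketch of a hypothetical simulation protocol loosely matching the intensity I and ratio r in the simulated-data tables (stripes of magnitude I on a fraction r of the columns of each band) could look like this; `add_stripes` and its arguments are illustrative names.

```python
import numpy as np


def add_stripes(clean, intensity, ratio, periodic=False, seed=0):
    """Simulate O = U + S with vertical stripes (hypothetical protocol).

    In each band, round(ratio * cols) columns get a constant offset of
    +intensity or -intensity. Periodic stripes use evenly spaced columns;
    non-periodic stripes use randomly chosen columns.
    """
    rng = np.random.default_rng(seed)
    rows, cols, bands = clean.shape
    n_striped = max(1, int(round(ratio * cols)))
    stripes = np.zeros(clean.shape, dtype=float)
    for b in range(bands):
        if periodic:
            idx = np.linspace(0, cols - 1, n_striped).astype(int)
        else:
            idx = rng.choice(cols, size=n_striped, replace=False)
        signs = rng.choice([-1.0, 1.0], size=n_striped)
        stripes[:, idx, b] = intensity * signs  # constant down each column
    return clean + stripes, stripes


clean = np.full((30, 50, 6), 100.0)
observed, S = add_stripes(clean, intensity=20, ratio=0.2)
```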

3.2. The GLTSA Destriping Model

3.3. Optimization Procedure and Algorithm

| Algorithm 1. The GLTSA destriping algorithm |
|---|
| Input: The observed stripe-degraded image , parameters , the maximum number of iterations $N_{\max} = 10^{3}$, and the convergence tolerance $\mathrm{tol} = 10^{-4}$. |
| Output: The stripes |
| Initialize: (1) $k = 0$, ; |
| While and $k < N_{\max}$ do |
| (2) Update by Equation (14); |
| (3) Update by Equation (16); |
| (4) Update using the 3-D FFT by Equation (18); |
| (5) Update by Equation (21); |
| (6) Update by Equation (23); |
| (7) Update by Equation (25); |
| (8) Update the multipliers , $i = 1, 2, 3, 4, 5$, by Equations (26)–(30). |
| End While |
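Equation (18) is not reproduced in this extract, but step (4) names a standard technique: when a subproblem is quadratic and involves only difference operators with periodic boundary conditions, its normal equations are diagonalized by the 3-D FFT. The sketch below assumes a generic system $(\mathcal{I} + \sum_a \beta_a D_a^{\top} D_a)\,\mathcal{X} = \mathcal{B}$; the weights `betas` and the helper names are hypothetical, not the paper's.

```python
import numpy as np


def diff(x, axis):
    """Periodic forward difference D x along `axis`: x[i + 1] - x[i]."""
    return np.roll(x, -1, axis=axis) - x


def diff_t(y, axis):
    """Adjoint D^T y of `diff`: y[i - 1] - y[i]."""
    return np.roll(y, 1, axis=axis) - y


def solve_fft(rhs, betas):
    """Solve (I + sum_a betas[a] * D_a^T D_a) x = rhs, periodic boundaries.

    Every D_a^T D_a is circulant along its axis, so the operator is diagonal
    in the 3-D DFT basis and the solve reduces to an element-wise division.
    """
    denom = np.ones(rhs.shape)
    for axis, beta in enumerate(betas):
        n = rhs.shape[axis]
        # Eigenvalues of D^T D along this axis: |exp(2j*pi*k/n) - 1|^2.
        eig = 2.0 - 2.0 * np.cos(2.0 * np.pi * np.arange(n) / n)
        shape = [1] * rhs.ndim
        shape[axis] = n
        denom = denom + beta * eig.reshape(shape)
    return np.real(np.fft.ifftn(np.fft.fftn(rhs) / denom))


rng = np.random.default_rng(0)
B = rng.standard_normal((8, 6, 5))
betas = (0.5, 2.0, 1.0)  # hypothetical penalty weights, one per mode
X = solve_fft(B, betas)
# Apply the operator directly to confirm the FFT solve.
residual = X + sum(beta * diff_t(diff(X, a), a) for a, beta in enumerate(betas)) - B
```

Checking the residual against the directly applied operator is a cheap way to confirm that the boundary convention of the differences matches the FFT eigenvalues.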

4. Experimental Results

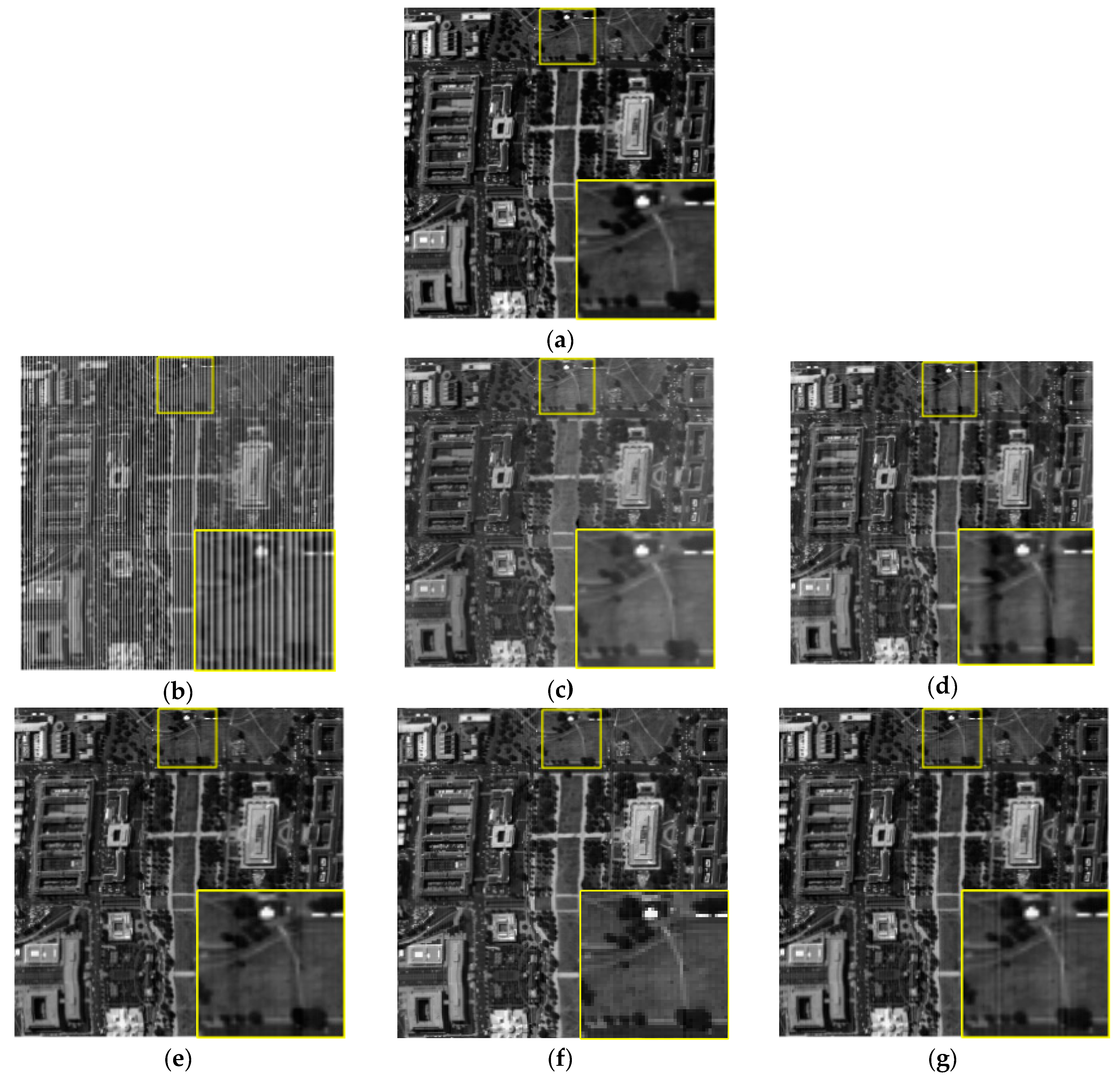

4.1. Simulated Data Experiments

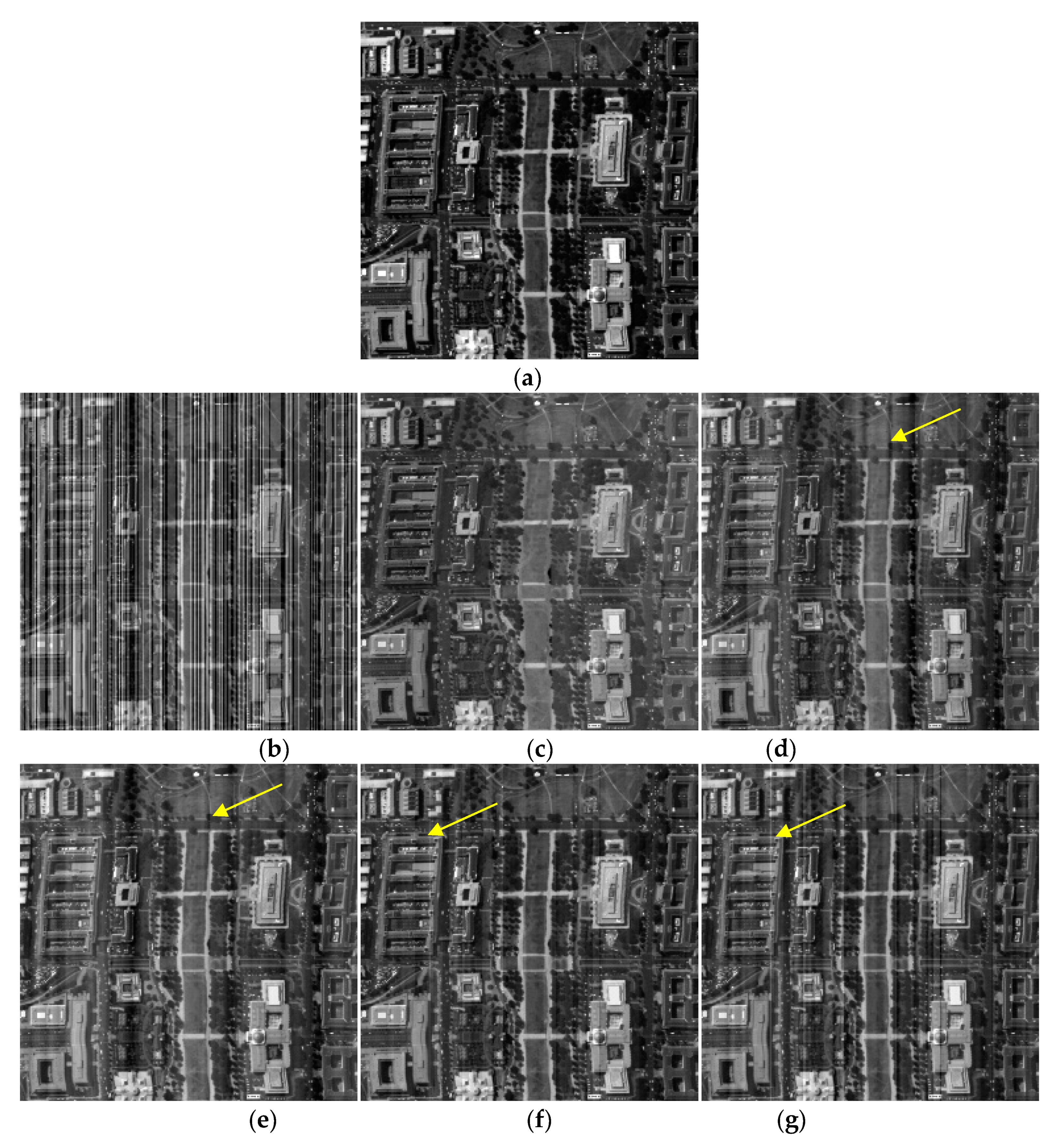

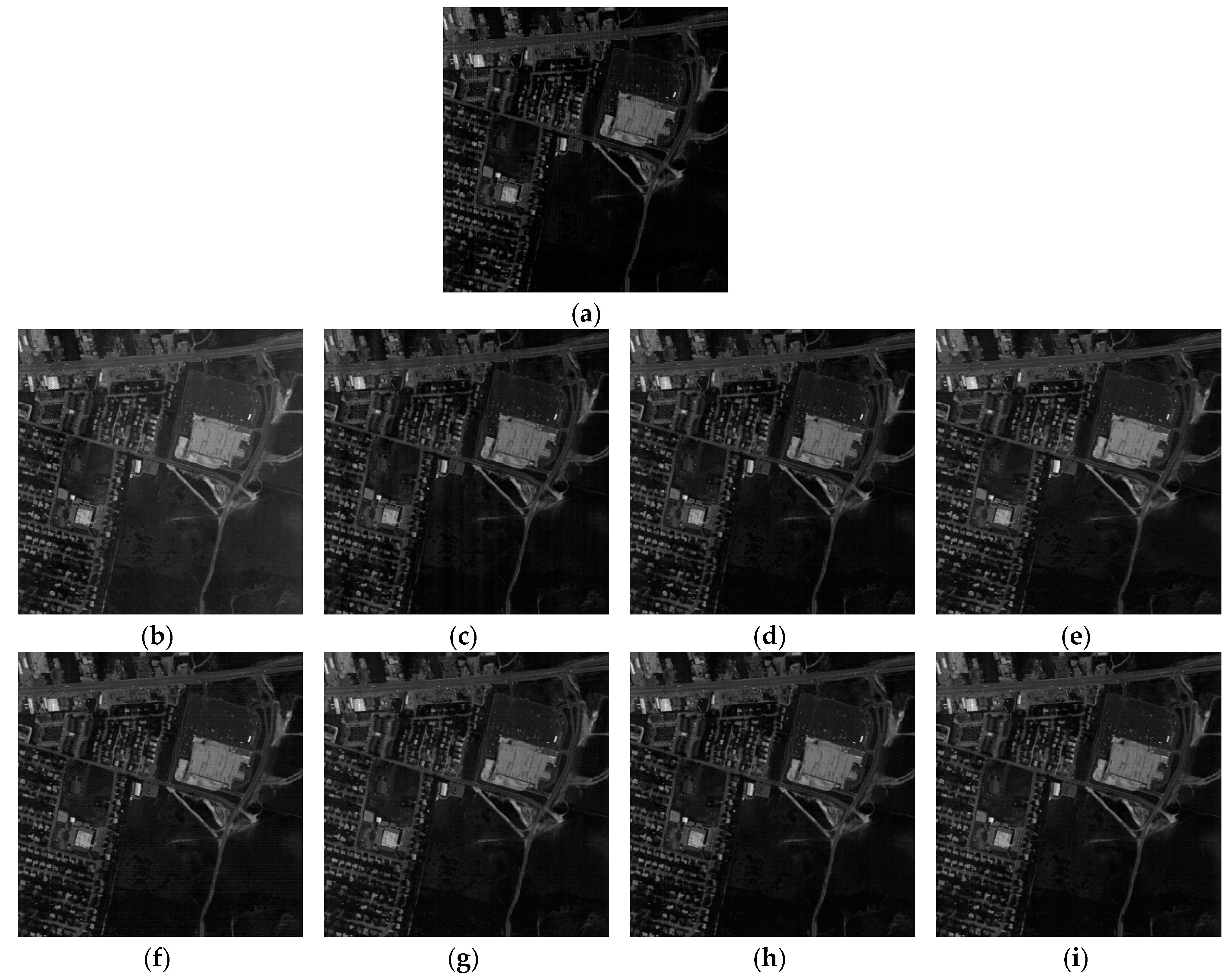

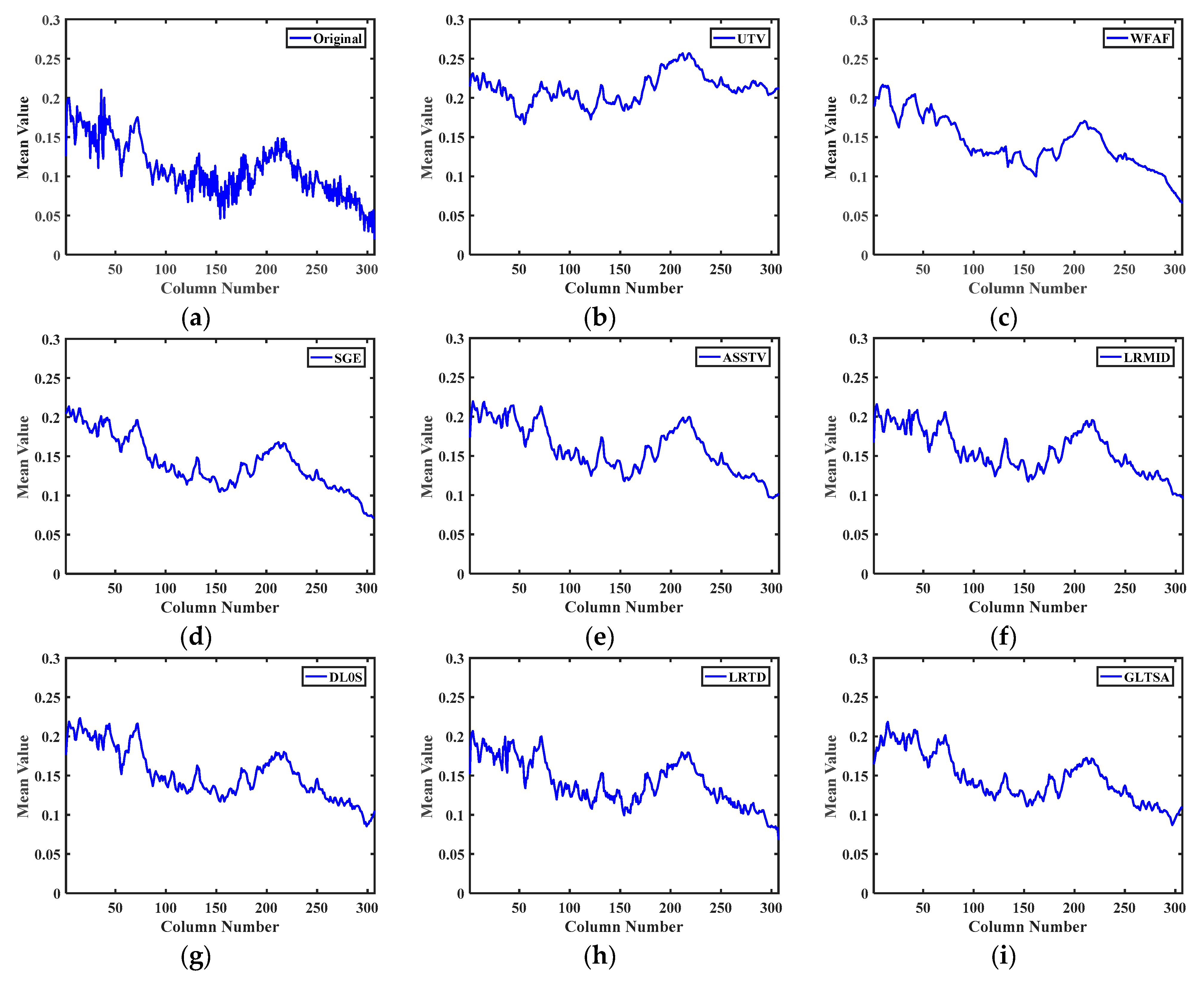

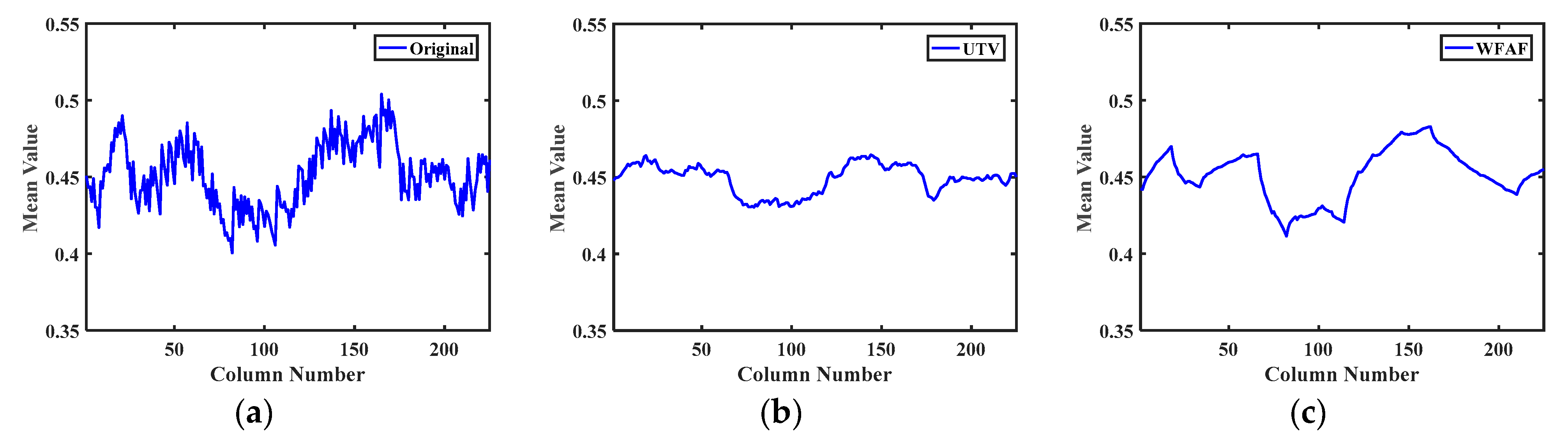

4.2. Experiments on Real Data

4.3. Analysis of Parameter Settings

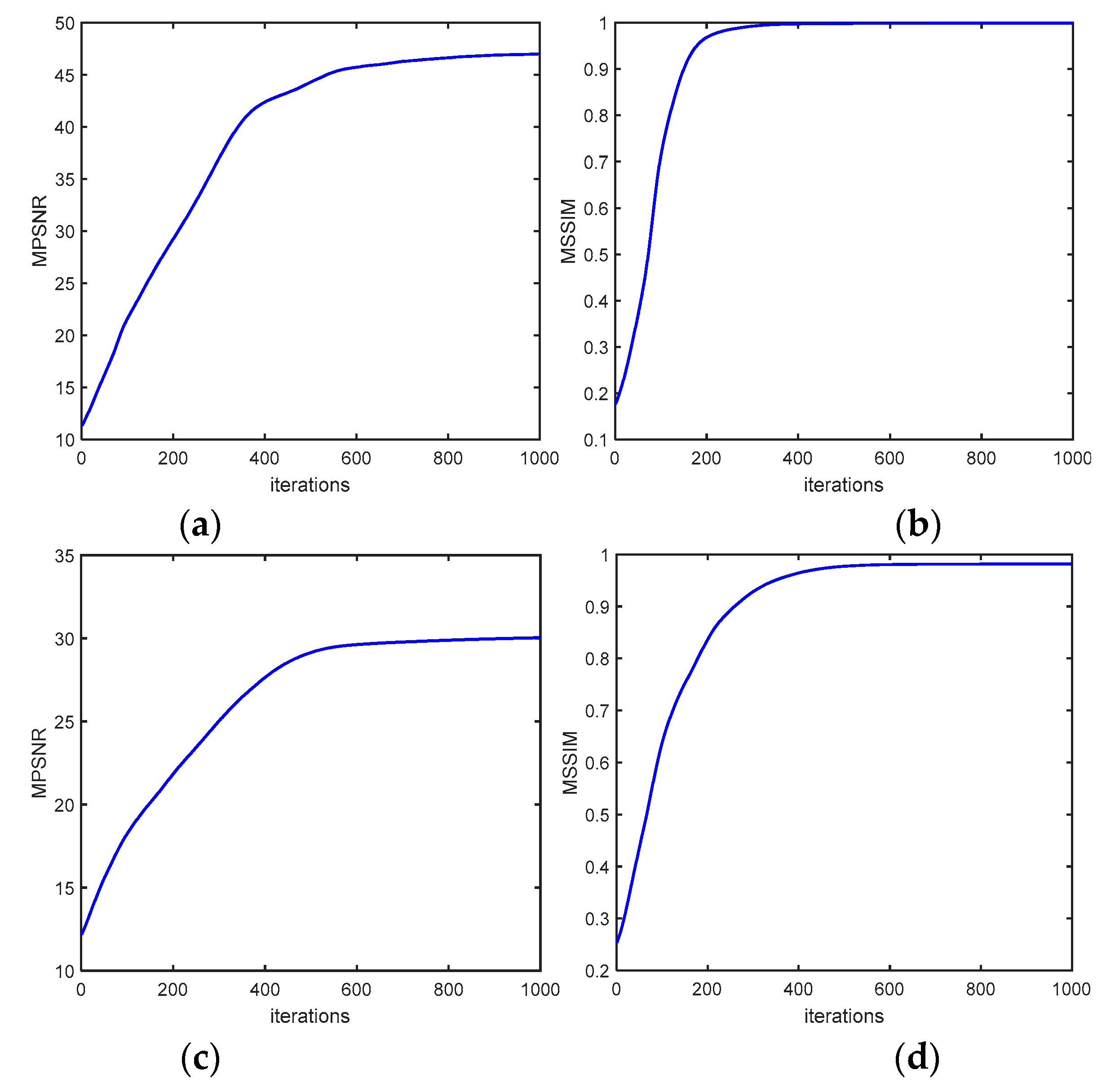

4.4. Convergence Analysis and Comparison of Running Time
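Algorithm 1 stops after $N_{\max} = 10^{3}$ iterations or when its convergence test (lost in extraction) is met with $\mathrm{tol} = 10^{-4}$. A relative-change test is a common choice and is assumed in the sketch below; the toy update is a stand-in for one PADMM sweep, not the GLTSA updates, and `run_until_converged` is an illustrative name.

```python
import numpy as np


def run_until_converged(update, x0, tol=1e-4, max_iter=1000):
    """Iterate x <- update(x) until the relative change drops below `tol`.

    Stops after `max_iter` sweeps at the latest; returns the last iterate
    and the history of relative changes ||x_new - x|| / ||x||.
    """
    x = np.asarray(x0, dtype=float)
    history = []
    for _ in range(max_iter):
        x_new = update(x)
        change = np.linalg.norm(x_new - x) / max(np.linalg.norm(x), 1e-12)
        history.append(change)
        x = x_new
        if change < tol:
            break
    return x, history


# Toy contraction standing in for one PADMM sweep; its fixed point is 2.
x, history = run_until_converged(lambda v: 0.5 * v + 1.0, np.zeros(3))
```

The recorded `history` is the kind of per-iteration data a convergence curve is drawn from, and timing the same loop gives running times comparable across methods.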

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Xue, J.; Zhao, Y.; Liao, W.; Chan, J.C.-W. Nonlocal Low-Rank Regularized Tensor Decomposition for Hyperspectral Image Denoising. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5174–5189.
2. Sánchez, N.; González-Zamora, Á.; Martínez-Fernández, J. Integrated hyperspectral approach to global agricultural drought monitoring. Agric. For. Meteorol. 2018, 259, 141–153.
3. Chen, J.; Shao, Y.; Guo, H. Destriping CMODIS data by power filtering. IEEE Trans. Geosci. Remote Sens. 2003, 41, 2119–2124.
4. Yi, C.; Zhao, Y.Q.; Yang, J. Joint Hyperspectral Superresolution and Unmixing With Interactive Feedback. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3823–3834.
5. Yang, J.; Zhao, Y.Q.; Chan, C.W. Coupled Sparse Denoising and Unmixing With Low-Rank Constraint for Hyperspectral Image. IEEE Trans. Geosci. Remote Sens. 2015, 1–16.
6. Yi, C.; Zhao, Y.Q.; Chan, J.C.W. Spectral super-resolution for multispectral image based on spectral improvement strategy and spatial preservation strategy. IEEE Trans. Geosci. Remote Sens. 2019, 1–15.
7. Tarabalka, Y.; Chanussot, J.; Benediktsson, J.A. Segmentation and classification of hyperspectral images using watershed transformation. Pattern Recognit. 2010, 43, 2367–2379.
8. Yang, J.; Zhao, Y.; Chan, J.C. Learning and Transferring Deep Joint Spectral–Spatial Features for Hyperspectral Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4729–4742.
9. Xue, J.; Zhao, Y.; Liao, W.; Chan, J.C.-W. Hyper-Laplacian Regularized Nonlocal Low-rank Matrix Recovery for Hyperspectral Image Compressive Sensing Reconstruction. Inf. Sci. 2019, 501, 406–420.
10. Xue, J.; Zhao, Y.; Liao, W.; Chan, J.C.-W. Nonlocal Tensor Sparse Representation and Low-Rank Regularization for Hyperspectral Image Compressive Sensing Reconstruction. Remote Sens. 2019, 11, 193.
11. Xue, J. Nonconvex tensor rank minimization and its applications to tensor recovery. Inf. Sci. 2019, 503, 109–128.
12. Xue, J.; Zhao, Y.; Liao, W.; Chan, J.C.; Kong, S.G. Enhanced Sparsity Prior Model for Low-Rank Tensor Completion. IEEE Trans. Neural Netw. Learn. Syst. 2019, 12, 1–15.
13. Liu, X.; Lu, X.; Shen, H. Stripe Noise Separation and Removal in Remote Sensing Images by Consideration of the Global Sparsity and Local Variational Properties. IEEE Trans. Geosci. Remote Sens. 2016, 54, 3049–3060.
14. Bouali, M.; Ladjal, S. Toward optimal destriping of MODIS data using a unidirectional variational model. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2924–2935.
15. Chen, Y.; Huang, T.; Zhao, X.; Deng, L.; Huang, J. Stripes Removal of Remote Sensing Images by Total Variation Regularization and Group Sparsity Constraint. Remote Sens. 2017, 9, 559.
16. Dou, H.-X.; Huang, T.-Z.; Deng, L.-J. Directional ℓ0 Sparse Modeling for Image Stripes Removal. Remote Sens. 2018, 10, 361.
17. Chen, J.; Lin, H.; Shao, Y.; Yang, L. Oblique striping removal in hyperspectral imagery based on wavelet transform. Int. J. Remote Sens. 2006, 27, 1717–1723.
18. Liu, X.; Lu, X.; Shen, H. Oblique Stripe Removal in Remote Sensing Images via Oriented Variation. 2018. Available online: https://arxiv.org/ftp/arxiv/papers/1809/1809.02043.pdf (accessed on 18 February 2020).
19. Chang, Y.; Yan, L.; Fang, H.; Luo, C. Anisotropic Spectral-Spatial Total Variation Model for Multispectral Remote Sensing Image Destriping. IEEE Trans. Image Process. 2015, 24, 1852–1866.
20. Chen, Y.; Huang, T.; Zhao, X. Destriping of Multispectral Remote Sensing Image Using Low-Rank Tensor Decomposition. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4950–4967.
21. Hao, W. A New Separable Two-dimensional Finite Impulse Response Filter Design with Sparse Coefficients. IEEE Trans. Circuits Syst. I Regul. Pap. 2017, 62, 2864–2873.
22. Münch, B.; Trtik, P.; Marone, F.; Stampanoni, M. Stripe and ring artifact removal with combined wavelet-Fourier filtering. Opt. Express 2009, 17, 8567–8591.
23. Carfantan, H.; Idier, J. Statistical linear destriping of satellite-based pushbroom-type images. IEEE Trans. Geosci. Remote Sens. 2009, 48, 1860–1871.
24. Gadallah, F.L.; Csillag, G.; Smith, E.J.M. Destriping multisensory imagery with moment matching. Int. J. Remote Sens. 2000, 21, 2505–2511.
25. Horn, B.K.P.; Woodham, R.J. Destriping LANDSAT MSS images by histogram modification. Comput. Graph. Image Process. 1979, 10, 69–83.
26. Cao, B.; Du, Y.; Xu, D. An improved histogram matching algorithm for the removal of striping noise in optical hyperspectral imagery. Optik Int. J. Light Electron Opt. 2015, 126, 4723–4730.
27. Sun, L.X.; Neville, R.; Staenz, K.; White, H.P. Automatic destriping of Hyperion imagery based on spectral moment matching. Can. J. Remote Sens. 2008, 34, S68–S81.
28. Shen, H.F.; Jiang, W.; Zhang, H.Y.; Zhang, L.P. A piece-wise approach to removing the nonlinear and irregular stripes in MODIS data. Int. J. Remote Sens. 2014, 35, 44–53.
29. Rakwatin, P.; Takeuchi, W.; Yasuoka, Y. Stripes reduction in MODIS data by combining histogram matching with facet filter. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1844–1856.
30. Chang, W.W.; Guo, L.; Fu, Z.Y. A new destriping method of imaging spectrometer images. In Proceedings of the IEEE International Conference on Wavelet Analysis & Pattern Recognition, Beijing, China, 2–4 November 2007.
31. Chang, Y.; Fang, H.; Yan, L.; Liu, H. Robust destriping method with unidirectional total variation and framelet regularization. Opt. Express 2013, 21, 23307–23323.
32. Chang, Y.; Yan, L.X.; Fang, H.Z.; Liu, H. Simultaneous destriping and denoising for hyperspectral images with unidirectional total variation and sparse representation. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1051–1055.
33. Chen, Y.; Huang, T.Z.; Deng, L.J.; Zhao, X.L.; Wang, M. Group sparsity based regularization model for hyperspectral image stripes removal. Neurocomputing 2017, 267, 95–106.
34. Chang, Y.; Yan, L.; Wu, T.; Zhong, S. Hyperspectral image stripes removal: From image decomposition perspective. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7018–7031.
35. Chang, Y.; Yan, L.; Fang, H. Weighted Low-rank Tensor Recovery for Hyperspectral Image Restoration. arXiv 2017, arXiv:1709.00192.
36. Cao, W.; Chang, Y.; Han, G. Destriping Remote Sensing Image via Low-Rank Approximation and Nonlocal Total Variation. IEEE Geosci. Remote Sens. Lett. 2018, 1–5.
37. Kolda, T.G.; Bader, B.W. Tensor decompositions and applications. SIAM Rev. 2009, 51, 455–500.
38. Shen, H.F.; Zhang, L.P. A MAP-based algorithm for destriping and inpainting of remotely sensed images. IEEE Trans. Geosci. Remote Sens. 2009, 47, 1492–1502.
39. Yuan, G.Z.; Ghanem, B. L0TV: A new method for image restoration in the presence of impulse noise. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 8–10 June 2015.
40. Blumensath, T.; Davies, M.E. Iterative thresholding for sparse approximation. J. Fourier Anal. Appl. 2008, 14, 629–654.
41. Jiao, Y.; Jin, B.; Lu, X. A primal dual active set with continuation algorithm for the L0-regularized optimization problem. Appl. Comput. Harmon. Anal. 2015, 39, 400–426.
42. Donoho, D.L. De-noising by soft-thresholding. IEEE Trans. Inf. Theory 1995, 41, 613–627.
43. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.
44. Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A feature similarity index for image quality assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.
45. Yuhas, R.H.; Goetz, A.F.H.; Boardman, J.W. Discrimination among semiarid landscape endmembers using the spectral angle mapper (SAM) algorithm. In Proceedings of the Annual JPL Airborne Geoscience Workshop, Pasadena, CA, USA, 1–5 June 1992.
46. Kong, X.; Zhao, Y.; Xue, J.; Chan, J. Hyperspectral Image Denoising Using Global Weighted Tensor Norm Minimum and Nonlocal Low-Rank Approximation. Remote Sens. 2019, 11, 2281.

| Index | Method | I=20, r=0.2 | I=20, r=0.4 | I=20, r=0.8 | I=20, r=0–1 | I=60, r=0.2 | I=60, r=0.4 | I=60, r=0.8 | I=60, r=0–1 | I=100, r=0.2 | I=100, r=0.4 | I=100, r=0.8 | I=100, r=0–1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MPSNR | Noisy | 29.10 | 26.09 | 23.08 | 26.51 | 19.56 | 16.55 | 13.54 | 15.28 | 15.12 | 12.11 | 9.10 | 11.94 |
| | SGE [23] | 39.82 | 38.85 | 39.87 | 39.24 | 37.95 | 33.93 | 38.42 | 37.81 | 34.07 | 15.15 | 10.31 | 18.27 |
| | WFAF [22] | 31.58 | 31.36 | 31.51 | 31.36 | 31.08 | 29.59 | 30.43 | 30.52 | 30.29 | 27.39 | 28.87 | 29.01 |
| | UTV [14] | 25.85 | 25.85 | 25.85 | 25.84 | 25.85 | 25.84 | 25.85 | 25.85 | 25.85 | 25.84 | 25.85 | 25.85 |
| | ASSTV [19] | 36.70 | 36.70 | 36.34 | 36.57 | 36.70 | 36.71 | 34.64 | 36.17 | 36.72 | 36.73 | 32.07 | 36.18 |
| | LRMID [34] | 42.46 | 42.39 | 42.41 | 42.43 | 42.33 | 42.38 | 37.61 | 41.92 | 42.16 | 41.96 | 25.18 | 41.05 |
| | LRTD [20] | 49.86 | 48.85 | 42.10 | 47.86 | 49.81 | 48.95 | 41.76 | 48.98 | 49.81 | 49.01 | 41.66 | 48.67 |
| | DL0S [16] | 43.74 | 41.85 | 39.14 | 42.18 | 43.76 | 41.86 | 39.11 | 42.16 | 43.77 | 41.91 | 39.10 | 42.81 |
| | GLTSA (ours) | 50.90 | 47.95 | 42.55 | 48.28 | 54.22 | 47.97 | 42.33 | 50.19 | 53.79 | 50.12 | 42.32 | 50.94 |
| MSSIM | Noisy | 0.8452 | 0.7429 | 0.5846 | 0.7752 | 0.5003 | 0.2883 | 0.1753 | 0.4235 | 0.3201 | 0.1185 | 0.0765 | 0.2894 |
| | SGE [23] | 0.9907 | 0.988 | 0.9909 | 0.9906 | 0.9845 | 0.9602 | 0.9868 | 0.9764 | 0.9712 | 0.2951 | 0.1096 | 0.7698 |
| | WFAF [22] | 0.9483 | 0.9474 | 0.9484 | 0.9486 | 0.9469 | 0.9384 | 0.9456 | 0.9482 | 0.9441 | 0.9218 | 0.9398 | 0.9367 |
| | UTV [14] | 0.9022 | 0.9022 | 0.9022 | 0.9022 | 0.9022 | 0.9022 | 0.9022 | 0.9022 | 0.9021 | 0.9022 | 0.9021 | 0.9022 |
| | ASSTV [19] | 0.9833 | 0.9833 | 0.9812 | 0.9842 | 0.9833 | 0.9833 | 0.968 | 0.9846 | 0.9834 | 0.9834 | 0.9329 | 0.9792 |
| | LRMID [34] | 0.9954 | 0.9954 | 0.9955 | 0.9955 | 0.9953 | 0.9954 | 0.9802 | 0.9911 | 0.9949 | 0.9945 | 0.6796 | 0.9843 |
| | LRTD [20] | 0.999 | 0.9986 | 0.9941 | 0.9992 | 0.999 | 0.9987 | 0.9937 | 0.9979 | 0.999 | 0.9987 | 0.9936 | 0.9989 |
| | DL0S [16] | 0.9968 | 0.9951 | 0.9918 | 0.9978 | 0.9968 | 0.9951 | 0.9918 | 0.9952 | 0.9968 | 0.9951 | 0.9918 | 0.9966 |
| | GLTSA (ours) | 0.9988 | 0.9967 | 0.9942 | 0.9941 | 0.9995 | 0.9985 | 0.9948 | 0.9989 | 0.9995 | 0.9989 | 0.9948 | 0.9996 |
| MFSIM | Noisy | 0.9271 | 0.856 | 0.8662 | 0.9158 | 0.8157 | 0.6427 | 0.694 | 0.8319 | 0.748 | 0.5372 | 0.6032 | 0.7384 |
| | SGE [23] | 0.9927 | 0.9907 | 0.993 | 0.9928 | 0.9876 | 0.9714 | 0.9905 | 0.9901 | 0.9756 | 0.6516 | 0.6304 | 0.8542 |
| | WFAF [22] | 0.9626 | 0.9617 | 0.9623 | 0.9681 | 0.9605 | 0.954 | 0.9583 | 0.9598 | 0.9571 | 0.9418 | 0.9522 | 0.9568 |
| | UTV [14] | 0.9422 | 0.9422 | 0.9422 | 0.9422 | 0.9422 | 0.9422 | 0.9422 | 0.9422 | 0.9422 | 0.9422 | 0.9422 | 0.9422 |
| | ASSTV [19] | 0.9822 | 0.9822 | 0.9819 | 0.9823 | 0.9822 | 0.9822 | 0.9799 | 0.9831 | 0.9822 | 0.9823 | 0.9778 | 0.9818 |
| | LRMID [34] | 0.9965 | 0.9965 | 0.9966 | 0.9966 | 0.9963 | 0.9964 | 0.9932 | 0.9961 | 0.9958 | 0.9952 | 0.982 | 0.9967 |
| | LRTD [20] | 0.9994 | 0.9991 | 0.9969 | 0.9989 | 0.9993 | 0.9991 | 0.9966 | 0.9967 | 0.9993 | 0.9991 | 0.9965 | 0.9989 |
| | DL0S [16] | 0.9969 | 0.9956 | 0.9933 | 0.9958 | 0.9969 | 0.9956 | 0.9932 | 0.9958 | 0.9969 | 0.9956 | 0.9932 | 0.9959 |
| | GLTSA (ours) | 0.9989 | 0.9967 | 0.9955 | 0.9988 | 0.9996 | 0.998 | 0.9954 | 0.9989 | 0.9995 | 0.9988 | 0.9954 | 0.9992 |
| SAM | Noisy | 0.177 | 0.356 | 0.452 | 0.378 | 0.387 | 0.776 | 0.931 | 0.814 | 0.494 | 0.991 | 1.139 | 1.024 |
| | SGE [23] | 0.020 | 0.043 | 0.015 | 0.031 | 0.057 | 0.128 | 0.040 | 0.098 | 0.100 | 0.840 | 1.090 | 0.911 |
| | WFAF [22] | 0.025 | 0.048 | 0.030 | 0.038 | 0.053 | 0.122 | 0.065 | 0.079 | 0.080 | 0.186 | 0.097 | 0.137 |
| | UTV [14] | 0.049 | 0.049 | 0.049 | 0.049 | 0.050 | 0.050 | 0.051 | 0.051 | 0.051 | 0.052 | 0.052 | 0.052 |
| | ASSTV [19] | 0.035 | 0.035 | 0.035 | 0.035 | 0.035 | 0.035 | 0.035 | 0.035 | 0.035 | 0.035 | 0.036 | 0.035 |
| | LRMID [34] | 0.038 | 0.039 | 0.039 | 0.039 | 0.040 | 0.040 | 0.050 | 0.046 | 0.042 | 0.045 | 0.169 | 0.064 |
| | LRTD [20] | 0.023 | 0.026 | 0.033 | 0.029 | 0.023 | 0.026 | 0.033 | 0.028 | 0.023 | 0.026 | 0.033 | 0.029 |
| | DL0S [16] | 0.011 | 0.021 | 0.028 | 0.023 | 0.011 | 0.021 | 0.028 | 0.019 | 0.011 | 0.021 | 0.028 | 0.018 |
| | GLTSA (ours) | 0.013 | 0.027 | 0.030 | 0.025 | 0.006 | 0.017 | 0.031 | 0.013 | 0.007 | 0.012 | 0.031 | 0.014 |
| ERGAS | Noisy | 131.10 | 185.40 | 262.20 | 241.61 | 393.29 | 556.19 | 786.59 | 581.47 | 655.48 | 926.98 | 1311.0 | 986.87 |
| | SGE [23] | 38.16 | 42.68 | 37.96 | 39.58 | 47.46 | 75.30 | 44.83 | 56.24 | 77.76 | 653.70 | 1141.4 | 878.94 |
| | WFAF [22] | 98.71 | 101.21 | 99.50 | 100.98 | 104.69 | 124.43 | 112.74 | 118.36 | 115.71 | 161.12 | 135.79 | 152.68 |
| | UTV [14] | 191.27 | 191.27 | 191.27 | 191.27 | 191.28 | 191.33 | 191.29 | 191.30 | 191.30 | 191.44 | 191.33 | 191.38 |
| | ASSTV [19] | 56.22 | 56.20 | 58.38 | 56.28 | 56.19 | 56.14 | 70.14 | 59.34 | 56.09 | 56.00 | 93.45 | 65.97 |
| | LRMID [34] | 30.80 | 31.00 | 31.00 | 30.91 | 31.37 | 31.08 | 49.89 | 35.94 | 31.91 | 32.16 | 205.92 | 49.78 |
| | LRTD [20] | 17.11 | 19.05 | 31.03 | 24.61 | 17.15 | 19.04 | 32.06 | 26.25 | 17.15 | 19.03 | 32.38 | 29.37 |
| | DL0S [16] | 24.68 | 30.42 | 41.73 | 32.84 | 24.62 | 30.34 | 41.98 | 36.27 | 24.56 | 30.18 | 42.00 | 38.57 |
| | GLTSA (ours) | 10.83 | 18.95 | 29.04 | 21.06 | 7.46 | 20.51 | 30.66 | 23.58 | 7.89 | 12.42 | 30.69 | 26.75 |
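The metric definitions did not survive extraction. The sketch below uses the usual definitions of MPSNR (mean over bands of the per-band PSNR) and SAM (mean spectral angle over pixels, here in radians); the 8-bit peak value of 255 is an assumption. Under that assumption the Noisy MPSNR entries above are consistent with stripes of magnitude I on a fraction r of the columns: for I = 20 and r = 0.2 the per-band MSE is 0.2 × 20² = 80, and 10·log10(255²/80) ≈ 29.10 dB (likewise I = 20, r = 0.4 gives 26.09 dB). The worked check reproduces the first case.

```python
import numpy as np


def mpsnr(reference, estimate, peak=255.0):
    """Mean PSNR over bands (bands on the last axis), in dB."""
    err = (reference.astype(float) - estimate.astype(float)) ** 2
    mse = err.reshape(-1, reference.shape[-1]).mean(axis=0)  # per-band MSE
    return float(np.mean(10.0 * np.log10(peak**2 / mse)))


def sam(reference, estimate, eps=1e-12):
    """Mean spectral angle (radians) between per-pixel spectra."""
    ref = reference.reshape(-1, reference.shape[-1]).astype(float)
    est = estimate.reshape(-1, estimate.shape[-1]).astype(float)
    cos = np.sum(ref * est, axis=1) / (
        np.linalg.norm(ref, axis=1) * np.linalg.norm(est, axis=1) + eps
    )
    return float(np.mean(np.arccos(np.clip(cos, -1.0, 1.0))))


# Worked check: stripes of magnitude 20 on 20% of the columns of every band.
rows, cols, bands = 40, 50, 8
clean = np.full((rows, cols, bands), 100.0)
noisy = clean.copy()
rng = np.random.default_rng(1)
for b in range(bands):
    idx = rng.choice(cols, size=cols // 5, replace=False)
    noisy[:, idx, b] += 20.0 * rng.choice([-1.0, 1.0], size=idx.size)
print(round(mpsnr(clean, noisy), 2))  # 29.1 (MSE per band = 0.2 * 20**2 = 80)
```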

| Index | Method | I=20, r=0.2 | I=20, r=0.4 | I=20, r=0.8 | I=20, r=0–1 | I=60, r=0.2 | I=60, r=0.4 | I=60, r=0.8 | I=60, r=0–1 | I=100, r=0.2 | I=100, r=0.4 | I=100, r=0.8 | I=100, r=0–1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MPSNR | Noisy | 29.12 | 26.09 | 23.08 | 27.13 | 19.58 | 16.55 | 13.54 | 17.34 | 15.14 | 12.12 | 9.1 | 12.85 |
| | SGE [23] | 37.05 | 36.28 | 34.01 | 36.89 | 30.18 | 28.47 | 23.41 | 29.19 | 22.59 | 16.29 | 9.97 | 17.92 |
| | WFAF [22] | 31.12 | 30.78 | 30.19 | 30.85 | 28.41 | 26.97 | 25.07 | 27.38 | 25.63 | 23.63 | 21.3 | 24.11 |
| | UTV [14] | 25.85 | 25.84 | 25.82 | 25.84 | 25.84 | 25.74 | 25.62 | 25.78 | 25.83 | 25.55 | 25.29 | 25.68 |
| | ASSTV [19] | 35.83 | 35.97 | 34.22 | 36.12 | 32.15 | 31.37 | 26.86 | 31.62 | 28.48 | 26.83 | 21.84 | 27.38 |
| | LRMID [34] | 39.72 | 39.66 | 35.95 | 39.67 | 32.47 | 30.33 | 25.26 | 31.95 | 27.22 | 24.28 | 19.51 | 25.91 |
| | LRTD [20] | 47.88 | 43.96 | 30.5 | 44.98 | 47.78 | 42.34 | 23.36 | 44.68 | 47.85 | 40.23 | 19.56 | 41.38 |
| | DL0S [16] | 42.88 | 41.3 | 31.45 | 42.88 | 42.87 | 40.94 | 26.98 | 41.67 | 42.11 | 37.68 | 23.13 | 40.62 |
| | GLTSA (ours) | 48.59 | 43.52 | 36.44 | 46.25 | 53.01 | 42.81 | 31.09 | 47.39 | 52.52 | 46.9 | 26.24 | 47.19 |
| MSSIM | Noisy | 0.8599 | 0.7598 | 0.6133 | 0.8367 | 0.5333 | 0.336 | 0.1664 | 0.4168 | 0.3684 | 0.1716 | 0.0552 | 0.2168 |
| | SGE [23] | 0.9871 | 0.9858 | 0.979 | 0.9862 | 0.9524 | 0.9334 | 0.8209 | 0.9258 | 0.8191 | 0.5056 | 0.0767 | 0.6729 |
| | WFAF [22] | 0.9457 | 0.9443 | 0.9425 | 0.9455 | 0.9216 | 0.9088 | 0.8864 | 0.9094 | 0.8786 | 0.8429 | 0.7849 | 0.8581 |
| | UTV [14] | 0.9022 | 0.9022 | 0.902 | 0.9021 | 0.9022 | 0.901 | 0.8994 | 0.9011 | 0.902 | 0.8986 | 0.8936 | 0.8988 |
| | ASSTV [19] | 0.9801 | 0.9805 | 0.9719 | 0.9779 | 0.9555 | 0.9432 | 0.8569 | 0.9412 | 0.8965 | 0.8448 | 0.6567 | 0.8567 |
| | LRMID [34] | 0.9923 | 0.9918 | 0.9808 | 0.9919 | 0.9552 | 0.9174 | 0.7831 | 0.9239 | 0.851 | 0.7309 | 0.4985 | 0.7862 |
| | LRTD [20] | 0.9985 | 0.9962 | 0.9373 | 0.9968 | 0.9985 | 0.9948 | 0.8043 | 0.9913 | 0.9985 | 0.9922 | 0.6691 | 0.9936 |
| | DL0S [16] | 0.9963 | 0.9946 | 0.9736 | 0.9949 | 0.9962 | 0.9945 | 0.9402 | 0.9938 | 0.9952 | 0.9909 | 0.8781 | 0.9917 |
| | GLTSA (ours) | 0.9991 | 0.9961 | 0.9884 | 0.9989 | 0.9994 | 0.9958 | 0.9733 | 0.9987 | 0.9993 | 0.9979 | 0.9299 | 0.9981 |
| MFSIM | Noisy | 0.9317 | 0.8819 | 0.8275 | 0.8897 | 0.8104 | 0.7021 | 0.6324 | 0.7168 | 0.7421 | 0.6159 | 0.5437 | 0.6348 |
| | SGE [23] | 0.9912 | 0.9906 | 0.9867 | 0.9921 | 0.9771 | 0.9667 | 0.9065 | 0.9698 | 0.9066 | 0.7551 | 0.5874 | 0.7965 |
| | WFAF [22] | 0.9616 | 0.9611 | 0.9597 | 0.9612 | 0.9542 | 0.9488 | 0.9369 | 0.9514 | 0.9421 | 0.9285 | 0.9043 | 0.9341 |
| | UTV [14] | 0.9422 | 0.9422 | 0.9422 | 0.9422 | 0.9422 | 0.9422 | 0.9422 | 0.9422 | 0.9422 | 0.9422 | 0.9422 | 0.9422 |
| | ASSTV [19] | 0.98 | 0.9803 | 0.9759 | 0.9796 | 0.9664 | 0.9585 | 0.9111 | 0.9559 | 0.9359 | 0.9082 | 0.8165 | 0.9167 |
| | LRMID [34] | 0.9946 | 0.9945 | 0.9873 | 0.9937 | 0.9705 | 0.651 | 0.8784 | 0.9367 | 0.9144 | 0.8628 | 0.7514 | 0.8934 |
| | LRTD [20] | 0.9988 | 0.9969 | 0.9619 | 0.9971 | 0.9988 | 0.9955 | 0.8802 | 0.9959 | 0.9988 | 0.9935 | 0.8022 | 0.9945 |
| | DL0S [16] | 0.9966 | 0.9955 | 0.9848 | 0.9962 | 0.9966 | 0.9954 | 0.9751 | 0.9956 | 0.9963 | 0.9942 | 0.9533 | 0.9939 |
| | GLTSA (ours) | 0.9993 | 0.9965 | 0.9918 | 0.9989 | 0.9995 | 0.9962 | 0.9884 | 0.9981 | 0.9993 | 0.9981 | 0.9772 | 0.9979 |
| SAM | Noisy | 0.187 | 0.309 | 0.44 | 0.338 | 0.447 | 0.706 | 0.921 | 0.769 | 0.593 | 0.912 | 1.129 | 0.927 |
| | SGE [23] | 0.063 | 0.081 | 0.107 | 0.089 | 0.187 | 0.237 | 0.4 | 0.243 | 0.387 | 0.732 | 1.1 | 0.933 |
| | WFAF [22] | 0.066 | 0.086 | 0.1 | 0.088 | 0.183 | 0.236 | 0.269 | 0.247 | 0.29 | 0.365 | 0.419 | 0.387 |
| | UTV [14] | 0.05 | 0.054 | 0.058 | 0.056 | 0.056 | 0.087 | 0.111 | 0.091 | 0.066 | 0.126 | 0.166 | 0.134 |
| | ASSTV [19] | 0.035 | 0.035 | 0.035 | 0.035 | 0.037 | 0.035 | 0.081 | 0.051 | 0.068 | 0.068 | 0.231 | 0.098 |
| | LRMID [34] | 0.04 | 0.039 | 0.041 | 0.040 | 0.053 | 0.06 | 0.153 | 0.073 | 0.122 | 0.184 | 0.387 | 0.214 |
| | LRTD [20] | 0.023 | 0.027 | 0.055 | 0.037 | 0.023 | 0.027 | 0.188 | 0.068 | 0.023 | 0.026 | 0.3 | 0.187 |
| | DL0S [16] | 0.016 | 0.031 | 0.139 | 0.069 | 0.016 | 0.034 | 0.236 | 0.088 | 0.024 | 0.061 | 0.362 | 0.195 |
| | GLTSA (ours) | 0.015 | 0.027 | 0.057 | 0.028 | 0.007 | 0.015 | 0.148 | 0.041 | 0.008 | 0.017 | 0.275 | 0.086 |
| ERGAS | Noisy | 131.20 | 185.49 | 262.28 | 188.39 | 393.61 | 556.4 | 786.85 | 586.24 | 656.01 | 927.46 | 1311.4 | 951.44 |
| | SGE [23] | 52.87 | 57.43 | 74.51 | 60.12 | 117.20 | 141.0 | 261.77 | 156.85 | 282.73 | 581.81 | 1187.8 | 786.75 |
| | WFAF [22] | 103.96 | 108.04 | 115.9 | 109.87 | 143.46 | 167.88 | 210.53 | 189.67 | 200.30 | 247.29 | 325.82 | 258.97 |
| | UTV [14] | 191.23 | 191.52 | 191.81 | 191.64 | 191.35 | 193.56 | 196.04 | 194.35 | 191.69 | 197.60 | 204.23 | 197.64 |
| | ASSTV [19] | 61.60 | 60.54 | 73.4 | 62.88 | 92.64 | 101.09 | 169.59 | 121.07 | 140.85 | 170.36 | 302.18 | 188.69 |
| | LRMID [34] | 39.81 | 40.06 | 59.9 | 42.81 | 89.02 | 113.91 | 203.93 | 128.94 | 162.79 | 228.39 | 395.39 | 235.67 |
| | LRTD [20] | 18.85 | 25.83 | 111.55 | 61.86 | 18.94 | 29.99 | 253.94 | 94.38 | 18.89 | 37.27 | 393.12 | 125.74 |
| | DL0S [16] | 27.11 | 32.40 | 101.17 | 58.94 | 27.20 | 33.81 | 169.91 | 87.14 | 31.19 | 51.03 | 264.12 | 119.68 |
| | GLTSA (ours) | 15.07 | 25.11 | 56.66 | 30.45 | 9.36 | 28.16 | 105.40 | 56.97 | 9.81 | 17.96 | 188.68 | 86.47 |
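ERGAS is commonly defined as $100\,\frac{h}{l}\sqrt{\frac{1}{B}\sum_{b=1}^{B}\left(\mathrm{RMSE}_b/\mu_b\right)^2}$, where $\mu_b$ is the mean of reference band b and h/l the resolution ratio (taken as 1 here, an assumption since destriping does not resample). The absolute scale in the tables depends on normalization details not given in this extract, but the relative scaling can be checked: for additive stripes of magnitude I on a fraction r of the columns, $\mathrm{RMSE}_b = I\sqrt{r}$, so the Noisy ERGAS should grow linearly in I and as $\sqrt{r}$. The first table agrees: 185.40 ≈ 131.10·√2, 262.20 = 2 × 131.10, and 393.29 ≈ 3 × 131.10. A sketch of the textbook form:

```python
import numpy as np


def ergas(reference, estimate, ratio=1.0):
    """ERGAS with bands on the last axis; `ratio` is h/l (1.0 assumed here)."""
    ref = reference.reshape(-1, reference.shape[-1]).astype(float)
    est = estimate.reshape(-1, estimate.shape[-1]).astype(float)
    rmse = np.sqrt(np.mean((ref - est) ** 2, axis=0))  # per-band RMSE
    mu = np.mean(ref, axis=0)                          # per-band reference mean
    return float(100.0 * ratio * np.sqrt(np.mean((rmse / mu) ** 2)))


reference = np.full((20, 20, 4), 100.0)
one = ergas(reference, reference + 10.0)  # RMSE / mean = 0.1 in every band
two = ergas(reference, reference + 20.0)  # doubling the error doubles ERGAS
```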

| Dataset | Index | UTV | WFAF | SGE | ASSTV | LRMID | DL0S | LRTD | GLTSA |
|---|---|---|---|---|---|---|---|---|---|
| (1) | MICV | 28.176 | 60.871 | 58.947 | 90.526 | 98.618 | 118.856 | 110.054 | 121.248 |
| | MMRD (%) | 0.2013 | 0.1504 | 0.1706 | 0.1206 | 0.0951 | 0.0538 | 0.0864 | 0.0547 |
| (2) | MICV | 16.315 | 31.056 | 30.842 | 36.118 | 46.211 | 53.137 | 53.204 | 52.176 |
| | MMRD (%) | 0.0913 | 0.0844 | 0.0901 | 0.0628 | 0.0251 | 0.0198 | 0.0365 | 0.0139 |
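For real data without ground truth, MICV and MMRD are usually built from two no-reference measures: the inverse coefficient of variation (ICV, the mean divided by the standard deviation of a homogeneous window; higher is better) and the mean relative deviation (MRD, the mean of |z − y| / y in percent over a stripe-free region, where y is the original and z the destriped value; lower is better), each averaged over several windows or bands. The windows behind the table are not given here, so the helpers below, including the `(row, col, size)` window format, are hypothetical.

```python
import numpy as np


def icv(window) -> float:
    """Inverse coefficient of variation of a homogeneous window: mean / std."""
    w = np.asarray(window, dtype=float)
    return float(w.mean() / w.std())  # undefined for a perfectly flat window


def mrd(original, destriped) -> float:
    """Mean relative deviation in percent over a stripe-free region."""
    y = np.asarray(original, dtype=float)
    z = np.asarray(destriped, dtype=float)
    return float(np.mean(np.abs(z - y) / y) * 100.0)


def micv(band, windows) -> float:
    """Mean ICV over hypothetical (row, col, size) square windows of one band."""
    return float(np.mean([icv(band[r:r + s, c:c + s]) for r, c, s in windows]))
```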

| Stripe Type | UTV | WFAF | SGE | ASSTV | LRMID | DL0S | LRTD | GLTSA |
|---|---|---|---|---|---|---|---|---|
| Periodic | 10.94 | 8.89 | 3.99 | 92.14 | 209.12 | 179.85 | 785.98 | 182.33 |
| Non-periodic | 11.23 | 9.18 | 4.37 | 95.33 | 214.51 | 183.64 | 789.46 | 186.75 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kong, X.; Zhao, Y.; Xue, J.; Chan, J.C.-W.; Kong, S.G. Global and Local Tensor Sparse Approximation Models for Hyperspectral Image Destriping. Remote Sens. 2020, 12, 704. https://doi.org/10.3390/rs12040704


Chicago/Turabian style: Kong, Xiangyang, Yongqiang Zhao, Jize Xue, Jonathan Cheung-Wai Chan, and Seong G. Kong. 2020. "Global and Local Tensor Sparse Approximation Models for Hyperspectral Image Destriping." Remote Sensing 12, no. 4: 704. https://doi.org/10.3390/rs12040704